@zhangyy

2018-04-12T06:00:44.000000Z


Hue collaboration framework


- 1: Introduction to Hue

- 2: Installing and configuring Hue

- 3: Installing Hue

- 4: Integrating Hue with other frameworks

- 5: Restarting Hue

1: Introduction to Hue

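Hue is an open-source web UI for Apache Hadoop. It evolved from Cloudera Desktop, was later open-sourced by Cloudera under the Apache License, and is built on the Python web framework Django. With Hue you can interact with a Hadoop cluster from a web console in the browser to analyze and process data: for example, working with data on HDFS, running MapReduce jobs, executing Hive SQL statements, and browsing HBase tables.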

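For its own data, including user authentication and authorization, Hue uses an SQLite database by default; this can be changed to MySQL, PostgreSQL, or Oracle. Its features include:

- HDFS access: browse data on HDFS from the browser.
- Hive editor: write and run HQL queries and scripts, view their results, and use other Hive functions.
- A Solr search application with corresponding data visualizations and dashboards.
- An Impala application for interactive queries.
- In newer versions, a Spark editor and dashboard, plus a Pig editor that can run the scripts you write.
- Oozie scheduler: submit and monitor Workflows, Coordinators, and Bundles through a dashboard.
- HBase: query, modify, and visualize data.
- Metastore browsing: access Hive metadata and the corresponding HCatalog.
- Support for Jobs, Sqoop, ZooKeeper, and databases (MySQL, SQLite, Oracle, etc.).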

2: Installing and configuring Hue

Initializing the MySQL environment

2.1 Remove the old MySQL packages (first step of the upgrade):

    rpm -e MySQL-server-5.6.24-1.el6.x86_64 MySQL-client-5.6.24-1.el6.x86_64

2.2 Install the new MySQL version:

    rpm -Uvh MySQL-server-5.6.31-1.el6.x86_64.rpm
    rpm -Uvh MySQL-client-5.6.31-1.el6.x86_64.rpm
    rpm -ivh MySQL-devel-5.6.31-1.el6.x86_64.rpm
    rpm -ivh MySQL-embedded-5.6.31-1.el6.x86_64.rpm
    rpm -ivh MySQL-shared-5.6.31-1.el6.x86_64.rpm
    rpm -ivh MySQL-shared-compat-5.6.31-1.el6.x86_64.rpm
    rpm -ivh MySQL-test-5.6.31-1.el6.x86_64.rpm

2.3 Change the MySQL root password:

    mysql -uroot -polCiurMmcTS1zkQn

The initial random password is stored in /root/.mysql_secret.

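Then change the password and grant root login privileges:

    -- change the root password
    set password=password('123456');
    -- allow root to log in from namenode01.hadoop.com and from any host
    grant all on *.* to root@'namenode01.hadoop.com' identified by '123456';
    GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY '123456' WITH GRANT OPTION;
    flush privileges;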

2.4 Create the Hive metadata (metastore):

    cd /home/hadoop/yangyang/hive
    bin/hive

Note: for installing and configuring Hive, see the Hive installation article.

2.5 Create the Oozie database tables:

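In MySQL, create the database, then initialize the Oozie tables:

    create database oozie;

    cd /home/hadoop/yangyang/oozie
    bin/oozie-setup.sh db create -run -sqlfile oozie.sql

Note: for installing Oozie, see the Oozie installation article.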

2.6 Install the dependency packages Hue needs:

    yum -y install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi gcc gcc-c++ krb5-devel libtidy libxml2-devel libxslt-devel openldap-devel python-devel sqlite-devel openssl-devel gmp-devel

2.7 As a final step, remove the JDK packages that yum installed as dependencies:

    rpm -e java_cup-0.10k-5.el6.x86_64 java-1.7.0-openjdk-devel-1.7.0.101-2.6.6.4.el6_8.x86_64 tzdata-java-2016d-1.el6.noarch java-1.5.0-gcj-1.5.0.0-29.1.el6.x86_64 java-1.7.0-openjdk-1.7.0.101-2.6.6.4.el6_8.x86_64 --nodeps

3: Installing Hue

3.1 Extract and build Hue

    tar -zxvf hue-3.7.0-cdh5.3.6.tar.gz
    mv hue-3.7.0-cdh5.3.6 yangyang/hue
    cd yangyang/hue
    make apps

3.2 Edit Hue's configuration file

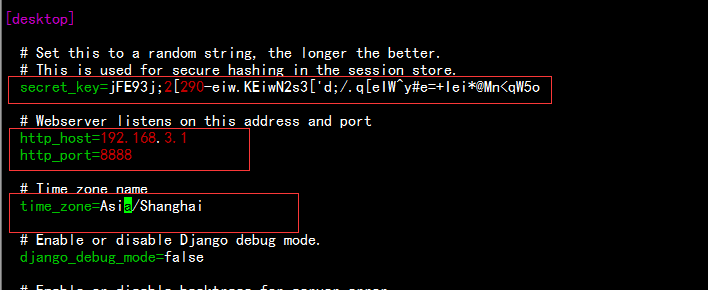

    cd yangyang/hue/desktop/conf
    vim hue.ini

Set the following in the [desktop] section:

    [desktop]
    # Set this to a random string, the longer the better.
    # This is used for secure hashing in the session store.
    secret_key=jFE93j;2[290-eiw.KEiwN2s3['d;/.q[eIW^y#e=+Iei*@Mn<qW5o
    # Webserver listens on this address and port
    http_host=192.168.3.1
    http_port=8888
    # Time zone name
    time_zone=Asia/Shanghai
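The secret_key value shown is only an example; any long random string works. A minimal Python sketch for generating one, assuming a 50-character key drawn from letters, digits and a few punctuation marks. The length, the 30-character lower bound and the alphabet are our own choices, not Hue requirements; '#' and quotes are left out as a precaution because an INI parser may treat them as comment or quoting characters.

```python
import secrets
import string

# Alphabet for the key (an assumption, not a Hue rule): letters, digits and a
# few punctuation marks; '#' and quotes are excluded so the value cannot be
# mistaken for a comment or a quoted string in hue.ini.
ALPHABET = string.ascii_letters + string.digits + "-_.+=*@^!"


def make_secret_key(length=50):
    """Return a cryptographically random string for [desktop] secret_key."""
    if length < 30:  # hypothetical lower bound; longer is better
        raise ValueError("secret_key should be at least 30 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    # Paste the printed line into the [desktop] section of hue.ini.
    print("secret_key=" + make_secret_key())
```

Using the `secrets` module rather than `random` matters here, since the key protects session hashing.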

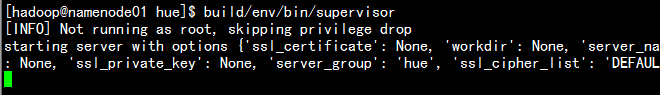

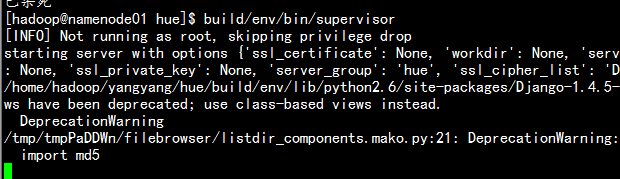

3.3 Start Hue to test it

    cd yangyang/hue
    build/env/bin/supervisor

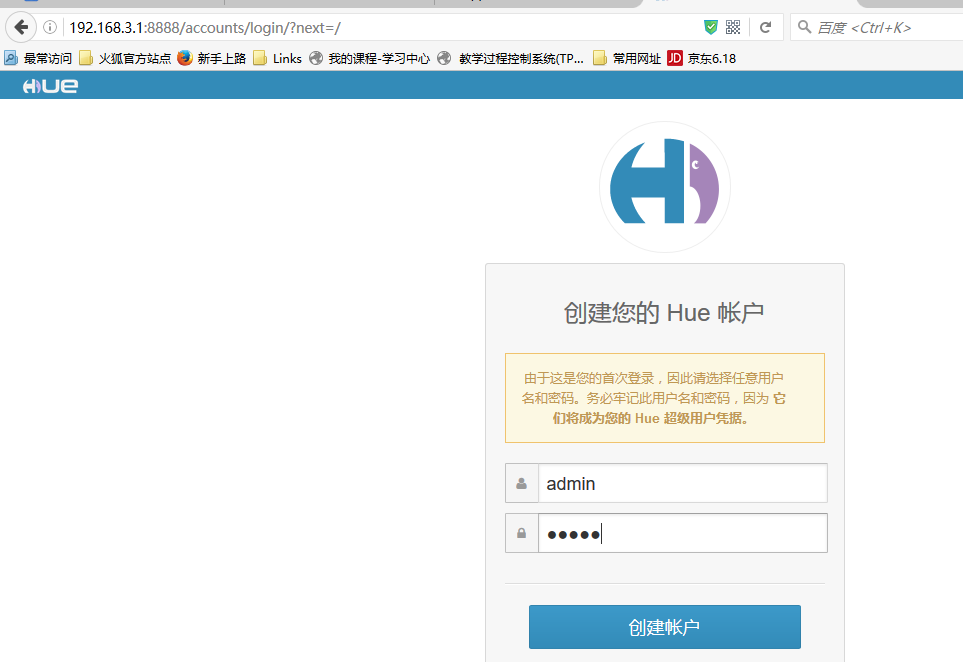

3.4 Test in a browser

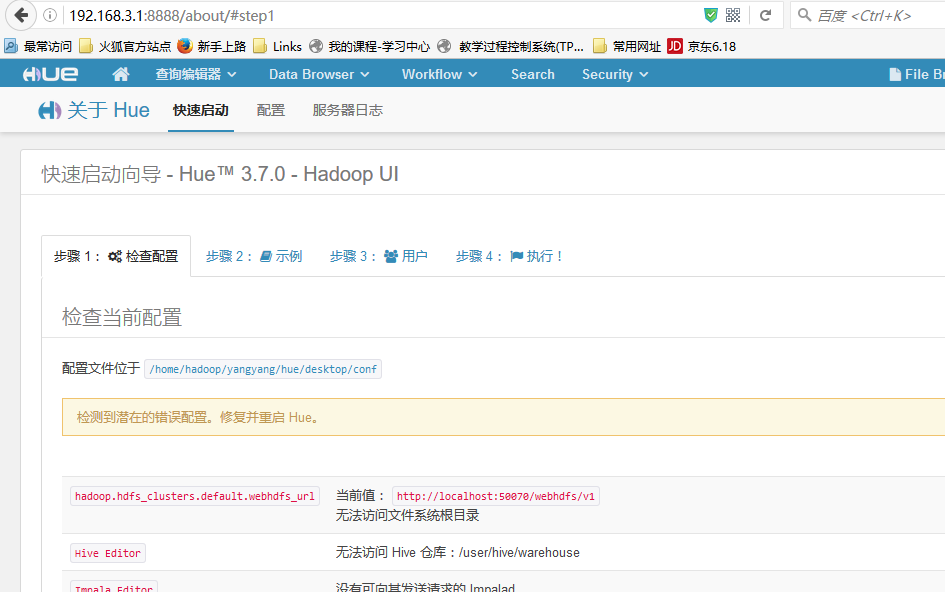

http://192.168.3.1:8888

4: Integrating Hue with other frameworks

Integrating Hue with Hadoop 2.x

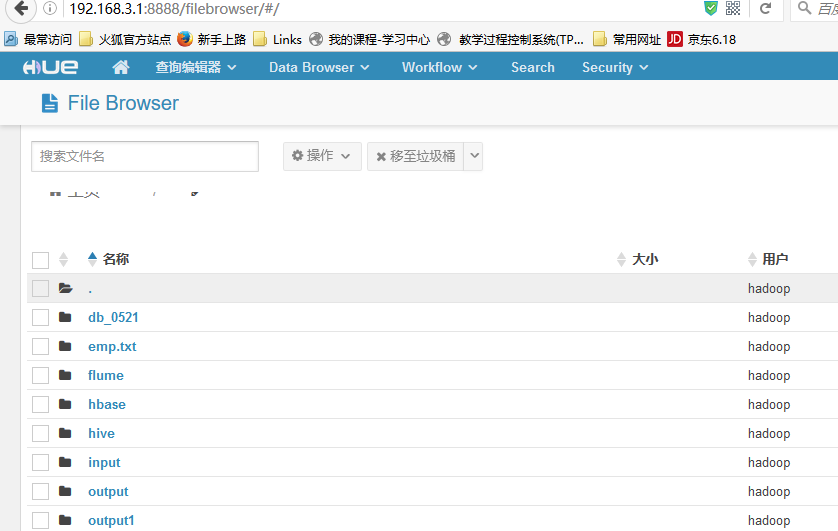

4.1 Integrating Hue with HDFS:

Edit Hadoop's hdfs-site.xml (vim hdfs-site.xml) to enable WebHDFS, adding:

    <property>
      <name>dfs.webhdfs.enabled</name>
      <value>true</value>
    </property>
    <!-- disable permission checking -->
    <property>
      <name>dfs.permissions.enabled</name>
      <value>false</value>
    </property>

Edit Hadoop's core-site.xml (vim core-site.xml) to configure the proxy-user permissions Hue uses to access HDFS:

    <property>
      <name>hadoop.proxyuser.hue.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.groups</name>
      <value>*</value>
    </property>
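If you prefer to script these edits instead of using vim, the following Python sketch merges the same four properties into an existing Hadoop configuration file with the standard-library ElementTree module. The helper names set_properties and update_file are hypothetical. ElementTree drops XML comments and reformats the file, so keep a backup of the original before rewriting it.

```python
import xml.etree.ElementTree as ET

# The properties from the text above.
HDFS_SITE = {
    "dfs.webhdfs.enabled": "true",       # enable WebHDFS for Hue
    "dfs.permissions.enabled": "false",  # disable HDFS permission checking
}
CORE_SITE = {
    "hadoop.proxyuser.hue.hosts": "*",   # hosts the hue user may proxy from
    "hadoop.proxyuser.hue.groups": "*",  # groups the hue user may impersonate
}


def set_properties(xml_text, props):
    """Add or update <property> entries under a <configuration> root and
    return the new document as a string (comments are not preserved)."""
    root = ET.fromstring(xml_text)
    by_name = {(p.findtext("name") or "").strip(): p
               for p in root.findall("property")}
    for name, value in props.items():
        prop = by_name.get(name)
        if prop is None:  # property not present yet: append a new one
            prop = ET.SubElement(root, "property")
            ET.SubElement(prop, "name").text = name
        value_el = prop.find("value")
        if value_el is None:
            value_el = ET.SubElement(prop, "value")
        value_el.text = str(value)
    return ET.tostring(root, encoding="unicode")


def update_file(path, props):
    """Rewrite a Hadoop *-site.xml file in place with the given properties."""
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(set_properties(text, props))
```

For example, `update_file("/home/hadoop/yangyang/hadoop/etc/hadoop/hdfs-site.xml", HDFS_SITE)` would apply the HDFS settings; updating an existing entry in place avoids ending up with two conflicting `<property>` blocks for the same name.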

Then edit Hue's configuration file:

    cd yangyang/hue/desktop/conf
    vim hue.ini

and set the [hadoop] section:

    [hadoop]
      # Configuration for HDFS NameNode
      # ------------------------------------------------------------------------
      [[hdfs_clusters]]
        # HA support by using HttpFs
        [[[default]]]
          # Enter the filesystem uri
          fs_defaultfs=hdfs://namenode01.hadoop.com:8020
          # NameNode logical name.
          ## logical_name=
          # Use WebHdfs/HttpFs as the communication mechanism.
          # Domain should be the NameNode or HttpFs host.
          # Default port is 14000 for HttpFs.
          webhdfs_url=http://namenode01.hadoop.com:50070/webhdfs/v1
          hadoop_hdfs_home=/home/hadoop/yangyang/hadoop
          hadoop_bin=/home/hadoop/yangyang/hadoop/bin
          # Change this if your HDFS cluster is Kerberos-secured
          ## security_enabled=false
          # Default umask for file and directory creation, specified in an octal value.
          ## umask=022
          # Directory of the Hadoop configuration
          hadoop_conf_dir=/home/hadoop/yangyang/hadoop/etc/hadoop
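Before pointing Hue at webhdfs_url, it can help to confirm that WebHDFS answers at that address. A minimal Python sketch using the WebHDFS LISTSTATUS operation; the helper names and the default user "hue" are assumptions for illustration, and the plain user.name query parameter only applies to clusters without Kerberos (security_enabled is left unset here).

```python
import json
import urllib.parse
import urllib.request

WEBHDFS_URL = "http://namenode01.hadoop.com:50070/webhdfs/v1"  # from hue.ini


def liststatus_url(webhdfs_url, path="/", user="hue"):
    """Build a WebHDFS LISTSTATUS URL for an HDFS path.
    The default user 'hue' is an assumption; use the account you need."""
    query = urllib.parse.urlencode({"op": "LISTSTATUS", "user.name": user})
    return "%s/%s?%s" % (webhdfs_url.rstrip("/"),
                         urllib.parse.quote(path.lstrip("/")), query)


def parse_liststatus(body):
    """Return (name, type) pairs from a LISTSTATUS JSON response."""
    statuses = json.loads(body)["FileStatuses"]["FileStatus"]
    return [(s["pathSuffix"], s["type"]) for s in statuses]


def list_hdfs(path="/", user="hue", webhdfs_url=WEBHDFS_URL):
    """Query the NameNode over WebHDFS and list the entries under path."""
    with urllib.request.urlopen(liststatus_url(webhdfs_url, path, user),
                                timeout=10) as resp:
        return parse_liststatus(resp.read().decode("utf-8"))
```

Running `print(list_hdfs("/user/hadoop"))` from the Hue host should print the directory entries; a connection error usually means dfs.webhdfs.enabled is not active yet or HDFS was not restarted.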

4.2 Integrating Hue with YARN:

In the same [hadoop] section of hue.ini:

      [[yarn_clusters]]
        [[[default]]]
          # Enter the host on which you are running the ResourceManager
          resourcemanager_host=namenode01.hadoop.com
          # The port where the ResourceManager IPC listens on
          resourcemanager_port=8032
          # Whether to submit jobs to this cluster
          submit_to=True
          # Resource Manager logical name (required for HA)
          ## logical_name=
          # Change this if your YARN cluster is Kerberos-secured
          ## security_enabled=false
          # URL of the ResourceManager API
          resourcemanager_api_url=http://namenode01.hadoop.com:8088
          # URL of the ProxyServer API
          proxy_api_url=http://namenode01.hadoop.com:8088
          # URL of the HistoryServer API
          history_server_api_url=http://namenode01.hadoop.com:19888
          # In secure mode (HTTPS), if SSL certificates from Resource Manager's
          # Rest Server have to be verified against certificate authority
          ## ssl_cert_ca_verify=False
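The resourcemanager_api_url must be reachable from the Hue host. A small Python sketch that queries the ResourceManager's cluster-info REST resource (/ws/v1/cluster/info) and returns its state; a running ResourceManager reports STARTED. The function names are illustrative, not part of Hue or Hadoop.

```python
import json
import urllib.request

RM_API_URL = "http://namenode01.hadoop.com:8088"  # resourcemanager_api_url


def cluster_info_url(rm_api_url):
    """URL of the ResourceManager cluster-information REST resource."""
    return rm_api_url.rstrip("/") + "/ws/v1/cluster/info"


def rm_state(body):
    """Extract clusterInfo.state (e.g. STARTED) from the JSON response."""
    return json.loads(body)["clusterInfo"]["state"]


def check_rm(rm_api_url=RM_API_URL):
    """Ask the ResourceManager for its state, requesting a JSON reply."""
    req = urllib.request.Request(cluster_info_url(rm_api_url),
                                 headers={"Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return rm_state(resp.read().decode("utf-8"))
```

Calling `check_rm()` after restarting YARN is a quick way to tell a wrong URL in hue.ini apart from a ResourceManager that is not running.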

Note: after making these changes, restart Hadoop's HDFS and YARN services.

4.3 Integrating Hue with Hive

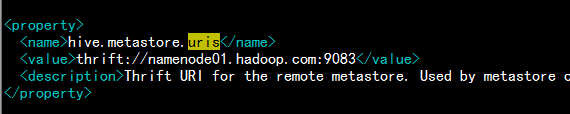

4.3.1 Add the Hive remote metastore configuration

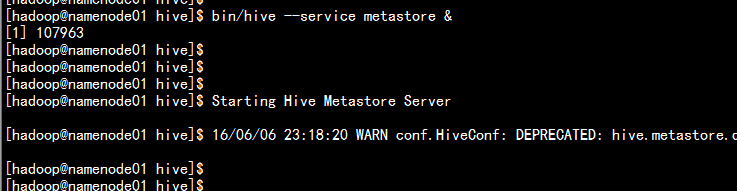

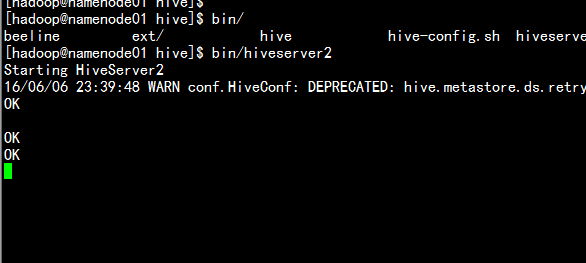

Edit Hive's hive-site.xml (vim hive-site.xml):

    <property>
      <name>hive.metastore.uris</name>
      <value>thrift://namenode01.hadoop.com:9083</value>
      <description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>
    </property>

Start the Hive metastore:

    bin/hive --service metastore &

Then start HiveServer2:

    bin/hiveserver2 &
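A quick way to confirm both services came up is to open a TCP connection to the metastore port (9083, from hive.metastore.uris) and the HiveServer2 port (10000, HiveServer2's default Thrift port). A minimal Python sketch; the helper names are hypothetical, and an open port only shows that something is listening, not that it is the right service.

```python
import socket

HIVE_HOST = "namenode01.hadoop.com"
HIVE_PORTS = {"metastore": 9083, "hiveserver2": 10000}


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host not resolvable
        return False


def check_hive(host=HIVE_HOST):
    """Map each Hive service name to whether its port accepts connections."""
    return {name: port_open(host, port) for name, port in HIVE_PORTS.items()}
```

`print(check_hive())` should show True for both entries before moving on to the Hue side of the integration.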

4.3.2 Integrating Hue with Hive

Edit Hue's configuration file (vim hue.ini):

    [beeswax]
      # Host where HiveServer2 is running.
      # If Kerberos security is enabled, use fully-qualified domain name (FQDN).
      hive_server_host=namenode01.hadoop.com
      # Port where HiveServer2 Thrift server runs on.
      hive_server_port=10000
      # Hive configuration directory, where hive-site.xml is located
      hive_conf_dir=/home/hadoop/yangyang/hive
      # Timeout in seconds for thrift calls to Hive service
      server_conn_timeout=120
      # Choose whether Hue uses the GetLog() thrift call to retrieve Hive logs.
      # If false, Hue will use the FetchResults() thrift call instead.
      ## use_get_log_api=true
      # Set a LIMIT clause when browsing a partitioned table.
      # A positive value will be set as the LIMIT. If 0 or negative, do not set any limit.
      ## browse_partitioned_table_limit=250
      # A limit to the number of rows that can be downloaded from a query.
      # A value of -1 means there will be no limit.
      # A maximum of 65,000 is applied to XLS downloads.
      ## download_row_limit=1000000
      # Hue will try to close the Hive query when the user leaves the editor page.
      # This will free all the query resources in HiveServer2, but also make its results inaccessible.
      ## close_queries=false
      # Thrift version to use when communicating with HiveServer2
      ## thrift_version=5

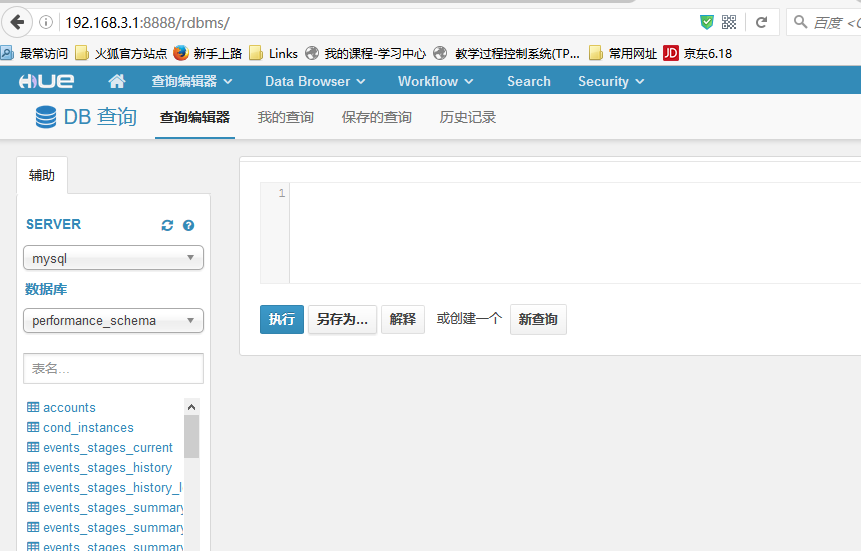

4.4 Integrating Hue with an RDBMS

In hue.ini, configure the MySQL database in the [[[mysql]]] block of the [[databases]] subsection under [librdbms]:

    [[[mysql]]]
      # Name to show in the UI.
      ## nice_name="My SQL DB"
      # For MySQL and PostgreSQL, name is the name of the database.
      # For Oracle, Name is instance of the Oracle server. For express edition
      # this is 'xe' by default.
      ## name=mysqldb
      # Database backend to use. This can be:
      # 1. mysql
      # 2. postgresql
      # 3. oracle
      engine=mysql
      # IP or hostname of the database to connect to.
      host=namenode01.hadoop.com
      # Port the database server is listening to. Defaults are:
      # 1. MySQL: 3306
      # 2. PostgreSQL: 5432
      # 3. Oracle Express Edition: 1521
      port=3306
      # Username to authenticate with when connecting to the database.
      user=root
      # Password matching the username to authenticate with when
      # connecting to the database.
      password=123456
      # Database options to send to the server when connecting.
      # https://docs.djangoproject.com/en/1.4/ref/databases/
      ## options={}
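Because hue.ini nests sections by bracket depth ([a], [[b]], [[[c]]]), a line pasted under the wrong header silently ends up in the wrong section. The following is a deliberately small reader for that nesting, useful for checking where the values landed; it is not Hue's own configuration parser, the function name is hypothetical, and it does not handle inline comments or quoting.

```python
def read_hue_ini(text):
    """Parse hue.ini-style nested sections ([a], [[b]], [[[c]]]) into a dict
    keyed by section-path tuples. Lines starting with '#' (including the
    commented-out '## key=value' defaults) are skipped; inline comments are
    not handled."""
    sections = {}
    path = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("["):
            depth = len(line) - len(line.lstrip("["))  # number of '[' = depth
            path = path[:depth - 1] + [line.strip("[]").strip()]
            sections.setdefault(tuple(path), {})
        elif "=" in line:
            key, value = line.split("=", 1)
            sections.setdefault(tuple(path), {})[key.strip()] = value.strip()
    return sections
```

For example, reading desktop/conf/hue.ini and printing `read_hue_ini(text).get(("librdbms", "databases", "mysql"))` should show engine, host, port, user and password; commented-out settings such as `## name=mysqldb` do not appear.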

4.5 Integrating Hue with Oozie

Edit hue.ini:

    [liboozie]
      # The URL where the Oozie service runs on. This is required in order for
      # users to submit jobs. Empty value disables the config check.
      oozie_url=http://namenode01.hadoop.com:11000/oozie
      # Requires FQDN in oozie_url if enabled
      ## security_enabled=false
      # Location on HDFS where the workflows/coordinator are deployed when submitted.
      remote_deployement_dir=/user/hadoop/oozie-apps

    ###########################################################################
    # Settings to configure the Oozie app
    ###########################################################################

    [oozie]
      # Location on local FS where the examples are stored.
      local_data_dir=/home/hadoop/yangyang/oozie/oozie-apps
      # Location on local FS where the data for the examples is stored.
      sample_data_dir=/home/hadoop/yangyang/oozie/oozie-apps/map-reduce/input-data
      # Location on HDFS where the oozie examples and workflows are stored.
      remote_data_dir=/user/hadoop/oozie-apps
      # Maximum of Oozie workflows or coordinators to retrieve in one API call.
      oozie_jobs_count=100
      # Use Cron format for defining the frequency of a Coordinator instead of the old frequency number/unit.
      ## enable_cron_scheduling=true
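Hue only submits jobs successfully if oozie_url points at a running Oozie server. A minimal Python sketch that calls the Oozie web-services status resource (/v1/admin/status under oozie_url), which normally reports a systemMode of NORMAL; the function names are illustrative assumptions.

```python
import json
import urllib.request

OOZIE_URL = "http://namenode01.hadoop.com:11000/oozie"  # oozie_url in hue.ini


def oozie_status_url(oozie_url):
    """URL of the Oozie admin status resource (web-services API v1)."""
    return oozie_url.rstrip("/") + "/v1/admin/status"


def oozie_mode(body):
    """Return the systemMode field, e.g. NORMAL, from the JSON response."""
    return json.loads(body)["systemMode"]


def check_oozie(oozie_url=OOZIE_URL):
    """Fetch the current Oozie system mode from the server."""
    with urllib.request.urlopen(oozie_status_url(oozie_url), timeout=10) as resp:
        return oozie_mode(resp.read().decode("utf-8"))
```

If `check_oozie()` fails while the browser can open the Oozie console, the hostname in oozie_url is the first thing to compare against the URL that works.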

5: Restarting Hue

Kill the supervisor processes and whatever process holds port 8888, then start Hue again:

    build/env/bin/supervisor &

Start HiveServer2:

    bin/hiveserver2 &
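Hue can take a few seconds to come up after supervisor starts. A minimal Python sketch that polls the Hue address until the web server answers, assuming the http_host and http_port from the [desktop] section; the helper name is hypothetical, and any HTTP status (even an error page) is treated as "the server is up". Proxies from the environment are bypassed because Hue is on the local network here.

```python
import time
import urllib.error
import urllib.request

HUE_URL = "http://192.168.3.1:8888"  # http_host/http_port from [desktop]

# Open URLs directly, ignoring any http_proxy settings in the environment.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def wait_for_http(url=HUE_URL, timeout=60.0, interval=2.0):
    """Poll url until the web server answers; return the HTTP status code,
    or None if nothing answered before the timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with _OPENER.open(url, timeout=5) as resp:
                return resp.status
        except urllib.error.HTTPError as err:
            return err.code  # the server is up but returned an error page
        except (urllib.error.URLError, OSError):
            time.sleep(interval)  # not listening yet; try again
    return None
```

`print(wait_for_http())` returning a status code means it is time to open the browser; None after a minute suggests supervisor did not start or port 8888 is still held by an old process.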

5.1 Check in the browser

http://192.168.3.1:8888

hdfs :

mysql :

hive :

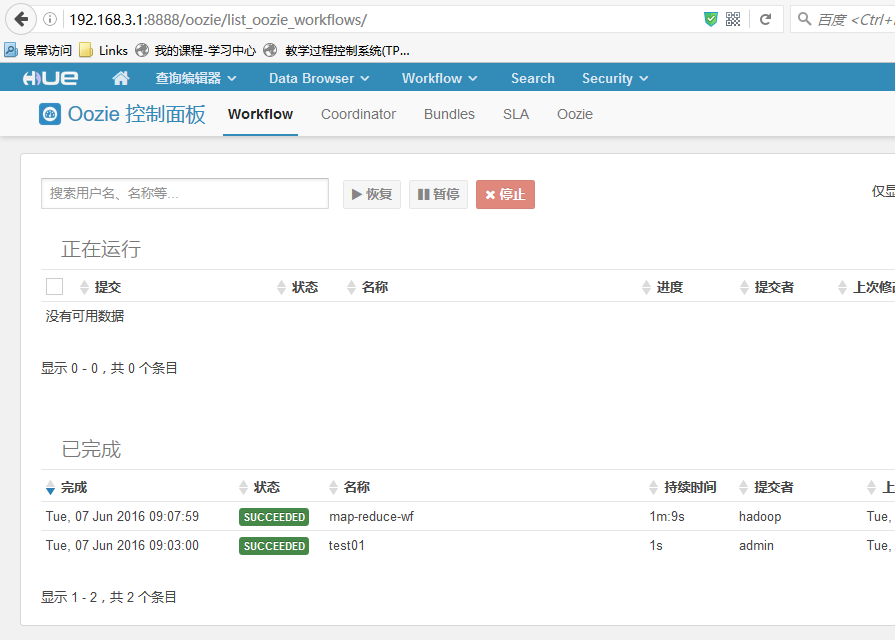

oozie: