@zhangyy

2021-11-26T07:39:25.000000Z

Kubernetes 1.20.5 Installation, Configuration, and Deployment

Kubernetes series

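I. System Environment

1.1 Prerequisites

Before you begin, the machines for the Kubernetes cluster must meet the following requirements:

• Operating system: CentOS 7.9 x86_64
• Hardware: at least 2 GB of RAM, at least 2 CPUs, and at least 30 GB of disk
• All machines in the cluster can reach each other over the network
• Internet access for pulling images; if a server has no Internet access, download the images in advance and import them on the node
• Swap is disabled

1.2 Software environment

• Operating system: CentOS 7.9 x64
• Docker: 20-ce
• Kubernetes: 1.20.2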

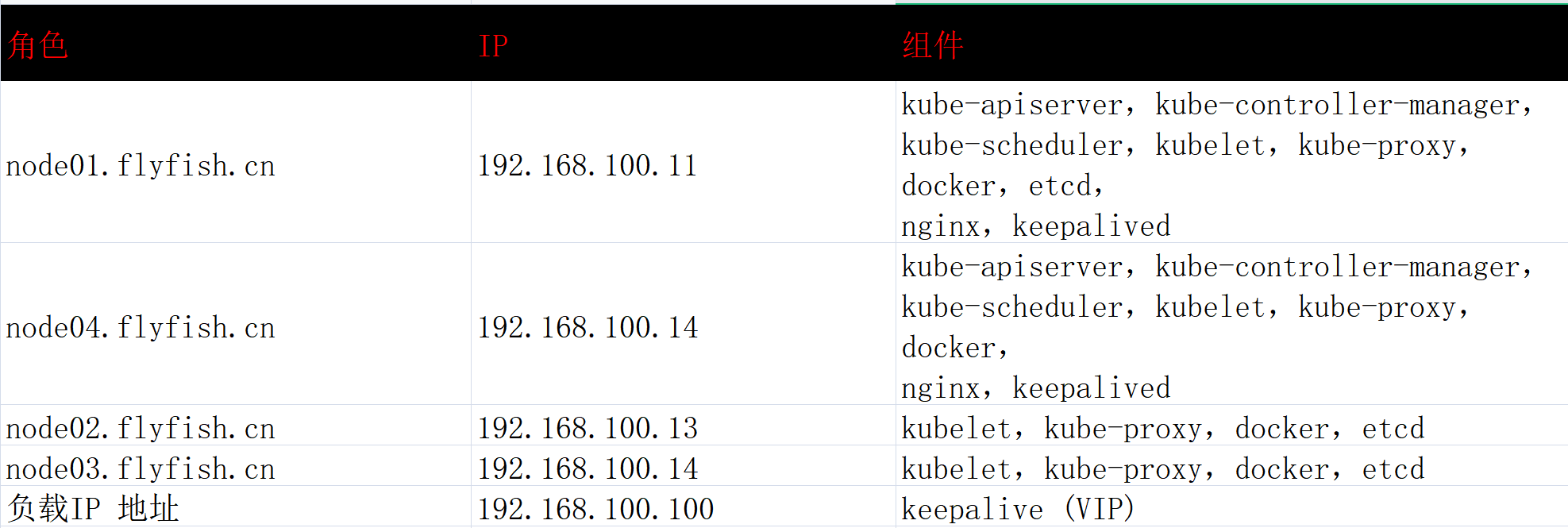

1.3 Environment planning

Overall server plan:

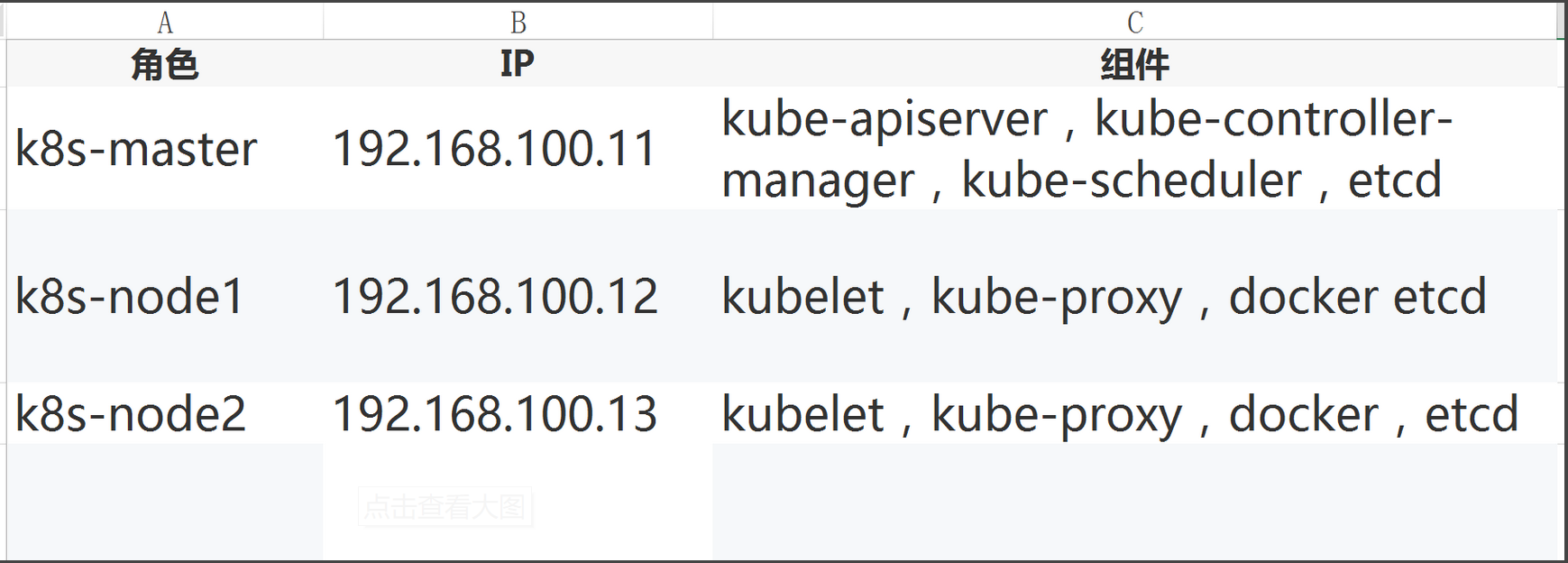

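1.4 Single-master architecture diagram:

1.5 Single-master server plan:

1.6 Operating system initialization

    # Disable the firewall
    systemctl stop firewalld
    systemctl disable firewalld

    # Disable SELinux
    sed -i 's/enforcing/disabled/' /etc/selinux/config  # permanent
    setenforce 0  # temporary

    # Disable swap
    swapoff -a  # temporary
    sed -ri 's/.*swap.*/#&/' /etc/fstab  # permanent

    # Set the hostname according to the plan
    hostnamectl set-hostname <hostname>

    # Add hosts entries on the master
    cat >> /etc/hosts << EOF
    192.168.100.11 node01.flyfish
    192.168.100.12 node02.flyfish
    192.168.100.13 node03.flyfish
    EOF

    # Pass bridged IPv4 traffic to the iptables chains
    cat > /etc/sysctl.d/k8s.conf << EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    sysctl --system  # apply

    # Time synchronization (the package is named chrony; chronyd is its service)
    yum install -y chrony
    # Add this line to /etc/chrony.conf:
    #   server ntp1.aliyun.com
    systemctl start chronyd
    systemctl enable chronyd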
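The permanent swap change above edits /etc/fstab in place, so it can be worth trying the expression on a scratch copy first. The following is a minimal sketch: the two fstab entries are made up for illustration, and `no_active_swap` is a hypothetical helper, not part of the original procedure.

```shell
#!/usr/bin/env bash
# Try the fstab edit on a scratch file (the sample entries are invented).
tmp_fstab=$(mktemp)
cat > "$tmp_fstab" << 'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# Same expression as in the guide: prefix every line mentioning "swap" with '#'.
sed -ri 's/.*swap.*/#&/' "$tmp_fstab"
cat "$tmp_fstab"

# Hypothetical helper: succeeds when no uncommented swap entry remains in the file.
no_active_swap() {
  ! grep -Eq '^[^#].*swap' "$1"
}
no_active_swap "$tmp_fstab" && echo "swap entries disabled"
```

Note that the expression also matches lines that are already commented out, so running it a second time prepends another `#`.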

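II. etcd Cluster Deployment

2.1 etcd cluster concepts

etcd is a distributed key-value store, and Kubernetes uses it to store its data, so an etcd database has to be prepared first. To avoid a single point of failure, etcd should be deployed as a cluster. This guide builds the cluster from 3 machines, which tolerates the failure of 1 machine; a 5-machine cluster tolerates the failure of 2 machines.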
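The 3-node and 5-node figures follow from majority quorum: a cluster of n members needs floor(n/2) + 1 members available, so it tolerates n minus that many failures. A small sketch of the arithmetic (the function names are illustrative only):

```shell
#!/usr/bin/env bash
# Majority quorum for a cluster of $1 members: floor(n/2) + 1.
etcd_quorum() {
  local members=$1
  echo $(( members / 2 + 1 ))
}

# Number of members that may fail while a quorum remains.
etcd_fault_tolerance() {
  local members=$1
  echo $(( members - (members / 2 + 1) ))
}

for n in 1 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

A 4-member cluster tolerates the same single failure as a 3-member one, which is why odd sizes are the usual choice.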

2.2 Prepare the cfssl certificate tool

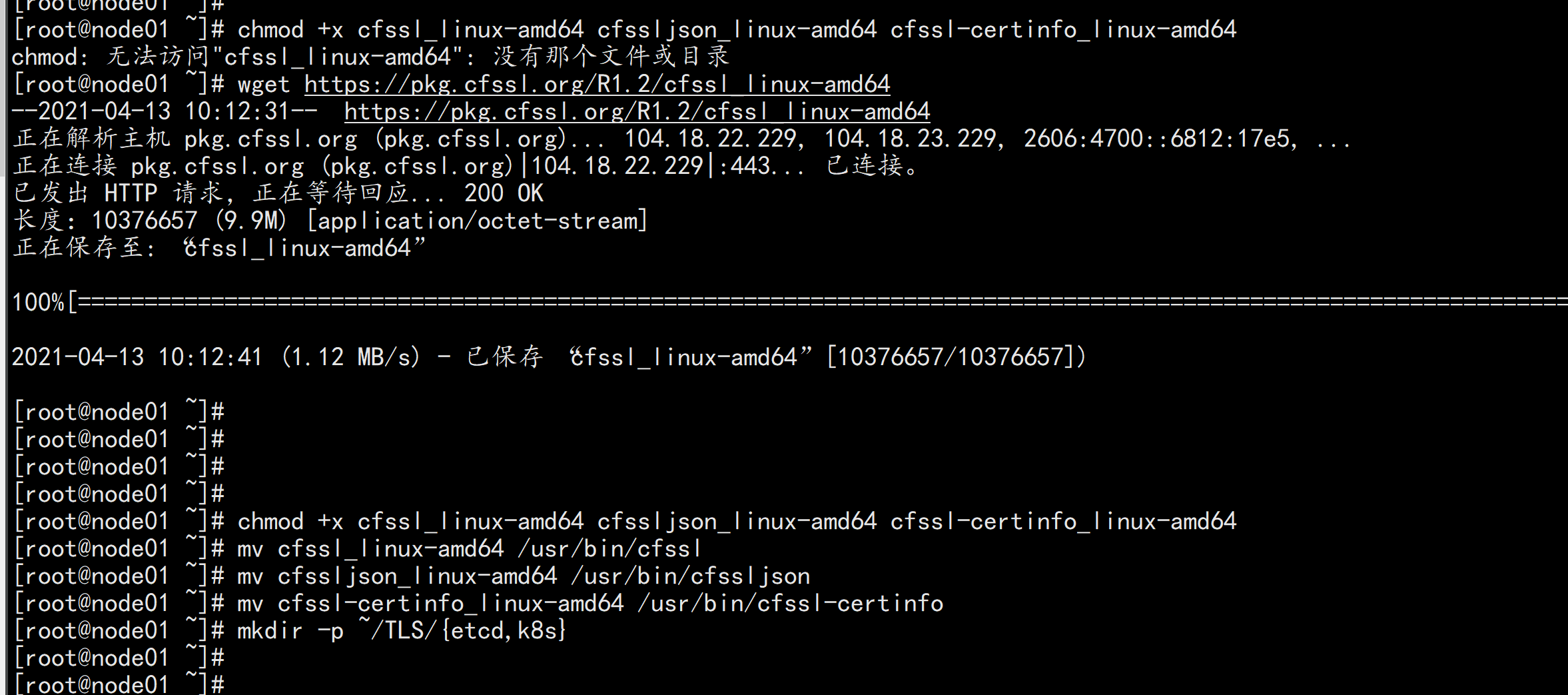

cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient to use than openssl. Run the following on any one server; the Master node is used here.

    wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
    chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
    mv cfssl_linux-amd64 /usr/bin/cfssl
    mv cfssljson_linux-amd64 /usr/bin/cfssljson
    mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

2.3 Generate the etcd certificates

1. Self-signed certificate authority (CA)

Create the working directory:

    mkdir -p ~/TLS/{etcd,k8s}
    cd ~/TLS/etcd

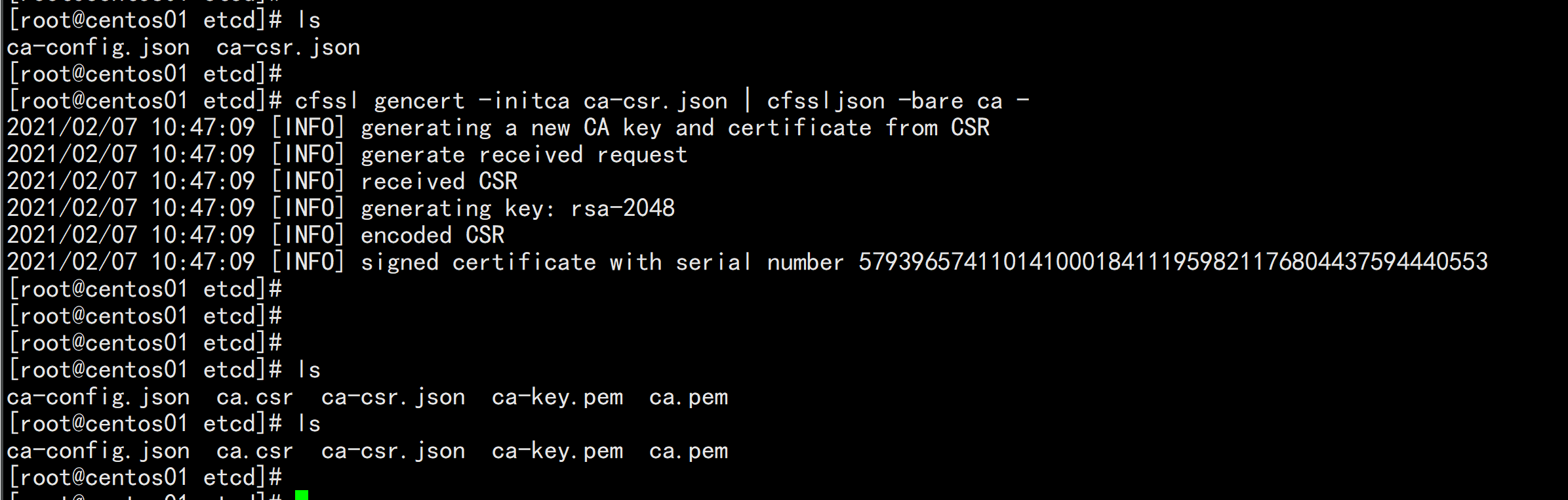

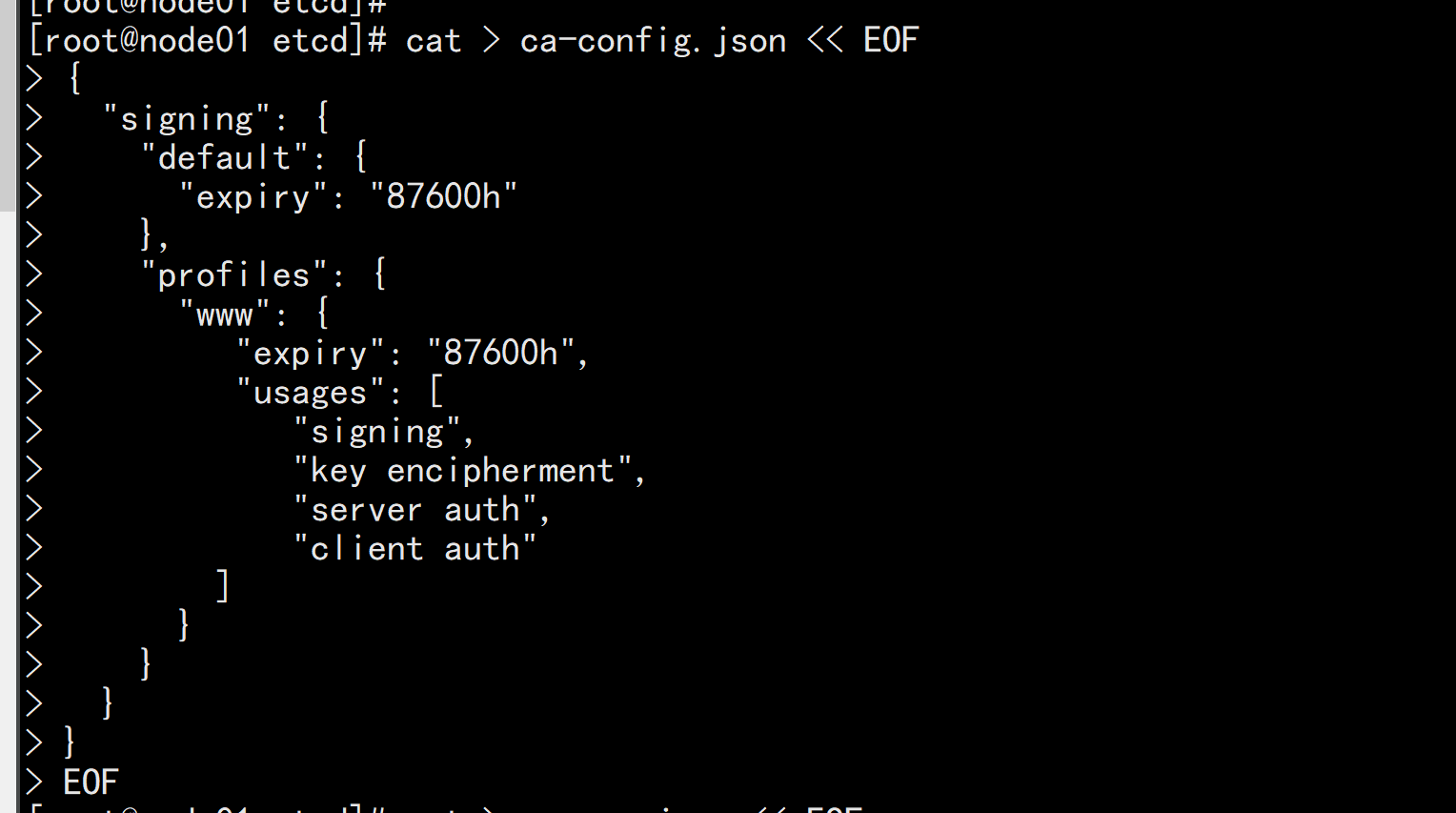

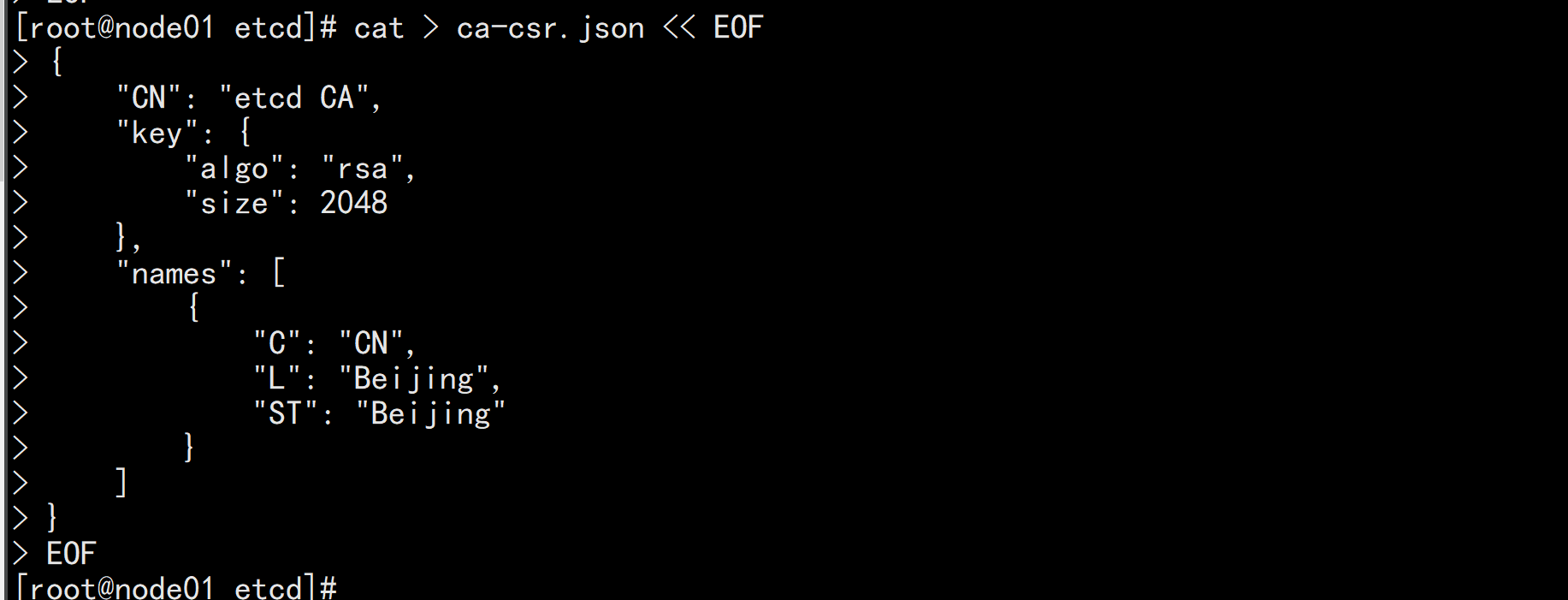

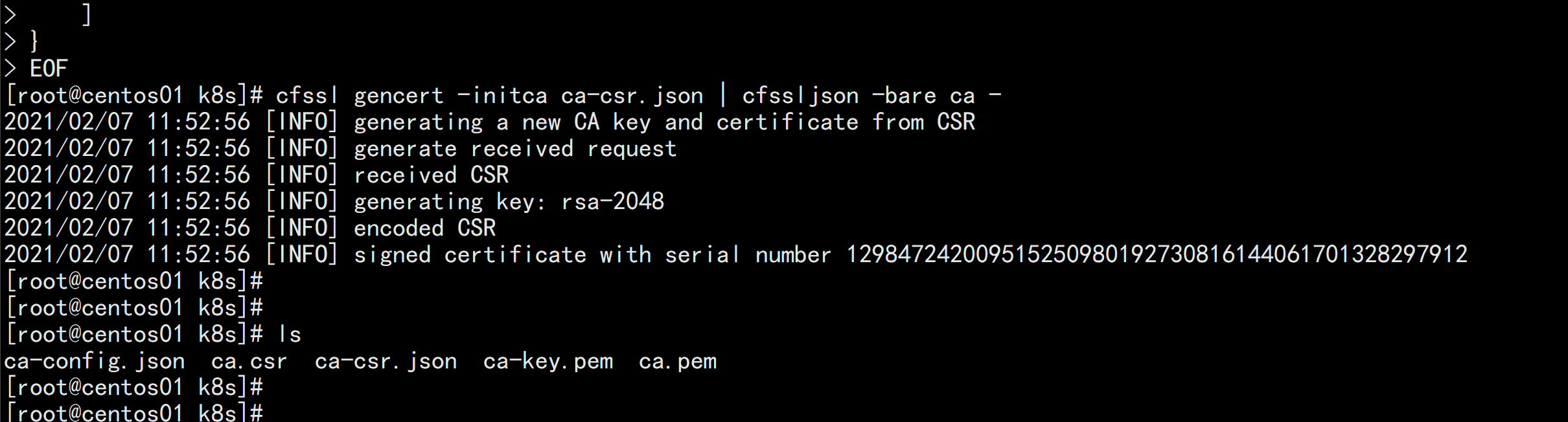

Self-sign the CA:

    cat > ca-config.json << EOF
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "www": {
            "expiry": "87600h",
            "usages": ["signing", "key encipherment", "server auth", "client auth"]
          }
        }
      }
    }
    EOF

    cat > ca-csr.json << EOF
    {
      "CN": "etcd CA",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing"
        }
      ]
    }
    EOF

Generate the certificate:

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    ls *pem

The listing should show ca-key.pem and ca.pem.

2. Issue the etcd HTTPS certificate with the self-signed CA

Create the certificate signing request file:

    cat > server-csr.json << EOF
    {
      "CN": "etcd",
      "hosts": [
        "192.168.100.11",
        "192.168.100.12",
        "192.168.100.13",
        "192.168.100.14",
        "192.168.100.15",
        "192.168.100.16",
        "192.168.100.17",
        "192.168.100.100"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing"
        }
      ]
    }
    EOF

Generate the certificate:

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
    ls server*pem

The listing should show server-key.pem and server.pem.

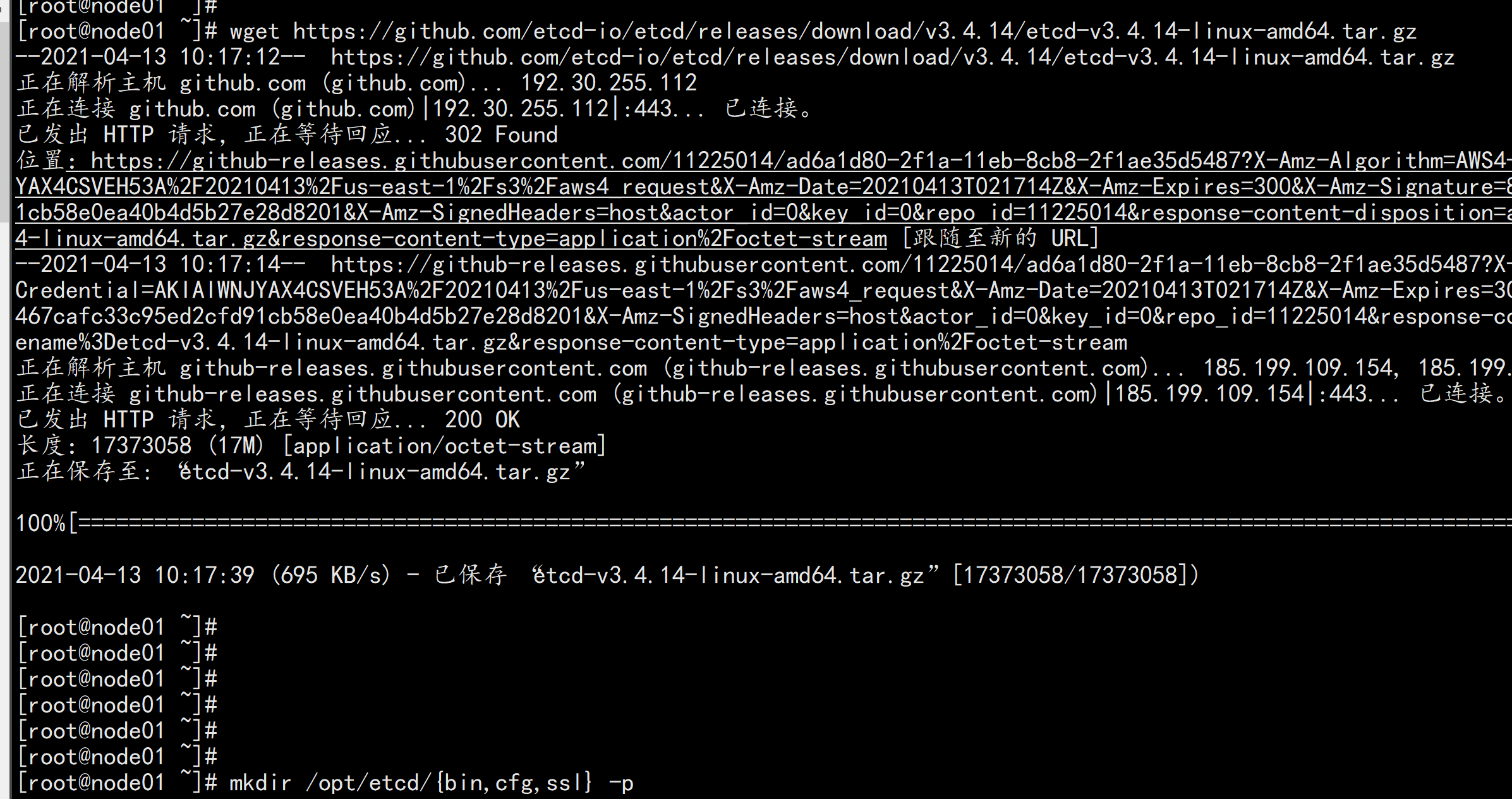

2.4 Download the binaries from GitHub

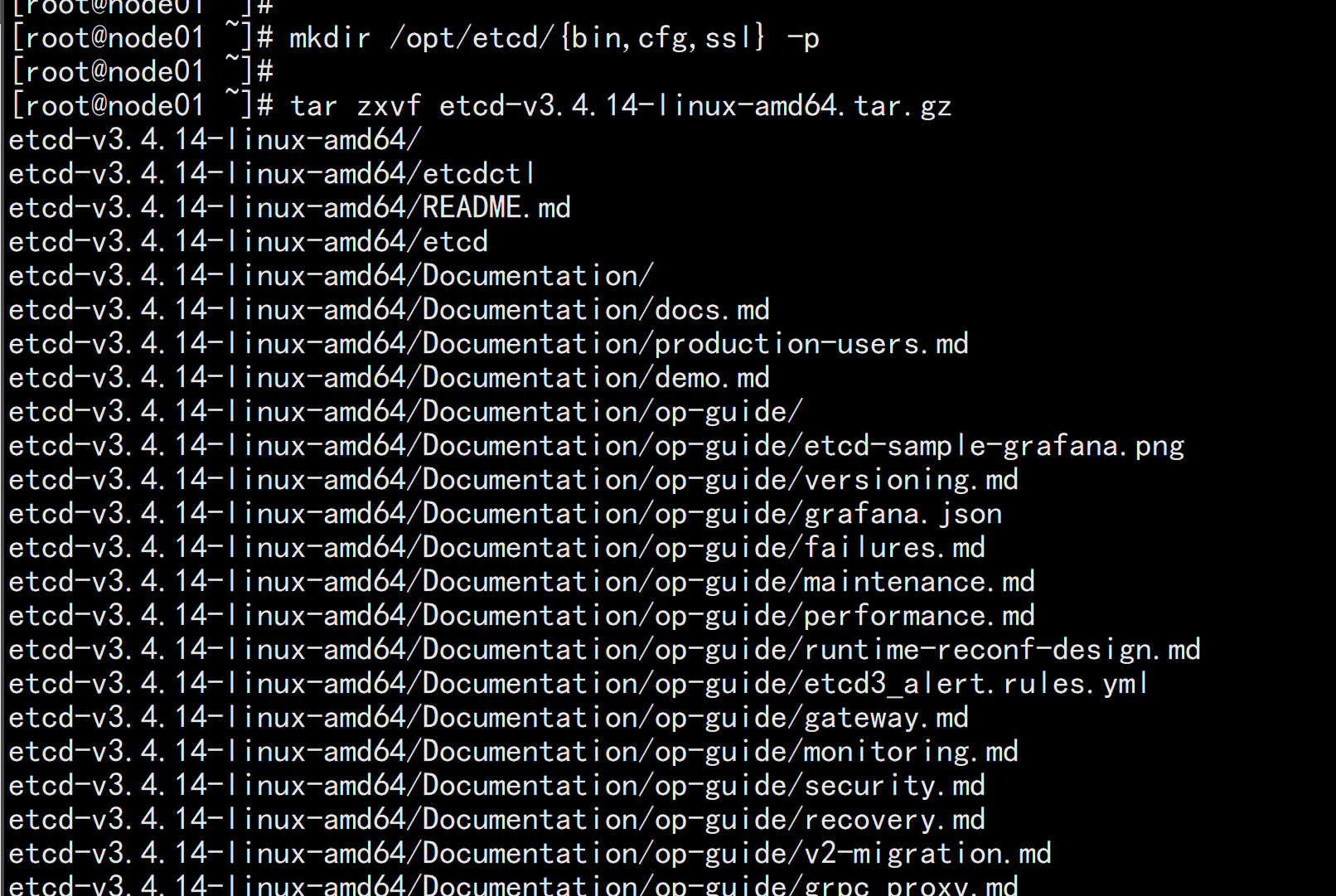

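Download URL: https://github.com/etcd-io/etcd/releases/download/v3.4.14/etcd-v3.4.14-linux-amd64.tar.gz

The following steps are performed on node 1. To keep things simple, all files generated on node 1 are copied to node 2 and node 3 afterwards.

1. Create the working directory and extract the binary package

    mkdir -p /opt/etcd/{bin,cfg,ssl}
    tar zxvf etcd-v3.4.14-linux-amd64.tar.gz
    mv etcd-v3.4.14-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/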

2.5 Create the etcd configuration file

    cat > /opt/etcd/cfg/etcd.conf << EOF
    #[Member]
    ETCD_NAME="etcd-1"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.100.11:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.100.11:2379"

    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.11:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.11:2379"
    ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.100.11:2380,etcd-2=https://192.168.100.12:2380,etcd-3=https://192.168.100.13:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
    EOF

• ETCD_NAME: node name, unique within the cluster
• ETCD_DATA_DIR: data directory
• ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) traffic
• ETCD_LISTEN_CLIENT_URLS: listen address for client traffic
• ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster
• ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients
• ETCD_INITIAL_CLUSTER: addresses of all cluster members
• ETCD_INITIAL_CLUSTER_TOKEN: cluster token
• ETCD_INITIAL_CLUSTER_STATE: state when joining; new for a new cluster, existing to join an existing cluster

2.6 Manage etcd with systemd

    cat > /usr/lib/systemd/system/etcd.service << EOF
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target

    [Service]
    Type=notify
    EnvironmentFile=/opt/etcd/cfg/etcd.conf
    ExecStart=/opt/etcd/bin/etcd \
    --cert-file=/opt/etcd/ssl/server.pem \
    --key-file=/opt/etcd/ssl/server-key.pem \
    --peer-cert-file=/opt/etcd/ssl/server.pem \
    --peer-key-file=/opt/etcd/ssl/server-key.pem \
    --trusted-ca-file=/opt/etcd/ssl/ca.pem \
    --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
    --logger=zap
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

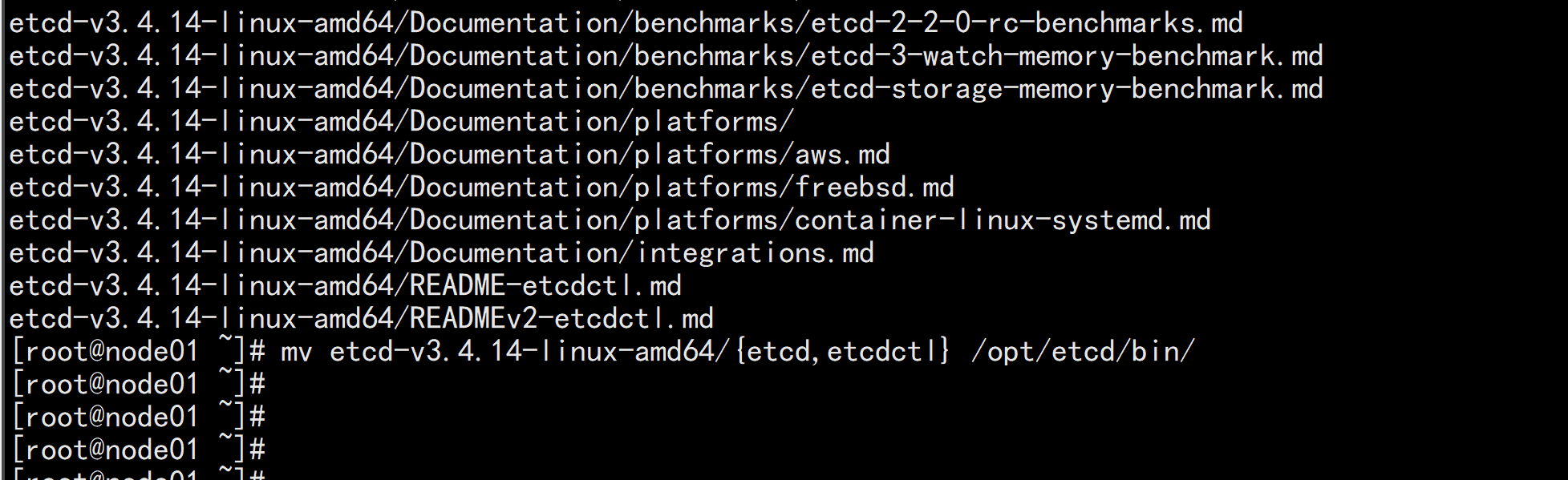

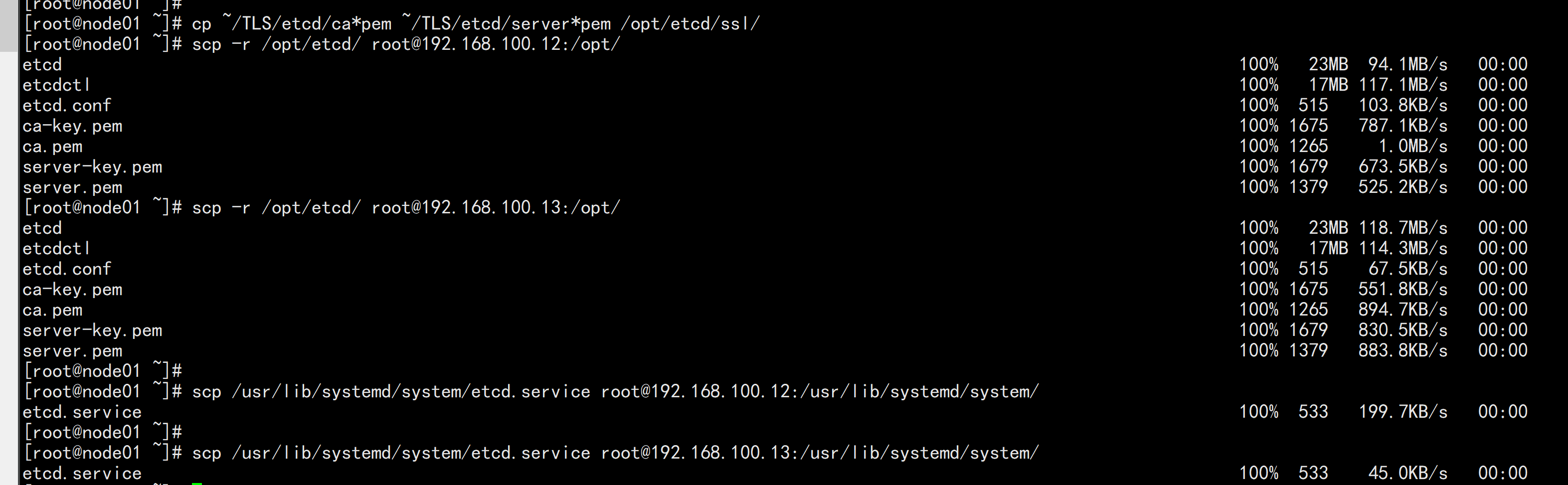

4. Copy the certificates generated earlier

Copy the certificates to the path referenced in the unit file:

    cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

5. Sync to all hosts

    scp -r /opt/etcd/ root@192.168.100.12:/opt/
    scp -r /opt/etcd/ root@192.168.100.13:/opt/
    scp /usr/lib/systemd/system/etcd.service root@192.168.100.12:/usr/lib/systemd/system/
    scp /usr/lib/systemd/system/etcd.service root@192.168.100.13:/usr/lib/systemd/system/

Edit the etcd configuration file on 192.168.100.12 (vim /opt/etcd/cfg/etcd.conf):

    #[Member]
    ETCD_NAME="etcd-2"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.100.12:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.100.12:2379"

    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.12:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.12:2379"
    ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.100.11:2380,etcd-2=https://192.168.100.12:2380,etcd-3=https://192.168.100.13:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"

Edit the etcd configuration file on 192.168.100.13 (vim /opt/etcd/cfg/etcd.conf):

    #[Member]
    ETCD_NAME="etcd-3"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.100.13:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.100.13:2379"

    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.13:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.13:2379"
    ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.100.11:2380,etcd-2=https://192.168.100.12:2380,etcd-3=https://192.168.100.13:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
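Only the member name and the node's own IP differ between the three files, so the per-node edits could also be generated rather than typed by hand. The sketch below assumes a hypothetical `ETCD_MEMBERS` list and helper functions (`initial_cluster`, `render_etcd_conf`) that are not part of the original procedure; the values are the ones used in this guide.

```shell
#!/usr/bin/env bash
# Assumption of this sketch: members are listed as "name=ip" pairs.
ETCD_MEMBERS="etcd-1=192.168.100.11 etcd-2=192.168.100.12 etcd-3=192.168.100.13"

# Build the ETCD_INITIAL_CLUSTER value from the member list.
initial_cluster() {
  local out="" pair
  for pair in $ETCD_MEMBERS; do
    out="${out:+$out,}${pair%%=*}=https://${pair#*=}:2380"
  done
  echo "$out"
}

# Print the etcd.conf for one member, given its name and IP.
render_etcd_conf() {
  local name=$1 ip=$2
  cat << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="$(initial_cluster)"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Print the file for the second member.
render_etcd_conf etcd-2 192.168.100.12
```

On the target host the output would be redirected to /opt/etcd/cfg/etcd.conf.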

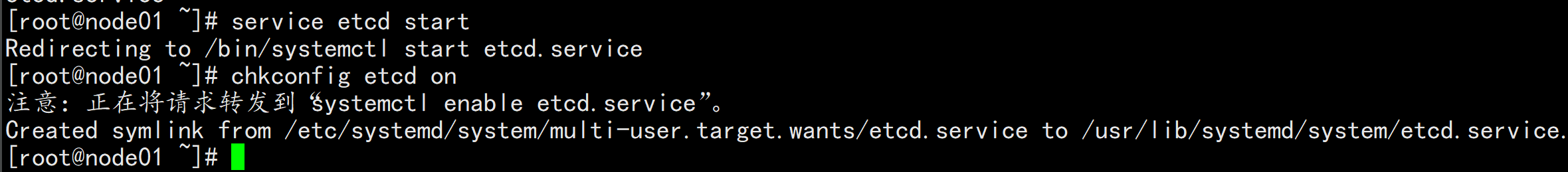

Start the etcd cluster (run on every etcd node):

    systemctl daemon-reload
    systemctl start etcd
    systemctl enable etcd

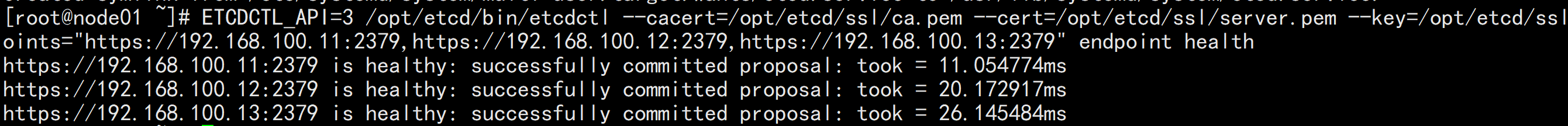

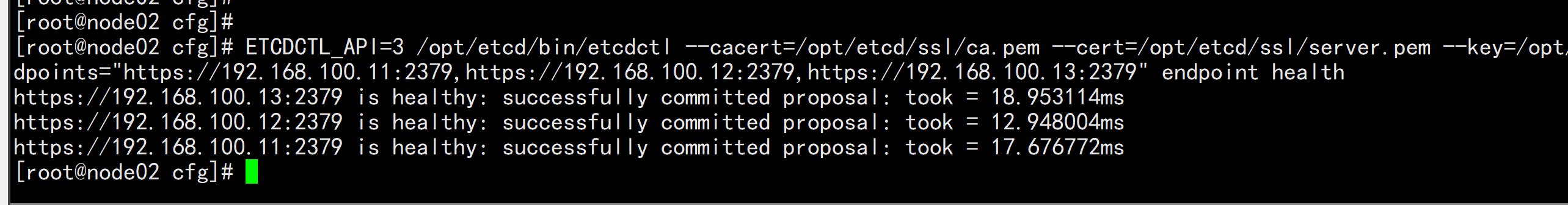

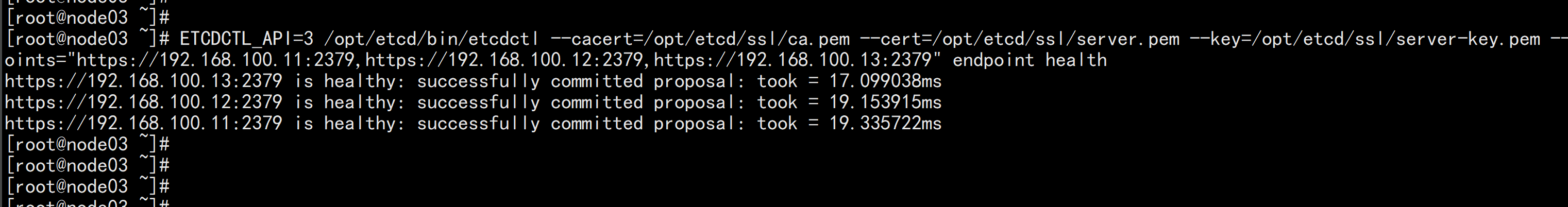

Check the cluster health:

    ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
      --cacert=/opt/etcd/ssl/ca.pem \
      --cert=/opt/etcd/ssl/server.pem \
      --key=/opt/etcd/ssl/server-key.pem \
      --endpoints="https://192.168.100.11:2379,https://192.168.100.12:2379,https://192.168.100.13:2379" \
      endpoint health

If every endpoint is reported as healthy, the cluster has been deployed successfully. If something is wrong, check the logs first: /var/log/messages or journalctl -u etcd.

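III. Install Docker

1. Download

Download URL: https://download.docker.com/linux/static/stable/x86_64/docker-20.10.3.tgz

Perform the following steps on all nodes. A binary installation is used here; installing with yum works just as well. Install Docker on node01.flyfish.cn, node02.flyfish.cn, and node03.flyfish.cn.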

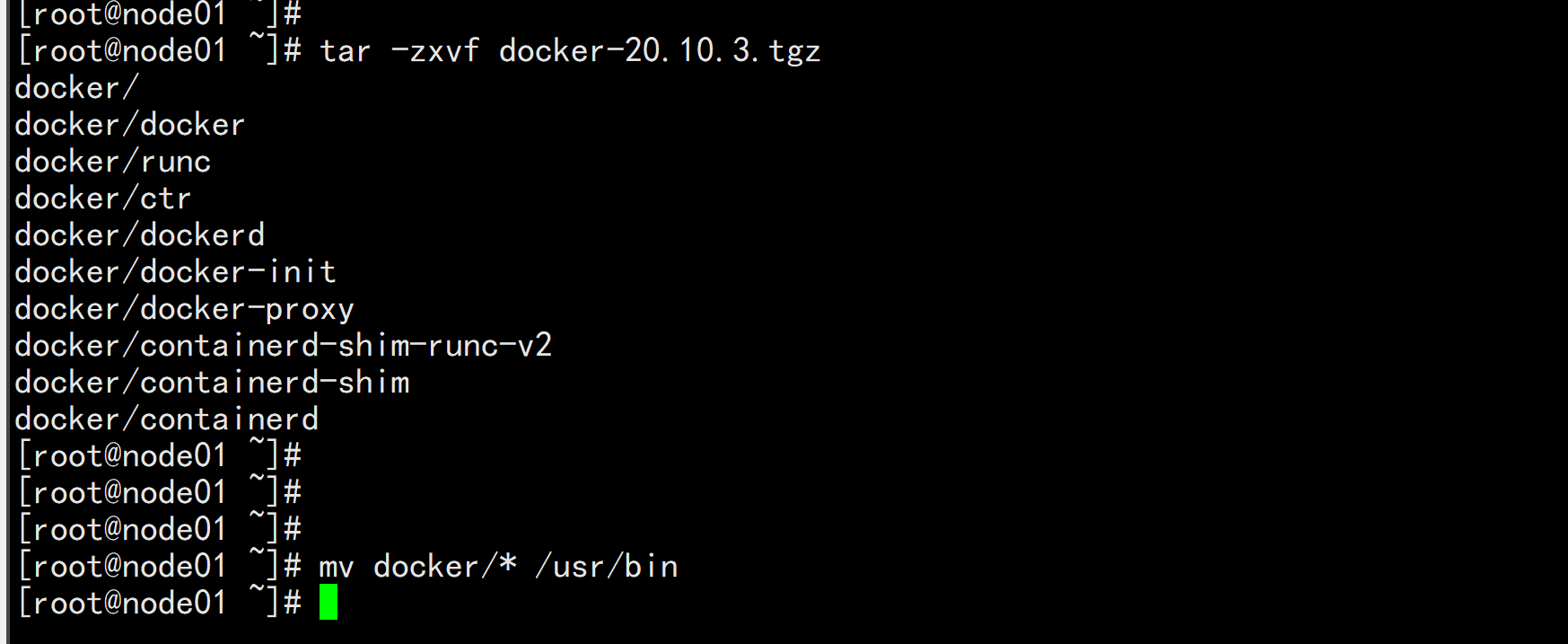

2. Deploy Docker

    tar zxvf docker-20.10.3.tgz
    mv docker/* /usr/bin

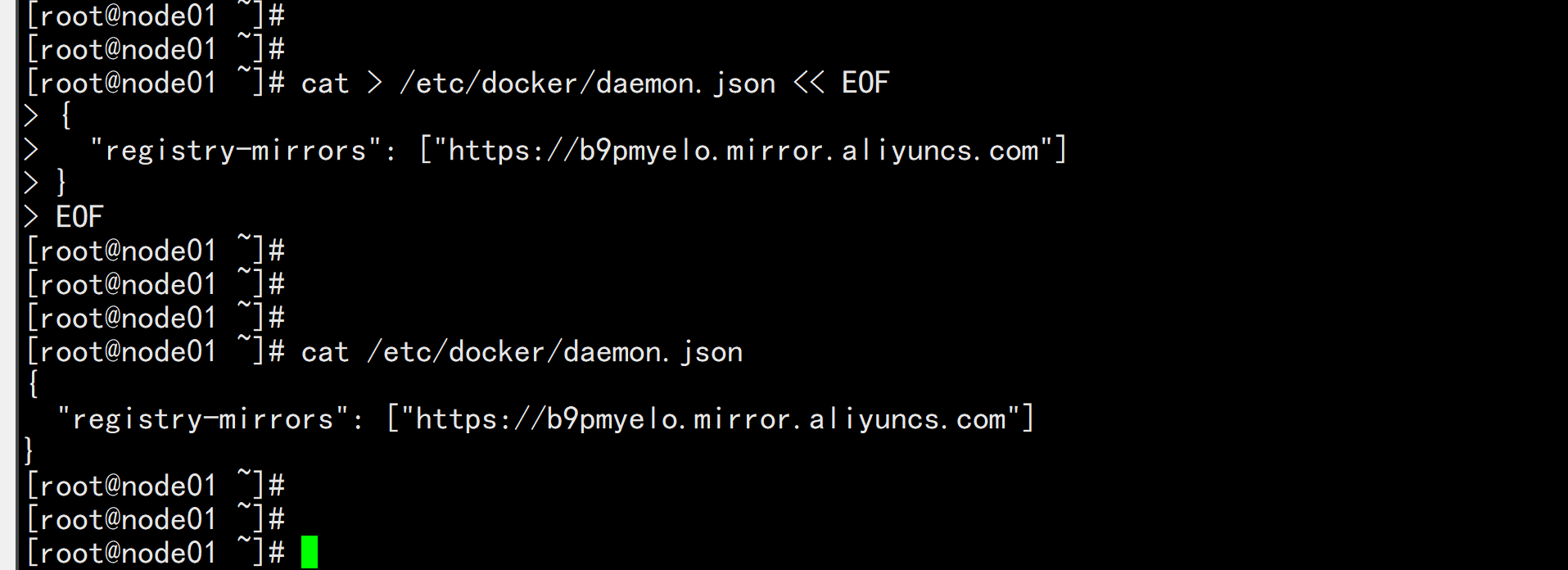

    mkdir /etc/docker
    cat > /etc/docker/daemon.json << EOF
    {
      "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
    }
    EOF

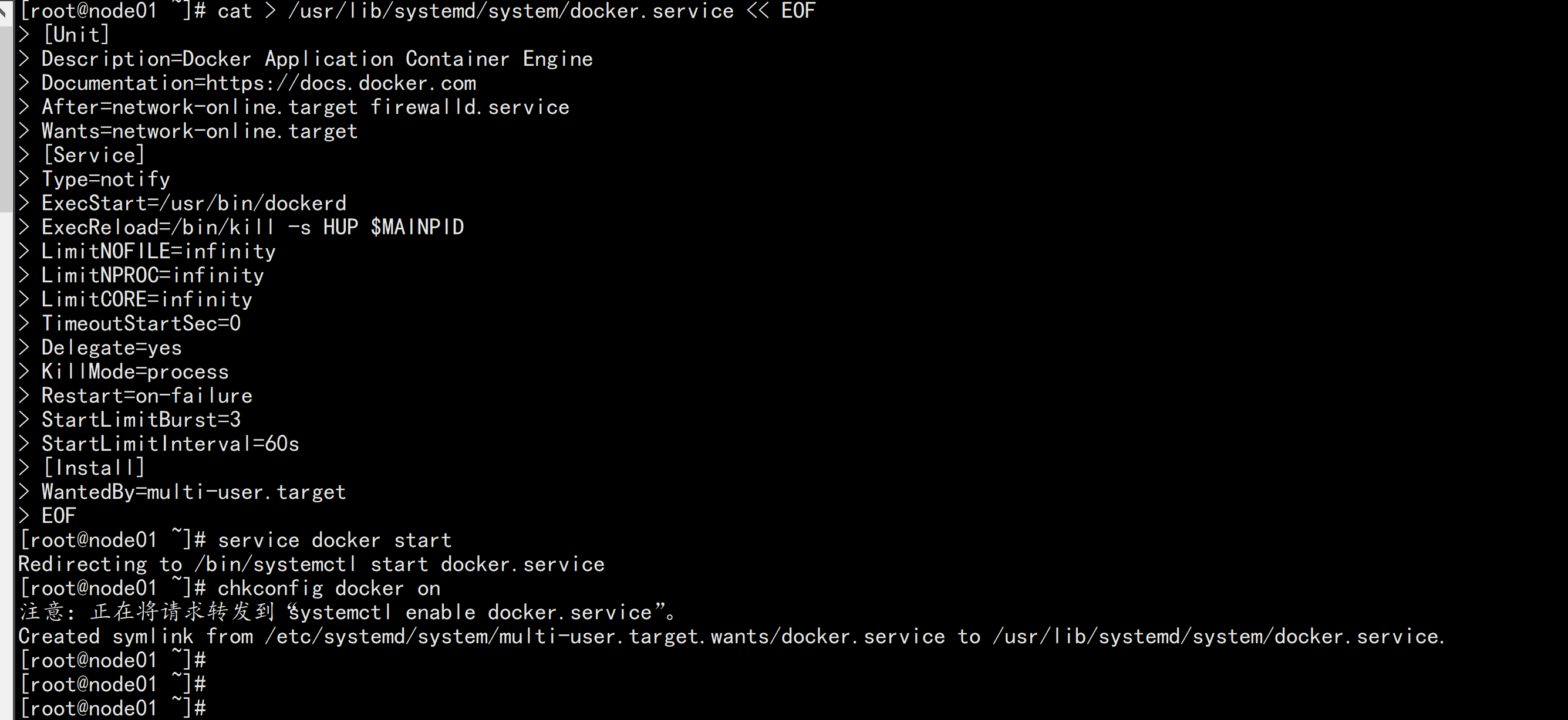

3. Manage Docker with systemd

    cat > /usr/lib/systemd/system/docker.service << EOF
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network-online.target firewalld.service
    Wants=network-online.target

    [Service]
    Type=notify
    ExecStart=/usr/bin/dockerd
    ExecReload=/bin/kill -s HUP \$MAINPID
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TimeoutStartSec=0
    Delegate=yes
    KillMode=process
    Restart=on-failure
    StartLimitBurst=3
    StartLimitInterval=60s

    [Install]
    WantedBy=multi-user.target
    EOF

The dollar sign in \$MAINPID is escaped so that the heredoc writes it literally instead of expanding it to an empty string.

Start Docker and enable it at boot:

    systemctl daemon-reload
    systemctl start docker
    systemctl enable docker

IV. Deploy the Master Node

4.1 Generate the kube-apiserver certificate

1. Self-signed certificate authority (CA)

    cd /root/TLS/k8s/

    cat > ca-config.json << EOF
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": ["signing", "key encipherment", "server auth", "client auth"]
          }
        }
      }
    }
    EOF

    cat > ca-csr.json << EOF
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

Generate the certificate:

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    ls *pem

The listing should show ca-key.pem and ca.pem.

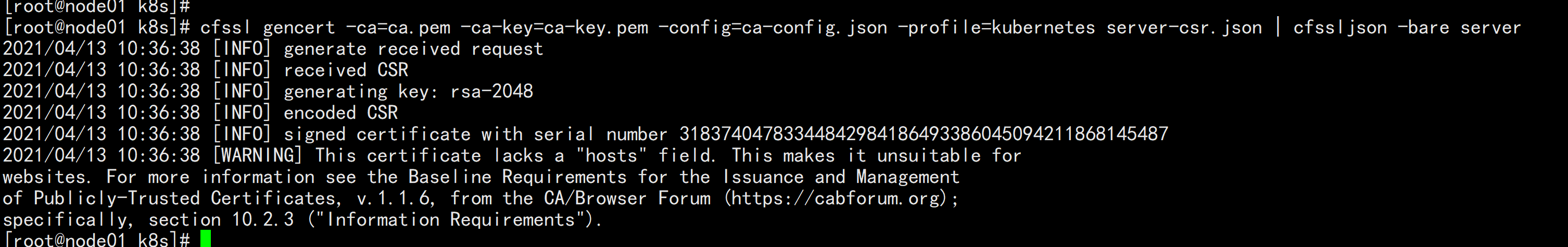

2. Issue the kube-apiserver HTTPS certificate with the self-signed CA

Create the certificate signing request file:

    cat > server-csr.json << EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        "192.168.100.11",
        "192.168.100.12",
        "192.168.100.13",
        "192.168.100.14",
        "192.168.100.15",
        "192.168.100.16",
        "192.168.100.17",
        "192.168.100.18",
        "192.168.100.100",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

Generate the certificate:

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
    ls server*pem

The listing should show server-key.pem and server.pem.

4.2 Download the binaries from GitHub

Download page: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.20.md#v1202

Note: the linked page lists many packages. Downloading the server package is enough, because it contains the binaries for both the Master and the Worker Node.

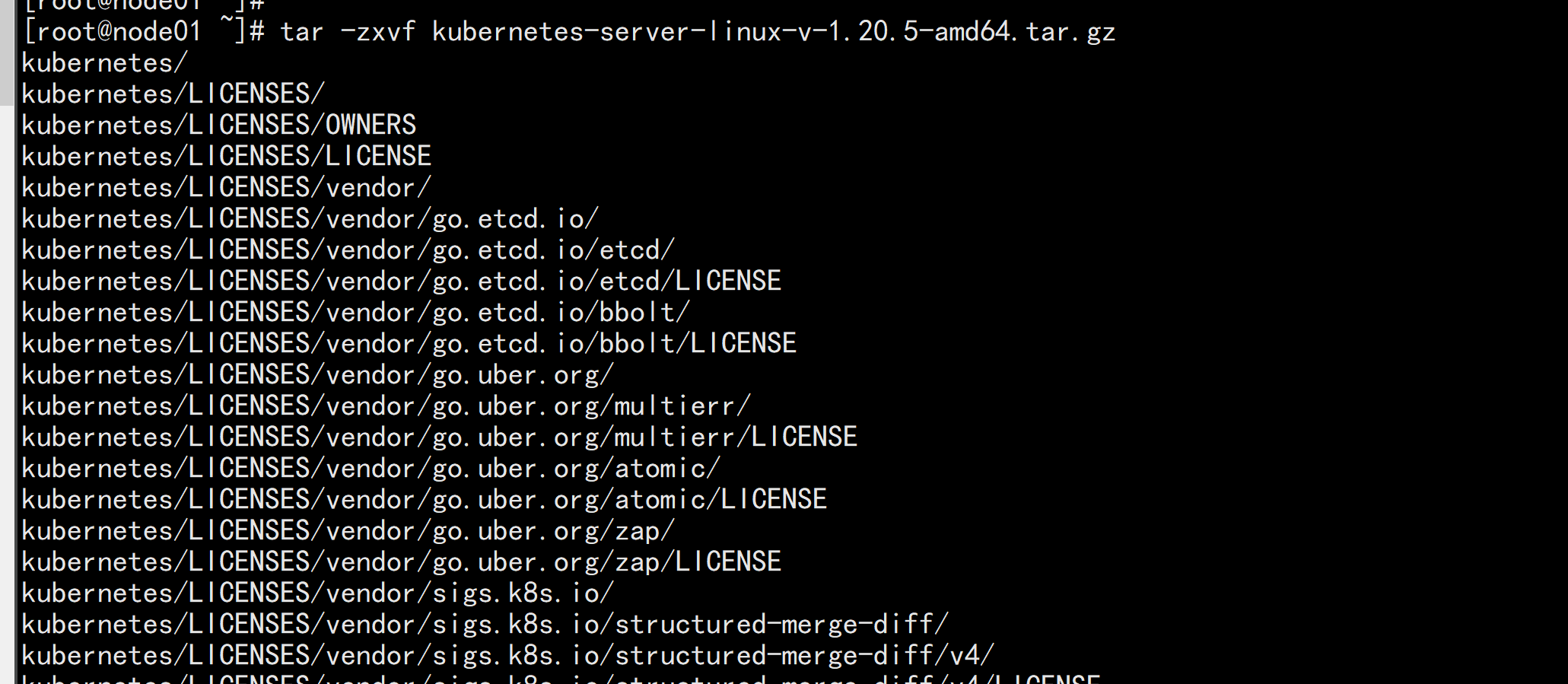

4.3 Extract the binary package

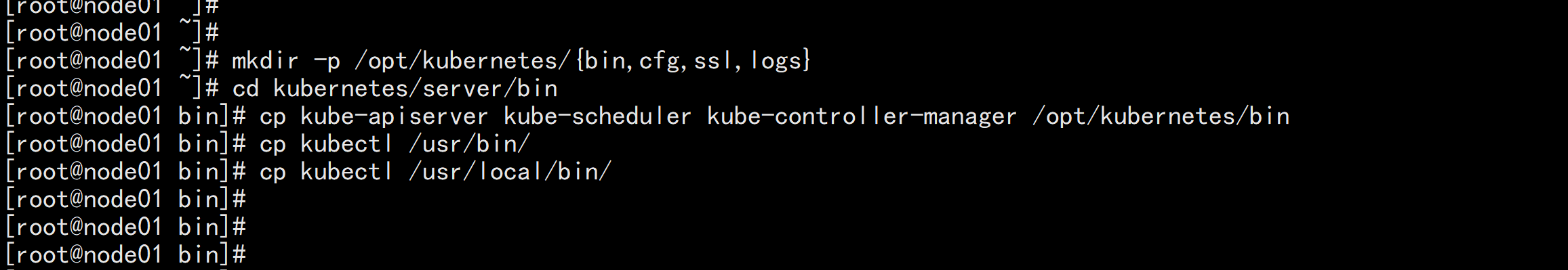

    mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
    tar zxvf kubernetes-server-linux-amd64.tar.gz
    cd kubernetes/server/bin
    cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin
    cp kubectl /usr/bin/
    cp kubectl /usr/local/bin/

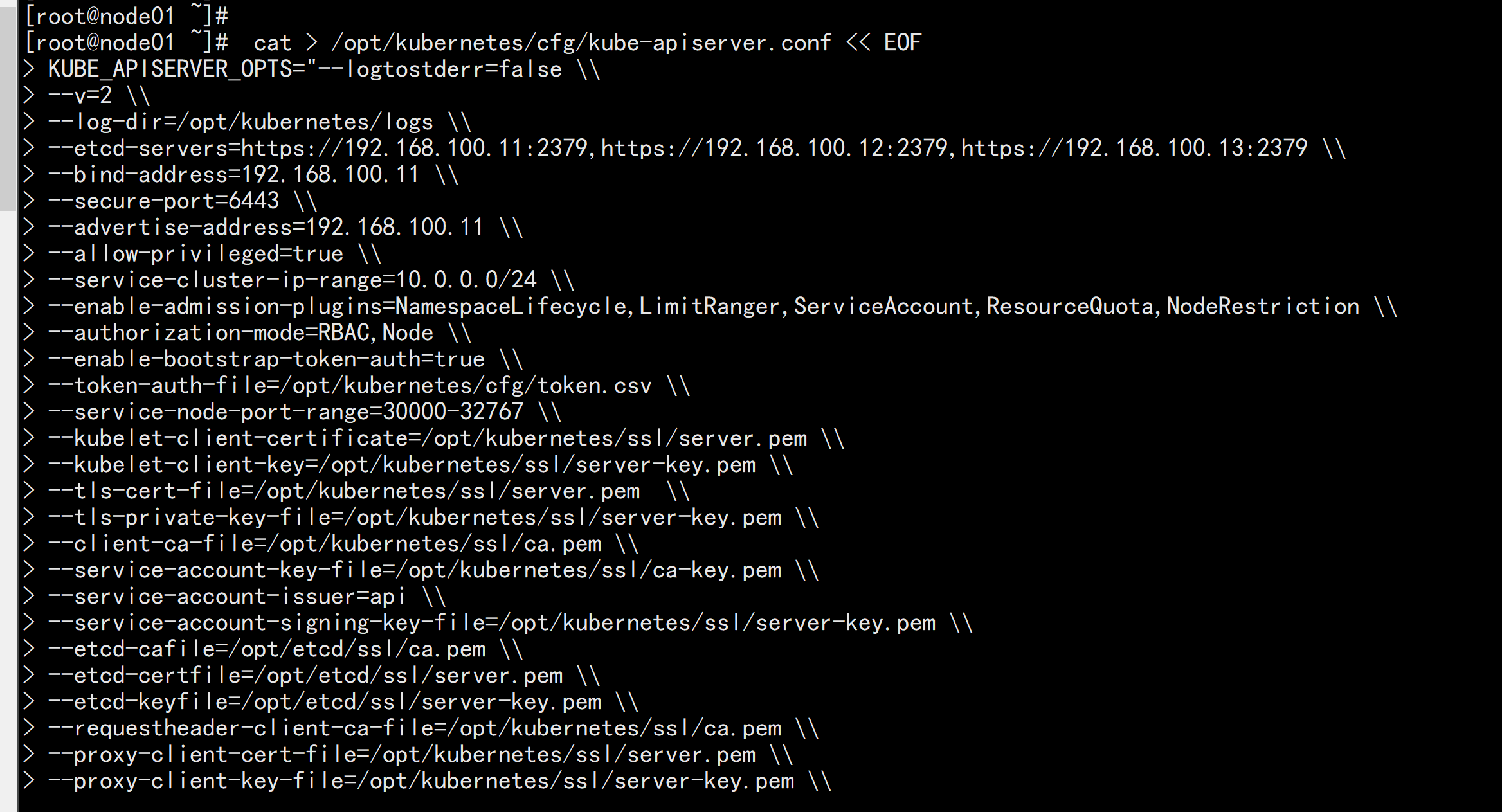

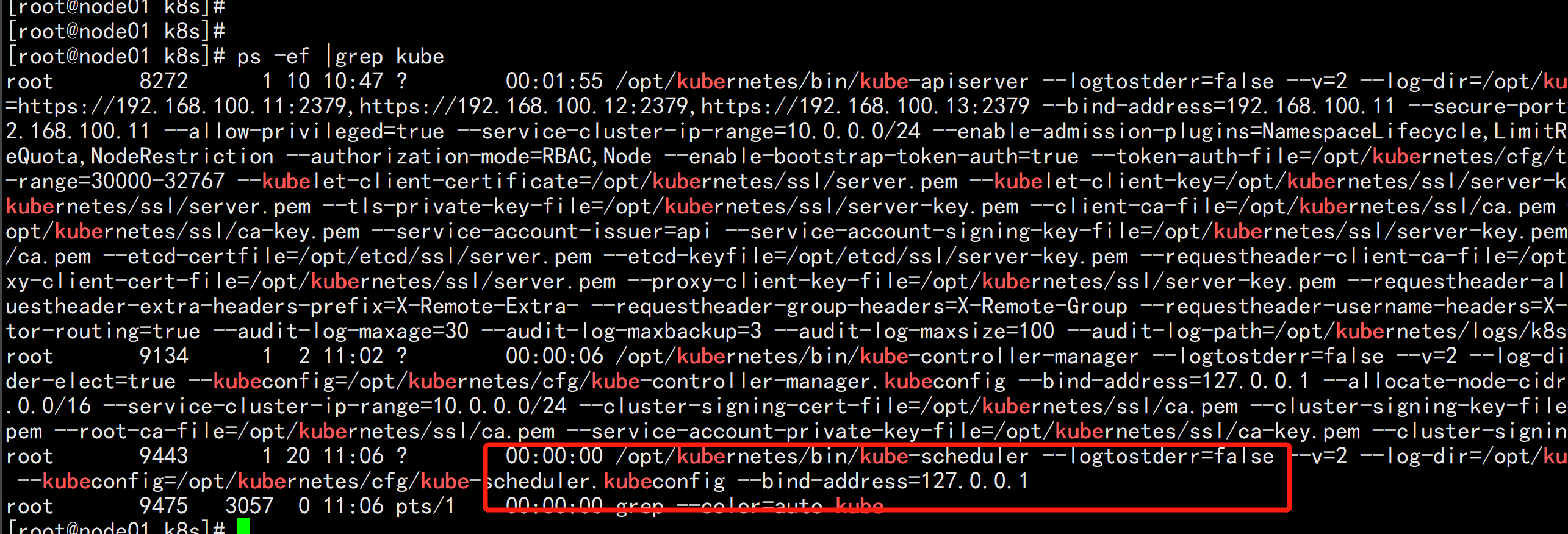

4.4 Deploy kube-apiserver

1. Create the configuration file

    cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
    KUBE_APISERVER_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --etcd-servers=https://192.168.100.11:2379,https://192.168.100.12:2379,https://192.168.100.13:2379 \\
    --bind-address=192.168.100.11 \\
    --secure-port=6443 \\
    --advertise-address=192.168.100.11 \\
    --allow-privileged=true \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
    --authorization-mode=RBAC,Node \\
    --enable-bootstrap-token-auth=true \\
    --token-auth-file=/opt/kubernetes/cfg/token.csv \\
    --service-node-port-range=30000-32767 \\
    --kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
    --kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \\
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --service-account-issuer=api \\
    --service-account-signing-key-file=/opt/kubernetes/ssl/server-key.pem \\
    --etcd-cafile=/opt/etcd/ssl/ca.pem \\
    --etcd-certfile=/opt/etcd/ssl/server.pem \\
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
    --requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --proxy-client-cert-file=/opt/kubernetes/ssl/server.pem \\
    --proxy-client-key-file=/opt/kubernetes/ssl/server-key.pem \\
    --requestheader-allowed-names=kubernetes \\
    --requestheader-extra-headers-prefix=X-Remote-Extra- \\
    --requestheader-group-headers=X-Remote-Group \\
    --requestheader-username-headers=X-Remote-User \\
    --enable-aggregator-routing=true \\
    --audit-log-maxage=30 \\
    --audit-log-maxbackup=3 \\
    --audit-log-maxsize=100 \\
    --audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
    EOF

Note: in the double backslash (\\) above, the first backslash is an escape character and the second is the line-continuation character. The escape is needed so that the heredoc (EOF) writes a literal backslash at the end of each line and the line breaks are preserved in the file.

• --logtostderr: log to stderr; set to false here so that logs go to files under --log-dir
• --v: log verbosity level
• --log-dir: log directory
• --etcd-servers: etcd cluster addresses
• --bind-address: listen address
• --secure-port: HTTPS port
• --advertise-address: address advertised to the cluster
• --allow-privileged: allow privileged containers
• --service-cluster-ip-range: virtual IP range for Services
• --enable-admission-plugins: admission control plugins
• --authorization-mode: authorization modes; enables RBAC and Node authorization
• --enable-bootstrap-token-auth: enable the TLS bootstrap mechanism
• --token-auth-file: bootstrap token file
• --service-node-port-range: default port range for NodePort Services
• --kubelet-client-xxx: client certificate the apiserver uses to access the kubelet
• --tls-xxx-file: apiserver HTTPS certificate
• --etcd-xxxfile: certificates for connecting to the etcd cluster
• --audit-log-xxx: audit log settings
• Parameters that must be added as of 1.20: --service-account-issuer, --service-account-signing-key-file
• Aggregation layer settings: --requestheader-client-ca-file, --proxy-client-cert-file, --proxy-client-key-file, --requestheader-allowed-names, --requestheader-extra-headers-prefix, --requestheader-group-headers, --requestheader-username-headers, --enable-aggregator-routing
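The escaping rules for an unquoted heredoc delimiter can be checked without touching any real file. This minimal sketch captures heredoc output in variables (the variable names are illustrative) and shows three cases used throughout this guide: `\\` at the end of a line, `\$VAR`, and a single `\` at the end of a line.

```shell
#!/usr/bin/env bash
# With an unquoted EOF delimiter the shell still processes backslashes and $variables.

# Case 1 and 2: "\\" yields one literal backslash; "\$" yields a literal dollar sign.
rendered=$(cat << EOF
KUBE_APISERVER_OPTS="--v=2 \\
--secure-port=6443"
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
EOF
)
printf '%s\n' "$rendered"

# Case 3: a single backslash before the newline is a line continuation,
# so the two lines are joined in the generated text.
joined=$(cat << EOF
ExecStart=/opt/etcd/bin/etcd \
--logger=zap
EOF
)
printf '%s\n' "$joined"
```

This is why the .conf files, whose values are read as multi-line quoted strings, use `\\`, while the etcd unit file can use a single `\` and ends up with its ExecStart on one line.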

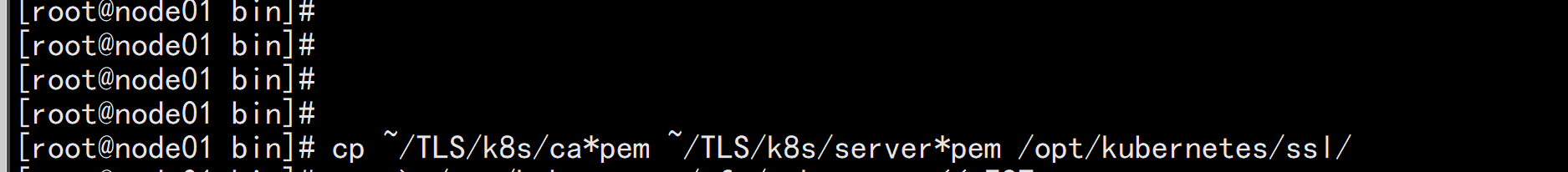

2. Copy the certificates generated earlier

Copy the certificates to the path referenced in the configuration file:

    cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

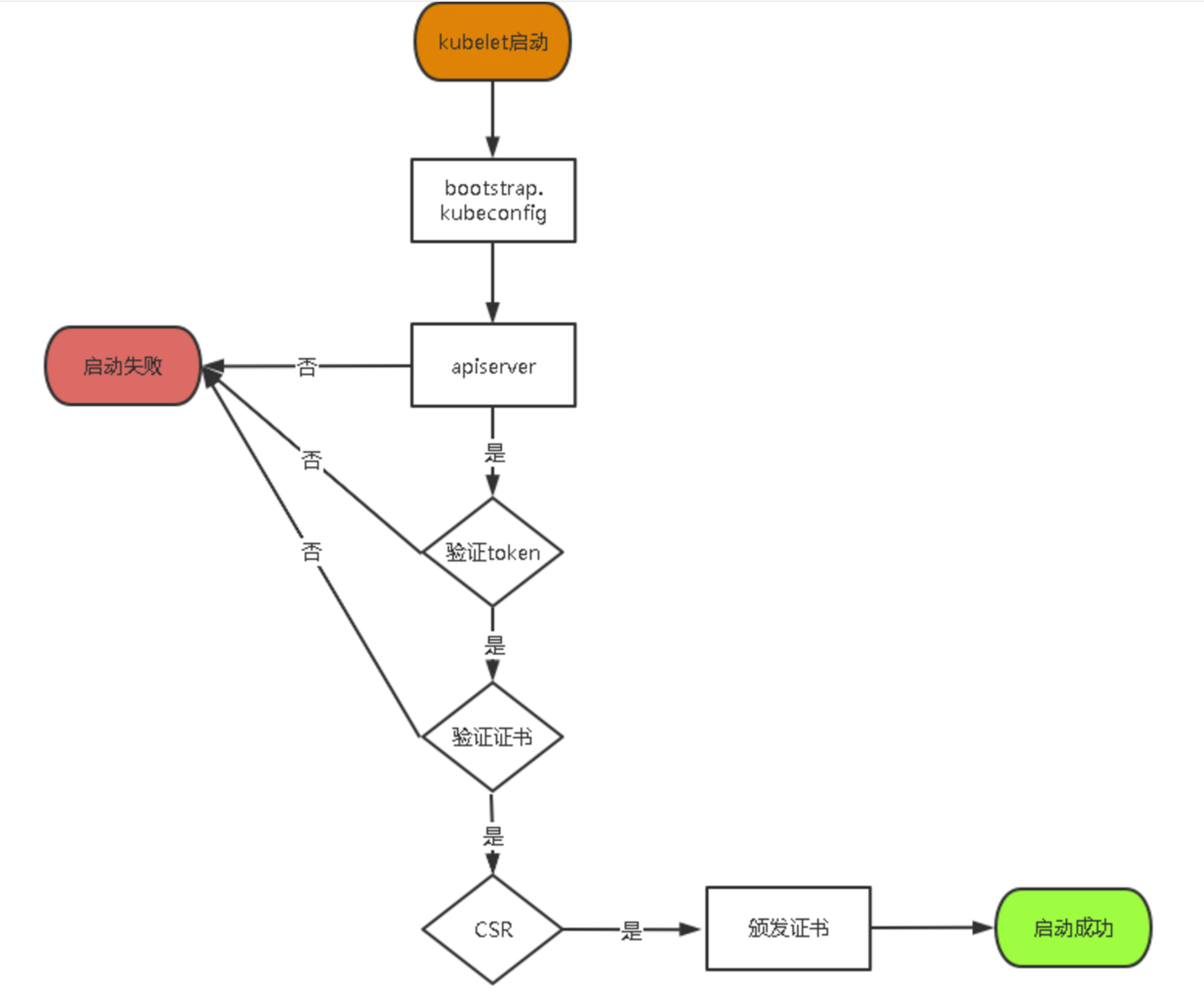

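3. Enable the TLS bootstrapping mechanism

TLS bootstrapping: once the Master apiserver has TLS authentication enabled, the kubelet and kube-proxy on each Node must use valid certificates signed by the CA to communicate with kube-apiserver. When there are many Nodes, issuing these client certificates by hand is a lot of work and also makes scaling the cluster more complex. To simplify the process, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: the kubelet requests a certificate from the apiserver as a low-privilege user, and the kubelet certificate is signed dynamically through the apiserver. This approach is strongly recommended on Nodes. It is currently used mainly for the kubelet; kube-proxy still uses a certificate that we issue centrally.

TLS bootstrapping workflow:

Create the token file referenced in the configuration above:

    cat > /opt/kubernetes/cfg/token.csv << EOF
    c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
    EOF

Format: token, user name, UID, user group.

You can also generate your own token and substitute it:

    head -c 16 /dev/urandom | od -An -t x | tr -d ' '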
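As a sketch of how a freshly generated token could be turned into a token.csv record, the helper names below (`new_bootstrap_token`, `token_csv_line`) are hypothetical; the pipeline is the one from the guide, with the trailing newline also stripped.

```shell
#!/usr/bin/env bash
# Generate a random token: 16 random bytes rendered as 32 hex characters.
new_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Print one token.csv record in the format: token,user,UID,"group"
token_csv_line() {
  local token=$1
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$token"
}

TOKEN=$(new_bootstrap_token)
token_csv_line "$TOKEN"
```

If the token is replaced, the same value has to be used later in the kubelet bootstrap kubeconfig.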

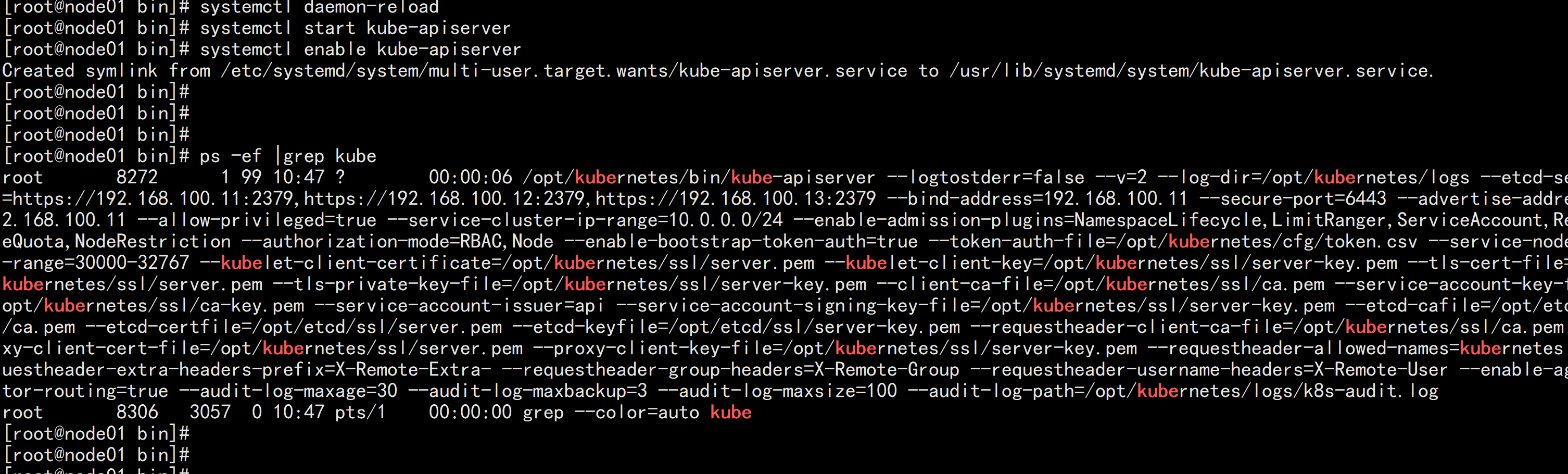

4. Manage kube-apiserver with systemd

    cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
    ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

5. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kube-apiserver
    systemctl enable kube-apiserver

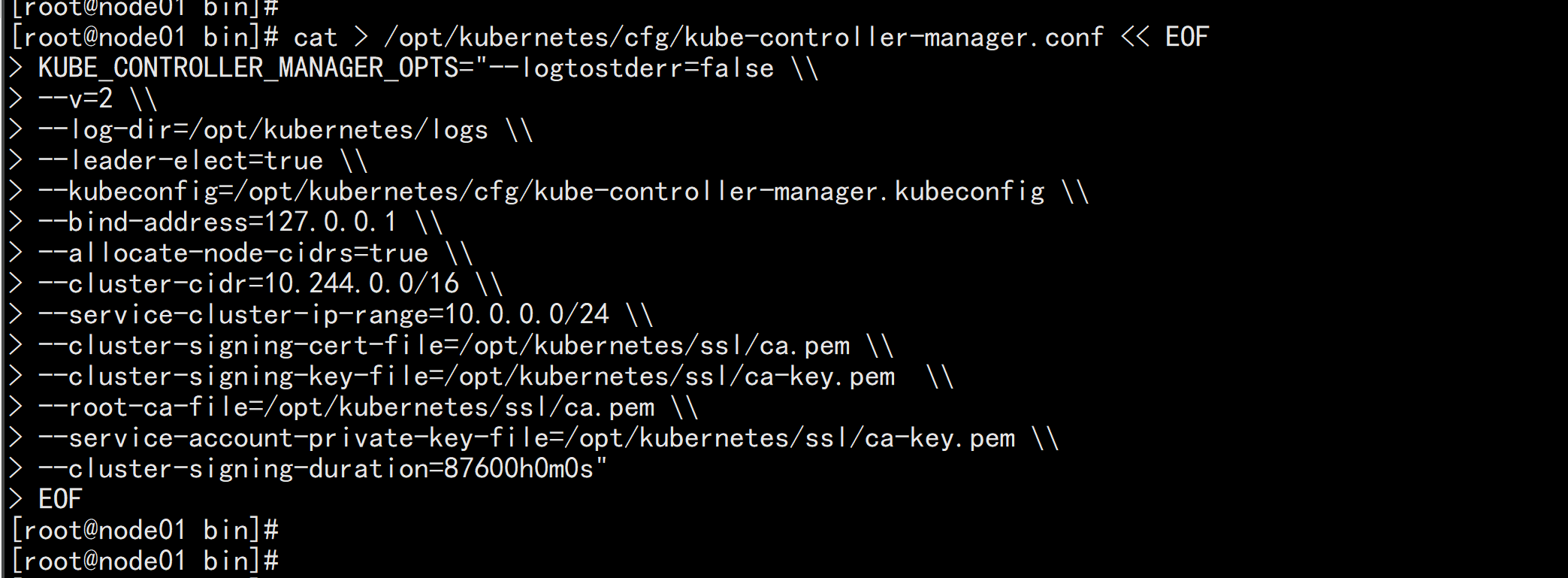

4.5 Deploy kube-controller-manager

1. Create the configuration file

    cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
    KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --leader-elect=true \\
    --kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubeconfig \\
    --bind-address=127.0.0.1 \\
    --allocate-node-cidrs=true \\
    --cluster-cidr=10.244.0.0/16 \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
    --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --root-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --cluster-signing-duration=87600h0m0s"
    EOF

• --kubeconfig: configuration file for connecting to the apiserver
• --leader-elect: automatic leader election when several instances of the component are running (HA)
• --cluster-signing-cert-file / --cluster-signing-key-file: the CA that automatically issues kubelet certificates; must be the same CA the apiserver uses

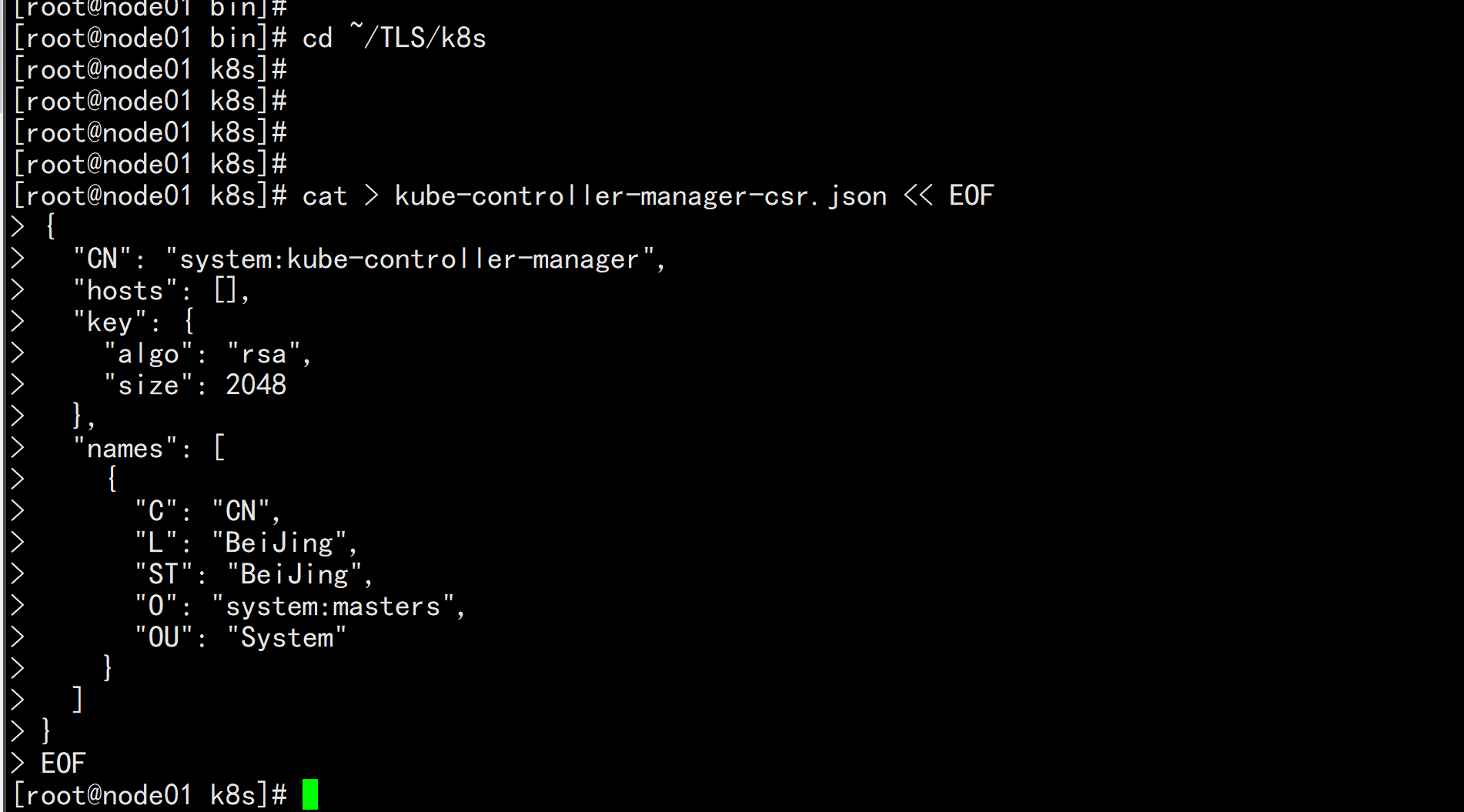

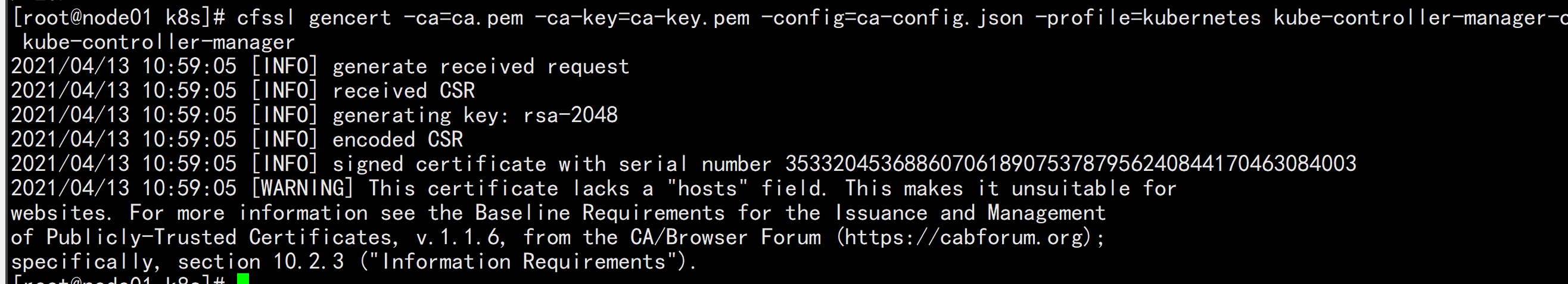

2. Generate the kubeconfig file

Generate the kube-controller-manager certificate:

    # Switch to the working directory
    cd ~/TLS/k8s

    # Create the certificate signing request file
    cat > kube-controller-manager-csr.json << EOF
    {
      "CN": "system:kube-controller-manager",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }
    EOF

    # Generate the certificate
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

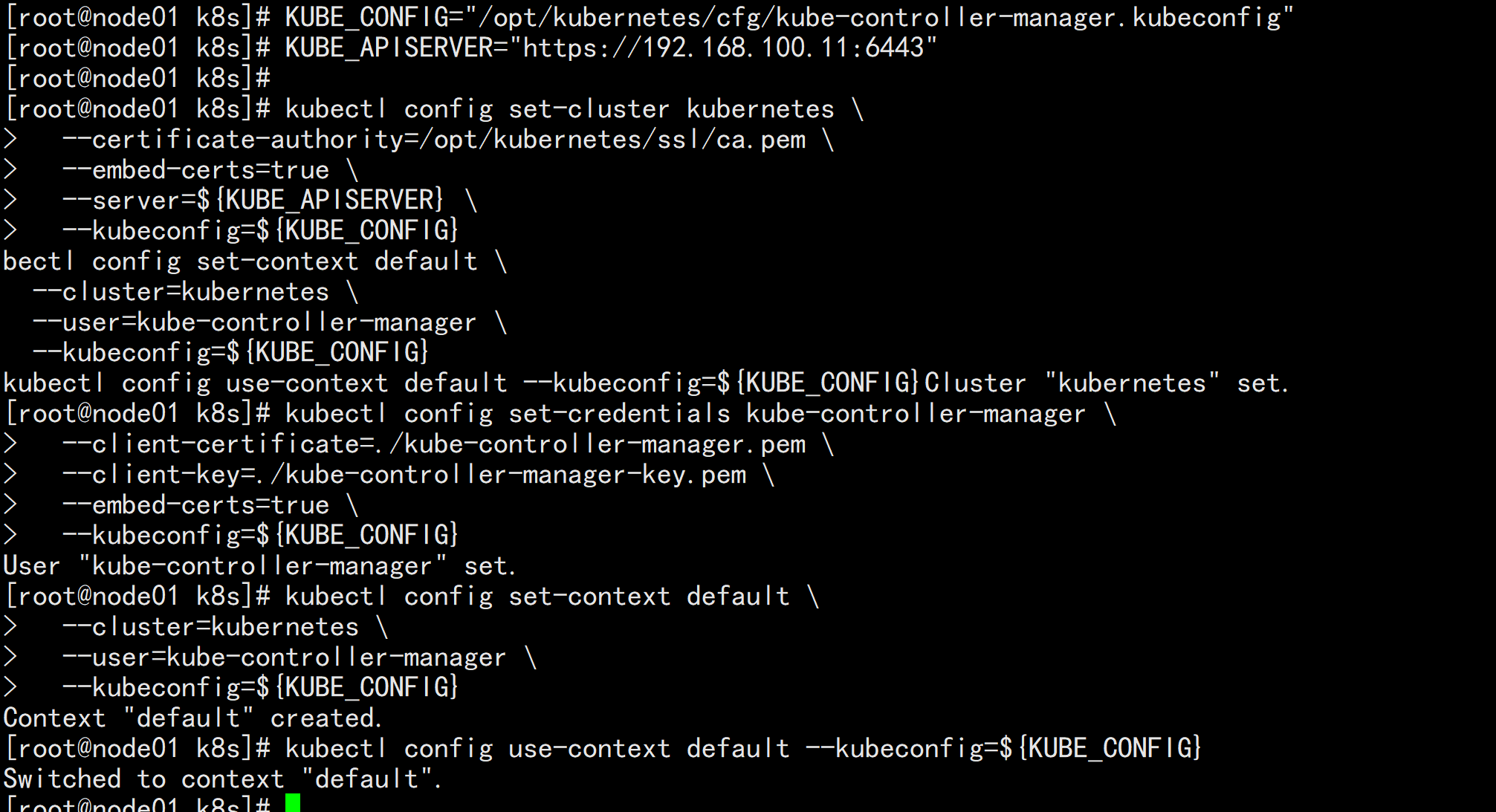

Generate the kubeconfig file (these are shell commands; run them directly in the terminal):

    KUBE_CONFIG="/opt/kubernetes/cfg/kube-controller-manager.kubeconfig"
    KUBE_APISERVER="https://192.168.100.11:6443"

    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials kube-controller-manager \
      --client-certificate=./kube-controller-manager.pem \
      --client-key=./kube-controller-manager-key.pem \
      --embed-certs=true \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-controller-manager \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
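The same four `kubectl config` calls recur in this guide for kube-controller-manager, kube-scheduler, the admin user, and kube-proxy, differing only in the user name, certificate files, and output path. A possible wrapper is sketched below; `make_kubeconfig` and the `KUBECTL` and `CA_FILE` variables are hypothetical names introduced here, and the example only prints the commands by substituting `echo` for `kubectl`.

```shell
#!/usr/bin/env bash
# Hypothetical helper wrapping the four `kubectl config` calls used in this guide.
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the commands.
KUBECTL=${KUBECTL:-kubectl}
KUBE_APISERVER=${KUBE_APISERVER:-https://192.168.100.11:6443}
CA_FILE=${CA_FILE:-/opt/kubernetes/ssl/ca.pem}

# usage: make_kubeconfig <user> <client-cert> <client-key> <output-kubeconfig>
make_kubeconfig() {
  local user=$1 cert=$2 key=$3 out=$4
  "$KUBECTL" config set-cluster kubernetes \
    --certificate-authority="$CA_FILE" \
    --embed-certs=true \
    --server="$KUBE_APISERVER" \
    --kubeconfig="$out" &&
  "$KUBECTL" config set-credentials "$user" \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$out" &&
  "$KUBECTL" config set-context default \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$out" &&
  "$KUBECTL" config use-context default --kubeconfig="$out"
}

# Dry run: print the commands that would be executed for kube-scheduler.
KUBECTL=echo make_kubeconfig kube-scheduler ./kube-scheduler.pem ./kube-scheduler-key.pem \
  /opt/kubernetes/cfg/kube-scheduler.kubeconfig
```

Without the `KUBECTL=echo` prefix the function would run the real commands, so the certificate files must already exist in the current directory.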

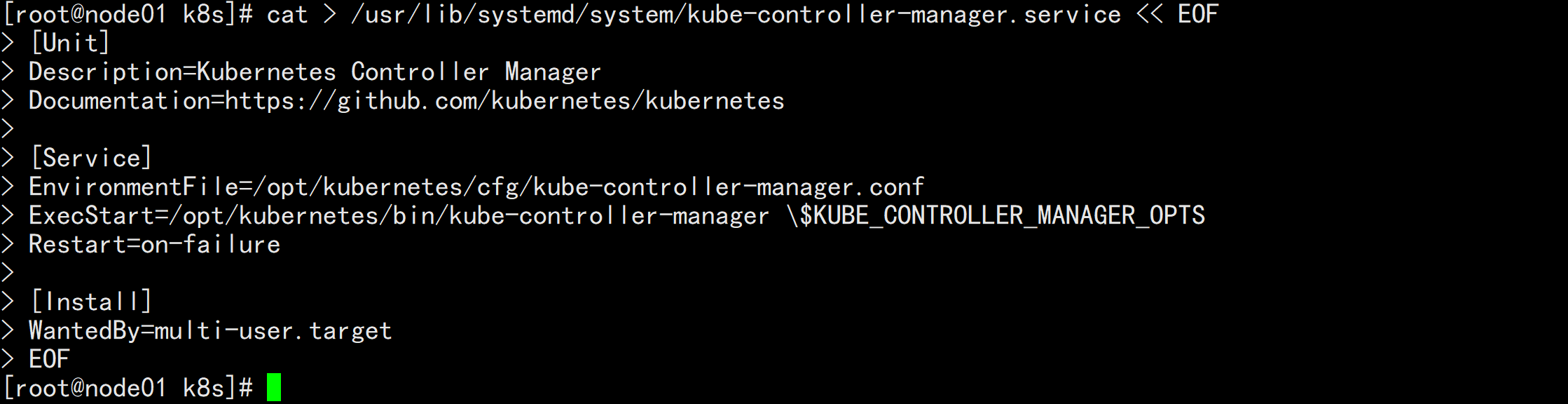

3. Manage kube-controller-manager with systemd

    cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
    ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

4. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kube-controller-manager
    systemctl enable kube-controller-manager

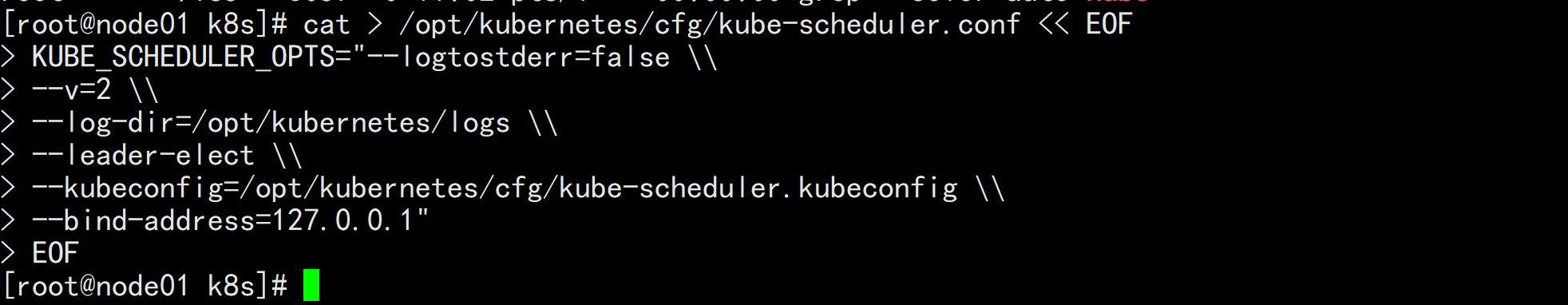

4.6 Deploy kube-scheduler

1. Create the configuration file

    cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
    KUBE_SCHEDULER_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --leader-elect \\
    --kubeconfig=/opt/kubernetes/cfg/kube-scheduler.kubeconfig \\
    --bind-address=127.0.0.1"
    EOF

• --kubeconfig: configuration file for connecting to the apiserver
• --leader-elect: automatic leader election when several instances of the component are running (HA)

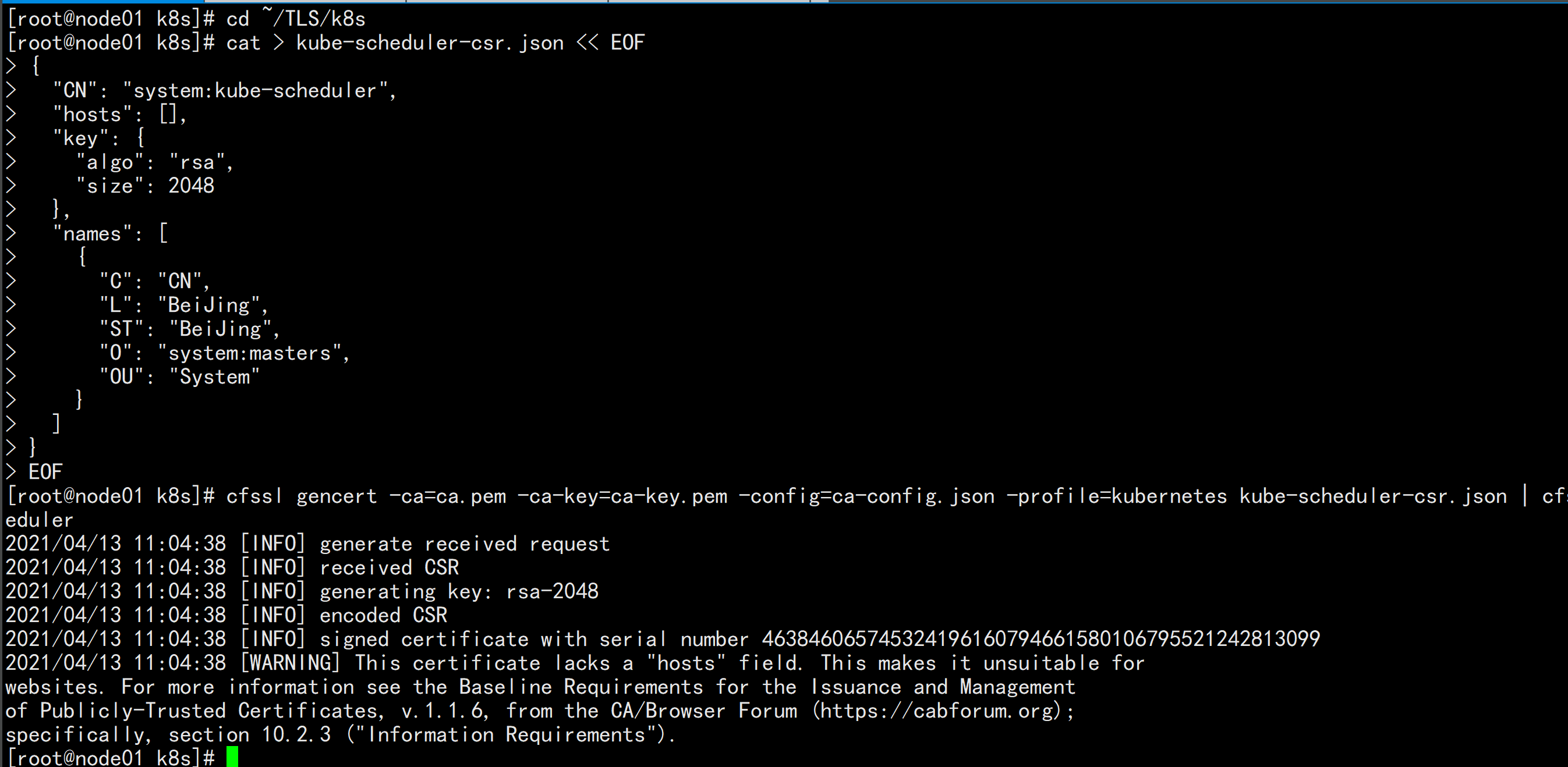

2. Generate the kubeconfig file

Generate the kube-scheduler certificate:

    # Switch to the working directory
    cd ~/TLS/k8s

    # Create the certificate signing request file
    cat > kube-scheduler-csr.json << EOF
    {
      "CN": "system:kube-scheduler",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }
    EOF

    # Generate the certificate
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

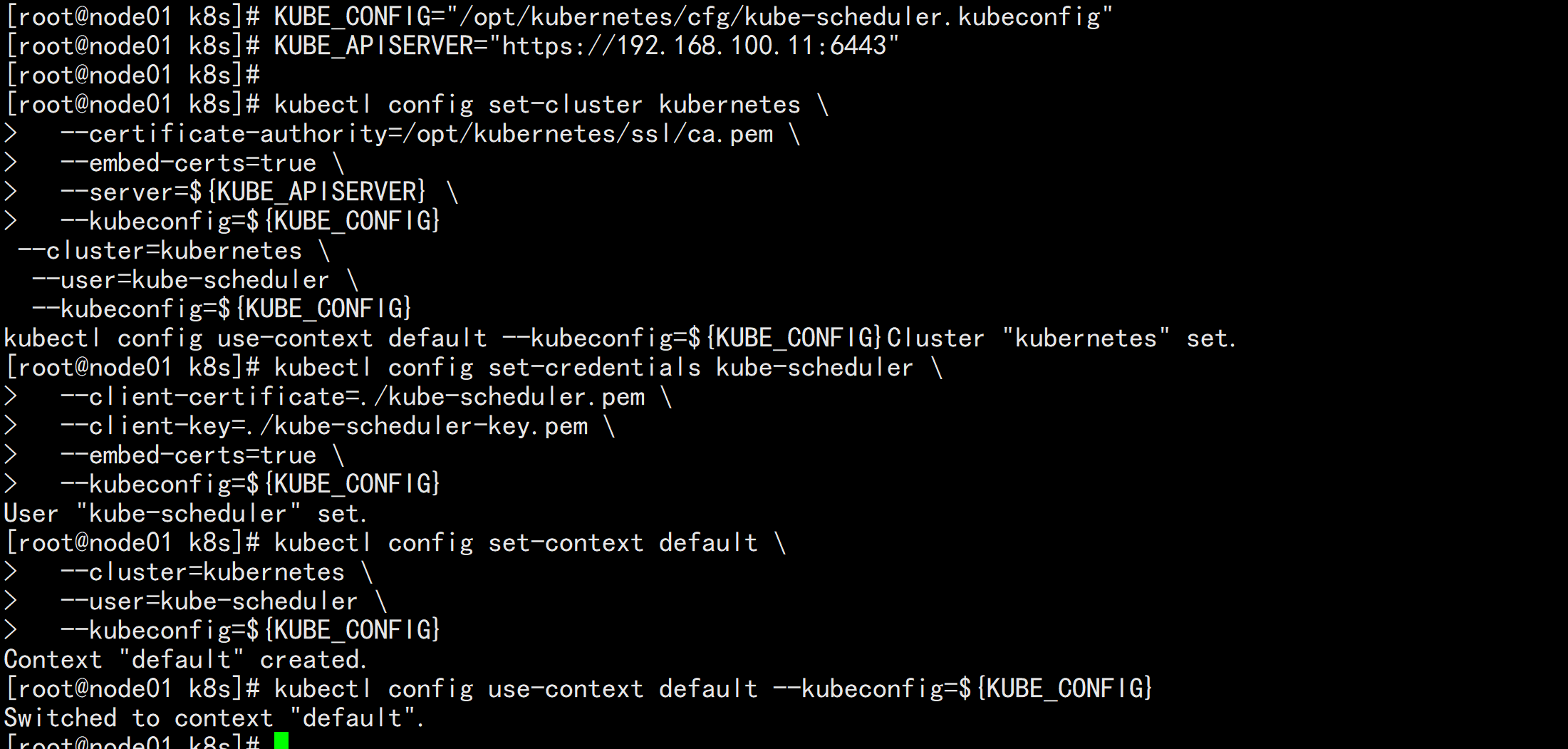

Generate the kubeconfig file (these are shell commands; run them directly in the terminal):

    KUBE_CONFIG="/opt/kubernetes/cfg/kube-scheduler.kubeconfig"
    KUBE_APISERVER="https://192.168.100.11:6443"

    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials kube-scheduler \
      --client-certificate=./kube-scheduler.pem \
      --client-key=./kube-scheduler-key.pem \
      --embed-certs=true \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-scheduler \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

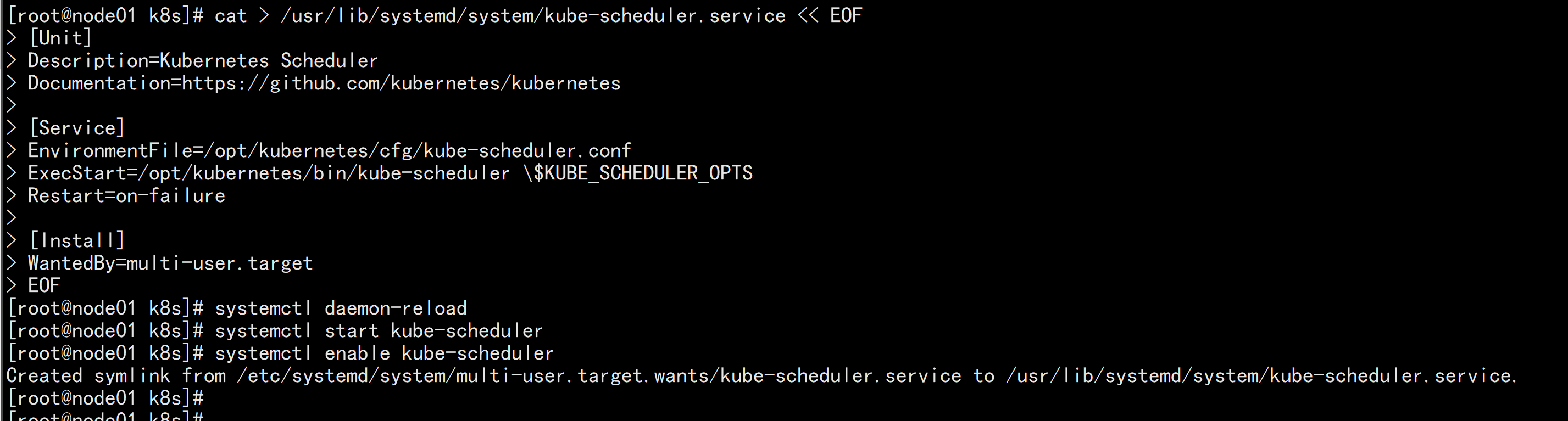

3. Manage kube-scheduler with systemd

    cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
    ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

4. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kube-scheduler
    systemctl enable kube-scheduler

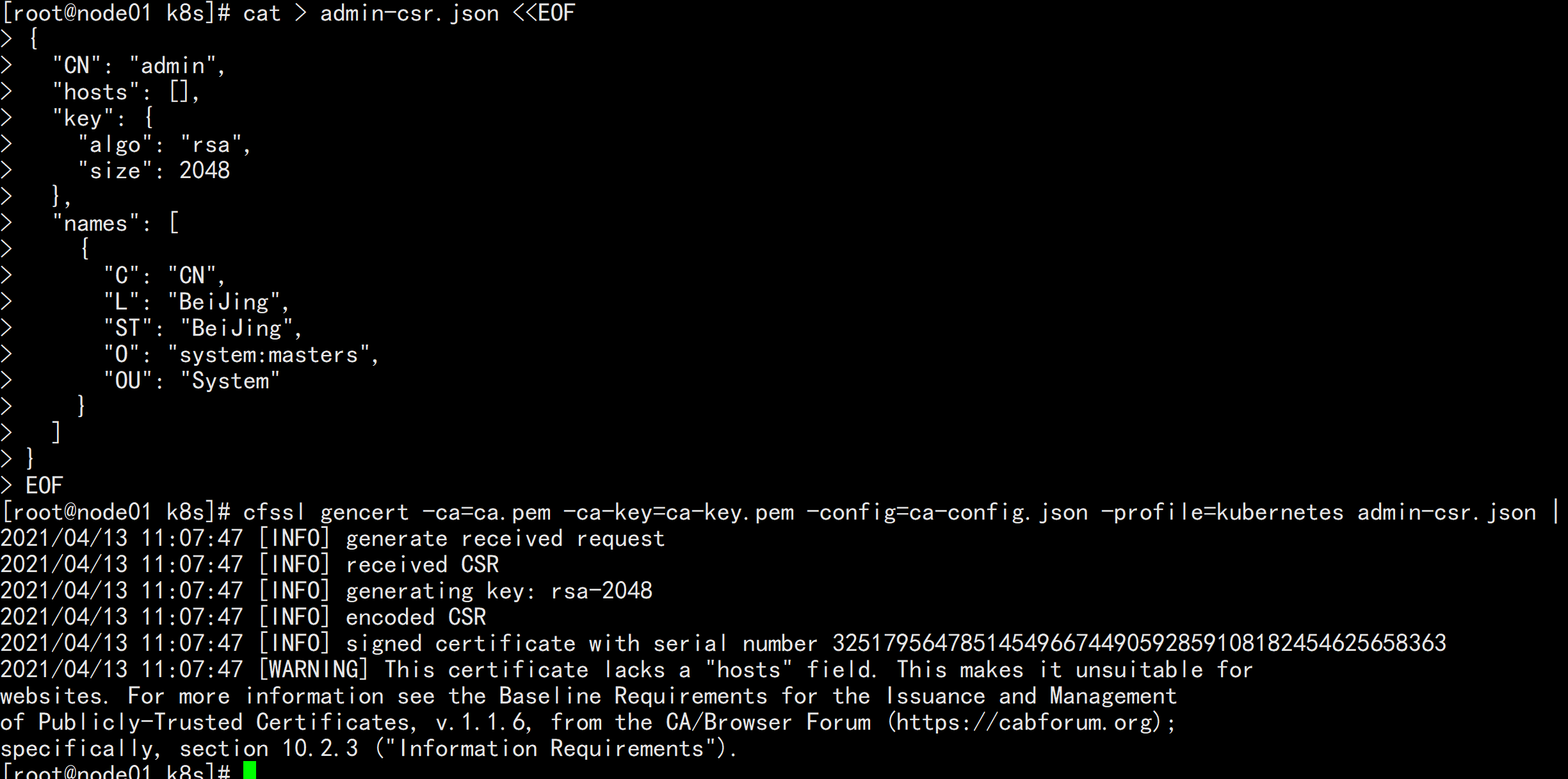

5. Check the cluster status

Generate the certificate that kubectl uses to connect to the cluster:

    cat > admin-csr.json << EOF
    {
      "CN": "admin",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }
    EOF

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

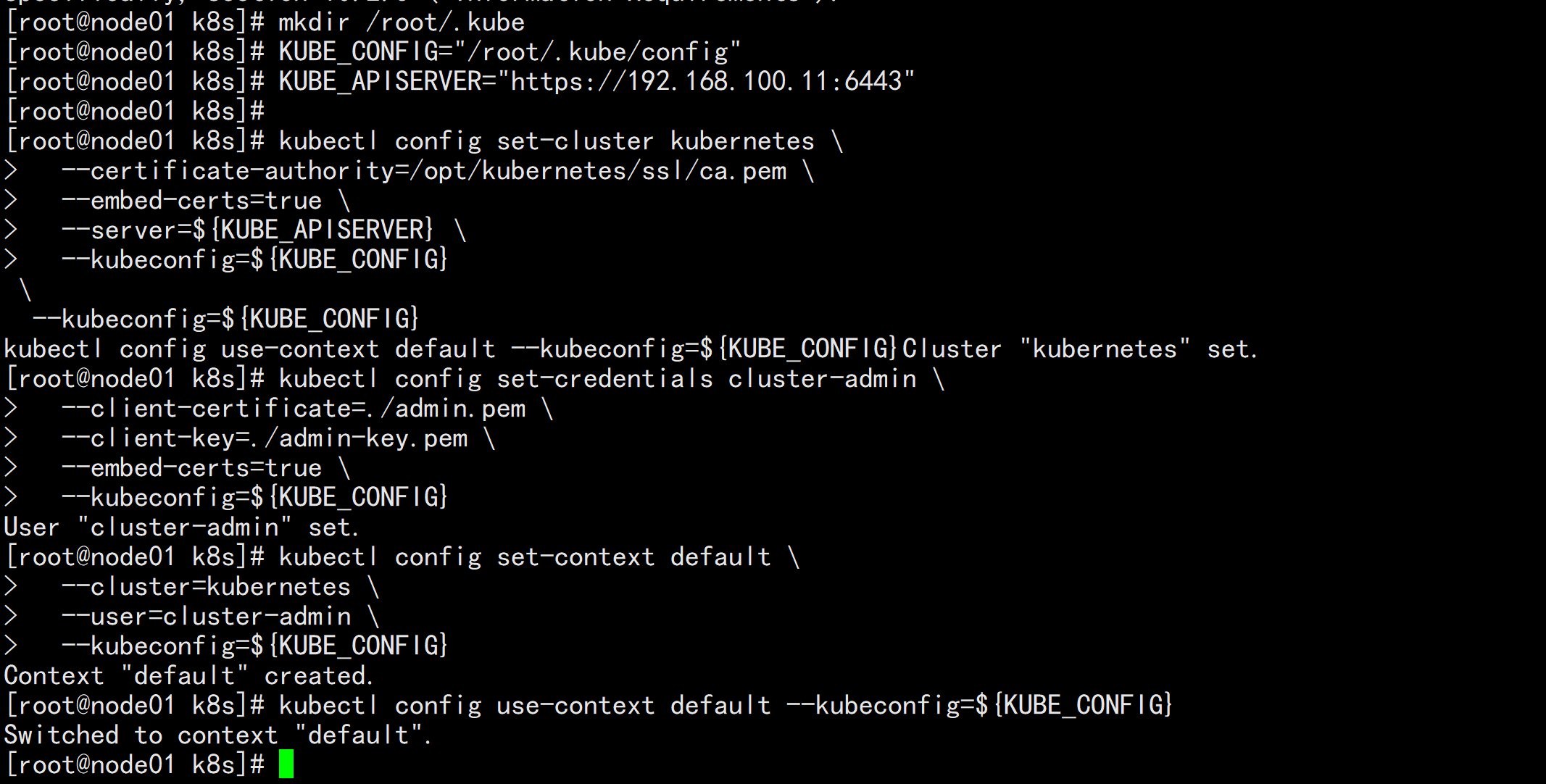

Generate the kubeconfig file:

    mkdir /root/.kube

    KUBE_CONFIG="/root/.kube/config"
    KUBE_APISERVER="https://192.168.100.11:6443"

    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials cluster-admin \
      --client-certificate=./admin.pem \
      --client-key=./admin-key.pem \
      --embed-certs=true \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=cluster-admin \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

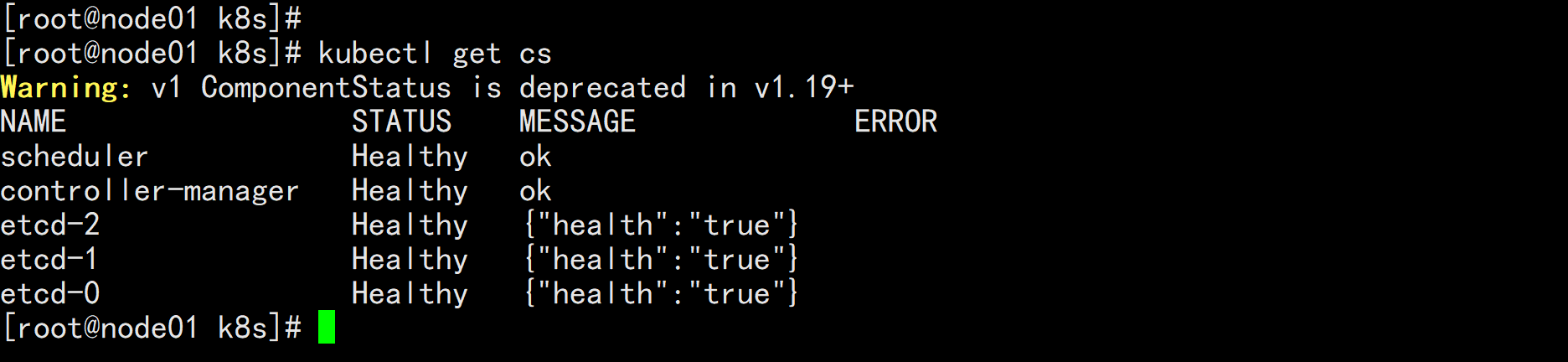

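Use kubectl to check the status of the cluster components:

    kubectl get cs

If the output matches the screenshot, the Master node components are running normally.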

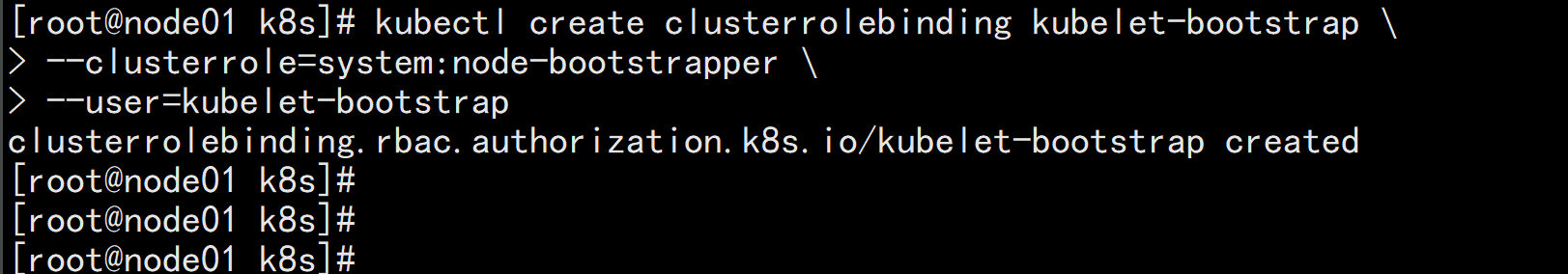

6. Authorize the kubelet-bootstrap user to request certificates

    kubectl create clusterrolebinding kubelet-bootstrap \
      --clusterrole=system:node-bootstrapper \
      --user=kubelet-bootstrap

V. Deploy the Worker Nodes

5.1 Create the working directory and copy the binaries

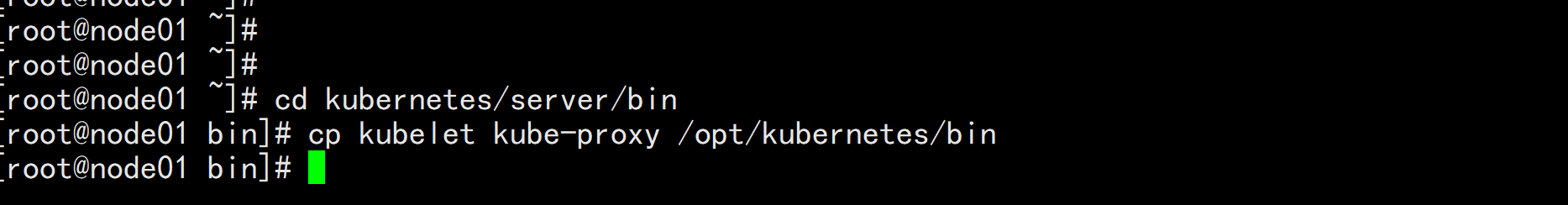

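These steps are still performed on the Master Node, which therefore also acts as a Worker Node.

Create the working directory on every worker node:

    mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

Copy the binaries from the extracted package on the master node:

    cd kubernetes/server/bin
    cp kubelet kube-proxy /opt/kubernetes/bin  # local copy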

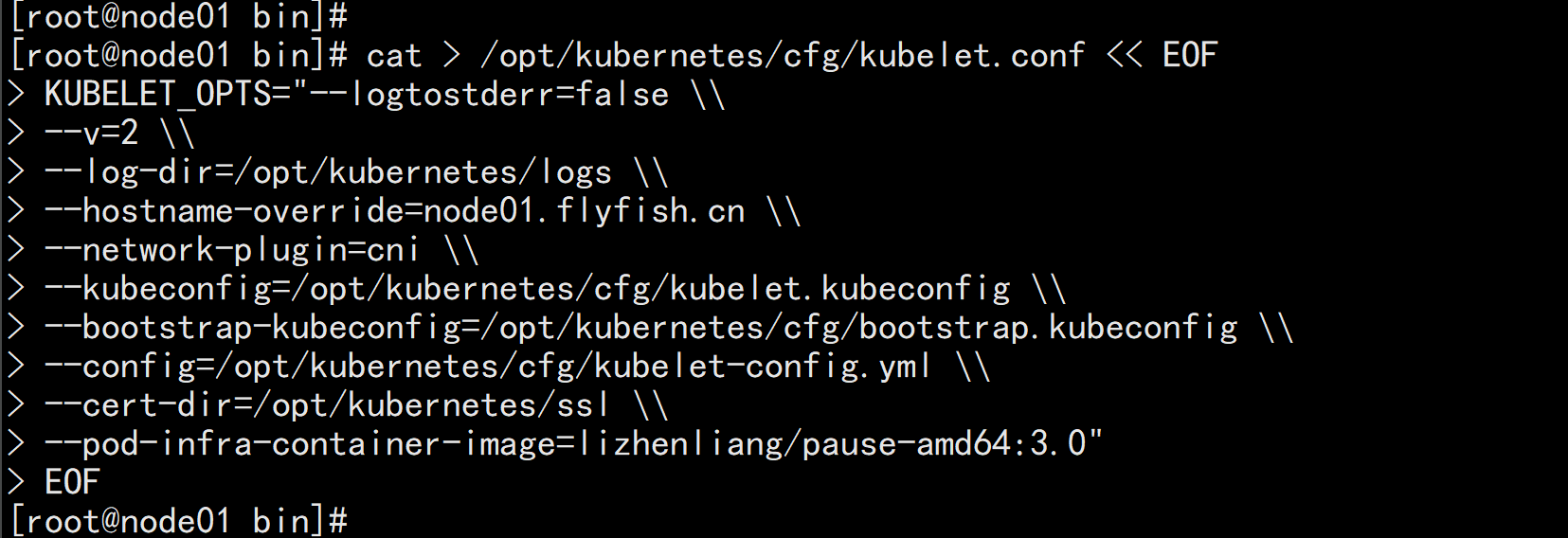

5.2 Deploy kubelet

1. Create the configuration file

    cat > /opt/kubernetes/cfg/kubelet.conf << EOF
    KUBELET_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --hostname-override=node01.flyfish.cn \\
    --network-plugin=cni \\
    --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
    --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
    --config=/opt/kubernetes/cfg/kubelet-config.yml \\
    --cert-dir=/opt/kubernetes/ssl \\
    --pod-infra-container-image=lizhenliang/pause-amd64:3.0"
    EOF

• --hostname-override: display name of the node, unique within the cluster
• --network-plugin: enable CNI
• --kubeconfig: a path that does not exist yet; the file is generated automatically and later used to connect to the apiserver
• --bootstrap-kubeconfig: used on first start to request a certificate from the apiserver
• --config: configuration parameter file
• --cert-dir: directory where kubelet certificates are generated
• --pod-infra-container-image: image of the container that manages the Pod network

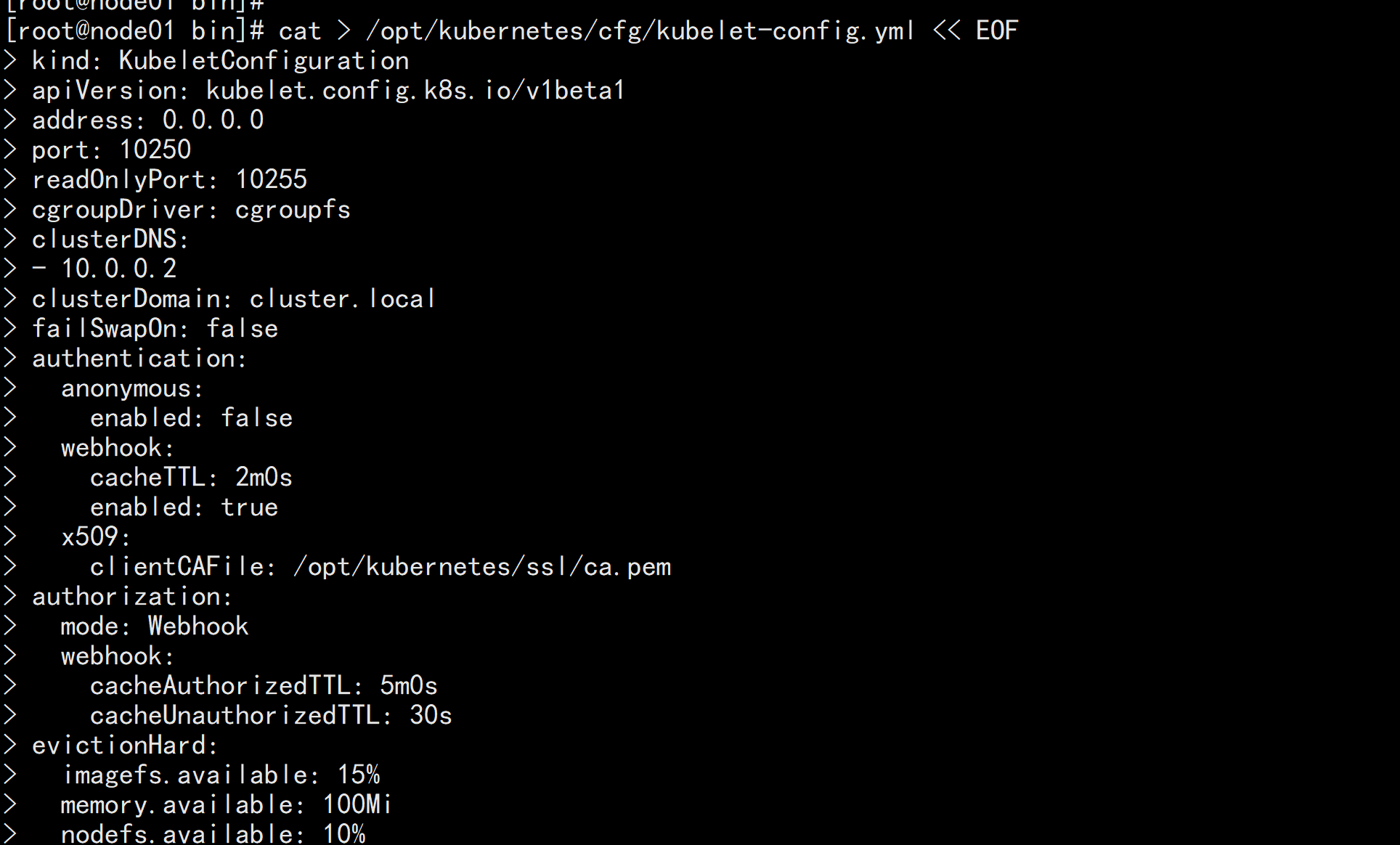

2. Create the configuration parameter file

    cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    address: 0.0.0.0
    port: 10250
    readOnlyPort: 10255
    cgroupDriver: cgroupfs
    clusterDNS:
    - 10.0.0.2
    clusterDomain: cluster.local
    failSwapOn: false
    authentication:
      anonymous:
        enabled: false
      webhook:
        cacheTTL: 2m0s
        enabled: true
      x509:
        clientCAFile: /opt/kubernetes/ssl/ca.pem
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 5m0s
        cacheUnauthorizedTTL: 30s
    evictionHard:
      imagefs.available: 15%
      memory.available: 100Mi
      nodefs.available: 10%
      nodefs.inodesFree: 5%
    maxOpenFiles: 1000000
    maxPods: 110
    EOF
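The clusterDNS address (10.0.0.2) and the first address in the apiserver certificate (10.0.0.1) both have to fall inside the Service range given to kube-apiserver (--service-cluster-ip-range=10.0.0.0/24), while Pod addresses come from a different range. A quick way to sanity-check such values is sketched below; `ip_to_int` and `in_cidr` are hypothetical helpers written for this illustration and handle IPv4 only.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1   # split the address into its four octets
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return success when IPv4 address $1 lies inside CIDR block $2.
in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

SERVICE_CIDR="10.0.0.0/24"   # --service-cluster-ip-range from this guide
for ip in 10.0.0.1 10.0.0.2 10.244.0.10; do
  if in_cidr "$ip" "$SERVICE_CIDR"; then
    echo "$ip is inside $SERVICE_CIDR"
  else
    echo "$ip is outside $SERVICE_CIDR"
  fi
done
```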

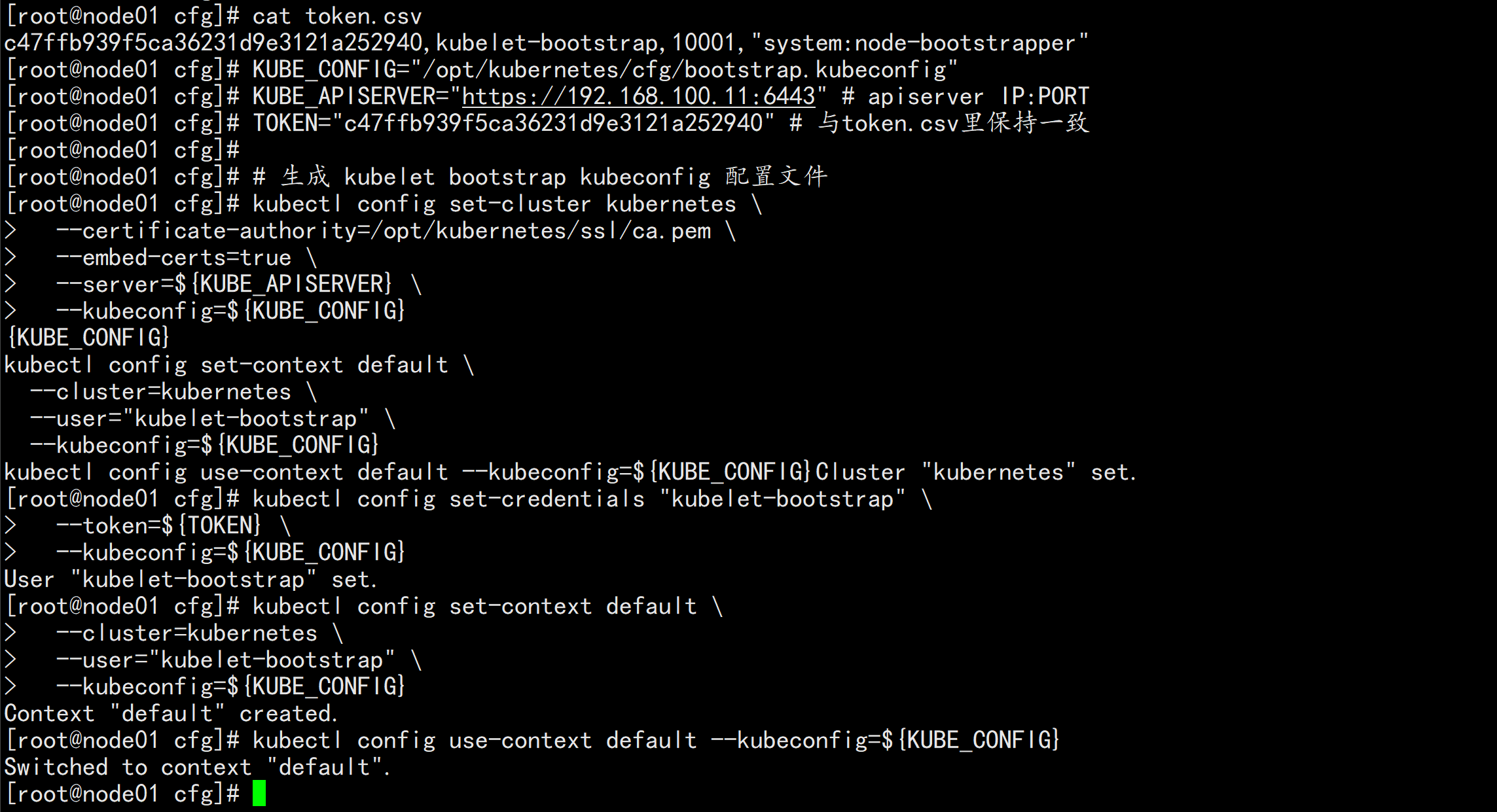

3. Generate the bootstrap kubeconfig file that the kubelet uses when it first joins the cluster

    KUBE_CONFIG="/opt/kubernetes/cfg/bootstrap.kubeconfig"
    KUBE_APISERVER="https://192.168.100.11:6443"  # apiserver IP:PORT
    TOKEN="c47ffb939f5ca36231d9e3121a252940"  # must match token.csv

    # Generate the kubelet bootstrap kubeconfig file
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials "kubelet-bootstrap" \
      --token=${TOKEN} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user="kubelet-bootstrap" \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

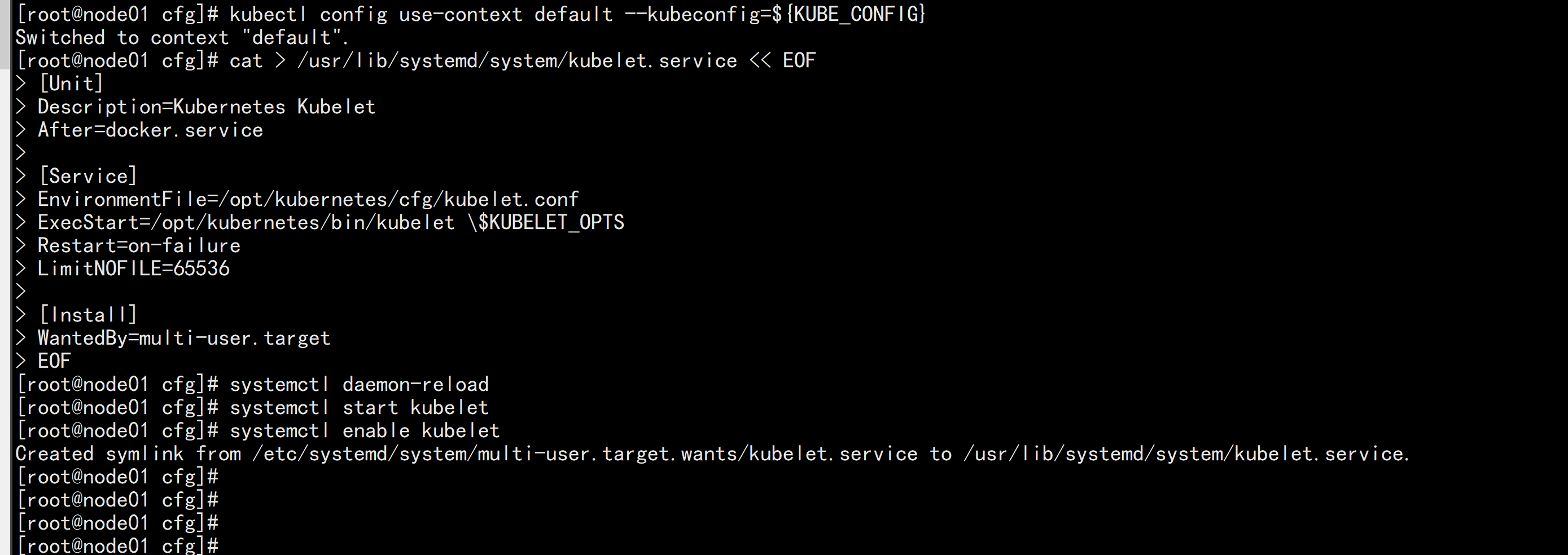

4. Manage kubelet with systemd

    cat > /usr/lib/systemd/system/kubelet.service << EOF
    [Unit]
    Description=Kubernetes Kubelet
    After=docker.service

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
    ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

5. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kubelet
    systemctl enable kubelet

5.3 Approve the kubelet certificate request and join the cluster

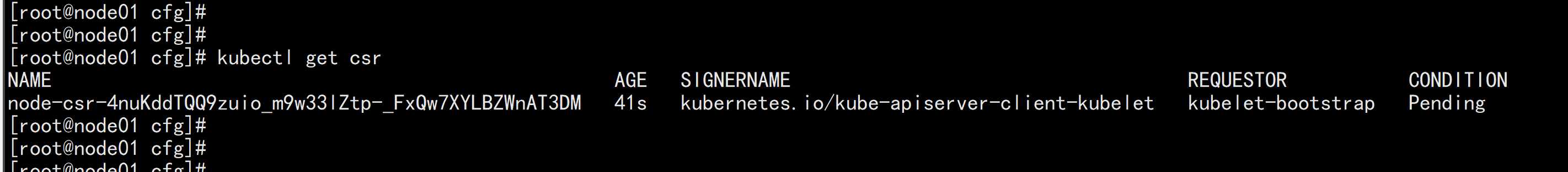

    # List the kubelet certificate requests
    kubectl get csr

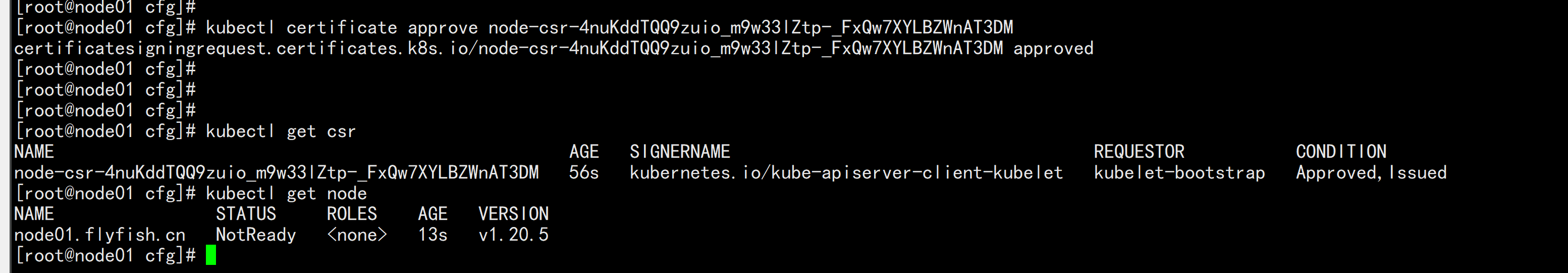

    # Approve the request (use the CSR name shown by the previous command)
    kubectl certificate approve node-csr-4nuKddTQQ9zuio_m9w33lZtp-_FxQw7XYLBZWnAT3DM

    # List the nodes
    kubectl get node

Note: because the network plugin has not been deployed yet, the node is reported as NotReady.

5.4 Deploy kube-proxy

1. Create the configuration file

    cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
    KUBE_PROXY_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --config=/opt/kubernetes/cfg/kube-proxy-config.yml"
    EOF

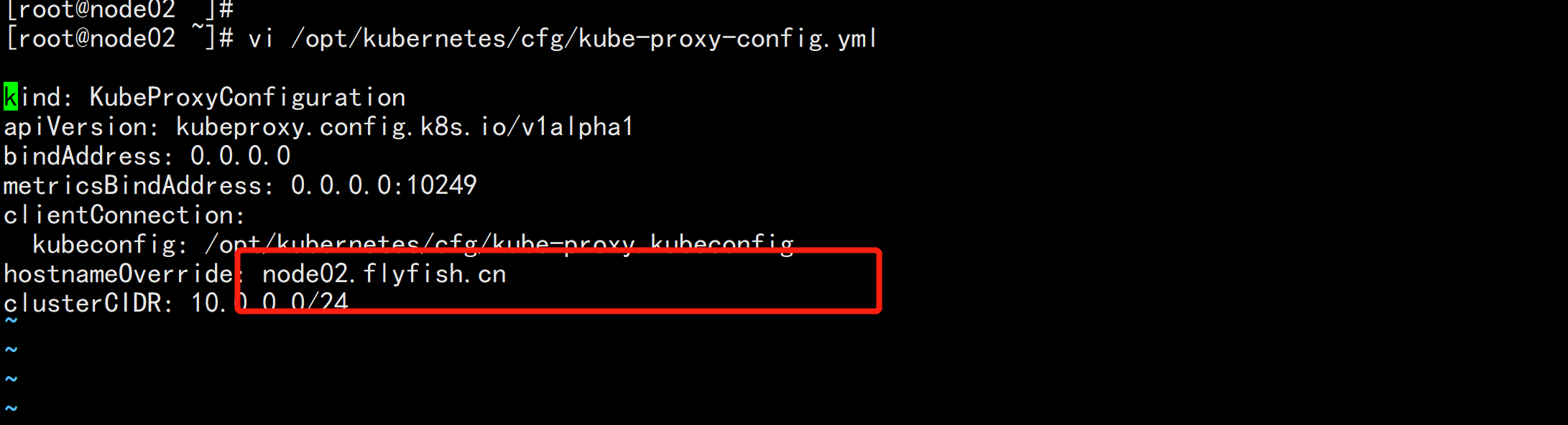

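2. Create the configuration parameter file

    cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
    kind: KubeProxyConfiguration
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 0.0.0.0
    metricsBindAddress: 0.0.0.0:10249
    clientConnection:
      kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
    hostnameOverride: node01.flyfish.cn
    clusterCIDR: 10.0.0.0/24
    EOF

Note: clusterCIDR in the kube-proxy configuration describes the Pod address range. The value above is the one used in this walkthrough, whereas kube-controller-manager was configured earlier with --cluster-cidr=10.244.0.0/16.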

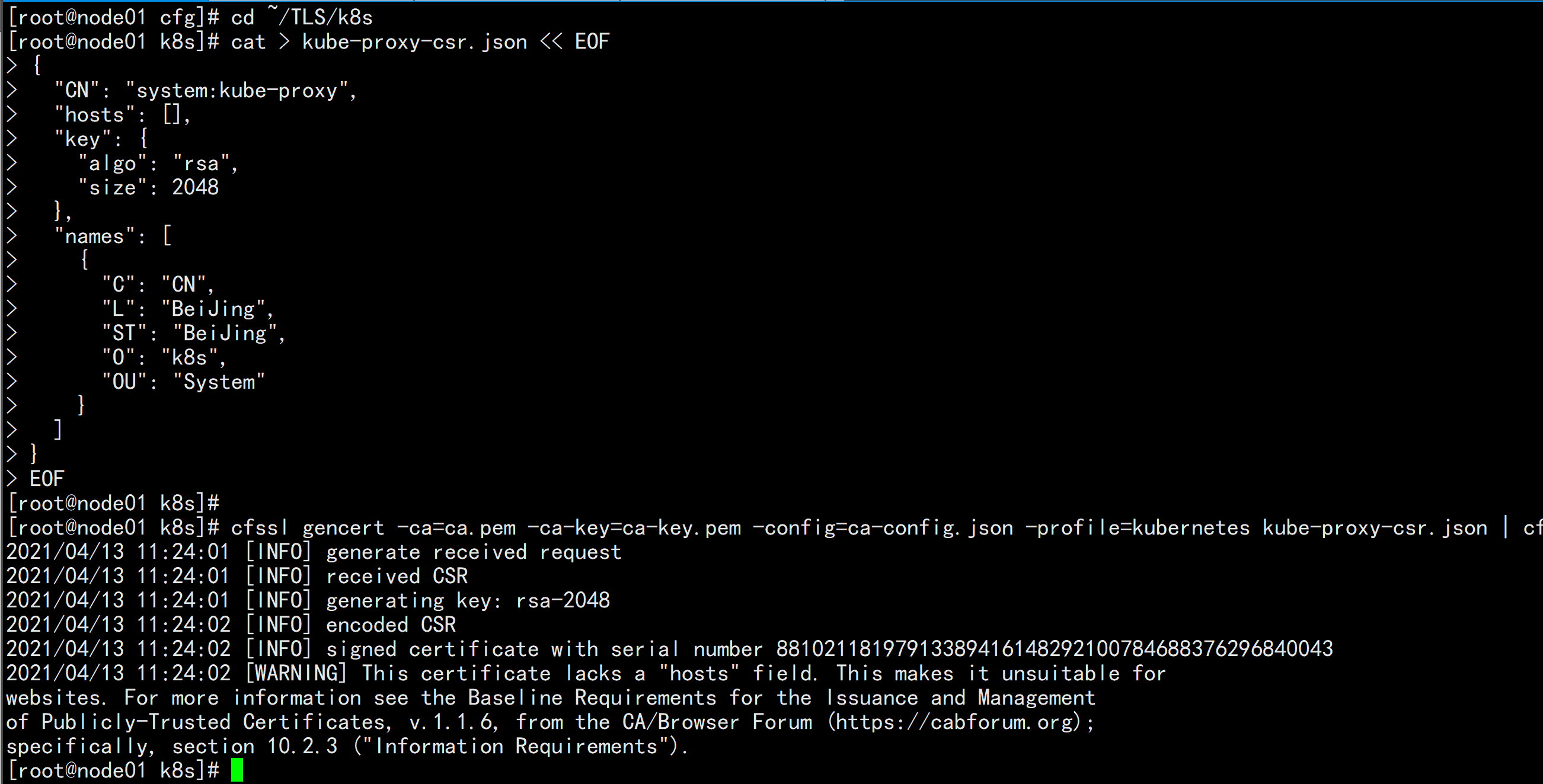

3. Generate the kube-proxy.kubeconfig file

Generate the kube-proxy certificate:

    # Switch to the working directory
    cd ~/TLS/k8s

    # Create the certificate signing request file
    cat > kube-proxy-csr.json << EOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

    # Generate the certificate
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

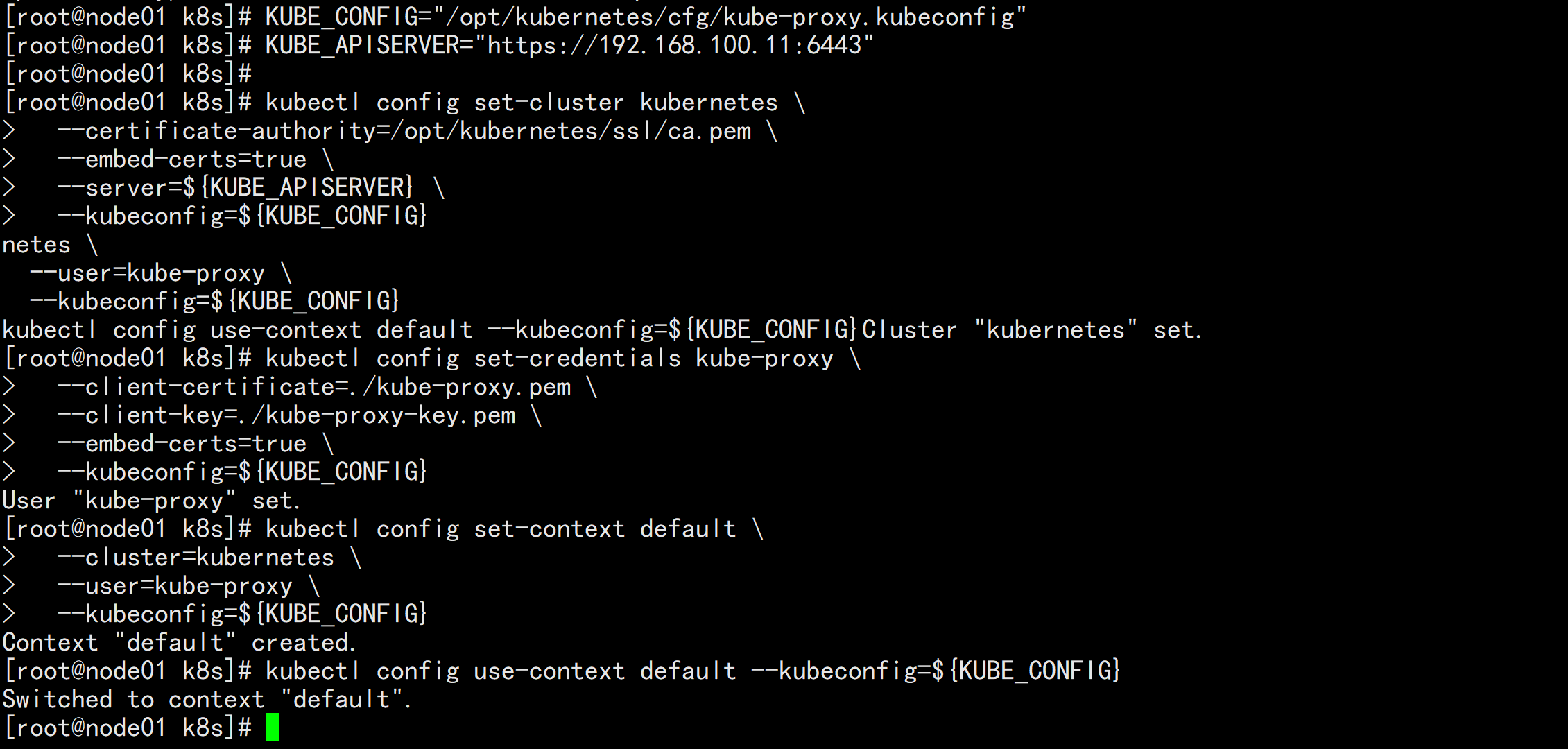

Generate the kubeconfig file:

    KUBE_CONFIG="/opt/kubernetes/cfg/kube-proxy.kubeconfig"
    KUBE_APISERVER="https://192.168.100.11:6443"

    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials kube-proxy \
      --client-certificate=./kube-proxy.pem \
      --client-key=./kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

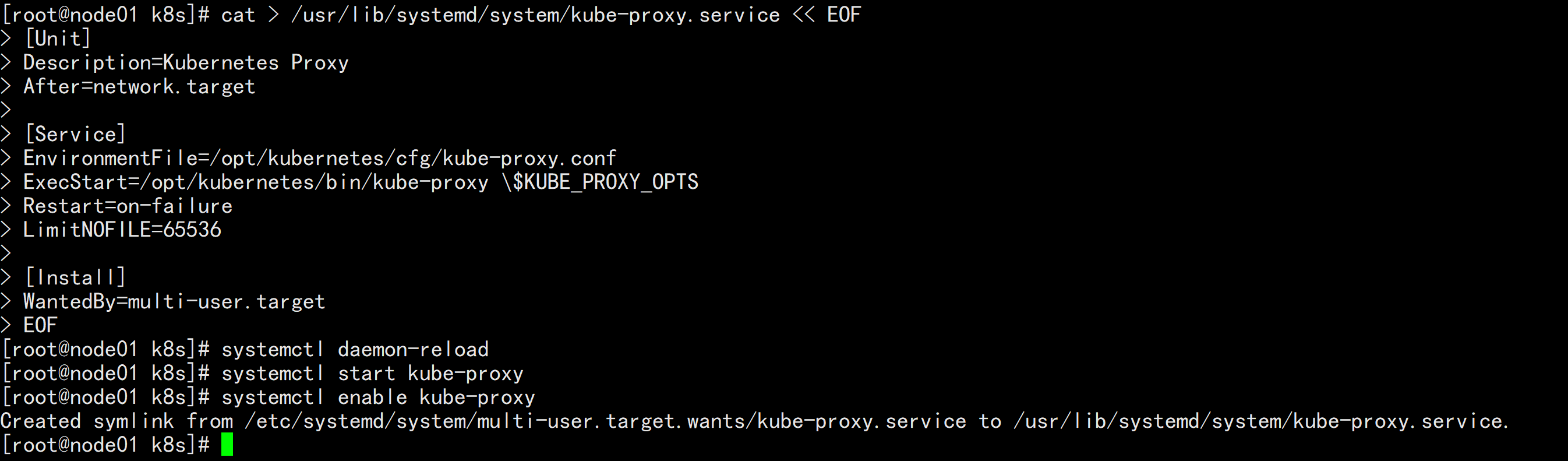

4. Manage kube-proxy with systemd

    cat > /usr/lib/systemd/system/kube-proxy.service << EOF
    [Unit]
    Description=Kubernetes Proxy
    After=network.target

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
    ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

5. Start the service and enable it at boot

    systemctl daemon-reload
    systemctl start kube-proxy
    systemctl enable kube-proxy

5.5 Deploy the network component

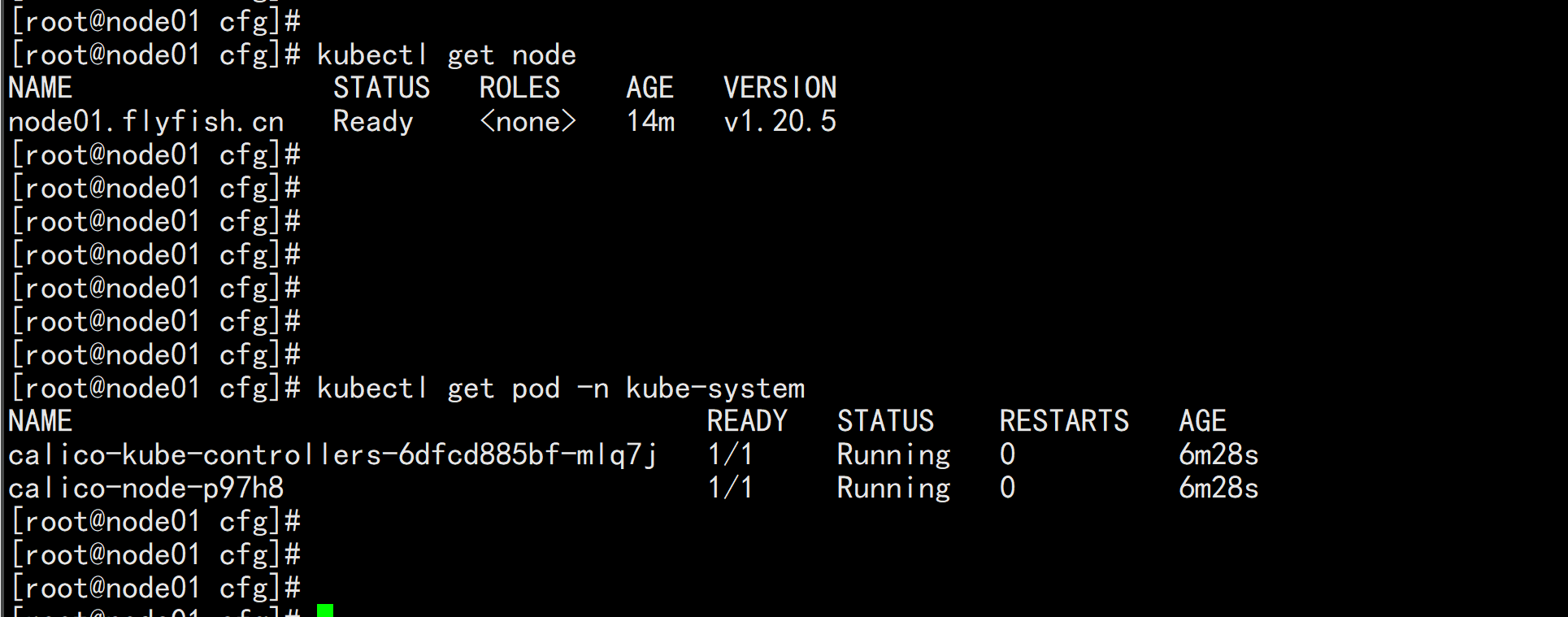

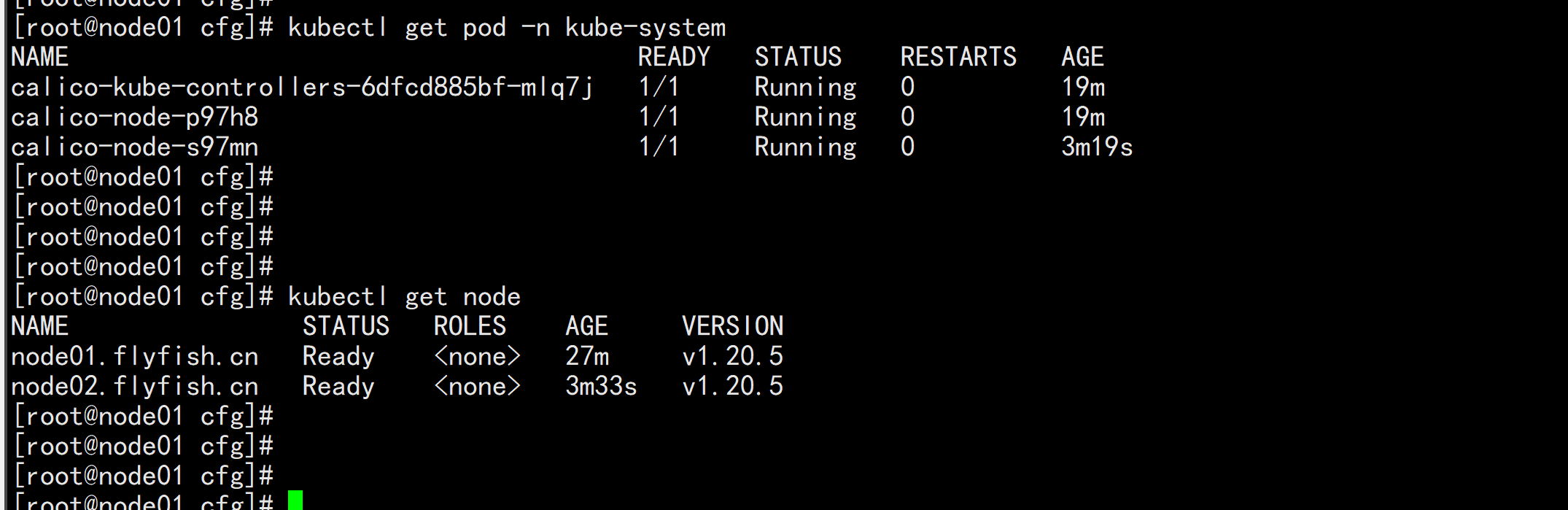

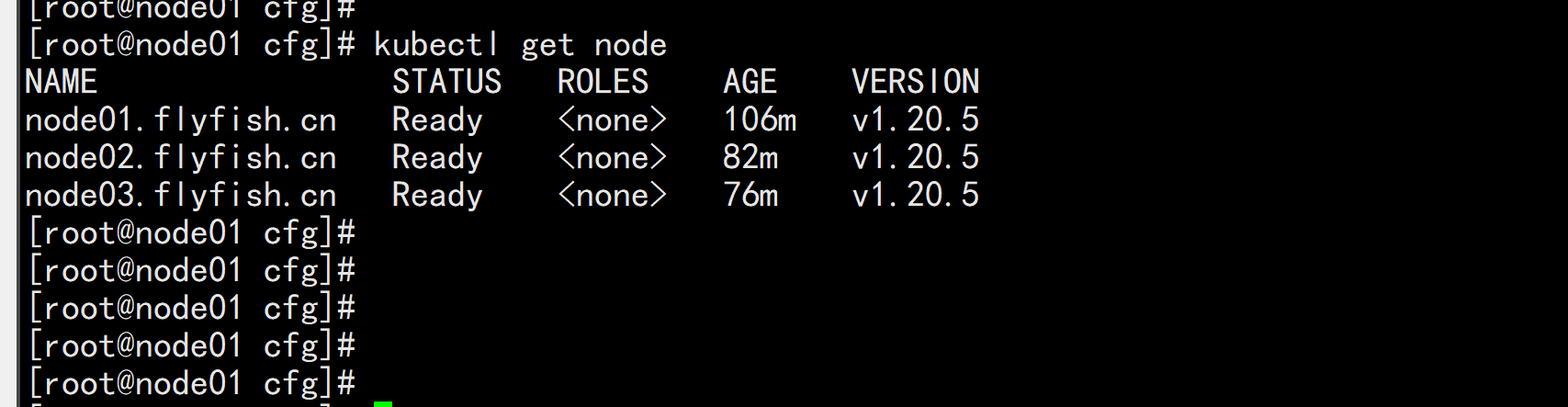

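Calico is a pure layer-3 data center networking solution and is currently one of the mainstream network solutions for Kubernetes.

Deploy Calico:

    wget https://docs.projectcalico.org/v3.14/manifests/calico.yaml
    # For Kubernetes 1.22+, use this manifest instead:
    # wget https://docs.projectcalico.org/manifests/calico.yaml

    kubectl apply -f calico.yaml
    kubectl get pods -n kube-system

Once all Calico Pods are Running, the node becomes Ready as well:

    kubectl get node
    kubectl get pod -n kube-system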

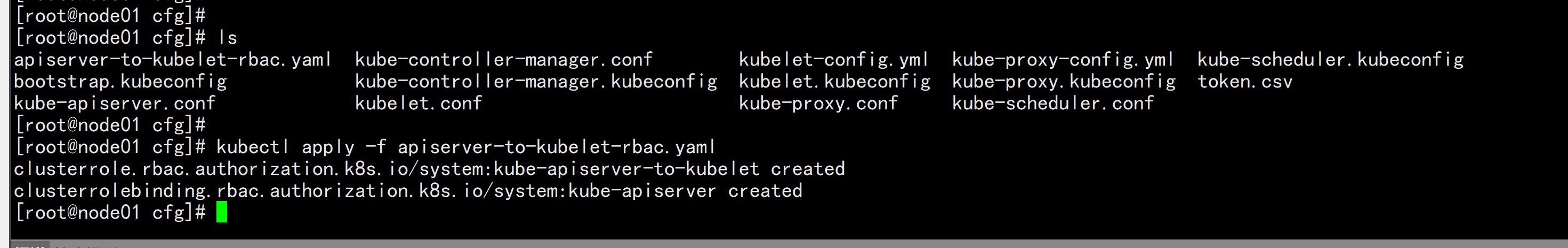

5.6 Authorize the apiserver to access the kubelet

Use case: commands such as kubectl logs.

    cat > apiserver-to-kubelet-rbac.yaml << EOF
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:kube-apiserver-to-kubelet
    rules:
      - apiGroups:
          - ""
        resources:
          - nodes/proxy
          - nodes/stats
          - nodes/log
          - nodes/spec
          - nodes/metrics
          - pods/log
        verbs:
          - "*"
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:kube-apiserver
      namespace: ""
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:kube-apiserver-to-kubelet
    subjects:
      - apiGroup: rbac.authorization.k8s.io
        kind: User
        name: kubernetes
    EOF

    kubectl apply -f apiserver-to-kubelet-rbac.yaml

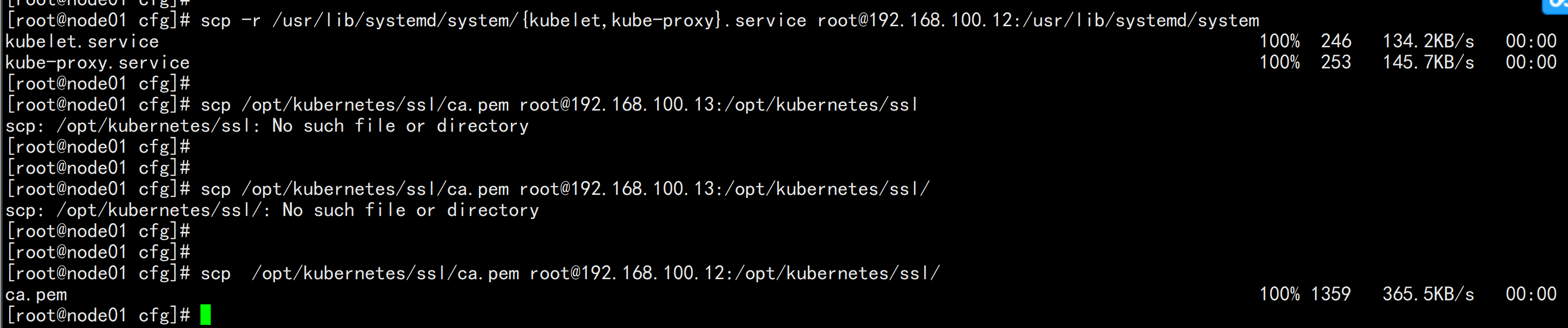

5.7 Add more Worker Nodes

1. Copy the deployed Node files to the new node

On the Master node, copy the Worker Node files to the new nodes 192.168.100.12 and 192.168.100.13 (the commands below show 192.168.100.12):

    scp -r /opt/kubernetes root@192.168.100.12:/opt/
    scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.100.12:/usr/lib/systemd/system
    scp /opt/kubernetes/ssl/ca.pem root@192.168.100.12:/opt/kubernetes/ssl/

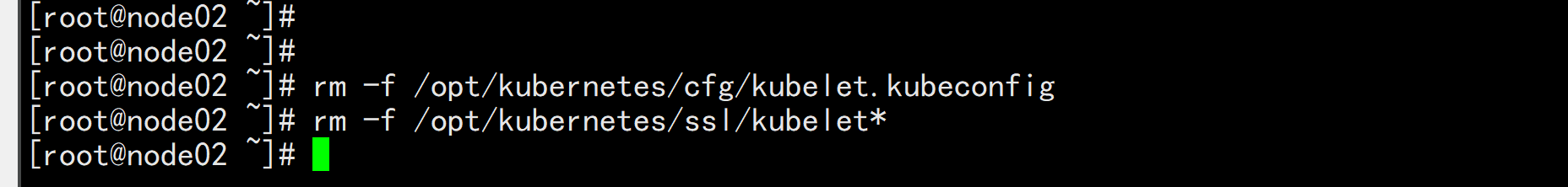

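2. Delete the kubelet certificates and kubeconfig file (on the new node)

    rm -f /opt/kubernetes/cfg/kubelet.kubeconfig
    rm -f /opt/kubernetes/ssl/kubelet*

Note: these files are generated automatically after the certificate request is approved and are different for every Node, so they must be deleted.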

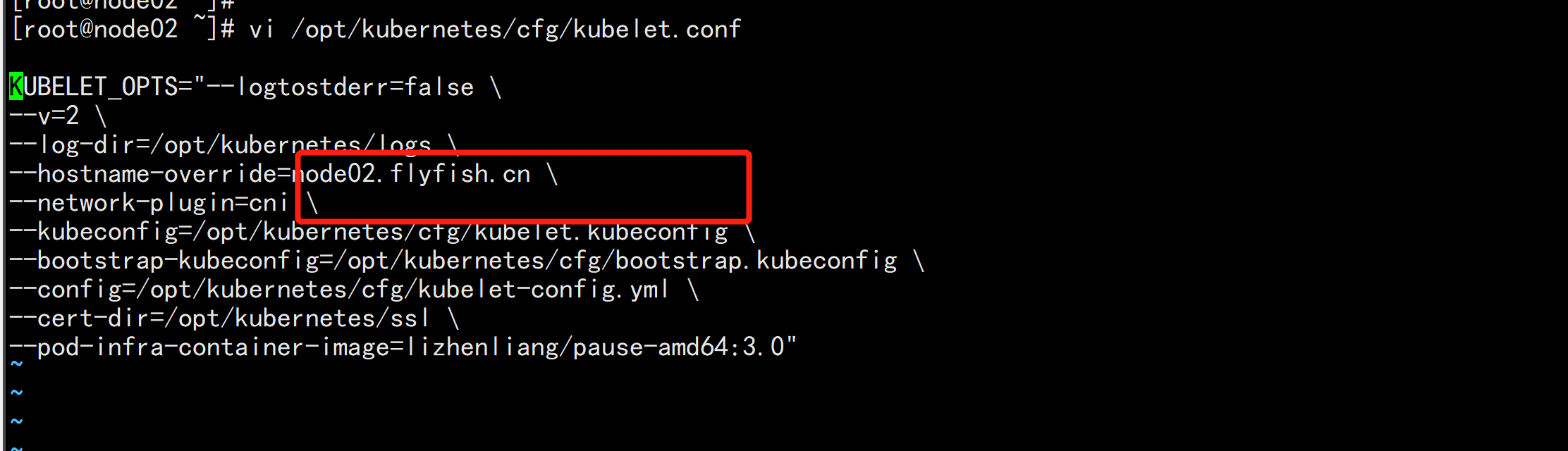

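3. Change the host name

In /opt/kubernetes/cfg/kubelet.conf (vi /opt/kubernetes/cfg/kubelet.conf), set:

    --hostname-override=node02.flyfish.cn

In /opt/kubernetes/cfg/kube-proxy-config.yml (vi /opt/kubernetes/cfg/kube-proxy-config.yml), set:

    hostnameOverride: node02.flyfish.cn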
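When several nodes are added, the two host name edits could be scripted with sed instead of an editor. The sketch below works on scratch copies with abbreviated, made-up file contents; `set_node_name` is a hypothetical helper, and on a real node the directory argument would be /opt/kubernetes/cfg.

```shell
#!/usr/bin/env bash
# Scratch copies standing in for the two files copied from the first node.
tmp=$(mktemp -d)
cat > "$tmp/kubelet.conf" << 'EOF'
KUBELET_OPTS="--logtostderr=false \
--hostname-override=node01.flyfish.cn \
--network-plugin=cni"
EOF
cat > "$tmp/kube-proxy-config.yml" << 'EOF'
kind: KubeProxyConfiguration
hostnameOverride: node01.flyfish.cn
EOF

# Hypothetical helper: rewrite the node name in both files under directory $1.
set_node_name() {
  local cfg_dir=$1 name=$2
  sed -i "s/--hostname-override=[^ ]*/--hostname-override=${name}/" "$cfg_dir/kubelet.conf"
  sed -i "s/^hostnameOverride:.*/hostnameOverride: ${name}/" "$cfg_dir/kube-proxy-config.yml"
}

set_node_name "$tmp" node02.flyfish.cn
grep -h 'ostname' "$tmp/kubelet.conf" "$tmp/kube-proxy-config.yml"
```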

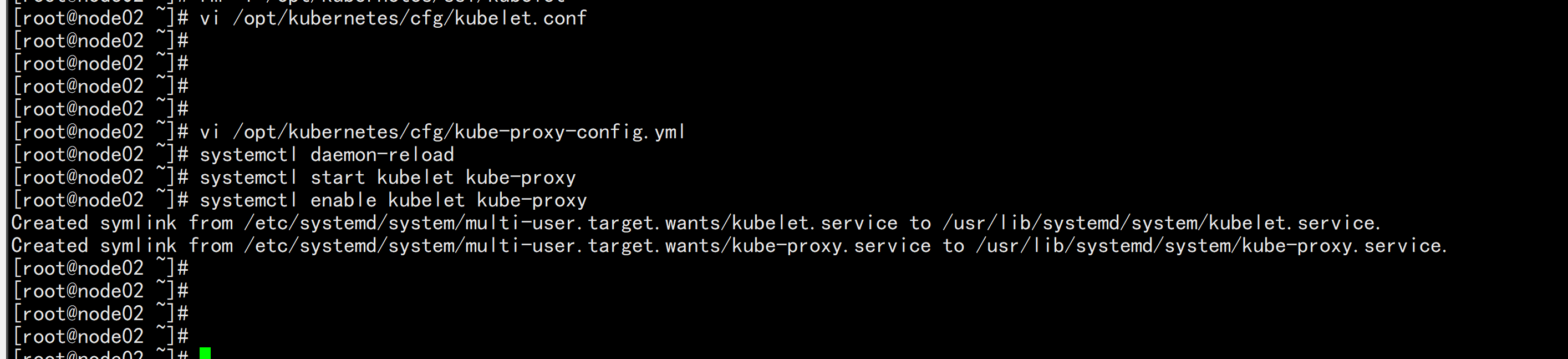

4. Start the services and enable them at boot

    systemctl daemon-reload
    systemctl start kubelet kube-proxy
    systemctl enable kubelet kube-proxy

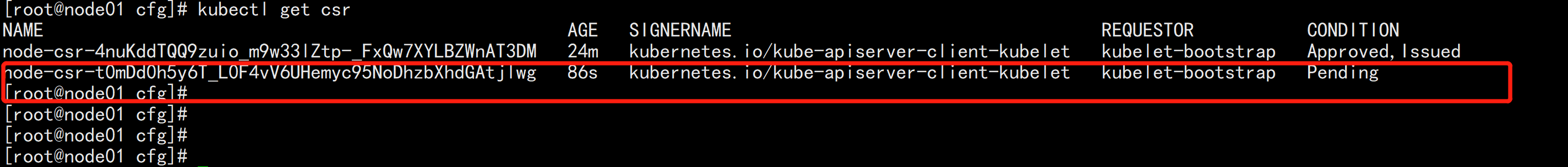

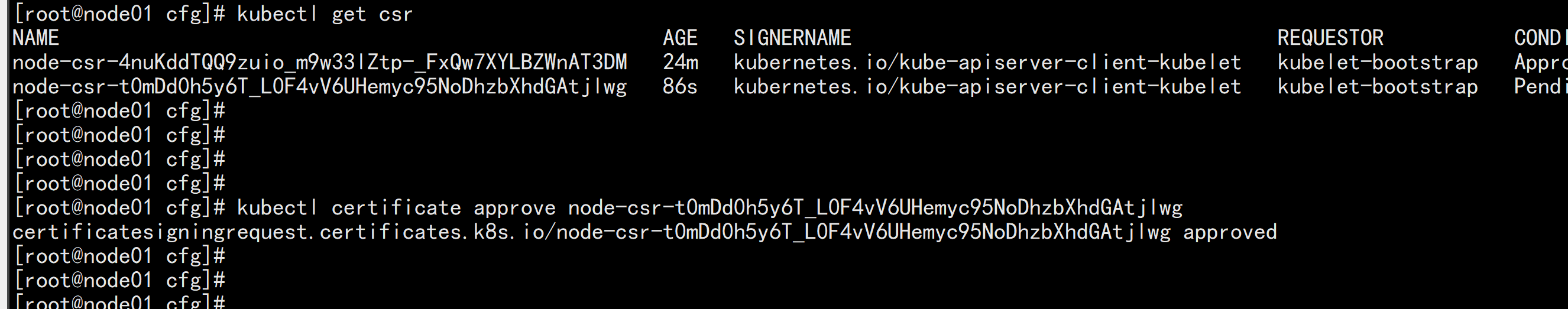

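5. On the Master, approve the kubelet certificate request of the new Node

    # List the certificate requests
    kubectl get csr

    # Approve the request (use the CSR name shown by the previous command)
    kubectl certificate approve node-csr-t0mDd0h5y6T_L0F4vV6UHemyc95NoDhzbXhdGAtjlwg

    kubectl get node
    kubectl get pod -n kube-system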

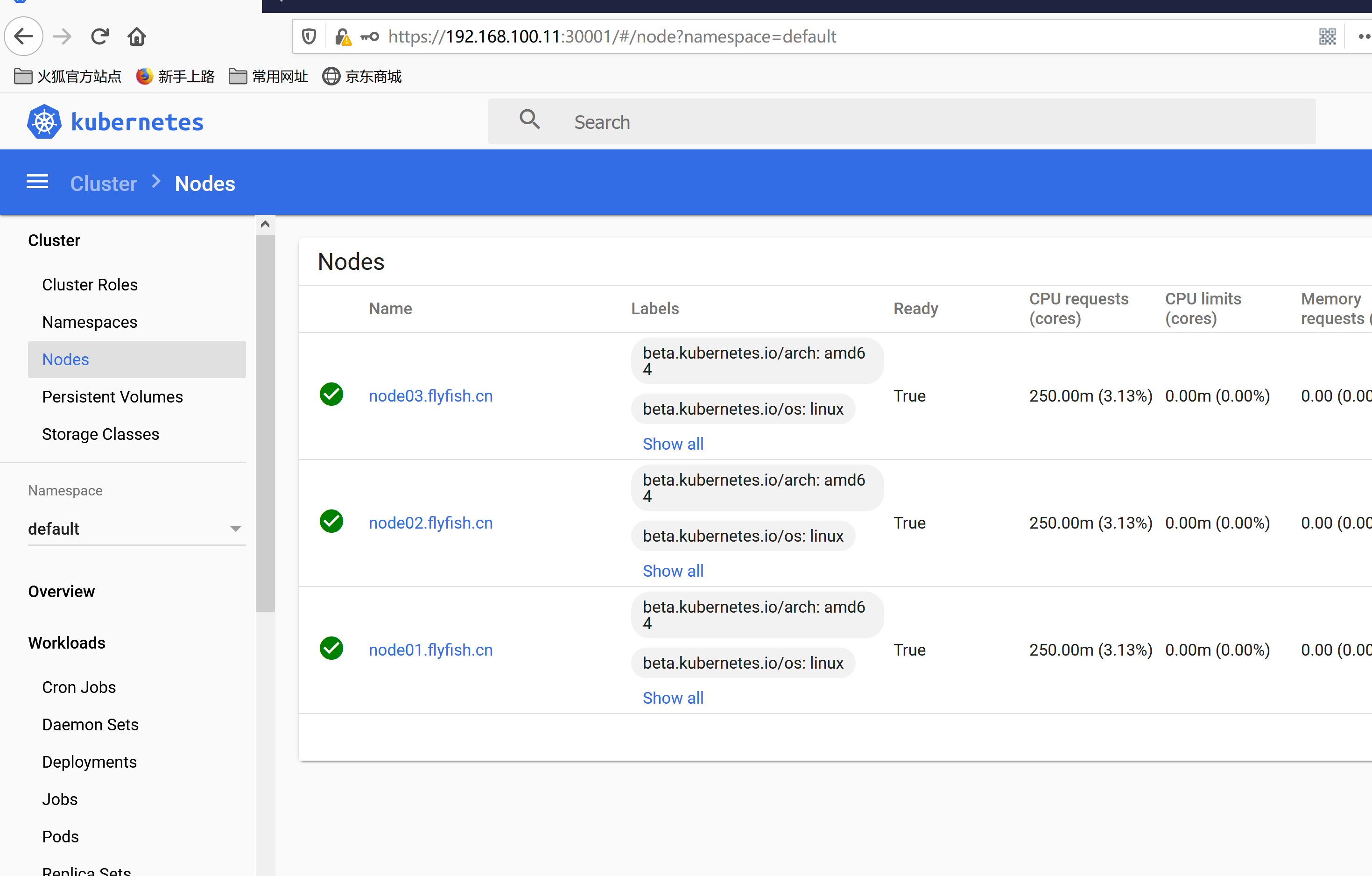

Configure the node03.flyfish.cn node in the same way, then check the nodes:

    kubectl get node

VI. Deploy Dashboard and CoreDNS

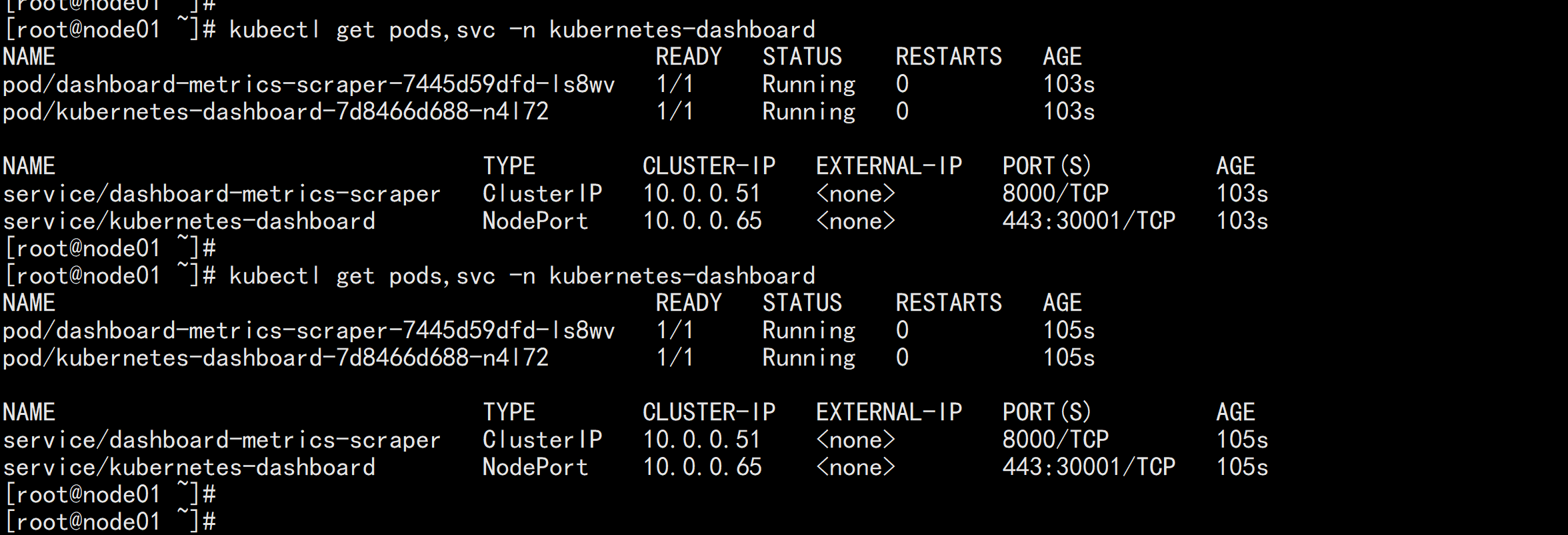

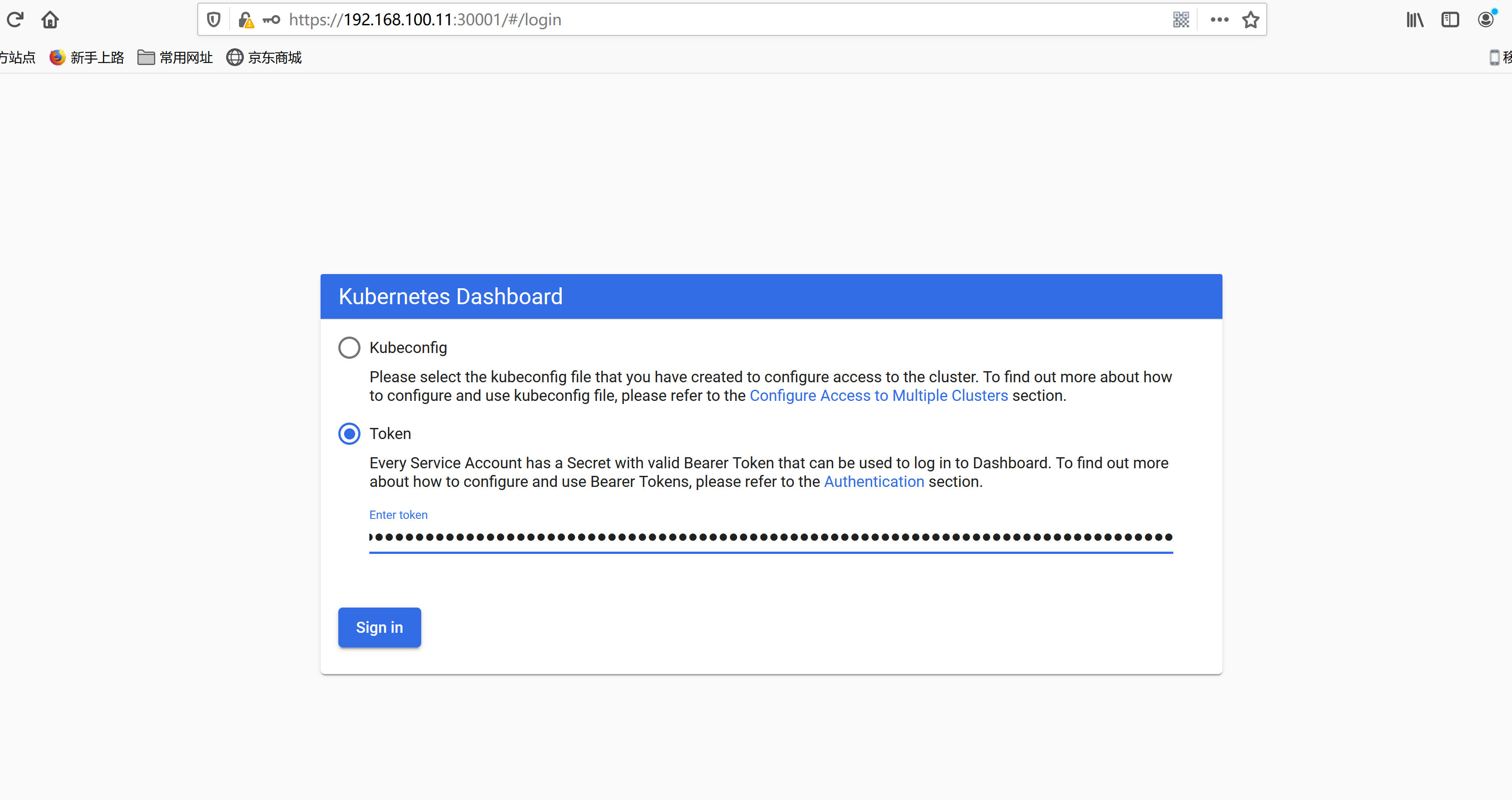

Reference: https://github.com/kubernetes/dashboard/releases/tag/v2.3.1

6.1 Deploy Dashboard

    wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

By default the Dashboard is reachable only from inside the cluster. Change the Service to type NodePort to expose it externally (vim recommended.yaml):

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 443
          targetPort: 8443
          nodePort: 30001
      type: NodePort
      selector:
        k8s-app: kubernetes-dashboard

Apply the manifest and check the result:

    kubectl apply -f recommended.yaml
    kubectl get pods,svc -n kubernetes-dashboard

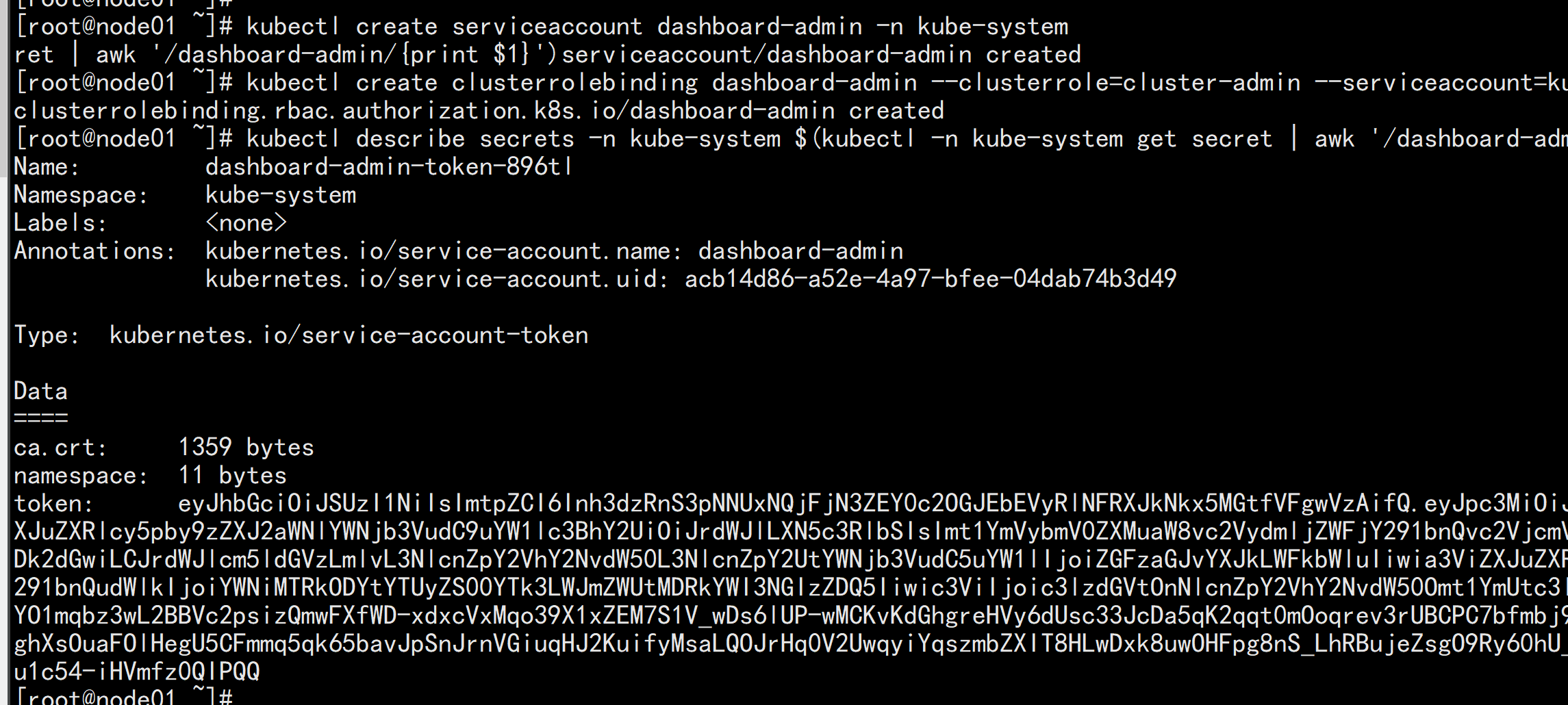

Create a service account and bind it to the default cluster-admin cluster role:

    kubectl create serviceaccount dashboard-admin -n kube-system
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
    kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

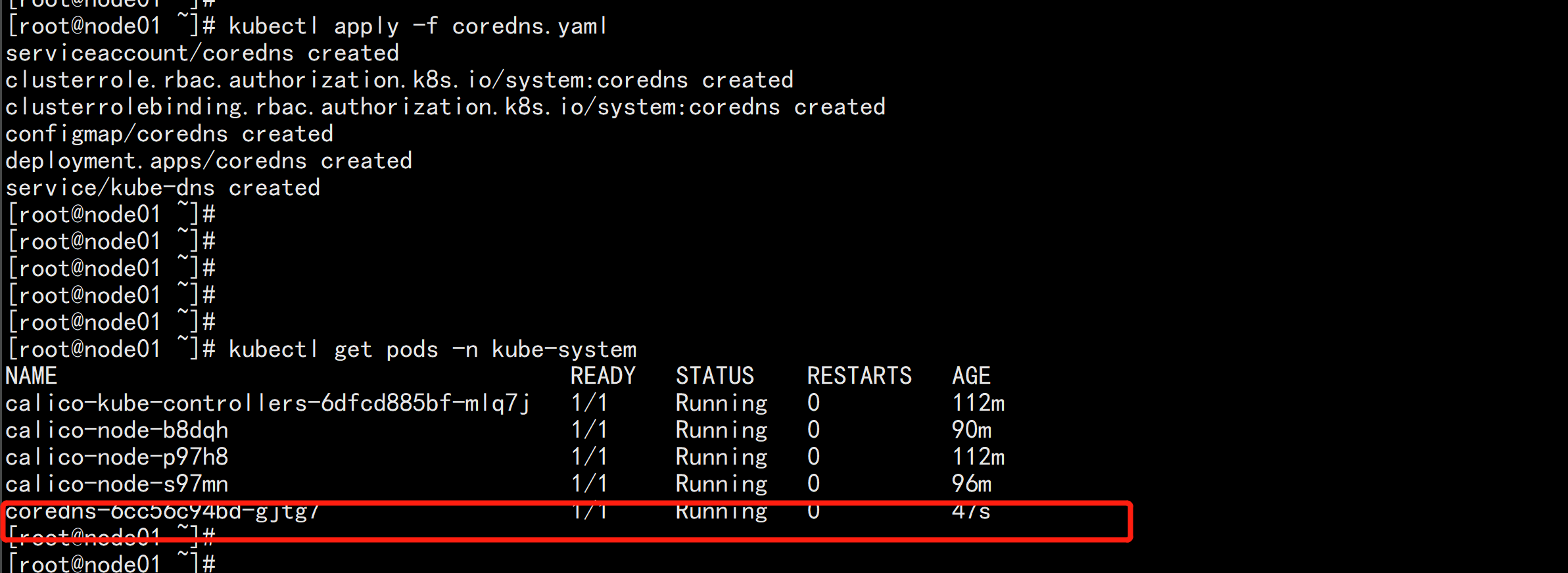

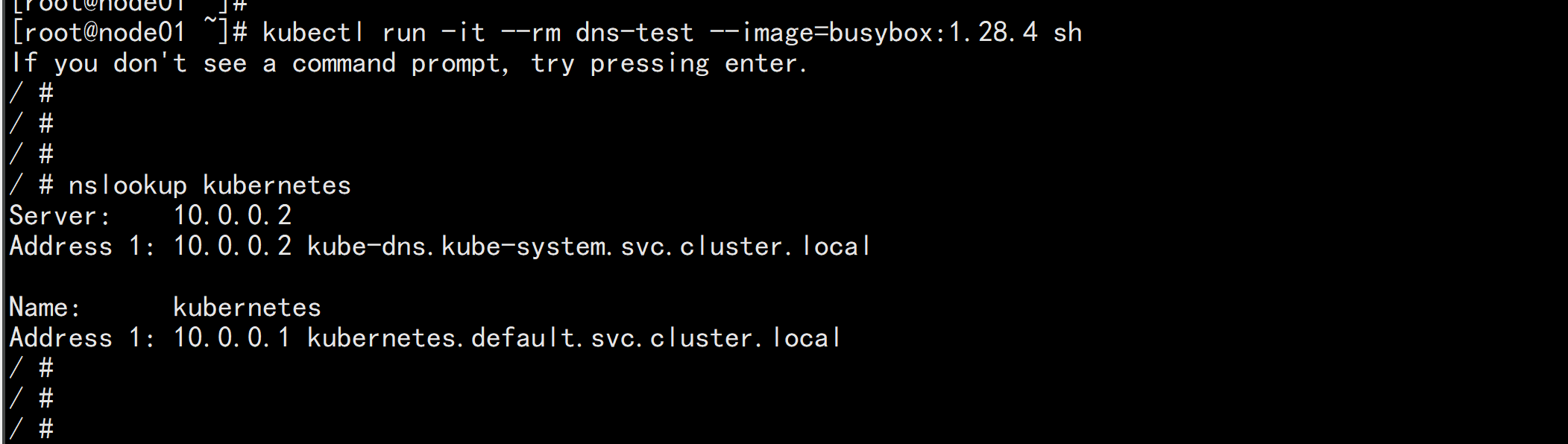

Deploy CoreDNS, then start a temporary busybox Pod to test it:

    kubectl apply -f coredns.yaml
    kubectl run -it --rm dns-test --image=busybox:1.28.4 sh