@zhangyy

2020-11-19T01:28:06.000000Z


Oozie job scheduling

Collaboration frameworks

- 1: Running the Oozie example job

- 2: Running a custom MapReduce jar with Oozie

- 3: Scheduling a shell script with Oozie

- 4: Running a job periodically with an Oozie coordinator

1: Running the Oozie example job

1.1 Unpack the examples package

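Unpack the examples package:

    tar -zxvf oozie-examples.tar.gz
    cd /home/hadoop/yangyang/oozie/examples/apps/map-reduce

The map-reduce example contains:

- job.properties: defines job-level properties such as directory paths and the NameNode address, and sets the location of the workflow.
- workflow.xml: defines the workflow itself (start, end and kill nodes, plus the action), including mapred.input.dir and mapred.output.dir.
- lib: a directory holding the resources the job needs (jar files).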
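Before uploading anything, it can help to check the directory layout locally. The following is a minimal sketch that assumes the layout listed above; check_oozie_app is a hypothetical helper, not an Oozie command.

```bash
#!/usr/bin/env bash
# Sketch only: check_oozie_app is a hypothetical helper, not part of Oozie.
# It checks the layout of the map-reduce example before it is uploaded to HDFS.
check_oozie_app() {
    local dir="$1" f missing=0
    # job.properties is read locally by "oozie job -config"; workflow.xml must
    # end up in HDFS at the path given by oozie.wf.application.path
    for f in job.properties workflow.xml; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f" >&2
            missing=1
        fi
    done
    # lib/ is optional, but when present it normally holds the job's jars
    if [ -d "$dir/lib" ] && ! ls "$dir"/lib/*.jar >/dev/null 2>&1; then
        echo "warning: no .jar files in $dir/lib" >&2
    fi
    return "$missing"
}

# Example:
#   check_oozie_app /home/hadoop/yangyang/oozie/examples/apps/map-reduce && echo "layout OK"
```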

1.2 Edit job.properties

    nameNode=hdfs://namenode01.hadoop.com:8020
    jobTracker=namenode01.hadoop.com:8032
    queueName=default
    examplesRoot=examples
    oozie.wf.application.path=${nameNode}/user/hadoop/${examplesRoot}/apps/map-reduce/workflow.xml
    outputDir=map-reduce
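The ${...} references in job.properties are resolved against the other properties. To see which HDFS path oozie.wf.application.path ends up pointing to, here is a rough bash sketch; prop_get and prop_resolve are hypothetical helpers that only mimic plain ${name} substitution, not Oozie's full EL support (for example ${wf:user()}).

```bash
#!/usr/bin/env bash
# Sketch only: an illustrative resolver, not Oozie's own EL evaluation.
# prop_get prints the value of KEY from a file of plain key=value lines
# (the last occurrence wins; comments and other lines are ignored).
prop_get() {
    local file="$1" key="$2" line value=""
    while IFS= read -r line || [ -n "$line" ]; do
        case "$line" in
            "$key="*) value="${line#*=}" ;;
        esac
    done < "$file"
    printf '%s\n' "$value"
}

# prop_resolve expands ${name} references using other keys of the same file.
# Keys missing from the file expand to an empty string here; Oozie itself also
# supplies values such as ${user.name}, which this sketch does not know about.
prop_resolve() {
    local file="$1" value ref needle rep i=0
    local re='\$\{([A-Za-z0-9_.]+)\}'
    value=$(prop_get "$file" "$2")
    # Replace the first reference on each pass; the pass limit guards against cycles
    while [[ $value =~ $re ]] && [ "$i" -lt 20 ]; do
        ref="${BASH_REMATCH[1]}"
        needle="\${$ref}"
        rep=$(prop_get "$file" "$ref")
        # text before the first occurrence + value + text after it
        value="${value%%"$needle"*}${rep}${value#*"$needle"}"
        i=$((i + 1))
    done
    printf '%s\n' "$value"
}

# Example:
#   prop_resolve examples/apps/map-reduce/job.properties oozie.wf.application.path
```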

1.3 Configure workflow.xml:

    <workflow-app xmlns="uri:oozie:workflow:0.2" name="map-reduce-wf">
        <start to="mr-node"/>
        <action name="mr-node">
            <map-reduce>
                <job-tracker>${jobTracker}</job-tracker>
                <name-node>${nameNode}</name-node>
                <prepare>
                    <delete path="${nameNode}/user/${wf:user()}/${examplesRoot}/output-data/${outputDir}"/>
                </prepare>
                <configuration>
                    <property>
                        <name>mapred.job.queue.name</name>
                        <value>${queueName}</value>
                    </property>
                    <property>
                        <name>mapred.mapper.class</name>
                        <value>org.apache.oozie.example.SampleMapper</value>
                    </property>
                    <property>
                        <name>mapred.reducer.class</name>
                        <value>org.apache.oozie.example.SampleReducer</value>
                    </property>
                    <property>
                        <name>mapred.map.tasks</name>
                        <value>1</value>
                    </property>
                    <property>
                        <name>mapred.input.dir</name>
                        <value>/user/${wf:user()}/${examplesRoot}/input-data/text</value>
                    </property>
                    <property>
                        <name>mapred.output.dir</name>
                        <value>/user/${wf:user()}/${examplesRoot}/output-data/${outputDir}</value>
                    </property>
                </configuration>
            </map-reduce>
            <ok to="end"/>
            <error to="fail"/>
        </action>
        <kill name="fail">
            <message>Map/Reduce failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
        </kill>
        <end name="end"/>
    </workflow-app>
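Every to= attribute in workflow.xml (start, ok, error) must name a node declared elsewhere with name=; a typo there only shows up after submission. A quick local lint, sketched under the assumption that attributes are written as name="..." and to="..." as above; check_transitions is a hypothetical name and it greps text rather than parsing XML.

```bash
#!/usr/bin/env bash
# Sketch only: check_transitions is a hypothetical lint, not Oozie's schema
# validation. It greps attributes textually instead of parsing the XML.
check_transitions() {
    local wf="$1" target names targets status=0
    # All declared node names (also picks up the workflow-app name, harmlessly)
    names=$(grep -o ' name="[^"]*"' "$wf" | sed 's/ name="\(.*\)"/\1/')
    # All transition targets from <start to>, <ok to>, <error to>
    targets=$(grep -o ' to="[^"]*"' "$wf" | sed 's/ to="\(.*\)"/\1/' | sort -u)
    for target in $targets; do
        if ! printf '%s\n' "$names" | grep -qxF "$target"; then
            echo "undefined transition target: $target" >&2
            status=1
        fi
    done
    return "$status"
}

# Example:
#   check_transitions examples/apps/map-reduce/workflow.xml && echo "transitions OK"
```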

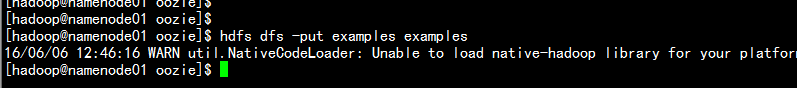

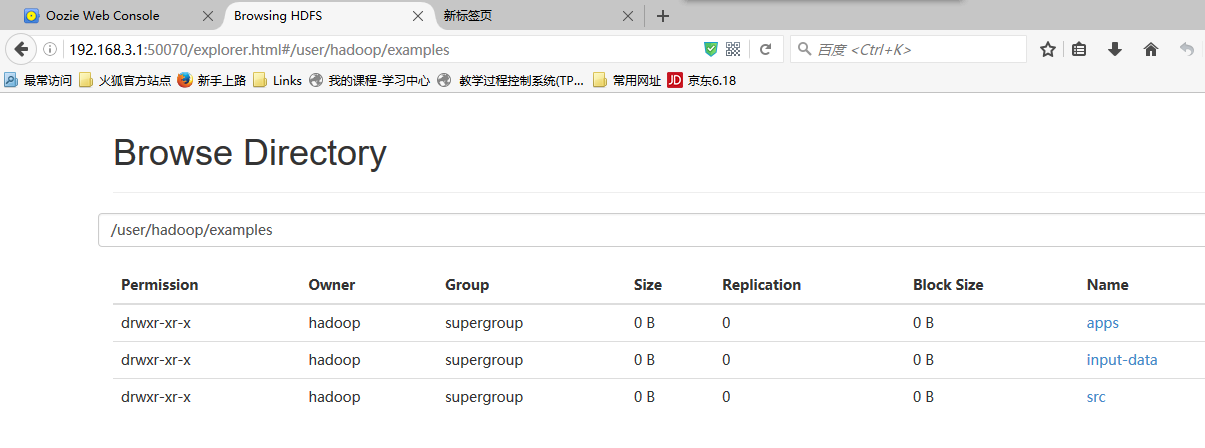

1.4 Upload the examples directory to HDFS

hdfs dfs -put examples examples

1.5 Run the Oozie job

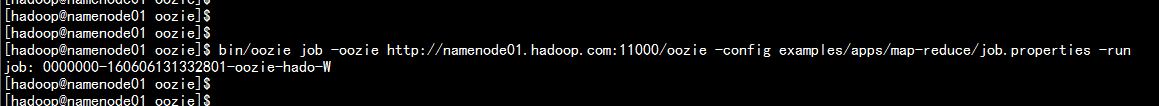

bin/oozie job -oozie http://namenode01.hadoop.com:11000/oozie -config examples/apps/map-reduce/job.properties -run
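The submit command prints the new job ID, which the status checks afterwards need. A minimal wrapper sketch, assuming the Oozie URL used in this post; oozie_cli, submit_job, parse_job_id and the DRY_RUN switch are hypothetical names. It relies on the CLI printing a line of the form job: <id> after -run.

```bash
#!/usr/bin/env bash
# Sketch only: oozie_cli, submit_job, parse_job_id and DRY_RUN are hypothetical
# names. OOZIE_URL defaults to the server used in this post.
OOZIE_URL="${OOZIE_URL:-http://namenode01.hadoop.com:11000/oozie}"
OOZIE_BIN="${OOZIE_BIN:-bin/oozie}"

# Run "oozie job" against OOZIE_URL; with DRY_RUN=1 only print the command
oozie_cli() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$OOZIE_BIN job -oozie $OOZIE_URL $*"
    else
        "$OOZIE_BIN" job -oozie "$OOZIE_URL" "$@"
    fi
}

# "-run" prints "job: <job-id>"; keep only the ID
parse_job_id() {
    sed -n 's/^job: *//p'
}

# Submit a workflow or coordinator and print its job ID
# (prints nothing in DRY_RUN mode, since no real ID is returned)
submit_job() {
    oozie_cli -config "$1" -run | parse_job_id
}

# Example (needs a running Oozie server):
#   id=$(submit_job examples/apps/map-reduce/job.properties)
#   oozie_cli -info "$id"    # status of the job and its actions
#   oozie_cli -log "$id"     # job log
```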

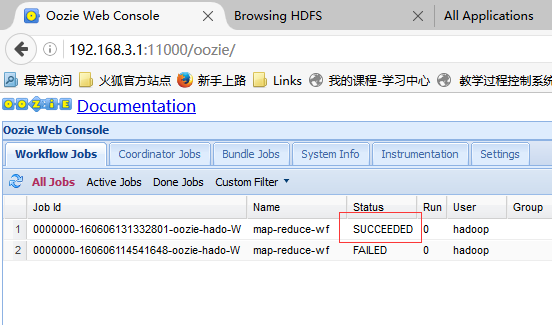

Check the job status:

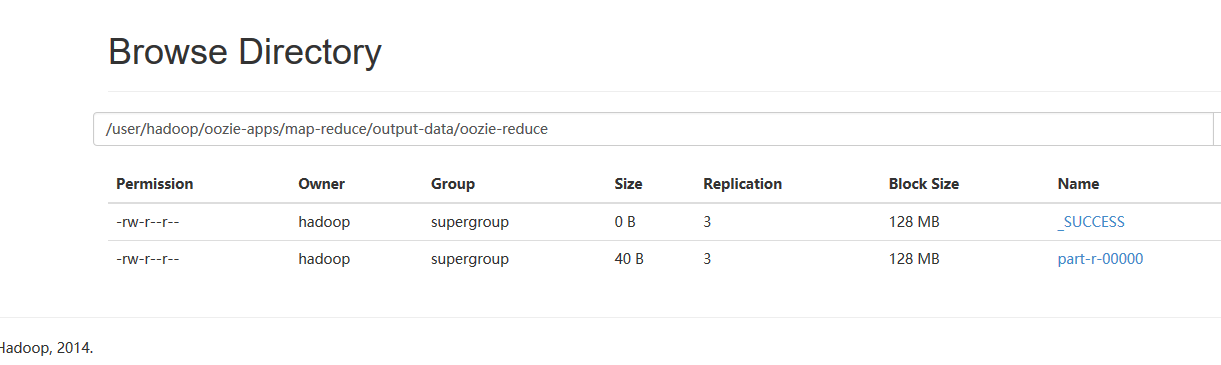

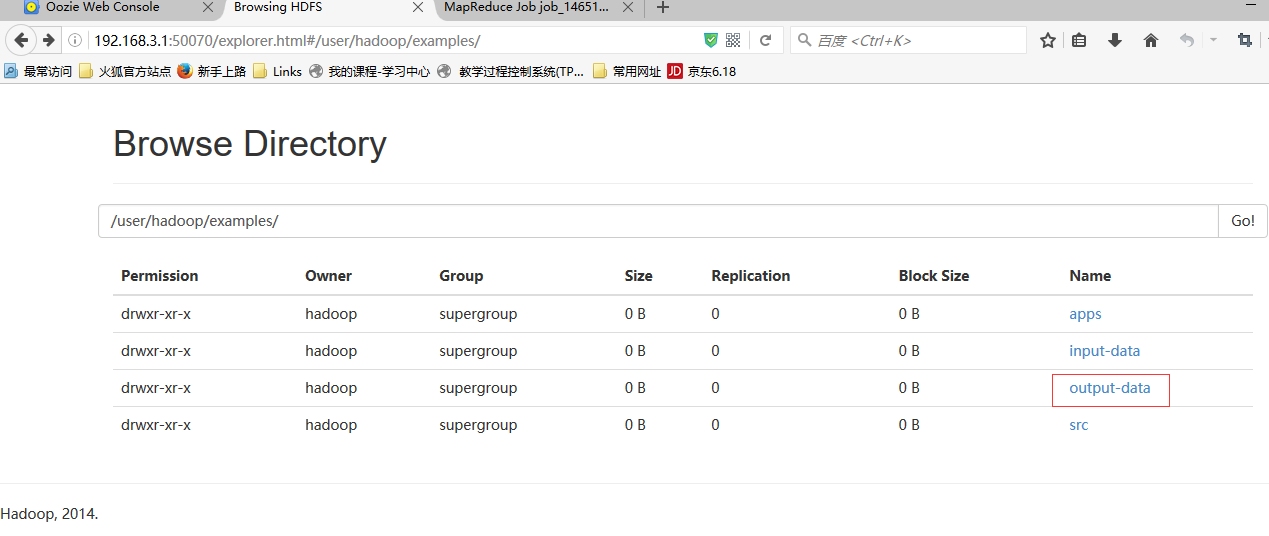

Output directory:

2: Running a custom MapReduce jar with Oozie

2.1 Create the upload directory on HDFS

    cd /home/hadoop/yangyang/oozie/
    hdfs dfs -mkdir oozie-apps

2.2 Create the local directory that will be uploaded

    mkdir oozie-apps
    cd /home/hadoop/yangyang/oozie/examples/apps
    cp -ap map-reduce /home/hadoop/yangyang/oozie/oozie-apps/
    cd /home/hadoop/yangyang/oozie/oozie-apps/map-reduce
    mkdir input-data

2.3 Copy the job jar and the input file

    cp -p mr-wordcount.jar yangyang/oozie/oozie-apps/map-reduce/lib/
    cp -p /home/hadoop/wc.input ./input-data
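Steps 2.2 and 2.3 can be collected into one function that is safe to run twice. This is a sketch: prepare_wordcount_app and its three parameters (base directory, jar, input file) are assumptions, and it copies the example's contents with cp -a src/. dest/ so that a second run does not create map-reduce/map-reduce.

```bash
#!/usr/bin/env bash
# Sketch of steps 2.2-2.3 (prepare_wordcount_app is a hypothetical name).
# Arguments: base directory (e.g. /home/hadoop/yangyang/oozie), the job jar,
# and the input file. Only the local file system is touched.
prepare_wordcount_app() {
    local base="$1" jar="$2" input="$3"
    local app="$base/oozie-apps/map-reduce"
    mkdir -p "$app/lib" "$app/input-data" || return 1
    # Copy the contents of the stock example (job.properties, workflow.xml,
    # lib/); "src/." avoids nesting map-reduce/map-reduce on a second run
    cp -a "$base/examples/apps/map-reduce/." "$app/" || return 1
    cp -p "$jar" "$app/lib/" || return 1
    cp -p "$input" "$app/input-data/"
}

# Example:
#   prepare_wordcount_app /home/hadoop/yangyang/oozie mr-wordcount.jar /home/hadoop/wc.input
```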

2.4 Configure job.properties and workflow.xml

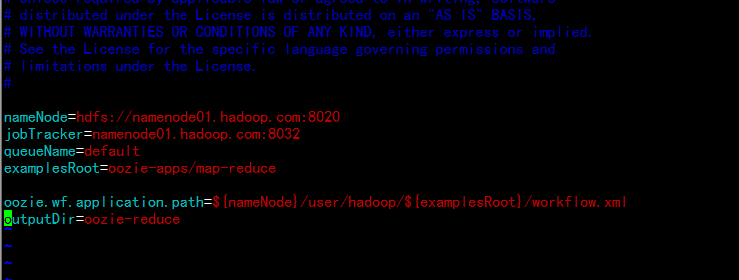

vim job.properties

    nameNode=hdfs://namenode01.hadoop.com:8020
    jobTracker=namenode01.hadoop.com:8032
    queueName=default
    examplesRoot=oozie-apps/map-reduce
    oozie.wf.application.path=${nameNode}/user/hadoop/${examplesRoot}/workflow.xml
    outputDir=oozie-reduce

vim workflow.xml

    <workflow-app xmlns="uri:oozie:workflow:0.2" name="wc-map-reduce">
        <start to="mr-node"/>
        <action name="mr-node">
            <map-reduce>
                <job-tracker>${jobTracker}</job-tracker>
                <name-node>${nameNode}</name-node>
                <prepare>
                    <delete path="${nameNode}/user/hadoop/${examplesRoot}/output-data/${outputDir}"/>
                </prepare>
                <configuration>
                    <property>
                        <name>mapred.job.queue.name</name>
                        <value>${queueName}</value>
                    </property>
                    <!-- 0 new API -->
                    <property>
                        <name>mapred.mapper.new-api</name>
                        <value>true</value>
                    </property>
                    <property>
                        <name>mapred.reducer.new-api</name>
                        <value>true</value>
                    </property>
                    <!-- 1 input -->
                    <property>
                        <name>mapred.input.dir</name>
                        <value>/user/hadoop/${examplesRoot}/input-data</value>
                    </property>
                    <!-- 2 mapper class -->
                    <property>
                        <name>mapreduce.job.map.class</name>
                        <value>org.apache.hadoop.wordcount.WordCountMapReduce$WordCountMapper</value>
                    </property>
                    <property>
                        <name>mapreduce.map.output.key.class</name>
                        <value>org.apache.hadoop.io.Text</value>
                    </property>
                    <property>
                        <name>mapreduce.map.output.value.class</name>
                        <value>org.apache.hadoop.io.IntWritable</value>
                    </property>
                    <!-- 3 reducer class -->
                    <property>
                        <name>mapreduce.job.reduce.class</name>
                        <value>org.apache.hadoop.wordcount.WordCountMapReduce$WordCountReducer</value>
                    </property>
                    <property>
                        <name>mapreduce.job.output.key.class</name>
                        <value>org.apache.hadoop.io.Text</value>
                    </property>
                    <property>
                        <name>mapreduce.job.output.value.class</name>
                        <value>org.apache.hadoop.io.IntWritable</value>
                    </property>
                    <!-- 4 output -->
                    <property>
                        <name>mapred.output.dir</name>
                        <value>/user/hadoop/${examplesRoot}/output-data/${outputDir}</value>
                    </property>
                </configuration>
            </map-reduce>
            <ok to="end"/>
            <error to="fail"/>
        </action>
        <kill name="fail">
            <message>Map/Reduce failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
        </kill>
        <end name="end"/>
    </workflow-app>
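Because the mapper and reducer are nested classes, workflow.xml refers to them with a $ in the name, and a misspelled class name only fails once the job runs. A quick check against the jar listing can save a round trip. This is a sketch assuming the JDK's jar tool is on the PATH; class_to_entry and jar_has_class are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: class_to_entry and jar_has_class are hypothetical helpers.
# A class name maps to a jar entry by turning dots into slashes and adding
# ".class"; nested classes keep their "$" (Outer$Inner.class).
class_to_entry() {
    printf '%s.class\n' "$(printf '%s' "$1" | tr . /)"
}

# Succeeds if the jar (listed with the JDK's "jar tf") contains the class
jar_has_class() {
    local jar="$1" cls="$2"
    jar tf "$jar" | grep -xF "$(class_to_entry "$cls")" >/dev/null
}

# Example (single quotes stop the shell from expanding "$WordCountMapper"):
#   jar_has_class mr-wordcount.jar 'org.apache.hadoop.wordcount.WordCountMapReduce$WordCountMapper'
```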

2.6 Upload the files to HDFS:

hdfs dfs -put map-reduce oozie-apps

2.7 Run the job with the oozie command

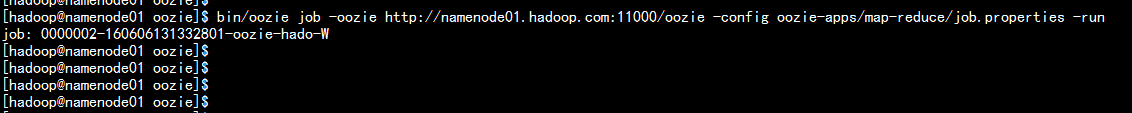

bin/oozie job -oozie http://namenode01.hadoop.com:11000/oozie -config oozie-apps/map-reduce/job.properties -run

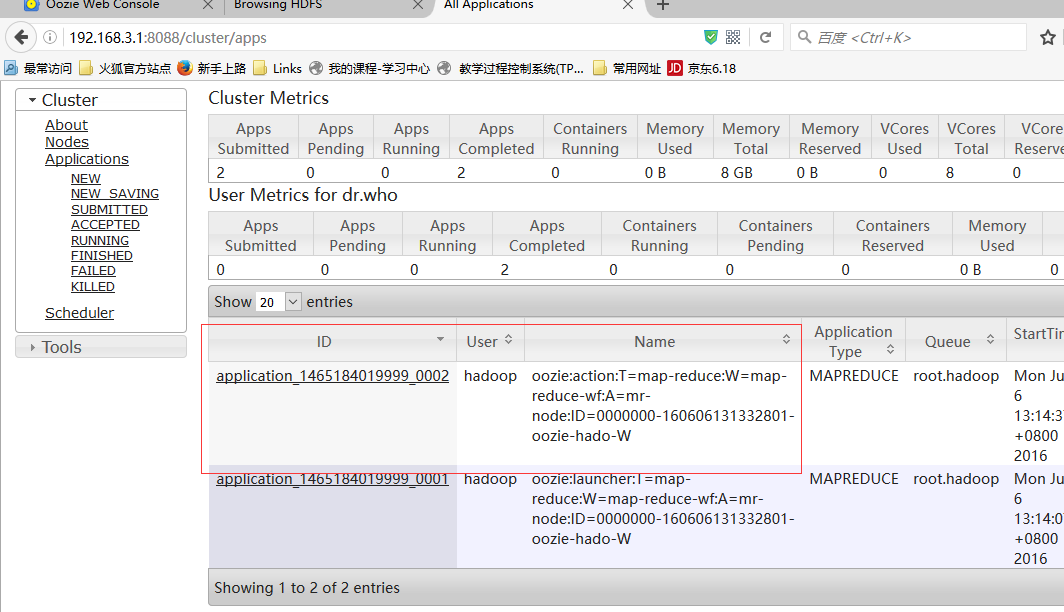

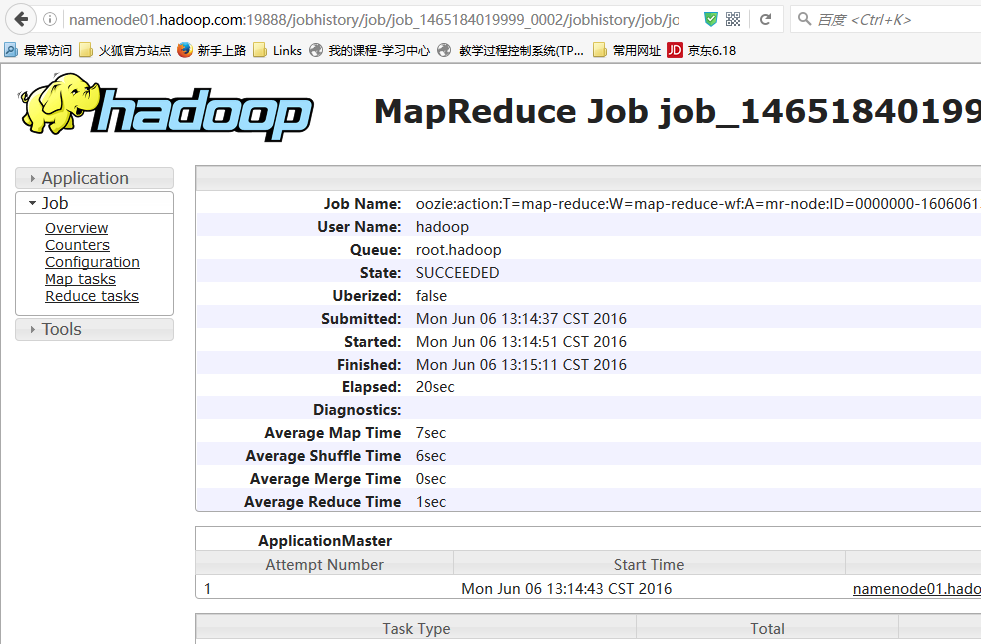

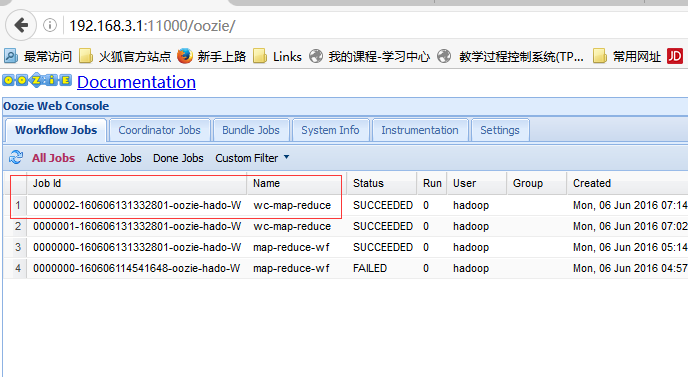

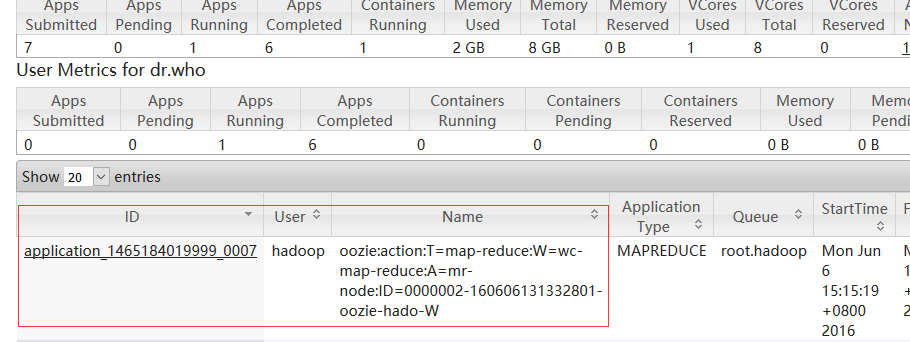

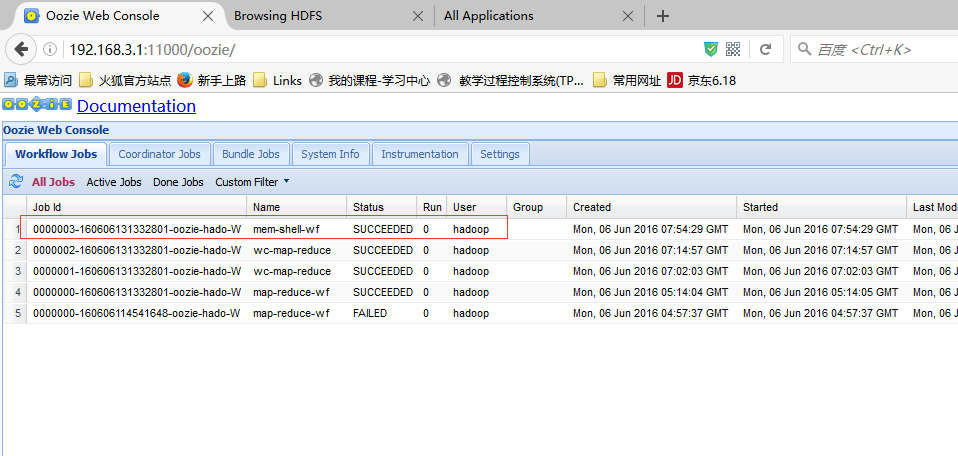

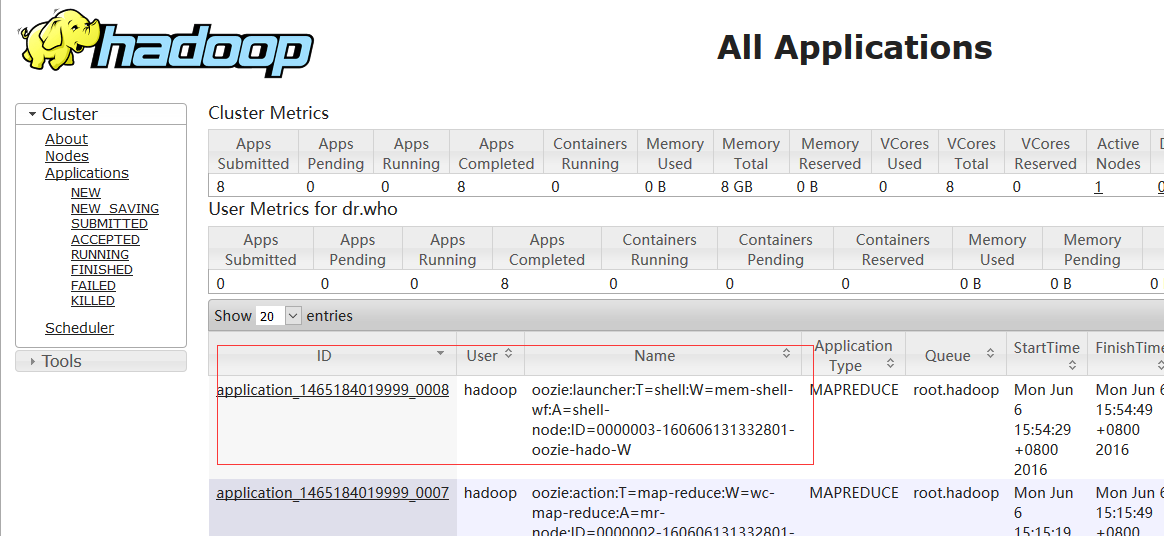

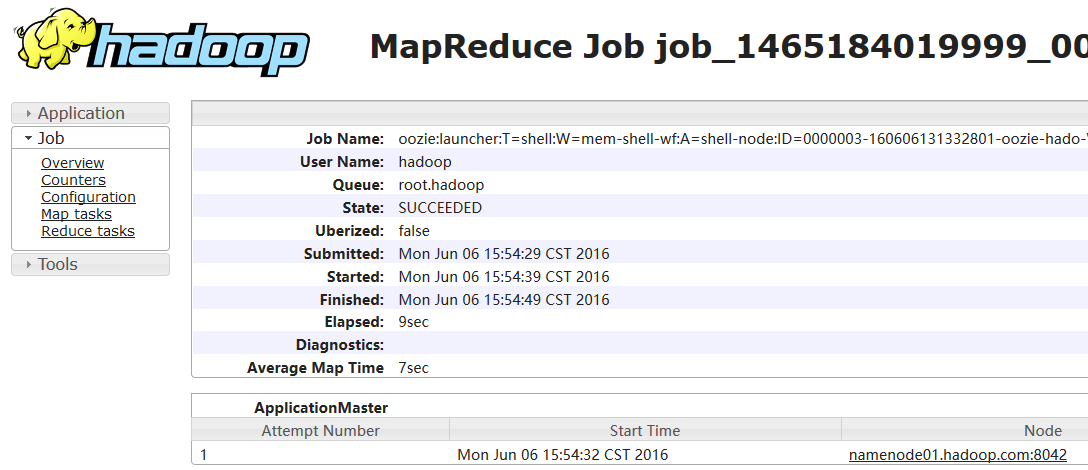

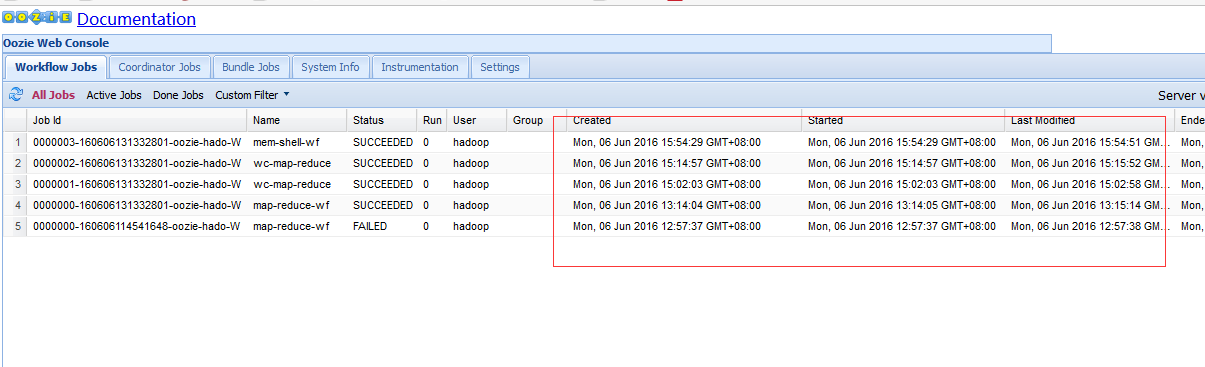

2.8 Check the results in the browser

3: Scheduling a shell script with Oozie

3.1 Create the configuration files:

    cd /home/hadoop/yangyang/oozie/examples/apps
    cp -ap shell/ ../../oozie-apps/
    cd ../../oozie-apps
    mv shell mem-shell

3.2 Write the shell script:

cd /home/hadoop/yangyang/oozie/oozie-apps/mem-shell

vim meminfo.sh

    #!/bin/bash
    /usr/bin/free -m >> /tmp/meminfo
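A shell action runs inside the Oozie launcher on whichever cluster node YARN picks, so /tmp/meminfo is written on that node, not necessarily on the Oozie server. A variant of meminfo.sh that records the time and node name with each snapshot makes the output easier to trace. This is a sketch; the function name snapshot_meminfo, the optional output argument and the /proc/meminfo fallback are additions not in the original script.

```bash
#!/bin/bash
# Sketch of a more traceable meminfo.sh (a variant, not the original script).
# Each run appends a header with the time and the node name, because the
# shell action runs on whichever node hosts the Oozie launcher.
snapshot_meminfo() {
    local out="$1"
    {
        echo "=== $(date '+%Y-%m-%d %H:%M:%S') $(uname -n)"
        if [ -x /usr/bin/free ]; then
            /usr/bin/free -m
        else
            cat /proc/meminfo   # fallback when procps (free) is not installed
        fi
    } >> "$out"
}

# The output file defaults to /tmp/meminfo as in the original script
snapshot_meminfo "${1:-/tmp/meminfo}"
```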

3.3 Configure job.properties and workflow.xml

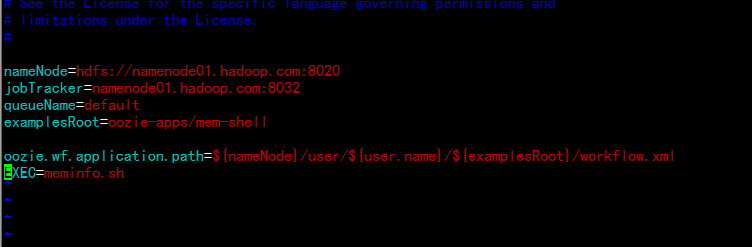

vim job.properties

    nameNode=hdfs://namenode01.hadoop.com:8020
    jobTracker=namenode01.hadoop.com:8032
    queueName=default
    examplesRoot=oozie-apps/mem-shell
    oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/workflow.xml
    EXEC=meminfo.sh

vim workflow.xml

    <workflow-app xmlns="uri:oozie:workflow:0.4" name="mem-shell-wf">
        <start to="shell-node"/>
        <action name="shell-node">
            <shell xmlns="uri:oozie:shell-action:0.2">
                <job-tracker>${jobTracker}</job-tracker>
                <name-node>${nameNode}</name-node>
                <configuration>
                    <property>
                        <name>mapred.job.queue.name</name>
                        <value>${queueName}</value>
                    </property>
                </configuration>
                <exec>${EXEC}</exec>
                <file>/user/hadoop/oozie-apps/mem-shell/${EXEC}#${EXEC}</file>
            </shell>
            <ok to="end"/>
            <error to="fail"/>
        </action>
        <kill name="fail">
            <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
        </kill>
        <end name="end"/>
    </workflow-app>
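In the file element, the text after # is the name of the symlink created in the action's working directory, which is why exec can refer to ${EXEC} directly. A small sketch of how such a value splits into HDFS path and link name; split_file_spec is a hypothetical helper, and falling back to the base name when there is no # is this sketch's own assumption.

```bash
#!/usr/bin/env bash
# Sketch: split_file_spec is a hypothetical helper. It prints "<path> <link>"
# for a <file> value of the form path#link; without '#' it assumes the link
# name is the file's base name.
split_file_spec() {
    local spec="$1" path link
    path="${spec%%#*}"
    if [ "$path" != "$spec" ]; then
        link="${spec#*#}"      # text after the first '#'
    else
        link="${spec##*/}"     # assumption: default to the base name
    fi
    printf '%s %s\n' "$path" "$link"
}

# Example:
#   split_file_spec '/user/hadoop/oozie-apps/mem-shell/meminfo.sh#meminfo.sh'
```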

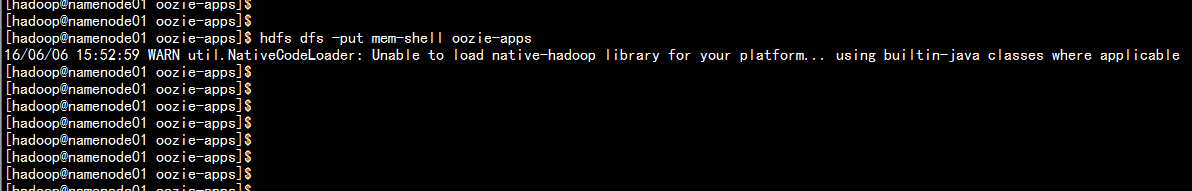

3.4 Upload the configuration files to HDFS

    cd /home/hadoop/yangyang/oozie/oozie-apps
    hdfs dfs -put mem-shell oozie-apps

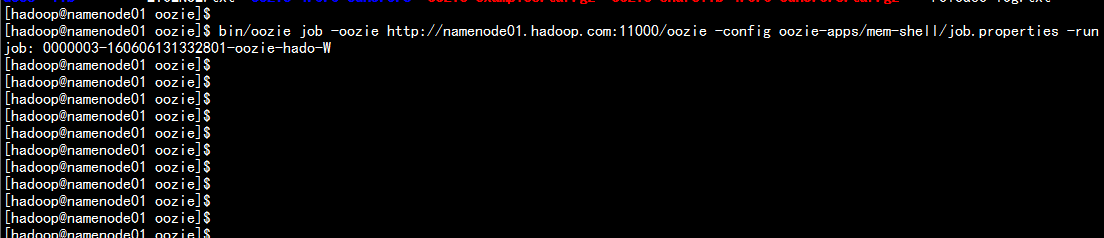

3.5 Run the shell script through Oozie

bin/oozie job -oozie http://namenode01.hadoop.com:11000/oozie -config oozie-apps/mem-shell/job.properties -run

4: Running a job periodically with an Oozie coordinator

4.1 Configure the time zone in the Oozie configuration file

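    cd /home/hadoop/yangyang/oozie/conf
    vim oozie-site.xml

Add the following properties:

    <property>
        <name>oozie.processing.timezone</name>
        <value>GMT+0800</value>
    </property>
    <property>
        <name>oozie.service.coord.check.maximum.frequency</name>
        <value>false</value>
    </property>

The first property makes Oozie interpret coordinator times in GMT+0800 instead of the default UTC. The second turns off the check that rejects coordinators running more often than every 5 minutes, which the 2-minute coordinator below would otherwise fail.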

4.2 Change the local time zone

As root:

    cp -p /etc/localtime /etc/localtime.bak
    rm -rf /etc/localtime
    cd /usr/share/zoneinfo/Asia/
    cp -p Shanghai /etc/localtime
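The same steps as a re-runnable function, sketched with the zoneinfo directory and target file as parameters so the logic can be tried on scratch files first; install_localtime is a hypothetical name. On systemd-based systems, timedatectl set-timezone Asia/Shanghai is a common alternative to copying the file by hand.

```bash
#!/usr/bin/env bash
# Sketch of step 4.2 as a function (install_localtime is a hypothetical name).
# Defaults match the commands above; run as root against the real paths.
install_localtime() {
    local zone="$1"
    local zoneinfo="${2:-/usr/share/zoneinfo}"
    local target="${3:-/etc/localtime}"
    if [ ! -f "$zoneinfo/$zone" ]; then
        echo "unknown zone: $zone" >&2
        return 1
    fi
    # Back up the current file once; later runs keep the first backup
    if [ -e "$target" ] && [ ! -e "$target.bak" ]; then
        cp -p "$target" "$target.bak" || return 1
    fi
    rm -f "$target"
    cp -p "$zoneinfo/$zone" "$target"
}

# Example (as root):
#   install_localtime Asia/Shanghai
```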

4.3 Edit oozie-console.js

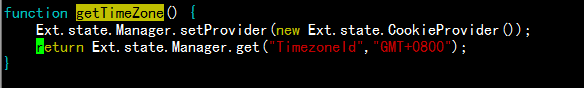

    cd /home/hadoop/yangyang/oozie/oozie-server/webapps/oozie
    vim oozie-console.js

Set the default time zone returned by getTimeZone() to GMT+0800:

    function getTimeZone() {
        Ext.state.Manager.setProvider(new Ext.state.CookieProvider());
        return Ext.state.Manager.get("TimezoneId","GMT+0800");
    }

4.4 Restart the Oozie service

    bin/oozie-stop.sh
    bin/oozie-start.sh
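After a restart the server needs a moment before it accepts jobs. A polling sketch, assuming that bin/oozie admin -oozie http://namenode01.hadoop.com:11000/oozie -status prints System mode: NORMAL once the server is ready; wait_for_oozie and WAIT_INTERVAL are hypothetical names, and the status command is passed in as arguments so the loop can be tried with a stub.

```bash
#!/usr/bin/env bash
# Sketch: wait_for_oozie and WAIT_INTERVAL are hypothetical names.
# Usage: wait_for_oozie <tries> <status command...>
# Returns 0 as soon as the command's output mentions NORMAL, 1 after <tries>.
wait_for_oozie() {
    local tries="$1"; shift
    local i out
    for ((i = 1; i <= tries; i++)); do
        out=$("$@" 2>/dev/null)
        case "$out" in
            *NORMAL*) return 0 ;;
        esac
        sleep "${WAIT_INTERVAL:-5}"
    done
    return 1
}

# Example:
#   wait_for_oozie 24 bin/oozie admin -oozie http://namenode01.hadoop.com:11000/oozie -status
```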

4.5 Check the current time shown by Oozie

4.6 Configure job.properties, workflow.xml and coordinator.xml

    cd /home/hadoop/yangyang/oozie/examples/apps
    cp -ap cron ../../oozie-apps/
    cd ../../oozie-apps/cron
    rm -rf job.properties workflow.xml
    cd /home/hadoop/yangyang/oozie/oozie-apps/mem-shell
    cp -p * ../cron

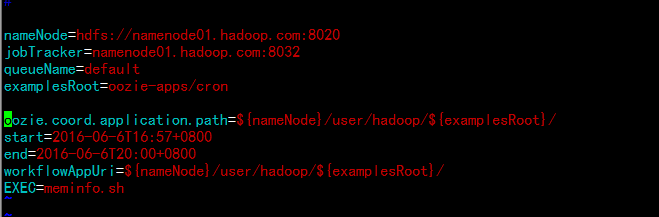

Configure job.properties (adjust start and end to the time window you want the coordinator to run in):

    vim job.properties

    nameNode=hdfs://namenode01.hadoop.com:8020
    jobTracker=namenode01.hadoop.com:8032
    queueName=default
    examplesRoot=oozie-apps/cron
    oozie.coord.application.path=${nameNode}/user/hadoop/${examplesRoot}/
    start=2016-06-06T16:57+0800
    end=2016-06-06T20:00+0800
    workflowAppUri=${nameNode}/user/hadoop/${examplesRoot}/
    EXEC=meminfo.sh
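A coordinator materializes one action per frequency interval, starting at start and stopping before end. The sketch below lists those nominal times for the window above with a 2-minute frequency; nominal_times is a hypothetical helper that only handles HH:MM times on a single day, whereas real coordinators work with full dates and time zones. For 16:57 to 20:00 it prints 92 times, from 16:57 to 19:59.

```bash
#!/usr/bin/env bash
# Sketch: nominal_times is a hypothetical helper.
# Usage: nominal_times <start HH:MM> <end HH:MM> <frequency in minutes>
# Prints every start + n*frequency that is strictly before end (same day only).
nominal_times() {
    local start="$1" end="$2" freq="$3" t
    # 10# forces base 10 so that "08" and "09" are not read as octal
    local s=$((10#${start%%:*} * 60 + 10#${start##*:}))
    local e=$((10#${end%%:*} * 60 + 10#${end##*:}))
    for ((t = s; t < e; t += freq)); do
        printf '%02d:%02d\n' $((t / 60)) $((t % 60))
    done
}

# Example:
#   nominal_times 16:57 20:00 2
```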

Configure workflow.xml:

    vim workflow.xml

    <workflow-app xmlns="uri:oozie:workflow:0.4" name="memcron-shell-wf">
        <start to="shell-node"/>
        <action name="shell-node">
            <shell xmlns="uri:oozie:shell-action:0.2">
                <job-tracker>${jobTracker}</job-tracker>
                <name-node>${nameNode}</name-node>
                <configuration>
                    <property>
                        <name>mapred.job.queue.name</name>
                        <value>${queueName}</value>
                    </property>
                </configuration>
                <exec>${EXEC}</exec>
                <file>/user/hadoop/oozie-apps/cron/${EXEC}#${EXEC}</file>
            </shell>
            <ok to="end"/>
            <error to="fail"/>
        </action>
        <kill name="fail">
            <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
        </kill>
        <end name="end"/>
    </workflow-app>

Configure coordinator.xml:

    vim coordinator.xml

    <coordinator-app name="cron-coord" frequency="${coord:minutes(2)}" start="${start}" end="${end}" timezone="GMT+0800"
                     xmlns="uri:oozie:coordinator:0.2">
        <action>
            <workflow>
                <app-path>${workflowAppUri}</app-path>
                <configuration>
                    <property>
                        <name>jobTracker</name>
                        <value>${jobTracker}</value>
                    </property>
                    <property>
                        <name>nameNode</name>
                        <value>${nameNode}</value>
                    </property>
                    <property>
                        <name>queueName</name>
                        <value>${queueName}</value>
                    </property>
                    <property>
                        <name>EXEC</name>
                        <value>${EXEC}</value>
                    </property>
                </configuration>
            </workflow>
        </action>
    </coordinator-app>
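The coordinator above runs every 2 minutes, which is below the 5-minute minimum Oozie enforces unless oozie.service.coord.check.maximum.frequency is set to false as in 4.1. A small pre-submit check, sketched under the assumption that the frequency is written as ${coord:minutes(N)}; coord_frequency_minutes and check_coord_frequency are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: hypothetical helpers; only the ${coord:minutes(N)} form is handled.
# Print N from the first coord:minutes(N) found in the file
coord_frequency_minutes() {
    grep -o 'coord:minutes([0-9]*)' "$1" | head -n1 | tr -cd '0-9'
}

# Return 1 (with a warning) when N < 5, 2 when no frequency is found
check_coord_frequency() {
    local n
    n=$(coord_frequency_minutes "$1")
    if [ -z "$n" ]; then
        echo "no coord:minutes(N) frequency found" >&2
        return 2
    fi
    if [ "$n" -lt 5 ]; then
        echo "frequency ${n}m < 5m: needs oozie.service.coord.check.maximum.frequency=false" >&2
        return 1
    fi
    return 0
}

# Example:
#   check_coord_frequency oozie-apps/cron/coordinator.xml
```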

4.7 Upload the configuration files to HDFS:

hdfs dfs -put cron oozie-apps

4.8 Run the job with the oozie command

bin/oozie job -oozie http://namenode01.hadoop.com:11000/oozie -config oozie-apps/cron/job.properties -run
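Submitting the cron application returns a coordinator job ID rather than a workflow ID; each materialized run then starts its own workflow job. A sketch that classifies IDs by their suffix (-W workflow, -C coordinator, -B bundle, with @n added for coordinator actions); job_type is a hypothetical helper. The commented commands show how to inspect or stop the coordinator before its end time.

```bash
#!/usr/bin/env bash
# Sketch: job_type is a hypothetical helper based on Oozie's job ID suffixes.
job_type() {
    case "$1" in
        *-C@*) echo coordinator-action ;;
        *-W)   echo workflow ;;
        *-C)   echo coordinator ;;
        *-B)   echo bundle ;;
        *)     echo unknown ;;
    esac
}

# Example (the ID comes from the "job: <id>" line printed by -run):
#   bin/oozie job -oozie http://namenode01.hadoop.com:11000/oozie -info <coordinator-id>
#   bin/oozie job -oozie http://namenode01.hadoop.com:11000/oozie -kill <coordinator-id>
```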

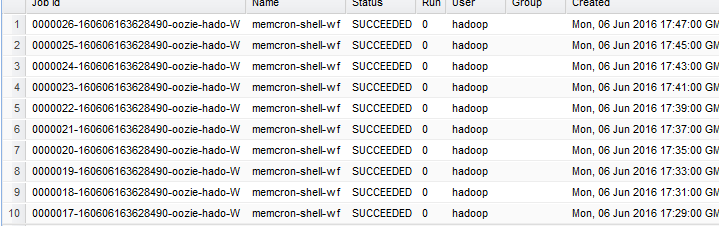

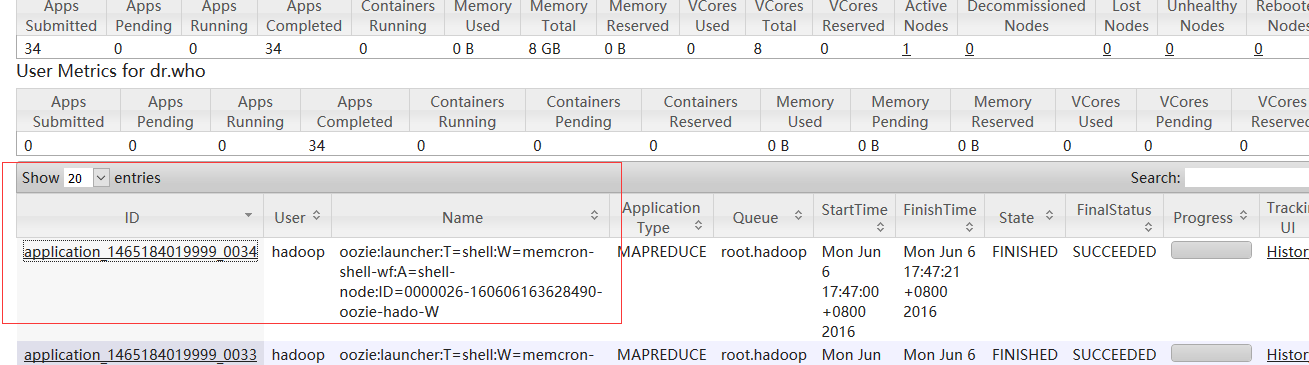

4.9 Check the job in the web console