@zhangyy

2020-09-30T09:08:16.000000Z


Compiling Flink 1.10.1 and Integrating It with CDH 6

Big Data Operations column

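- 1: Preparing the build environment packages

- 2: Deploying the build environment

- 3: Building the CDH parcels and CSD files from the compiled Flink

- 4: Building and verifying the Flink parcel package and CSD files

- 5: Integrating Flink 1.10.1 into CDH 6.3.2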

1: Preparing the build environment packages

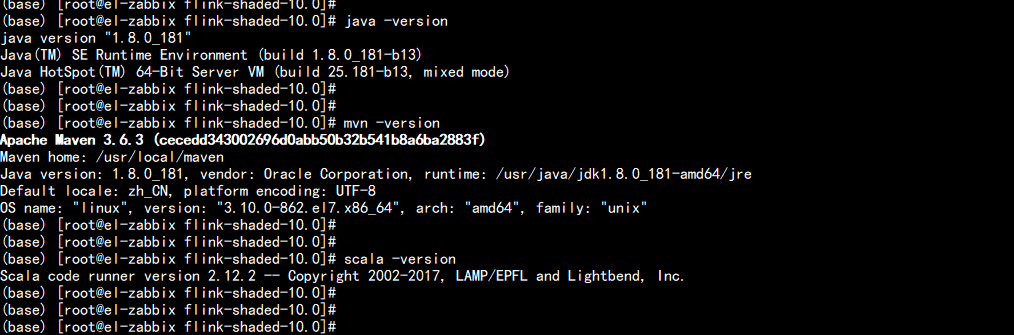

Environment: CDH 6.3.2 (Hadoop 3.0.0), Flink 1.10.1, CentOS 7.7, Maven 3.6.3, Scala 2.12, and JDK 1.8.

Installation packages:

- Flink 1.10.1 source tarball: https://mirrors.tuna.tsinghua.edu.cn/apache/flink/flink-1.10.1/flink-1.10.1-src.tgz
- flink-shaded 10.0 source tarball: https://mirrors.tuna.tsinghua.edu.cn/apache/flink/flink-shaded-10.0/flink-shaded-10.0-src.tgz
- Maven 3.6.3: https://mirror.bit.edu.cn/apache/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz

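2: Deploying the build environment

Extract the JDK, Maven, and Scala and move each one under /usr/local:

    tar -zxvf jdk1.8.181.tar.gz
    mv jdk1.8.181 /usr/local/jdk
    tar -zxvf apache-maven-3.6.3-bin.tar.gz
    mv apache-maven-3.6.3 /usr/local/maven
    tar -zxvf scala-2.12.2.tgz
    mv scala-2.12.2 /usr/local/scala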

Edit /etc/profile (vim /etc/profile) and add the following. The JDK was moved to /usr/local/jdk in the previous step, so JAVA_HOME points there:

    JAVA_HOME=/usr/local/jdk
    JRE_HOME=/usr/local/jdk/jre
    PATH=$PATH:/usr/local/jdk/bin
    CLASSPATH=.:/usr/local/jdk/jre/lib
    export PATH JAVA_HOME
    export MVN_HOME=/usr/local/maven
    export PATH=$MVN_HOME/bin:$PATH
    export SCALA_HOME=/usr/local/scala
    export PATH=$SCALA_HOME/bin:$PATH
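Once the profile has been reloaded, it helps to confirm that the three tools are actually reachable before starting a long build. The following is a minimal sketch, not part of the original procedure; the function name `check_tool` is made up for illustration.

```shell
#!/bin/sh
# check_tool is a hypothetical helper: it reports whether a command
# can be found through the current PATH.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: found at $(command -v "$1")"
    else
        echo "$1: NOT on PATH"
    fi
}

# The three tools installed above; a missing one usually points at a
# typo in /etc/profile or a profile that has not been re-sourced.
for tool in java mvn scala; do
    check_tool "$tool"
done
```

If any tool is reported as missing, re-check the paths in /etc/profile and log in again (or source the file) before continuing.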

In Maven's settings.xml (for this install, /usr/local/maven/conf/settings.xml), add the following between <mirrors> and </mirrors>. Maven uses only the first mirror that matches a repository, so the order matters:

    <mirror>
      <id>alimaven</id>
      <mirrorOf>central</mirrorOf>
      <name>aliyun maven</name>
      <url>http://maven.aliyun.com/nexus/content/repositories/central/</url>
    </mirror>
    <mirror>
      <id>alimaven-public</id>
      <name>aliyun maven</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
      <mirrorOf>central</mirrorOf>
    </mirror>
    <mirror>
      <id>central</id>
      <name>Maven Repository Switchboard</name>
      <url>http://repo1.maven.org/maven2/</url>
      <mirrorOf>central</mirrorOf>
    </mirror>
    <mirror>
      <id>repo2</id>
      <mirrorOf>central</mirrorOf>
      <name>Human Readable Name for this Mirror.</name>
      <url>http://repo2.maven.org/maven2/</url>
    </mirror>
    <mirror>
      <id>ibiblio</id>
      <mirrorOf>central</mirrorOf>
      <name>Human Readable Name for this Mirror.</name>
      <url>http://mirrors.ibiblio.org/pub/mirrors/maven2/</url>
    </mirror>
    <mirror>
      <id>jboss-public-repository-group</id>
      <mirrorOf>central</mirrorOf>
      <name>JBoss Public Repository Group</name>
      <url>http://repository.jboss.org/nexus/content/groups/public</url>
    </mirror>
    <mirror>
      <id>google-maven-central</id>
      <name>Google Maven Central</name>
      <url>https://maven-central.storage.googleapis.com</url>
      <mirrorOf>central</mirrorOf>
    </mirror>
    <!-- Mirror of the central repository in China -->
    <mirror>
      <id>maven.net.cn</id>
      <name>one of the central mirrors in china</name>
      <url>http://maven.net.cn/content/groups/public/</url>
      <mirrorOf>central</mirrorOf>
    </mirror>

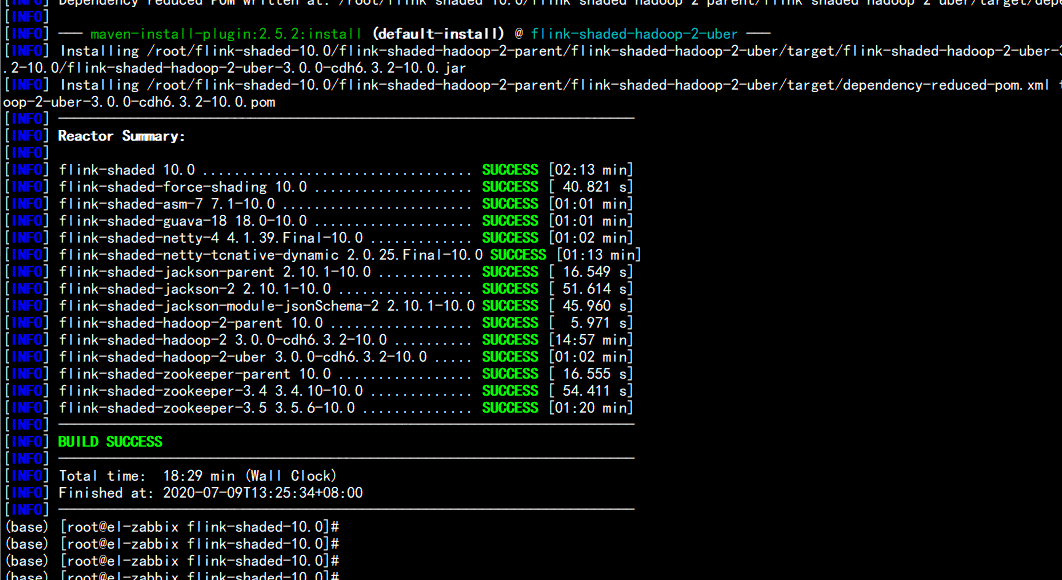

2.2 Compiling flink-shaded

Step 1: Download and extract the source tarball:

    wget https://mirrors.tuna.tsinghua.edu.cn/apache/flink/flink-shaded-10.0/flink-shaded-10.0-src.tgz
    tar -zxvf flink-shaded-10.0-src.tgz

Step 2: Edit the pom (vim flink-shaded-10.0/pom.xml) so that it contains the following vendor-repos profile:

    <profile>
      <id>vendor-repos</id>
      <activation>
        <property>
          <name>vendor-repos</name>
        </property>
      </activation>
      <!-- Add vendor maven repositories -->
      <repositories>
        <!-- Cloudera -->
        <repository>
          <id>cloudera-releases</id>
          <url>https://repository.cloudera.com/artifactory/cloudera-repos</url>
          <releases><enabled>true</enabled></releases>
          <snapshots><enabled>false</enabled></snapshots>
        </repository>
        <!-- Hortonworks -->
        <repository>
          <id>HDPReleases</id>
          <name>HDP Releases</name>
          <url>https://repo.hortonworks.com/content/repositories/releases/</url>
          <snapshots><enabled>false</enabled></snapshots>
          <releases><enabled>true</enabled></releases>
        </repository>
        <repository>
          <id>HortonworksJettyHadoop</id>
          <name>HDP Jetty</name>
          <url>https://repo.hortonworks.com/content/repositories/jetty-hadoop</url>
          <snapshots><enabled>false</enabled></snapshots>
          <releases><enabled>true</enabled></releases>
        </repository>
        <!-- MapR -->
        <repository>
          <id>mapr-releases</id>
          <url>https://repository.mapr.com/maven/</url>
          <snapshots><enabled>false</enabled></snapshots>
          <releases><enabled>true</enabled></releases>
        </repository>
      </repositories>
    </profile>

Step 3: Compile. Change into the flink-shaded-10.0 directory and run:

    mvn -T2C clean install -DskipTests -Pvendor-repos -Dhadoop.version=3.0.0-cdh6.3.2 -Dscala-2.12 -Drat.skip=true

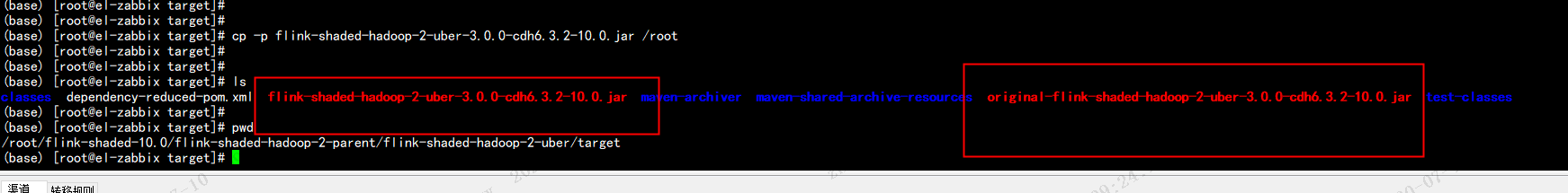

The build produces two jars:

    flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar
    original-flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar

Both are under /root/flink-shaded-10.0/flink-shaded-hadoop-2-parent/flink-shaded-hadoop-2-uber/target. From that directory, move them to /root:

    mv flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar /root
    mv original-flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar /root
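As the file names above suggest, the uber jar's name carries both the Hadoop version passed to the build and the flink-shaded version, so checking for the exact name is a quick way to see whether the CDH version was picked up. A small sketch, assuming the directory layout described in this article; the variable names are illustrative only.

```shell
#!/bin/sh
# Versions taken from the mvn command used in this article.
HADOOP_VERSION="3.0.0-cdh6.3.2"
SHADED_VERSION="10.0"

# Output directory as described in the article; adjust it if
# flink-shaded was unpacked somewhere other than /root.
TARGET_DIR="/root/flink-shaded-10.0/flink-shaded-hadoop-2-parent/flink-shaded-hadoop-2-uber/target"

# Expected jar name, assembled from the two versions.
UBER_JAR="flink-shaded-hadoop-2-uber-${HADOOP_VERSION}-${SHADED_VERSION}.jar"

if [ -f "${TARGET_DIR}/${UBER_JAR}" ]; then
    echo "found ${UBER_JAR}"
else
    echo "missing ${UBER_JAR} in ${TARGET_DIR}"
fi
```

Run it before moving the jars to /root, since the check looks in the build's target directory.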

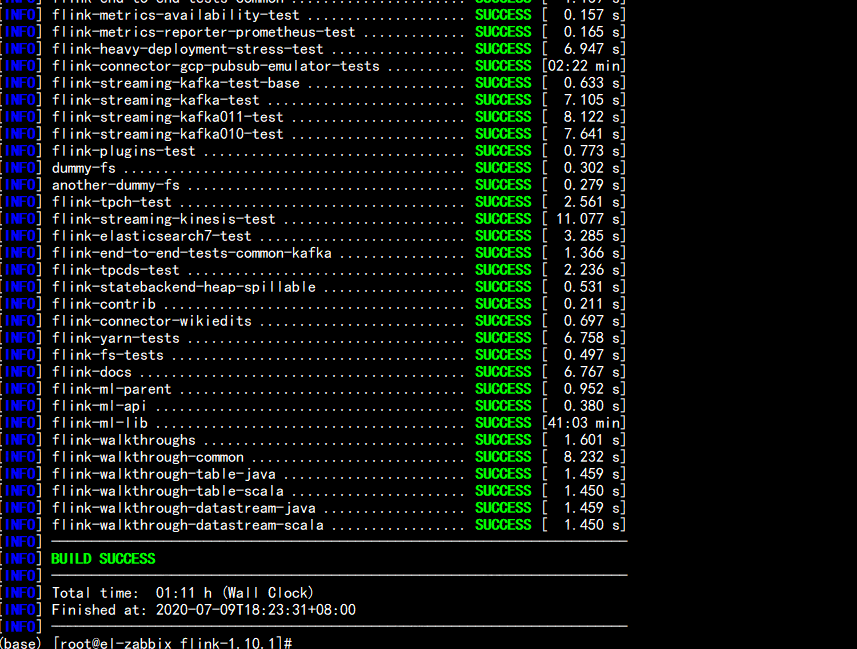

2.3 Compiling the Flink 1.10.1 source

Step 1: Download and extract the source tarball:

    wget https://mirrors.tuna.tsinghua.edu.cn/apache/flink/flink-1.10.1/flink-1.10.1-src.tgz
    tar -zxvf flink-1.10.1-src.tgz

Step 2: Compile:

    cd flink-1.10.1
    mvn clean install -DskipTests -Dfast -Drat.skip=true -Dhadoop.version=3.0.0-cdh6.3.2 -Pvendor-repos -Dinclude-hadoop -Dscala-2.12 -T10C
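Maven does not reject an unknown `-D` property, so a misspelled property name is silently ignored and the build falls back to its default Hadoop version. One way to reduce that risk is to assemble the command from variables and print it for review first. The sketch below is illustrative: `flink_build_cmd` is a made-up helper, and its default thread setting of `1C` is an assumption, not something from the article.

```shell
#!/bin/sh
# flink_build_cmd is a hypothetical helper that prints the Flink build
# command for a given Hadoop/CDH version and Maven thread setting.
flink_build_cmd() {
    hadoop_version="$1"
    threads="${2:-1C}"   # assumed default; the article uses 10C
    echo "mvn clean install -DskipTests -Dfast -Drat.skip=true" \
         "-Dhadoop.version=${hadoop_version}" \
         "-Pvendor-repos -Dinclude-hadoop -Dscala-2.12 -T${threads}"
}

# Print the command for the versions used in this article.
flink_build_cmd 3.0.0-cdh6.3.2 10C
```

After checking the printed line, it can be pasted into the shell from inside the flink-1.10.1 directory.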

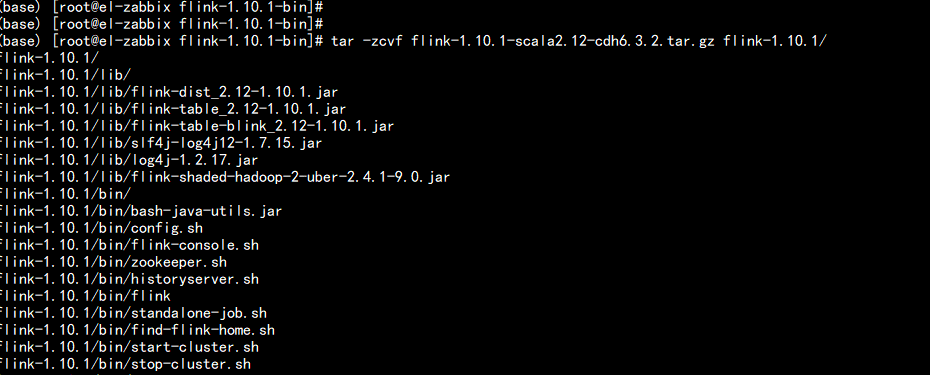

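Step 3: Package the compiled files. The build output is in flink-1.10.1/flink-dist/target/flink-1.10.1-bin, which contains a flink-1.10.1 directory holding the compiled distribution. Move the two flink-shaded jars (placed in /root earlier) into its lib directory, archive the directory, and move the archive to /root:

    cd flink-1.10.1/flink-dist/target/flink-1.10.1-bin
    mv /root/original-flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar /root/flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar flink-1.10.1/lib
    tar -zcvf flink-1.10.1-scala2.12-cdh6.3.2.tar.gz flink-1.10.1/
    mv flink-1.10.1-scala2.12-cdh6.3.2.tar.gz /root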

3: Building the CDH parcels and CSD files from the compiled Flink

3.1 Downloading the scripts for building the parcel package and CSD files

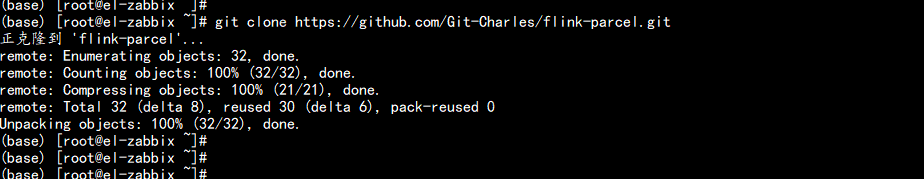

Download the scripts for building the parcel and CSD files:

    git clone https://github.com/Git-Charles/flink-parcel.git

3.2 Copying the compiled Flink tarball into flink-parcel

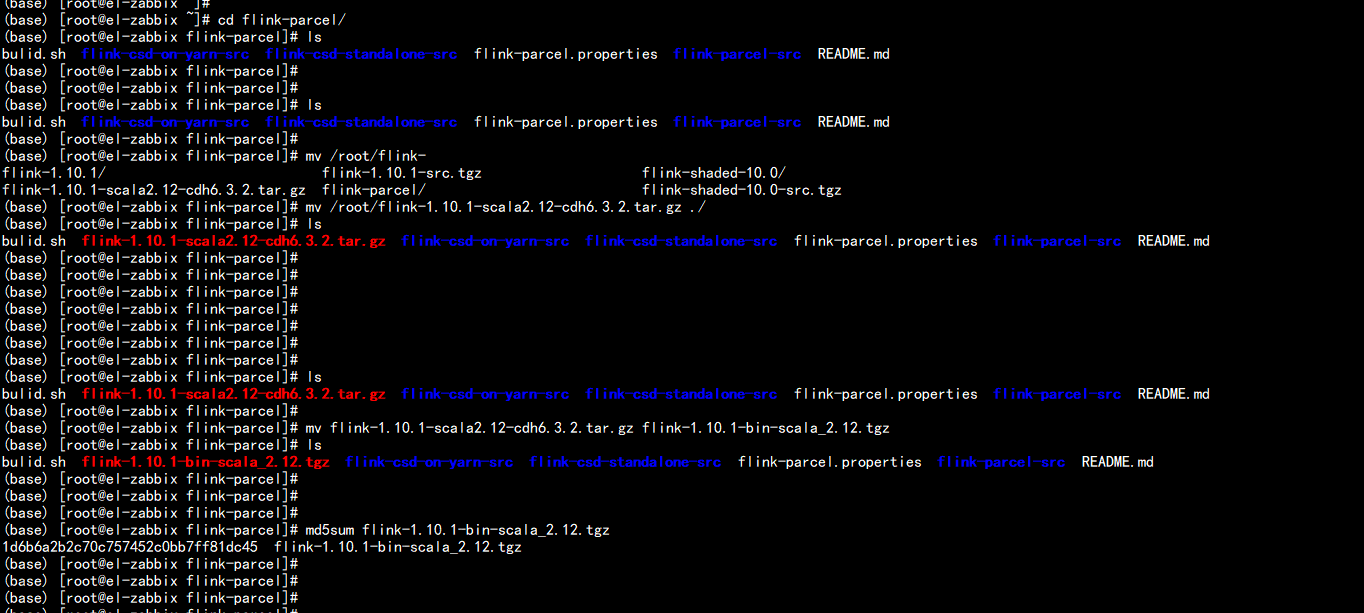

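Move the tarball into the cloned directory, rename it, and compute its MD5 checksum:

    cd flink-parcel
    mv /root/flink-1.10.1-scala2.12-cdh6.3.2.tar.gz ./
    mv flink-1.10.1-scala2.12-cdh6.3.2.tar.gz flink-1.10.1-bin-scala_2.12.tgz
    md5sum flink-1.10.1-bin-scala_2.12.tgz

3.3 Editing the configuration file flink-parcel.properties

Edit the file (vim flink-parcel.properties). FLINK_URL must point to where the renamed tarball is served, and FLINK_MD5 must be the checksum printed by md5sum:

    # Flink download URL
    FLINK_URL=http://10.60.1.110/flink-1.10.1-bin-scala_2.12.tgz
    FLINK_MD5=f12ae2d502f9822fd2eeb53ad9d30fbd
    # Flink version
    FLINK_VERSION=1.10.1
    # Extension version
    EXTENS_VERSION=BIN-SCALA_2.12
    # Operating system version, using CentOS as the example
    OS_VERSION=7
    # CDH minor version range
    CDH_MIN_FULL=5.2.0
    CDH_MAX_FULL=6.3.3
    # CDH major version range
    CDH_MIN=5
    CDH_MAX=6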
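Copying the checksum by hand is easy to get wrong, so the FLINK_MD5 line can also be filled in by a script. The following is a minimal sketch, assuming GNU `md5sum` is available; `update_flink_md5` is a made-up helper name and is not part of the flink-parcel scripts.

```shell
#!/bin/sh
# update_flink_md5 is a hypothetical helper: it replaces the FLINK_MD5
# line in a properties file with the MD5 checksum of the given tarball.
update_flink_md5() {
    tarball="$1"
    props="$2"
    sum=$(md5sum "$tarball" | awk '{print $1}')
    # Write to a temporary file first so the original is only
    # replaced when sed succeeds.
    sed "s/^FLINK_MD5=.*/FLINK_MD5=${sum}/" "$props" > "${props}.tmp" &&
        mv "${props}.tmp" "$props"
    echo "$sum"
}

# Usage inside the flink-parcel directory (file names from this article):
# update_flink_md5 flink-1.10.1-bin-scala_2.12.tgz flink-parcel.properties
```

The function prints the checksum it wrote, which makes it easy to compare against the output of a manual md5sum run.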

4: Building and verifying the Flink parcel package and CSD files

4.1 Building the Flink parcel package

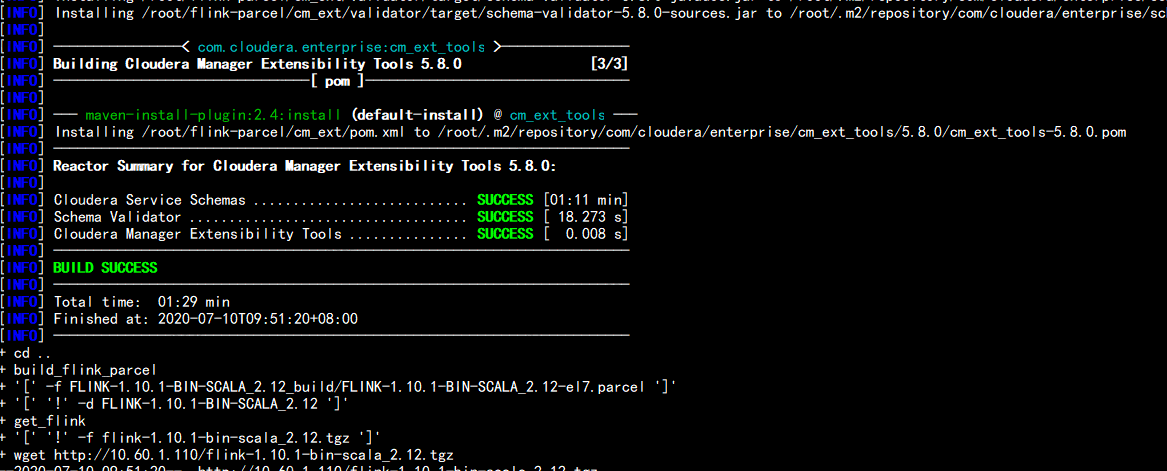

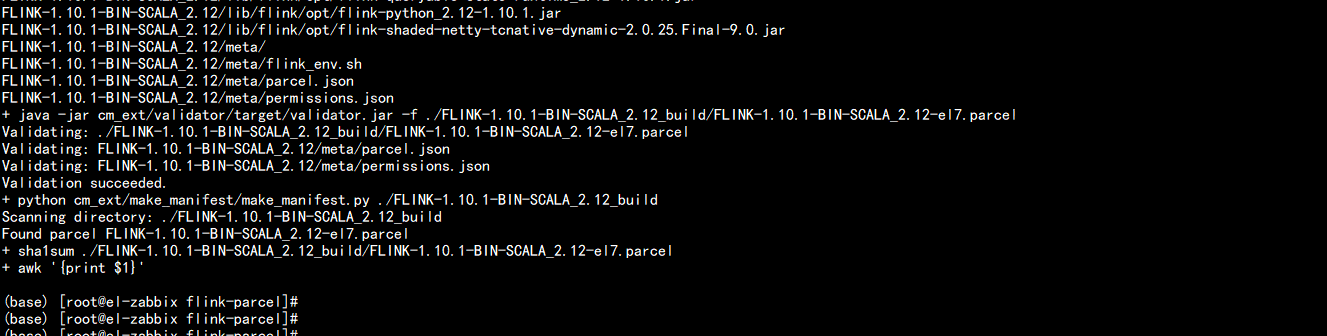

    cd flink-parcel
    chmod +x build.sh
    sh build.sh parcel

4.2 Building the Flink on YARN CSD jar

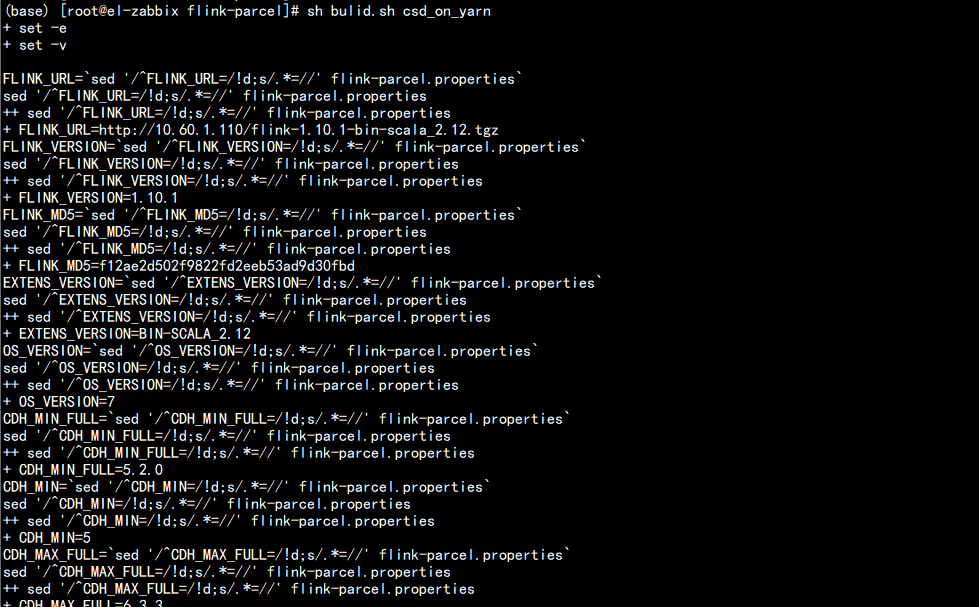

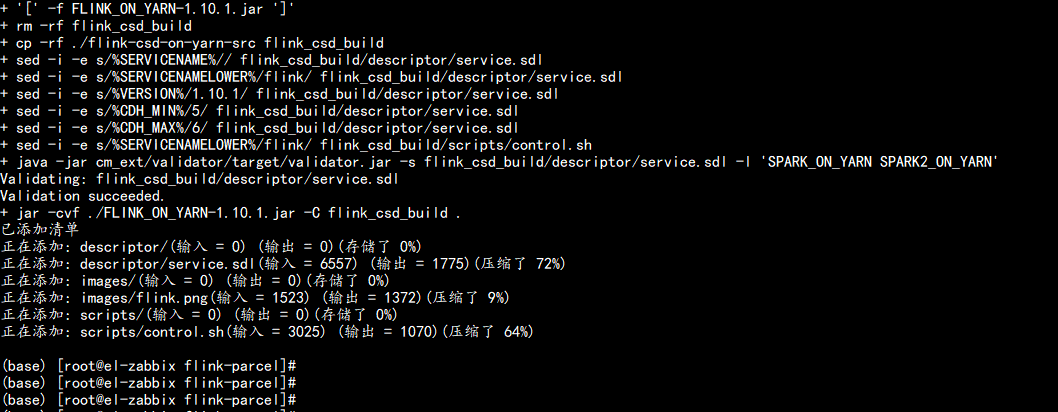

    sh build.sh csd_on_yarn

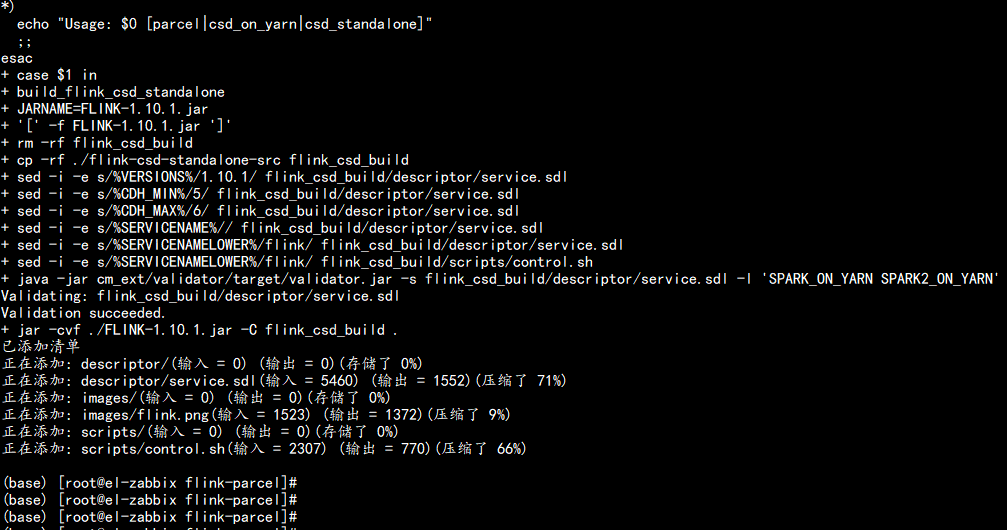

4.3 Building the Flink standalone CSD jar

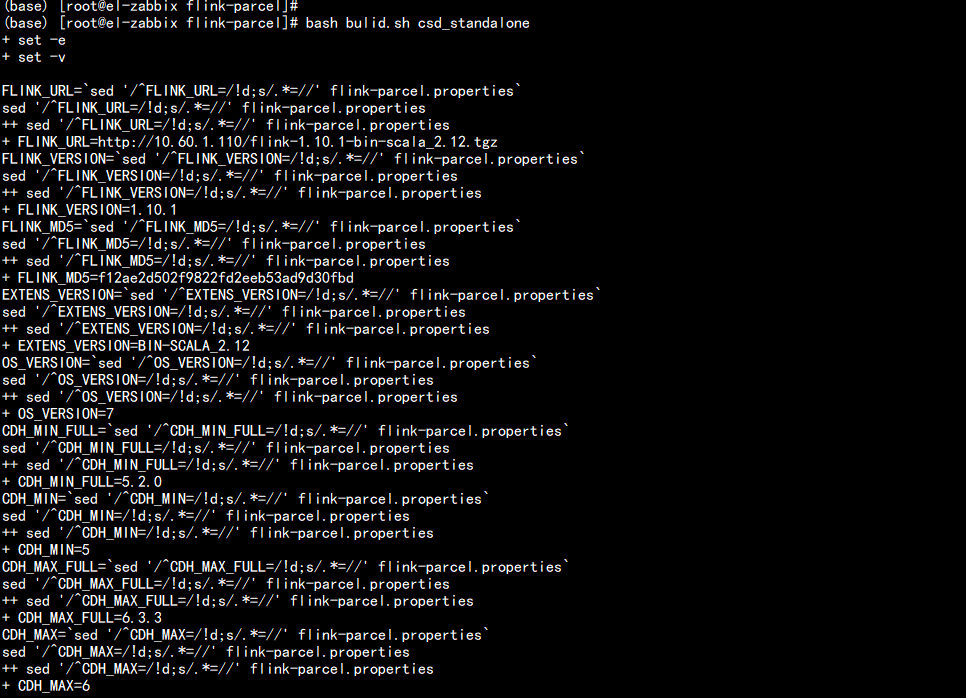

    bash build.sh csd_standalone

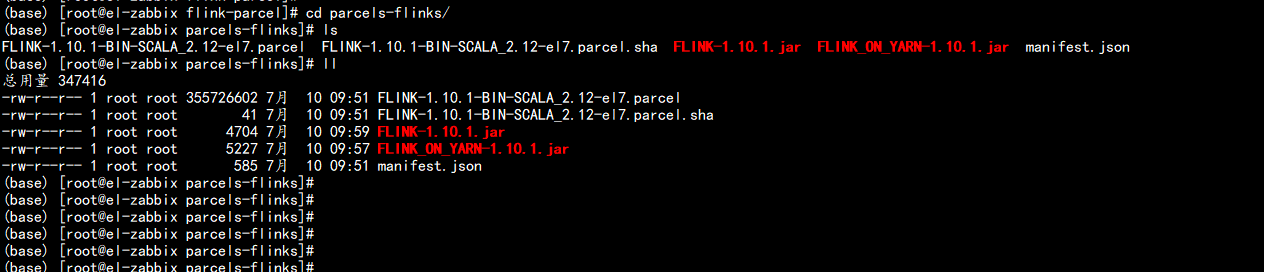

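4.4 Collecting the Flink parcels and jars

Gather the CSD jars and the parcel build output into one directory and archive it:

    mkdir parcels-flink
    mv *.jar parcels-flink/
    cd FLINK-1.10.1-BIN-SCALA_2.12_build/
    mv * ../parcels-flink/
    cd ..
    tar -zcvf parcels-flink.tar.gz parcels-flink

Download this archive and take it to the CDH 6.3.2 cluster for integration.

5: Integrating Flink 1.10.1 into CDH 6.3.2

5.1 Integrating with CDH 6.3.2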

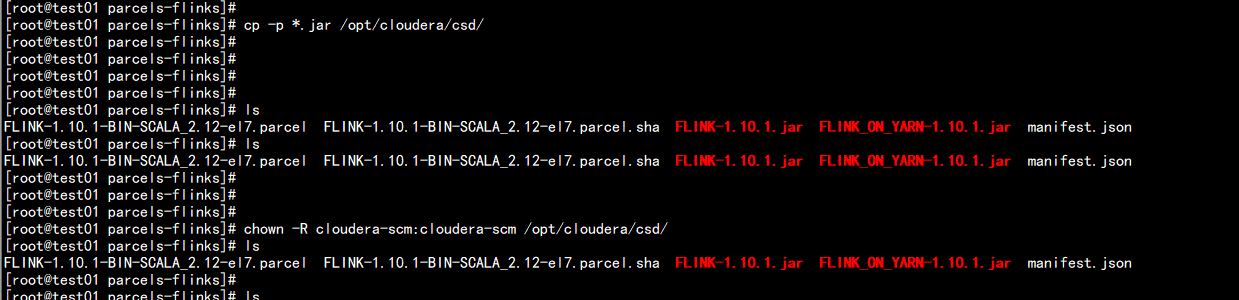

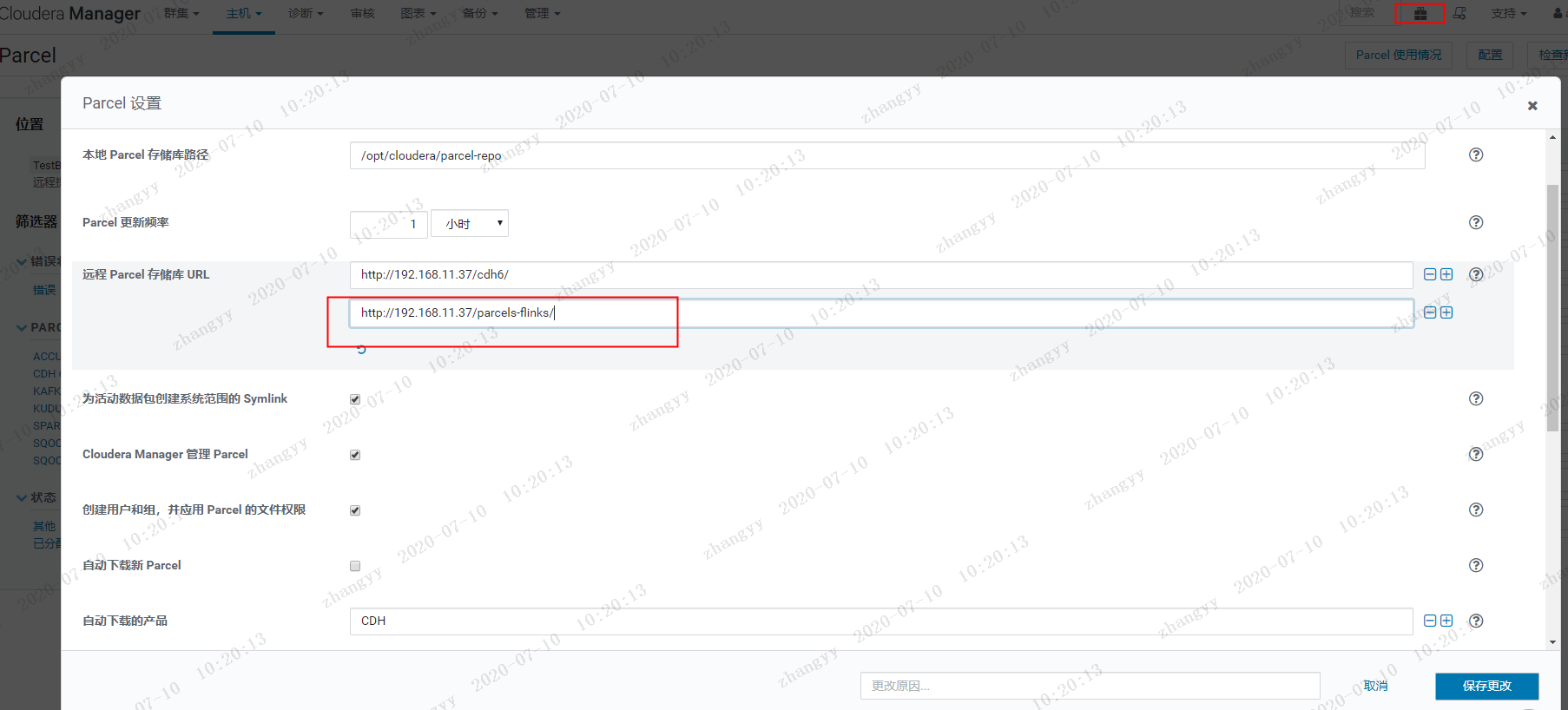

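Upload parcels-flink.tar.gz to /var/www/html/ on the Cloudera Manager server, extract it, copy the CSD jars into place, and restart the server:

    cd /var/www/html/
    tar -zxvf parcels-flink.tar.gz
    cd parcels-flink
    cp -p *.jar /opt/cloudera/csd/
    chown -R cloudera-scm:cloudera-scm /opt/cloudera/csd/
    service cloudera-scm-server restart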

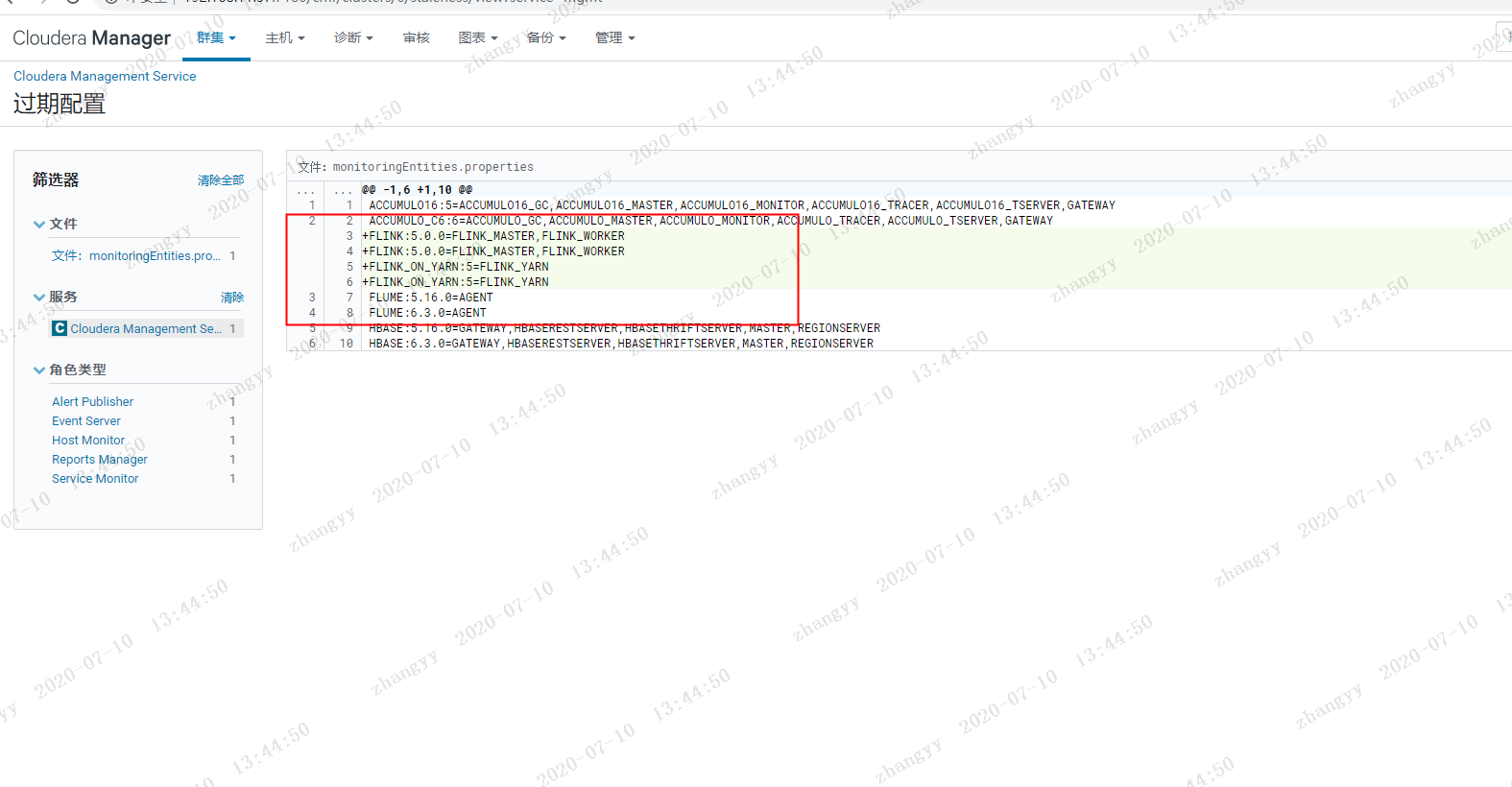

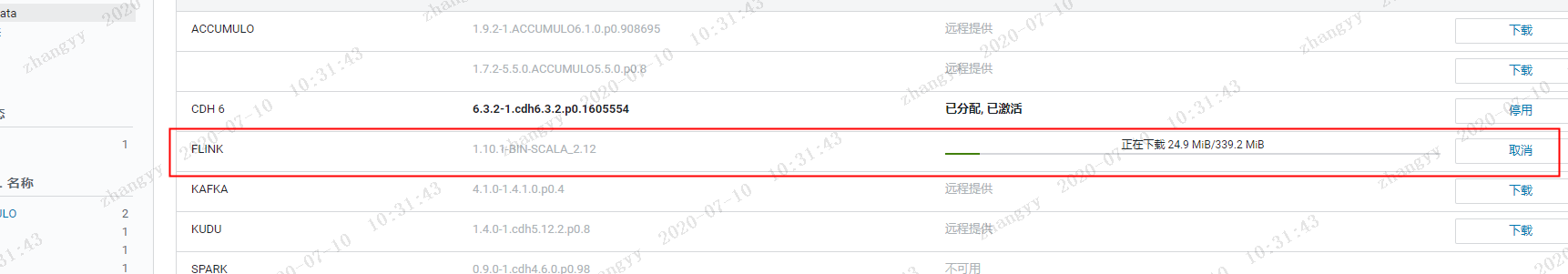

Download, distribute, and activate the Flink parcel.

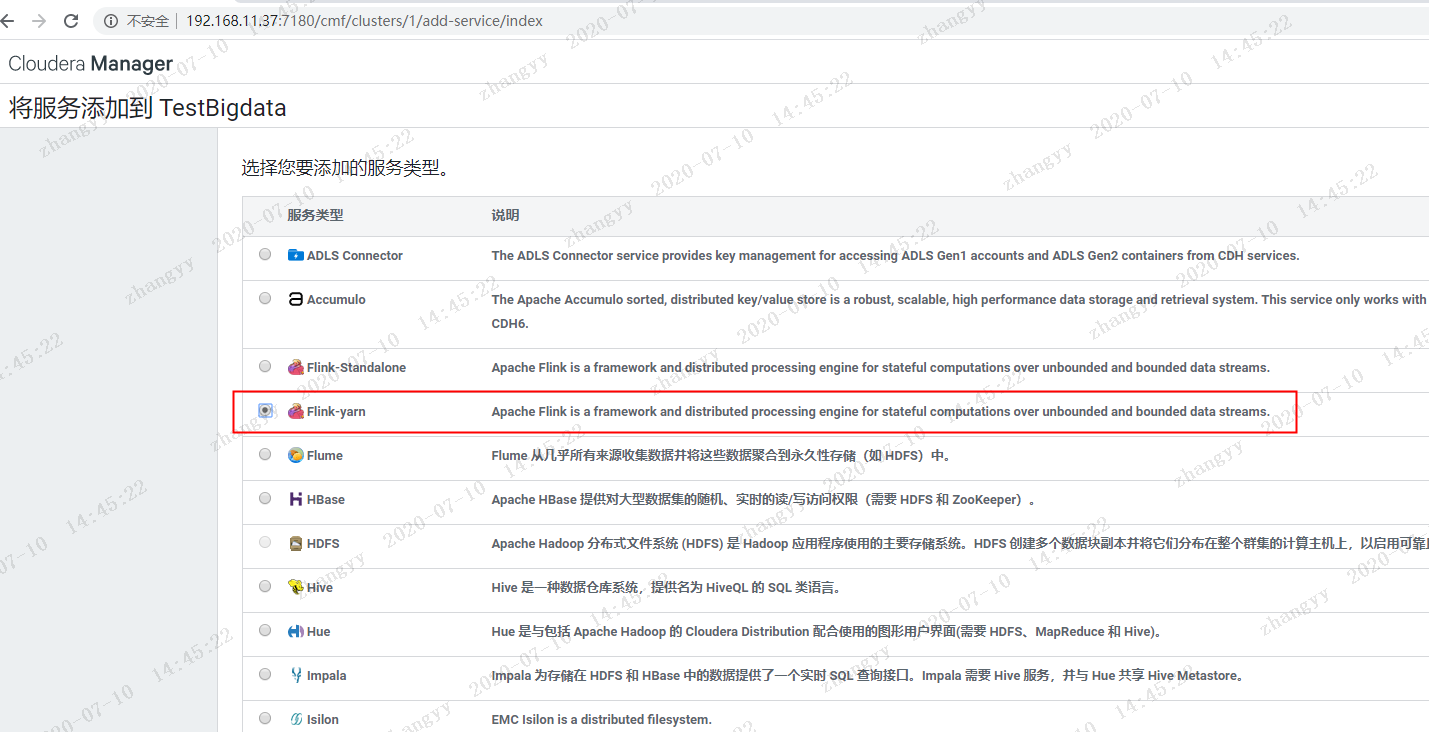

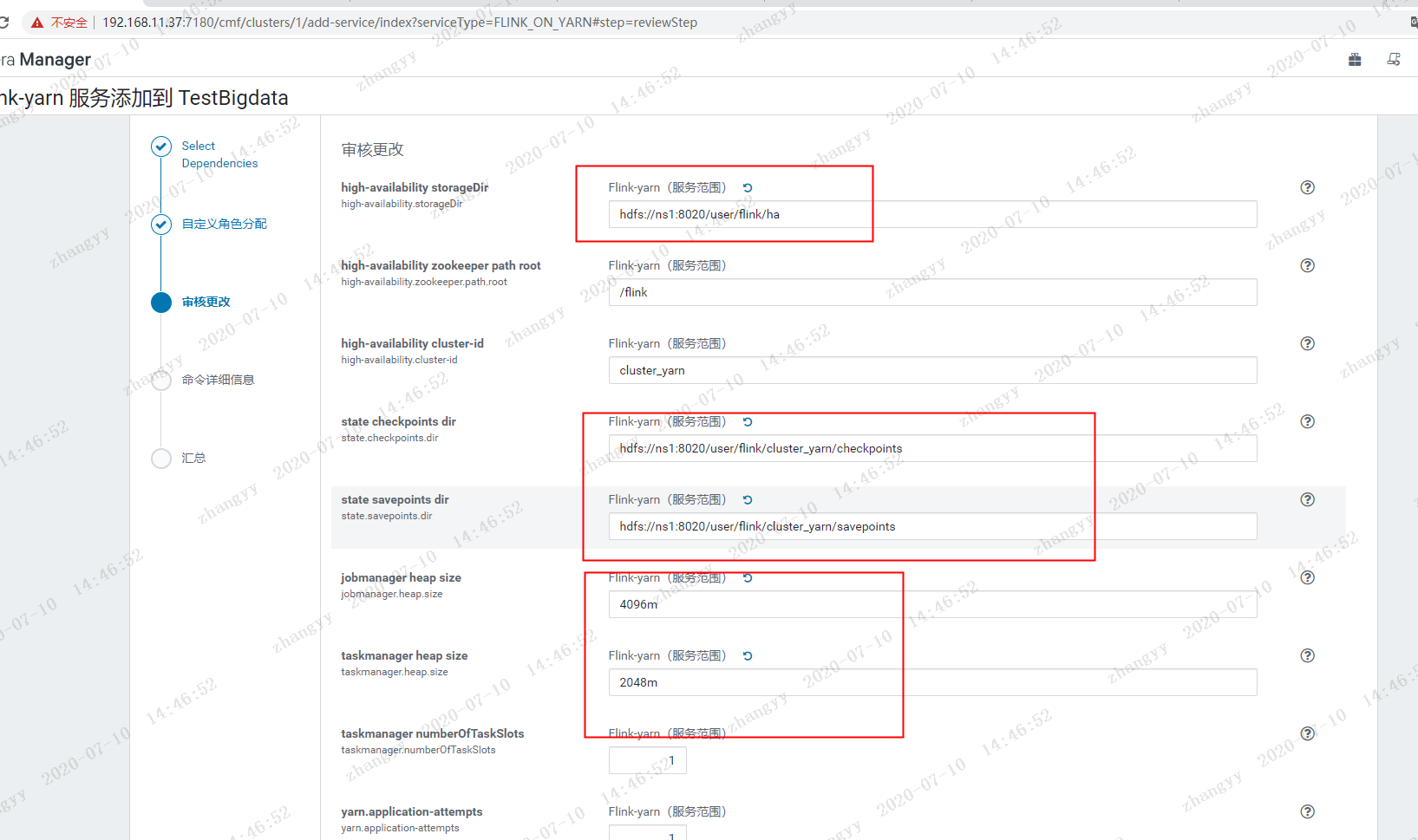

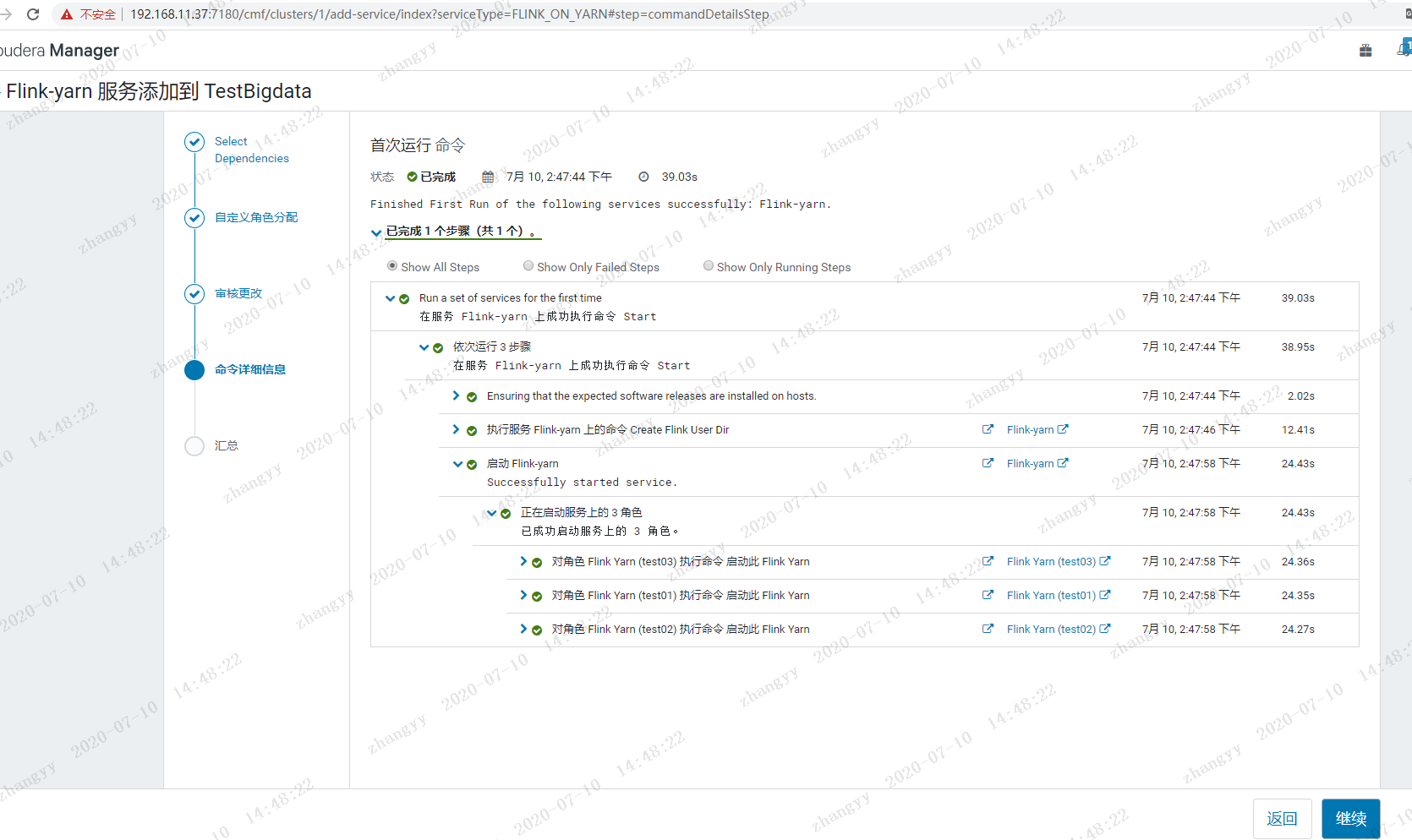

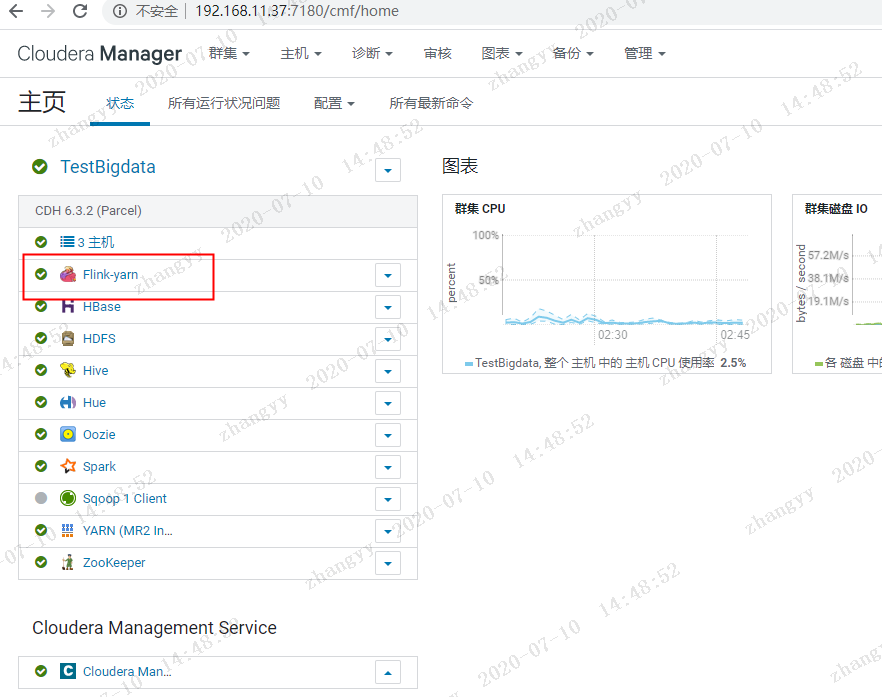

Fixing the taskmanager.cpu.cores error: open the Flink configuration file and append the following settings to the end of it, then sync the file to all hosts:

    cd /opt/cloudera/parcels/FLINK/lib/flink/conf
    vim flink-conf.yaml

    taskmanager.cpu.cores: 2
    taskmanager.memory.task.heap.size: 512m
    taskmanager.memory.managed.size: 512m
    taskmanager.memory.network.min: 64m
    taskmanager.memory.network.max: 64m

Then add the Flink on YARN service.
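Because the same settings have to be appended on every host, a small idempotent helper avoids duplicate keys when the step is repeated. This is only a sketch: `set_flink_conf` is a made-up function name, and the path of the configuration file is passed in as an argument rather than assumed.

```shell
#!/bin/sh
# set_flink_conf is a hypothetical helper: it appends "key: value" to a
# Flink configuration file unless that key is already set there.
set_flink_conf() {
    conf_file="$1"
    key="$2"
    value="$3"
    # Escape the dots in the key so grep matches them literally.
    pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -q "^${pattern}:" "$conf_file" 2>/dev/null; then
        echo "skip ${key} (already present)"
    else
        echo "${key}: ${value}" >> "$conf_file"
        echo "added ${key}"
    fi
}

# Usage, with CONF set to the configuration file edited in this step:
# set_flink_conf "$CONF" taskmanager.cpu.cores 2
# set_flink_conf "$CONF" taskmanager.memory.task.heap.size 512m
# set_flink_conf "$CONF" taskmanager.memory.managed.size 512m
# set_flink_conf "$CONF" taskmanager.memory.network.min 64m
# set_flink_conf "$CONF" taskmanager.memory.network.max 64m
```

An existing key is left untouched rather than overwritten, so a value that was tuned by hand on one host survives a re-run.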