@zhangyy

2020-04-26T05:57:47.000000Z

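Kubernetes Concepts and Installation/Configuration (Supplement)

Kubernetes series

- Part 1: Introduction to Kubernetes

- Part 2: Installing and configuring Kubernetes

- Part 3: The Kubernetes Web UI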

Part 1: Introduction to Kubernetes

1.1 What Is Kubernetes

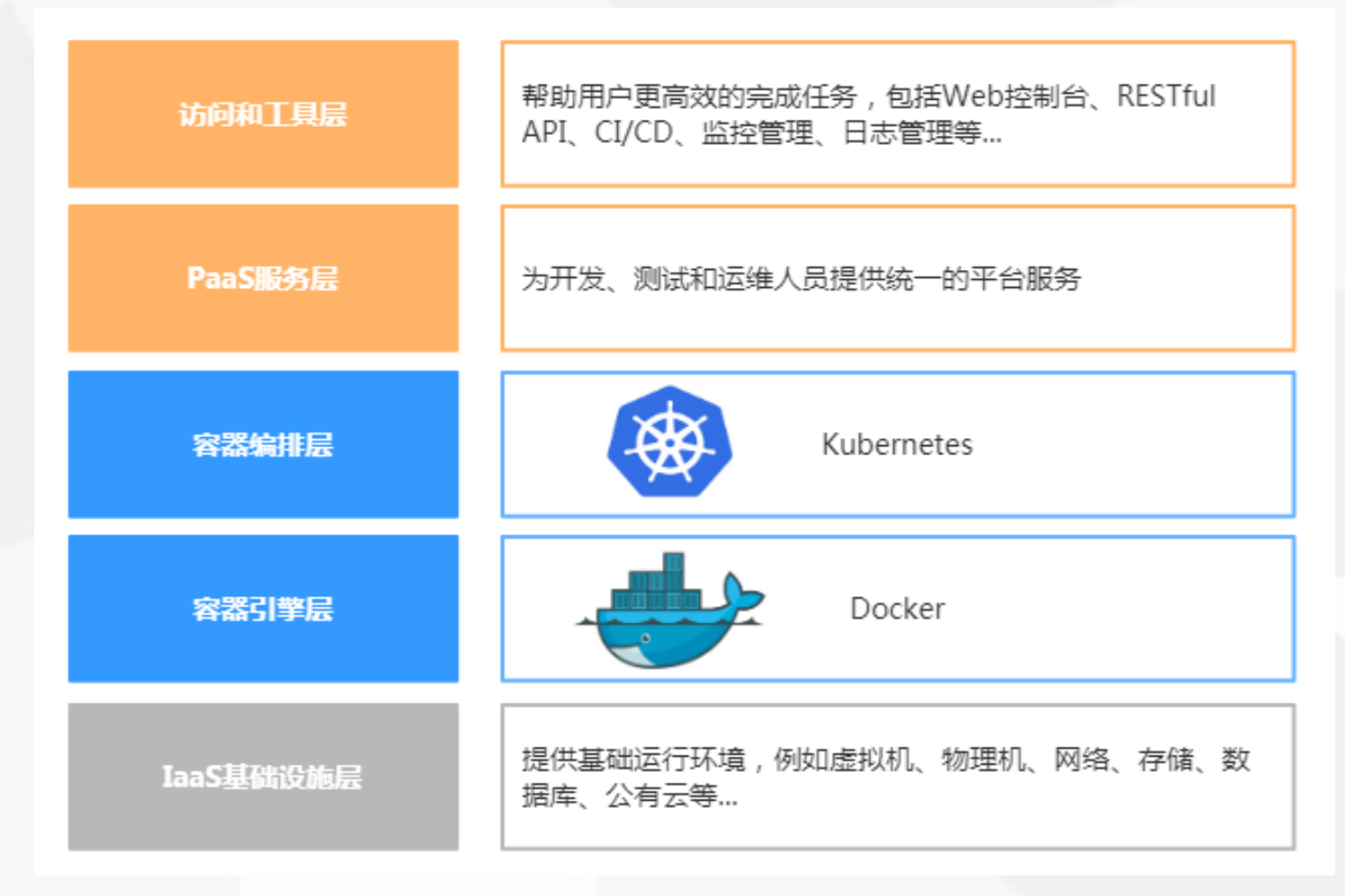

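Kubernetes, abbreviated K8S, is a container cluster management system that Google open-sourced in 2014. It is used to deploy, scale and manage containerized applications. It provides container orchestration, resource scheduling, elastic scaling, deployment management, service discovery and related features. Its goal is to make deploying containerized applications simple and efficient. Official website: http://www.kubernetes.io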

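1.2 Kubernetes Features

1. Self-healing: failed containers are restarted. When a node fails, its containers are replaced and rescheduled so that the expected number of replicas is maintained. Containers that fail their health checks are killed, and a container receives no client requests until it is ready, so online services are not interrupted.
2. Elastic scaling: application instances can be scaled out and in quickly, by command, through the UI, or automatically based on CPU usage. This keeps the application available under peak concurrency and reclaims resources during quiet periods, so the service runs at minimal cost.
3. Automated rollouts and rollbacks: K8S updates applications with a rolling-update strategy. It replaces Pods incrementally instead of deleting them all at once. If a problem appears during the update, the change can be rolled back (for example with `kubectl rollout undo`), so the upgrade does not disrupt the business.
4. Service discovery and load balancing: K8S gives a group of containers a single access point (an internal IP address and a DNS name) and load-balances across all of them. Users therefore do not need to track individual container IPs.
5. Secret and configuration management: secrets and application configuration are managed without baking sensitive data into images, which improves security. Commonly used configuration can also be stored in K8S for applications to consume.
6. Storage orchestration: external storage can be mounted and used as part of the cluster's resources. This includes local storage, public cloud storage (such as AWS) and network storage (such as NFS, GlusterFS, Ceph), which makes storage far more flexible.
7. Batch processing: one-off jobs and scheduled jobs cover batch data processing and analysis.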
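Self-healing and rolling updates are both declared on a Deployment. The sketch below only writes an example manifest to a file. The name `web`, the image `nginx:1.15`, the probe settings and the `maxSurge`/`maxUnavailable` values are illustrative assumptions, not settings taken from this article.

```bash
#!/usr/bin/env bash
# Write an example Deployment manifest (hypothetical name "web") that shows
# self-healing (liveness/readiness probes) and a rolling-update strategy.
write_web_deployment() {
  local out=${1:-web-deployment.yaml}
  cat > "$out" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3                 # the controller keeps 3 Pods running
  selector:
    matchLabels:
      app: web
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1       # at most one Pod down during an update
      maxSurge: 1             # at most one extra Pod created during an update
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: web
        image: nginx:1.15
        ports:
        - containerPort: 80
        livenessProbe:        # the container is restarted if this check fails
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 5
        readinessProbe:       # the Pod gets no Service traffic until this passes
          httpGet:
            path: /
            port: 80
EOF
}
```

Usage: `write_web_deployment web.yaml && kubectl apply -f web.yaml`. A bad rollout can then be reverted with `kubectl rollout undo deployment/web`.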

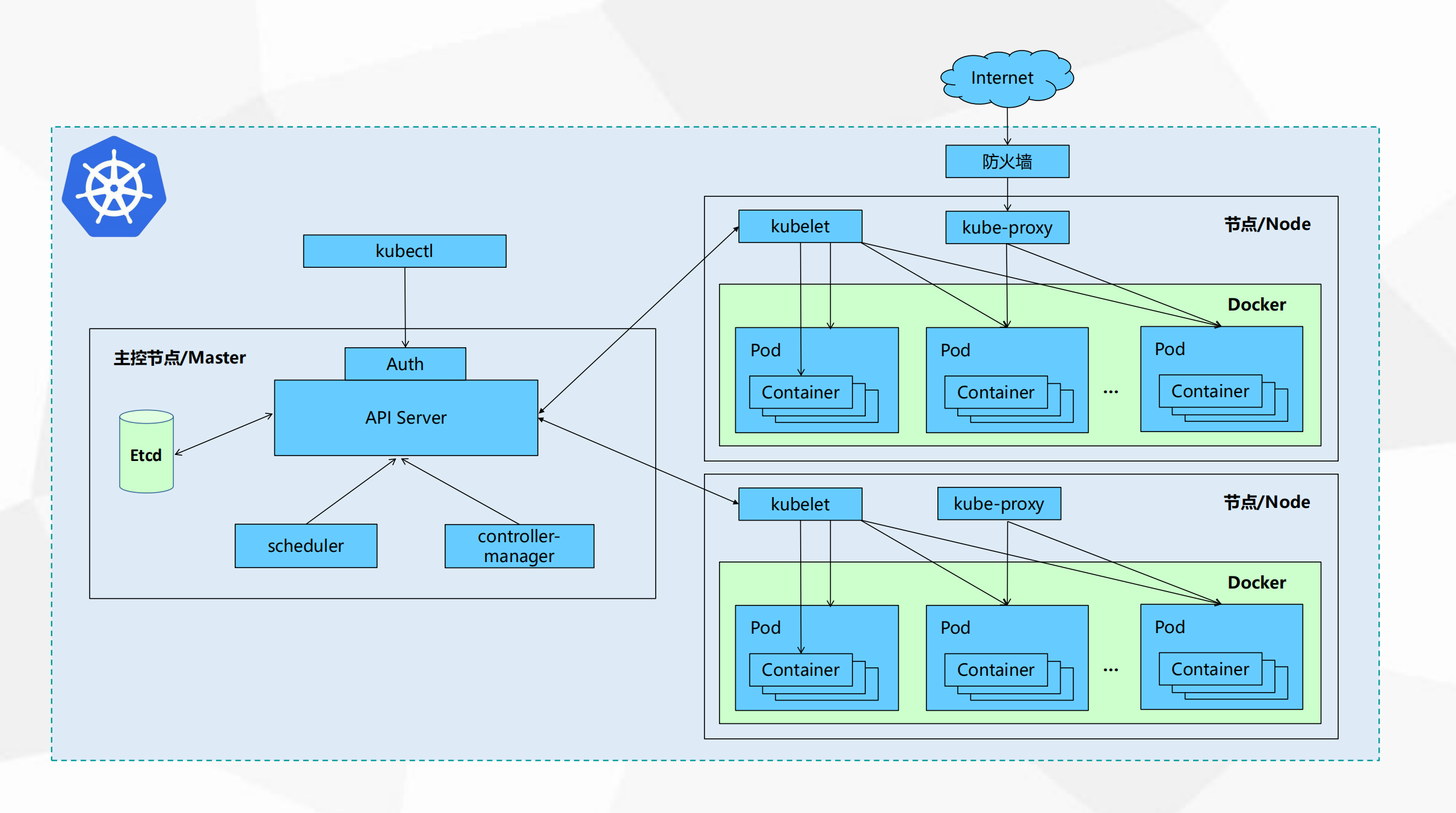

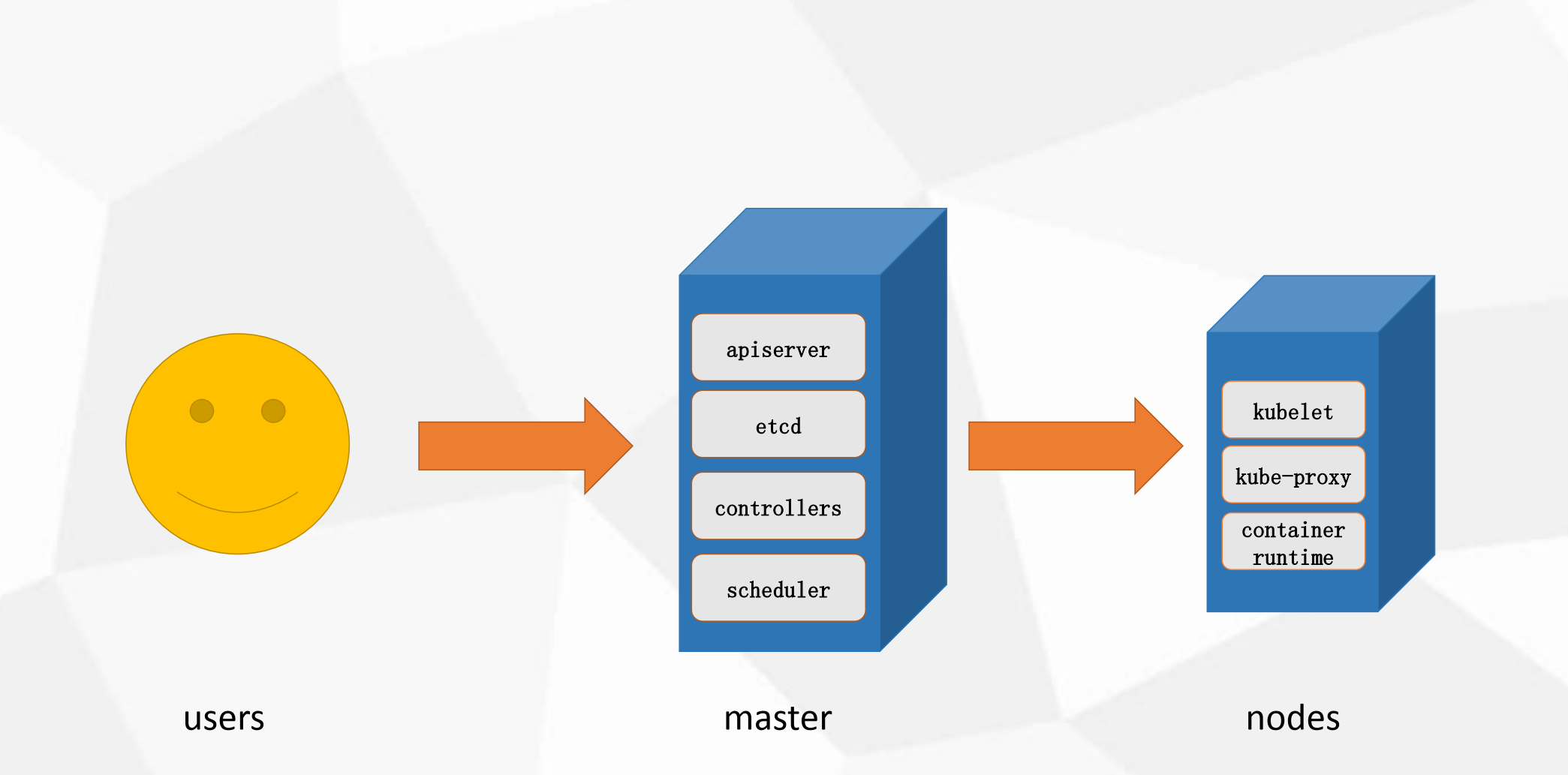

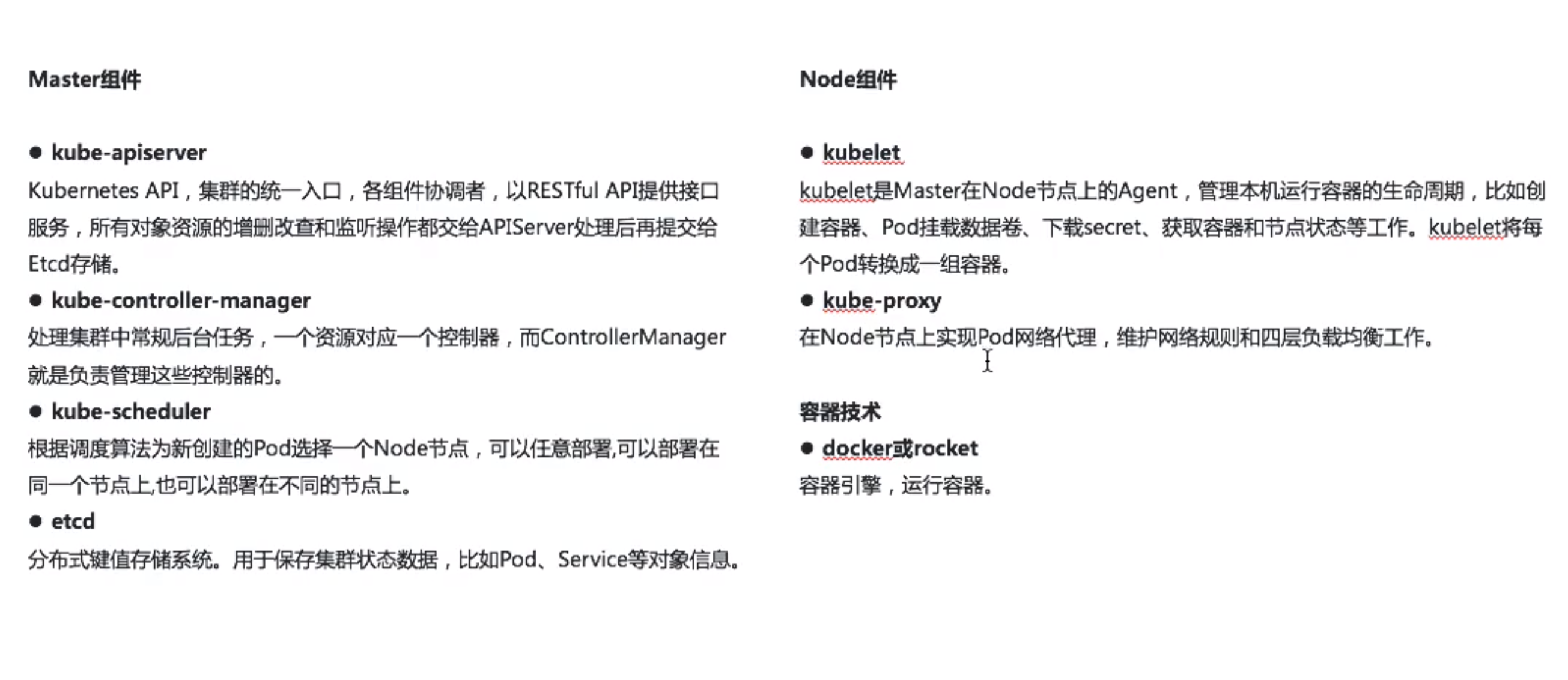

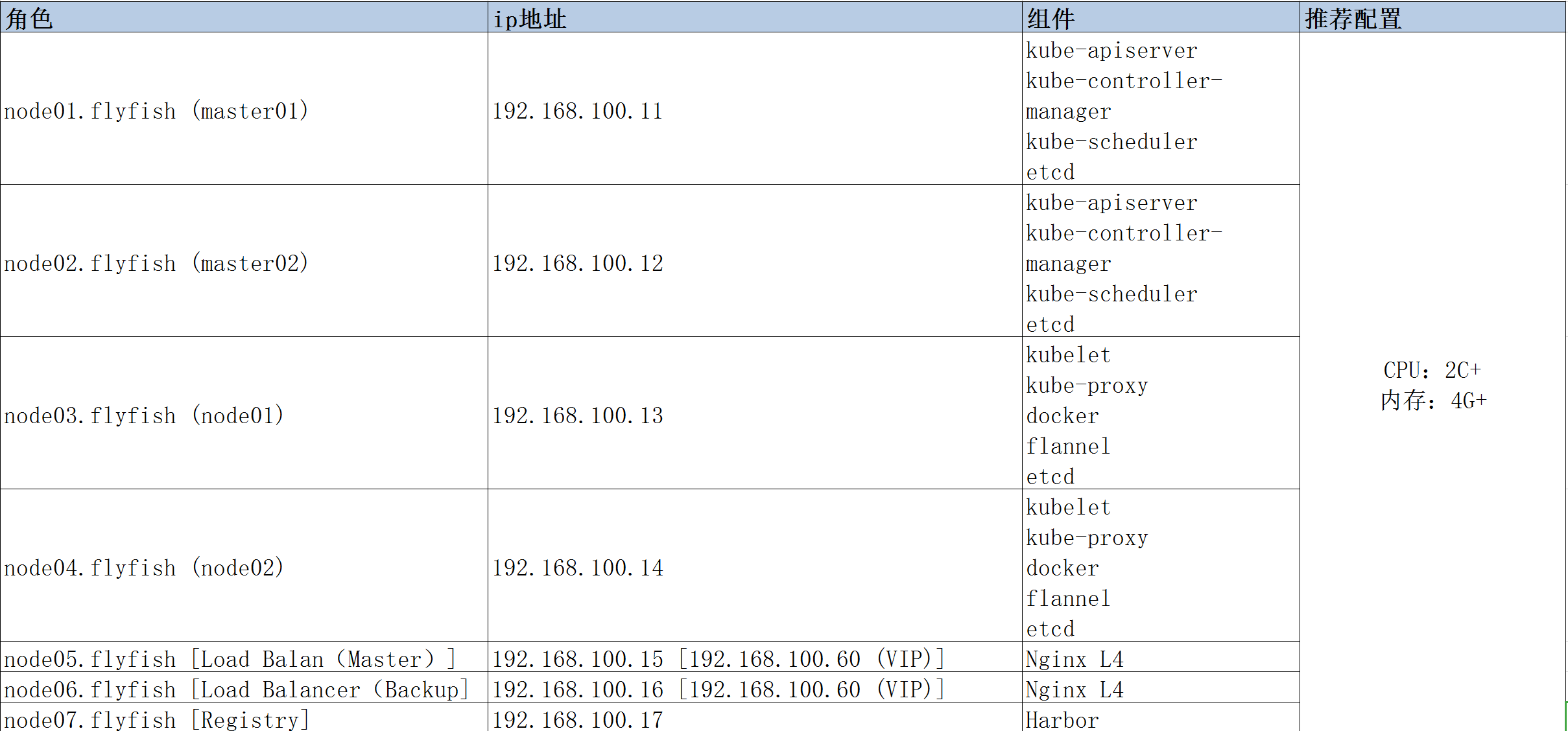

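1.3 Kubernetes Cluster Architecture and Components

Master components

- kube-apiserver: the Kubernetes API. It is the cluster's single entry point and coordinates the other components. It serves a RESTful API, and every create, read, update, delete and watch operation on resource objects is handled by the API server and then persisted to etcd.
- kube-controller-manager: handles the cluster's routine background tasks. Each resource type has its own controller, and the controller manager is responsible for managing these controllers.
- kube-scheduler: selects a Node for each newly created Pod according to its scheduling algorithm. It can be deployed flexibly, either on the same node as the other components or on a different node.
- etcd: a distributed key-value store that holds the cluster state, such as Pod and Service objects.

Node components

- kubelet: the Master's agent on each Node. It manages the lifecycle of the containers on that host, for example creating containers, mounting volumes for Pods, downloading Secrets, and reporting container and node status. kubelet turns each Pod into a set of containers.
- kube-proxy: implements the Pod network proxy on each Node and maintains network rules and layer-4 load balancing.
- docker or rocket (rkt): the container engine that runs the containers.

1. Pod
   - the smallest deployable unit
   - a group of containers
   - containers in one Pod share a network namespace
   - Pods are ephemeral
2. Controllers: higher-level objects that deploy and manage Pods
   - ReplicaSet: ensures the expected number of Pod replicas
   - Deployment: deploys stateless applications
   - StatefulSet: deploys stateful applications
   - DaemonSet: ensures that every Node runs a copy of the same Pod
   - Job: one-off tasks
   - CronJob: scheduled tasks
3. Service
   - keeps clients from losing track of Pods as they come and go
   - defines an access policy for a group of Pods
4. Label: tags attached to a resource, used to associate, query and filter objects
5. Namespaces: logically isolate objects from one another
6. Annotations: notes attached to objects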
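The following sketch shows how Labels, Namespaces, Annotations and Services fit together. It writes a Namespace and a Service whose selector matches Pods labeled `app: web`. The namespace `demo`, the label and the annotation key `example.com/owner` are hypothetical examples.

```bash
#!/usr/bin/env bash
# Write a Namespace plus a Service that selects Pods by label (all names hypothetical).
write_demo_service() {
  local out=${1:-demo-service.yaml}
  cat > "$out" <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: demo                     # logical isolation boundary
---
apiVersion: v1
kind: Service
metadata:
  name: web
  namespace: demo
  labels:
    app: web                     # label on the Service object itself
  annotations:
    example.com/owner: "team-a"  # free-form, non-identifying metadata
spec:
  selector:
    app: web                     # Pods in "demo" labeled app=web become endpoints
  ports:
  - port: 80                     # stable Service port
    targetPort: 80               # container port on the selected Pods
EOF
}
```

After `kubectl apply -f demo-service.yaml`, the label query `kubectl get pods -n demo -l app=web` lists exactly the Pods this Service would route to.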

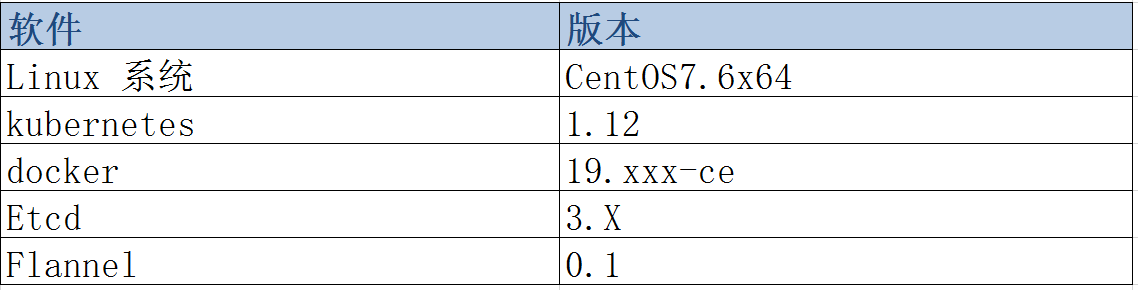

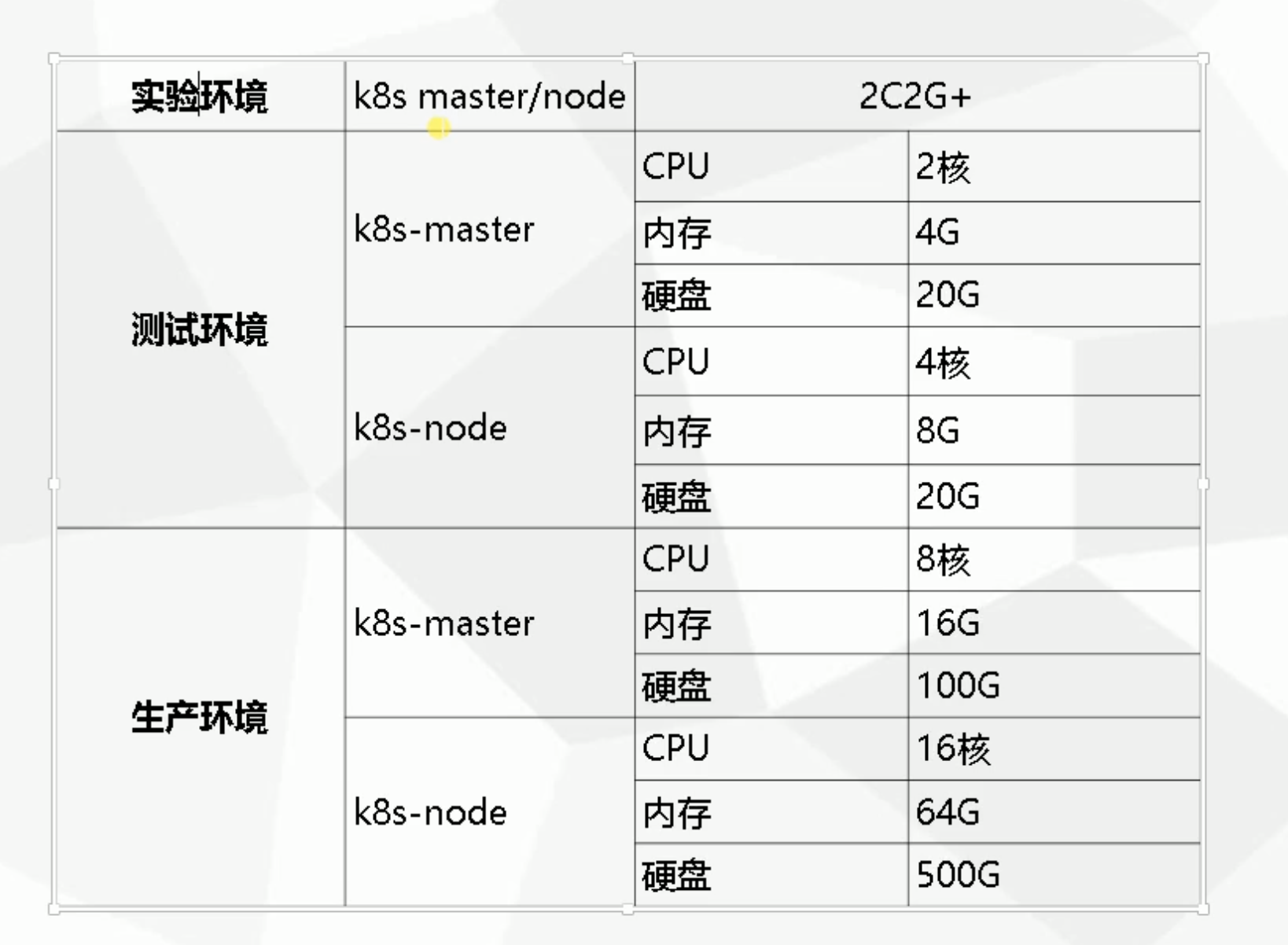

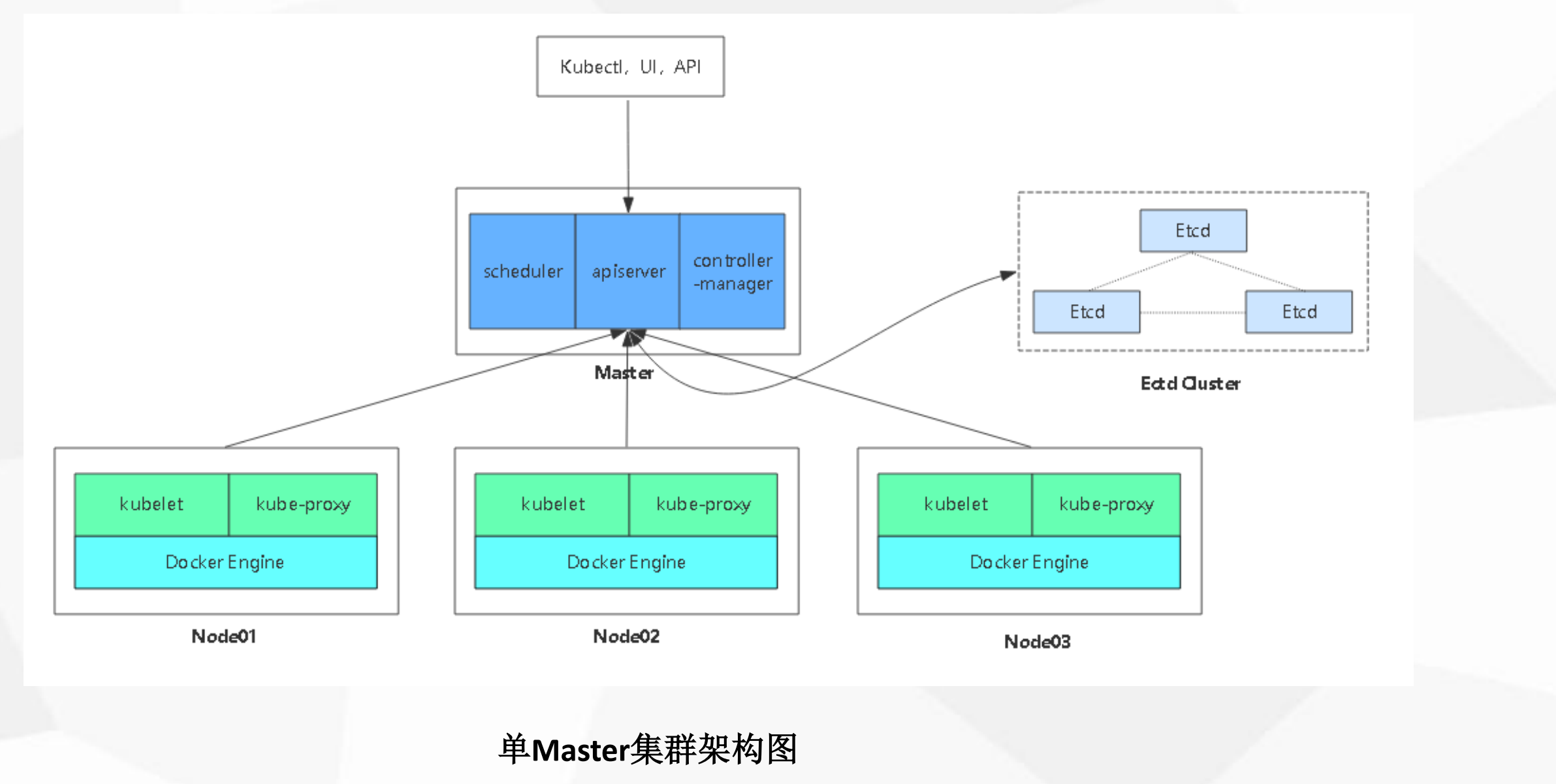

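Part 2: Deploying a Highly Available Kubernetes Cluster

2.1 The Three Official Deployment Methods

1. minikube: Minikube is a tool that quickly runs a single-node Kubernetes locally. It is intended only for users trying Kubernetes or doing day-to-day development. Docs: https://kubernetes.io/docs/setup/minikube/
2. kubeadm: Kubeadm is also a tool. It provides `kubeadm init` and `kubeadm join` for quickly deploying a Kubernetes cluster. Docs: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/
3. Binary packages (recommended): download the release binaries from the official project and deploy each component by hand to assemble a Kubernetes cluster. Download: https://github.com/kubernetes/kubernetes/releases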

2.2 Deploying from Binary Packages

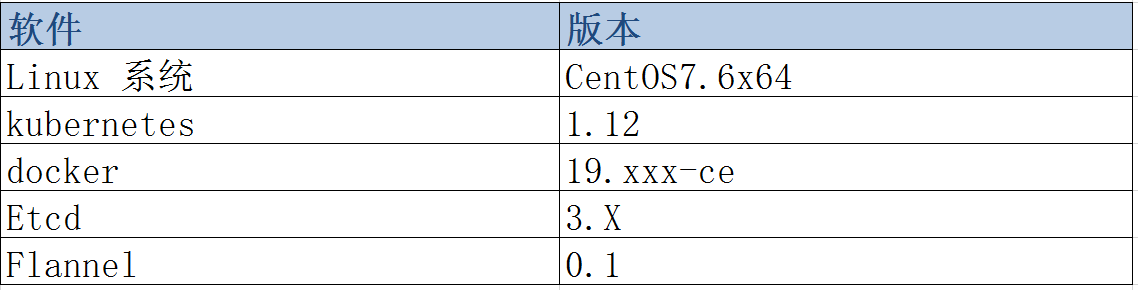

2.2.1 Software and Versions

2.2.2 IP Address and Role Planning

2.2.3 Single-Master Layout

2.2.4 Multi-Master Layout

2.3 Deploying a Single Master First

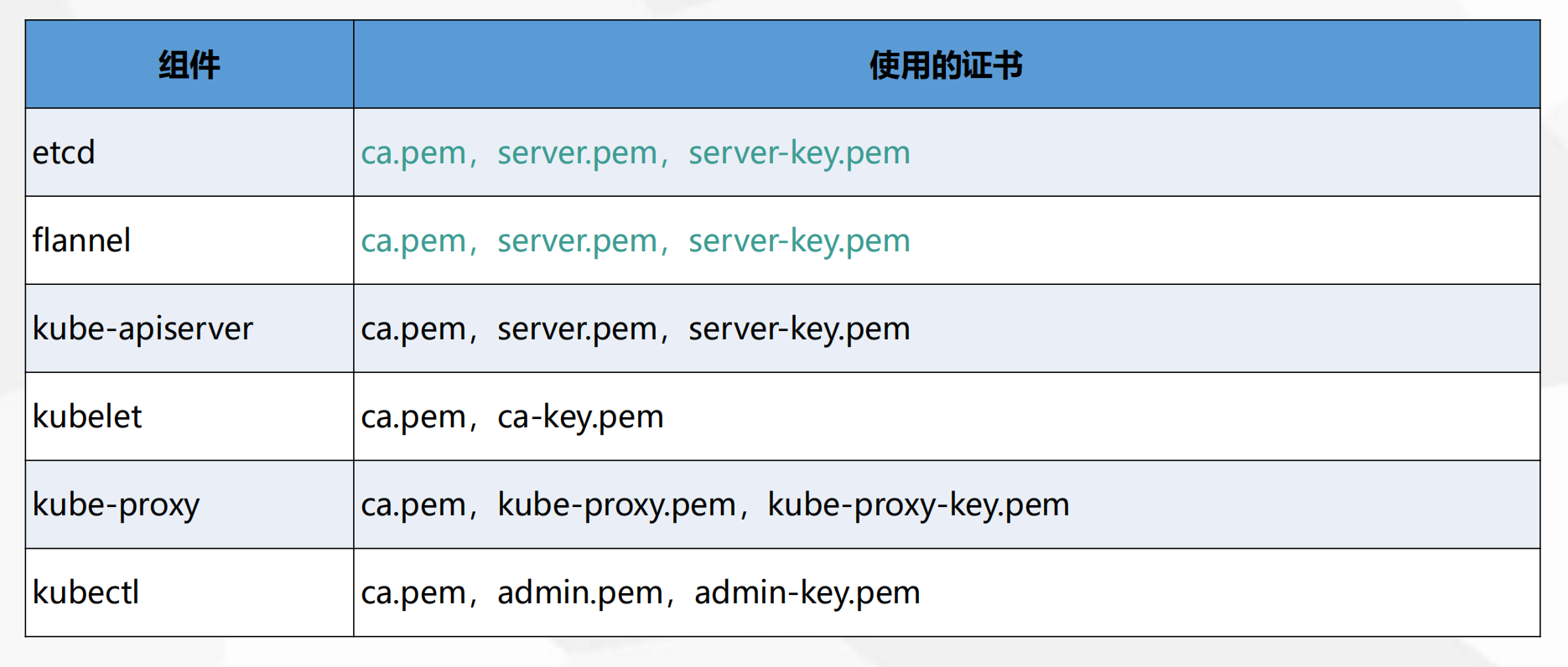

2.3.1 Self-Signed SSL Certificates

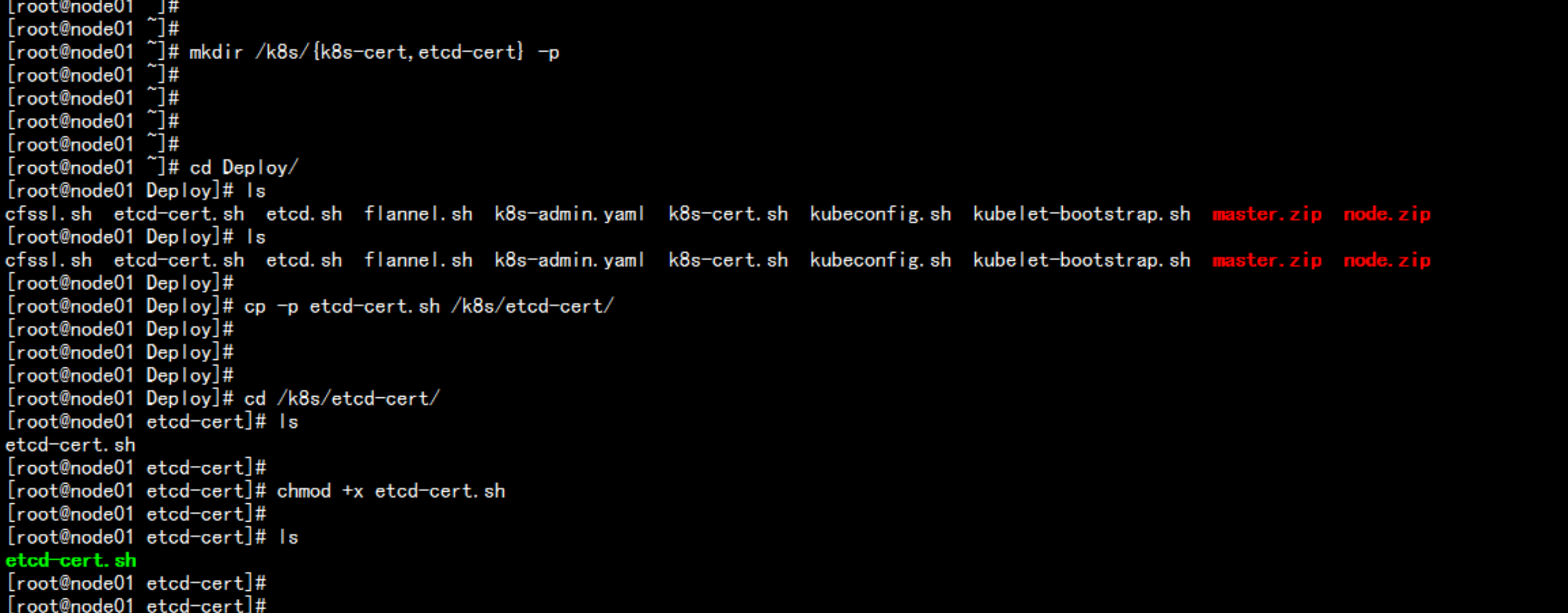

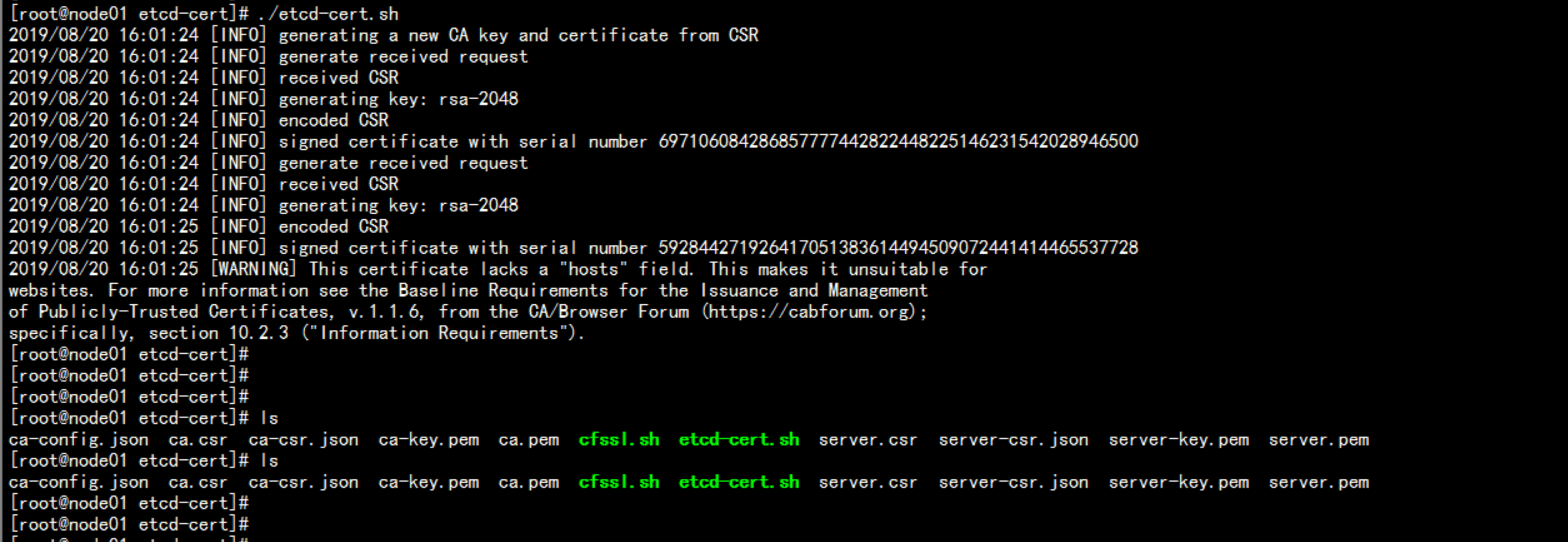

```bash
mkdir -p /k8s/{k8s-cert,etcd-cert}
cd /root/Deploy
cp -p etcd-cert.sh /k8s/etcd-cert
cd /k8s/etcd-cert
chmod +x etcd-cert.sh
```

Contents of etcd-cert.sh:

```bash
# CA signing configuration: 87600h (10-year) certificates, profile "www"
cat > ca-config.json <<EOF
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF

# CA certificate signing request
cat > ca-csr.json <<EOF
{
  "CN": "etcd CA",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{ "C": "CN", "L": "Beijing", "ST": "Beijing" }]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

# Server certificate: "hosts" must include every address at which etcd is reached
cat > server-csr.json <<EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.100.11", "192.168.100.12", "192.168.100.13",
    "192.168.100.14", "192.168.100.15", "192.168.100.16",
    "192.168.100.17", "192.168.100.18", "192.168.100.60"
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{ "C": "CN", "L": "BeiJing", "ST": "BeiJing" }]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
```
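Any etcd address missing from `hosts` causes TLS verification failures. Instead of editing the JSON by hand, the list can be generated from the IPs passed on the command line. The sketch below assumes bash, and `gen_server_csr` is a hypothetical helper. The subject fields copy the ones used above.

```bash
#!/usr/bin/env bash
# Generate server-csr.json with a "hosts" array built from the given IP addresses.
gen_server_csr() {
  local out=$1; shift
  local hosts="" ip
  for ip in "$@"; do
    hosts+="${hosts:+,}\"${ip}\""   # add a comma before every entry except the first
  done
  cat > "$out" <<EOF
{
  "CN": "etcd",
  "hosts": [${hosts}],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{ "C": "CN", "L": "BeiJing", "ST": "BeiJing" }]
}
EOF
}
```

For example, `gen_server_csr server-csr.json 192.168.100.11 192.168.100.13 192.168.100.14` covers the three etcd members. Add the IPs of any future masters or nodes as well, as the article's list does.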

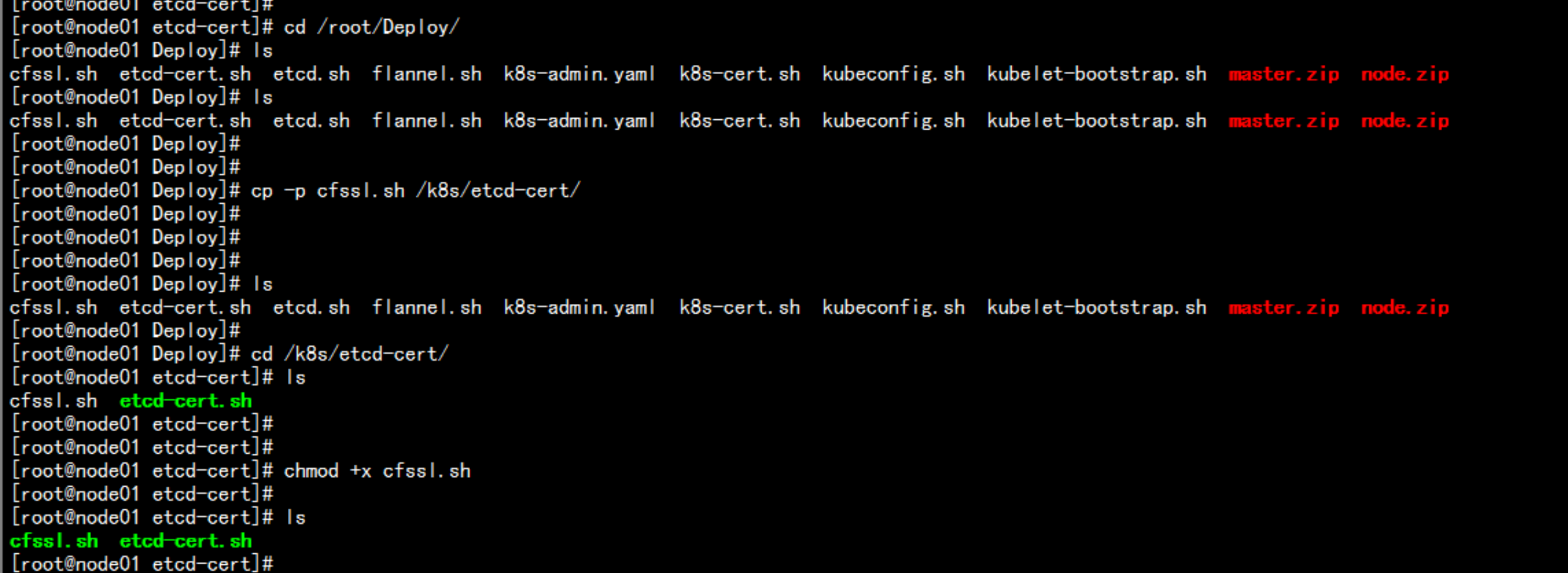

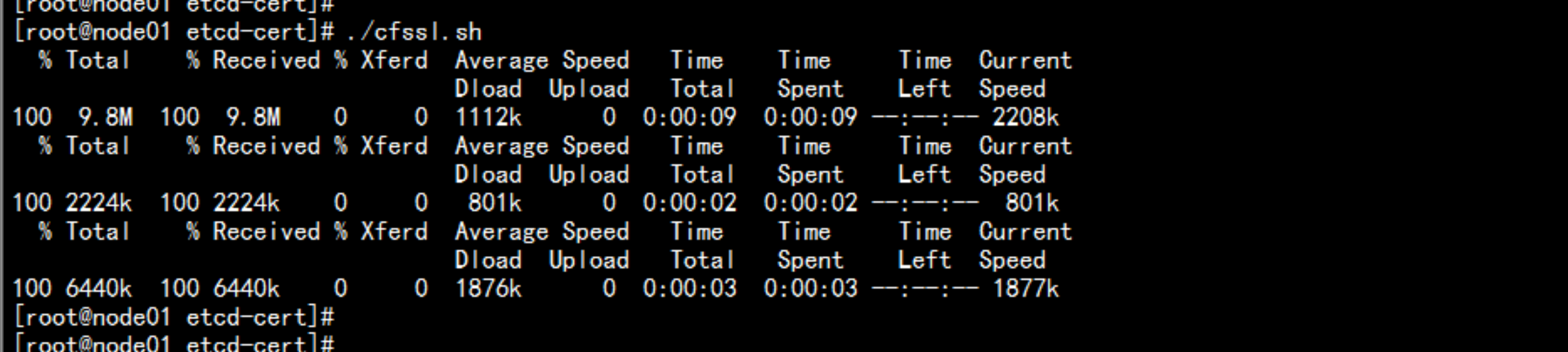

Install the cfssl tools:

```bash
cd /root/Deploy
cp -p cfssl.sh /k8s/etcd-cert
cd /k8s/etcd-cert
chmod +x cfssl.sh
./cfssl.sh
./etcd-cert.sh
```

Contents of cfssl.sh:

```bash
curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl
curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson
curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo
chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo
```
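If cfssl or cfssljson is missing from `PATH`, etcd-cert.sh fails halfway through. A small pre-flight check makes that failure explicit before any file is written. `require_cmds` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Return non-zero (and list what is missing on stderr) if any given command is not on PATH.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `require_cmds cfssl cfssljson cfssl-certinfo || exit 1` before calling `./etcd-cert.sh`.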

2.3.2 配置etcd 服务

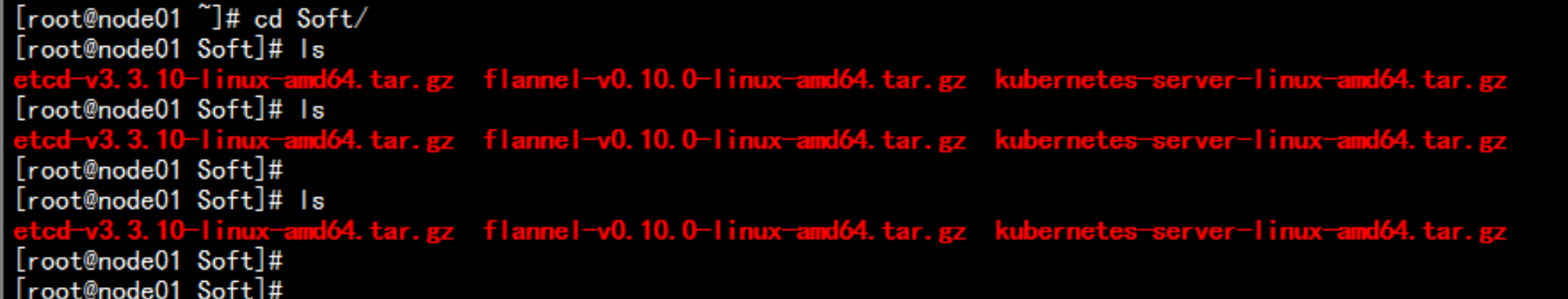

二进制包下载地址https://github.com/etcd-io/etcd/releases

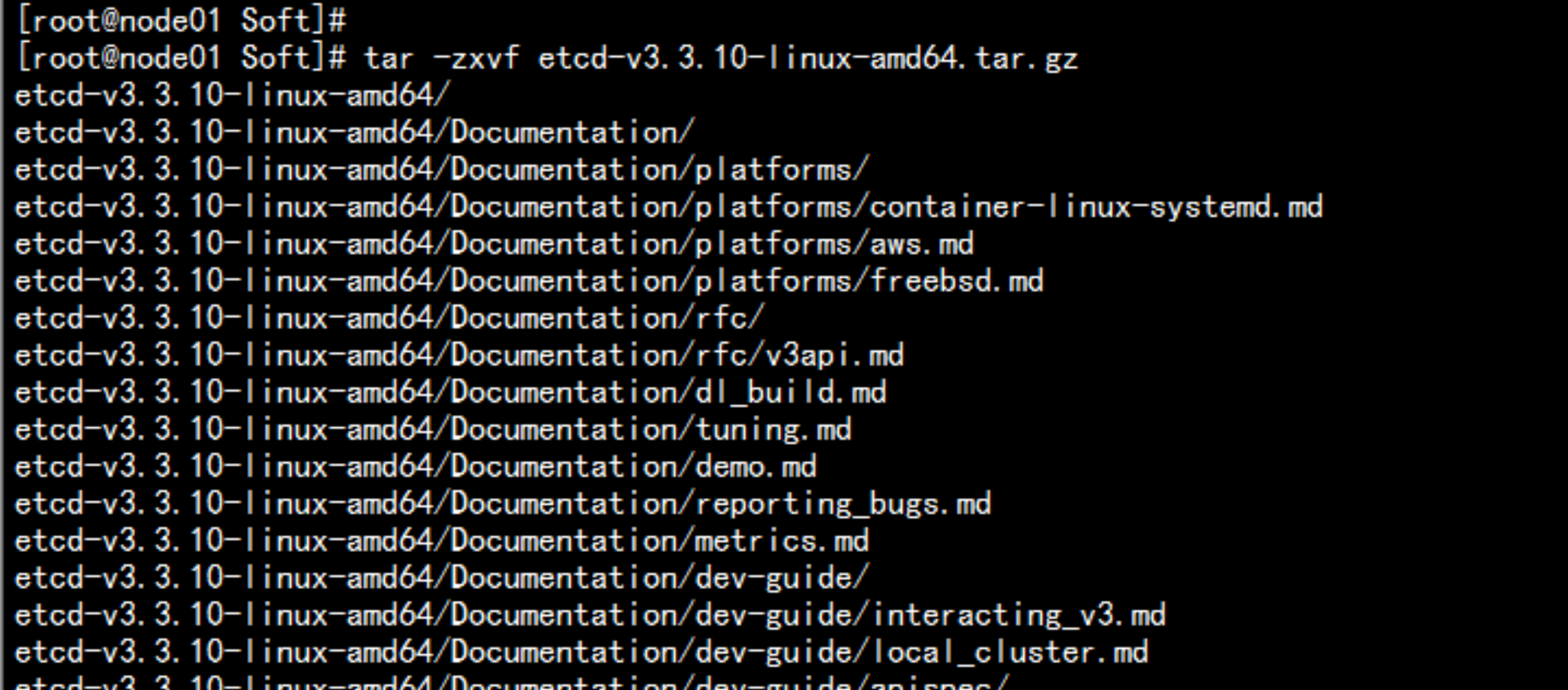

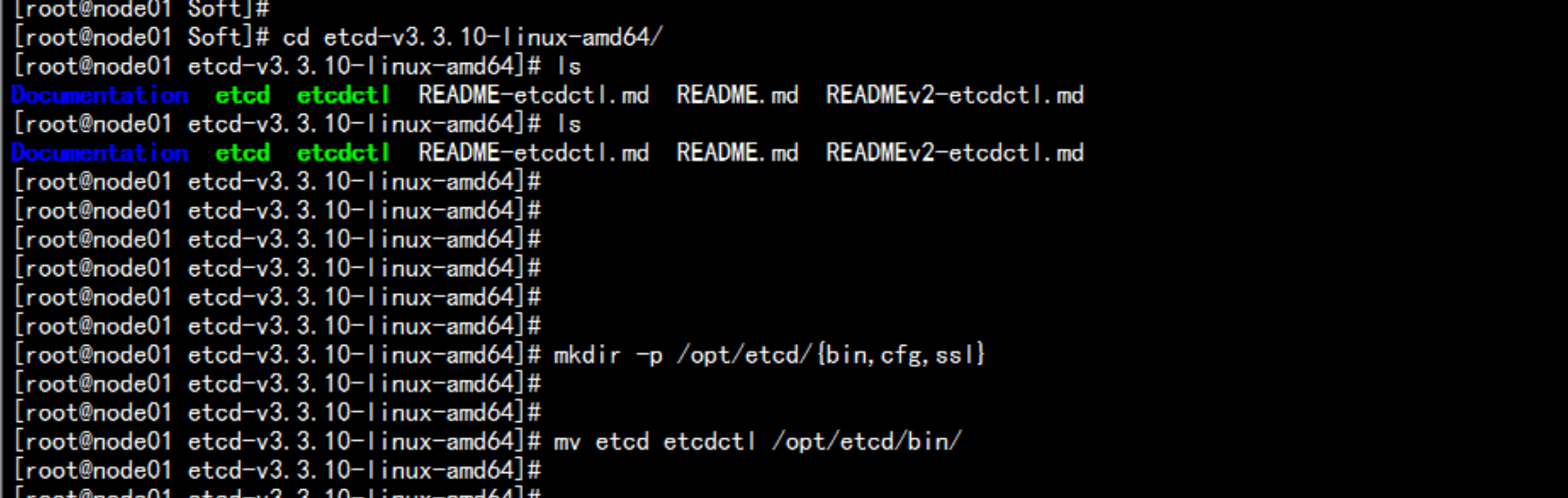

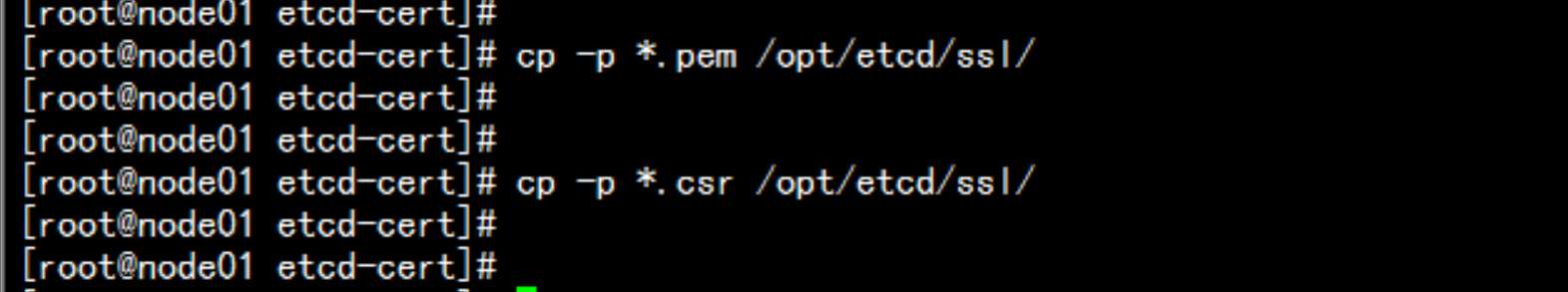

```bash
cd /root/Soft
tar -zxvf etcd-v3.3.10-linux-amd64.tar.gz
cd etcd-v3.3.10-linux-amd64/
mkdir -p /opt/etcd/{ssl,bin,cfg}
mv etcd etcdctl /opt/etcd/bin/
cd /k8s/etcd-cert
cp -p *.pem /opt/etcd/ssl
cp -p *.csr /opt/etcd/ssl
```
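Before the systemd unit is written, it is worth confirming that the binaries and certificates it references are in place. This is a sketch. The file list mirrors the paths used by etcd.sh (`bin/etcd`, `ssl/ca.pem`, `ssl/server.pem`, `ssl/server-key.pem`). The directory is a parameter so the check can be tried on a scratch copy. `check_etcd_layout` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Check that an etcd install directory contains the files the etcd unit expects.
check_etcd_layout() {
  local dir=${1:-/opt/etcd} f rc=0
  for f in bin/etcd bin/etcdctl ssl/ca.pem ssl/server.pem ssl/server-key.pem; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Run `check_etcd_layout /opt/etcd` on each member after the `scp` step as well.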

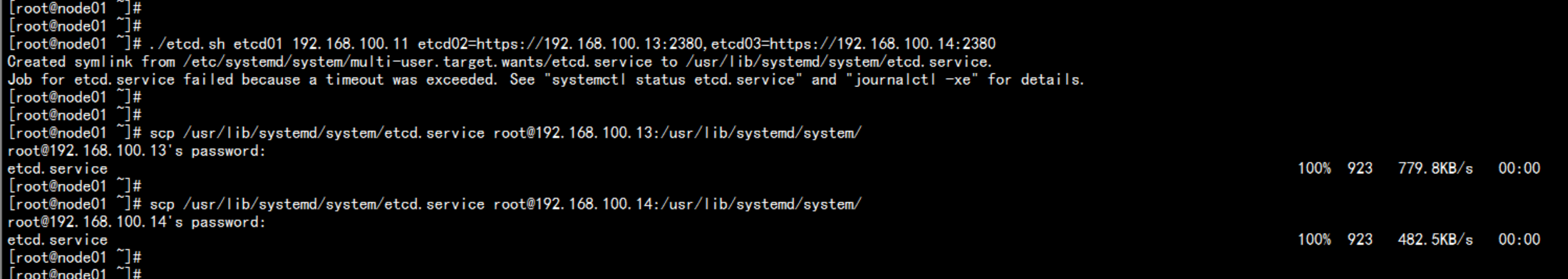

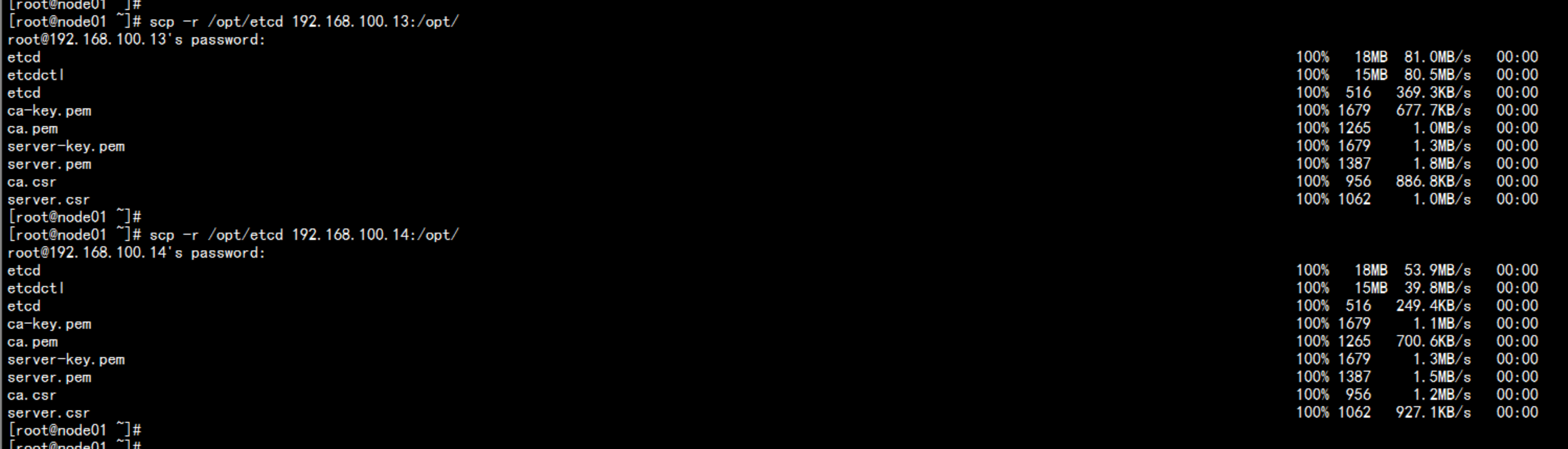

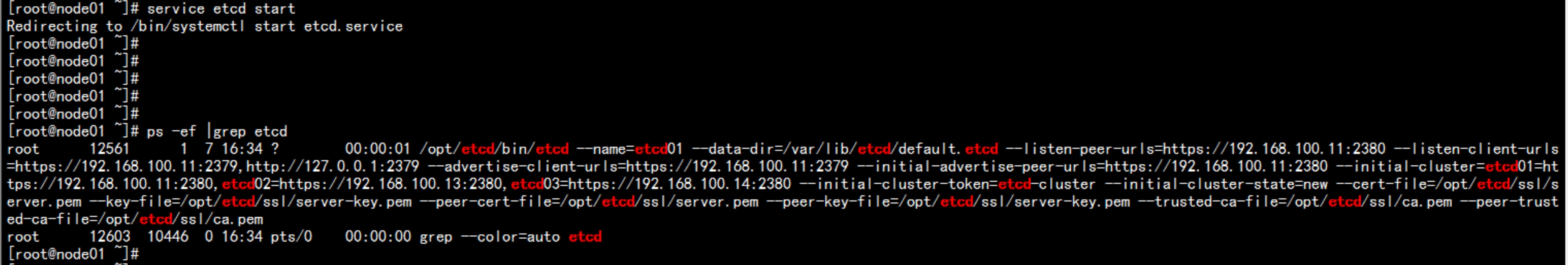

```bash
cd /root/Deploy
cp -p etcd.sh /root
chmod +x etcd.sh
./etcd.sh etcd01 192.168.100.11 etcd02=https://192.168.100.13:2380,etcd03=https://192.168.100.14:2380
# copy the install directory and the unit file to the other two members
scp -r /opt/etcd 192.168.100.13:/opt/
scp -r /opt/etcd 192.168.100.14:/opt/
scp /usr/lib/systemd/system/etcd.service root@192.168.100.13:/usr/lib/systemd/system/
scp /usr/lib/systemd/system/etcd.service root@192.168.100.14:/usr/lib/systemd/system/
```

Contents of etcd.sh:

```bash
#!/bin/bash
# example: ./etcd.sh etcd01 192.168.100.11 etcd02=https://192.168.100.13:2380,etcd03=https://192.168.100.14:2380

ETCD_NAME=$1
ETCD_IP=$2
ETCD_CLUSTER=$3

WORK_DIR=/opt/etcd

# this member first, followed by the other members passed in $3
cat <<EOF >$WORK_DIR/cfg/etcd
#[Member]
ETCD_NAME="${ETCD_NAME}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"
ETCD_INITIAL_CLUSTER="${ETCD_NAME}=https://${ETCD_IP}:2380,${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

# \${VAR} is written literally and expanded by systemd from EnvironmentFile;
# ${WORK_DIR} is expanded by this script when the unit is generated
cat <<EOF >/usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=${WORK_DIR}/cfg/etcd
ExecStart=${WORK_DIR}/bin/etcd \
--name=\${ETCD_NAME} \
--data-dir=\${ETCD_DATA_DIR} \
--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=\${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=${WORK_DIR}/ssl/server.pem \
--key-file=${WORK_DIR}/ssl/server-key.pem \
--peer-cert-file=${WORK_DIR}/ssl/server.pem \
--peer-key-file=${WORK_DIR}/ssl/server-key.pem \
--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \
--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable etcd
systemctl restart etcd
```

Log in to 192.168.100.11. Its etcd config file:

```bash
vim /opt/etcd/cfg/etcd
```

```bash
#[Member]
ETCD_NAME="etcd01"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.100.11:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.100.11:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.11:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.11:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.100.11:2380,etcd02=https://192.168.100.13:2380,etcd03=https://192.168.100.14:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```

Log in to 192.168.100.13 and change the copied file to this member's name and IP:

```bash
vim /opt/etcd/cfg/etcd
```

```bash
#[Member]
ETCD_NAME="etcd02"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.100.13:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.100.13:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.13:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.13:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.100.11:2380,etcd02=https://192.168.100.13:2380,etcd03=https://192.168.100.14:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```

Log in to 192.168.100.14 and do the same:

```bash
vim /opt/etcd/cfg/etcd
```

```bash
#[Member]
ETCD_NAME="etcd03"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.100.14:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.100.14:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.14:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.14:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.100.11:2380,etcd02=https://192.168.100.13:2380,etcd03=https://192.168.100.14:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```
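The three files above differ only in `ETCD_NAME` and the member's own IP. Instead of editing the copies by hand on each host, they can be generated in one loop. This is a sketch that assumes bash. The output directory and the `<name>.conf` file names are assumptions. Each file would then be copied to `/opt/etcd/cfg/etcd` on its own host.

```bash
#!/usr/bin/env bash
# Generate one etcd config file per member from parallel name/IP arrays.
gen_etcd_configs() {
  local outdir=$1
  local names=(etcd01 etcd02 etcd03)
  local ips=(192.168.100.11 192.168.100.13 192.168.100.14)
  local cluster="" i
  # Every member gets the same full member list.
  for i in "${!names[@]}"; do
    cluster+="${cluster:+,}${names[$i]}=https://${ips[$i]}:2380"
  done
  mkdir -p "$outdir"
  for i in "${!names[@]}"; do
    cat > "$outdir/${names[$i]}.conf" <<EOF
#[Member]
ETCD_NAME="${names[$i]}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ips[$i]}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ips[$i]}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ips[$i]}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ips[$i]}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
  done
}
```

For example, after `gen_etcd_configs ./etcd-cfg`, run `scp ./etcd-cfg/etcd02.conf 192.168.100.13:/opt/etcd/cfg/etcd`.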

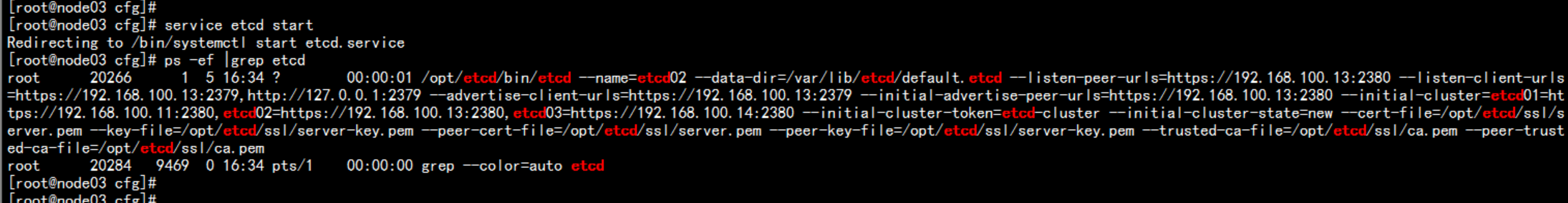

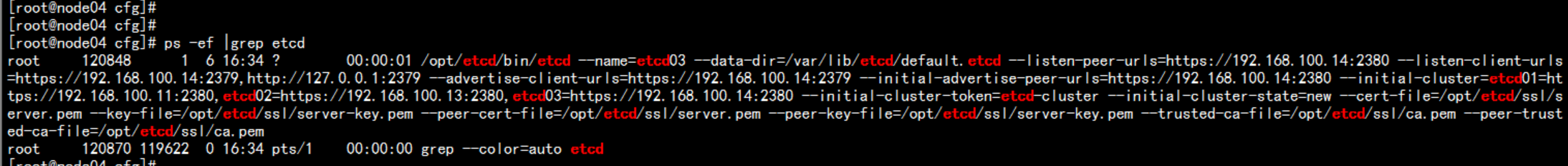

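Start the etcd service on all three nodes at roughly the same time. A new member waits for its peers before the cluster becomes available.

```bash
service etcd start
chkconfig etcd on
```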

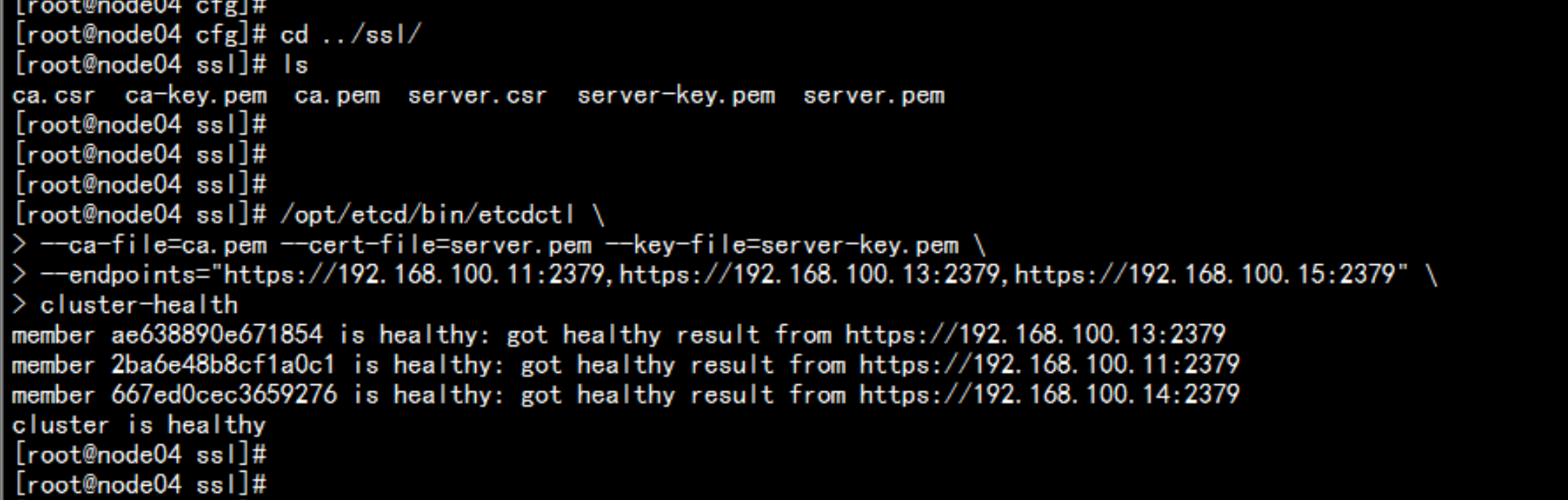

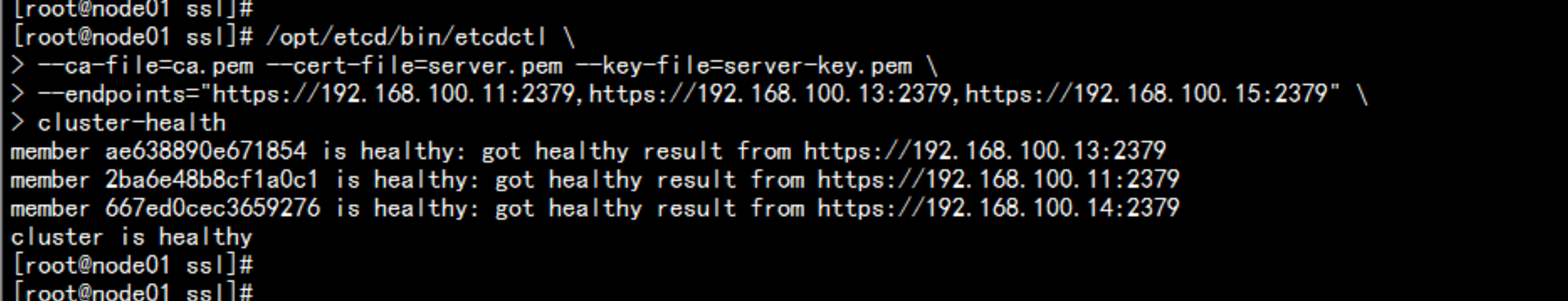

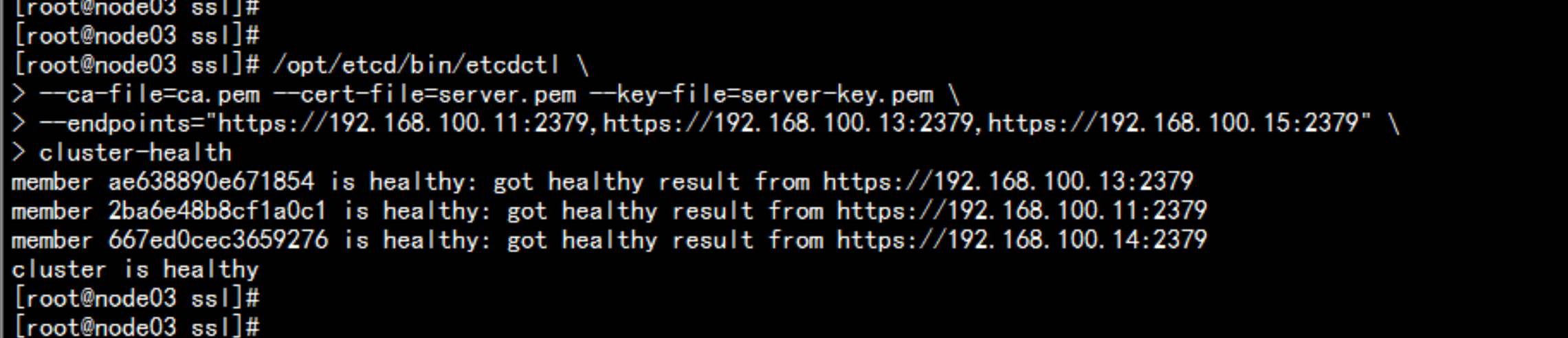

Verify the etcd cluster:

```bash
cd /opt/etcd/ssl
/opt/etcd/bin/etcdctl \
--ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \
--endpoints="https://192.168.100.11:2379,https://192.168.100.13:2379,https://192.168.100.14:2379" \
cluster-health
```
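The `cluster-health` output can also be checked from a script rather than by eye. This sketch assumes the etcdctl v2 output format. In that format, each healthy member's line starts with `member` and contains `is healthy`, and the last line reads `cluster is healthy`. `etcd_all_healthy` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Read `etcdctl cluster-health` output on stdin. Succeed only if the cluster is
# reported healthy and exactly the expected number of members are healthy.
etcd_all_healthy() {
  local expected=$1 input healthy
  input=$(cat)
  healthy=$(printf '%s\n' "$input" | grep -c '^member .* is healthy')
  printf '%s\n' "$input" | grep -qx 'cluster is healthy' && [ "$healthy" -eq "$expected" ]
}
```

Usage: append `| etcd_all_healthy 3 && echo "etcd OK"` to the `cluster-health` command above.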

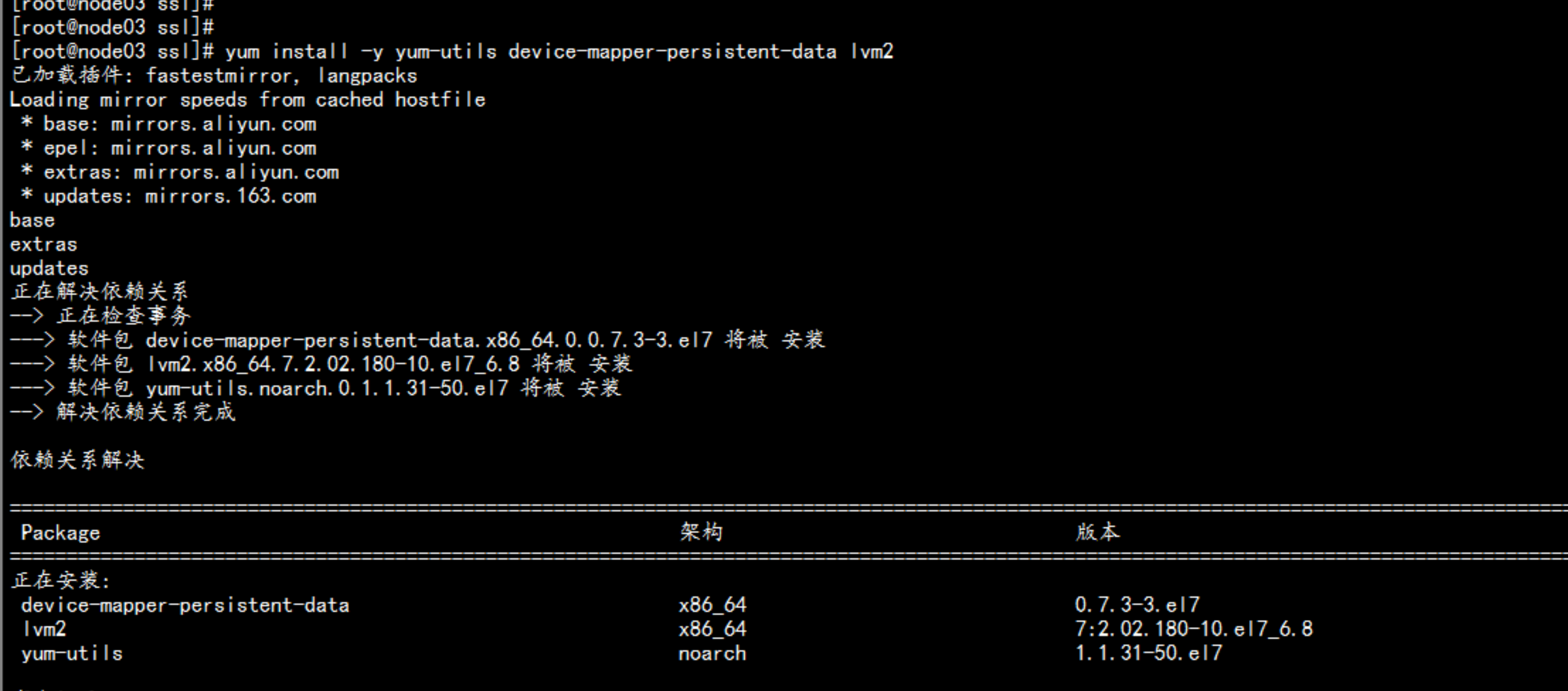

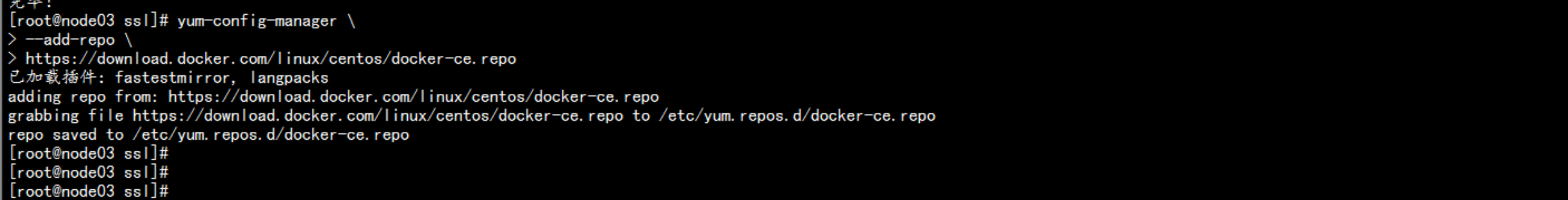

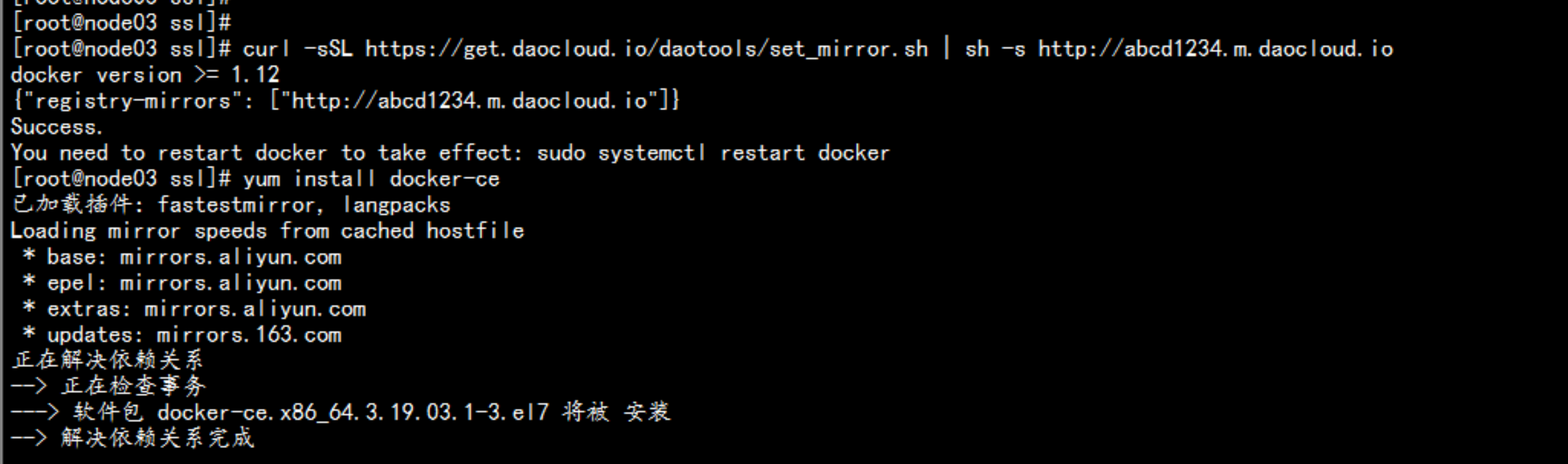

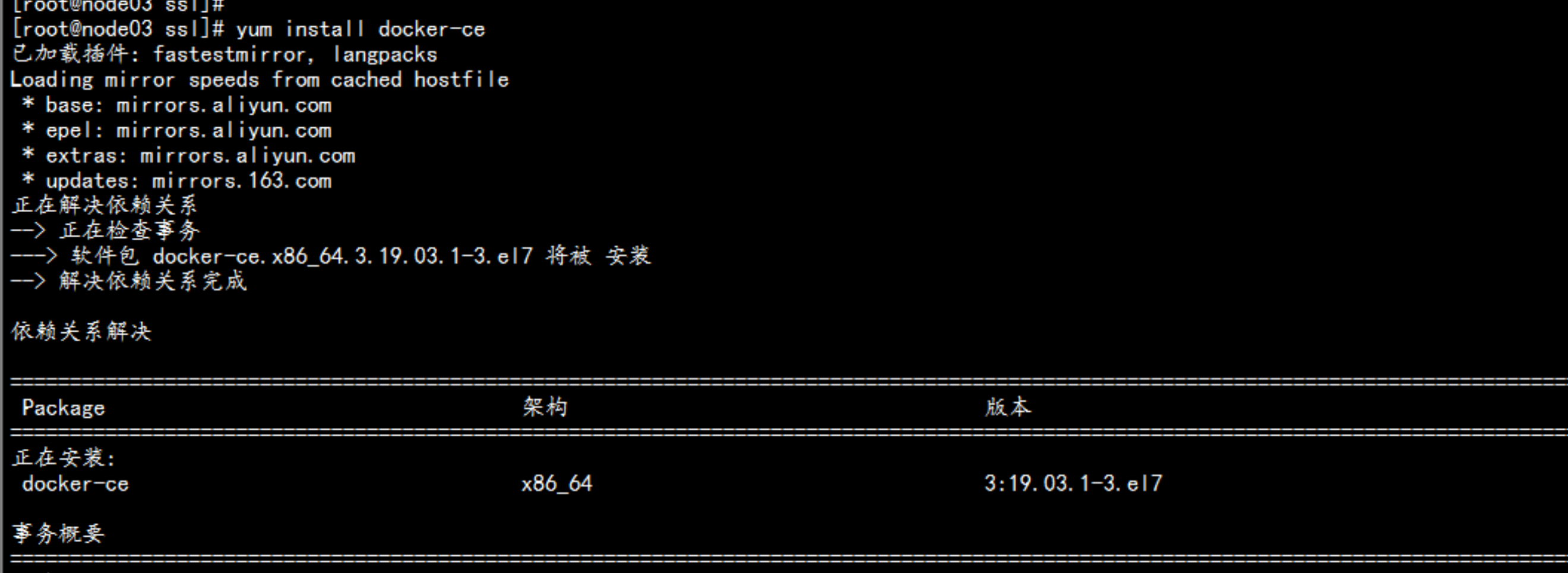

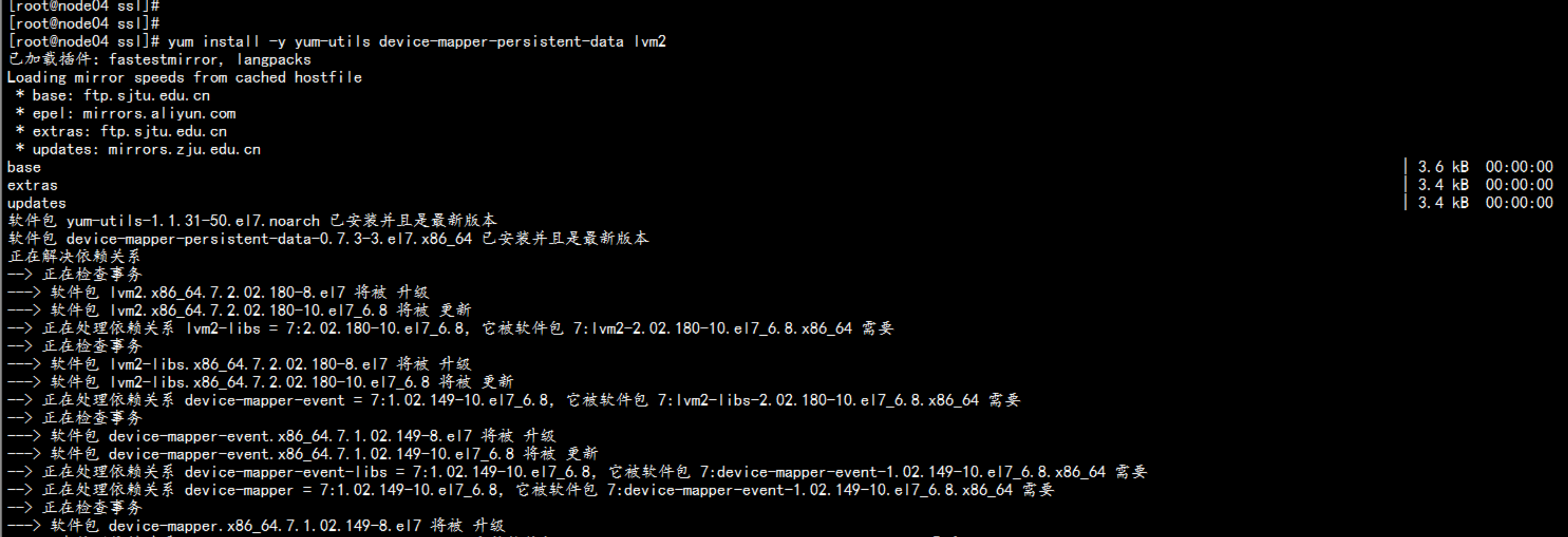

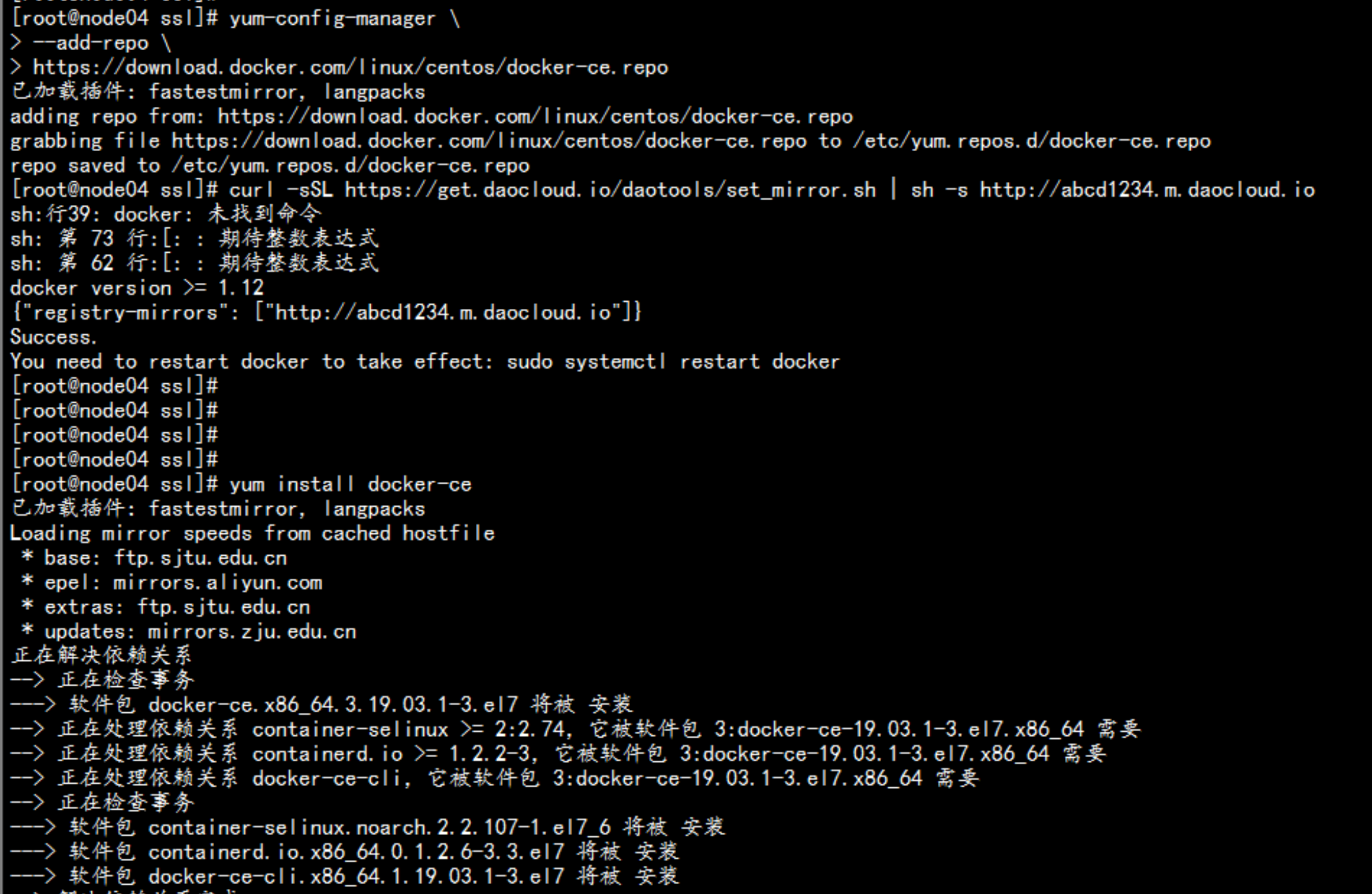

2.4 Installing Docker on the Nodes

Install Docker on the node machines 192.168.100.13 and 192.168.100.14:

```bash
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager \
--add-repo \
https://download.docker.com/linux/centos/docker-ce.repo
# Docker registry mirror (download accelerator)
curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://abcd1234.m.daocloud.io
yum install docker-ce
systemctl start docker
systemctl enable docker
```
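The daocloud script sets up the registry mirror in Docker's `/etc/docker/daemon.json`. The same setting can be written directly. This sketch takes the output path as an argument. The mirror URL is a placeholder that you must replace with your own mirror address. `write_docker_daemon_json` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Write a Docker daemon.json that configures one registry mirror.
write_docker_daemon_json() {
  local mirror=$1 out=${2:-/etc/docker/daemon.json}
  mkdir -p "$(dirname "$out")"
  cat > "$out" <<EOF
{
  "registry-mirrors": ["${mirror}"]
}
EOF
}
```

After `write_docker_daemon_json https://<your-mirror-host>` and `systemctl restart docker`, the mirror should appear under "Registry Mirrors" in `docker info`. Note that this overwrites any existing daemon.json.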

2.5 部署flannel 网络 模型

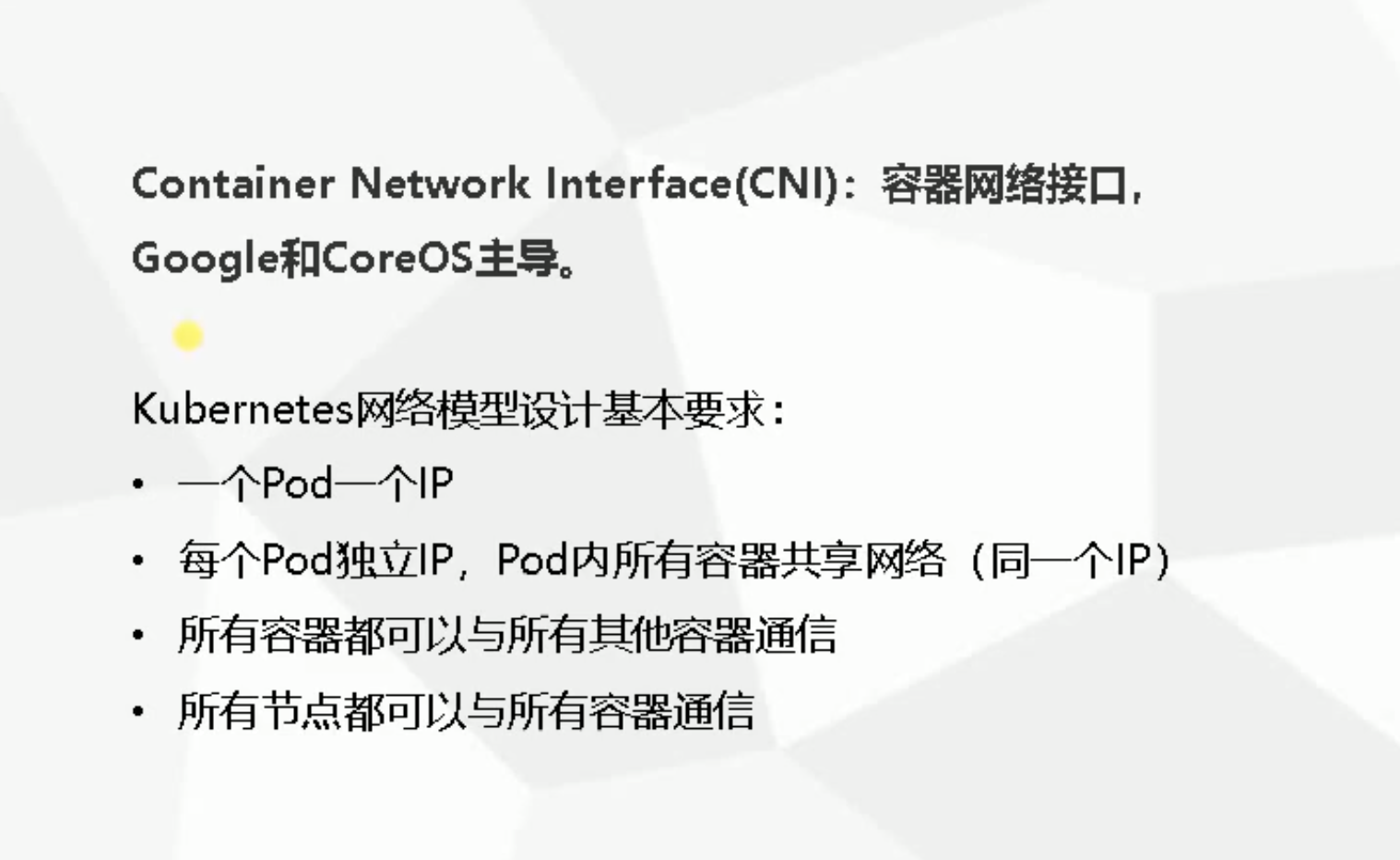

2.5.1 kubernetes 网络模型(CNI)

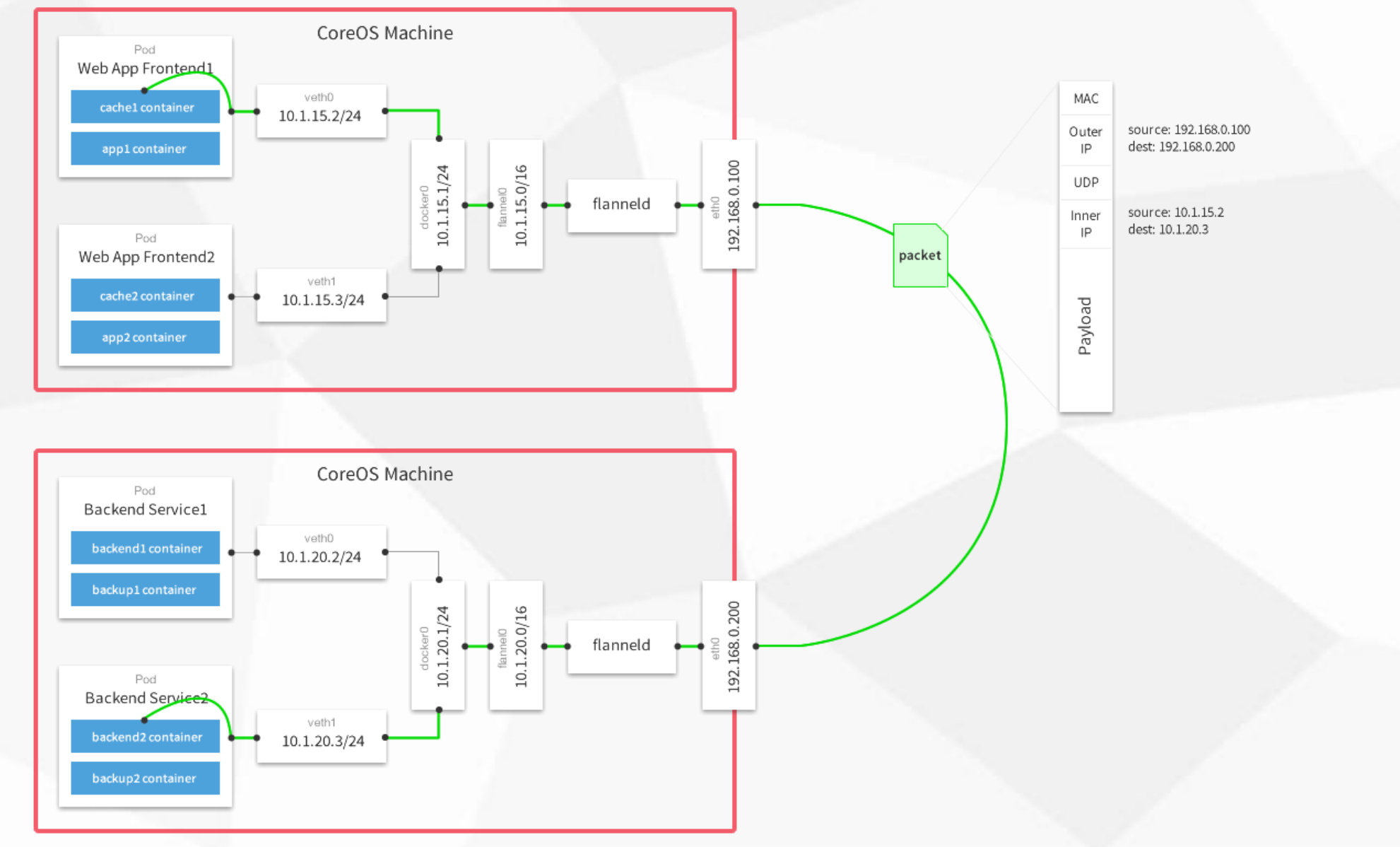

Container Network Interface(CNI): 容器网络接口,Google 和 cCoreOS 主导Kubernetes 网络模型设计要求:1. 一个pod 一个IP2. 每个pod 独立IP,pod 内所有容器共享网络(同一个IP)3. 所有容器都可以与所有其他容器通信4. 所有节点都可以与所有容器通信

2.5.2 flannel的 网络模型

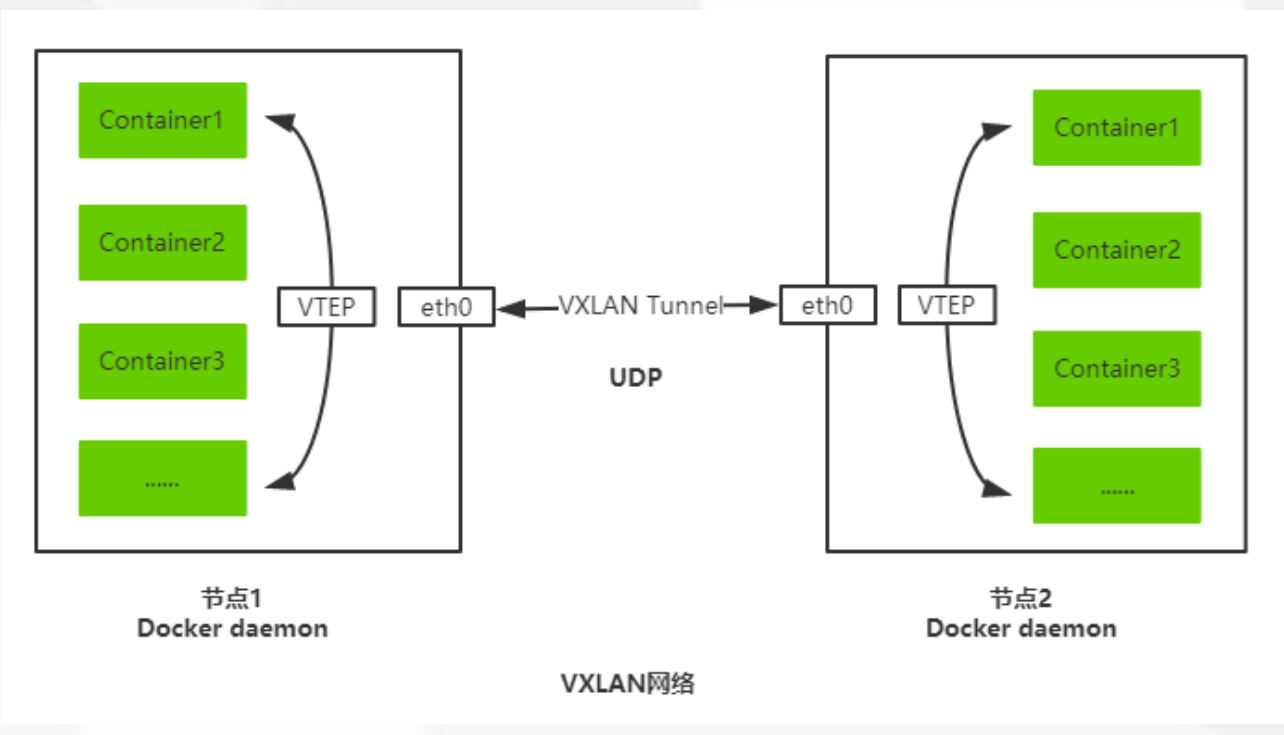

Overlay Network:覆盖网络,在基础网络上叠加的一种虚拟网络技术模式,该网络中的主机通过虚拟链路连接起来。VXLAN:将源数据包封装到UDP中,并使用基础网络的IP/MAC作为外层报文头进行封装,然后在以太网上传输,到达目的地后由隧道端点解封装并将数据发送给目标地址。Flannel:是Overlay网络的一种,也是将源数据包封装在另一种网络包里面进行路由转发和通信,目前已经支持UDP、VXLAN、AWS VPC和GCE路由等数据转发方式。
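The VXLAN encapsulation explains why flannel's VXLAN interface (`flannel.1`) typically has an MTU of 1450 on a 1500-byte network. Each packet gains an outer IPv4 header (20 bytes), a UDP header (8), a VXLAN header (8) and the inner Ethernet header that is carried inside (14). The arithmetic, assuming an IPv4 underlay without IP options:

```bash
#!/usr/bin/env bash
# Compute the overlay MTU left after VXLAN-over-IPv4 encapsulation.
vxlan_inner_mtu() {
  local underlay_mtu=${1:-1500}
  local outer_ipv4=20 outer_udp=8 vxlan_hdr=8 inner_eth=14   # bytes added per packet
  echo $(( underlay_mtu - outer_ipv4 - outer_udp - vxlan_hdr - inner_eth ))
}

vxlan_inner_mtu 1500   # prints 1450
```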

2.5.3 flannel 安装 与配置

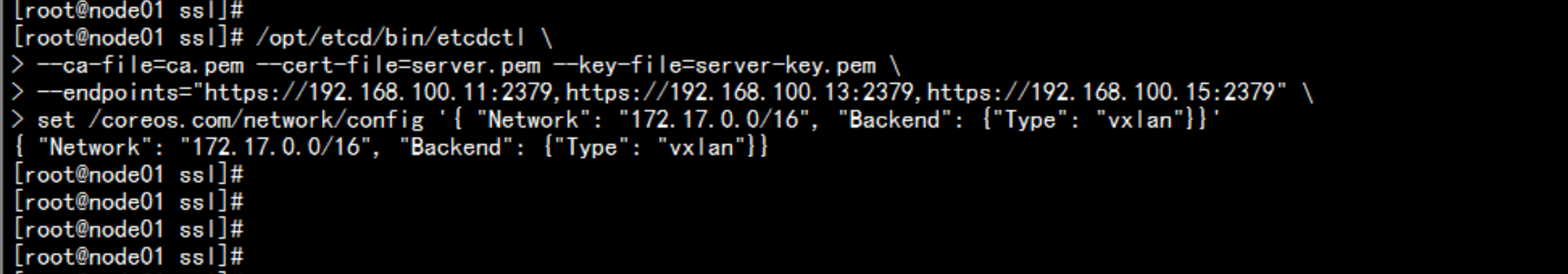

1. 写入分配的子网段到etcd,供flanneld使用cd /opt/etcd/ssl//opt/etcd/bin/etcdctl \--ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \--endpoints="https://192.168.100.11:2379,https://192.168.100.13:2379,https://192.168.100.14:2379" \set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'
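The config above sets no `SubnetLen`. For a /16 network, flannel's default is to lease each node a /24. The pool therefore holds 2^(24-16) = 256 node subnets, each with 254 host addresses. The arithmetic as a quick check (`flannel_capacity` is a hypothetical helper):

```bash
#!/usr/bin/env bash
# Print how many per-node subnets fit in the flannel network and the host addresses in each.
flannel_capacity() {
  local net_prefix=$1 subnet_len=${2:-24}
  local subnets=$(( 1 << (subnet_len - net_prefix) ))
  local hosts=$(( (1 << (32 - subnet_len)) - 2 ))   # minus network and broadcast addresses
  echo "$subnets $hosts"
}

flannel_capacity 16 24   # prints "256 254"
```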

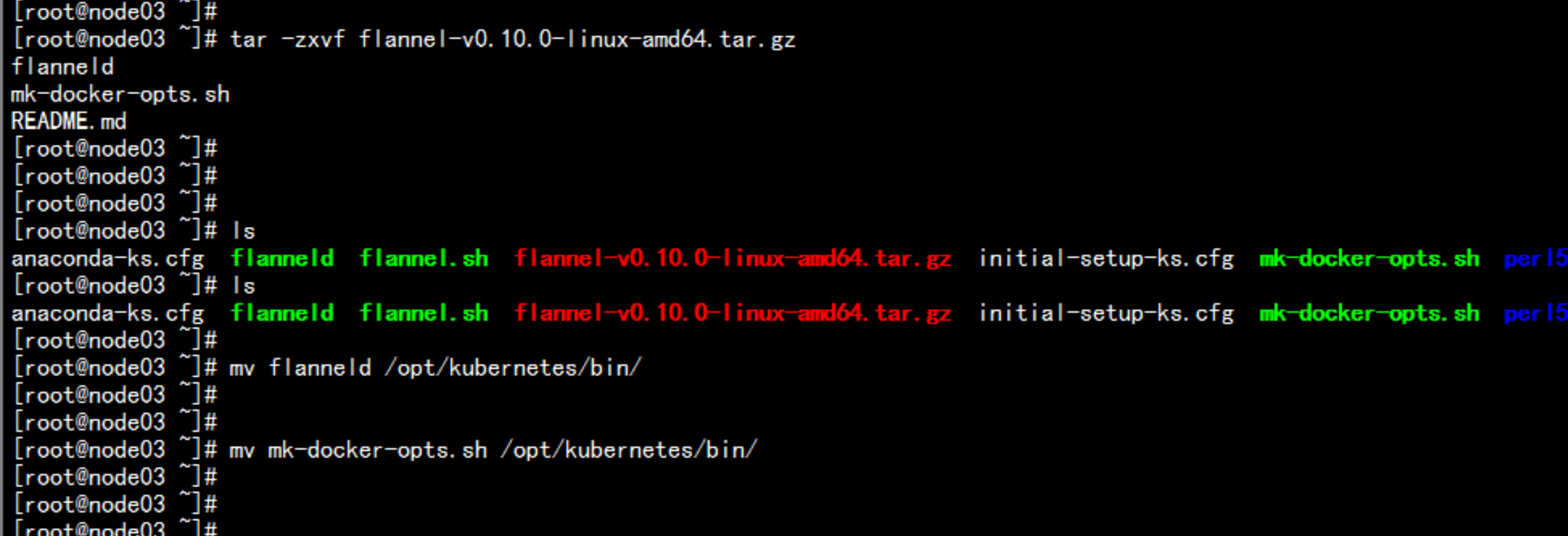

2. Download flannel from https://github.com/coreos/flannel/releases and install it:

```bash
mkdir -p /opt/kubernetes/{bin,cfg,ssl}
tar -zxvf flannel-v0.10.0-linux-amd64.tar.gz
mv flanneld /opt/kubernetes/bin/
mv mk-docker-opts.sh /opt/kubernetes/bin/
```

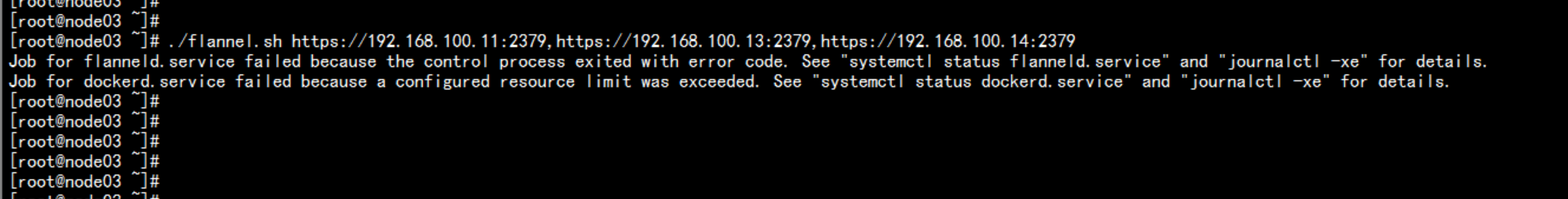

Deploy flannel on the node machines:

```bash
mkdir -p /opt/kubernetes/{bin,cfg,ssl}
cd /root/
./flannel.sh https://192.168.100.11:2379,https://192.168.100.13:2379,https://192.168.100.14:2379
```

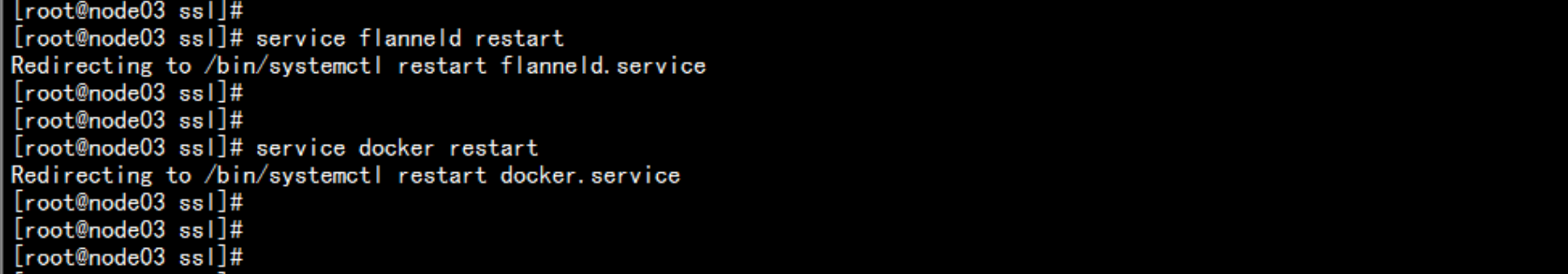

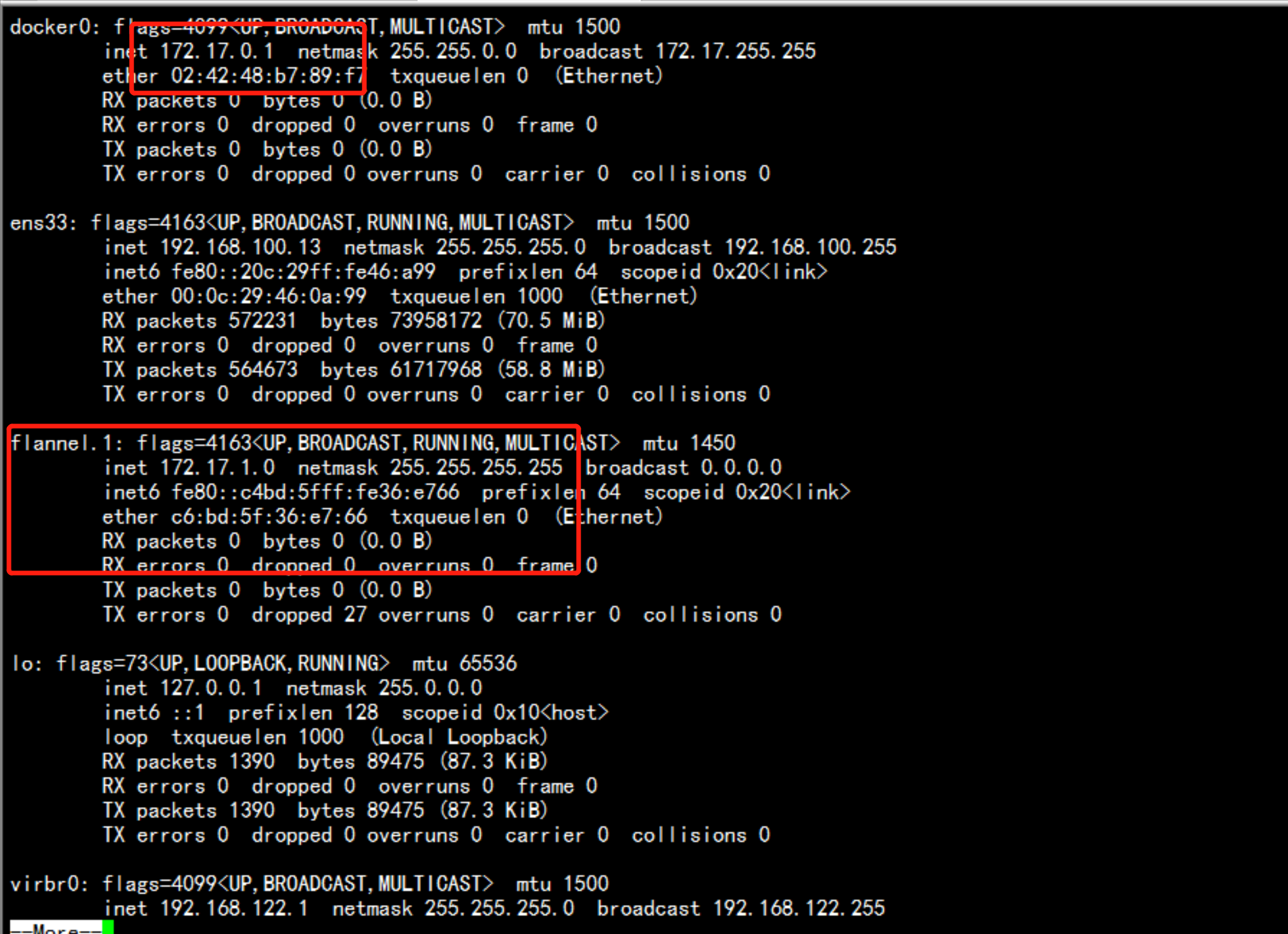

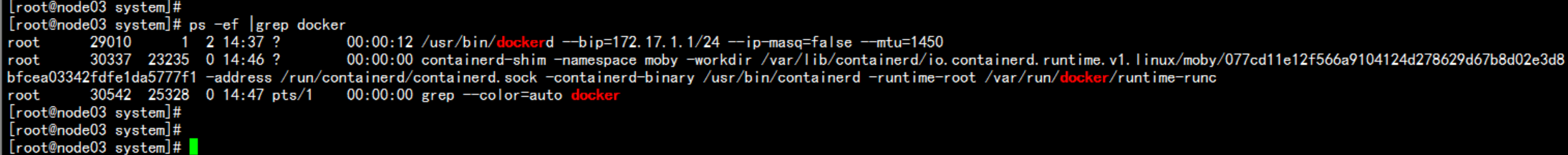

Start flannel and Docker:

```bash
service flanneld restart
service docker restart
```
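Docker only joins the flannel subnet if it starts with that subnet's bridge IP and MTU. flanneld records these values in `/run/flannel/subnet.env` as `FLANNEL_SUBNET` and `FLANNEL_MTU`. `mk-docker-opts.sh` turns them into Docker options. The sketch below shows only the essence of that conversion, using the real dockerd flags `--bip` and `--mtu`. It is a simplification, not a replacement for mk-docker-opts.sh.

```bash
#!/usr/bin/env bash
# Print dockerd flags derived from a flannel subnet.env file.
docker_opts_from_subnet_env() {
  local env_file=${1:-/run/flannel/subnet.env}
  (
    . "$env_file"   # defines FLANNEL_SUBNET and FLANNEL_MTU (sourced in a subshell)
    echo "--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}"
  )
}
```

After the restart, `ip addr show docker0` on each node should show an address inside that node's `FLANNEL_SUBNET`.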

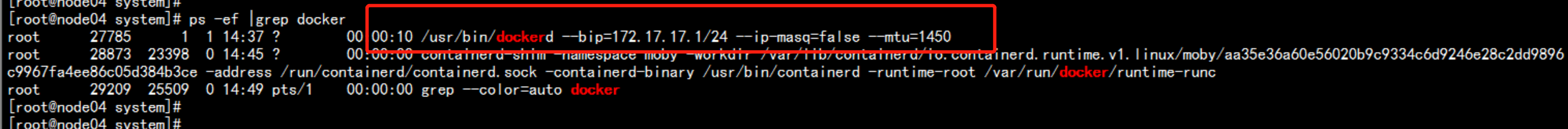

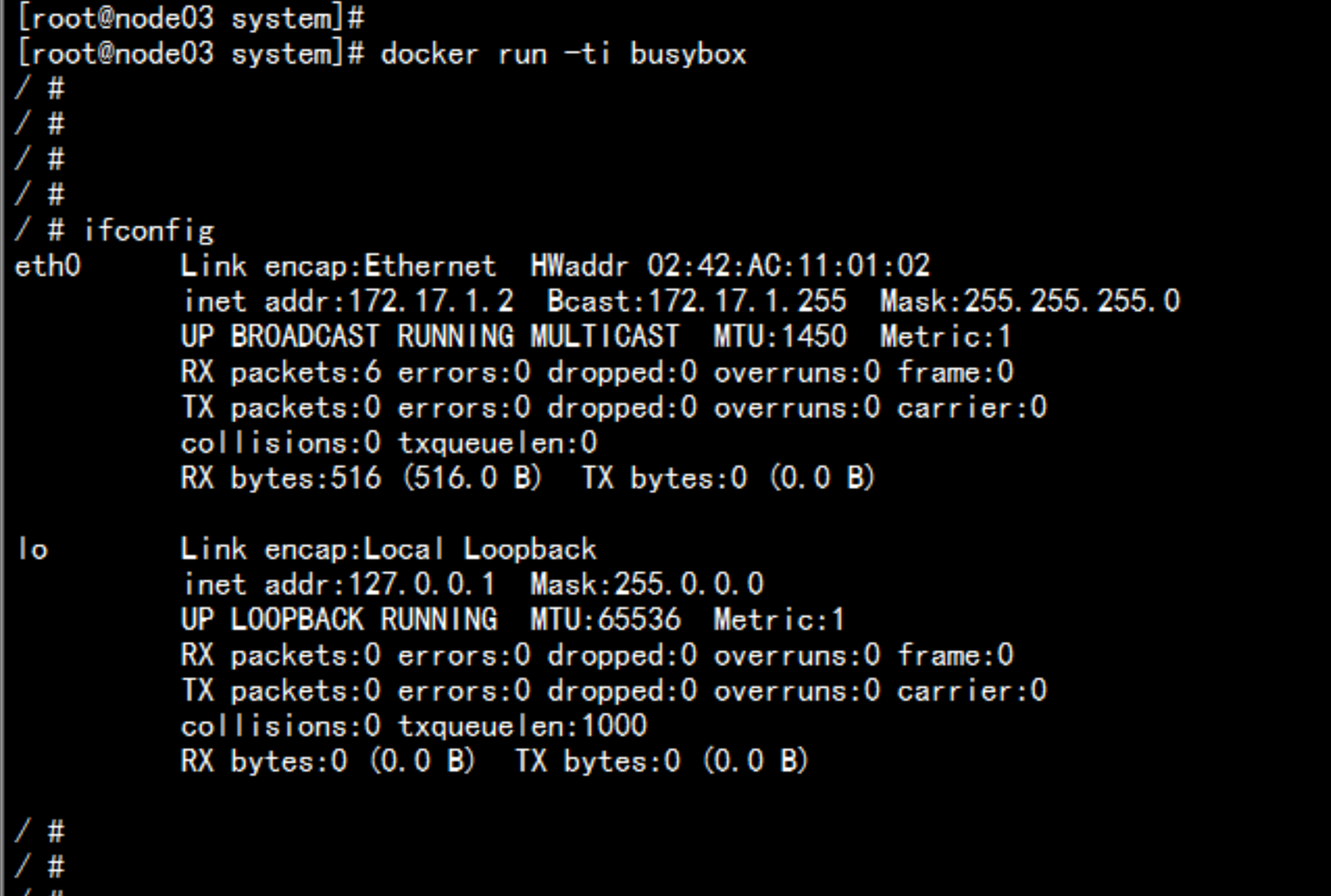

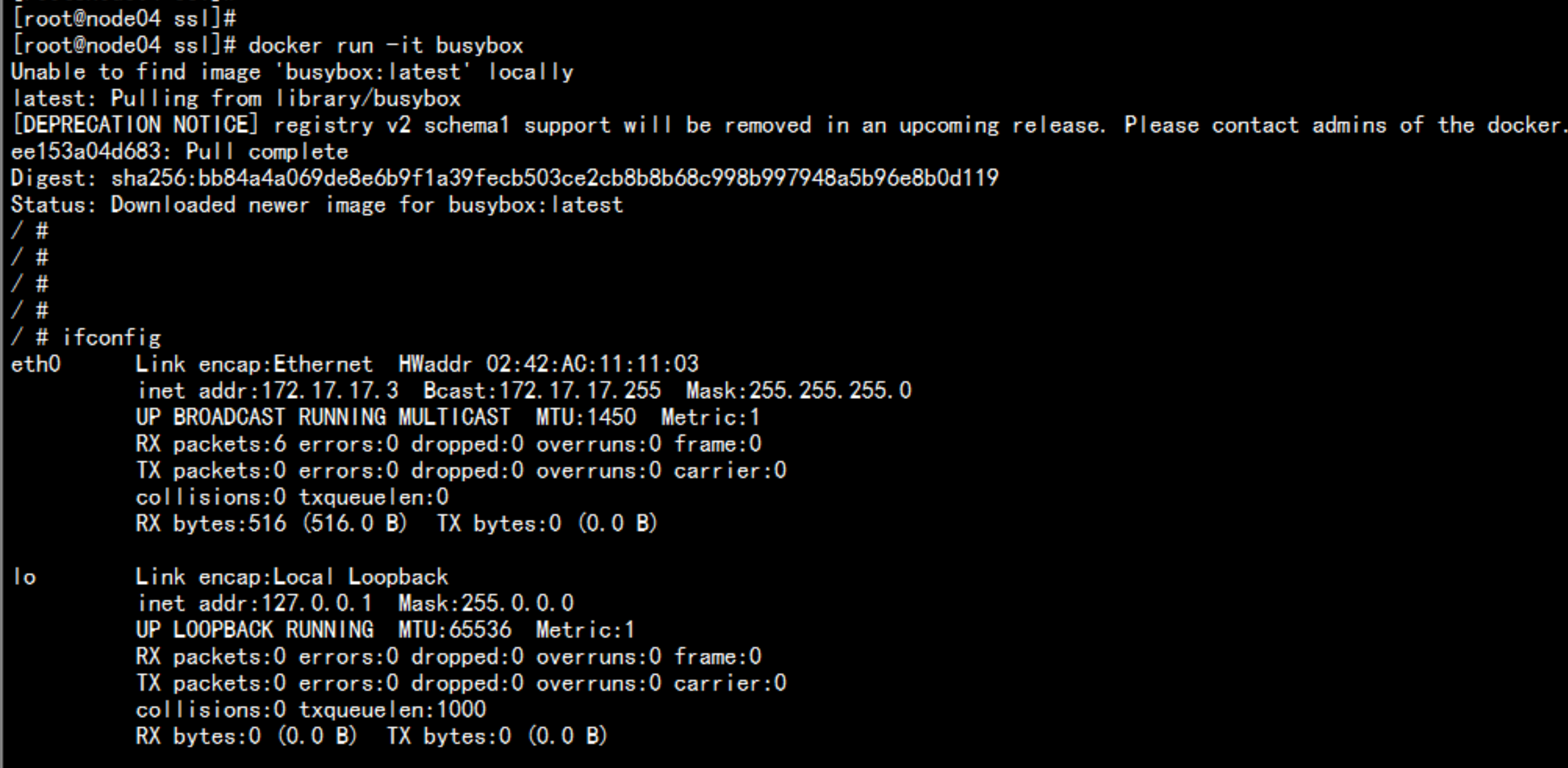

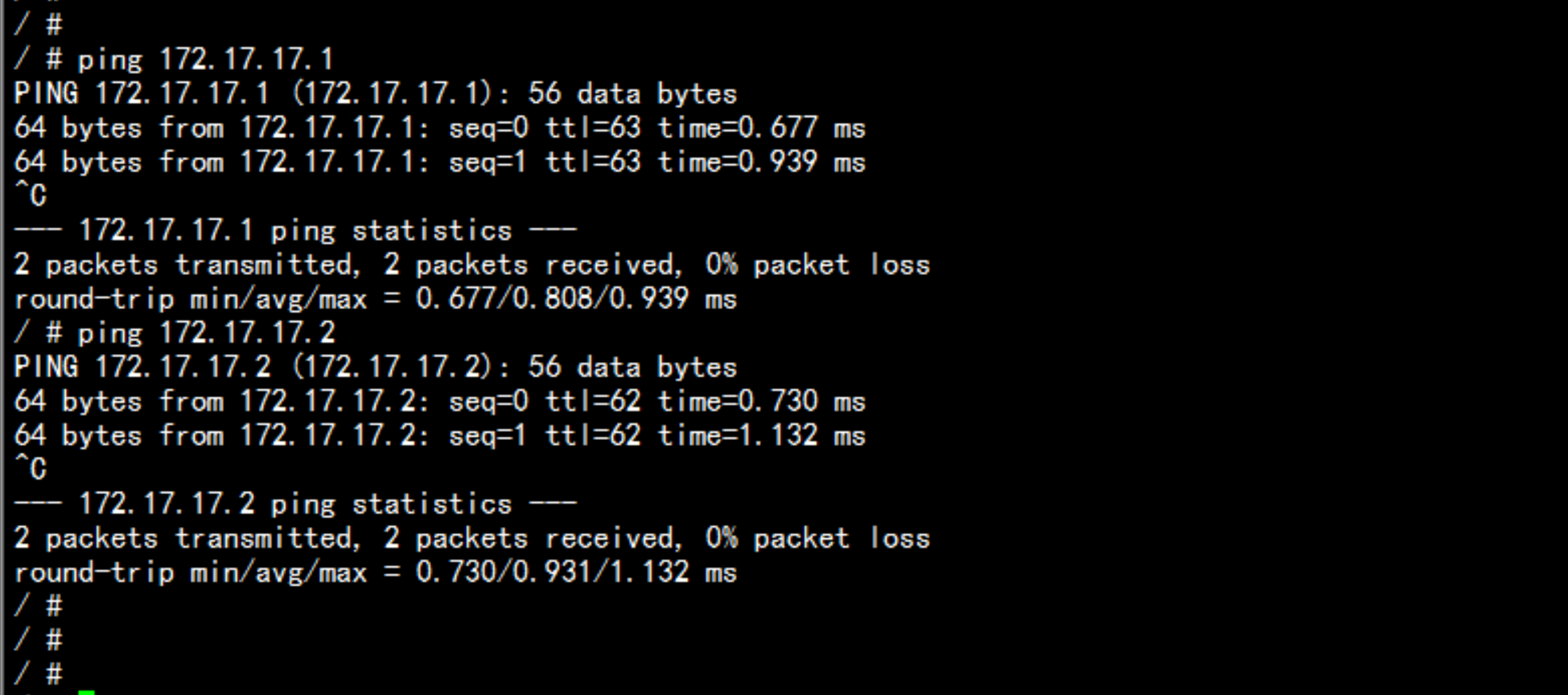

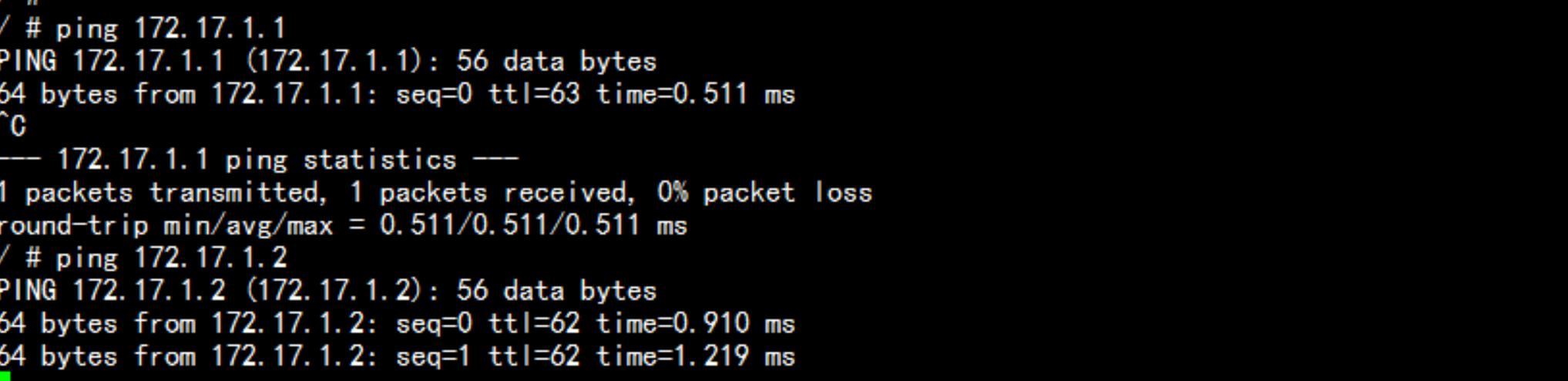

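Testing the flannel network: start a temporary container on both 192.168.100.13 and 192.168.100.14, and check that the containers can ping each other. Start the test container:

```bash
# run on each node; inside the container, note the IP from "ip addr" and ping the other container's IP
docker run -it busybox
```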

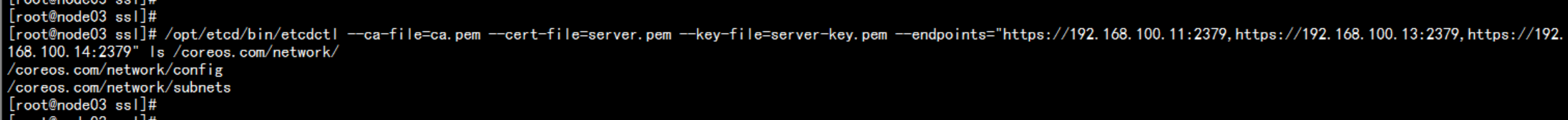

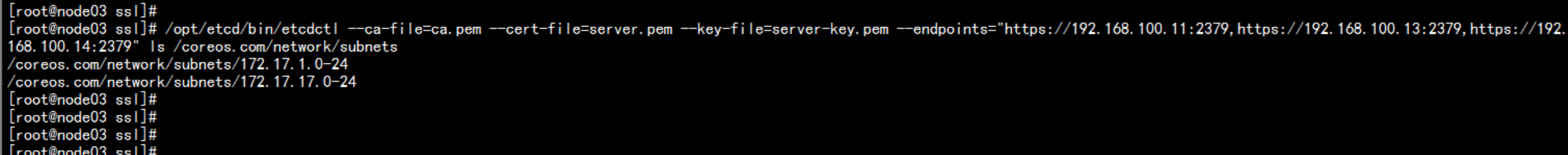

View the routes (the subnets recorded in etcd):

```bash
cd /opt/etcd/ssl
/opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.100.11:2379,https://192.168.100.13:2379,https://192.168.100.14:2379" ls /coreos.com/network/
/opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.100.11:2379,https://192.168.100.13:2379,https://192.168.100.14:2379" ls /coreos.com/network/subnets
```
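Each key under `/coreos.com/network/subnets` names one node's lease, with the CIDR's `/` written as `-` (for example `172.17.45.0-24`). A helper can turn the listing back into CIDR notation. The name `subnet_keys_to_cidr` is hypothetical, and the sample keys in the test are illustrative.

```bash
#!/usr/bin/env bash
# Convert etcd subnet lease keys (one per line on stdin) into CIDR notation.
subnet_keys_to_cidr() {
  # drop everything up to the last "/", then turn the trailing "-NN" into "/NN"
  sed -e 's#.*/##' -e 's#-\([0-9]*\)$#/\1#'
}
```

Usage: pipe the second `ls /coreos.com/network/subnets` command above into `subnet_keys_to_cidr`.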

2.6 Deploying the Kubernetes Master Components

2.6.1 Downloading Kubernetes

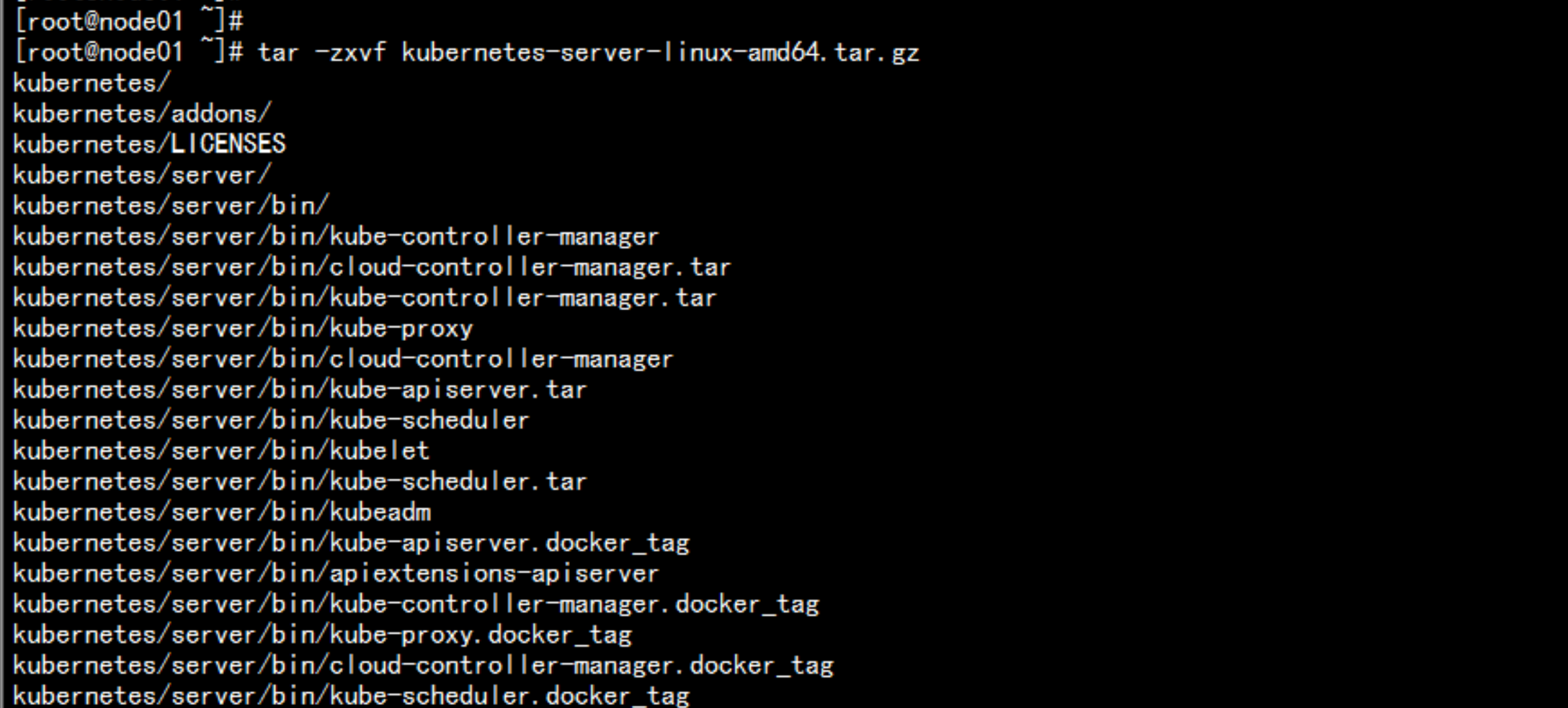

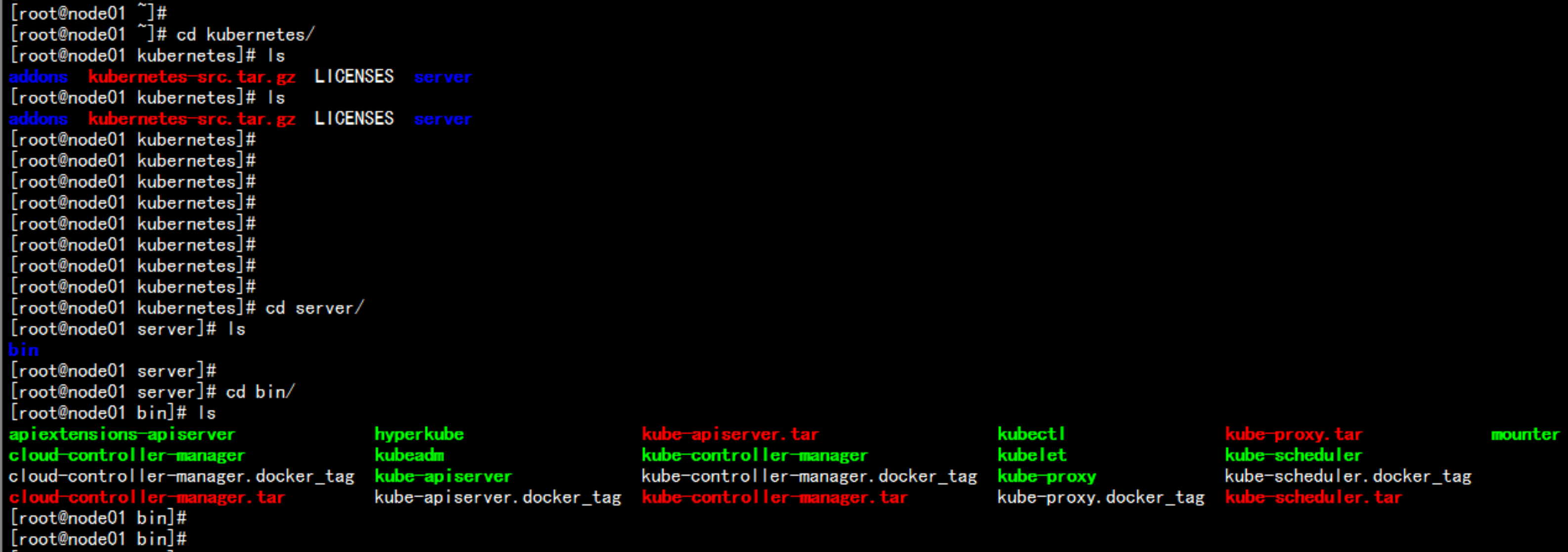

Download address (version 1.13.4 is used here): https://dl.k8s.io/v1.13.4/kubernetes-server-linux-amd64.tar.gz

2.6.2 Deploying kube-apiserver

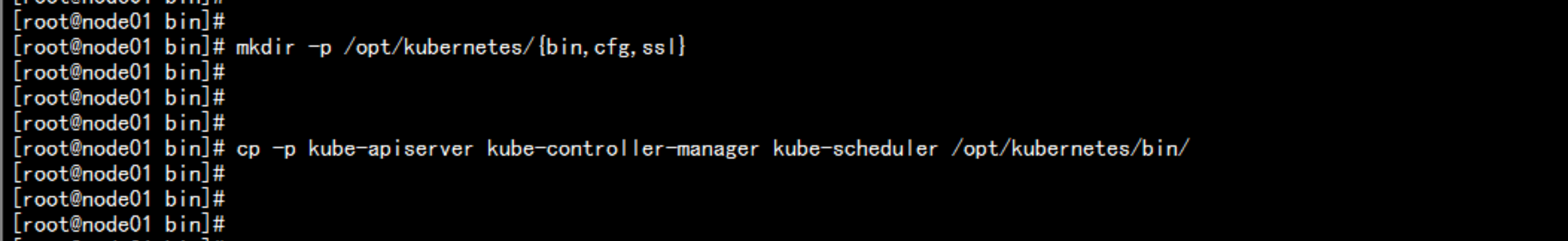

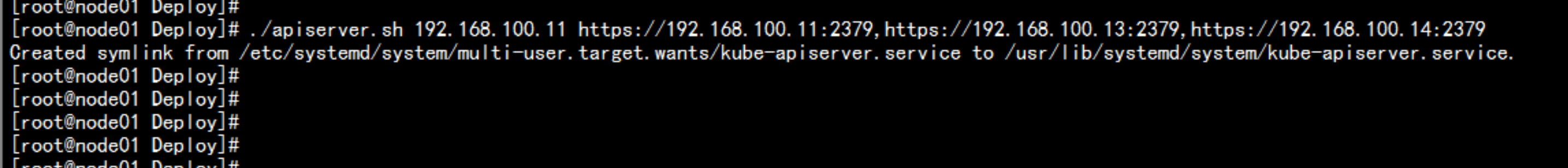

```bash
tar -zxvf kubernetes-server-linux-amd64.tar.gz
mkdir -p /opt/kubernetes/{bin,cfg,ssl}
cd /root/kubernetes/server/bin
cp -p kube-apiserver kube-controller-manager kube-scheduler /opt/kubernetes/bin/
cd /root/Deploy
chmod +x apiserver.sh
./apiserver.sh 192.168.100.11 https://192.168.100.11:2379,https://192.168.100.13:2379,https://192.168.100.14:2379
```

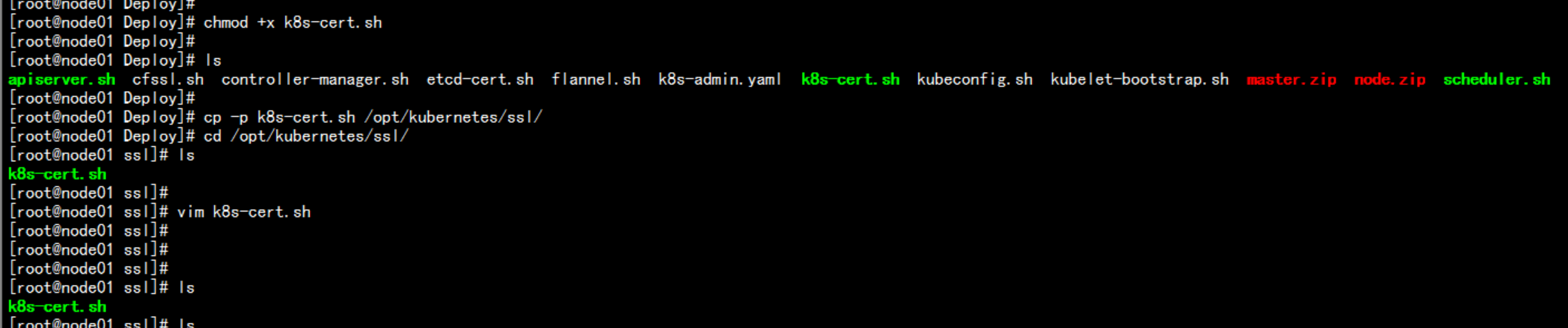

Generate the Kubernetes certificates:

```bash
cd /root/Deploy
cp -p k8s-cert.sh /opt/kubernetes/ssl
cd /opt/kubernetes/ssl
chmod +x k8s-cert.sh
./k8s-cert.sh
```

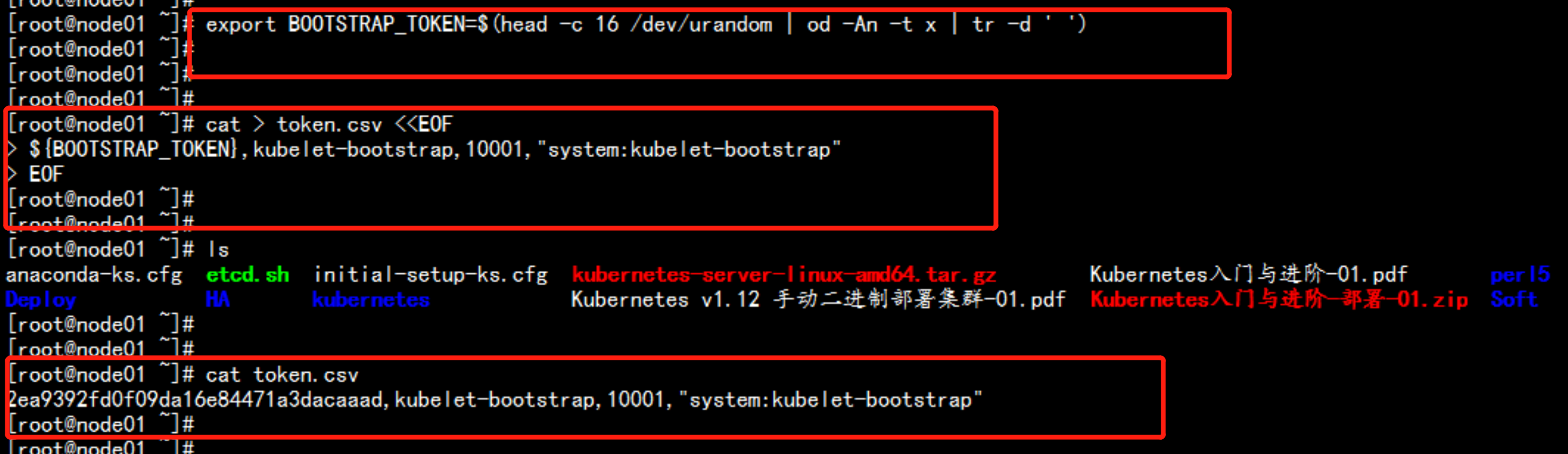

Generate the token file:

```bash
export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF
cat token.csv
mv token.csv /opt/kubernetes/cfg/
```
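The same step can be written as a reusable function that also prints the token. That token is needed again later when bootstrap.kubeconfig is generated. The line format `token,user,uid,"group"` is what kube-apiserver's token auth file expects. `make_bootstrap_token_csv` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Write token.csv for kubelet TLS bootstrapping and print the generated token.
make_bootstrap_token_csv() {
  local out=${1:-/opt/kubernetes/cfg/token.csv} token
  # 16 random bytes -> 32 lowercase hex characters
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$out"
  printf '%s\n' "$token"
}
```

Usage: `BOOTSTRAP_TOKEN=$(make_bootstrap_token_csv)`. The token in token.csv and the one in bootstrap.kubeconfig must match, or kubelet bootstrapping is rejected.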

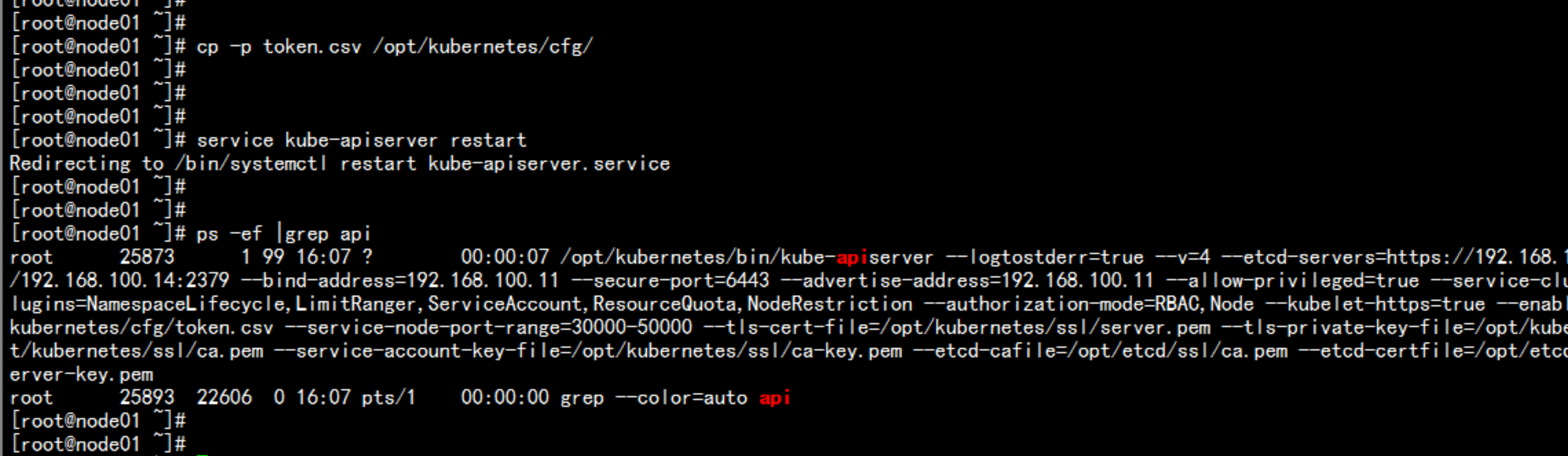

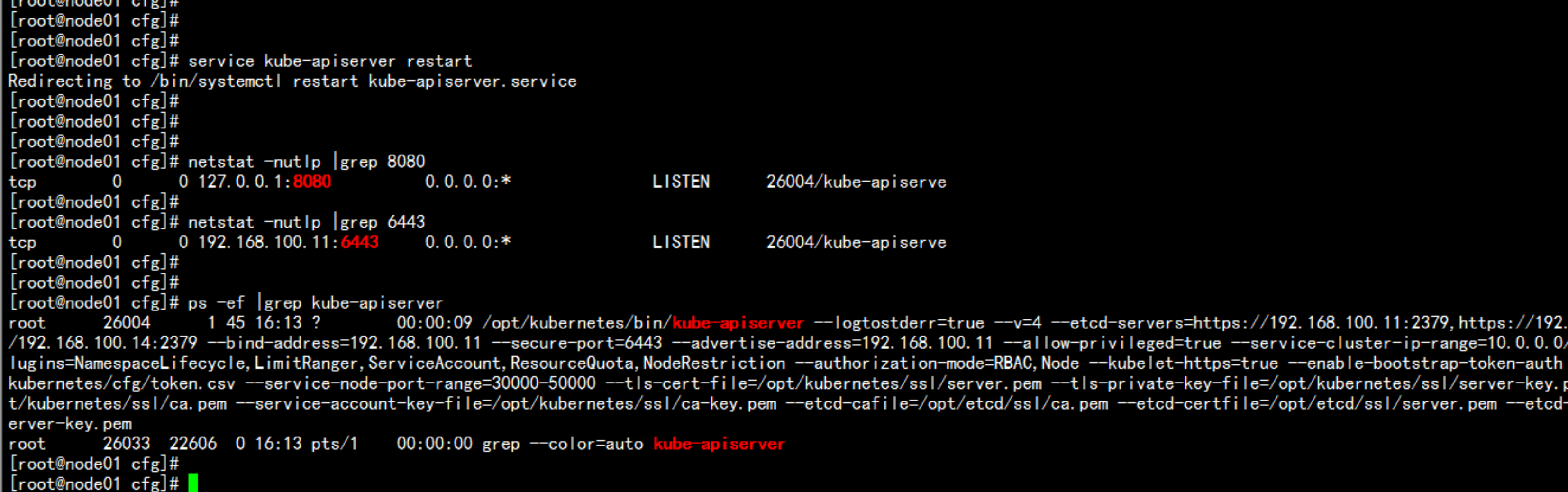

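Restart kube-apiserver and check that it is listening. Port 8080 is the local insecure port, and 6443 is the TLS port.

```bash
service kube-apiserver restart
netstat -nultp | grep 8080
netstat -nultp | grep 6443
```

To troubleshoot the apiserver, load `KUBE_APISERVER_OPTS` from its config file and run it in the foreground so that errors are printed to the terminal:

```bash
/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
```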

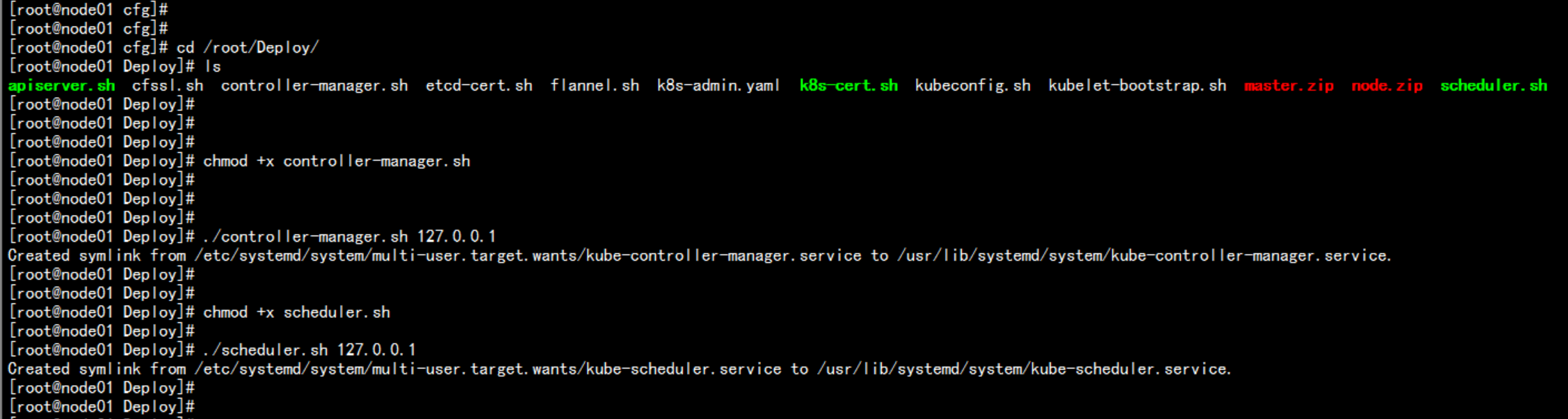

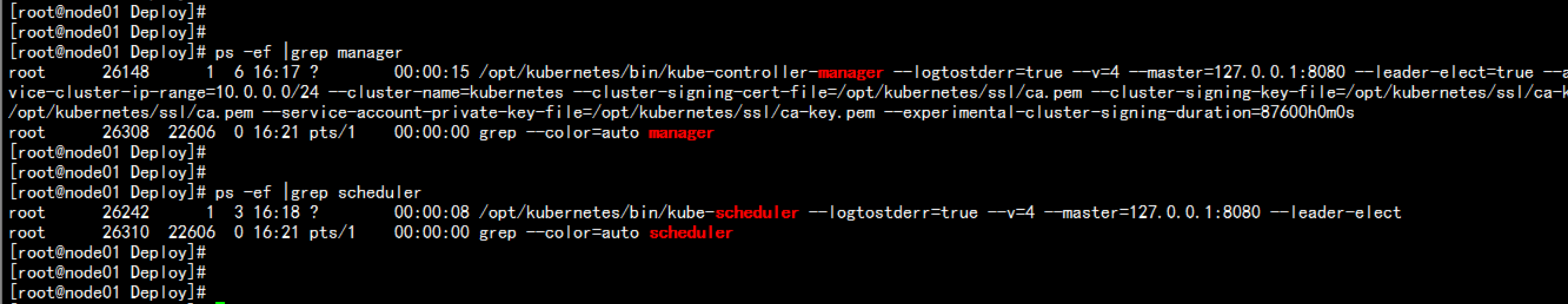

2.6.3 Deploying controller-manager and scheduler

```bash
cd /root/Deploy
chmod +x controller-manager.sh
chmod +x scheduler.sh
./controller-manager.sh 127.0.0.1
./scheduler.sh 127.0.0.1
```

Check the cluster status:

```bash
cd /root/kubernetes/server/bin/
cp -p kubectl /usr/bin/
kubectl get cs
```
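For scripted checks, the `kubectl get cs` table can be filtered for components whose STATUS is not `Healthy`. This sketch assumes the 1.13 column layout (NAME, STATUS, MESSAGE, ERROR). `unhealthy_components` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Read `kubectl get cs` output on stdin, print components whose STATUS is not
# Healthy, and return non-zero if there are any.
unhealthy_components() {
  awk 'NR > 1 && NF > 0 && $2 != "Healthy" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: `kubectl get cs | unhealthy_components && echo "all components healthy"`.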

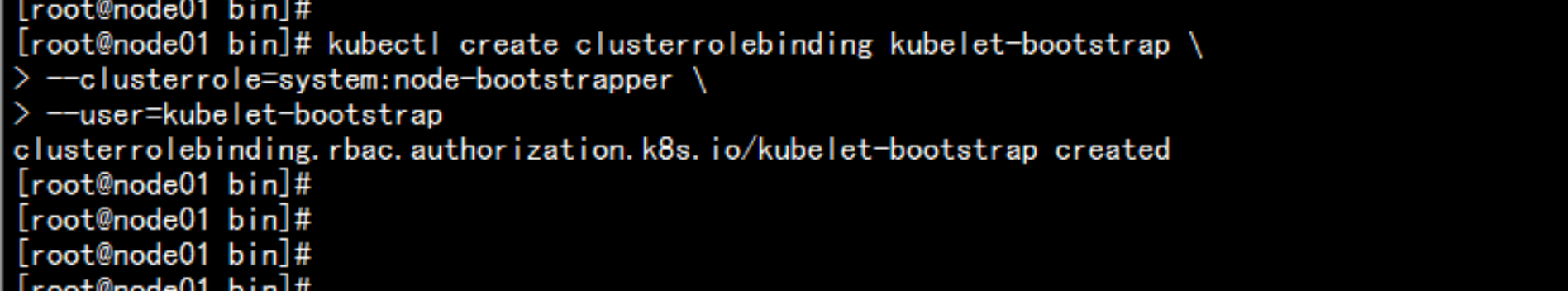

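Grant the permissions the Node components need. This binding lets the kubelet-bootstrap user request certificates:

```bash
kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap
```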

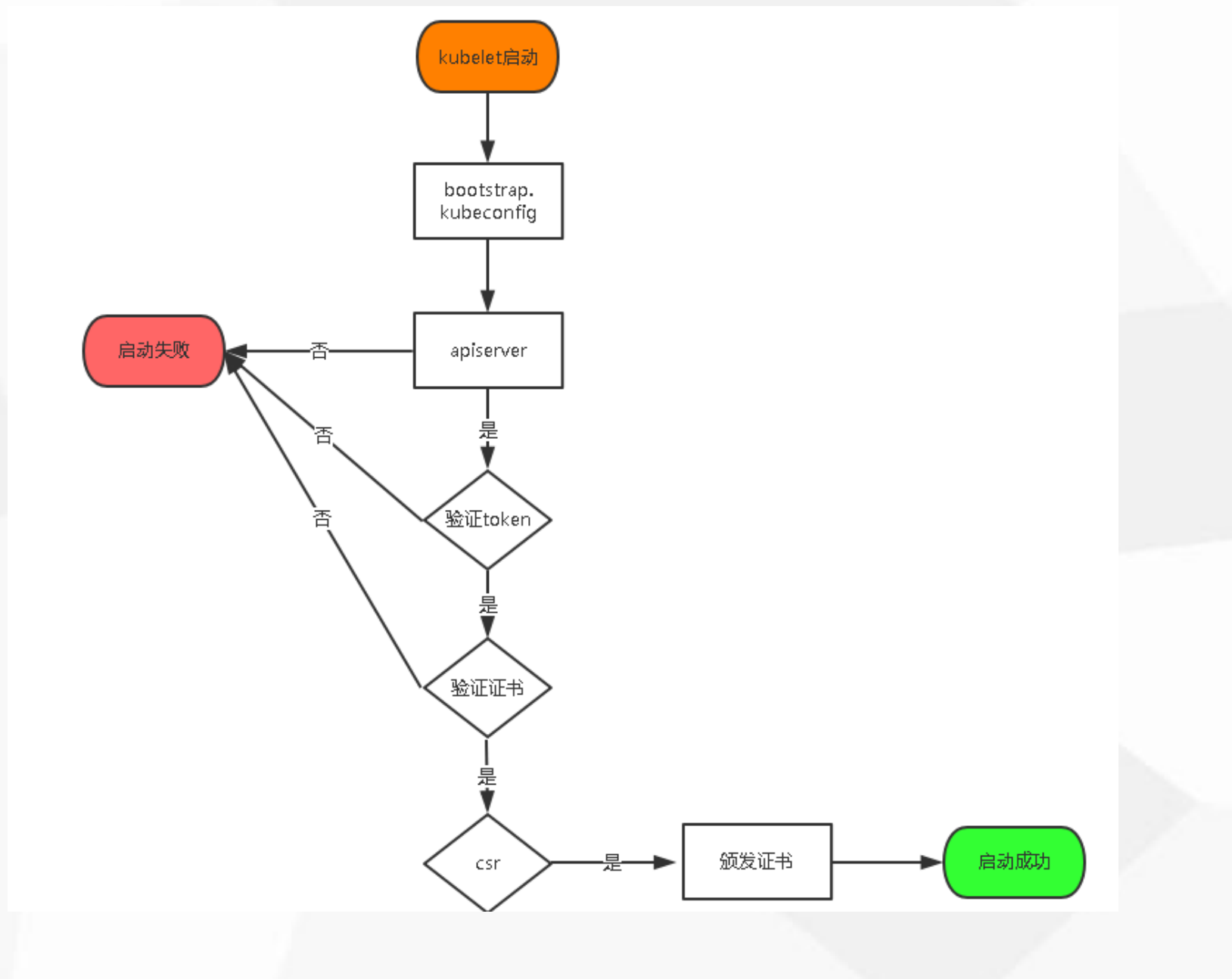

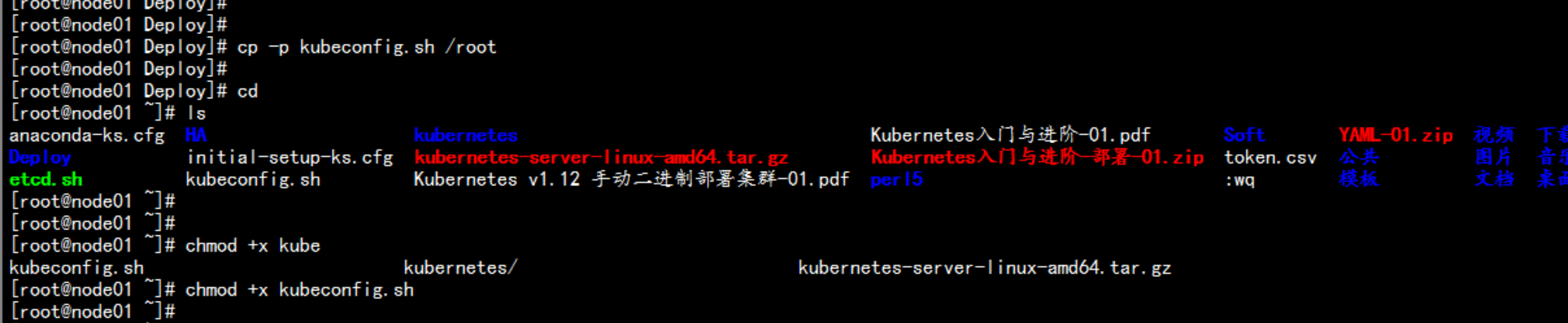

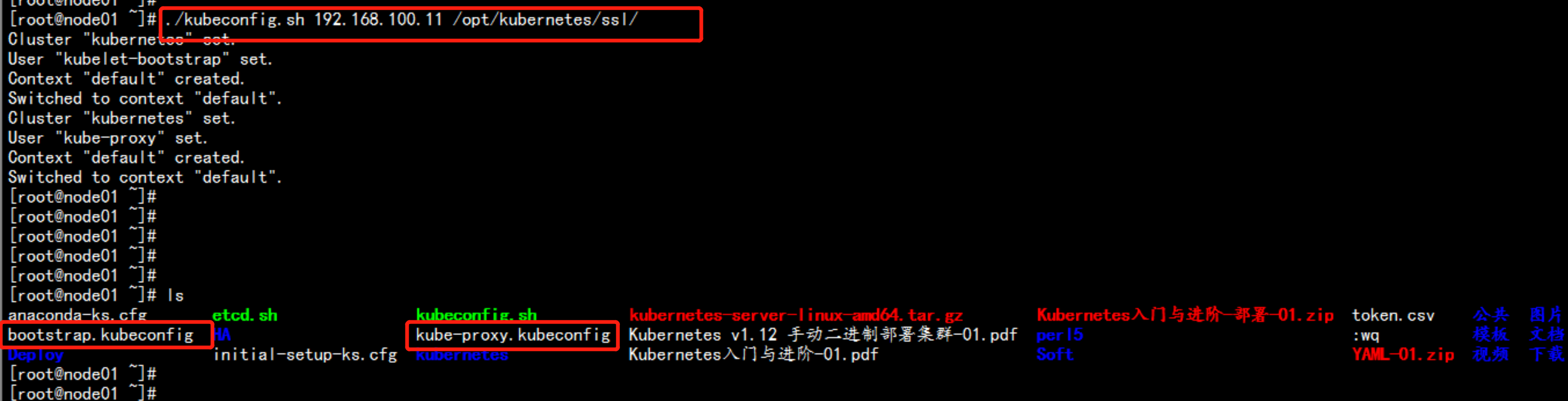

```bash
cd /root/Deploy
cp -p kubeconfig.sh /root
cd /root
chmod +x kubeconfig.sh
./kubeconfig.sh 192.168.100.11 /opt/kubernetes/ssl
```

This generates the bootstrap.kubeconfig and kube-proxy.kubeconfig files.
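kubeconfig.sh itself is not shown in this article. Scripts like it usually call `kubectl config set-cluster`, `set-credentials`, `set-context` and `use-context`. The sketch below instead writes an equivalent bootstrap kubeconfig directly, with the CA embedded. Port 6443 matches the secure port checked with netstat above. The user `kubelet-bootstrap` matches token.csv. The cluster and context names `kubernetes`/`default` are assumptions.

```bash
#!/usr/bin/env bash
# Write a bootstrap kubeconfig (CA embedded as base64) for kubelet TLS bootstrapping.
write_bootstrap_kubeconfig() {
  local apiserver_ip=$1 ca_file=$2 token=$3 out=${4:-bootstrap.kubeconfig}
  local ca_data
  ca_data=$(base64 < "$ca_file" | tr -d '\n')   # single-line base64 of the CA certificate
  cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- cluster:
    certificate-authority-data: ${ca_data}
    server: https://${apiserver_ip}:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
users:
- name: kubelet-bootstrap
  user:
    token: ${token}
EOF
}
```

Usage: `write_bootstrap_kubeconfig 192.168.100.11 /opt/kubernetes/ssl/ca.pem "$BOOTSTRAP_TOKEN"`, using the token from token.csv.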

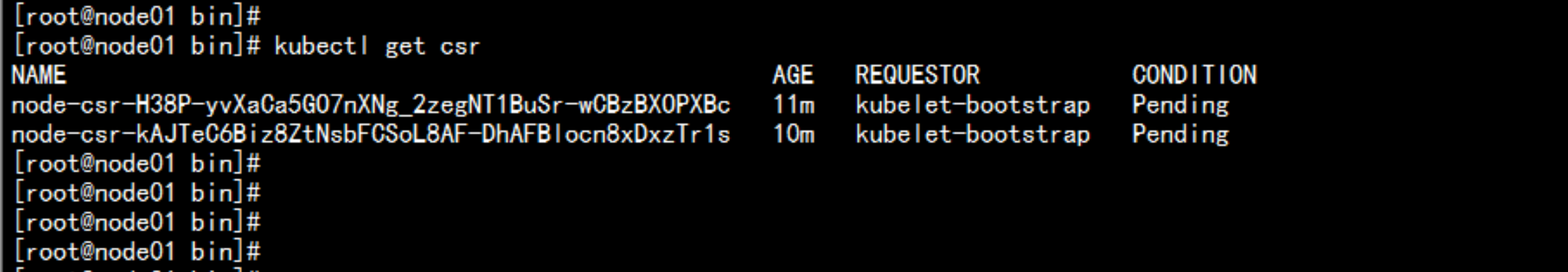

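View the certificate signing requests. They appear once kubelet has been started on the nodes (section 2.7).

```bash
kubectl get csr
```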

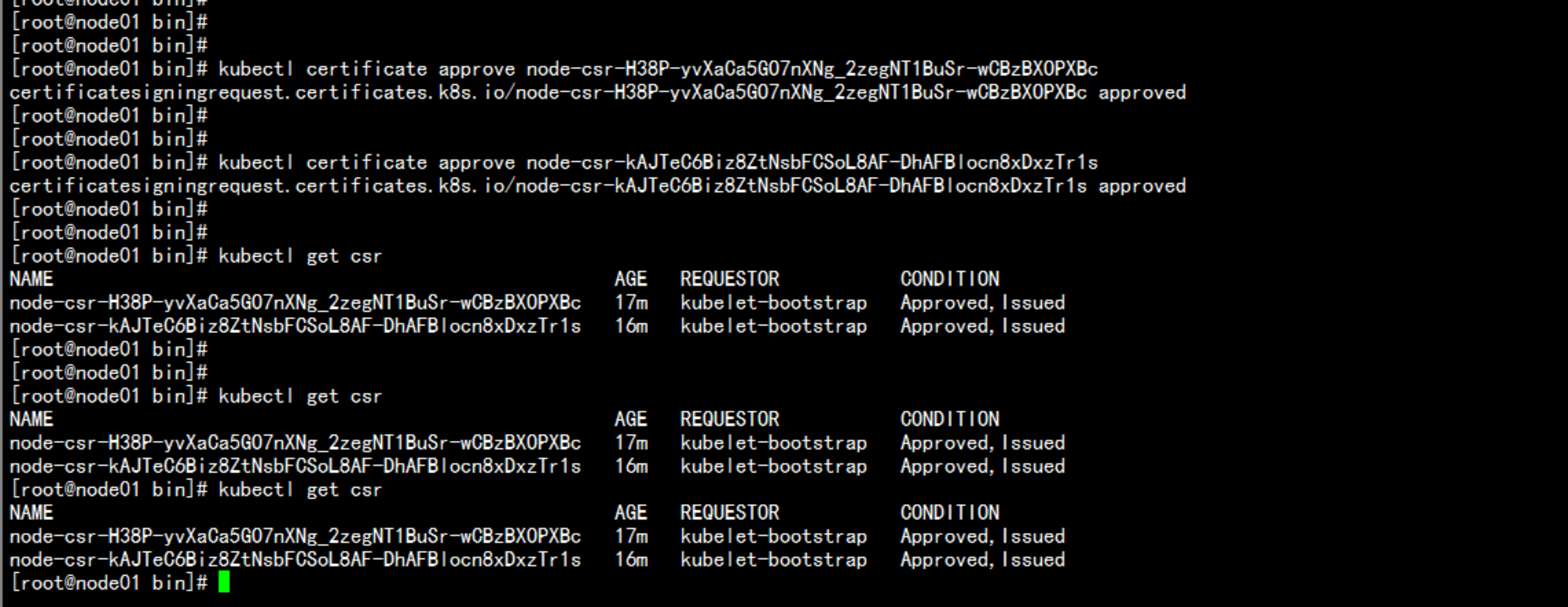

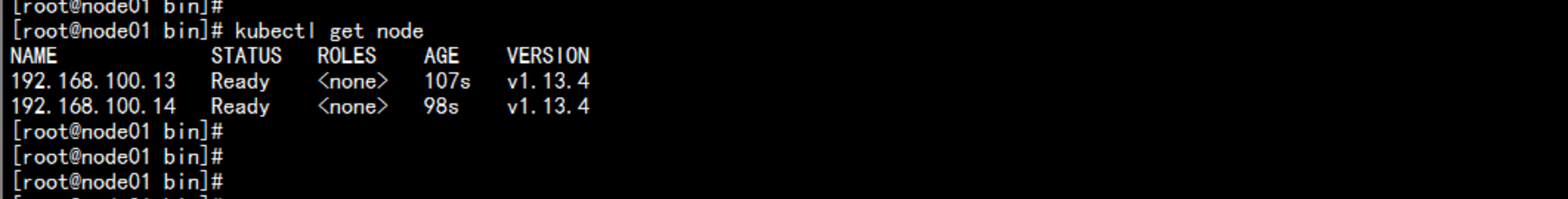

```bash
# CSR names differ per cluster; use the names shown by "kubectl get csr"
kubectl certificate approve node-csr-H38P-yvXaCa5GO7nXNg_2zegNT1BuSr-wCBzBXOPXBc
kubectl certificate approve node-csr-kAJTeC6Biz8ZtNsbFCSoL8AF-DhAFBlocn8xDxzTr1s
kubectl get csr
kubectl get node
```
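Rather than copying each CSR name by hand, the names still in `Pending` can be picked out of the `kubectl get csr` table. This sketch assumes CONDITION is the last column, as in 1.13. `pending_csrs` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Read `kubectl get csr` output on stdin and print the names of requests still Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage: `kubectl get csr | pending_csrs | xargs -r -n1 kubectl certificate approve`. Approve only requests you expect; this approves every pending one.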

2.7 Deploying the Kubernetes Node Components

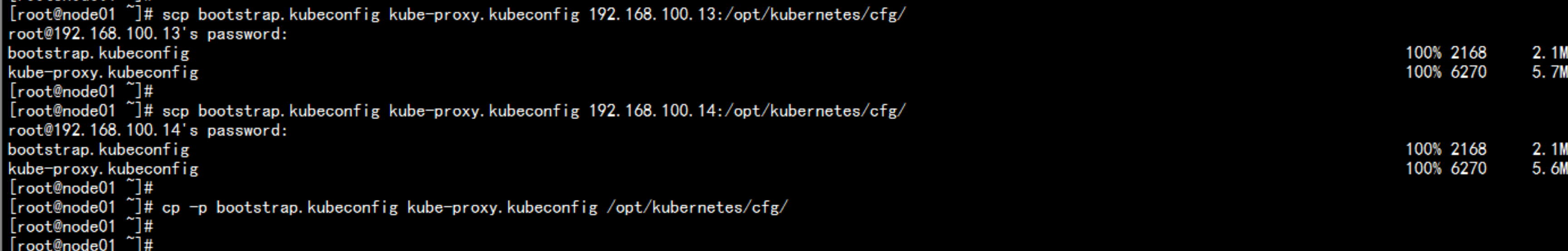

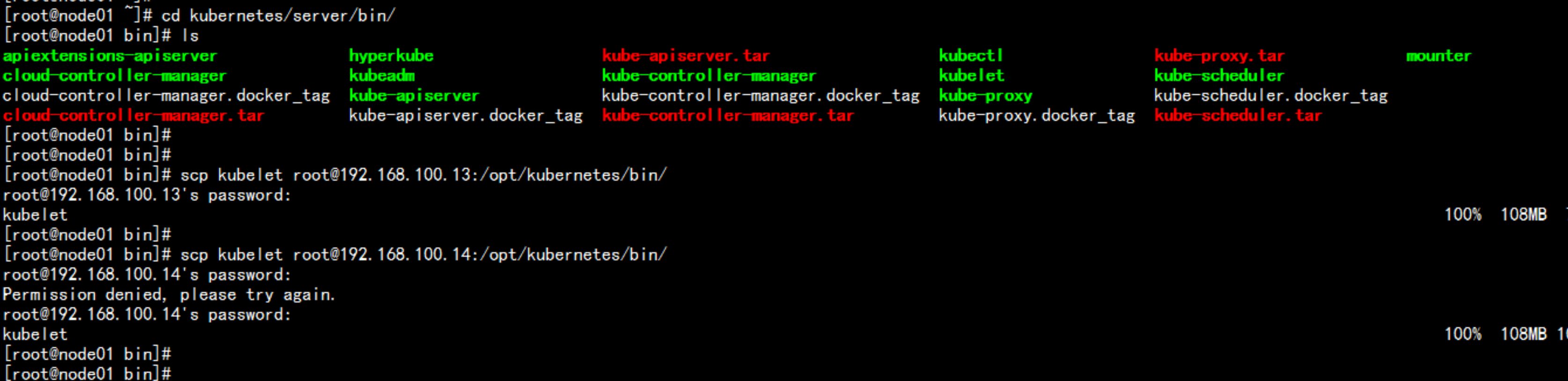

Copy bootstrap.kubeconfig, kube-proxy.kubeconfig and the kubelet binary to the nodes:

```bash
scp bootstrap.kubeconfig kube-proxy.kubeconfig 192.168.100.13:/opt/kubernetes/cfg/
scp bootstrap.kubeconfig kube-proxy.kubeconfig 192.168.100.14:/opt/kubernetes/cfg/
cp -p bootstrap.kubeconfig kube-proxy.kubeconfig /opt/kubernetes/cfg/
cd /root/kubernetes/server/bin
scp kubelet root@192.168.100.13:/opt/kubernetes/bin/
scp kubelet root@192.168.100.14:/opt/kubernetes/bin/
```

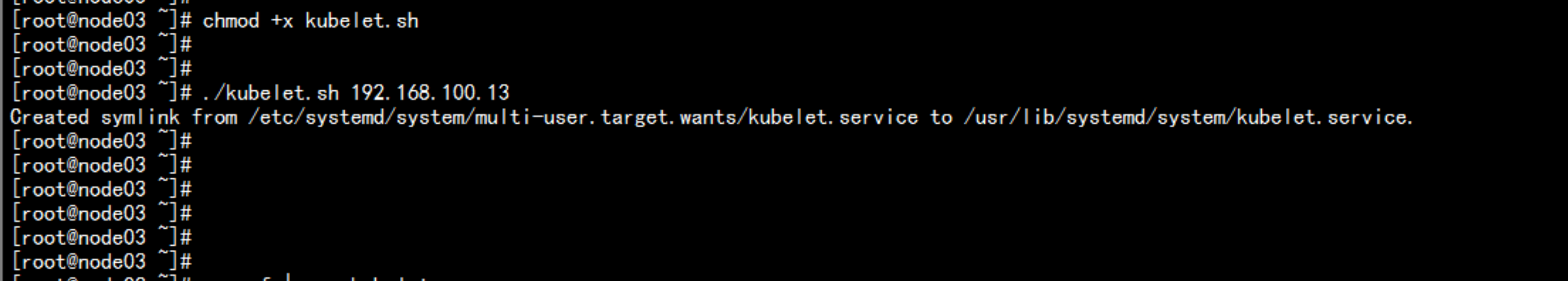

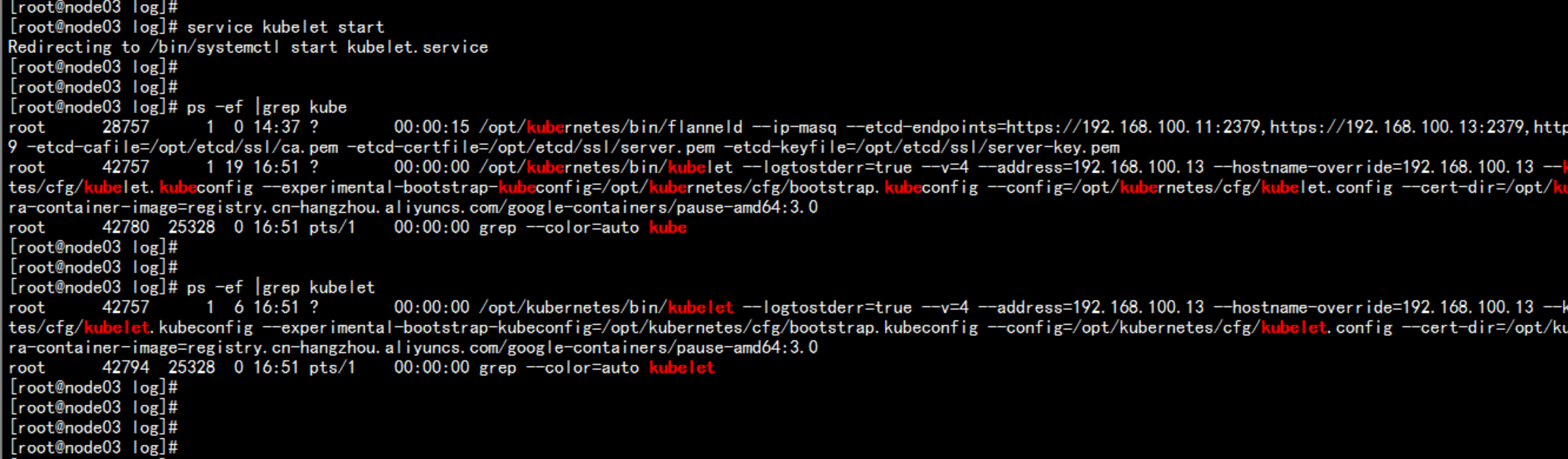

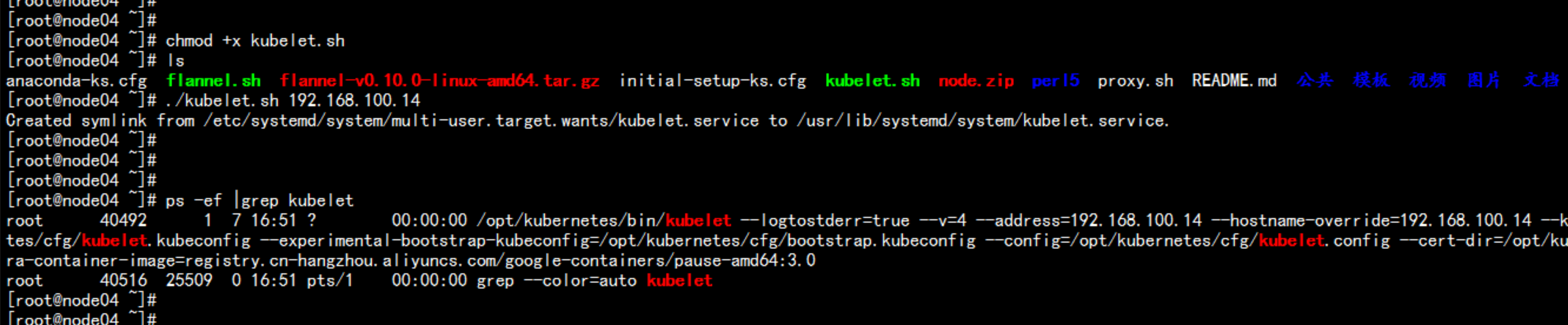

Run on the node machines:

```bash
cd /root
chmod +x kubelet.sh
./kubelet.sh 192.168.100.13   # on 192.168.100.13
./kubelet.sh 192.168.100.14   # on 192.168.100.14
```

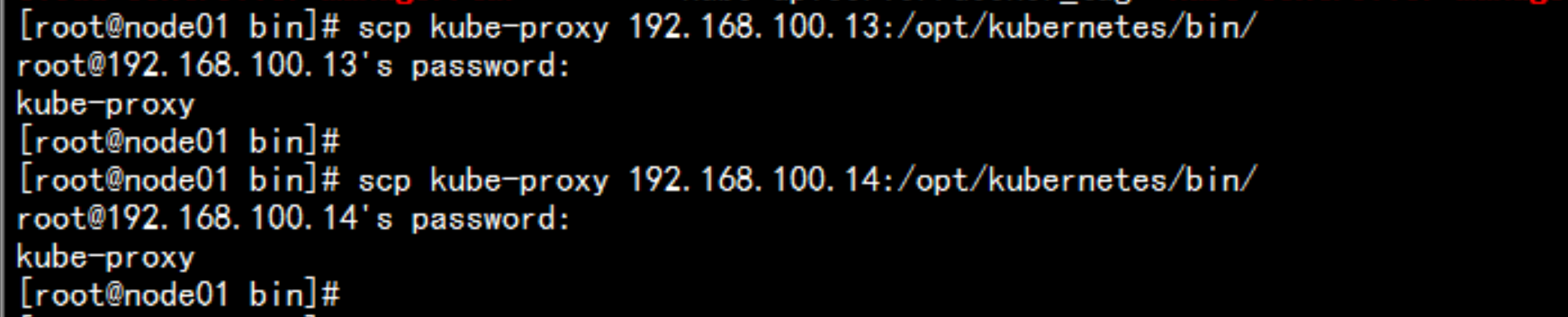

Deploy kube-proxy by first copying the binary to the nodes:

```bash
scp kube-proxy 192.168.100.13:/opt/kubernetes/bin/
scp kube-proxy 192.168.100.14:/opt/kubernetes/bin/
```

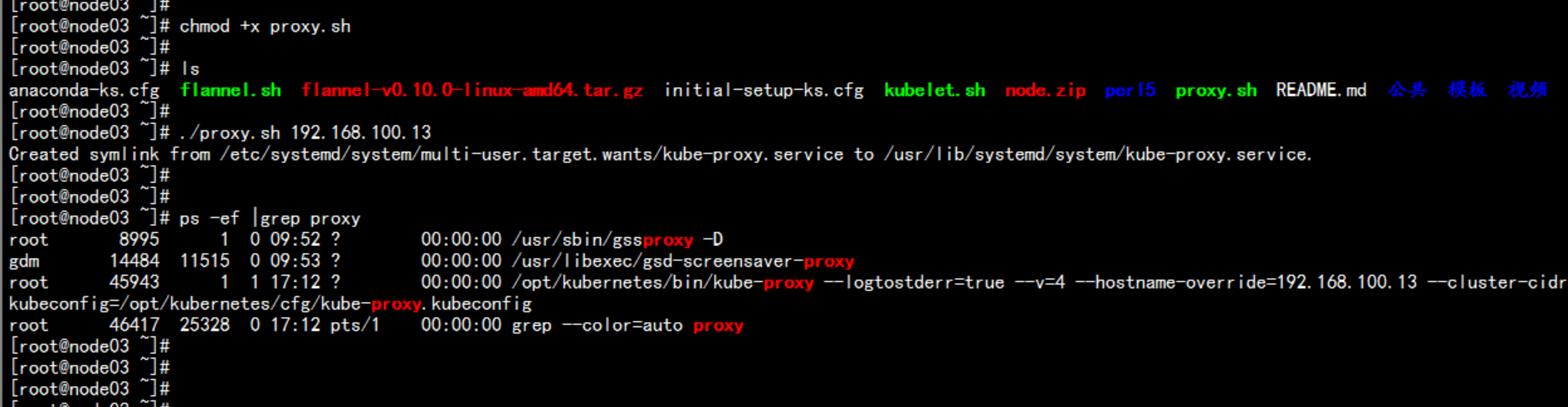

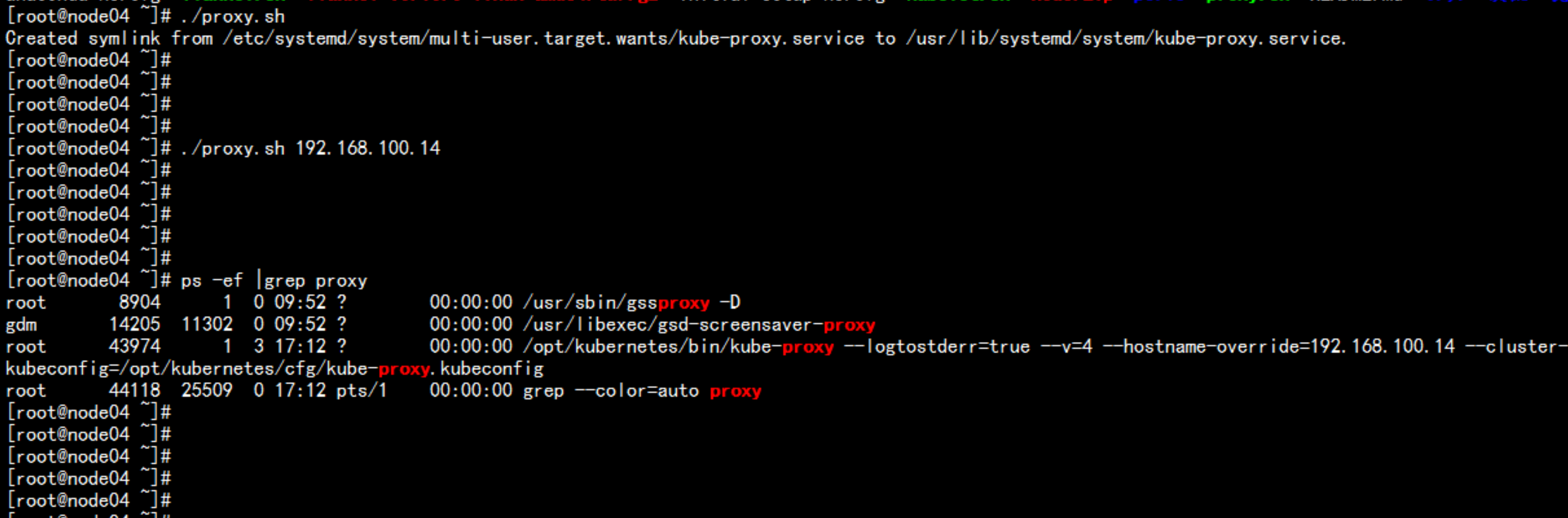

Set up kube-proxy. Log in to each node and run:

```bash
chmod +x proxy.sh
./proxy.sh 192.168.100.13   # on 192.168.100.13
./proxy.sh 192.168.100.14   # on 192.168.100.14
ps -ef | grep proxy
```

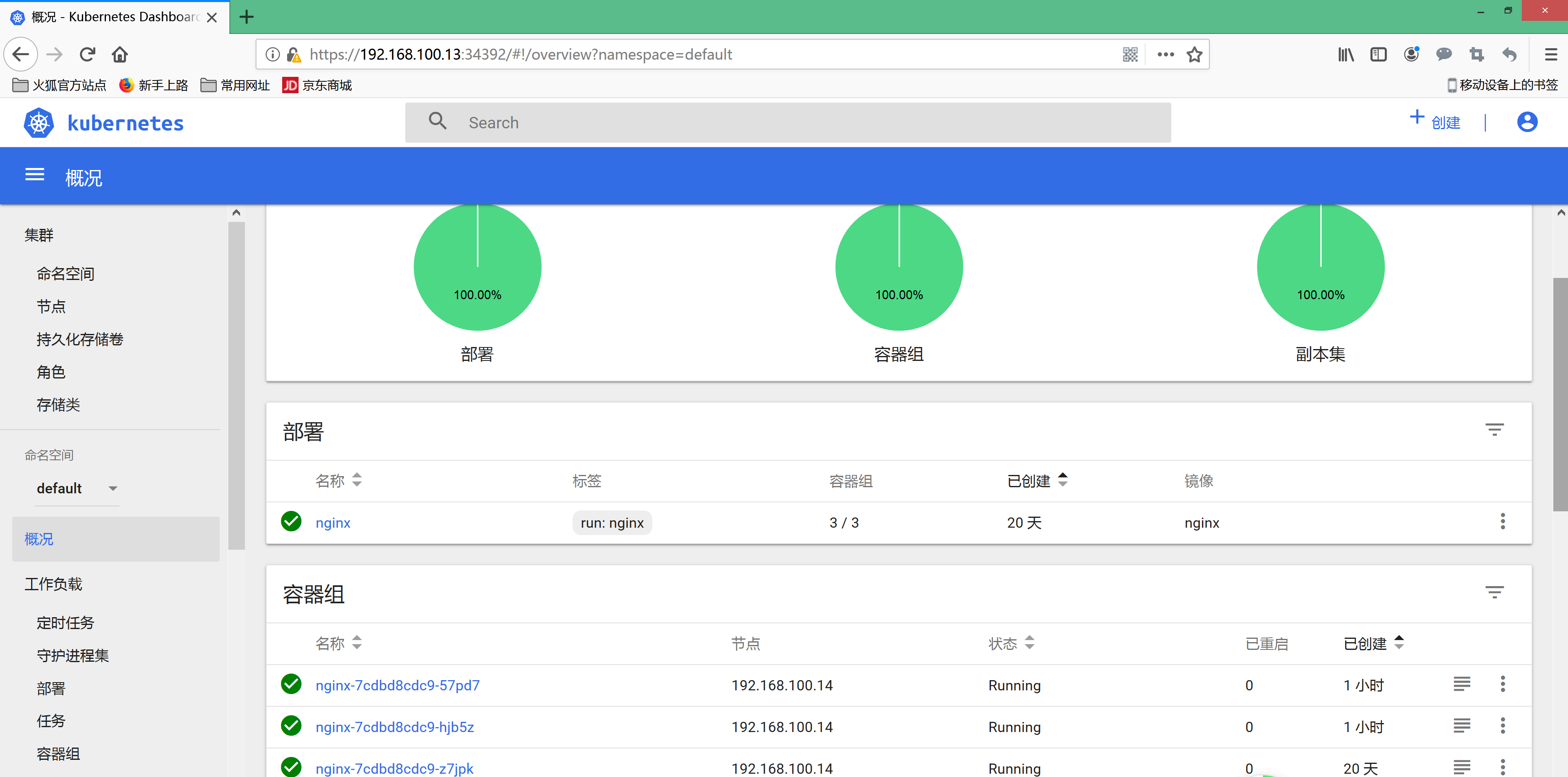

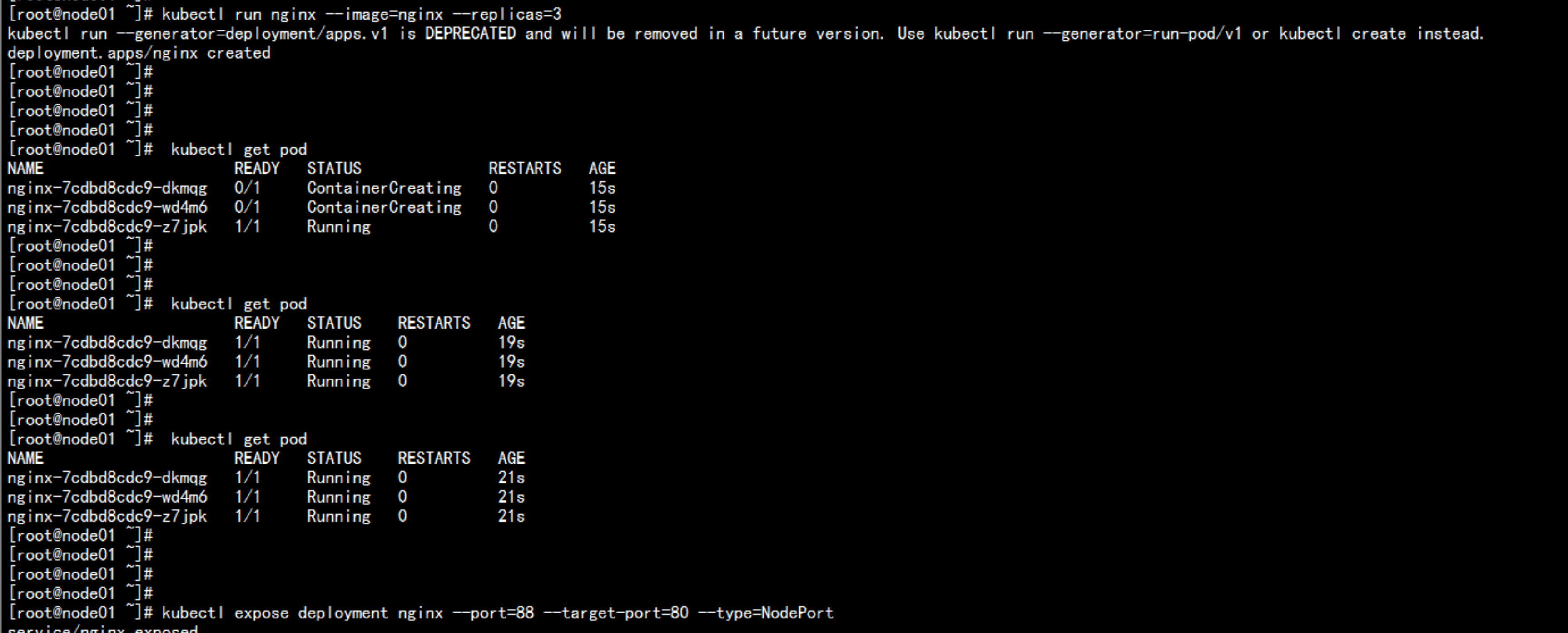

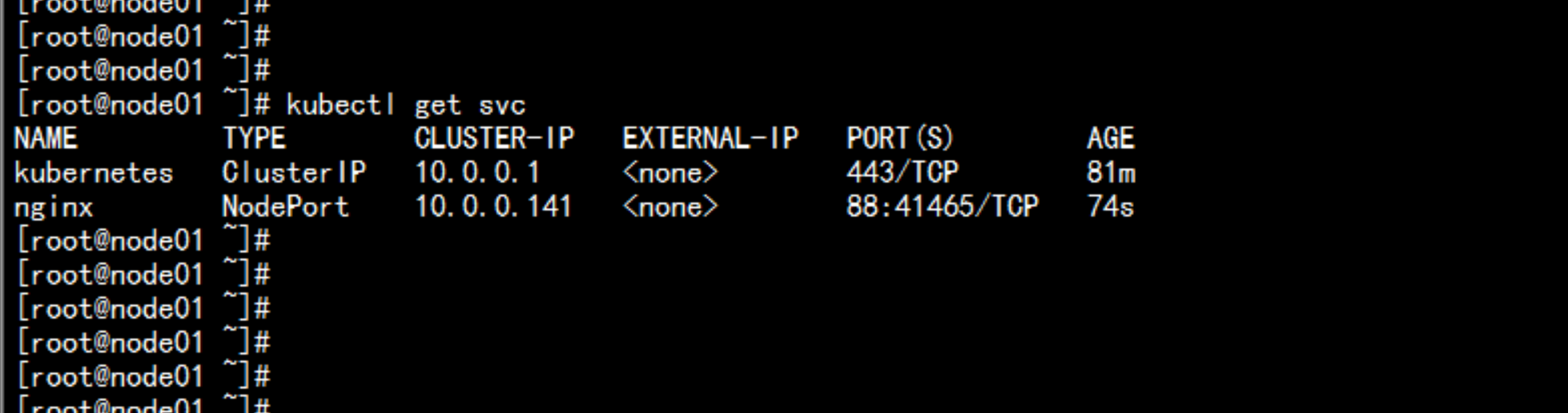

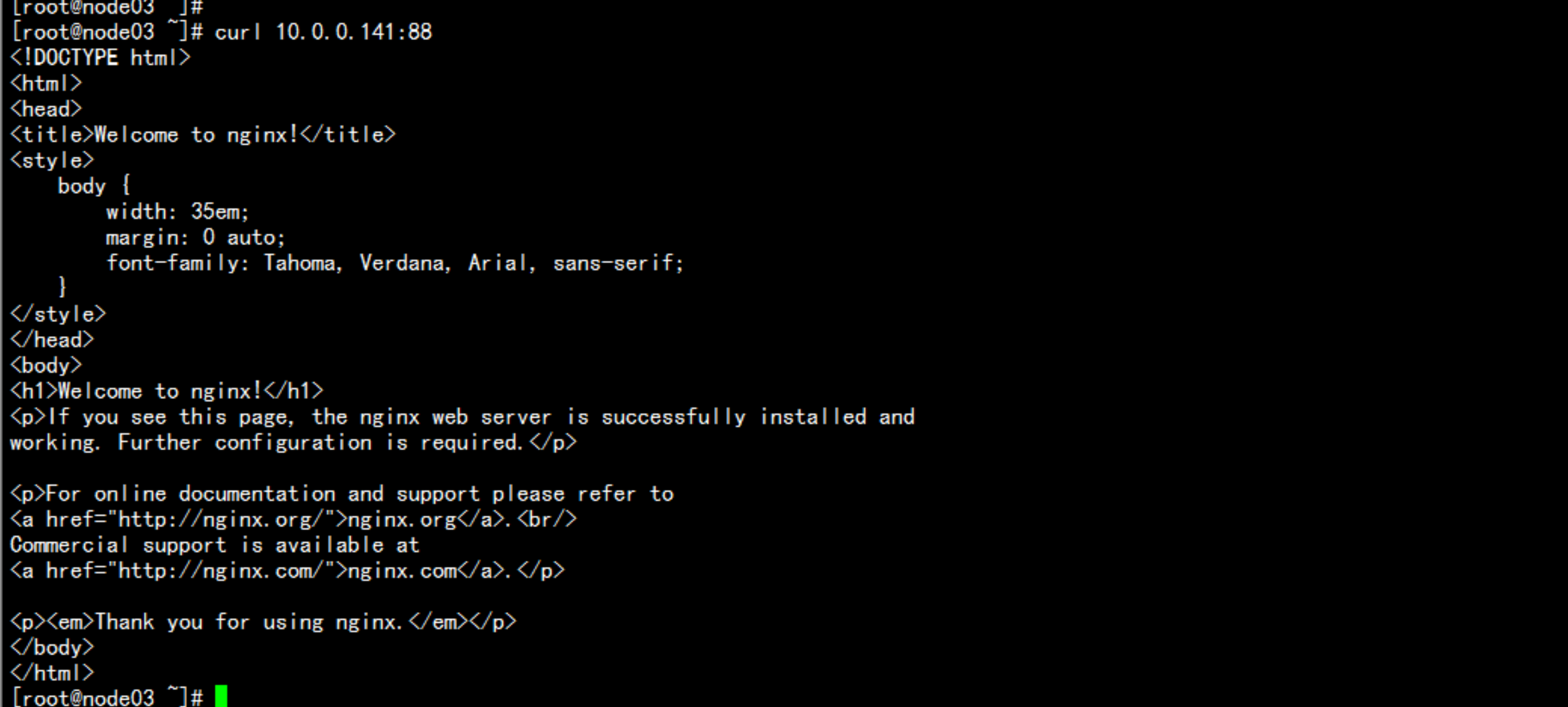

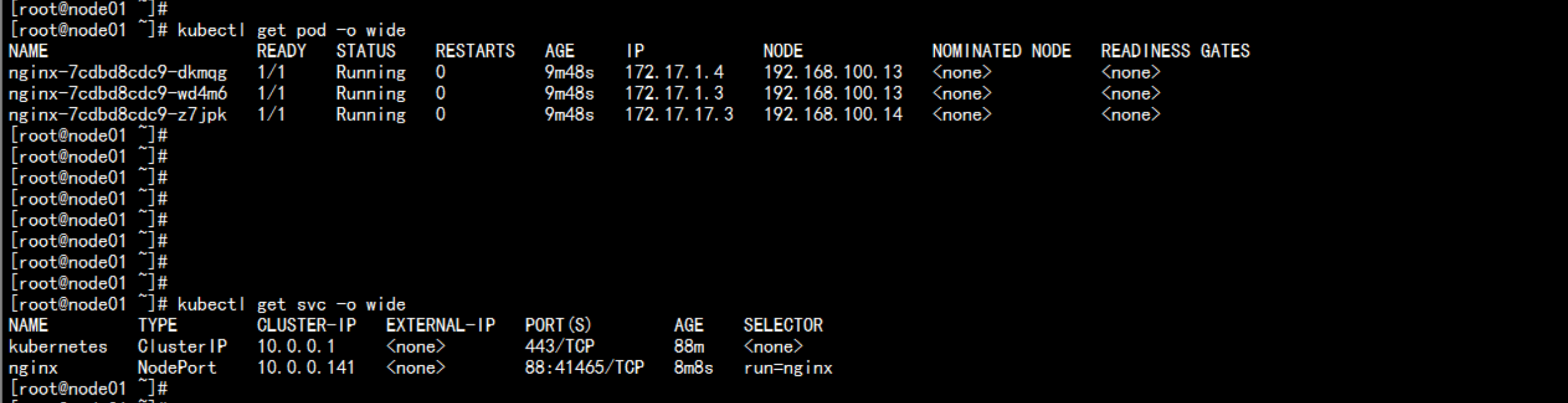

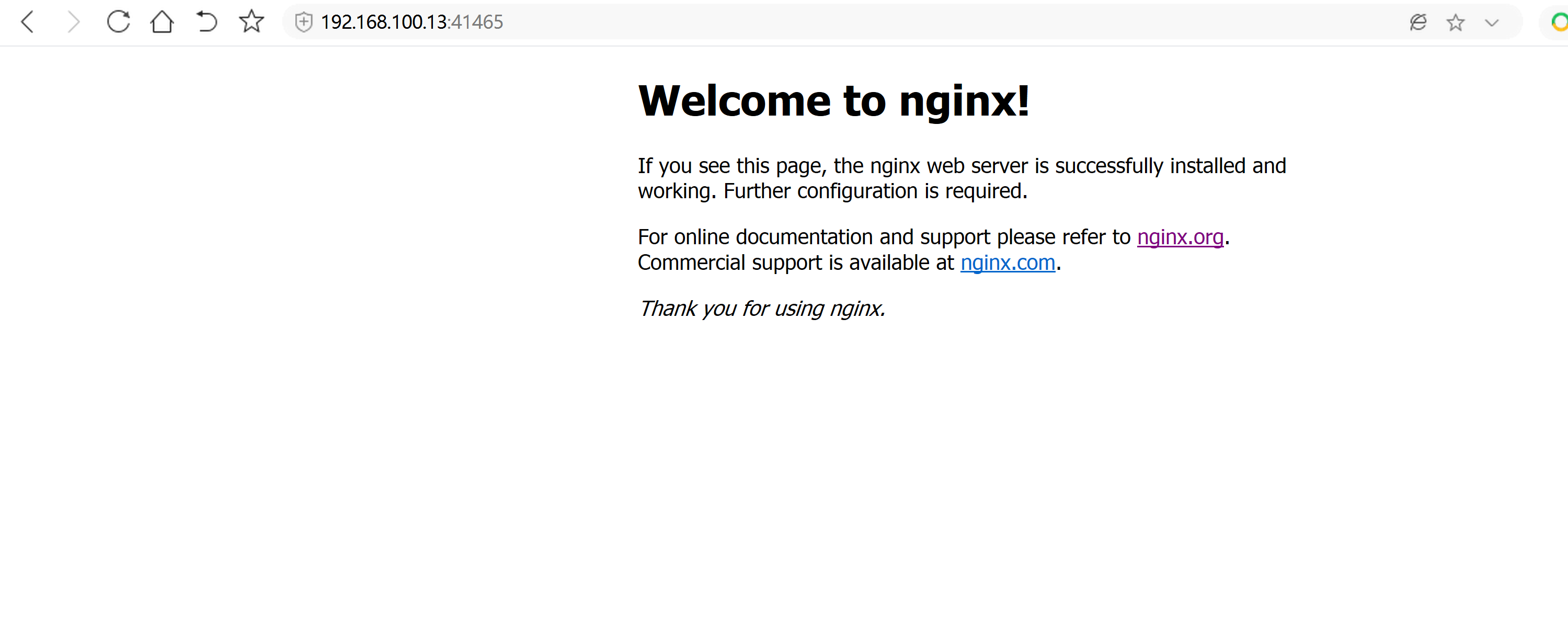

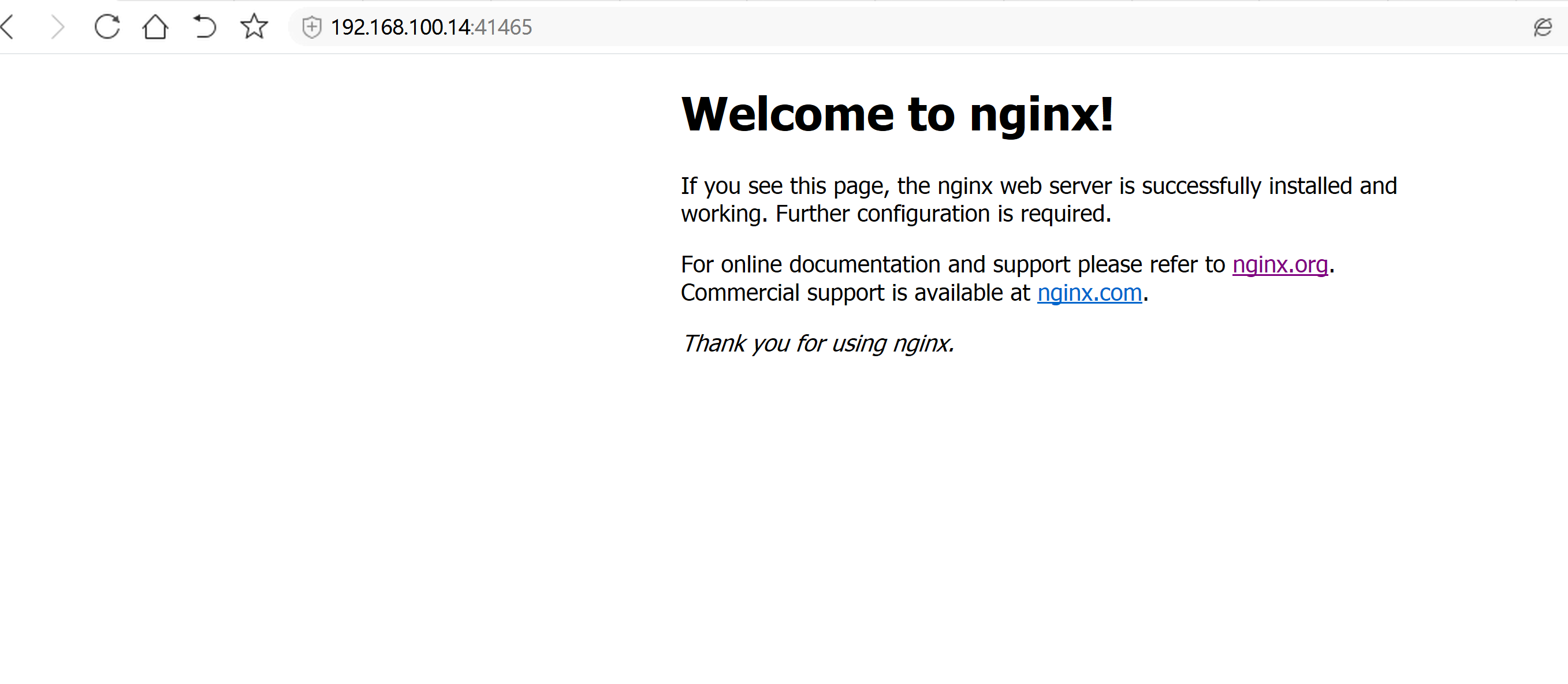

Run an nginx instance as a test:

```bash
kubectl run nginx --image=nginx --replicas=3
kubectl get pod
kubectl expose deployment nginx --port=88 --target-port=80 --type=NodePort
kubectl get svc nginx
```
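To test the Service from outside the cluster, you need the NodePort from the `PORT(S)` column (shown as `88:<nodePort>/TCP`) and a node IP. The sketch below uses two hypothetical helpers, `nodeport_from_ports` and `node_urls`. The port 31234 in the test is illustrative.

```bash
#!/usr/bin/env bash
# Extract the NodePort from a PORT(S) value such as "88:31234/TCP".
nodeport_from_ports() {
  local ports=$1
  ports=${ports#*:}                # "88:31234/TCP" -> "31234/TCP"
  printf '%s\n' "${ports%%/*}"     # "31234/TCP"    -> "31234"
}

# Print one test URL per node IP for the given port.
node_urls() {
  local port=$1 ip
  shift
  for ip in "$@"; do
    printf 'http://%s:%s/\n' "$ip" "$port"
  done
}
```

The port can also be read directly with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`. Then run `for u in $(node_urls "$port" 192.168.100.13 192.168.100.14); do curl -s -o /dev/null -w '%{http_code}\n' "$u"; done`, which should print 200 once per node.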

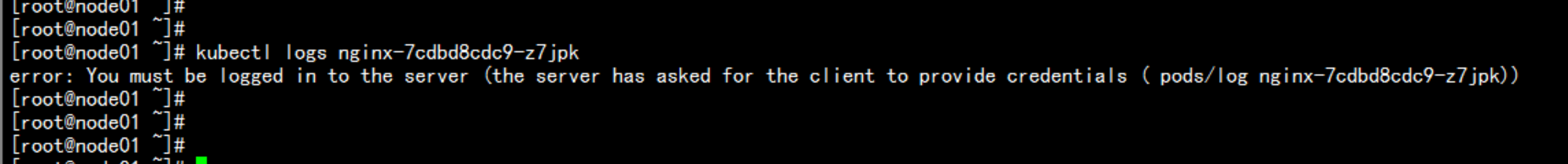

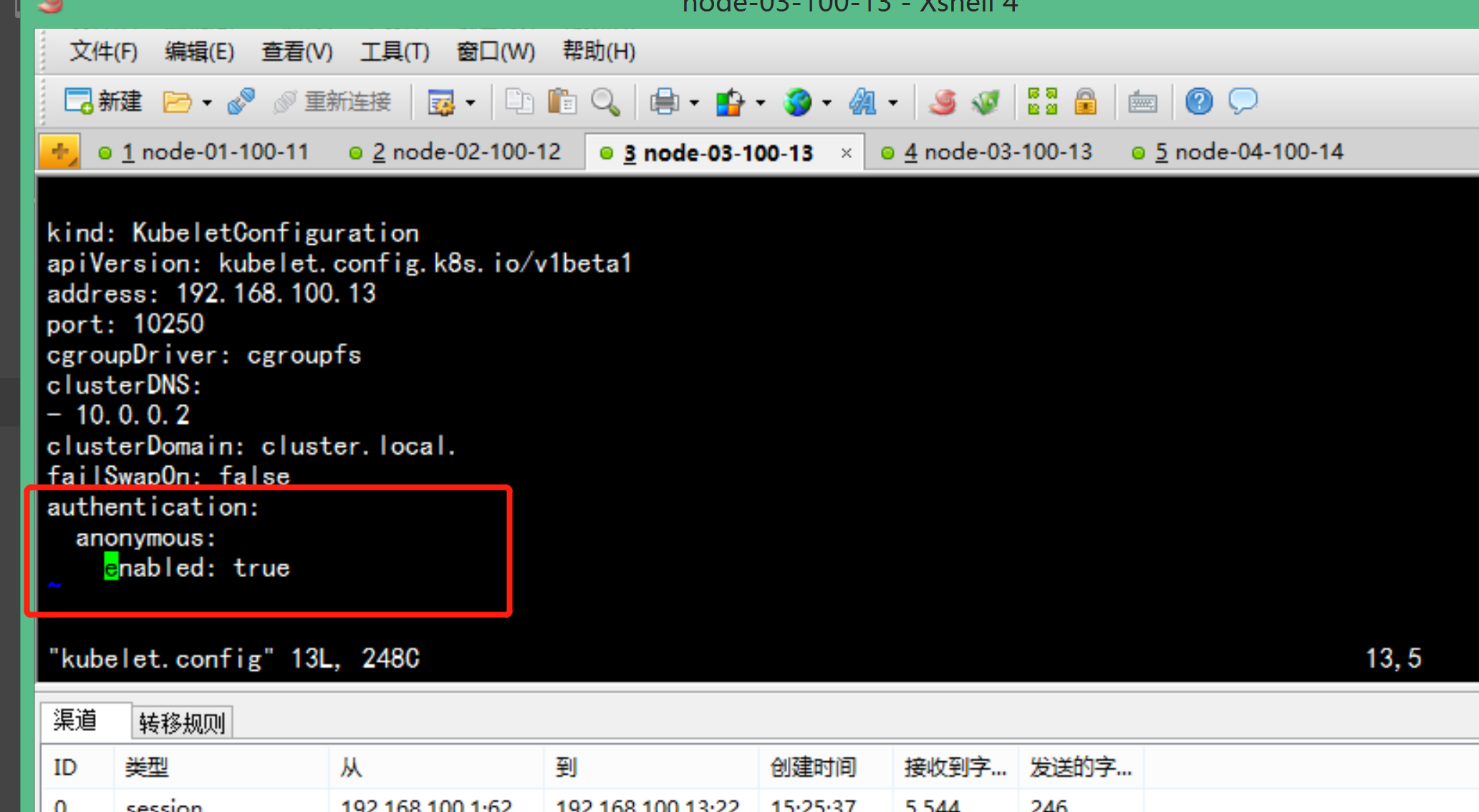

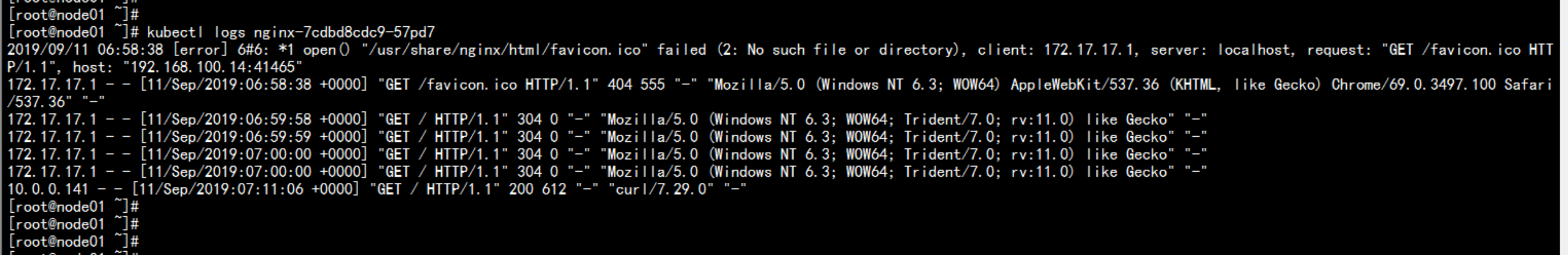

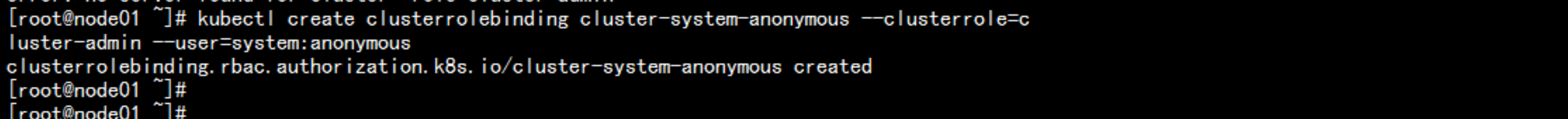

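The master cannot view Pod logs:

```
error: You must be logged in to the server (the server has asked for the client to provide credentials ( pods/log nginx-7cdbd8cdc9-z7jpk))
```

Solution: allow anonymous access to the kubelet. Edit the kubelet config:

```bash
vim /opt/kubernetes/kubelet.config
```

Append at the end:

```yaml
authentication:
  anonymous:
    enabled: true
```

Then restart kubelet on each node, and on the master bind the anonymous user to a cluster role:

```bash
service kubelet restart
kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous
```

This binding gives unauthenticated requests full cluster-admin rights, so it is only suitable for a test environment.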
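For reference, here is a sketch of a minimal KubeletConfiguration file with the `authentication` block at the top level. kubelet.sh's actual output is not shown in this article, so the `address` and `port` fields here are assumptions. In practice, merge the `authentication` block into the existing file instead of replacing it. `write_kubelet_config` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Write a minimal KubeletConfiguration (assumed fields) that enables anonymous authentication.
write_kubelet_config() {
  local node_ip=$1 out=$2
  cat > "$out" <<EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: ${node_ip}
port: 10250
authentication:
  anonymous:
    enabled: true
EOF
}
```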

2.8 Deploying the Kubernetes Web UI

Download link: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dashboard

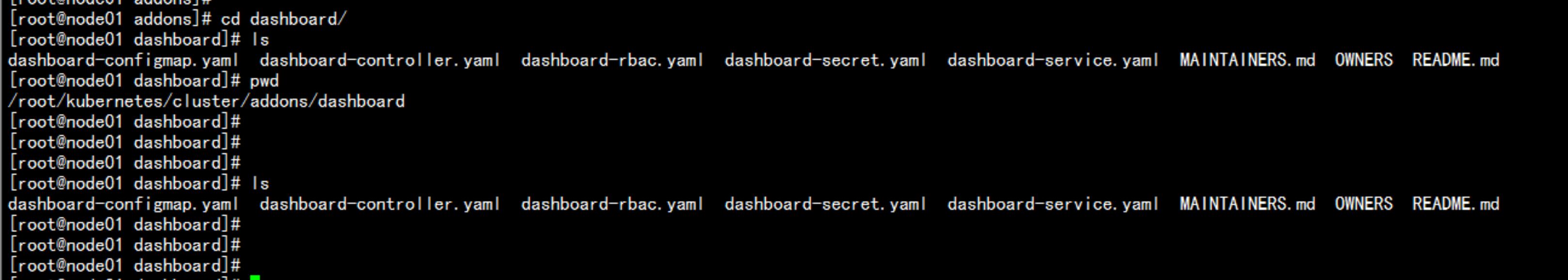

Unpack the Kubernetes source package, which contains the dashboard addon:

```bash
cd /root/kubernetes/
tar -zxvf kubernetes-src.tar.gz
cd /root/kubernetes/cluster/addons/dashboard/
```

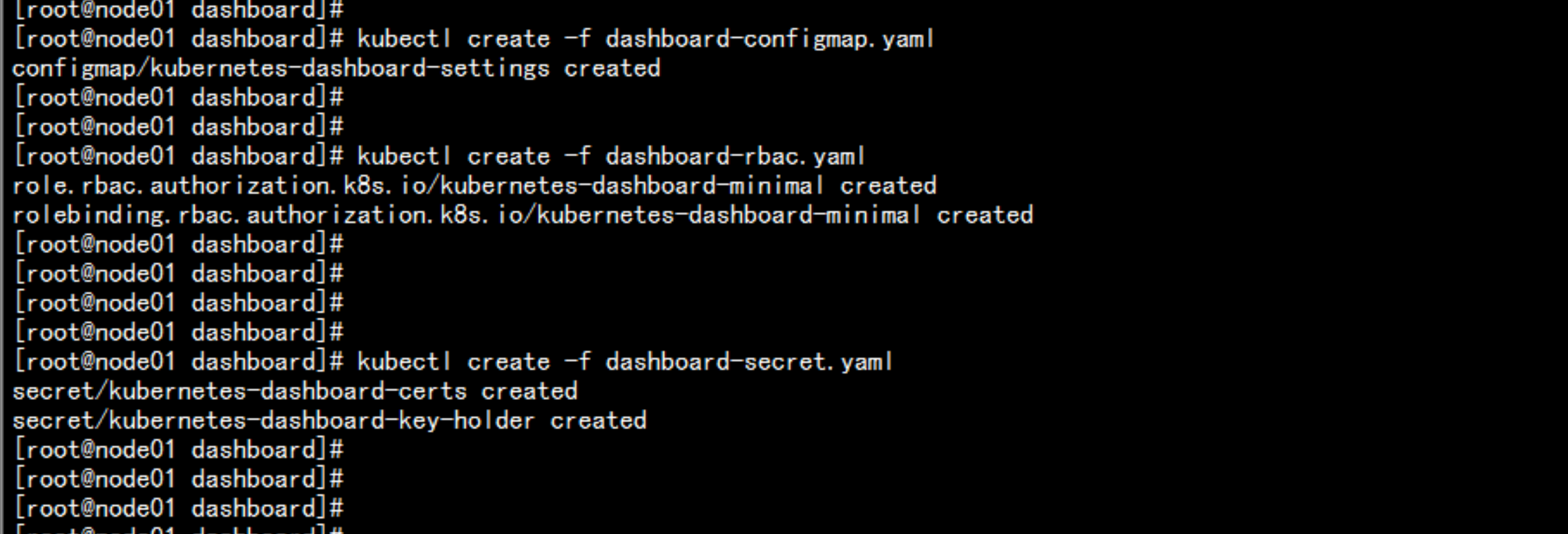

```bash
kubectl create -f dashboard-configmap.yaml
kubectl create -f dashboard-rbac.yaml
kubectl create -f dashboard-secret.yaml
```

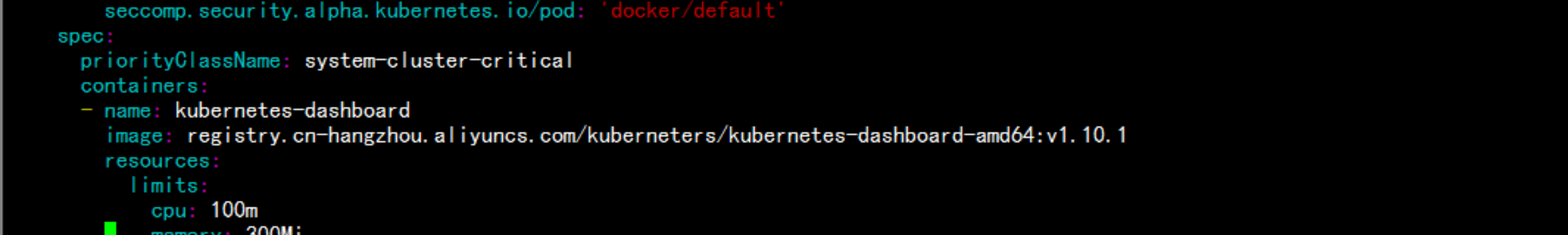

Edit dashboard-controller.yaml and change the image to a reachable mirror:

```bash
vim dashboard-controller.yaml
```

```yaml
image: registry.cn-hangzhou.aliyuncs.com/kuberneters/kubernetes-dashboard-amd64:v1.10.1
```
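The same image change can be made non-interactively with sed. The sketch rewrites any `image:` line that references `kubernetes-dashboard-amd64`, keeps the indentation, and leaves a `.bak` copy of the original file. `set_dashboard_image` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Replace the dashboard image reference in a manifest, keeping indentation; writes FILE.bak.
set_dashboard_image() {
  local file=$1 image=$2
  sed -i.bak "s#^\([[:space:]]*image:[[:space:]]*\).*kubernetes-dashboard-amd64.*#\1${image}#" "$file"
}
```

Usage: `set_dashboard_image dashboard-controller.yaml registry.cn-hangzhou.aliyuncs.com/kuberneters/kubernetes-dashboard-amd64:v1.10.1`.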

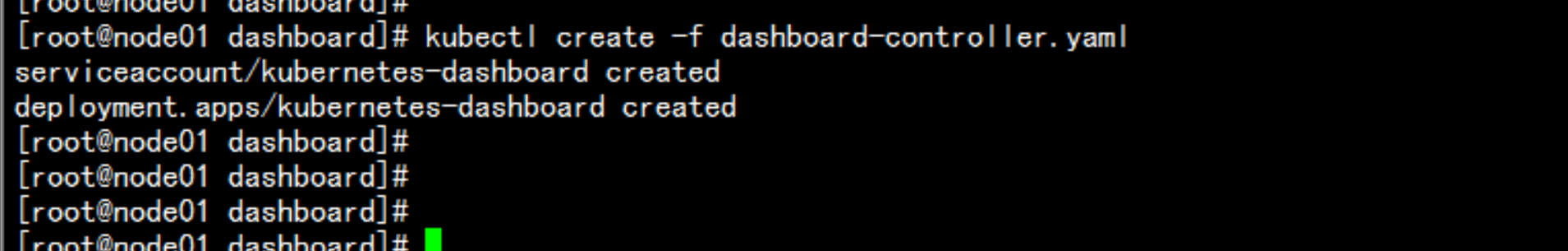

```bash
kubectl create -f dashboard-controller.yaml
```

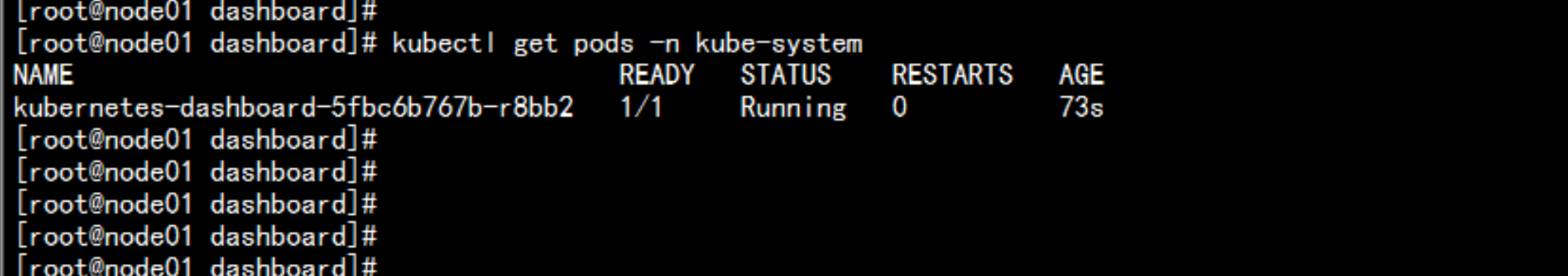

```bash
kubectl get pods -n kube-system
```

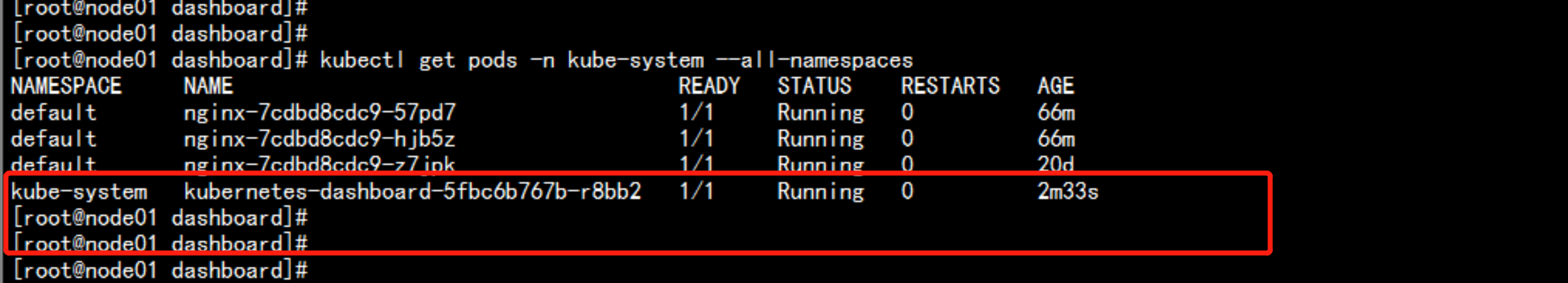

```bash
kubectl get pods --all-namespaces
```

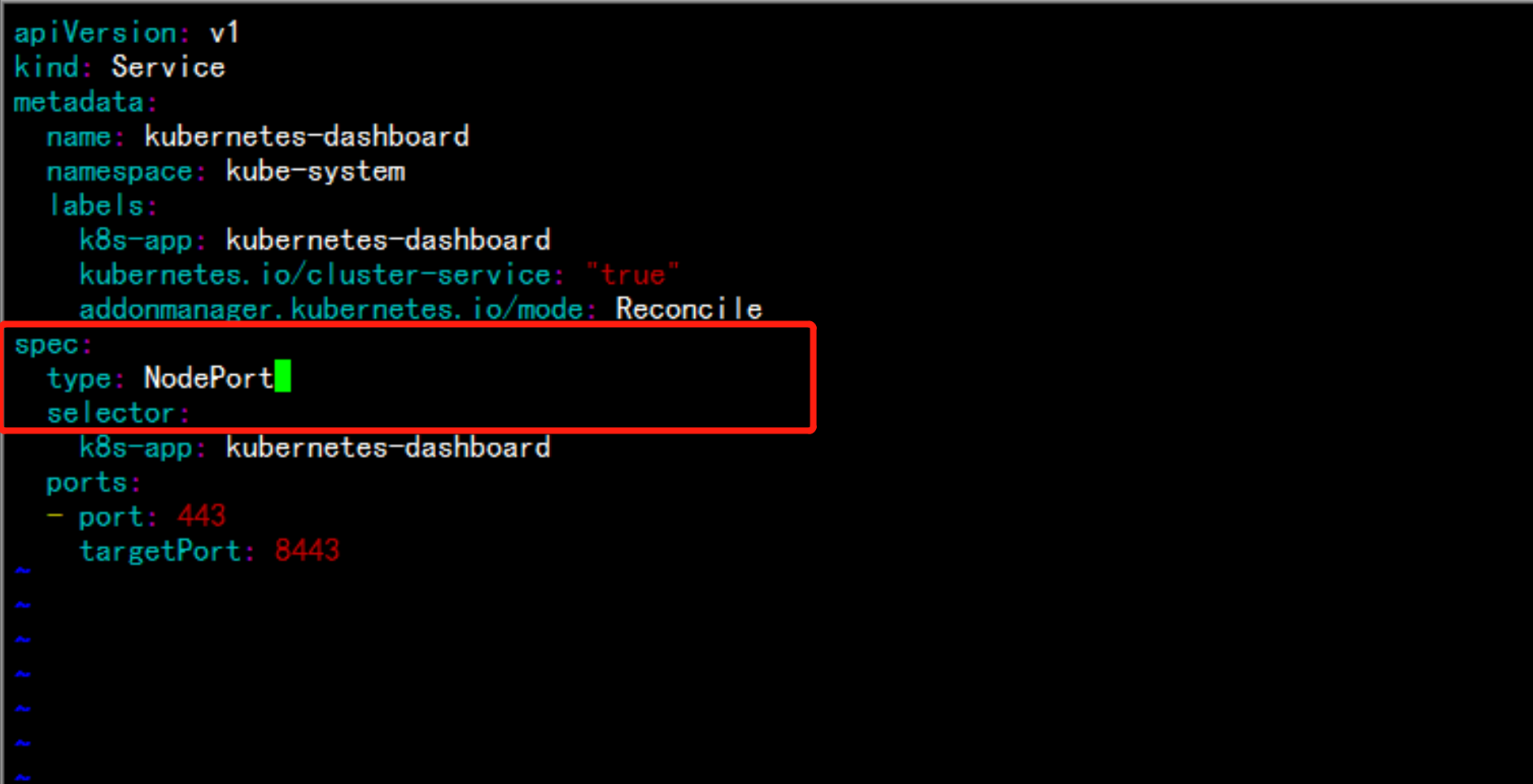

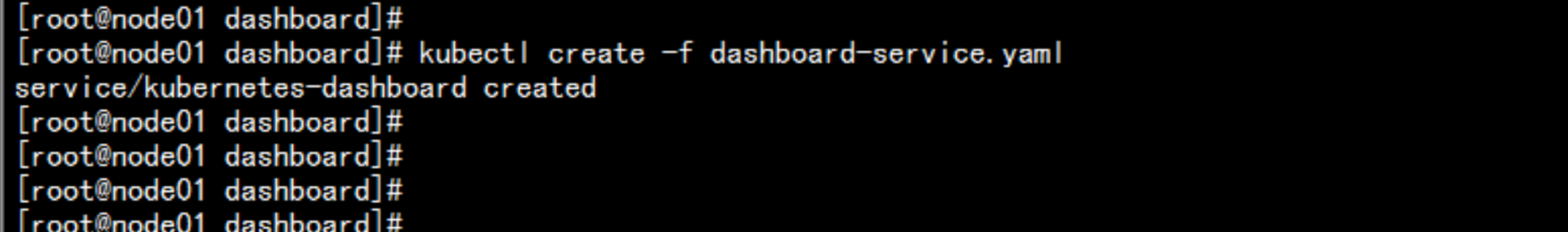

Edit dashboard-service.yaml, add `type: NodePort` under `spec:`, and then create the Service:

```bash
vim dashboard-service.yaml
kubectl create -f dashboard-service.yaml
```

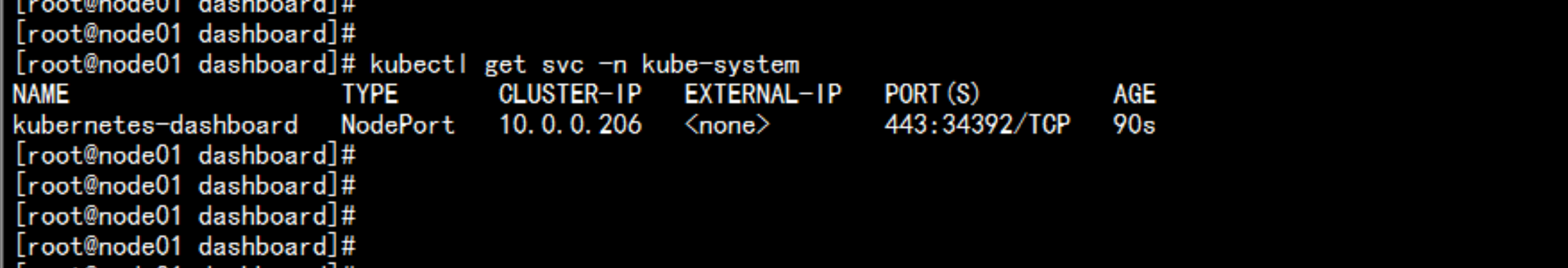

```bash
kubectl get svc -n kube-system
```

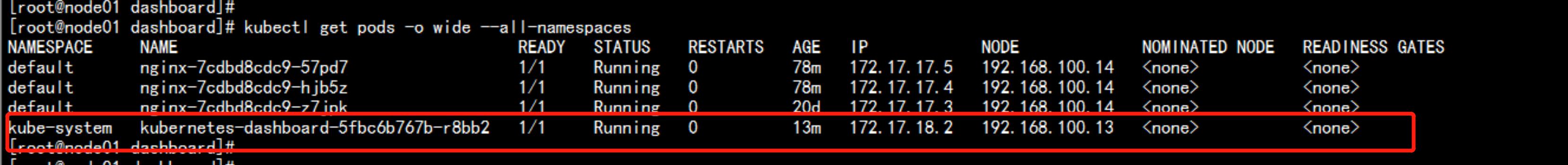

```bash
kubectl get pods -o wide --all-namespaces
```

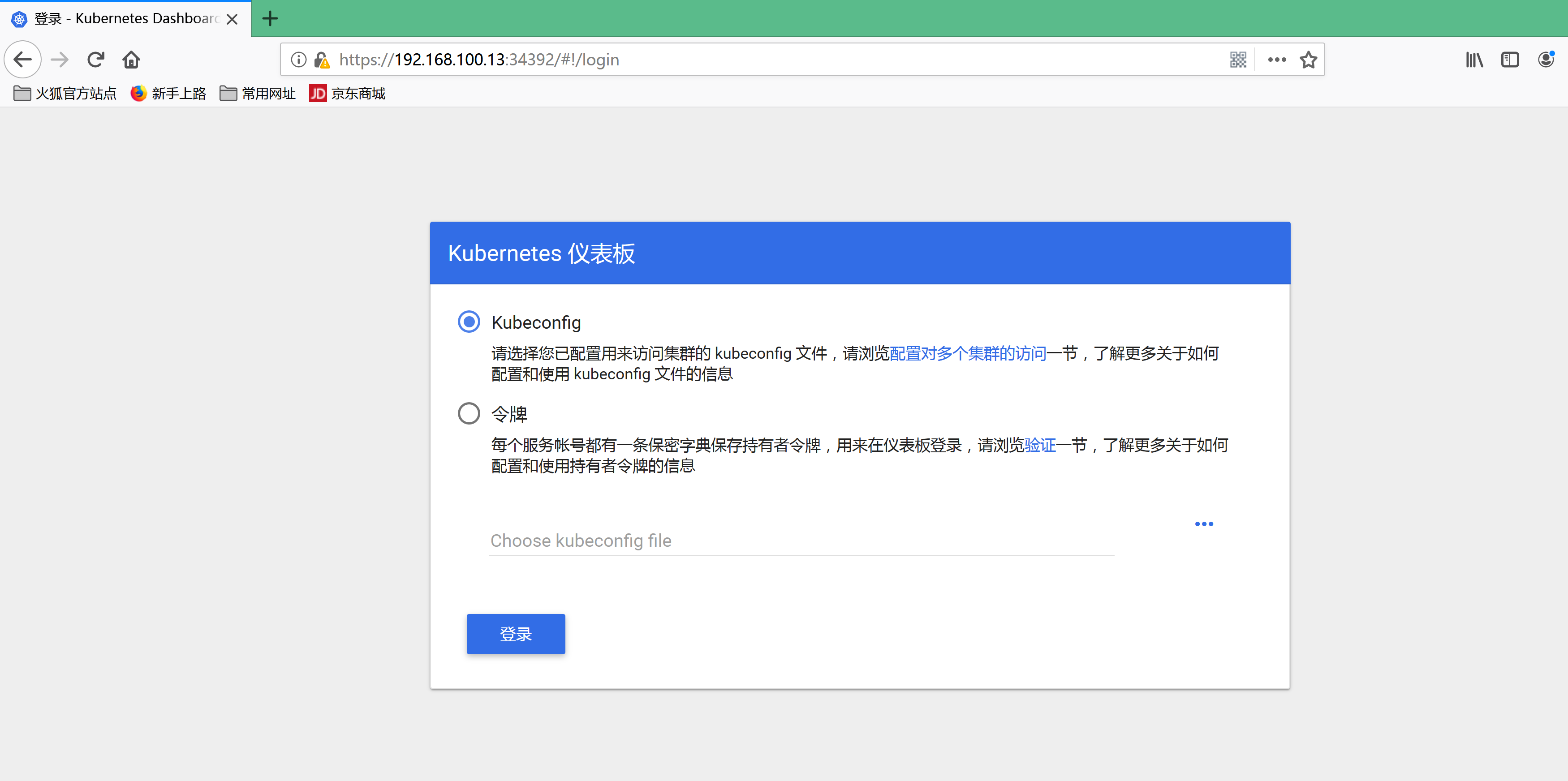

Open a browser (Firefox is used here) and go to https://192.168.100.13:34392. Here 34392 is the NodePort assigned to the dashboard Service.

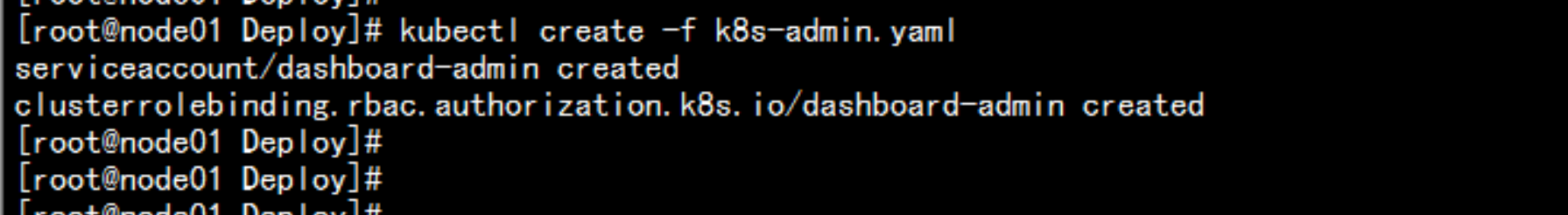

Log in with a k8s-admin token. k8s-admin.yaml:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: dashboard-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
```

```bash
kubectl create -f k8s-admin.yaml
```

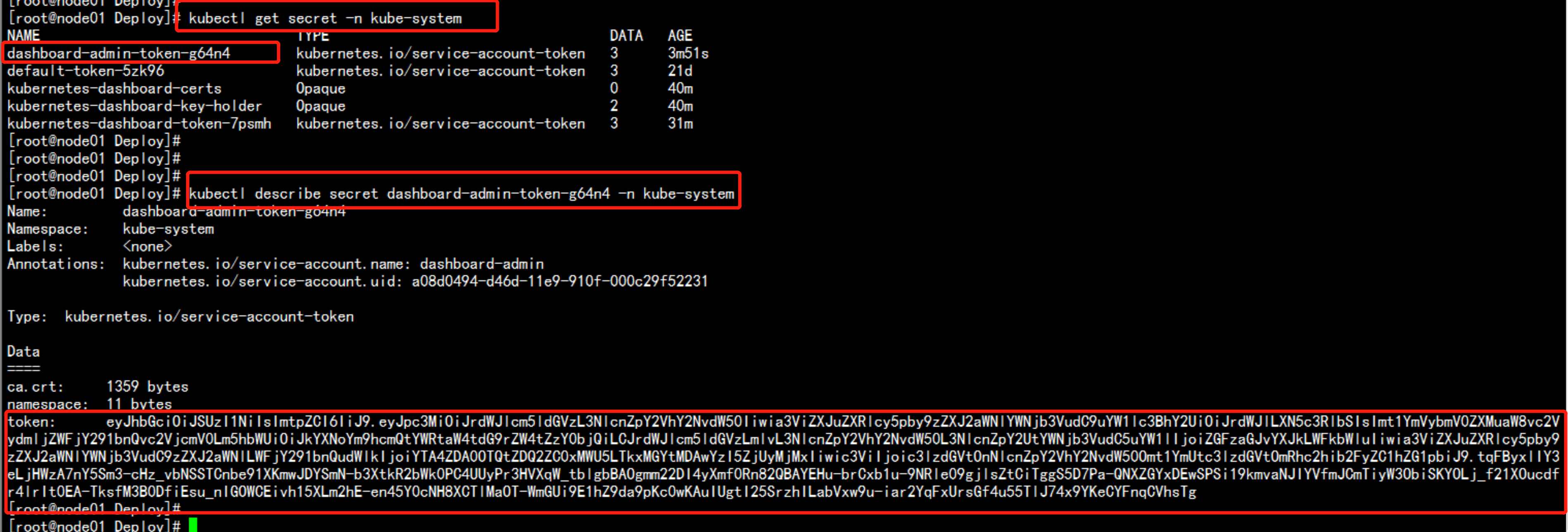

```bash
kubectl get secret -n kube-system
kubectl describe secret dashboard-admin-token-g64n4 -n kube-system
```

The secret name's suffix (`g64n4` here) is generated per cluster, so take it from the `kubectl get secret` output. Copy the token at the bottom of the `describe` output:

```
eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tZzY0bjQiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYTA4ZDA0OTQtZDQ2ZC0xMWU5LTkxMGYtMDAwYzI5ZjUyMjMxIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.tqFByxlIY3eLjHWzA7nY5Sm3-cHz_vbNSSTCnbe91XKmwJDYSmN-b3XtkR2bWk0PC4UUyPr3HVXqW_tblgbBAOgmm22DI4yXmf0Rn82QBAYEHu-brCxb1u-9NRle09gjlsZtCiTggS5D7Pa-QNXZGYxDEwSPSi19kmvaNJIYVfmJCmTiyW3ObiSKYOLj_f21XOucdfr4lrIt0EA-TksfM3B0DfiEsu_nIGOWCEivh15XLm2hE-en45Y0cNH8XCTlMaOT-WmGUi9E1hZ9da9pKc0wKAuIUgtI25SrzhILabVxw9u-iar2YqFxUrsGf4u55TlJ74x9YKeCYFnqCVhsTg
```
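Instead of copying the token out of the `describe` output by hand, you can extract the `token:` field with awk. This sketch assumes the usual `kubectl describe secret` layout, where the field appears as `token:` followed by the value on the same line. `extract_token` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Read `kubectl describe secret` output on stdin and print the value of the token: field.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

Usage: `kubectl -n kube-system describe secret "$(kubectl -n kube-system get secret | awk '/^dashboard-admin-token/{print $1}')" | extract_token`.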