@zhangyy

2021-05-17T01:51:26.000000Z

High-availability deployment of k8s 1.18.18

Kubernetes upgrade series

1: Introduction to k8s high availability

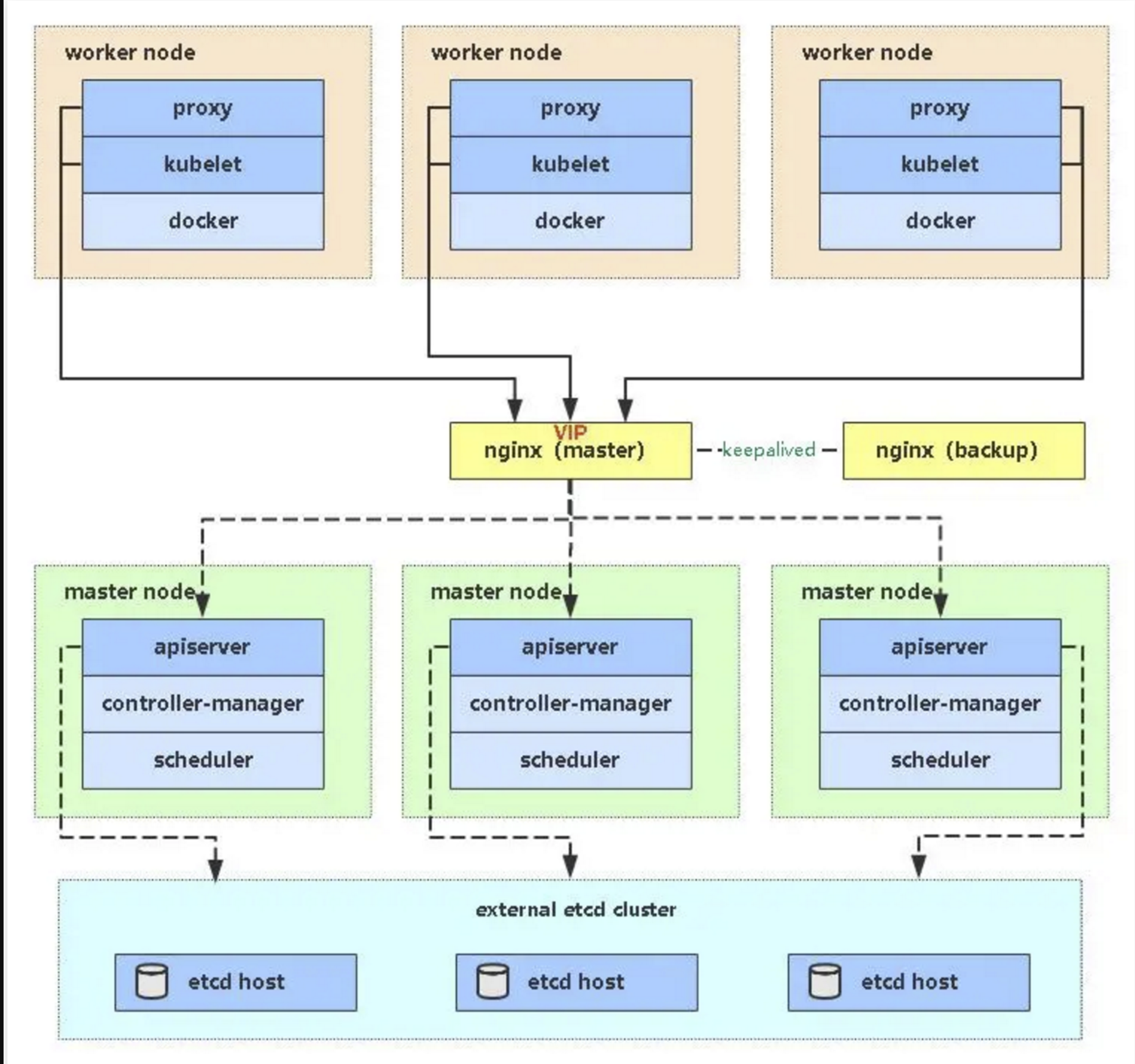

1.1 k8s multi-master architecture

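High-availability architecture (scaling out to multiple Masters)

As a container cluster system, Kubernetes gives Pods self-healing through health checks and restart policies. Its scheduling algorithm spreads Pods across nodes and maintains the expected replica count, and when a Node fails it automatically starts the Pods on other Nodes. Together these provide high availability at the application layer.

For the Kubernetes cluster itself, high availability has to cover two further layers: the etcd database and the Kubernetes Master components. etcd is already highly available because we built it as a 3-node cluster, so this section explains and implements high availability for the Master nodes.

The Master node acts as the control center: it maintains the healthy working state of the whole cluster by continuously communicating with the kubelet on the worker nodes. If the Master node fails, no cluster management is possible with kubectl or the API.

The Master node runs three main services: kube-apiserver, kube-controller-manager and kube-scheduler. kube-controller-manager and kube-scheduler already achieve high availability on their own through a leader-election mechanism, so Master high availability is mainly about kube-apiserver. That component serves an HTTP API, so making it highly available is similar to doing so for a web server: put a load balancer in front of it, and it can then be scaled out horizontally.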
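To observe the leader election described above, one can ask the cluster which instance currently holds the lock. This is a minimal sketch: it assumes the components also record their lock in a Lease object in the `kube-system` namespace named after the component (which should be the case with the default resource lock in 1.18, but check your own flags), and `leader_of` is a hypothetical helper name, not something from this deployment.

```shell
#!/bin/sh
# Hypothetical helper: print the identity of the instance that currently
# holds the leader-election lock for a control-plane component.
# Assumption: the lock is recorded as a Lease object in kube-system.
leader_of() {
    kubectl -n kube-system get lease "$1" -o jsonpath='{.spec.holderIdentity}'
}

# Usage (on any master with a working kubectl):
#   leader_of kube-controller-manager
#   leader_of kube-scheduler
```

Stopping the service on the current leader and running the helper again should show the lock moving to another Master after the lease expires.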

1.2 k8s multi-master architecture

2: Deployment steps

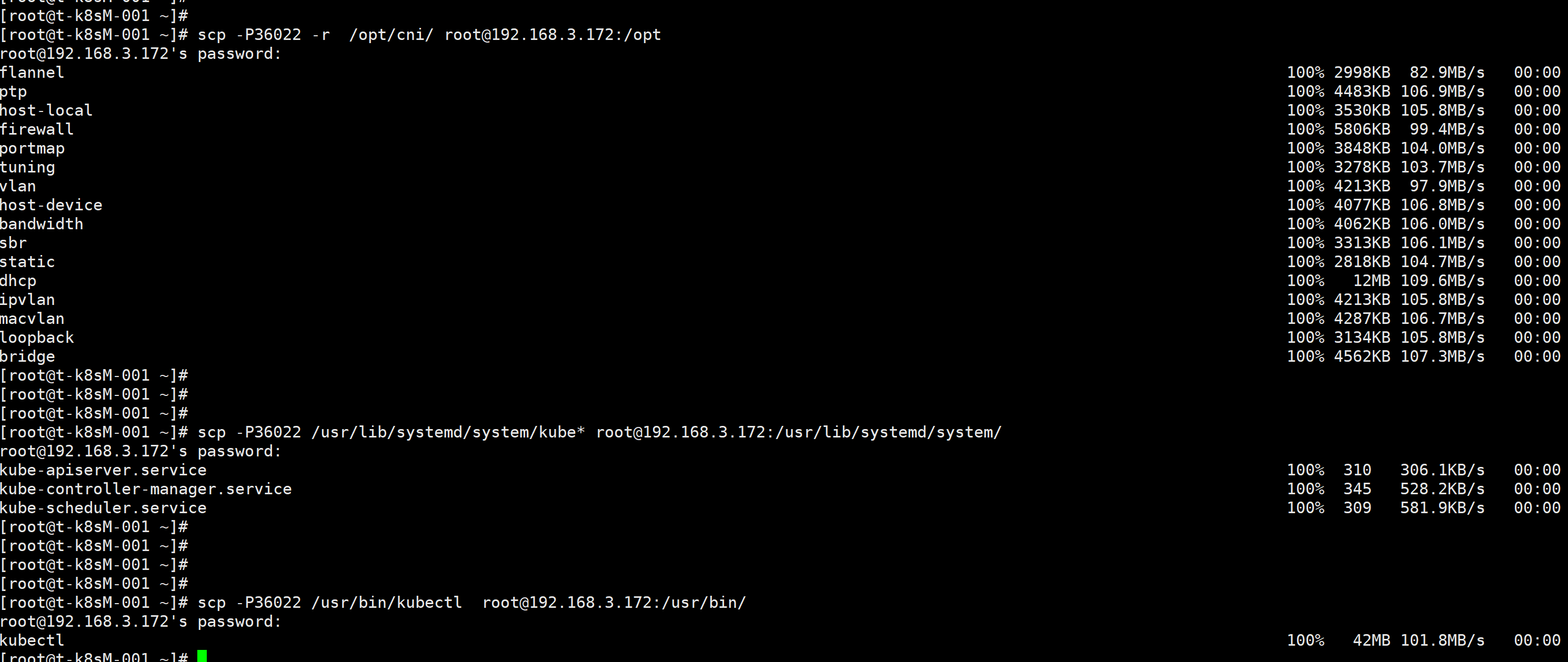

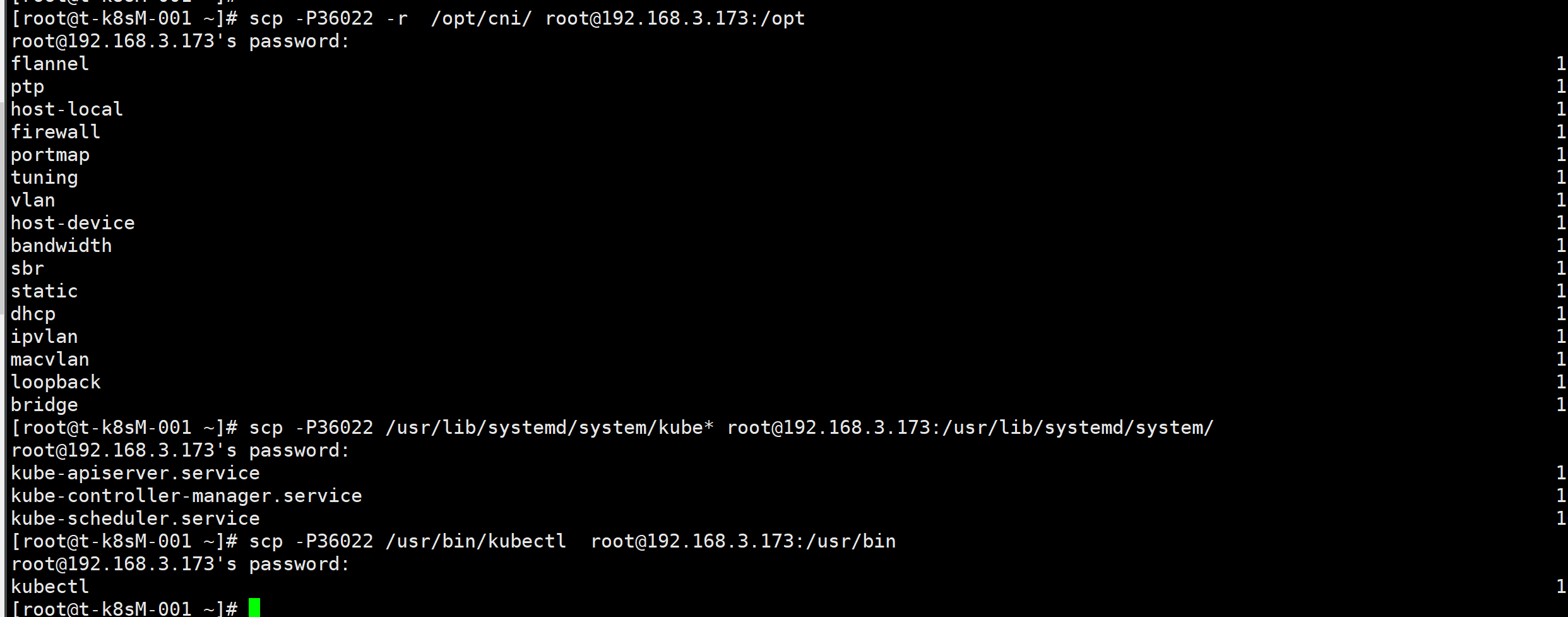

Continuing from the previous article: 2. Copy files (run on Master1)

Copy all K8s files and the etcd certificates from Master1 to Master2:

    scp -P36022 -r /data/application/kubernetes root@192.168.3.172:/data/application/
    scp -P36022 -r /opt/cni/ root@192.168.3.172:/opt
    scp -P36022 /usr/lib/systemd/system/kube* root@192.168.3.172:/usr/lib/systemd/system
    scp -P36022 /usr/bin/kubectl root@192.168.3.172:/usr/bin
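Because the same four copies are repeated for every additional Master, it can help to generate them instead of retyping them. The sketch below only prints the commands (a dry run); `print_copy_cmds` is a hypothetical helper, while the SSH port and paths are the ones used in this article.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print the scp commands for one target master.
# The SSH port (36022) and the paths are the ones used in this article.
print_copy_cmds() {
    target="$1"
    echo "scp -P36022 -r /data/application/kubernetes root@${target}:/data/application/"
    echo "scp -P36022 -r /opt/cni/ root@${target}:/opt"
    echo "scp -P36022 /usr/lib/systemd/system/kube* root@${target}:/usr/lib/systemd/system"
    echo "scp -P36022 /usr/bin/kubectl root@${target}:/usr/bin"
}

# Print the commands for both additional masters; review them before running.
for ip in 192.168.3.172 192.168.3.173; do
    print_copy_cmds "$ip"
done
```

After reviewing the output on Master1, the printed lines can be pasted into the shell or piped to `sh`.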

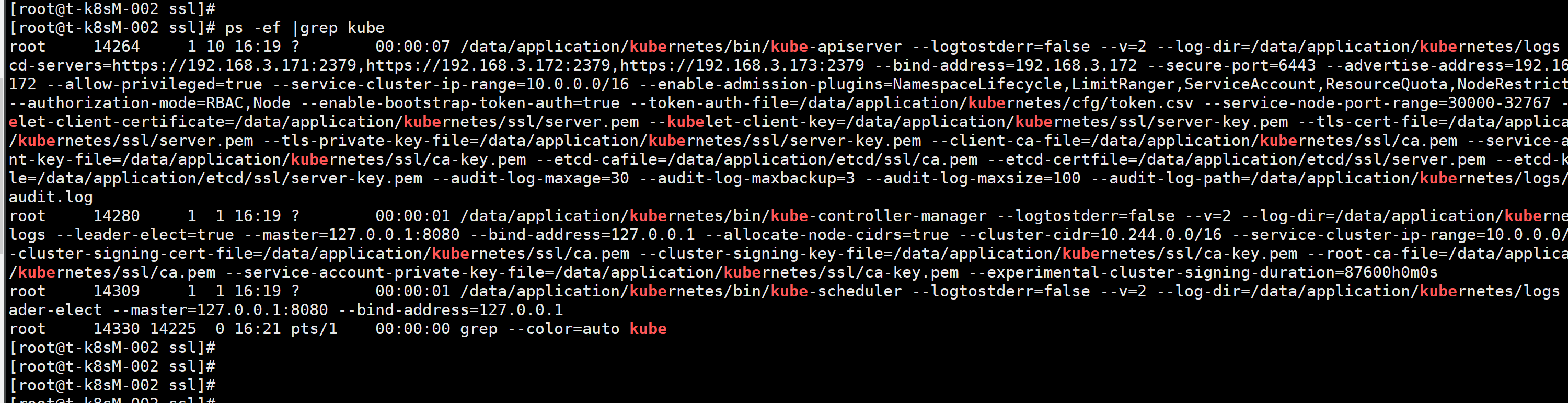

Change the IP and hostname in the configuration files

Change the apiserver, kubelet and kube-proxy configuration files to use the local IP:

    vim /data/application/kubernetes/cfg/kube-apiserver.conf
    ...
    --bind-address=192.168.3.172 \
    --advertise-address=192.168.3.172 \
    ...
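The two apiserver flags can also be changed non-interactively rather than in vim. This is a minimal sketch that covers only `--bind-address` and `--advertise-address` in `kube-apiserver.conf`; the kubelet and kube-proxy files still need their own edits. `set_apiserver_ip` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: rewrite --bind-address and --advertise-address in a
# kube-apiserver.conf-style file to the given IP. Other flags are untouched.
set_apiserver_ip() {
    conf="$1"
    ip="$2"
    tmp="${conf}.tmp"
    # Write to a temp file, then copy the content back so the original
    # file keeps its owner and permissions.
    sed -E "s#(--(bind|advertise)-address=)[0-9.]+#\1${ip}#" "$conf" > "$tmp" \
        && cat "$tmp" > "$conf" \
        && rm -f "$tmp"
}

# Usage (on Master2):
#   set_apiserver_ip /data/application/kubernetes/cfg/kube-apiserver.conf 192.168.3.172
```

Because the pattern is anchored on the flag names, other addresses in the file (for example the etcd endpoints) are left alone.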

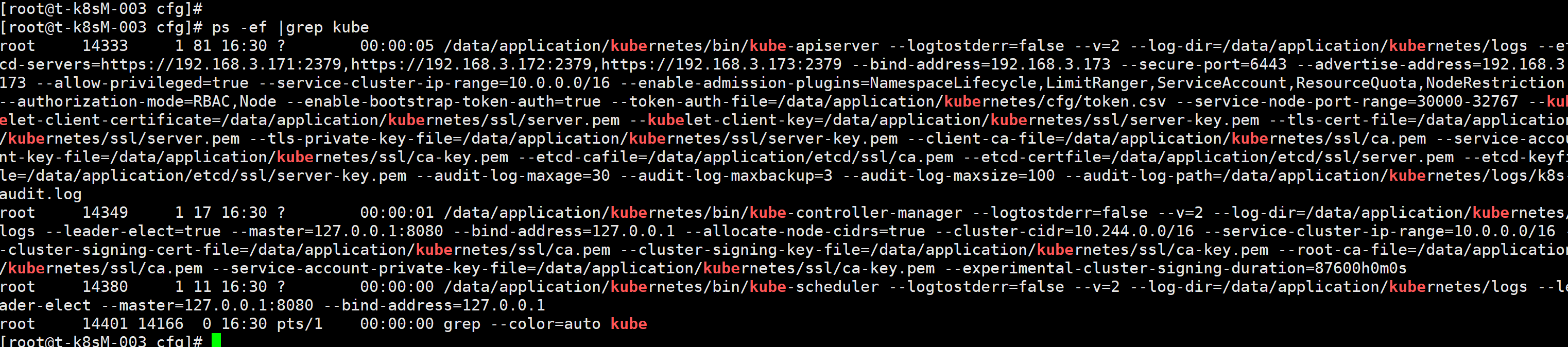

Start the services:

    systemctl daemon-reload
    systemctl start kube-apiserver
    systemctl start kube-controller-manager
    systemctl start kube-scheduler

Repeat the configuration above on master3:

    scp -P36022 -r /data/application/kubernetes root@192.168.3.173:/data/application/
    scp -P36022 -r /opt/cni/ root@192.168.3.173:/opt
    scp -P36022 /usr/lib/systemd/system/kube* root@192.168.3.173:/usr/lib/systemd/system
    scp -P36022 /usr/bin/kubectl root@192.168.3.173:/usr/bin

    vim /data/application/kubernetes/cfg/kube-apiserver.conf
    ...
    --bind-address=192.168.3.173 \
    --advertise-address=192.168.3.173 \
    ...

Start the services:

    systemctl daemon-reload
    systemctl start kube-apiserver
    systemctl start kube-controller-manager
    systemctl start kube-scheduler
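After starting the services on each new Master, a quick status check helps catch a unit that failed to come up. The sketch below relies on `systemctl is-active`, which prints the unit state and exits non-zero when the unit is not active; `check_control_plane` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: report whether each control-plane unit is active.
# Returns non-zero if any unit is not active.
check_control_plane() {
    rc=0
    for unit in kube-apiserver kube-controller-manager kube-scheduler; do
        state="$(systemctl is-active "$unit" 2>/dev/null)" || rc=1
        echo "${unit}: ${state:-unknown}"
    done
    return "$rc"
}

# Usage (on Master2 and Master3):
#   check_control_plane || echo "at least one unit is not running"
```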

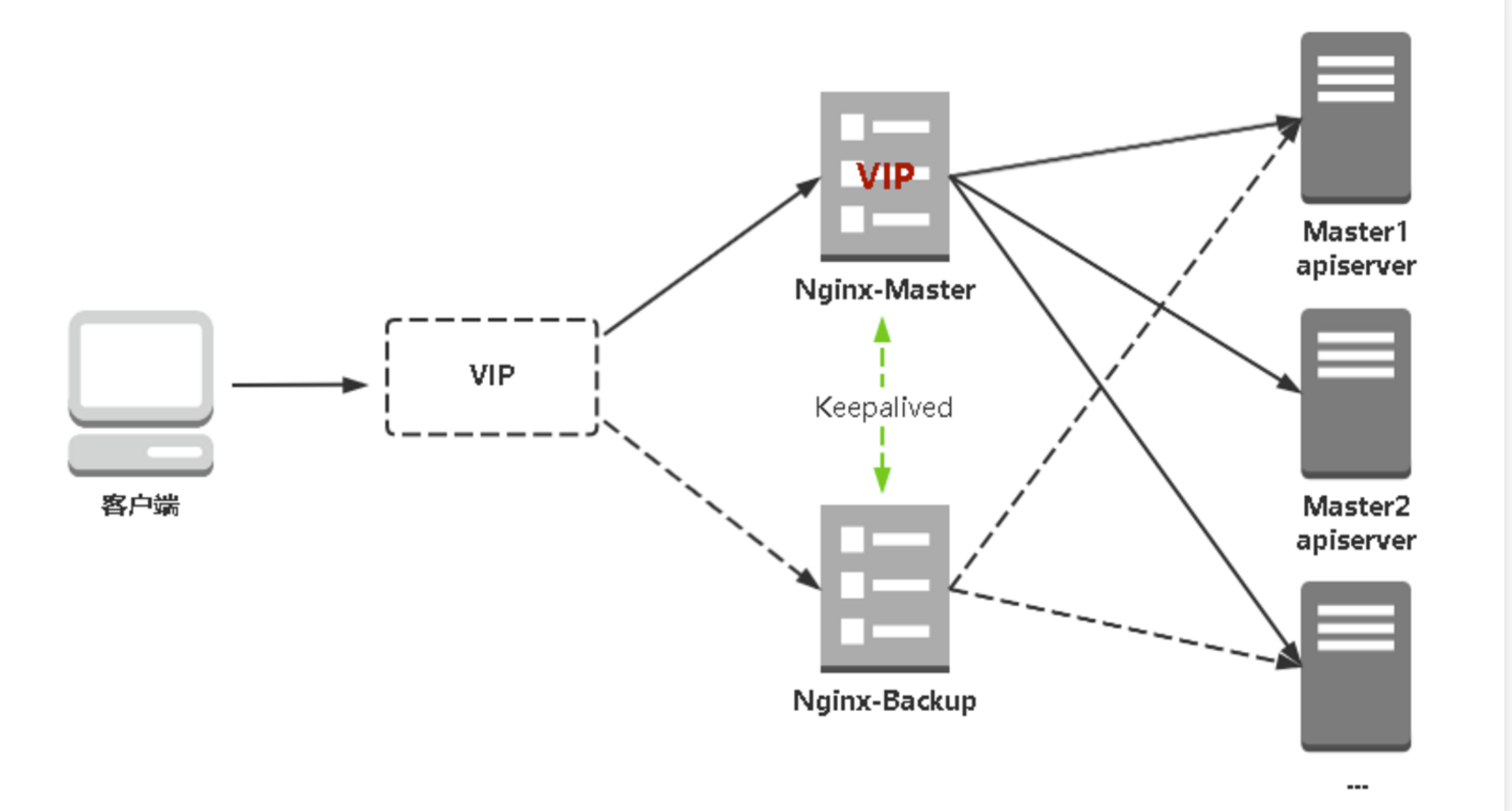

3: Deploy the nginx load balancer

kube-apiserver high-availability architecture diagram:

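Only a single nginx load balancer is set up here. The nginx server's IP address is 192.168.3.201, and keepalived is not configured, so the load balancer itself remains a single point of failure.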

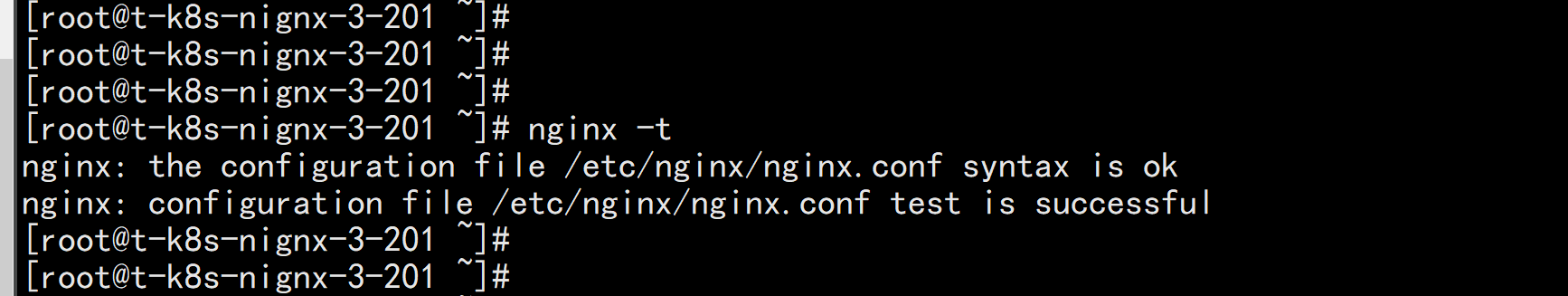

    cat > /etc/nginx/nginx.conf << "EOF"
    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;

    include /usr/share/nginx/modules/*.conf;

    events {
        worker_connections 1024;
    }

    # Layer-4 load balancing for the kube-apiserver on the three Masters
    stream {

        log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

        access_log /var/log/nginx/k8s-access.log main;

        upstream k8s-apiserver {
            server 192.168.3.171:6443;   # Master1 APISERVER IP:PORT
            server 192.168.3.172:6443;   # Master2 APISERVER IP:PORT
            server 192.168.3.173:6443;   # Master3 APISERVER IP:PORT
        }

        server {
            listen 6443;
            proxy_pass k8s-apiserver;
        }
    }

    http {
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';

        access_log /var/log/nginx/access.log main;

        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 2048;

        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        server {
            listen 80 default_server;
            server_name _;

            location / {
            }
        }
    }
    EOF

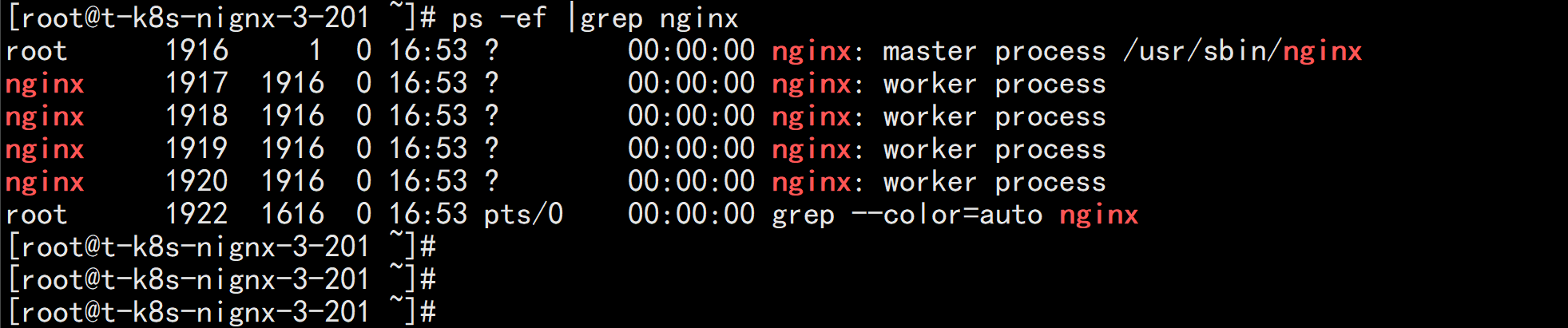

Start nginx:

    nginx -t
    service nginx start

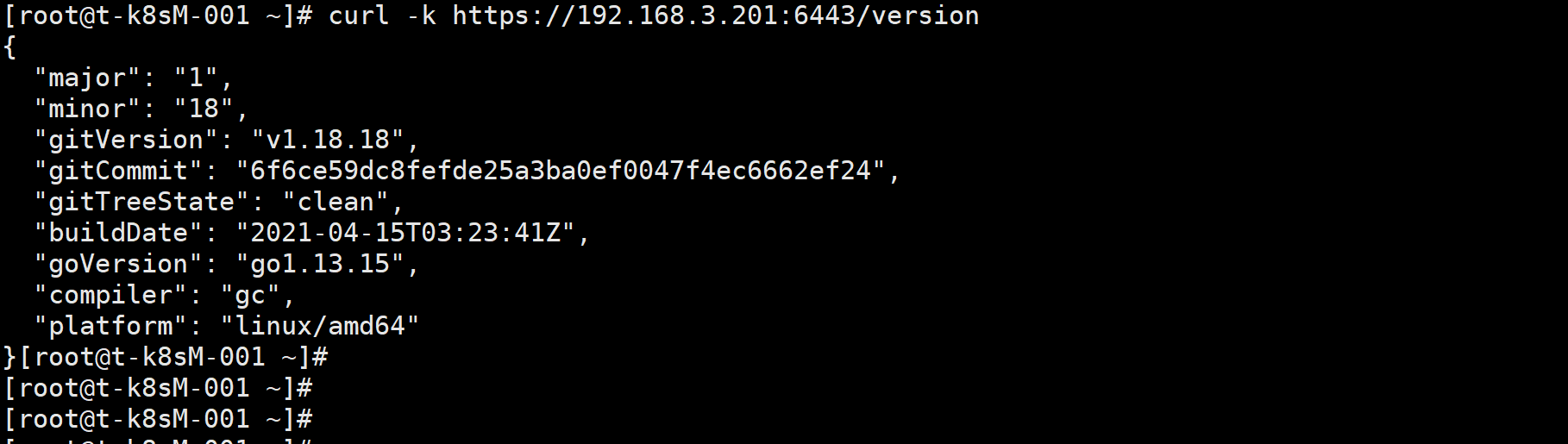

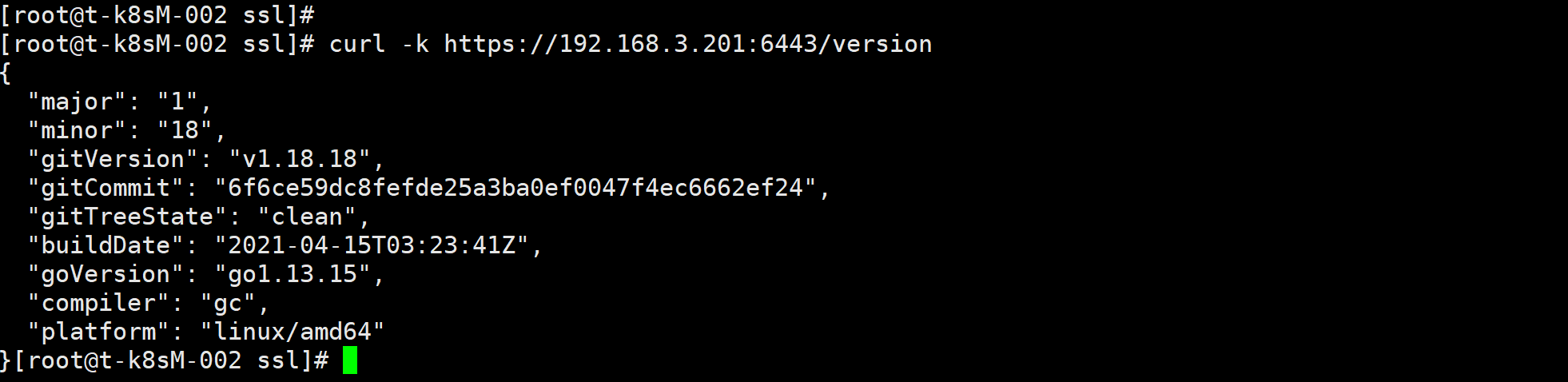

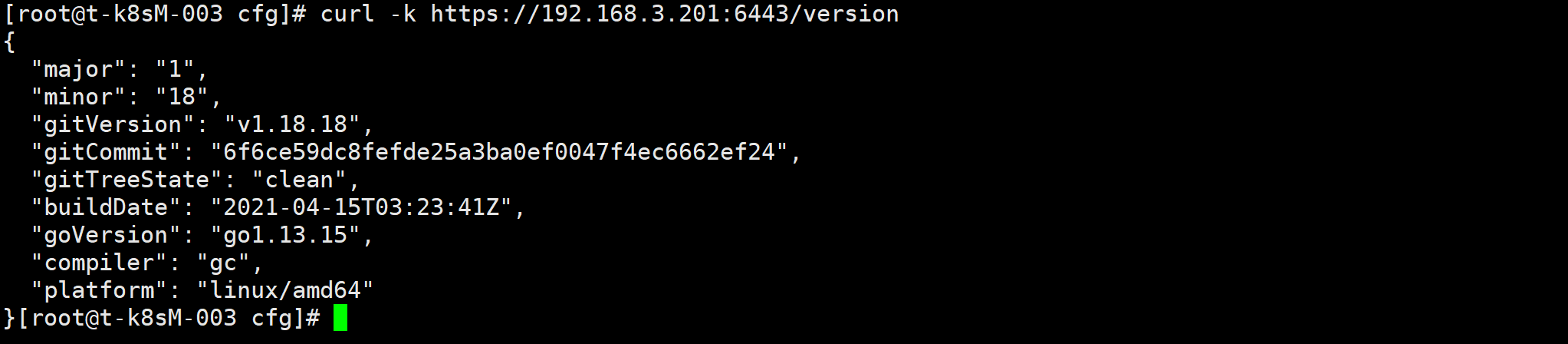

Verify from any k8s master node:

    curl -k https://192.168.3.201:6443/version
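To confirm that requests are actually spread across the Masters, the stream access log on the nginx host can be summarised. With the `log_format` used in the `stream` block, the second field of each line is `$upstream_addr`. This is a minimal sketch; `count_upstreams` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: count proxied connections per upstream in the stream
# access log. With the stream log_format in this article, field 2 is
# $upstream_addr.
count_upstreams() {
    awk '{ hits[$2]++ } END { for (u in hits) print hits[u], u }' "$1" | sort -k2
}

# Usage: generate a few requests from a master, then summarise on the
# nginx host:
#   for i in 1 2 3 4 5 6; do curl -sk https://192.168.3.201:6443/version >/dev/null; done
#   count_upstreams /var/log/nginx/k8s-access.log
```

Each output line is a connection count followed by the upstream address, so all three Masters should appear once a handful of requests has gone through.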

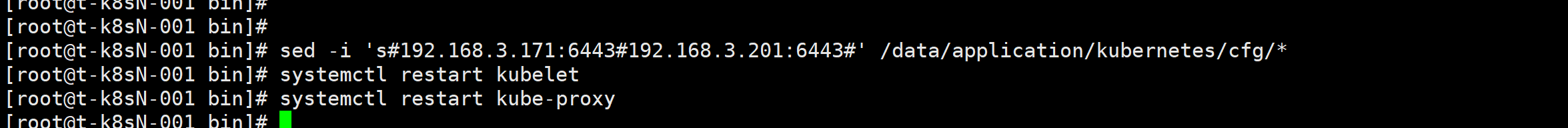

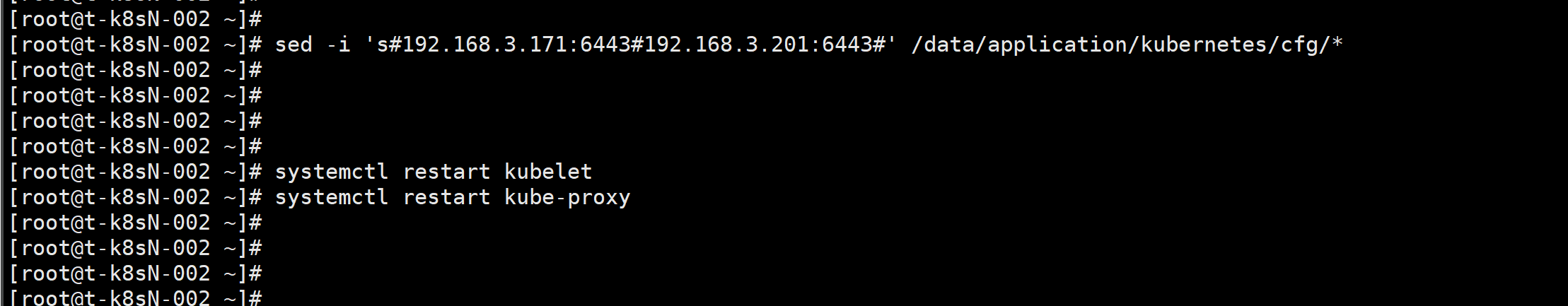

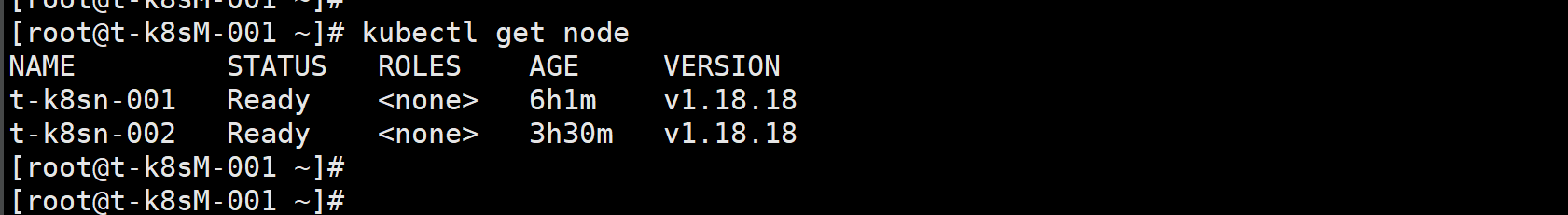

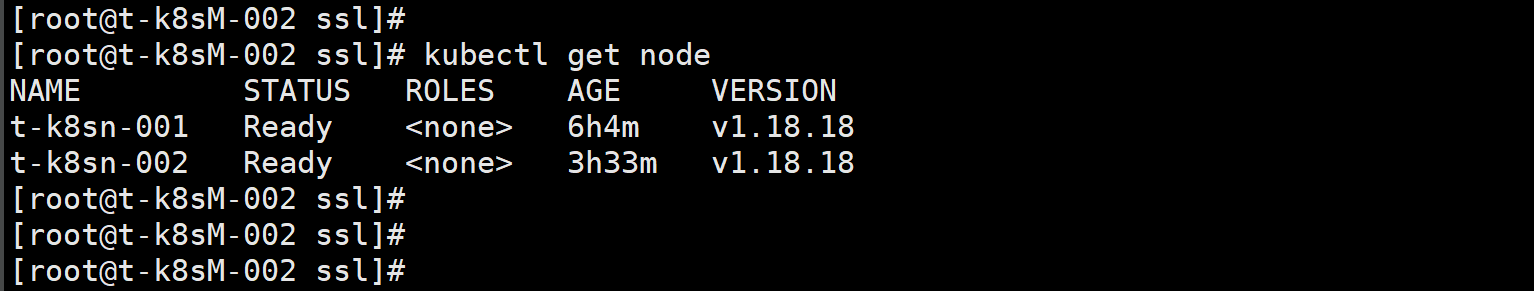

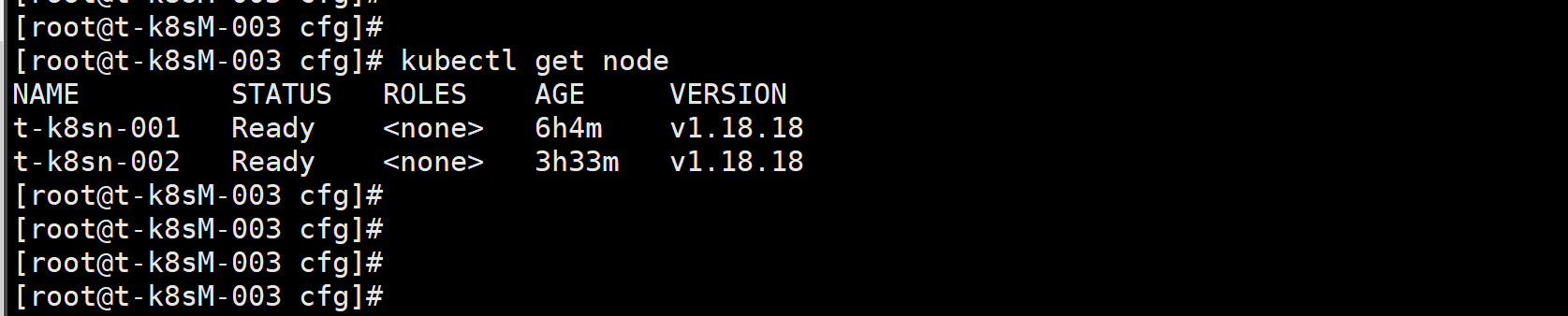

Point all Worker Nodes at the LB address

Run the following on every node:

    sed -i 's#192.168.3.171:6443#192.168.3.201:6443#' /data/application/kubernetes/cfg/*
    systemctl restart kubelet
    systemctl restart kube-proxy
    kubectl get node
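Before or after running the `sed`, it can be useful to see which files still reference the old apiserver address. The sketch below lists them; `files_with_old_addr` is a hypothetical helper, and the default address is the Master1 endpoint replaced in this article.

```shell
#!/bin/sh
# Hypothetical helper: list config files that still reference the old
# apiserver address, to preview the sed before running it or verify it after.
files_with_old_addr() {
    dir="$1"
    old="${2:-192.168.3.171:6443}"
    # -l prints only file names, -F treats the address as a fixed string.
    grep -lF -- "$old" "$dir"/* 2>/dev/null || true
}

# Usage (on a worker node):
#   files_with_old_addr /data/application/kubernetes/cfg
```

An empty result after the replacement means every file in the directory now points at 192.168.3.201:6443.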