@zhangyy

2018-04-12T02:47:47.000000Z

字数 5285

阅读 392

hive 的数据压缩处理

hive的部分

- MapReduce 的数据压缩

- hive 的数据压缩

- hive 支持的文件格式

- hive日志分析,各种压缩的对比

- hive 的函数HQL 查询

一: mapreduce 的压缩

- mapreduce 压缩 主要是在shuffle阶段的优化。shuffle 端的--partition (分区)-- sort (排序)-- combine (合并)-- compress (压缩)-- group (分组)在mapreduce 优化shuffle 从本质上是解决磁盘的IO 与网络IO 问题。减少 集群件的文件传输处理。

二: hive 的压缩:

压缩的和解压需要cpu的,hive 的常见的压缩格式:bzip2,gzip,lzo,snappy等cdh 默认采用的压缩是snappy压缩比:bzip2 > gzip > lzo bzip2 最节省存储空间。注意: sanppy 的并不是压缩比最好的解压速度: lzo > gzip > bzip2 lzo 解压速度是最快的。注意:追求压缩速率最快的sanppy压缩的和解压需要cpu 损耗比较大。集群分: cpu 的密集型 (通常是计算型的网络)hadoop 是 磁盘 IO 和 网络IO 的密集型, 网卡的双网卡绑定。

三: hadoop 的检查 是否支持压缩命令

bin/hadoop checknative

3.1 安装使支持压缩:

tar -zxvf 2.5.0-native-snappy.tar.gz -C /home/hadoop/yangyang/hadoop/lib/native

3.2 命令检测:

bin/hadoop checknative

3.3 mapreduce 支持的压缩:

CodeName:zlib : org.apache.hadoop.io.compress.DefaultCodecgzip : org.apache.hadoop.io.compress.GzipCodecgzip2: org.apache.hadoop.io.compress.Bzip2Codeclzo : org.apache.hadoop.io.compress.LzoCodeclz4 : org.apache.hadoop.io.compress.Lz4Codecsnappy: org.apache.hadoop.io.compress.SnappyCodec

3.4 mapreduce 执行作业临时支持压缩两种方法:

1.在执行命令时候运行。

-Dmapreduce.map.output.compress=true-Dmapreduce.map.output.compress.codec=org.apache.hadoop.io.compress.DefaultCodec

如:

bin/yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0-cdh5.3.6.jar wordcount -Dmapreduce.map.output.compress=true -Dmapreduce.map.output.compress.codec=org.apache.hadoop.io.compress.DefaultCodec /input/dept.txt /output1可以在bin 的前面加一个time, 会在查看运行的时间测试job 的任务:1. 测运行job 的总时间2. 查看压缩的频率,压缩后的文件大小。

2. 更改配置文件:

更改mapred-site.xml 文件

<property><name>mapreduce.map.output.compress</name><value>true</value></property><property><name>mapreduce.map.output.compress.codec</name><value>org.apache.hadoop.io.compress.DefaultCodec</value></property>更改完成之后重新启动服务就可以

四. hive的支持压缩

4.1 更改临时参数使其生效

hive > set ---> 查看所有参数hive > set hive.exec.compress.intermediate=true -- 开启中间 压缩> set mapred.map.output.compression.codec = CodeName> set hive.exec.compress.output=true> set mapred.map.output.compression.type = BLOCK/RECORD

在hive-site.xml 中去增加相应参数使其永久生效

4.2:hive 支持的文件类型:

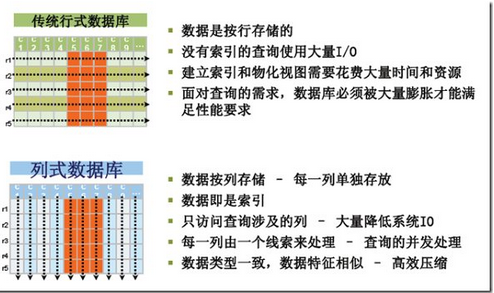

4.2.1 行存储与列式存储区别

数据库列存储不同于传统的关系型数据库,其数据在表中是按行存储的,列方式所带来的重要好处之一就是,由于查询中的选择规则是通过列来定义的,因 此整个数据库是自动索引化的。按列存储每个字段的数据聚集存储,在查询只需要少数几个字段的时候,能大大减少读取的数据量,一个字段的数据聚集存储,那就 更容易为这种聚集存储设计更好的压缩/解压算法。

4.2.2 hive 支持的文件类型:

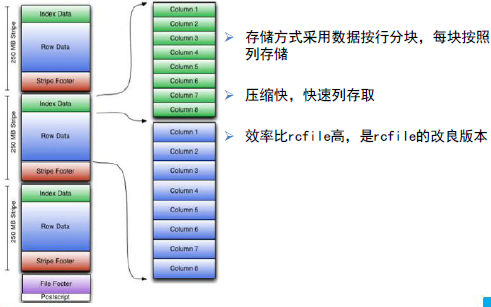

修改hive 的默认文件系列参数:set hive.default.fileformat=OrcTextFile:默认的类型,行存储rcfile:按行块,每块再按列存储avro:二进制ORC rcfile:的升级版,默认是zlib,支持snappy 其格式不支持parquet

4.2.3 ORC格式(hive/shark/spark支持)

使用方法:

create table Adress (name string,street string,city string,state double,zip int)stored as orc tblproperties ("orc.compress"="NONE") --->指定压缩算法row format delimited fields terminated by '\t';

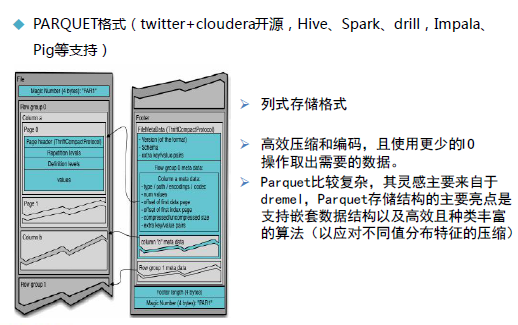

4.2.4 PARQUET格式(twitter+cloudera开源,Hive、Spark、drill,Impala、

Pig等支持)

使用方法:

create table Adress (name string,street string,city string,state double,zip int)stored as parquet ---> 指定文本类型row format delimited fields terminated by '\t';

五:hive日志分析,各种压缩的对比

5.1 在hive 上面创建表结构:

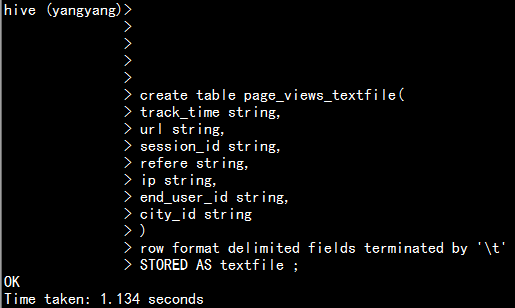

5.1.1 textfile 类型:

create table page_views_textfile(track_time string,url string,session_id string,refere string,ip string,end_user_id string,city_id string)row format delimited fields terminated by '\t'STORED AS textfile ; ---> 指定表的文件类型

加载数据到表中

load data local inpath '/home/hadoop/page_views.data' into table page_views_textfile ;

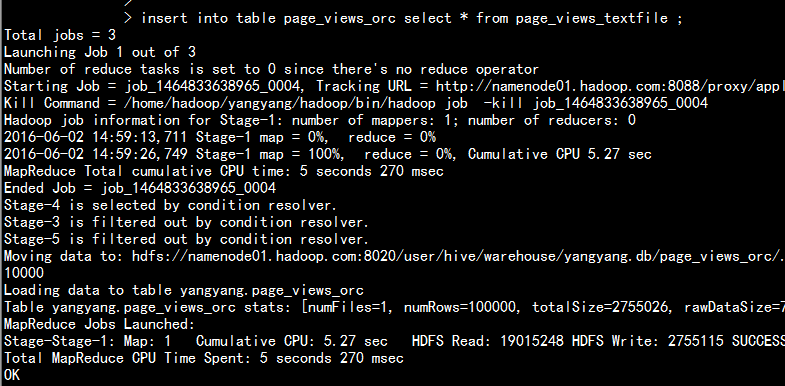

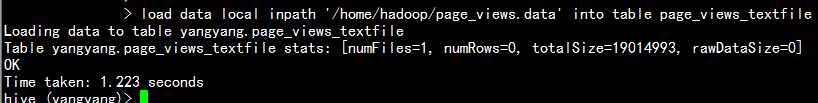

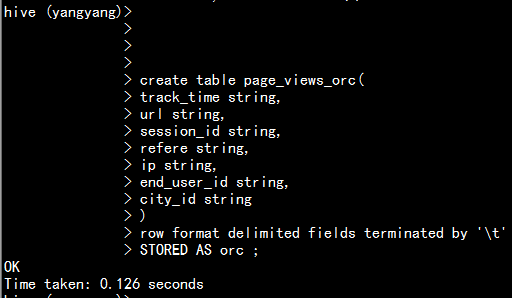

5.1.2 orc 类型:

create table page_views_orc(track_time string,url string,session_id string,refere string,ip string,end_user_id string,city_id string)row format delimited fields terminated by '\t'STORED AS orc ;

插入数据:

insert into table page_views_orc select * from page_views_textfile ;

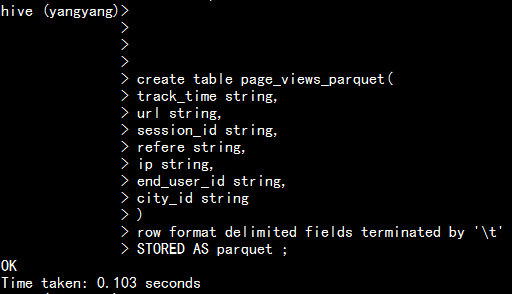

5.1.3 parquet 类型

create table page_views_parquet(track_time string,url string,session_id string,refere string,ip string,end_user_id string,city_id string)row format delimited fields terminated by '\t'STORED AS parquet ;

插入数据:

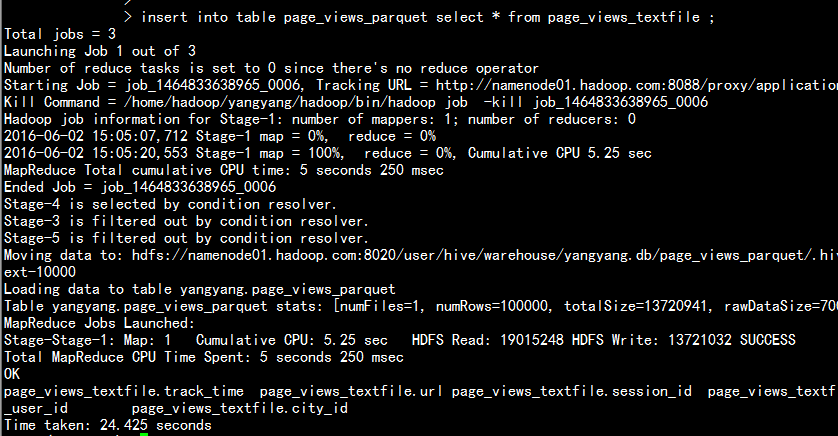

insert into table page_views_parquet select * from page_views_textfile ;

六:比较:

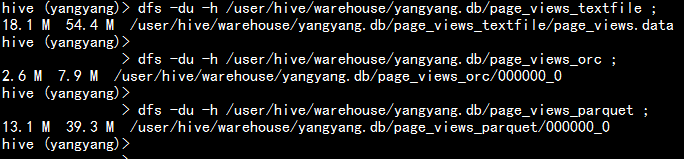

6.1 文件大小统计

hive (yangyang)> dfs -du -h /user/hive/warehouse/yangyang.db/page_views_textfile ;hive (yangyang)> dfs -du -h /user/hive/warehouse/yangyang.db/page_views_orc ;hive (yangyang)> dfs -du -h /user/hive/warehouse/yangyang.db/page_views_parquet ;

从上面可以看出orc 上生成的表最小。

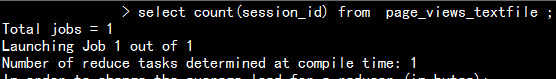

6.2 查找时间测试比较:

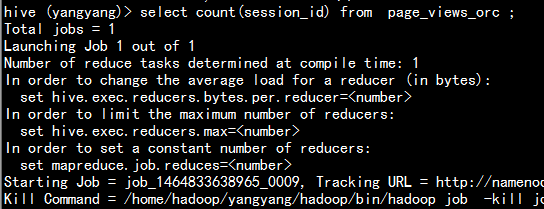

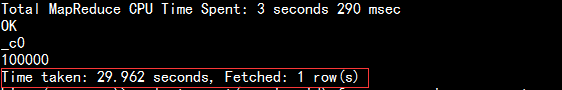

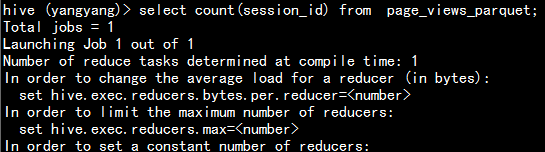

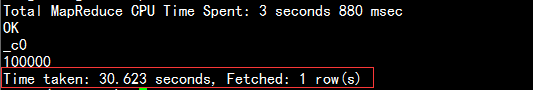

hive (yangyang)> select count(session_id) from page_views_textfile ;hive (yangyang)> select count(session_id) from page_views_orc;hive (yangyang)> select count(session_id) from page_views_parquet;

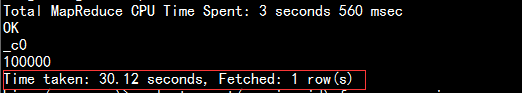

6.3 textfile 文件类型:

6.4 orc 文件类型:

6.5 parquet 类型:

七 hive 创建表与指定压缩:

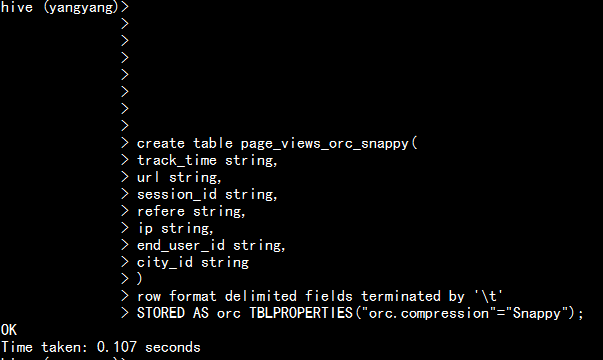

7.1 orc+snappy 格式:

create table page_views_orc_snappy(track_time string,url string,session_id string,refere string,ip string,end_user_id string,city_id string)row format delimited fields terminated by '\t'STORED AS orc TBLPROPERTIES("orc.compression"="Snappy");

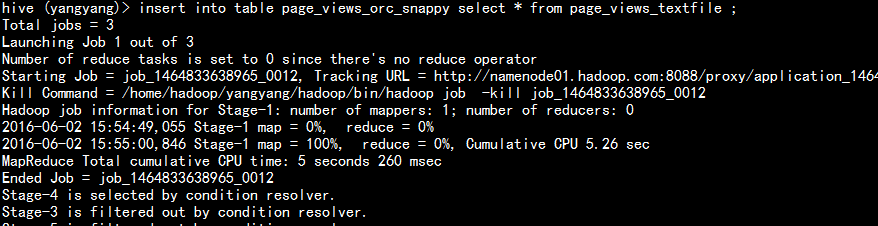

插入数据:insert into table page_views_orc_snappy select * from page_views_textfile ;

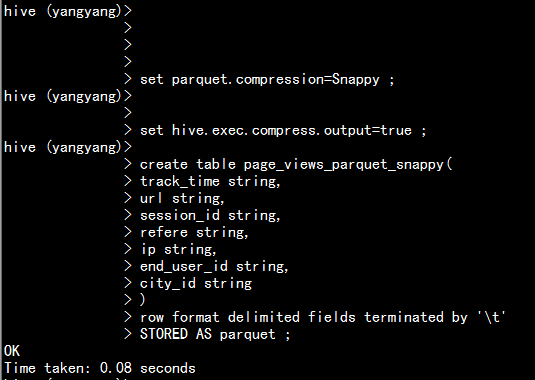

7.2 parquet+snappy 格式:

set parquet.compression=Snappy ;set hive.exec.compress.output=true ;create table page_views_parquet_snappy(track_time string,url string,session_id string,refere string,ip string,end_user_id string,city_id string)row format delimited fields terminated by '\t'STORED AS parquet ;

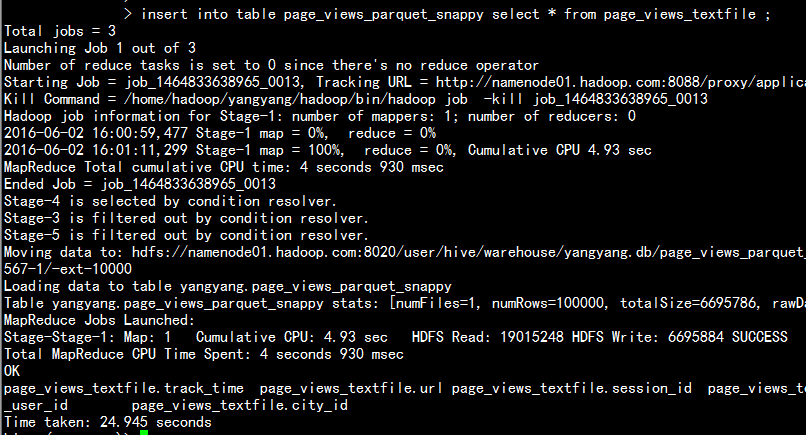

插入数据:insert into table page_views_parquet_snappy select * from page_views_textfile ;

7.3 对比测试:

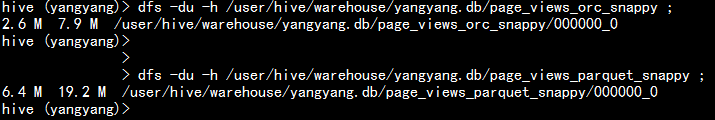

7.3.1 文件大小对比:

hive (yangyang)> dfs -du -h /user/hive/warehouse/yangyang.db/page_views_orc_snappy ;hive (yangyang)> dfs -du -h /user/hive/warehouse/yangyang.db/page_views_parquent_snappy ;

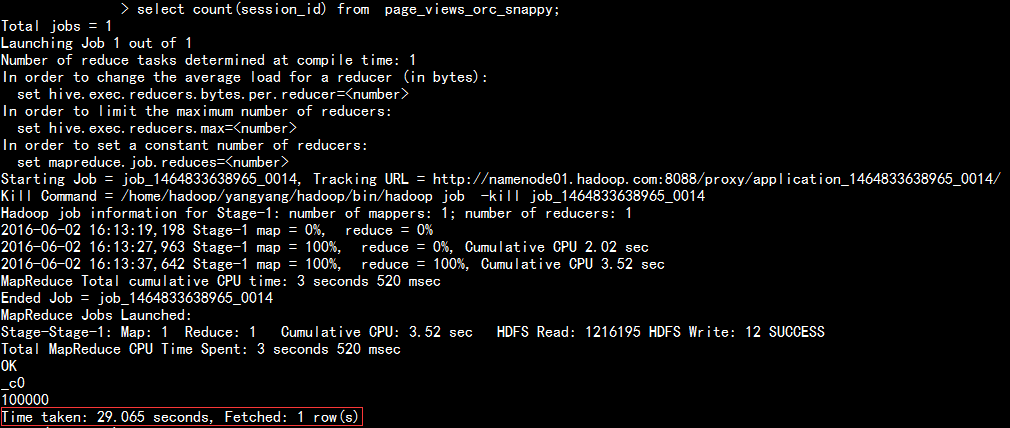

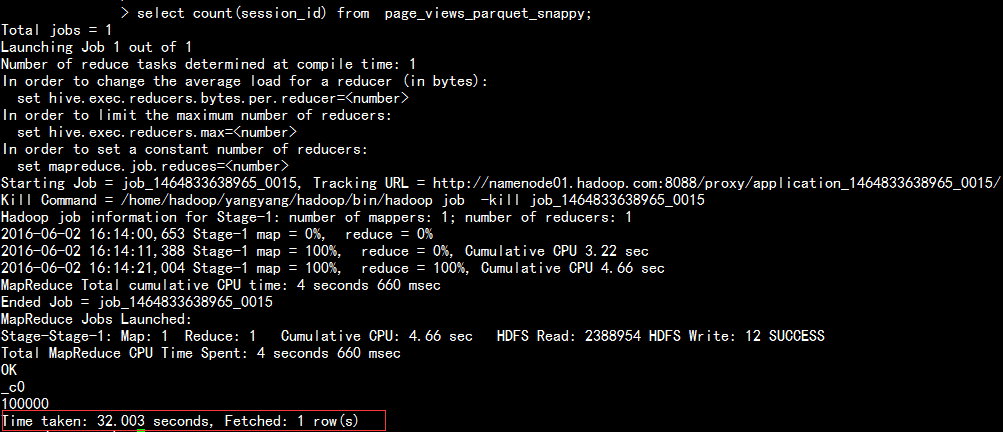

7.3.2 查询对比:

hive (yangyang)> select count(session_id) from page_views_orc_snappy;hive (yangyang)> select count(session_id) from page_views_parquet_snappy;

八 :hive 的函数HQL 查询

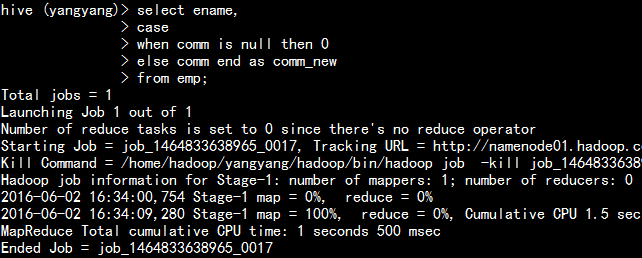

8.1 case --when--then-else

hive(yangyang)>select ename,casewhen comm is null then 0else comm end as comm_newfrom emp;

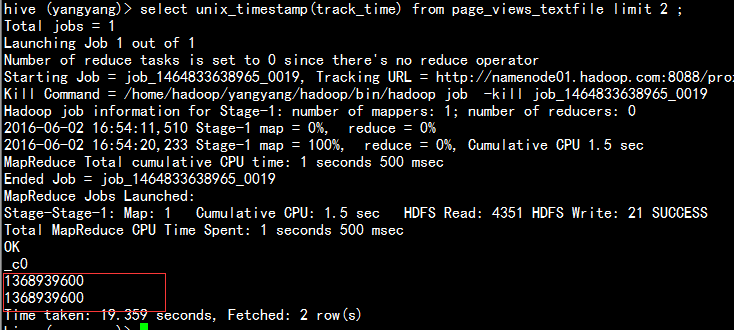

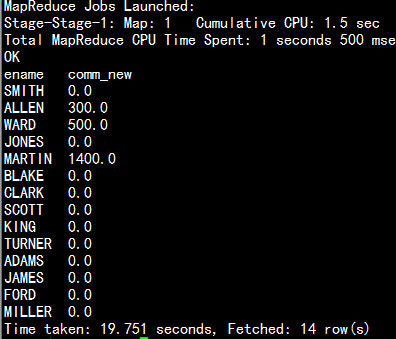

8.1.2 unix_timestamp() 函数:

desc function extended unix_timestamp;

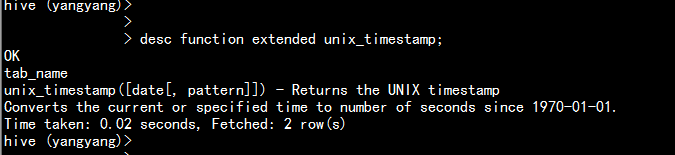

查找时间

select track_time from page_views_textfile limit 2 ;

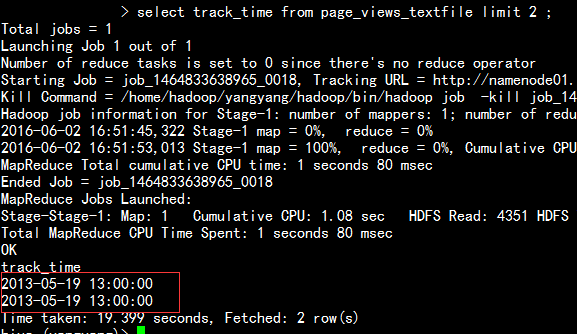

转换时间:

select unix_timestamp(track_time) from page_views_textfile limit 2 ;