@zhangyy

2018-11-19T06:15:29.000000Z

字数 3647

阅读 359

sqoop 的安装与常用抽数操作

协作框架

- sqoop 简介处理

- sqoop 环境配置处理

- sqoop 数据导入导出处理

一: sqoop 简介处理

- 1. Sqoop是一个用来将Hadoop和关系型数据库中的数据相互转移的工具,可以将一个关系型数据库(例如 : MySQL ,Oracle ,Postgres等)中的数据导进到Hadoop的HDFS中,也可以将HDFS的数据导进到关系型数据库中。- 2. Sqoop中一大亮点就是可以通过hadoop的mapreduce把数据从关系型数据库中导入数据到HDFS,可以将hive 的数据,hdfs 上面的数据进行 提取的到关系型数据当中。 反之亦可操作。

二:sqoop 安装与配置处理:

环境需求: 安装好的hadoop环境与hive 环境已经配置。

2.1 配置单节点的zookeeper 环境

tar -zxvf zookeeper-3.4.5-cdh5.3.6.tar.gzmv zookeeper-3.4.5-cdh5.3.6 yangyang/zookeepercd yangyang/zookeeper/confcp -p zoo_sample.cfg zoo.cfg

2.2 给定zookeeper 的Datadir 目录:

# the directory where the snapshot is stored.# do not use /tmp for storage, /tmp here is just# example sakes.#dataDir=/tmp/zookeeper<!--给定dataDir 目录 -->dataDir=/home/hadoop/yangyang/zookeeper/data# the port at which the clients will connect

echo "1" > /home/hadoop/yangyang/zookeeper/myid

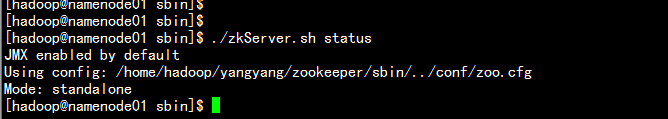

2.3 启动zookeeper服务

cd /home/hadoop/yangyang/zookeer/sbin/./zkServer.sh start

tar -zxvf sqoop-1.4.5-cdh5.3.6.tar.gzmv sqoop-1.4.5-cdh5.3.6 yangyang/sqoopcp -p mysql-connector-java-5.1.27-bin.jar /home/hadoop/yangyang/sqoop/lib/cd yangyang/sqoop/confcp -p sqoop-env-template.sh sqoop-env.sh

2.4 配置sqoop环境

vim sqoop-env.sh

#Set path to where bin/hadoop is available<!--配置hadoop目录-->export HADOOP_COMMON_HOME=/home/hadoop/yangyang/hadoop#Set path to where hadoop-*-core.jar is available<!--配置hadoop的mapreduce目录-->export HADOOP_MAPRED_HOME=/home/hadoop/yangyang/hadoop<!--配置hbase 目录>#set the path to where bin/hbase is available#export HBASE_HOME=#Set the path to where bin/hive is available<!--配置hive的目录-->export HIVE_HOME=/home/hadoop/yangyang/hive<!--配置zookeeper 的目录处理>#Set the path for where zookeper config dir isexport ZOOCFGDIR=/home/hadoop/yangyang/zookeeper/conf

二:sqoop 的环境测试处理

2.1 在mysql 的环境下创建数据库,并创建表处理

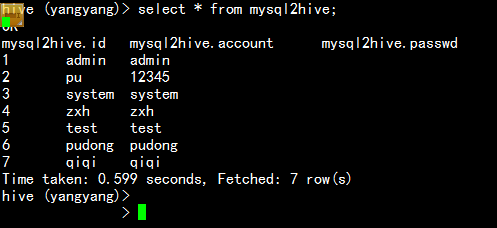

mysql -uroot -p123456create database yangyang;use yangyang;创建一个my_user表:CREATE TABLE `my_user` (`id` tinyint(4) NOT NULL AUTO_INCREMENT,`account` varchar(255) DEFAULT NULL,`passwd` varchar(255) DEFAULT NULL,PRIMARY KEY (`id`));插入数据:INSERT INTO `my_user` VALUES ('1', 'admin', 'admin');INSERT INTO `my_user` VALUES ('2', 'pu', '12345');INSERT INTO `my_user` VALUES ('3', 'system', 'system');INSERT INTO `my_user` VALUES ('4', 'zxh', 'zxh');INSERT INTO `my_user` VALUES ('5', 'test', 'test');INSERT INTO `my_user` VALUES ('6', 'pudong', 'pudong');INSERT INTO `my_user` VALUES ('7', 'qiqi', 'qiqi');

三: 将mysql的数据表导入到hdfs 上面:

3.1 sqoop 导入不指定目录:

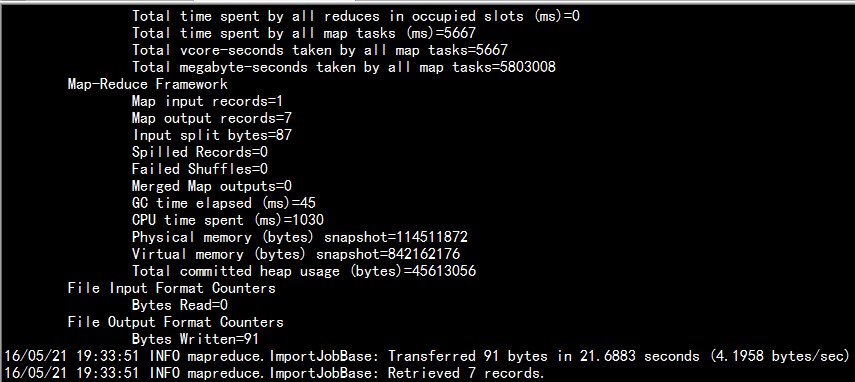

bin/sqoop import \--connect jdbc:mysql://namenode01.hadoop.com:3306/yangyang \--username root \--password 123456 \--table my_user导出的路径在hdfs 上面的目录是/usr/hadoop/my_user/注意:不指定导入目录,默认情况是导入到hdfs上面用户家目录下面。默认导入到HDFS里面,分隔符是,

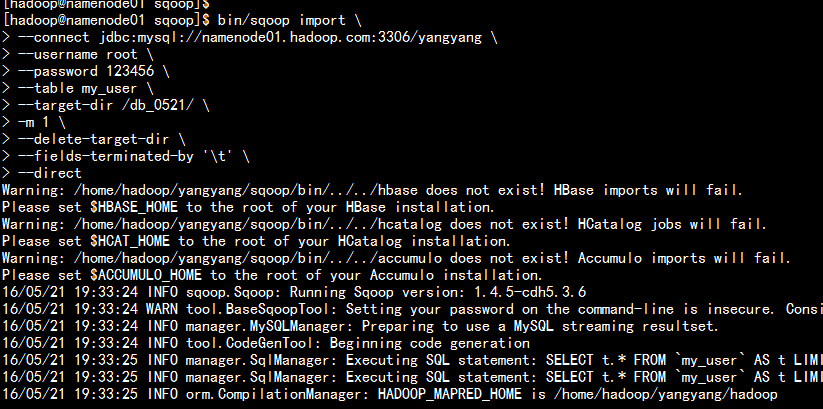

3.2 导入带目录路径:

bin/sqoop import \--connect jdbc:mysql://namenode01.hadoop.com:3306/yangyang \--username root \--password 123456 \--table my_user \--target-dir /db_0521/ \-m 1 \--delete-target-dir \--fields-terminated-by '\t' \--direct

3.3 增量导入:

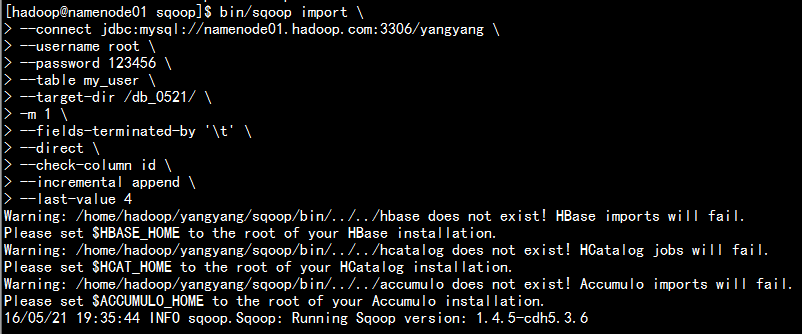

bin/sqoop import \--connect jdbc:mysql://namenode01.hadoop.com:3306/yangyang \--username root \--password 123456 \--table my_user \--target-dir /db_0521/ \-m 1 \--fields-terminated-by '\t' \--direct \--check-column id \--incremental append \--last-value 4

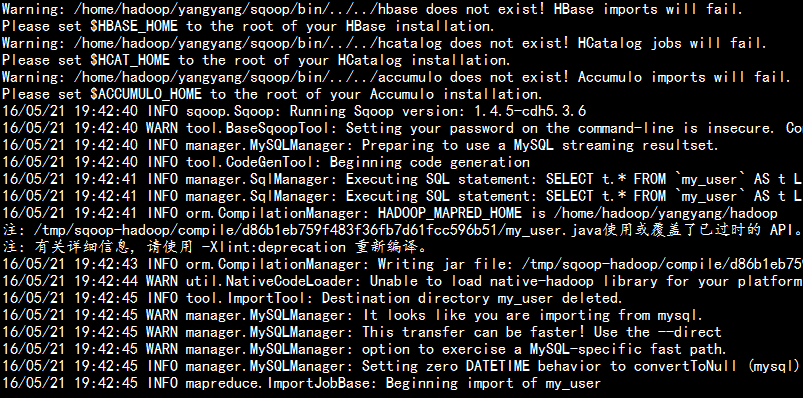

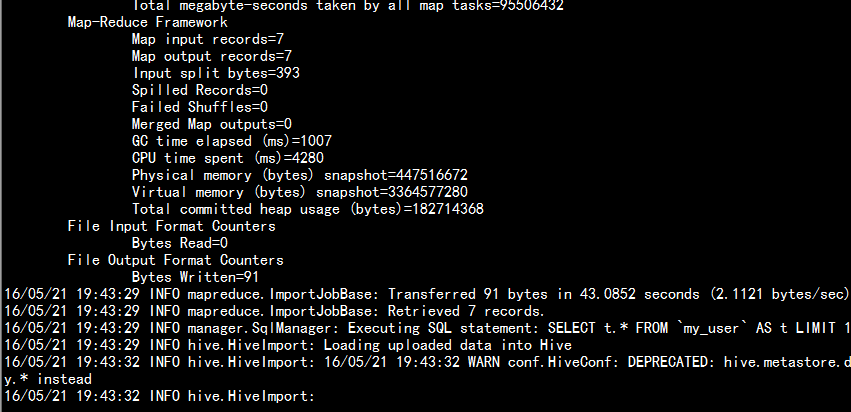

3.4 从mysql 导入hive 当中:

bin/sqoop import \--connect jdbc:mysql://namenode01.hadoop.com:3306/yangyang \--username root \--password 123456 \--table my_user \--delete-target-dir \--hive-import \--hive-database yangyang \--hive-table mysql2hive \--fields-terminated-by '\t'

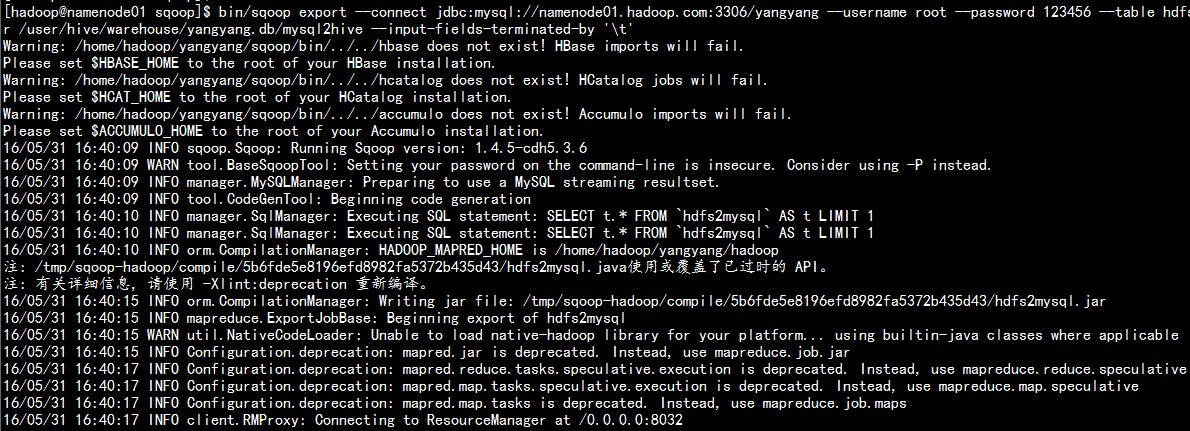

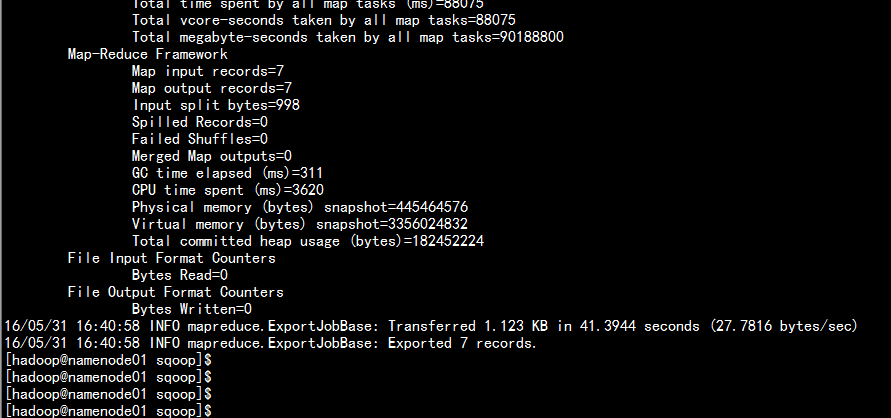

3.5 从hdfs 当中导出mysql 当中:

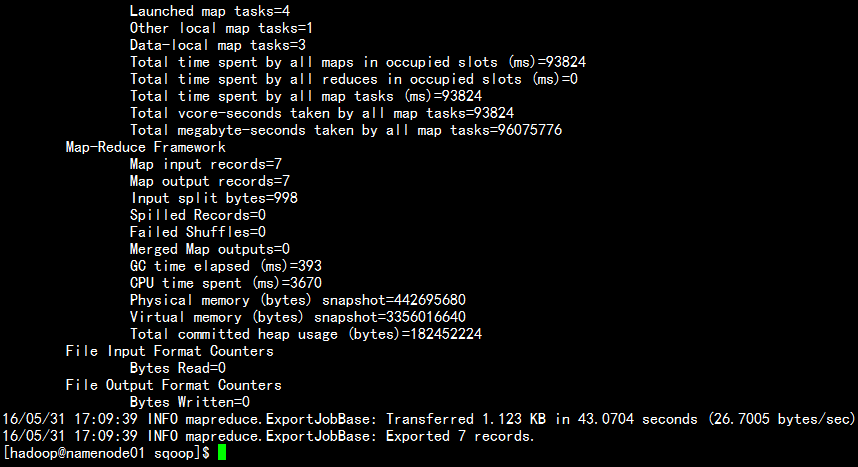

新建一张空表:create table hdfs2mysql like my_user;导出命令bin/sqoop export \--connect jdbc:mysql://namenode01.hadoop.com:3306/yangyang \--username root \--password 123456 \--table hdfs2mysql \--export-dir /user/hive/warehouse/yangyang.db/mysql2hive \--input-fields-terminated-by '\t'

从hive 当中导出到mysql 当中:注意:其实就是从HDFS导入到RDBMS

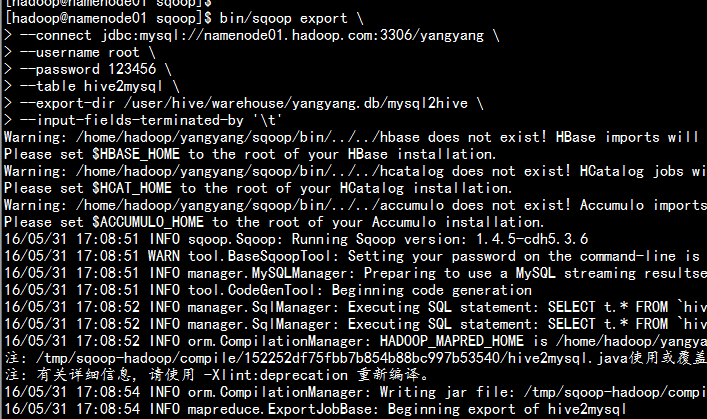

新建一张空表:create table hive2mysql like my_user;导出命令:bin/sqoop export \--connect jdbc:mysql://namenode01.hadoop.com:3306/yangyang \--username root \--password 123456 \--table hive2mysql \--export-dir /user/hive/warehouse/yangyang.db/mysql2hive \--input-fields-terminated-by '\t'