@zhangyy

2016-04-19T02:01:25.000000Z


HDFS API Basic Operations

Big Data Series

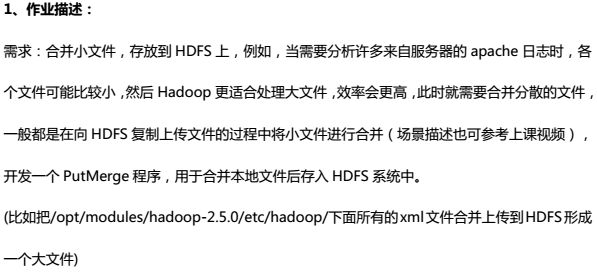

Task requirements:

1. Merge all of the Hadoop configuration files under /home/hadoop/yangyang/hadoop/etc/ into a single file on HDFS, /yangyang/hdfs.txt.

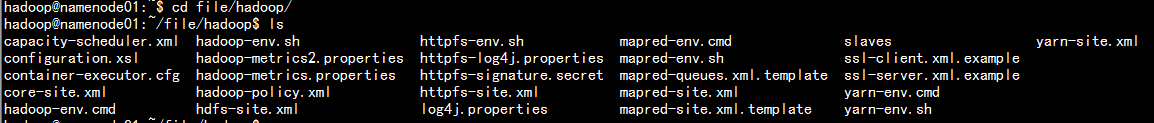

#mkdir /home/hadoop/file

#cp -rp /home/hadoop/yangyang/hadoop/etc/* /home/hadoop/file/

#hdfs dfs -mkdir /yangyang

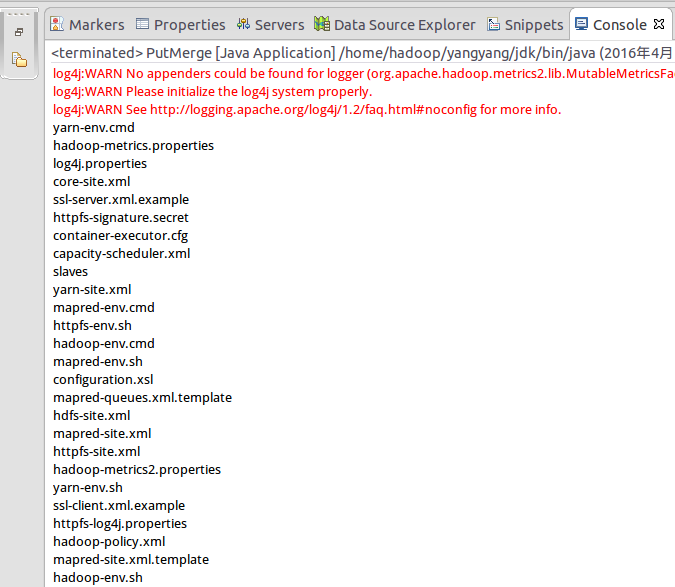

Java code

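    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class PutMerge {
        public static void main(String[] args) throws IOException {
            Configuration conf = new Configuration();
            FileSystem hdfs = FileSystem.get(conf);        // target: HDFS
            FileSystem local = FileSystem.getLocal(conf);  // source: local disk

            Path inputDir = new Path("/home/hadoop/file/hadoop");
            Path hdfsFile = new Path("/yangyang/hdfs.txt");

            FileStatus[] inputFiles = local.listStatus(inputDir);
            FSDataOutputStream out = hdfs.create(hdfsFile);

            // Append each local file to the single HDFS output file.
            for (int i = 0; i < inputFiles.length; i++) {
                System.out.println(inputFiles[i].getPath().getName());
                FSDataInputStream in = local.open(inputFiles[i].getPath());
                byte[] buffer = new byte[256];
                int bytesRead = 0;
                while ((bytesRead = in.read(buffer)) > 0) {
                    out.write(buffer, 0, bytesRead);
                }
                in.close();
            }
            out.close();
        }
    }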
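The read/write loop in PutMerge can be tried without a cluster. The following is a minimal sketch, not the HDFS version: it swaps the Hadoop `FileSystem` calls for plain `java.nio.file` streams and concatenates the regular files of a local directory into one local file. The class name `LocalMergeSketch`, the `merge` helper, and the sample file names are hypothetical and exist only for illustration. Sorting the inputs and skipping subdirectories are additions that PutMerge itself does not make.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Local-only stand-in for PutMerge: same copy loop, no Hadoop classes.
class LocalMergeSketch {

    // Concatenates every regular file directly under inputDir into target
    // and returns the number of bytes written. target must be outside inputDir.
    static long merge(Path inputDir, Path target) throws IOException {
        List<Path> files = new ArrayList<>();
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(inputDir)) {
            for (Path entry : entries) {
                if (Files.isRegularFile(entry)) { // skip subdirectories
                    files.add(entry);
                }
            }
        }
        Collections.sort(files); // fixed order so the result is reproducible

        long total = 0;
        byte[] buffer = new byte[256]; // same buffer size as PutMerge
        try (OutputStream out = Files.newOutputStream(target)) {
            for (Path file : files) {
                try (InputStream in = Files.newInputStream(file)) {
                    int bytesRead;
                    while ((bytesRead = in.read(buffer)) > 0) {
                        out.write(buffer, 0, bytesRead);
                        total += bytesRead;
                    }
                }
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical sample inputs in a temporary directory.
        Path dir = Files.createTempDirectory("merge-in");
        Files.write(dir.resolve("a.xml"), "<a/>\n".getBytes(StandardCharsets.UTF_8));
        Files.write(dir.resolve("b.xml"), "<b/>\n".getBytes(StandardCharsets.UTF_8));
        Path target = Files.createTempFile("merged", ".txt");
        long written = merge(dir, target);
        System.out.println(written + " bytes merged into " + target);
    }
}
```

The try-with-resources blocks close each stream even if a read or write fails, which the explicit `in.close()` and `out.close()` calls in PutMerge do not guarantee.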

Output:

Download the merged file and inspect it

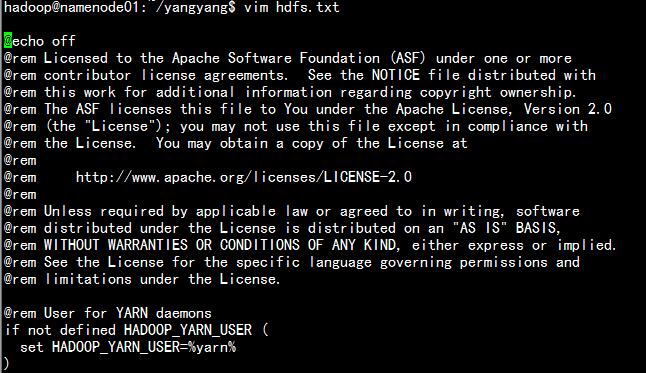

#hdfs dfs -ls /yangyang

#hdfs dfs -get /yangyang/hdfs.txt

#vim hdfs.txt