@zhangyy

2021-07-16T10:30:44.000000Z


Installing a Kubernetes 1.20 cluster with kubeadm

Kubernetes architecture series

1: Key updates in k8s 1.20.x

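1. `kubectl debug`: start an ephemeral (temporary) container for debugging
2. Sidecar containers
3. Volumes: changing directory permissions with `fsGroup`
4. ConfigMap and Secret

Kubernetes official site: https://kubernetes.io/docs/setup/

Latest high-availability installation guide: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/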

2: Installing k8s 1.20.x

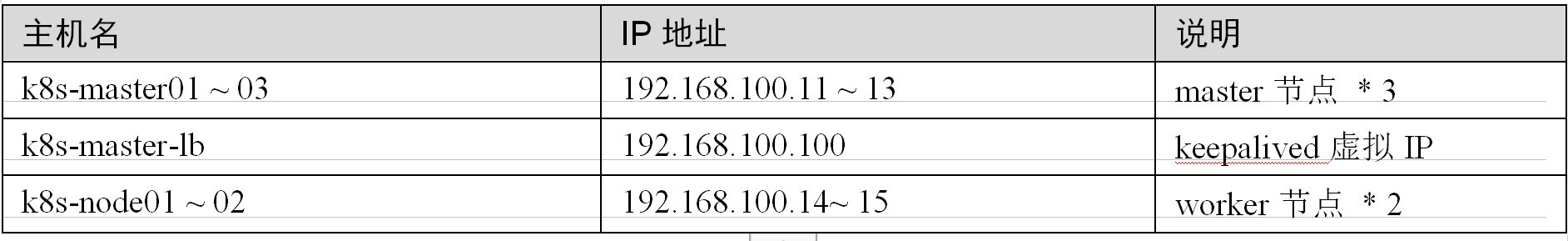

2.1 Planning a highly available Kubernetes cluster

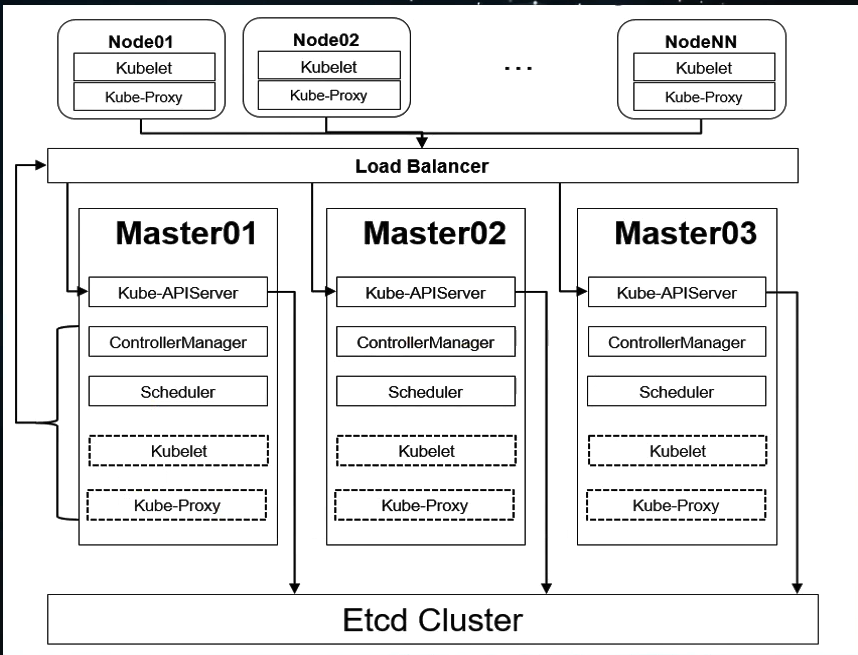

k8s high-availability architecture diagram

Configure hosts on all nodes by editing /etc/hosts as follows:

    cat /etc/hosts
    192.168.100.11 node01.flyfish.cn
    192.168.100.12 node02.flyfish.cn
    192.168.100.13 node03.flyfish.cn
    192.168.100.14 node04.flyfish.cn
    192.168.100.15 node05.flyfish.cn
    192.168.100.16 node06.flyfish.cn
    192.168.100.17 node07.flyfish.cn
    192.168.100.18 node08.flyfish.cn
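Re-running setup steps can easily duplicate /etc/hosts lines. The sketch below appends only the lines that are not already present. It assumes each entry is passed as one "IP hostname" string with a single space; the helper name `add_hosts_entries` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" lines to a hosts file unless an
# identical line already exists, so running it twice does not duplicate entries.
add_hosts_entries() {
  local file=$1 entry
  shift
  for entry in "$@"; do
    # -x matches the whole line, -F treats the entry as a fixed string (dots are literal)
    if ! grep -qxF -- "$entry" "$file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$file"
    fi
  done
}
```

Usage: `add_hosts_entries /etc/hosts "192.168.100.11 node01.flyfish.cn" "192.168.100.12 node02.flyfish.cn"`. Matching is exact, so two lines that differ only in whitespace count as different entries.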

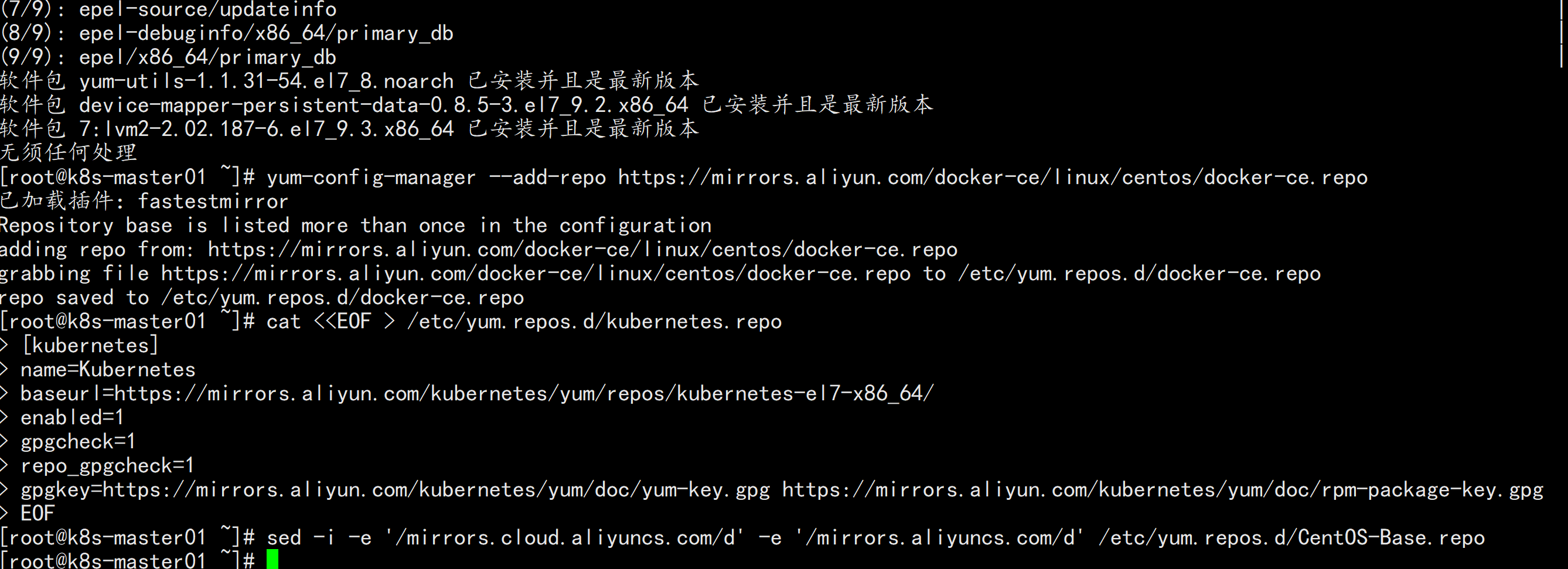

2.2 Yum repository configuration (on all nodes)

    curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
    yum install -y yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

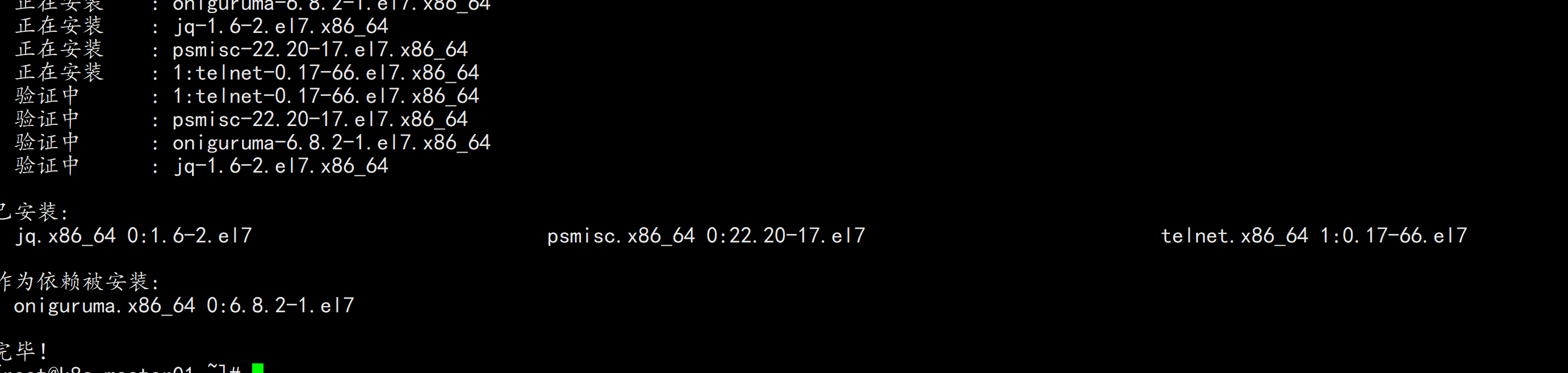

Install the required tools:

    yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

Disable firewalld, dnsmasq, NetworkManager, SELinux, and swap on all nodes (swap is handled in the next step):

    systemctl disable --now firewalld
    systemctl disable --now dnsmasq
    systemctl disable --now NetworkManager
    setenforce 0
    sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux
    sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config
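`setenforce 0` only switches SELinux to permissive mode until the next reboot. The `sed` edits are what make the change persistent. The sketch below reads the mode recorded in the config file so it can be compared with the live state from `getenforce`; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SELINUX= value from an SELinux config file
# (default /etc/selinux/config). Lines such as SELINUXTYPE= and comments are ignored.
selinux_config_mode() {
  sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "${1:-/etc/selinux/config}"
}
```

After the sed commands, `selinux_config_mode` should print `disabled`; after the next reboot, `getenforce` should report `Disabled`.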

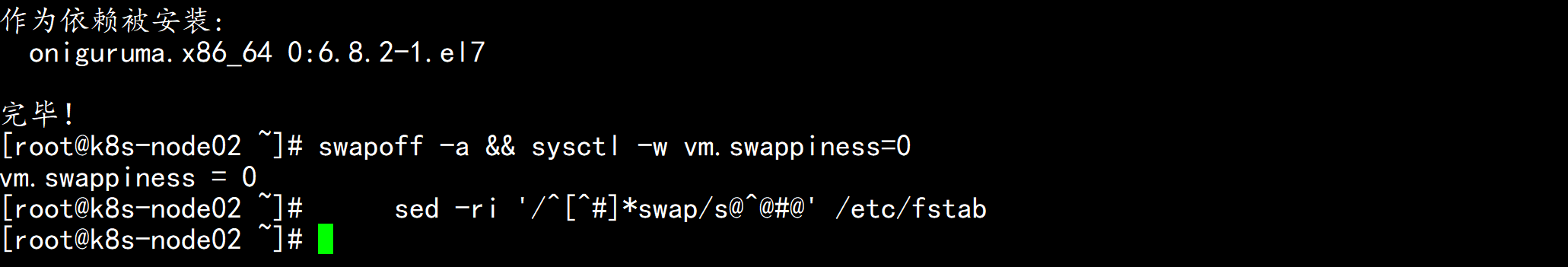

Disable the swap partition (all nodes):

    swapoff -a && sysctl -w vm.swappiness=0
    sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
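With its default settings, the kubelet refuses to run while swap is enabled, so it is worth confirming swap is off before `kubeadm init`. The sketch below makes two assumptions: `/proc/swaps` has the standard layout (one header line, then one line per active device), and `/etc/fstab` uses the usual column layout with the filesystem type in field 3. Both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count active swap devices (lines after the header of /proc/swaps).
active_swap_count() {
  awk 'NR > 1' "${1:-/proc/swaps}" | wc -l
}

# Hypothetical helper: print uncommented fstab entries whose type (field 3) is swap.
fstab_swap_entries() {
  awk '$1 !~ /^#/ && $3 == "swap"' "${1:-/etc/fstab}"
}
```

Both checks should come back clean after the steps above: `active_swap_count` prints `0` and `fstab_swap_entries` prints nothing.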

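Install ntpdate:

    rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm
    yum install ntpdate -y

Synchronize the time on all nodes:

    ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    echo 'Asia/Shanghai' >/etc/timezone
    ntpdate time2.aliyun.com

Add the sync to crontab. Use the full path, because cron jobs run with a minimal PATH that may not include /usr/sbin:

    */5 * * * * /usr/sbin/ntpdate time2.aliyun.com

Configure limits on all nodes:

    ulimit -SHn 65535
    vim /etc/security/limits.conf
    # Append the following at the end
    * soft nofile 65536
    * hard nofile 131072
    * soft nproc 655350
    * hard nproc 655350
    * soft memlock unlimited
    * hard memlock unlimited

A soft limit must not be larger than its hard limit, so the soft `nofile` value is kept below the hard value of 131072.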
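`setrlimit()` rejects a soft limit larger than the hard limit, so a typo in limits.conf can silently leave the old limits in place. The sketch below reports every item whose soft value exceeds its hard value. It assumes only wildcard (`*`) entries like the ones above; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report wildcard ("*") limits.conf items whose soft
# value exceeds the hard value. Returns 1 if any such item is found.
check_limits_file() {
  awk '
    $1 == "*" && ($2 == "soft" || $2 == "hard") {
      val[$2, $3] = $4          # e.g. val["soft", "nofile"] = 65536
      items[$3] = 1
    }
    END {
      bad = 0
      for (it in items) {
        s = val["soft", it]; h = val["hard", it]
        if (s == "" || h == "" || h == "unlimited") continue   # nothing to compare
        if (s == "unlimited" || s + 0 > h + 0) {
          printf "soft %s (%s) exceeds hard (%s)\n", it, s, h
          bad = 1
        }
      }
      exit bad
    }' "${1:-/etc/security/limits.conf}"
}
```

Run `check_limits_file` after editing; it prints nothing and returns 0 for the values listed above.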

Set up passwordless SSH from Master01 to the other nodes. The hostnames in the loop must resolve, so adjust them to the names defined in /etc/hosts above (for example node02.flyfish.cn):

    ssh-keygen -t rsa
    for i in k8s-master01.flyfish.cn k8s-master02.flyfish.cn k8s-master03.flyfish.cn k8s-node01.flyfish.cn k8s-node02.flyfish.cn;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

Upgrade the system on all nodes and reboot:

    yum update -y && reboot
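Before running commands across the nodes, it helps to confirm that key-based login works without a password prompt. The sketch below uses OpenSSH's `BatchMode=yes` option, which makes `ssh` fail instead of prompting. The helper name and the 5-second timeout are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: try a no-op command on each host without allowing
# password prompts; print one status line per host and return 1 on any failure.
check_passwordless_ssh() {
  local host failed=0
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
      echo "ok: $host"
    else
      echo "FAILED: $host"
      failed=1
    fi
  done
  return "$failed"
}
```

Usage: `check_passwordless_ssh node02.flyfish.cn node03.flyfish.cn`.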

Download the installation source files:

    cd /root/ ; git clone https://github.com/dotbalo/k8s-ha-install.git

CentOS 7: configure the yum repositories exactly as in section 2.2 above (CentOS-Base from Centos-7.repo, docker-ce.repo, and kubernetes.repo).

CentOS 8 repositories:

    curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-8.repo
    yum install -y yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

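Upgrade the system on all nodes and reboot. The kernel is excluded here because it is upgraded separately in the next section. The pattern is quoted so the shell does not expand it against files in the current directory:

    yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 -y
    yum update -y --exclude='kernel*' && reboot  # required on CentOS 7, not needed on CentOS 8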

1.1.2 Kernel configuration

CentOS 7 needs its kernel upgraded to 4.18+ (see https://www.kernel.org/ and https://elrepo.org/linux/kernel/el7/x86_64/). On CentOS 7, dnf may not be able to install the kernel with these commands:

    dnf --disablerepo=\* --enablerepo=elrepo -y install kernel-ml kernel-ml-devel
    grubby --default-kernel

So install the latest kernel as follows:

    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
    rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

List the latest available kernels:

    [root@k8s-node01 ~]# yum --disablerepo="*" --enablerepo="elrepo-kernel" list available
    Loaded plugins: fastestmirror
    Loading mirror speeds from cached hostfile
     * elrepo-kernel: mirrors.neusoft.edu.cn
    elrepo-kernel                          | 2.9 kB  00:00:00
    elrepo-kernel/primary_db               | 1.9 MB  00:00:00
    Available Packages
    elrepo-release.noarch                  7.0-5.el7.elrepo       elrepo-kernel
    kernel-lt.x86_64                       4.4.229-1.el7.elrepo   elrepo-kernel
    kernel-lt-devel.x86_64                 4.4.229-1.el7.elrepo   elrepo-kernel
    kernel-lt-doc.noarch                   4.4.229-1.el7.elrepo   elrepo-kernel
    kernel-lt-headers.x86_64               4.4.229-1.el7.elrepo   elrepo-kernel
    kernel-lt-tools.x86_64                 4.4.229-1.el7.elrepo   elrepo-kernel
    kernel-lt-tools-libs.x86_64            4.4.229-1.el7.elrepo   elrepo-kernel
    kernel-lt-tools-libs-devel.x86_64      4.4.229-1.el7.elrepo   elrepo-kernel
    kernel-ml.x86_64                       5.7.7-1.el7.elrepo     elrepo-kernel
    kernel-ml-devel.x86_64                 5.7.7-1.el7.elrepo     elrepo-kernel
    kernel-ml-doc.noarch                   5.7.7-1.el7.elrepo     elrepo-kernel
    kernel-ml-headers.x86_64               5.7.7-1.el7.elrepo     elrepo-kernel
    kernel-ml-tools.x86_64                 5.7.7-1.el7.elrepo     elrepo-kernel
    kernel-ml-tools-libs.x86_64            5.7.7-1.el7.elrepo     elrepo-kernel
    kernel-ml-tools-libs-devel.x86_64      5.7.7-1.el7.elrepo     elrepo-kernel
    perf.x86_64                            5.7.7-1.el7.elrepo     elrepo-kernel
    python-perf.x86_64                     5.7.7-1.el7.elrepo     elrepo-kernel

Install the latest version:

    yum --enablerepo=elrepo-kernel install kernel-ml kernel-ml-devel -y

After installation, make the new kernel the default boot entry and reboot:

    grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg && grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)" && reboot

Check the kernel after boot:

    [appadmin@k8s-node01 ~]$ uname -a
    Linux k8s-node01 5.7.7-1.el7.elrepo.x86_64 #1 SMP Wed Jul 1 11:53:16 EDT 2020 x86_64 x86_64 x86_64 GNU/Linux

CentOS 8: upgrade as needed. You can use dnf, or follow the same steps as above; in that case note that the elrepo-release version is 8.1:

    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
    dnf install https://www.elrepo.org/elrepo-release-8.1-1.el8.elrepo.noarch.rpm
    dnf --disablerepo=\* --enablerepo=elrepo -y install kernel-ml kernel-ml-devel
    grubby --default-kernel && reboot
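To check after the reboot that each node really runs a 4.18+ kernel, the sketch below compares version strings with GNU `sort -V`. It keeps only the part of `uname -r` before the first `-` (for example `5.7.7` from `5.7.7-1.el7.elrepo.x86_64`). The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if kernel release $1 (as printed by `uname -r`)
# is at least version $2, using version-aware sorting from GNU sort.
kernel_at_least() {
  local current=${1%%-*} required=$2
  # If the required version sorts first (or equal), the current kernel is new enough.
  [ "$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n1)" = "$required" ]
}
```

Usage: `kernel_at_least "$(uname -r)" 4.18 || echo "kernel too old on $(hostname)"`.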

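Install dependencies. Install ipvsadm and related tools on all nodes:

    yum install ipvsadm ipset sysstat conntrack libseccomp -y

Configure the IPVS modules on all nodes. Starting with kernel 4.19, nf_conntrack_ipv4 was merged into nf_conntrack. Use nf_conntrack_ipv4 on a 4.18 kernel (such as the stock CentOS 8 kernel), and nf_conntrack on newer kernels such as the 5.7 kernel-ml installed above:

    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack_ipv4   # nf_conntrack on kernel 4.19+

    cat /etc/modules-load.d/ipvs.conf
    ip_vs
    ip_vs_rr
    ip_vs_wrr
    ip_vs_sh
    nf_conntrack_ipv4
    ip_tables
    ip_set
    xt_set
    ipt_set
    ipt_rpfilter
    ipt_REJECT
    ipip

Then run `systemctl enable --now systemd-modules-load.service`.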
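Because the conntrack module name depends on the kernel, generating ipvs.conf from the running kernel version avoids picking the wrong one. The sketch below applies the 4.19 cutoff described above and prints the same module list. Both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: choose the conntrack module for kernel release $1.
# nf_conntrack_ipv4 was merged into nf_conntrack in kernel 4.19.
conntrack_module() {
  local ver=${1%%-*}   # "5.7.7-1.el7.elrepo.x86_64" -> "5.7.7"
  if [ "$(printf '%s\n%s\n' 4.19 "$ver" | sort -V | head -n1)" = "4.19" ]; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Hypothetical helper: print the contents of /etc/modules-load.d/ipvs.conf for kernel $1.
ipvs_modules_conf() {
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$(conntrack_module "$1")" \
    ip_tables ip_set xt_set ipt_set ipt_rpfilter ipt_REJECT ipip
}
```

Usage: `ipvs_modules_conf "$(uname -r)" > /etc/modules-load.d/ipvs.conf && systemctl enable --now systemd-modules-load.service`, then check with `lsmod | grep -e ip_vs -e nf_conntrack`.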

Enable the kernel parameters a k8s cluster needs, on all nodes. The net.bridge.* keys only exist once the br_netfilter module is loaded:

    cat <<EOF > /etc/sysctl.d/k8s.conf
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    fs.may_detach_mounts = 1
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    net.netfilter.nf_conntrack_max=2310720
    net.ipv4.tcp_keepalive_time = 600
    net.ipv4.tcp_keepalive_probes = 3
    net.ipv4.tcp_keepalive_intvl =15
    net.ipv4.tcp_max_tw_buckets = 36000
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_max_orphans = 327680
    net.ipv4.tcp_orphan_retries = 3
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_max_syn_backlog = 16384
    net.ipv4.ip_conntrack_max = 65536
    net.ipv4.tcp_timestamps = 0
    net.core.somaxconn = 16384
    EOF
    sysctl --system
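A key repeated in a long sysctl list is easy to miss, and only the last value takes effect. The sketch below prints each key that appears more than once. It assumes simple `key = value` lines with `#` or `;` comments; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print sysctl keys that appear more than once in a .conf file.
find_duplicate_sysctl_keys() {
  awk -F= '
    /^[[:space:]]*$/ || /^[[:space:]]*[#;]/ { next }   # skip blank lines and comments
    {
      key = $1
      gsub(/[[:space:]]+/, "", key)                     # "net.ipv4.ip_forward " -> "net.ipv4.ip_forward"
      if (++seen[key] == 2) print key                   # report each duplicated key once
    }' "${1:-/etc/sysctl.d/k8s.conf}"
}
```

Usage: `find_duplicate_sysctl_keys /etc/sysctl.d/k8s.conf` should print nothing. Individual values can then be spot-checked with `sysctl net.ipv4.ip_forward`.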

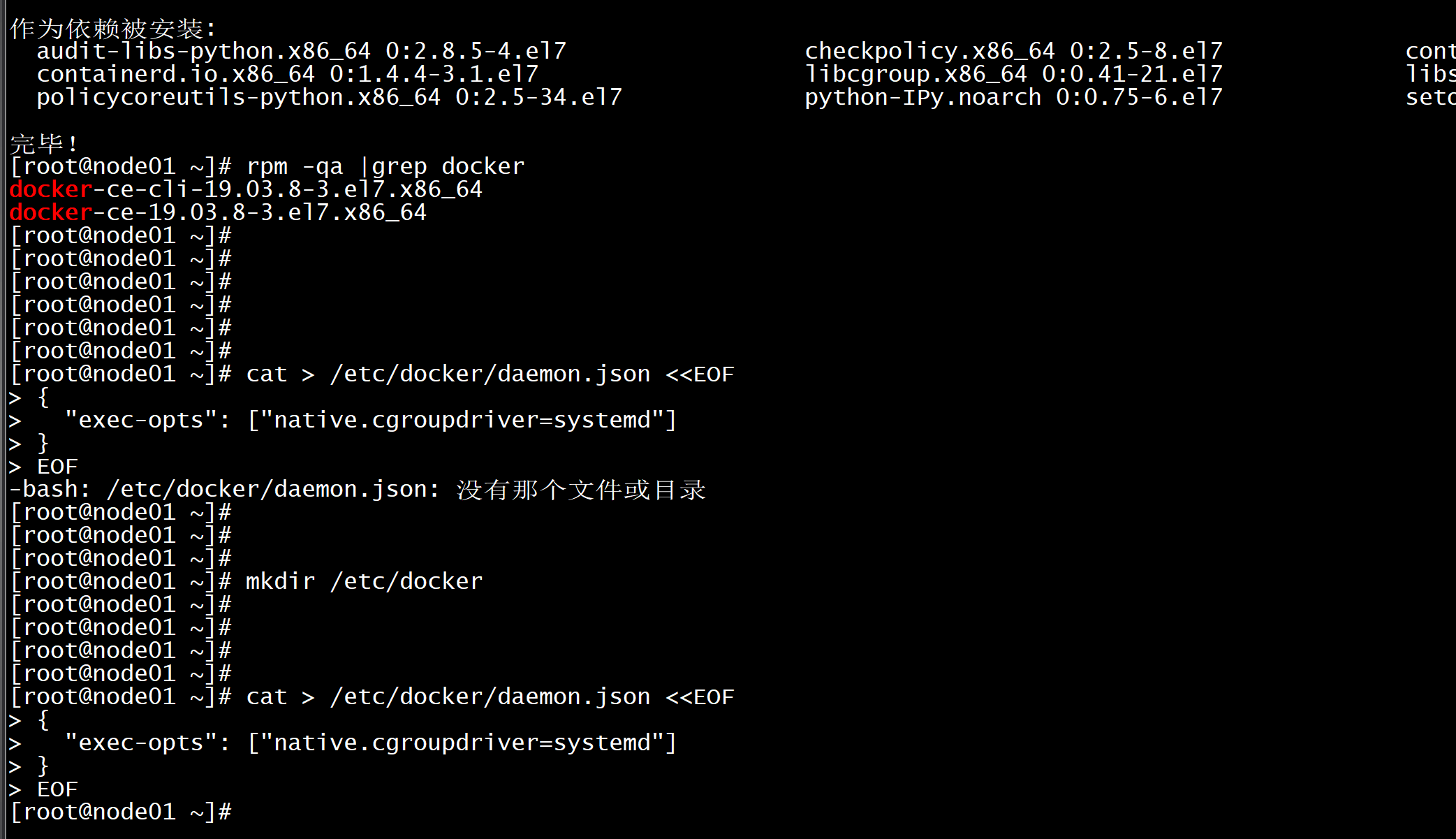

1.1.3 Basic component installation

This section installs the components the cluster uses, such as Docker-ce and the Kubernetes components. List the available docker-ce versions:

    yum list docker-ce.x86_64 --showduplicates | sort -r

Download the containerd.io package:

    [root@k8s-master01 k8s-ha-install]# wget https://download.docker.com/linux/centos/7/x86_64/edge/Packages/containerd.io-1.2.13-3.2.el7.x86_64.rpm

Install docker-ce 19.03:

    yum install -y docker-ce-cli-19.03.8-3.el7.x86_64 docker-ce-19.03.8-3.el7.x86_64

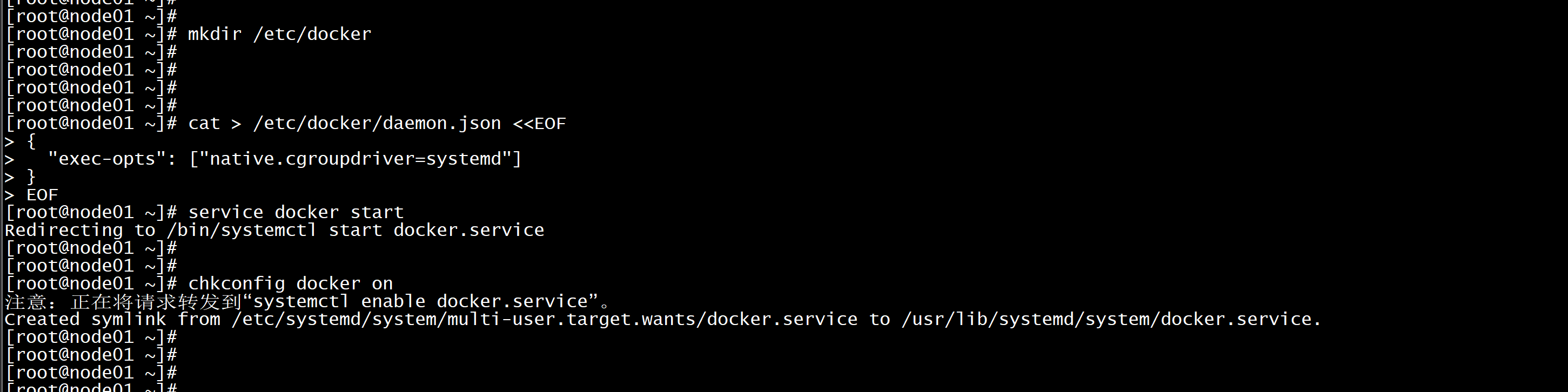

Tip: newer kubelet versions recommend the systemd cgroup driver, so change Docker's CgroupDriver to systemd:

    mkdir -p /etc/docker
    cat > /etc/docker/daemon.json <<EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF

Start Docker:

    service docker start
    chkconfig docker on

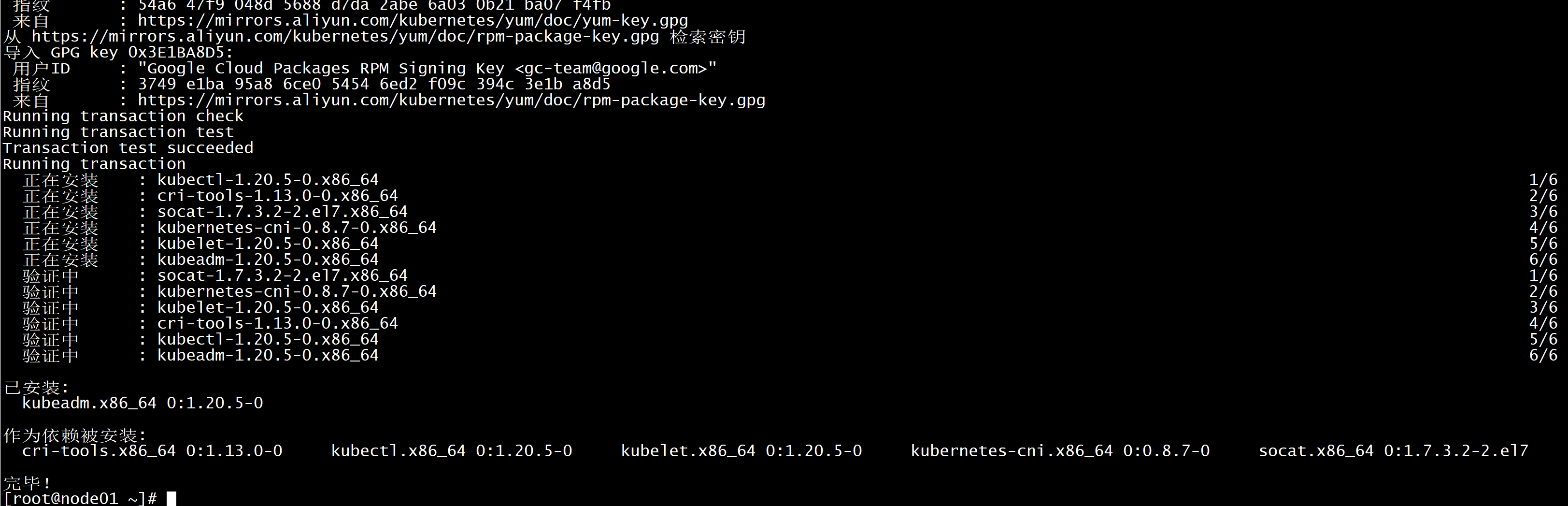

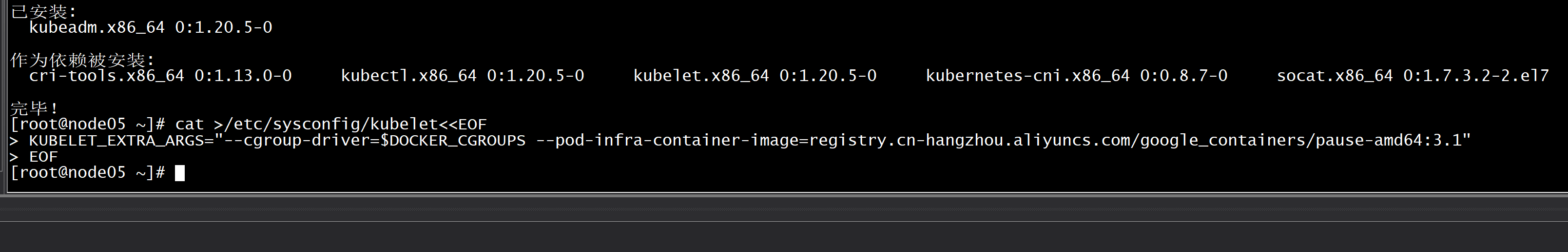

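Install the Kubernetes components. List the available kubeadm versions:

    yum list kubeadm.x86_64 --showduplicates | sort -r

Either install the latest kubeadm on all nodes:

    yum install kubeadm -y

or install a specific version of the k8s components on all nodes (this guide uses 1.20.5):

    yum install -y kubeadm-1.20.5-0.x86_64 kubectl-1.20.5-0.x86_64 kubelet-1.20.5-0.x86_64

Enable Docker at boot on all nodes:

    systemctl daemon-reload && systemctl enable --now docker

The default pause image is hosted on the gcr.io registry, which may be unreachable from mainland China. Configure the kubelet to use the Alibaba Cloud pause image instead:

    DOCKER_CGROUPS=$(docker info | grep 'Cgroup' | cut -d' ' -f4)
    cat >/etc/sysconfig/kubelet<<EOF
    KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
    EOF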
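The `grep 'Cgroup' | cut` pipeline depends on the exact layout of `docker info` output. Newer Docker releases may print an extra `Cgroup Version` line that would also match. The sketch below asks Docker for the field directly with `docker info --format '{{.CgroupDriver}}'`. The helper name and its optional image argument are hypothetical; the default image is the one used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the KUBELET_EXTRA_ARGS line for /etc/sysconfig/kubelet,
# taking the cgroup driver straight from Docker instead of parsing `docker info` text.
kubelet_extra_args() {
  local image=${1:-registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1}
  local driver
  driver=$(docker info --format '{{.CgroupDriver}}') || return 1
  printf 'KUBELET_EXTRA_ARGS="--cgroup-driver=%s --pod-infra-container-image=%s"\n' \
    "$driver" "$image"
}
```

Usage: `kubelet_extra_args > /etc/sysconfig/kubelet`. With the daemon.json change above, the driver should be `systemd`, which matches what the kubelet expects.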


Enable the kubelet at boot:

    systemctl daemon-reload
    systemctl enable --now kubelet

1.1.4 High-availability component installation

Install HAProxy and Keepalived via yum on all Master nodes:

    yum install keepalived haproxy -y

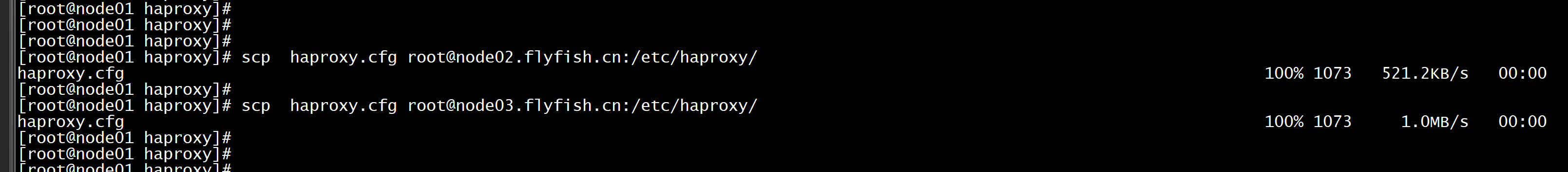

Configure HAProxy on all Master nodes. The configuration is identical on every Master node; see the HAProxy documentation for details:

    [root@k8s-master01 etc]# mkdir /etc/haproxy
    [root@k8s-master01 etc]# vim /etc/haproxy/haproxy.cfg

    global
      maxconn  2000
      ulimit-n  16384
      log  127.0.0.1 local0 err
      stats timeout 30s

    defaults
      log global
      mode  http
      option  httplog
      timeout connect 5000
      timeout client  50000
      timeout server  50000
      timeout http-request 15s
      timeout http-keep-alive 15s

    frontend monitor-in
      bind *:33305
      mode http
      option httplog
      monitor-uri /monitor

    listen stats
      bind    *:8006
      mode    http
      stats   enable
      stats   hide-version
      stats   uri       /stats
      stats   refresh   30s
      stats   realm     Haproxy\ Statistics
      stats   auth      admin:admin

    frontend k8s-master
      bind 0.0.0.0:16443
      bind 127.0.0.1:16443
      mode tcp
      option tcplog
      tcp-request inspect-delay 5s
      default_backend k8s-master

    backend k8s-master
      mode tcp
      option tcplog
      option tcp-check
      balance roundrobin
      default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
      server node01.flyfish.cn 192.168.100.11:6443  check
      server node02.flyfish.cn 192.168.100.12:6443  check
      server node03.flyfish.cn 192.168.100.13:6443  check

The configuration is the same on all three machines, so copy it to the other two:

    scp haproxy.cfg root@node02.flyfish.cn:/etc/haproxy/
    scp haproxy.cfg root@node03.flyfish.cn:/etc/haproxy/
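The backend `server` lines are the only part of haproxy.cfg that depends on the Master inventory. The sketch below generates them from `hostname=ip` pairs. The helper name and the argument format are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print HAProxy "server" lines for the k8s-master backend.
# $1 is the kube-apiserver port; each further argument is "hostname=ip".
haproxy_backend_servers() {
  local port=$1 pair name ip
  shift
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first "="
    ip=${pair#*=}      # text after the first "="
    printf '  server %s %s:%s check\n' "$name" "$ip" "$port"
  done
}
```

Usage: `haproxy_backend_servers 6443 node01.flyfish.cn=192.168.100.11 node02.flyfish.cn=192.168.100.12 node03.flyfish.cn=192.168.100.13`. Appending the output to the file only works here because `backend k8s-master` is the last section. Validate the result with `haproxy -c -f /etc/haproxy/haproxy.cfg` before starting the service.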

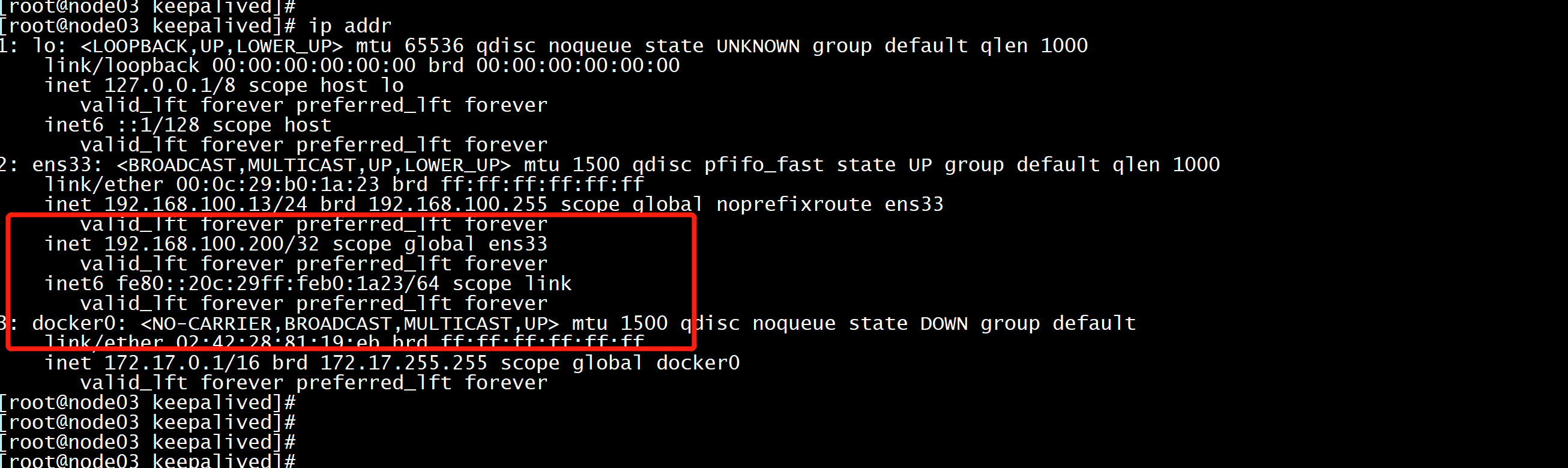

Configuration on Master01:

    [root@k8s-master01 etc]# mkdir /etc/keepalived
    [root@k8s-master01 ~]# vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 2
        weight -5
        fall 3
        rise 2
    }
    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        mcast_src_ip 192.168.100.11
        virtual_router_id 51
        priority 100
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.100.200
        }
        track_script {
            chk_apiserver
        }
    }

Configuration on Master02:

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 2
        weight -5
        fall 3
        rise 2
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        mcast_src_ip 192.168.100.12
        virtual_router_id 51
        priority 101
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.100.200
        }
        track_script {
            chk_apiserver
        }
    }

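Configuration on Master03:

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 2
        weight -5
        fall 3
        rise 2
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        mcast_src_ip 192.168.100.13
        virtual_router_id 51
        priority 102
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.100.200
        }
        track_script {
            chk_apiserver
        }
    }

Note: keep the health check disabled until the cluster has been built. Leave out the following block at first and add it back once the cluster is up:

    track_script {
        chk_apiserver
    }

Also note that keepalived elects the node with the highest `priority` as VRRP master, regardless of `state`. With the values above (100, 101, 102), the VIP therefore ends up on Master03. To keep the VIP on Master01, give Master01 the highest priority.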

Create the Keepalived health-check script:

    [root@k8s-master01 keepalived]# cat /etc/keepalived/check_apiserver.sh
    #!/bin/bash

    err=0
    for k in $(seq 1 5)
    do
        check_code=$(pgrep kube-apiserver)
        if [[ $check_code == "" ]]; then
            err=$(expr $err + 1)
            sleep 5
            continue
        else
            err=0
            break
        fi
    done

    if [[ $err != "0" ]]; then
        echo "systemctl stop keepalived"
        /usr/bin/systemctl stop keepalived
        exit 1
    else
        exit 0
    fi

Make the script executable on every Master node:

    chmod +x /etc/keepalived/check_apiserver.sh

Start haproxy and keepalived (on all Master nodes):

    [root@k8s-master01 keepalived]# systemctl enable --now haproxy
    [root@k8s-master01 keepalived]# systemctl enable --now keepalived
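Once both services are running, exactly one Master should hold the VIP 192.168.100.200. The sketch below checks this on a node by parsing the one-line output of `ip -4 -o addr show`. It assumes the interface name `ens33` from the keepalived configuration; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if interface $1 currently carries IPv4 address $2.
# Field 4 of `ip -4 -o addr show` is the address in CIDR form, e.g. 192.168.100.200/32.
has_vip() {
  ip -4 -o addr show dev "$1" |
    awk -v vip="$2" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}
```

Usage: `has_vip ens33 192.168.100.200 && echo "VIP is on $(hostname)"`. Running this on each Master shows which one keepalived elected.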

Cluster initialization (reference: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/)

The kubeadm-config.yaml for the Master nodes is as follows (on Master01):

    apiVersion: kubeadm.k8s.io/v1beta2
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: 7t2weq.bjbawausm0jaxury
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.100.11
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      name: node01.flyfish.cn
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiServer:
      certSANs:
      - 192.168.100.200
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta2
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: 192.168.100.200:16443
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.20.5
    networking:
      dnsDomain: cluster.local
      podSubnet: 172.168.100.0/16
      serviceSubnet: 10.96.0.0/12
    scheduler: {}
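`podSubnet`, `serviceSubnet`, and the node network must not overlap, or routing inside the cluster breaks in confusing ways. The sketch below tests two IPv4 CIDR blocks for overlap by comparing them under the shorter prefix. This also makes host bits such as the `.100` in `172.168.100.0/16` irrelevant. It assumes the node network is 192.168.100.0/24 (only the host addresses appear in /etc/hosts). Both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking that two IPv4 CIDR blocks do not overlap.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Two CIDR blocks overlap iff they agree on the bits of the shorter prefix.
# Returns 0 (true) when they overlap, 1 otherwise.
cidrs_overlap() {
  local a_ip=${1%/*} a_len=${1#*/} b_ip=${2%/*} b_len=${2#*/}
  local len=$(( a_len < b_len ? a_len : b_len ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local a b
  a=$(ip_to_int "$a_ip")
  b=$(ip_to_int "$b_ip")
  (( (a & mask) == (b & mask) ))
}
```

Usage: `cidrs_overlap 172.168.100.0/16 10.96.0.0/12 && echo "podSubnet overlaps serviceSubnet"`. For the values in this file, none of the three pairs overlaps.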

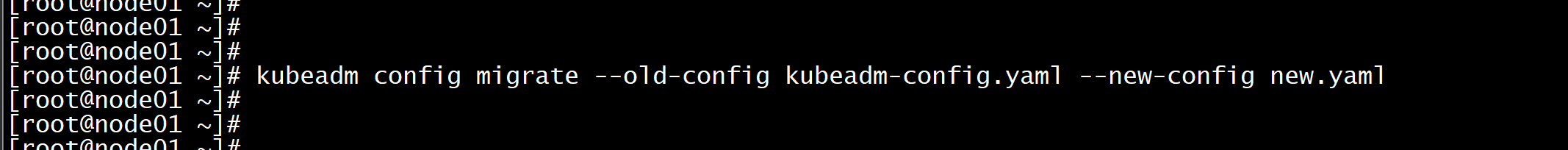

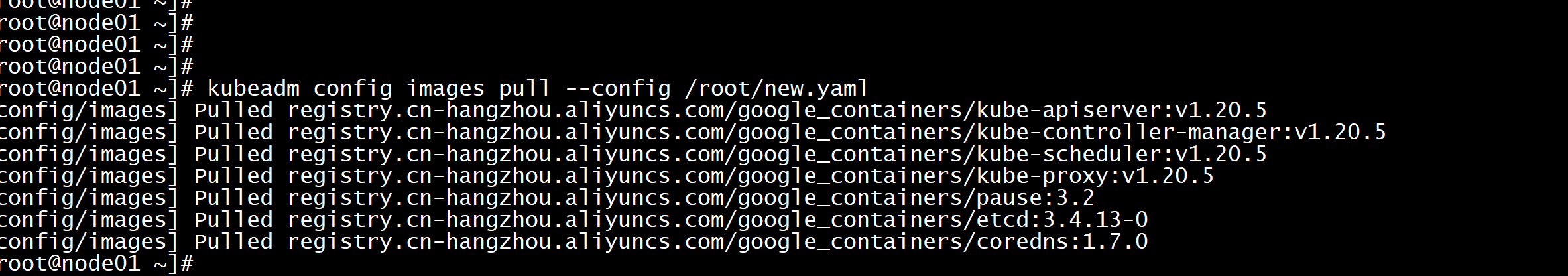

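Migrate the kubeadm configuration file to the current format:

    kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

Pre-pull the images on all Master nodes to shorten initialization (Master nodes only):

    kubeadm config images pull --config /root/new.yaml

Enable the kubelet at boot on all nodes:

    systemctl enable --now kubelet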

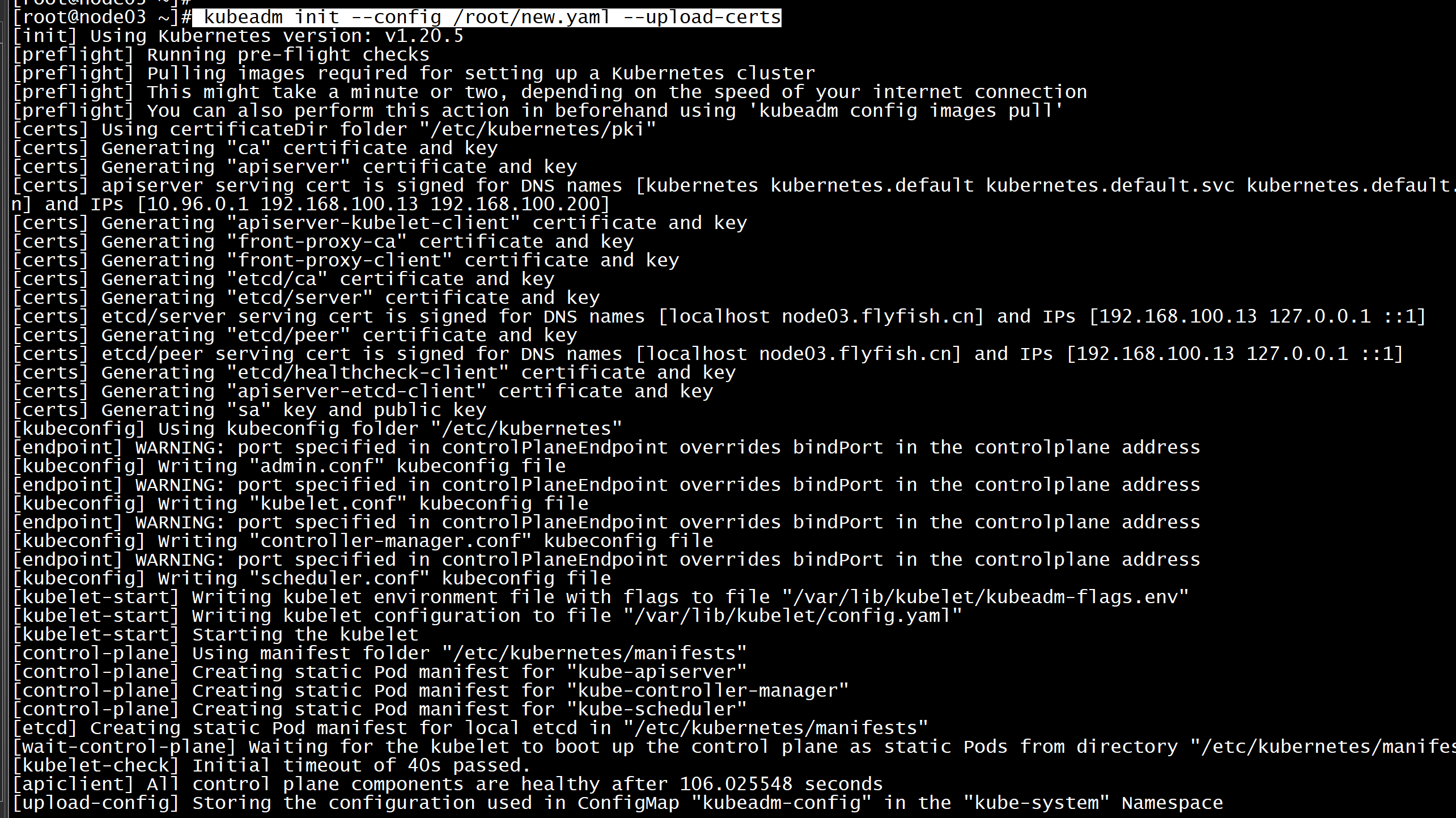

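Initialize Master01. Initialization generates the certificates and configuration files under /etc/kubernetes; the other Master nodes then only need to join Master01:

    kubeadm init --config /root/kubeadm-config.yaml --upload-certs

To initialize without a configuration file:

    kubeadm init --control-plane-endpoint "LOAD_BALANCER_DNS:LOAD_BALANCER_PORT" --upload-certs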

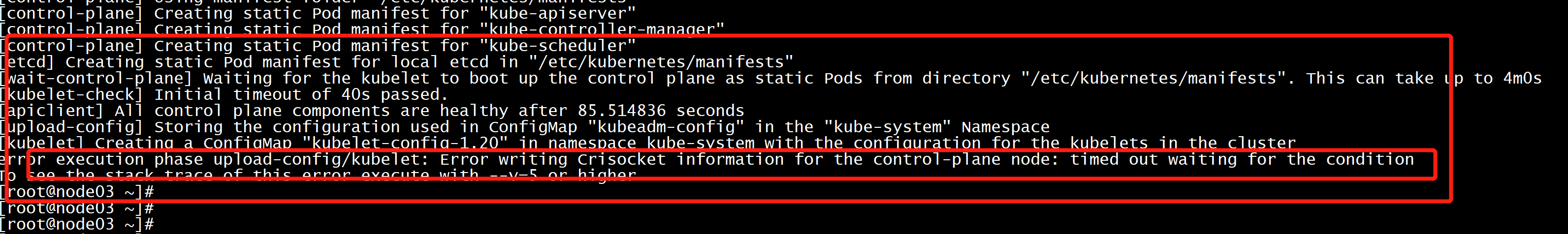

Initialization failed with the following error:

    error execution phase upload-config/kubelet: Error writing Crisocket information for the control-plane node: timed out waiting for the condition
    To see the stack trace of this error execute with --v=5 or higher

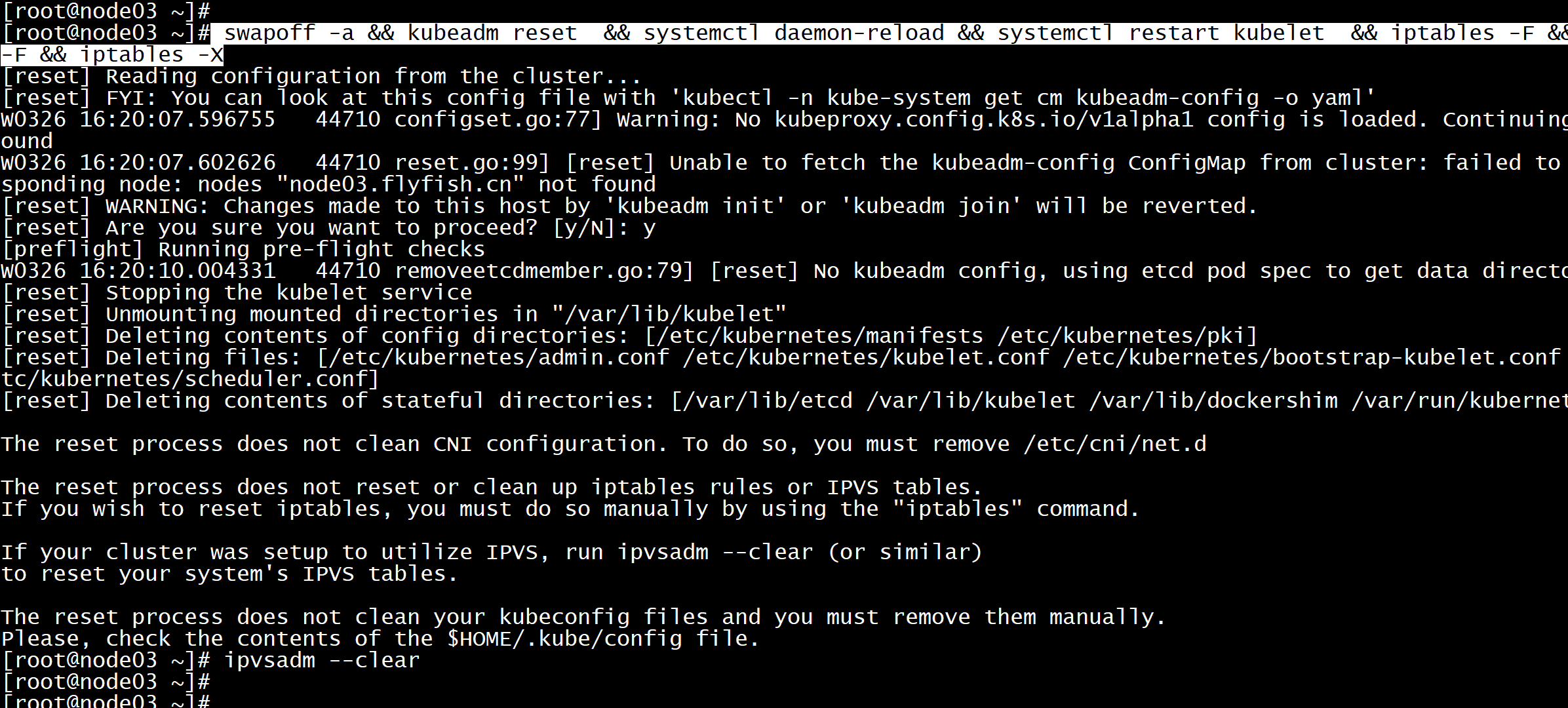

Fix: stop the kubelet on all hosts:

    service kubelet stop

Then run:

    swapoff -a && kubeadm reset && systemctl daemon-reload && systemctl restart kubelet && iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
    ipvsadm --clear

Initialize again:

    kubeadm init --config /root/new.yaml --upload-certs

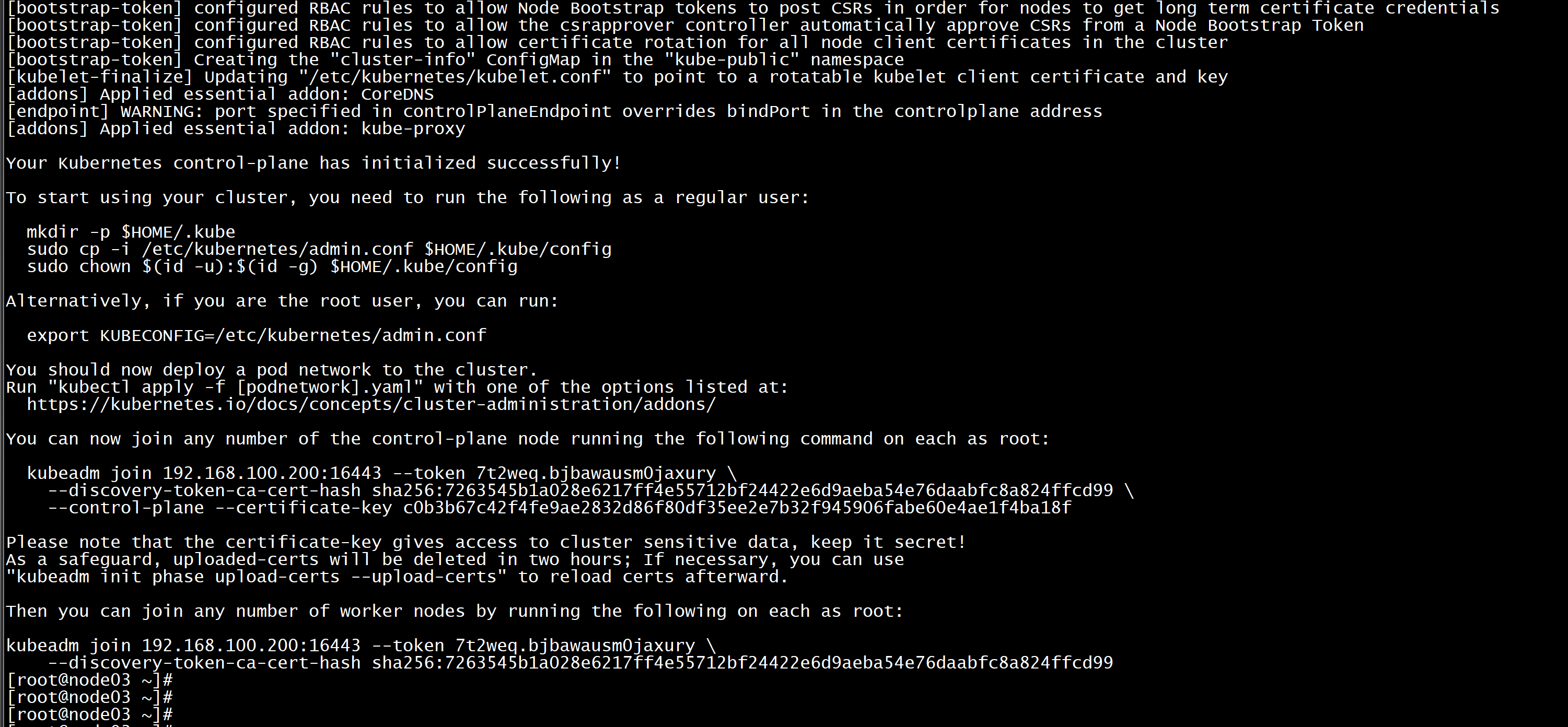

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of the control-plane node running the following command on each as root:

      kubeadm join 192.168.100.200:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:7263545b1a028e6217ff4e55712bf24422e6d9aeba54e76daabfc8a824ffcd99 \
        --control-plane --certificate-key c0b3b67c42f4fe9ae2832d86f80df35ee2e7b32f945906fabe60e4ae1f4ba18f

    Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
    As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
    "kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.100.200:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:7263545b1a028e6217ff4e55712bf24422e6d9aeba54e76daabfc8a824ffcd99

Configure kubectl on Master01:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

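Set the environment variable on all Master nodes so they can access the Kubernetes cluster:

    cat <<EOF >> /root/.bashrc
    export KUBECONFIG=/etc/kubernetes/admin.conf
    EOF
    source /root/.bashrc

Check the node status:

    [root@k8s-master01 ~]# kubectl get nodes
    NAME           STATUS     ROLES    AGE   VERSION
    k8s-master01   NotReady   master   14m   v1.12.3

With a kubeadm-based installation, all system components run as containers in the kube-system namespace. Check the Pod status at this point:

    kubectl get pod -n kube-system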

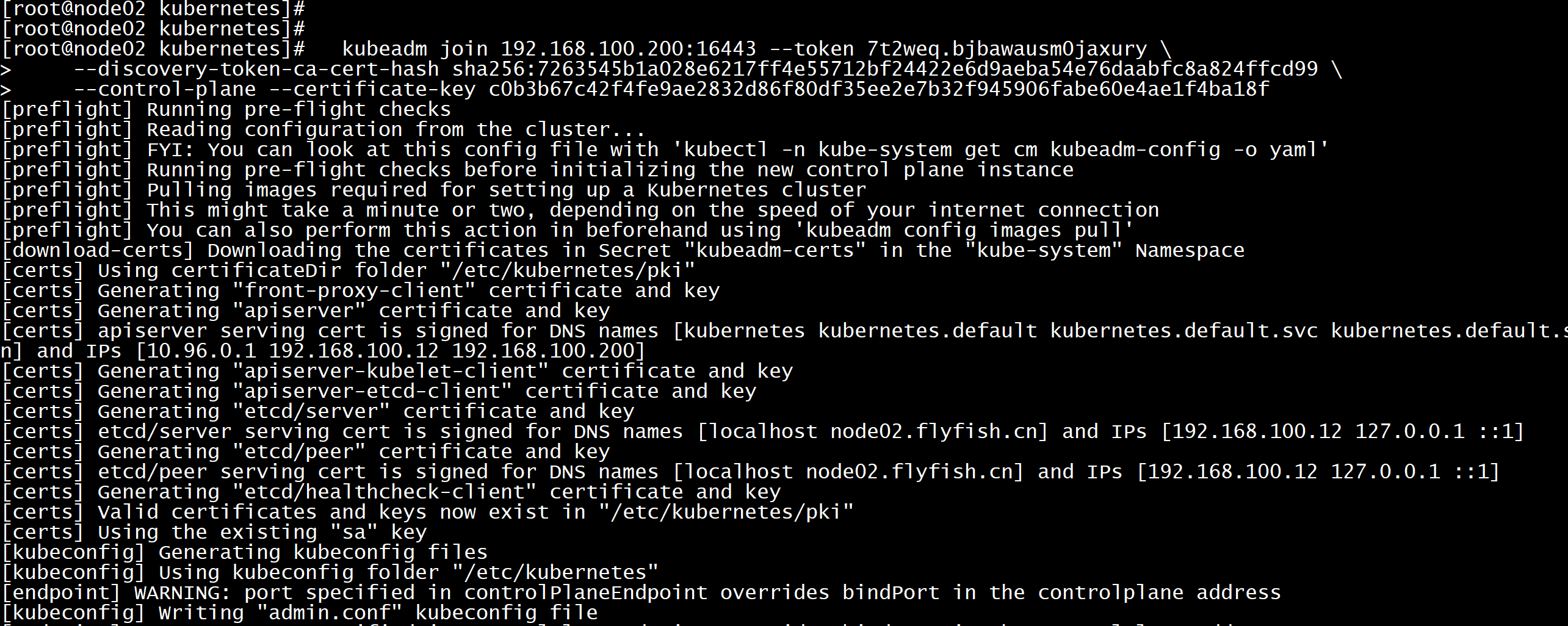

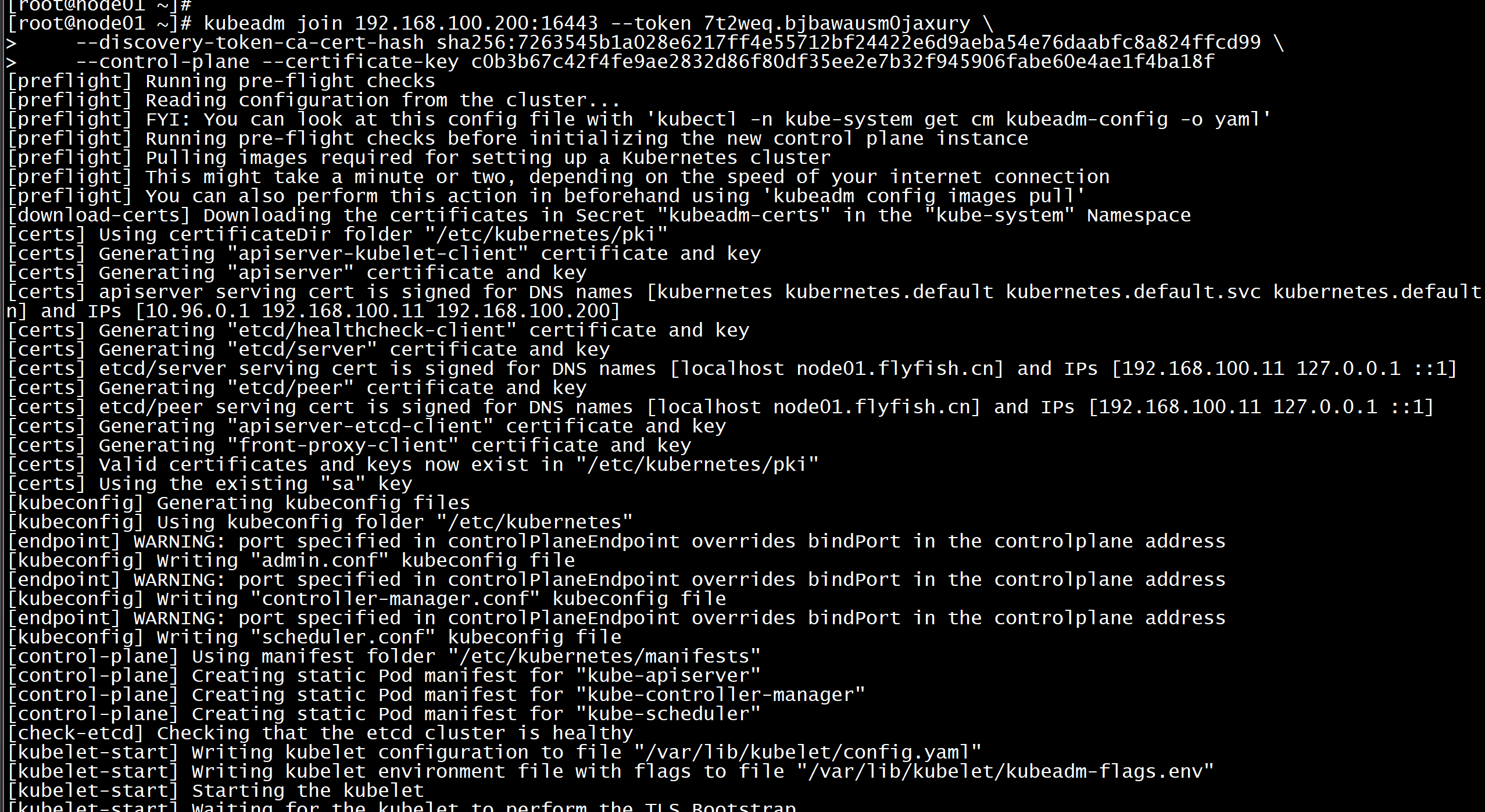

Join the other Master nodes to the cluster:

    kubeadm join 192.168.100.200:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:7263545b1a028e6217ff4e55712bf24422e6d9aeba54e76daabfc8a824ffcd99 \
        --control-plane --certificate-key c0b3b67c42f4fe9ae2832d86f80df35ee2e7b32f945906fabe60e4ae1f4ba18f

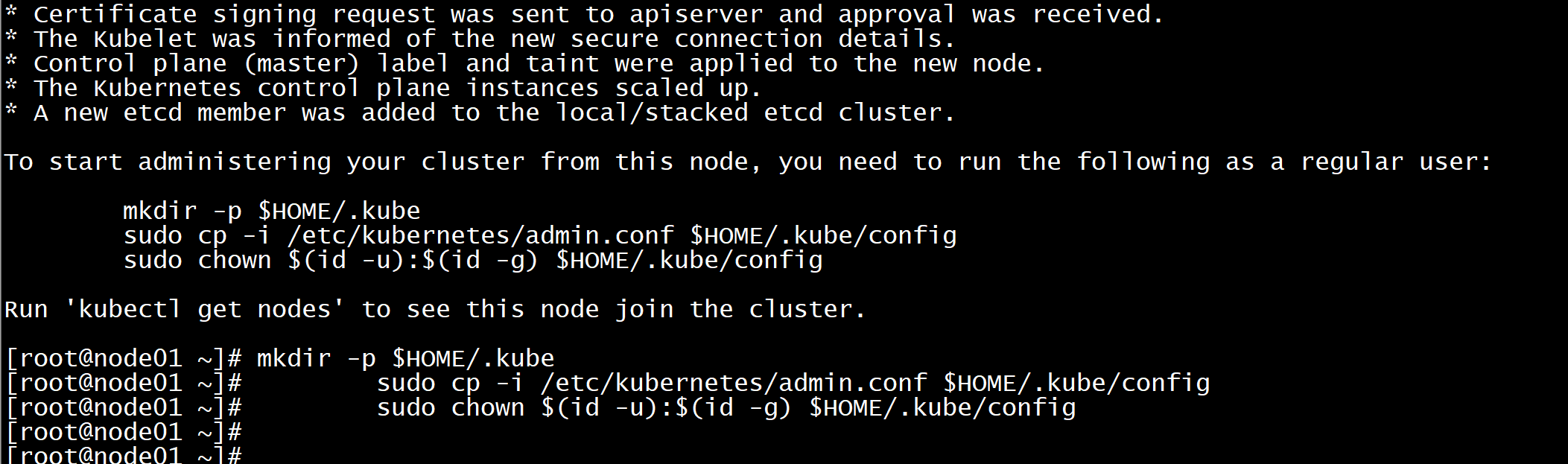

Configure kubectl on the newly joined Master node:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

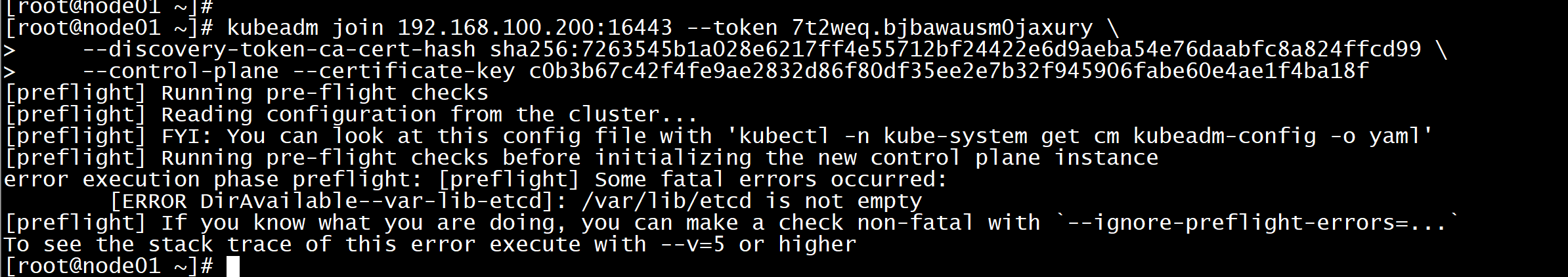

Joining a Master node failed with:

    [ERROR DirAvailable--var-lib-etcd]: /var/lib/etcd is not empty
    [preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
    To see the stack trace of this error execute with --v=5 or higher

Remove the leftover etcd data on that node:

    rm -rf /var/lib/etcd

Join again:

    kubeadm join 192.168.100.200:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:7263545b1a028e6217ff4e55712bf24422e6d9aeba54e76daabfc8a824ffcd99 \
        --control-plane --certificate-key c0b3b67c42f4fe9ae2832d86f80df35ee2e7b32f945906fabe60e4ae1f4ba18f

Configure kubectl on that node:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

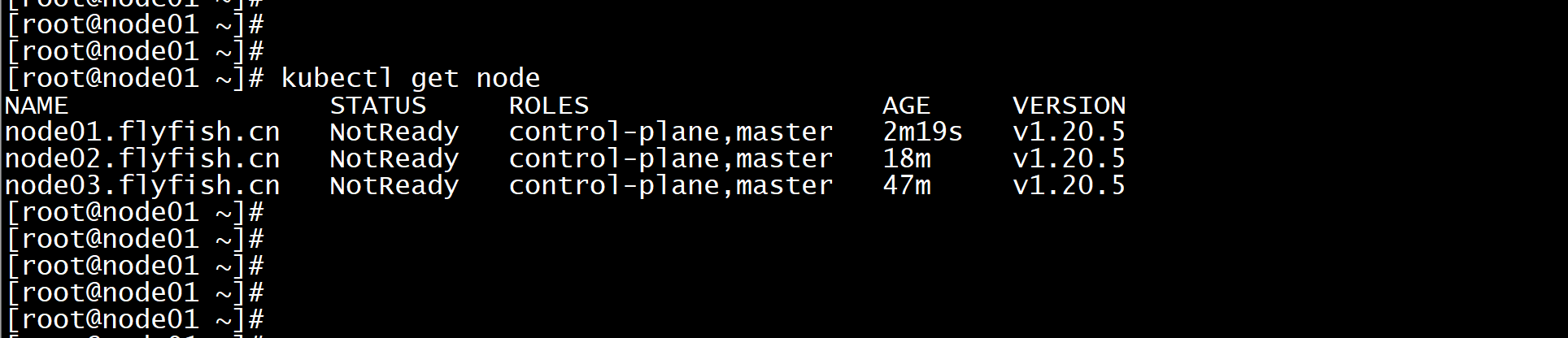

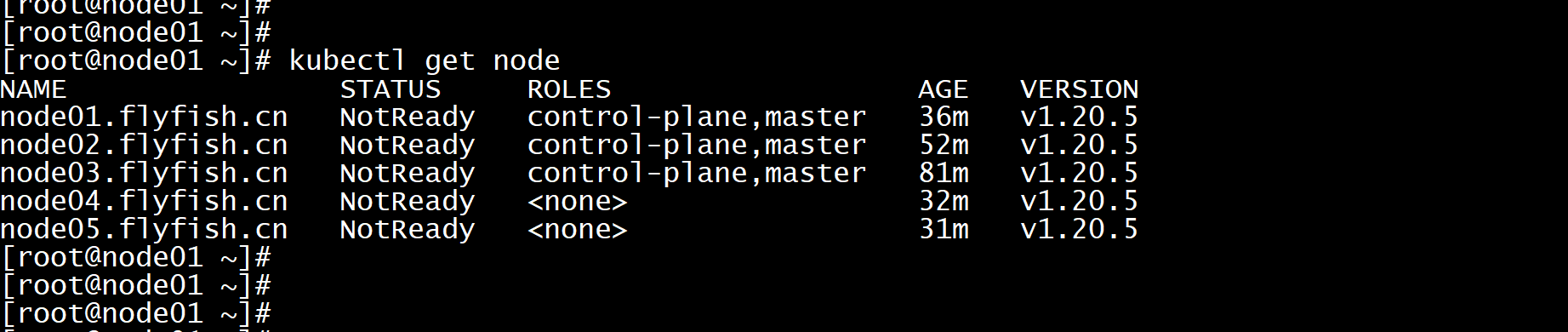

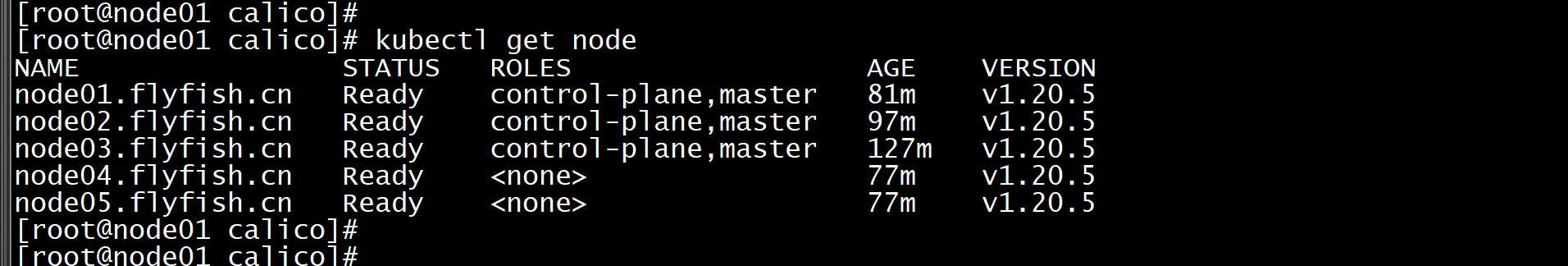

kubectl get node

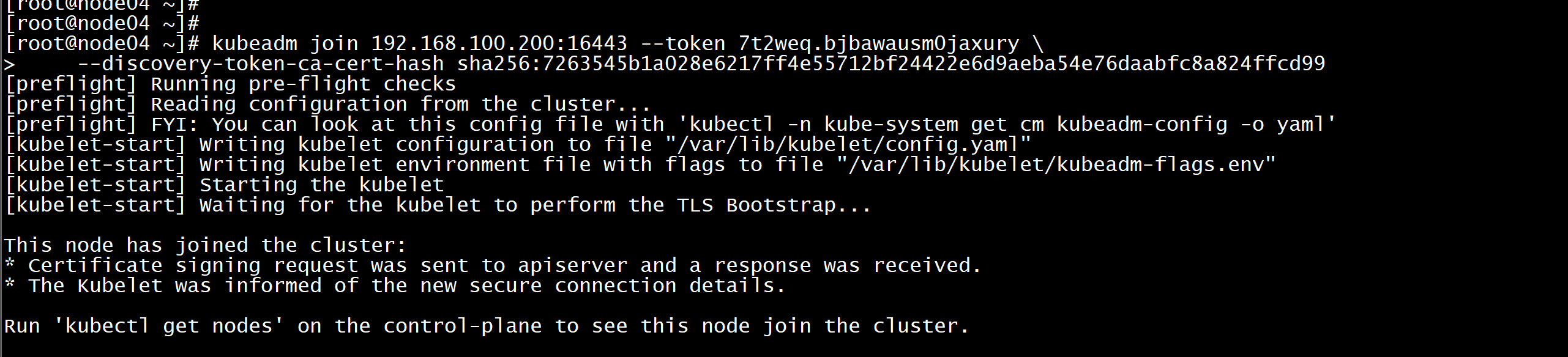

Join the worker nodes:

    kubeadm join 192.168.100.200:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:7263545b1a028e6217ff4e55712bf24422e6d9aeba54e76daabfc8a824ffcd99

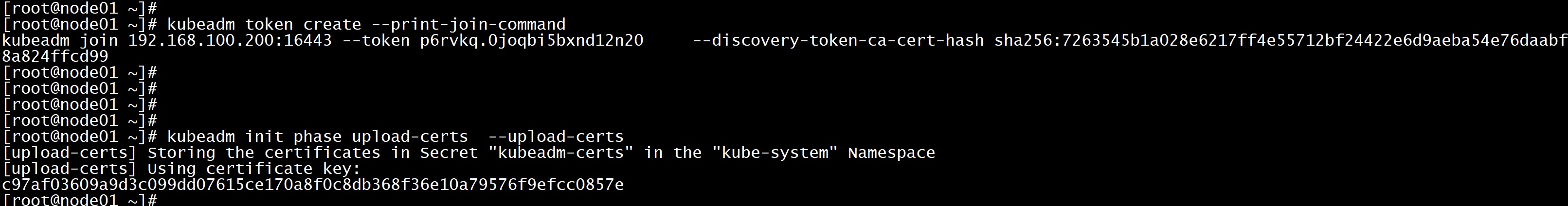

Generate a new token after the old one expires (needed when scaling the cluster out or in):

    kubeadm token create --print-join-command

Output:

    kubeadm join 192.168.100.200:16443 --token p6rvkq.0joqbi5bxnd12n20 --discovery-token-ca-cert-hash sha256:7263545b1a028e6217ff4e55712bf24422e6d9aeba54e76daabfc8a824ffcd99

A Master node also needs a new --certificate-key:

    kubeadm init phase upload-certs --upload-certs

Output:

    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key:
    c97af03609a9d3c099dd07615ce170a8f0c8db368f36e10a79576f9efcc0857e

Join the other Master nodes to the cluster:

    kubeadm join 192.168.100.200:16443 --token p6rvkq.0joqbi5bxnd12n20 --discovery-token-ca-cert-hash sha256:7263545b1a028e6217ff4e55712bf24422e6d9aeba54e76daabfc8a824ffcd99 --control-plane --certificate-key c97af03609a9d3c099dd07615ce170a8f0c8db368f36e10a79576f9efcc0857e
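The `--discovery-token-ca-cert-hash` value stays the same when a token is regenerated, because it is the SHA-256 hash of the cluster CA's public key. That is why both join commands above carry the same hash. If only a bare token is at hand, the hash can be recomputed on a Master node with the openssl pipeline shown in the kubeadm documentation. The sketch below wraps that pipeline as a helper; the helper name is hypothetical, and it assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute the hex SHA-256 of the CA public key
# (DER-encoded SubjectPublicKeyInfo), as used by --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "${1:-/etc/kubernetes/pki/ca.crt}" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'    # drop the "(stdin)= " style prefix, keep the hex digest
}
```

Usage: `kubeadm join 192.168.100.200:16443 --token <new-token> --discovery-token-ca-cert-hash sha256:$(ca_cert_hash)`.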

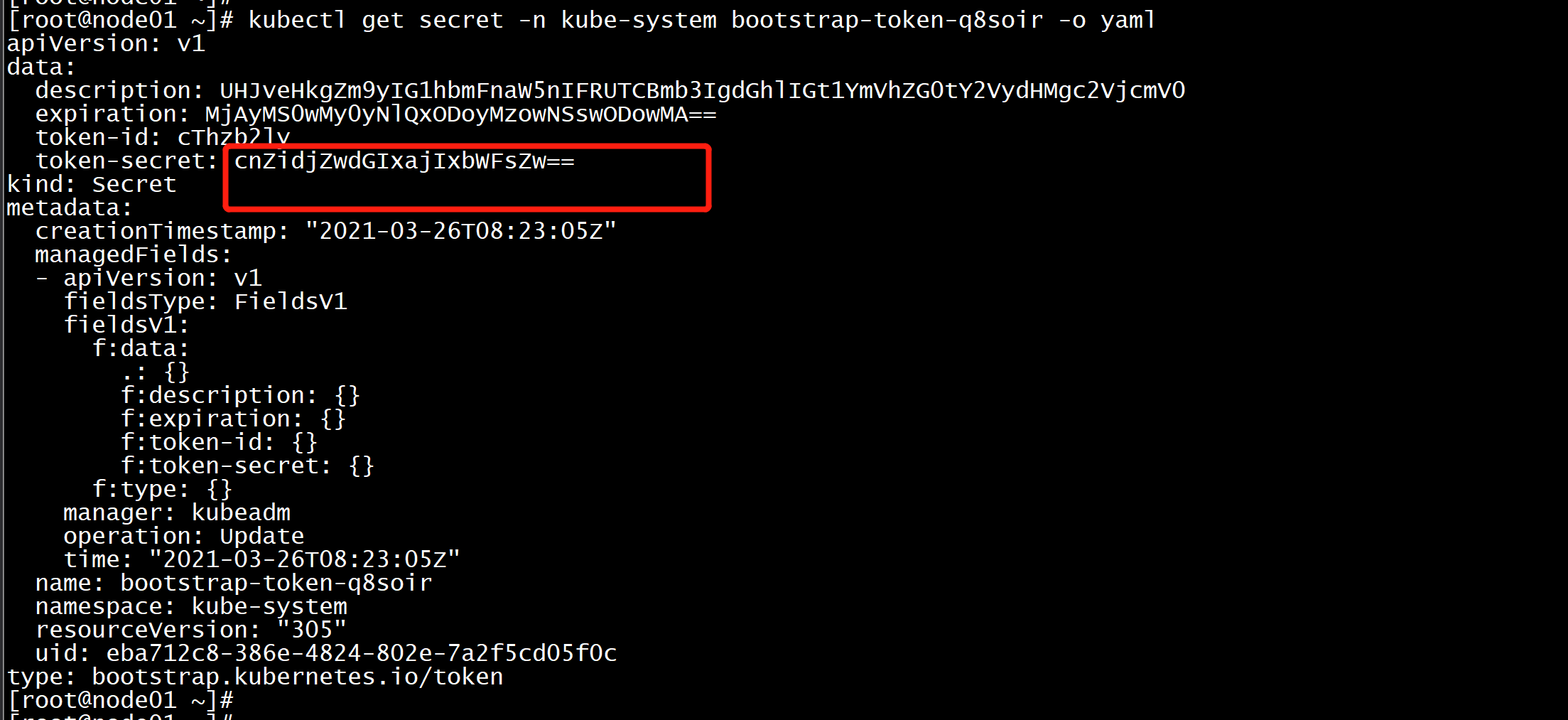

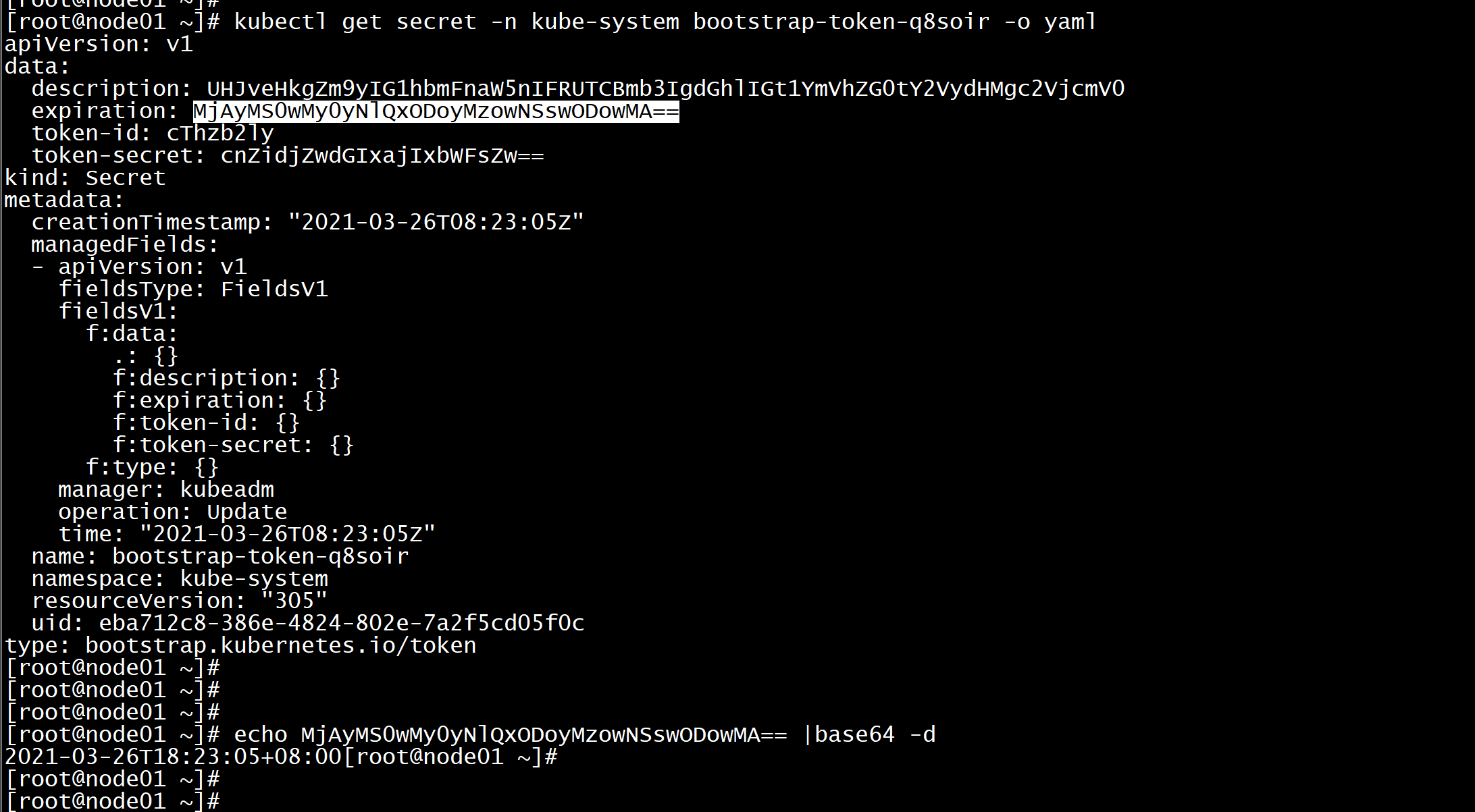

Check the token expiration time:

    kubectl get secret -n kube-system -o wide
    kubectl get secret -n kube-system bootstrap-token-q8soir -o yaml

    apiVersion: v1
    data:
      description: UHJveHkgZm9yIG1hbmFnaW5nIFRUTCBmb3IgdGhlIGt1YmVhZG0tY2VydHMgc2VjcmV0
      expiration: MjAyMS0wMy0yNlQxODoyMzowNSswODowMA==
      token-id: cThzb2ly
      token-secret: cnZidjZwdGIxajIxbWFsZw==
    kind: Secret
    metadata:
      creationTimestamp: "2021-03-26T08:23:05Z"
      managedFields:
      - apiVersion: v1
        fieldsType: FieldsV1
        fieldsV1:
          f:data:
            .: {}
            f:description: {}
            f:expiration: {}
            f:token-id: {}
            f:token-secret: {}
          f:type: {}
        manager: kubeadm
        operation: Update
        time: "2021-03-26T08:23:05Z"
      name: bootstrap-token-q8soir
      namespace: kube-system
      resourceVersion: "305"
      uid: eba712c8-386e-4824-802e-7a2f5cd05f0c
    type: bootstrap.kubernetes.io/token

The `expiration` field is base64-encoded. Decode it:

    echo MjAyMS0wMy0yNlQxODoyMzowNSswODowMA== |base64 -d

This prints `2021-03-26T18:23:05+08:00`.
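The lookup and the decoding can be combined with a JSONPath query. `kubeadm token list` also shows each token's TTL and expiry directly. The sketch below assumes the Secret naming scheme seen above (`bootstrap-token-<token-id>` in `kube-system`); the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded expiration of a bootstrap token.
# $1 is the token ID, i.e. the part after "bootstrap-token-" in the Secret name.
bootstrap_token_expiry() {
  kubectl -n kube-system get secret "bootstrap-token-$1" \
    -o jsonpath='{.data.expiration}' | base64 -d
  echo   # the decoded value has no trailing newline
}
```

Usage: `bootstrap_token_expiry q8soir`.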

kubectl get node
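Until a CNI plugin such as Calico is deployed, nodes are expected to report `NotReady`. After the network step they should all turn `Ready`. `kubectl wait --for=condition=Ready node --all --timeout=300s` blocks until that happens. For a quick list of the stragglers, the sketch below filters `kubectl get nodes` output; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of nodes whose STATUS is not exactly "Ready".
# Column 2 of `kubectl get nodes --no-headers` is STATUS; note that a cordoned
# node shows "Ready,SchedulingDisabled" and is therefore listed as well.
not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1 }'
}
```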

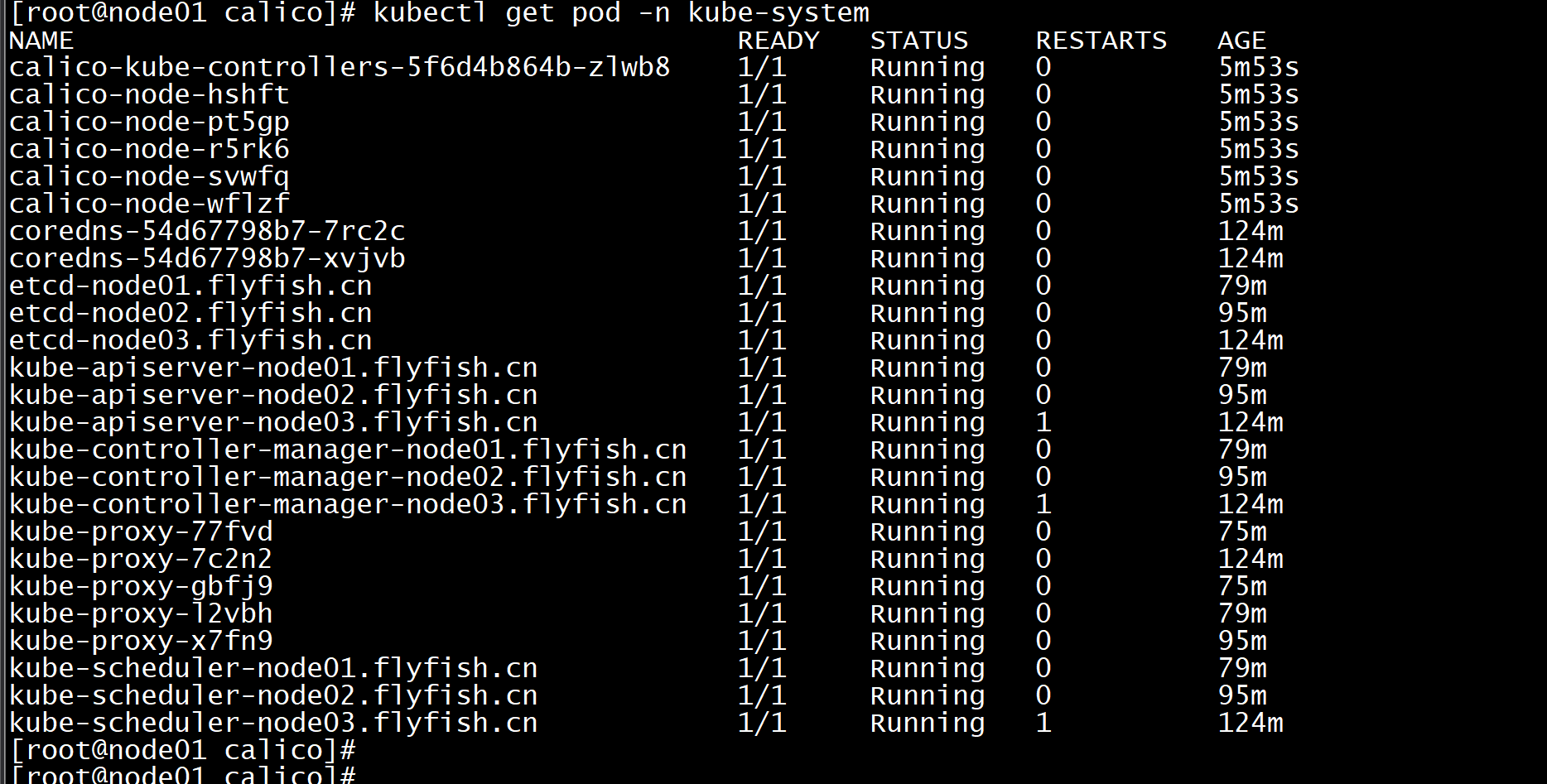

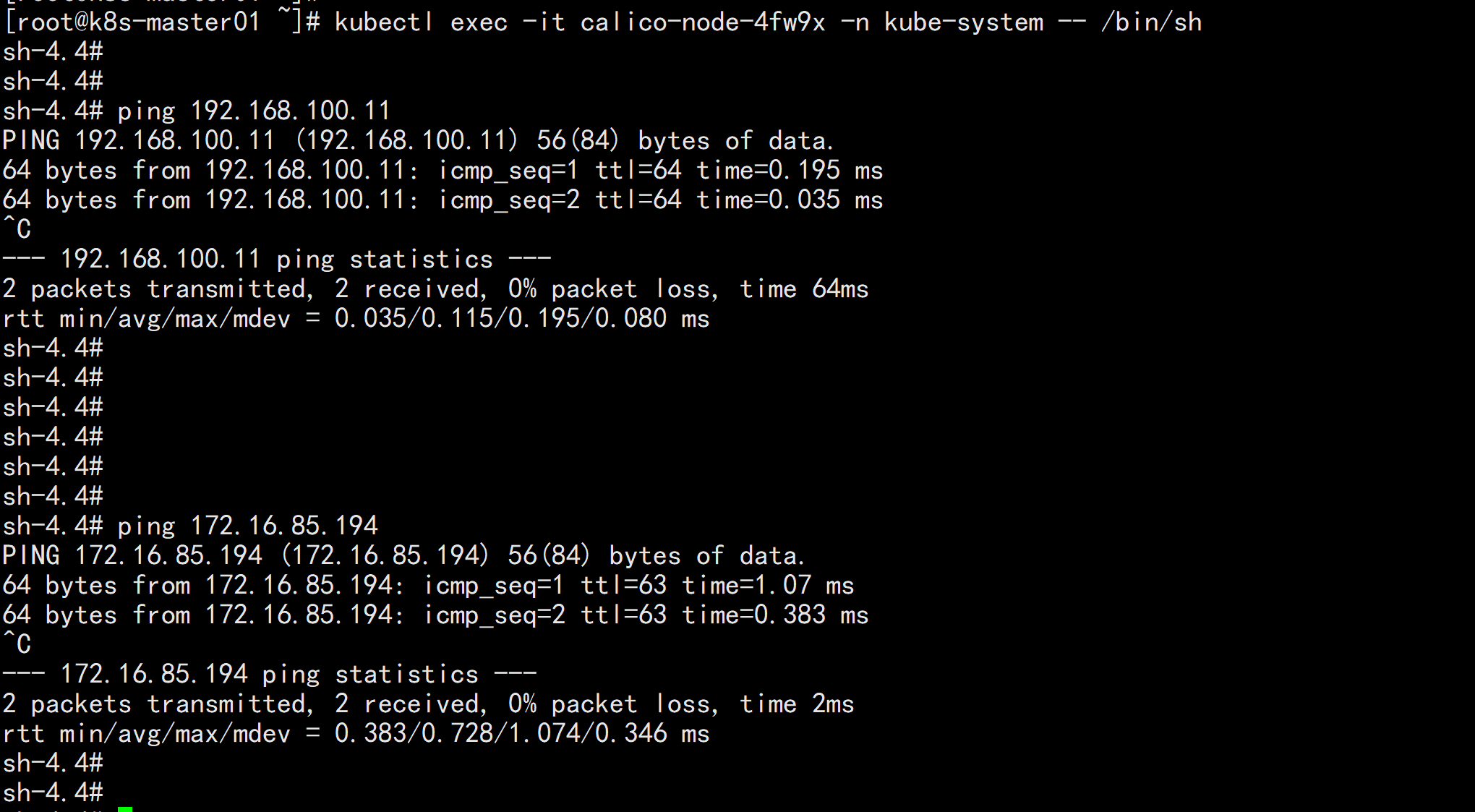

Modify the following fields in calico-etcd.yaml:

    cd /root/k8s-ha-install && git checkout manual-installation-v1.20.x && cd calico/
    sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://192.168.100.11:2379,https://192.168.100.12:2379,https://192.168.100.13:2379"#g' calico-etcd.yaml

    ETCD_CA=`cat /etc/kubernetes/pki/etcd/ca.crt | base64 | tr -d '\n'`
    ETCD_CERT=`cat /etc/kubernetes/pki/etcd/server.crt | base64 | tr -d '\n'`
    ETCD_KEY=`cat /etc/kubernetes/pki/etcd/server.key | base64 | tr -d '\n'`
    sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

    sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

    POD_SUBNET=`cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cluster-cidr= | awk -F= '{print $NF}'`
    sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "192.168.0.0/16"@ value: '"${POD_SUBNET}"'@g' calico-etcd.yaml

Apply the manifest and check the result:

    kubectl apply -f calico-etcd.yaml
    kubectl get pod -n kube-system
    kubectl get node
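The `sed` edits fail silently if a pattern does not match, for example because of different spacing in the manifest. Checking for leftover placeholders before `kubectl apply` catches this. The sketch below assumes the placeholder strings are exactly the ones the `sed` commands above search for; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report placeholders that the sed edits above should have
# replaced in calico-etcd.yaml. Returns 1 if any placeholder is still present.
check_calico_manifest() {
  local f=${1:-calico-etcd.yaml} rc=0 p
  for p in '<ETCD_IP>' '# etcd-key: null' '# etcd-cert: null' '# etcd-ca: null' \
           'etcd_ca: ""' 'etcd_cert: ""' 'etcd_key: ""' '# - name: CALICO_IPV4POOL_CIDR'; do
    if grep -qF -- "$p" "$f"; then   # -F: plain substring match, no regex
      echo "unreplaced: $p"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_calico_manifest calico-etcd.yaml && kubectl apply -f calico-etcd.yaml`.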

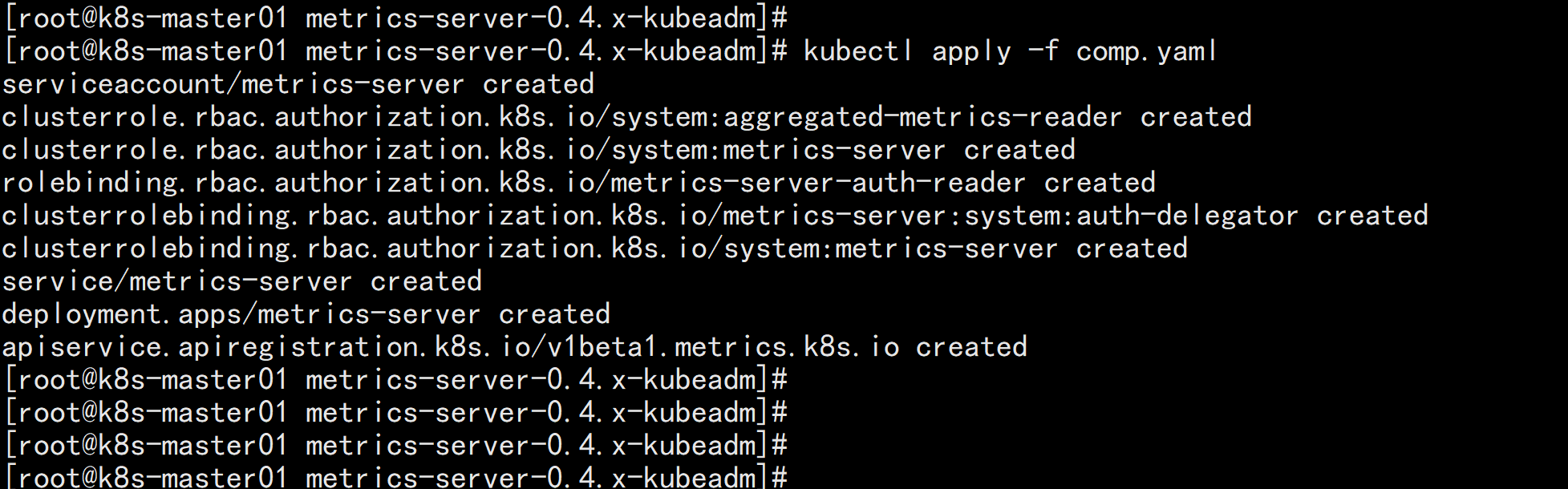

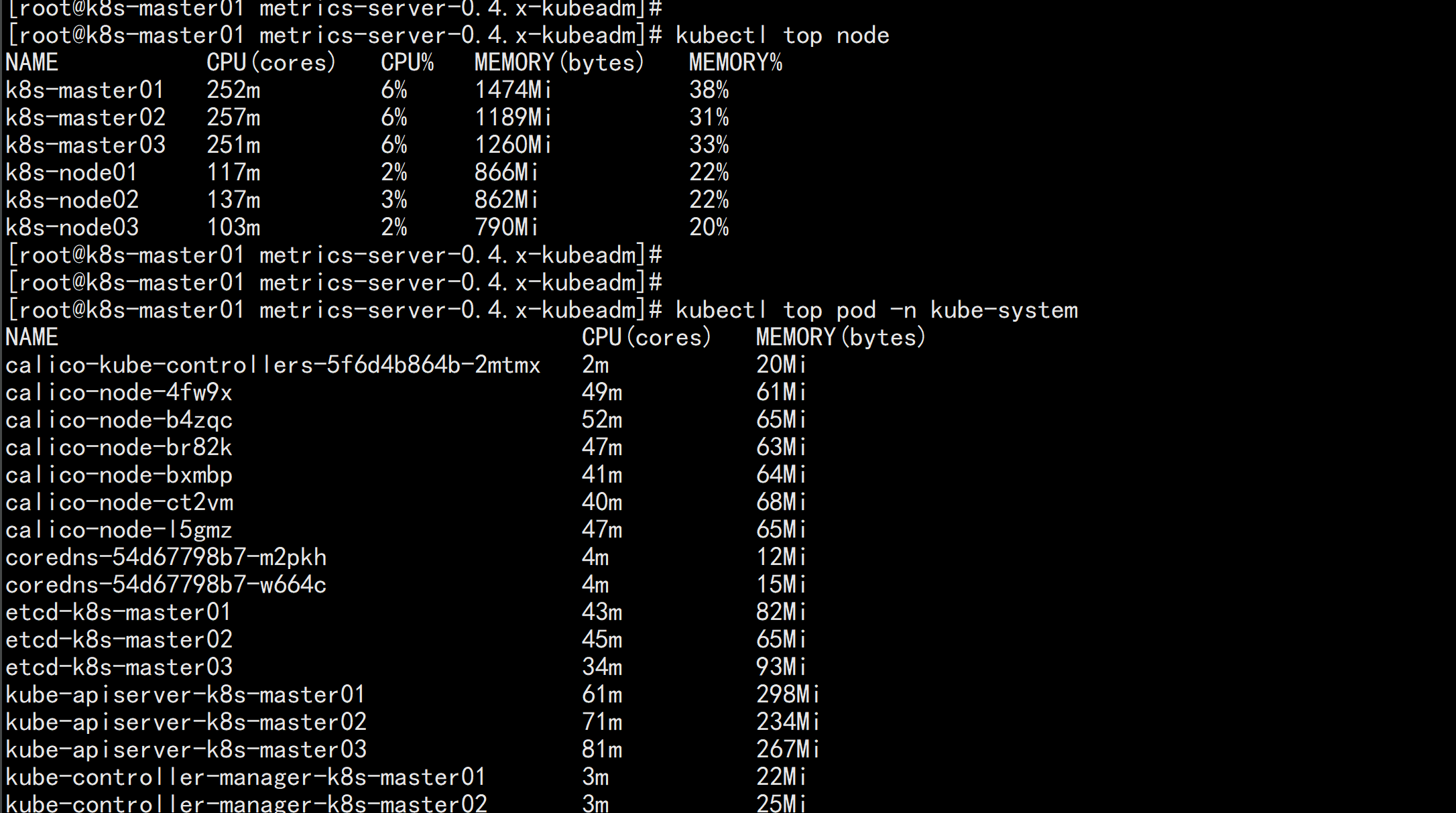

Configure metrics-server:

    cd /root/k8s-ha-install/metrics-server-0.4.x-kubeadm
    vim comp.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
        rbac.authorization.k8s.io/aggregate-to-admin: "true"
        rbac.authorization.k8s.io/aggregate-to-edit: "true"
        rbac.authorization.k8s.io/aggregate-to-view: "true"
      name: system:aggregated-metrics-reader
    rules:
    - apiGroups:
      - metrics.k8s.io
      resources:
      - pods
      - nodes
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      - configmaps
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server-auth-reader
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server:system:auth-delegator
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      ports:
      - name: https
        port: 443
        protocol: TCP
        targetPort: https
      selector:
        k8s-app: metrics-server
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
      strategy:
        rollingUpdate:
          maxUnavailable: 0
      template:
        metadata:
          labels:
            k8s-app: metrics-server
        spec:
          containers:
          - args:
            - --cert-dir=/tmp
            - --secure-port=4443
            - --metric-resolution=30s
            - --kubelet-insecure-tls
            - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
            # - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt # change to front-proxy-ca.crt for kubeadm
            - --requestheader-username-headers=X-Remote-User
            - --requestheader-group-headers=X-Remote-Group
            - --requestheader-extra-headers-prefix=X-Remote-Extra-
            image: registry.cn-beijing.aliyuncs.com/dotbalo/metrics-server:v0.4.1
            imagePullPolicy: IfNotPresent
            livenessProbe:
              failureThreshold: 3
              httpGet:
                path: /livez
                port: https
                scheme: HTTPS
              periodSeconds: 10
            name: metrics-server
            ports:
            - containerPort: 4443
              name: https
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /readyz
                port: https
                scheme: HTTPS
              periodSeconds: 10
            securityContext:
              readOnlyRootFilesystem: true
              runAsNonRoot: true
              runAsUser: 1000
            volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
            - name: ca-ssl
              mountPath: /etc/kubernetes/pki
          nodeSelector:
            kubernetes.io/os: linux
          priorityClassName: system-cluster-critical
          serviceAccountName: metrics-server
          volumes:
          - emptyDir: {}
            name: tmp-dir
          - name: ca-ssl
            hostPath:
              path: /etc/kubernetes/pki
    ---
    apiVersion: apiregistration.k8s.io/v1
    kind: APIService
    metadata:
      labels:
        k8s-app: metrics-server
      name: v1beta1.metrics.k8s.io
    spec:
      group: metrics.k8s.io
      groupPriorityMinimum: 100
      insecureSkipTLSVerify: true
      service:
        name: metrics-server
        namespace: kube-system
      version: v1beta1
      versionPriority: 100

Apply it:

    kubectl apply -f comp.yaml
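`kubectl top node` only works once the aggregated `v1beta1.metrics.k8s.io` APIService reports `Available`. The sketch below reads that condition with a JSONPath filter; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the metrics.k8s.io APIService registered by
# comp.yaml reports the condition Available=True.
metrics_api_available() {
  local status
  status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}')
  [ "$status" = "True" ]
}
```

Usage: `metrics_api_available && kubectl top node`.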

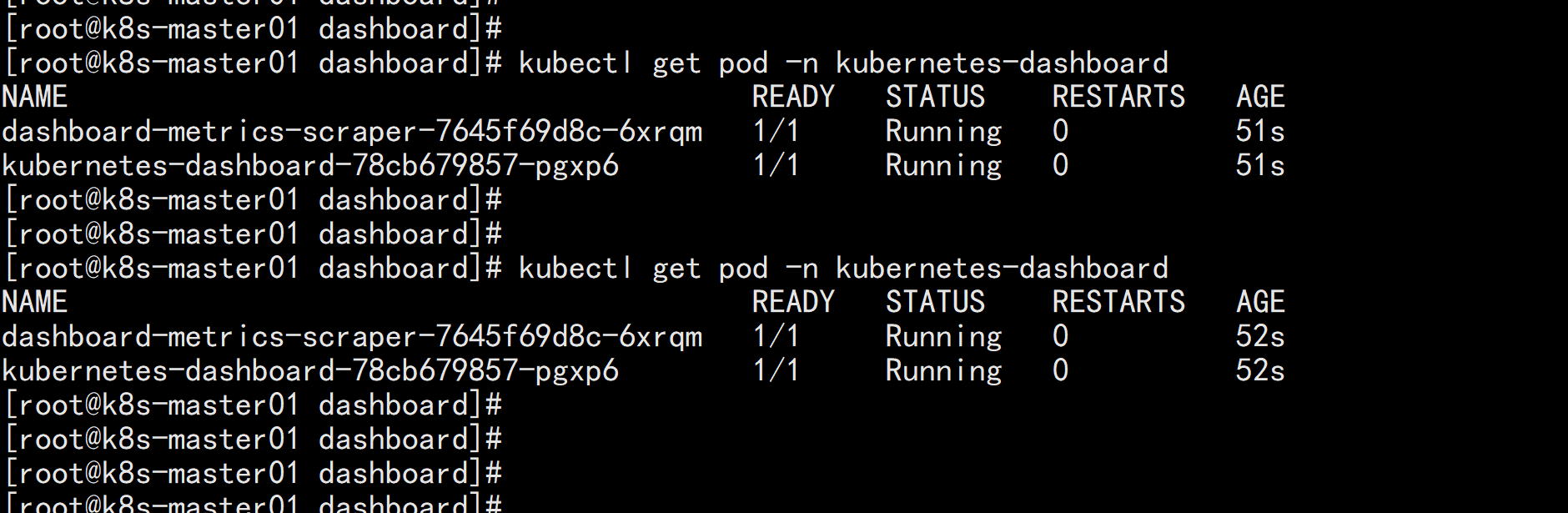

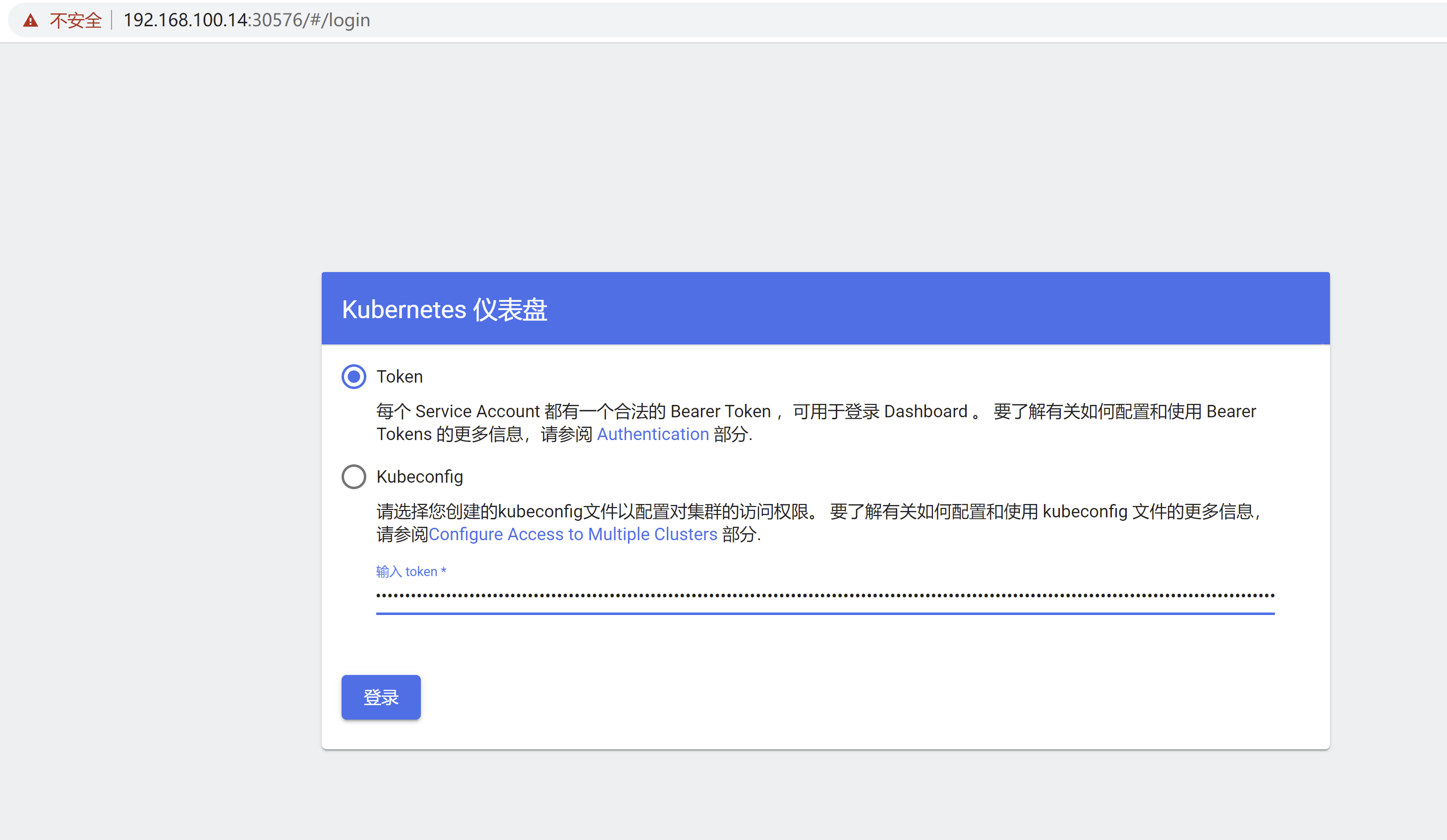

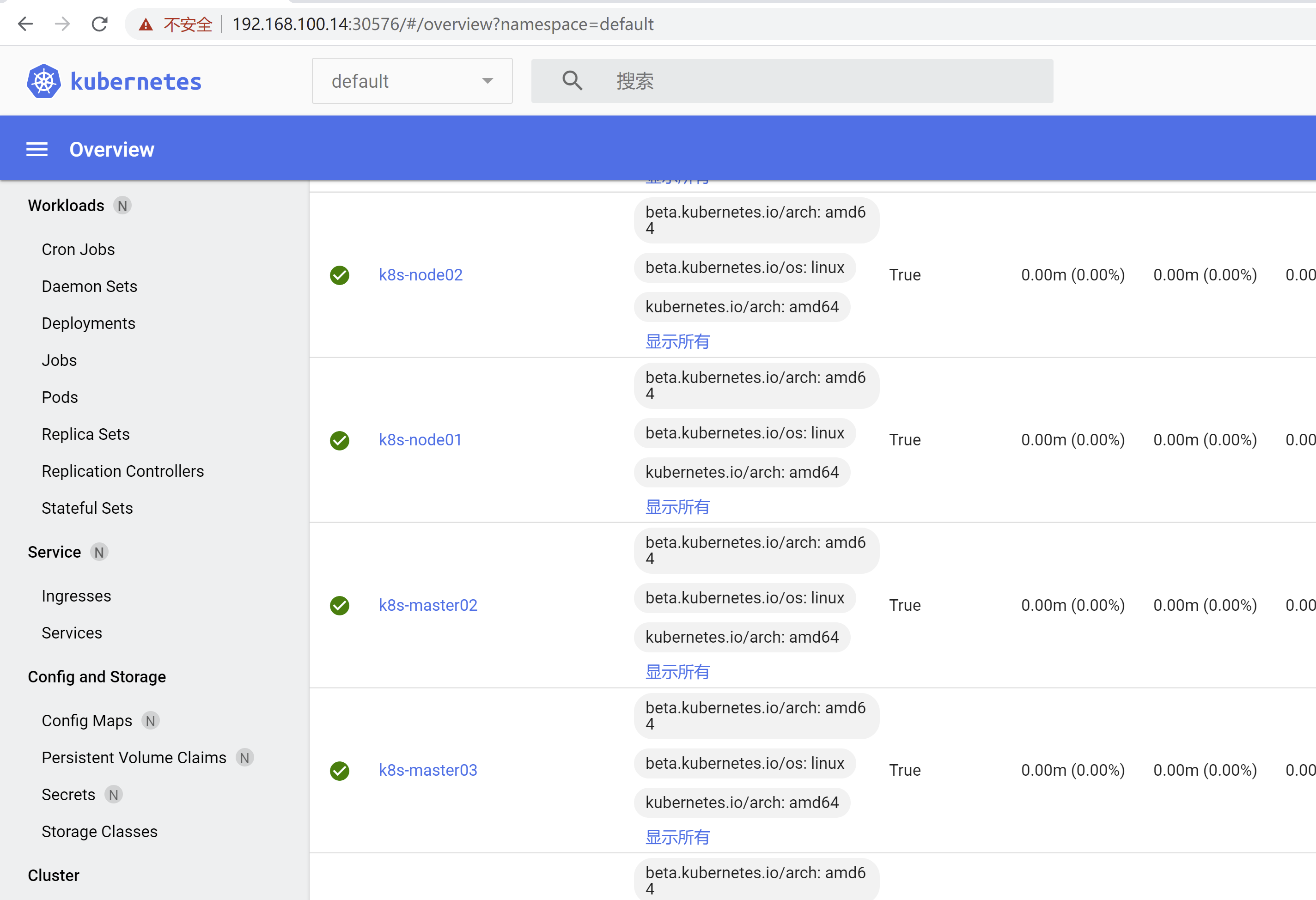

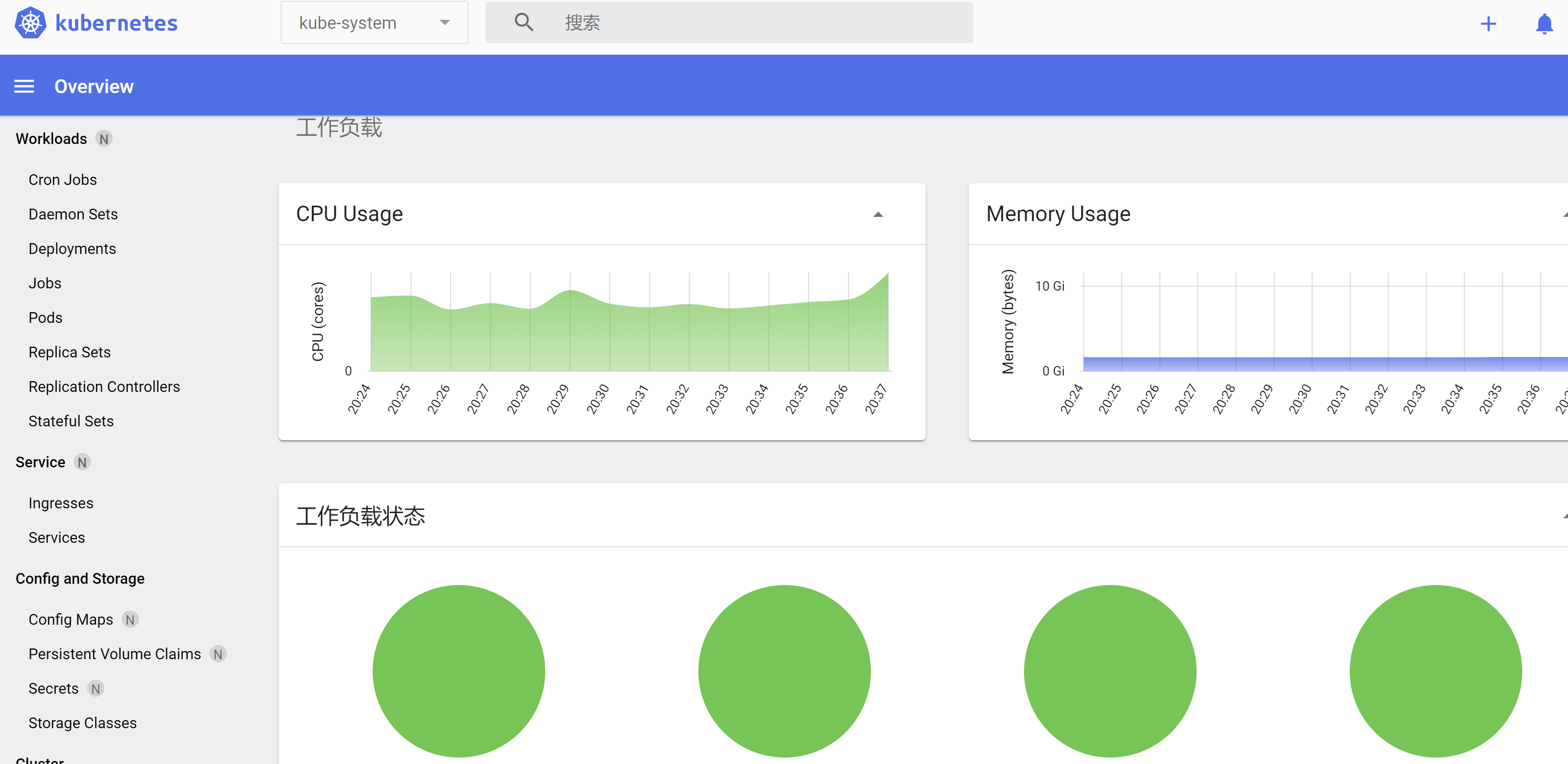

Install the dashboard:

    cd /root/k8s-ha-install/dashboard
    kubectl apply -f dashboard-user.yaml
    kubectl apply -f dashboard.yaml
    kubectl get pod -n kubernetes-dashboard

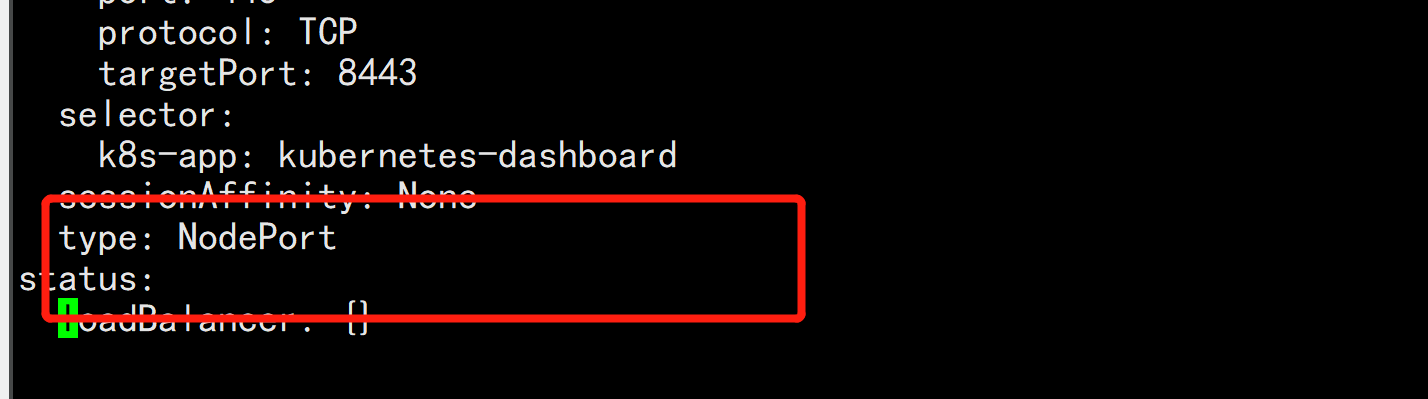

Change the Service type: run `kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard` and change `type: ClusterIP` to `type: NodePort`.
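The same change can be made without an editor using `kubectl patch`, and the assigned NodePort can then be read back with a JSONPath query. The sketch below assumes the Service is named `kubernetes-dashboard` in the `kubernetes-dashboard` namespace, as above; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch the dashboard Service to NodePort and print the port
# Kubernetes assigned to it.
dashboard_nodeport() {
  local ns=kubernetes-dashboard svc=kubernetes-dashboard
  kubectl -n "$ns" patch svc "$svc" -p '{"spec":{"type":"NodePort"}}' >/dev/null || return 1
  kubectl -n "$ns" get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}'
}
```

The dashboard is then reachable at `https://<node-ip>:$(dashboard_nodeport)`.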

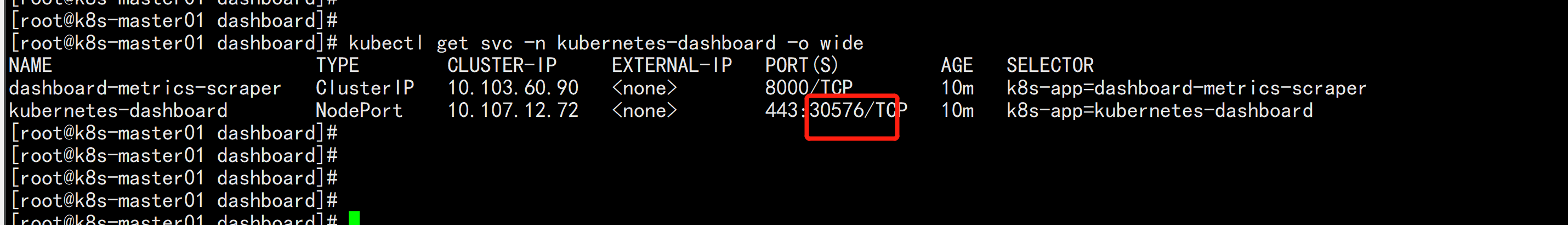

kubectl get svc -n kubernetes-dashboard -o wide

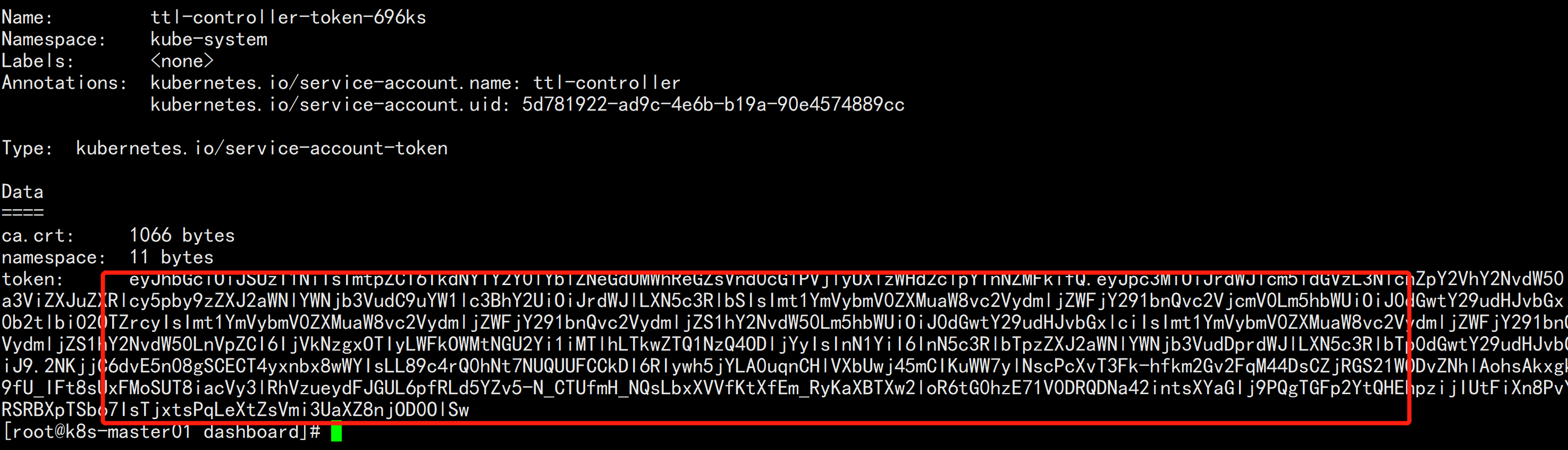

Get the login token of the admin-user account:

    kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

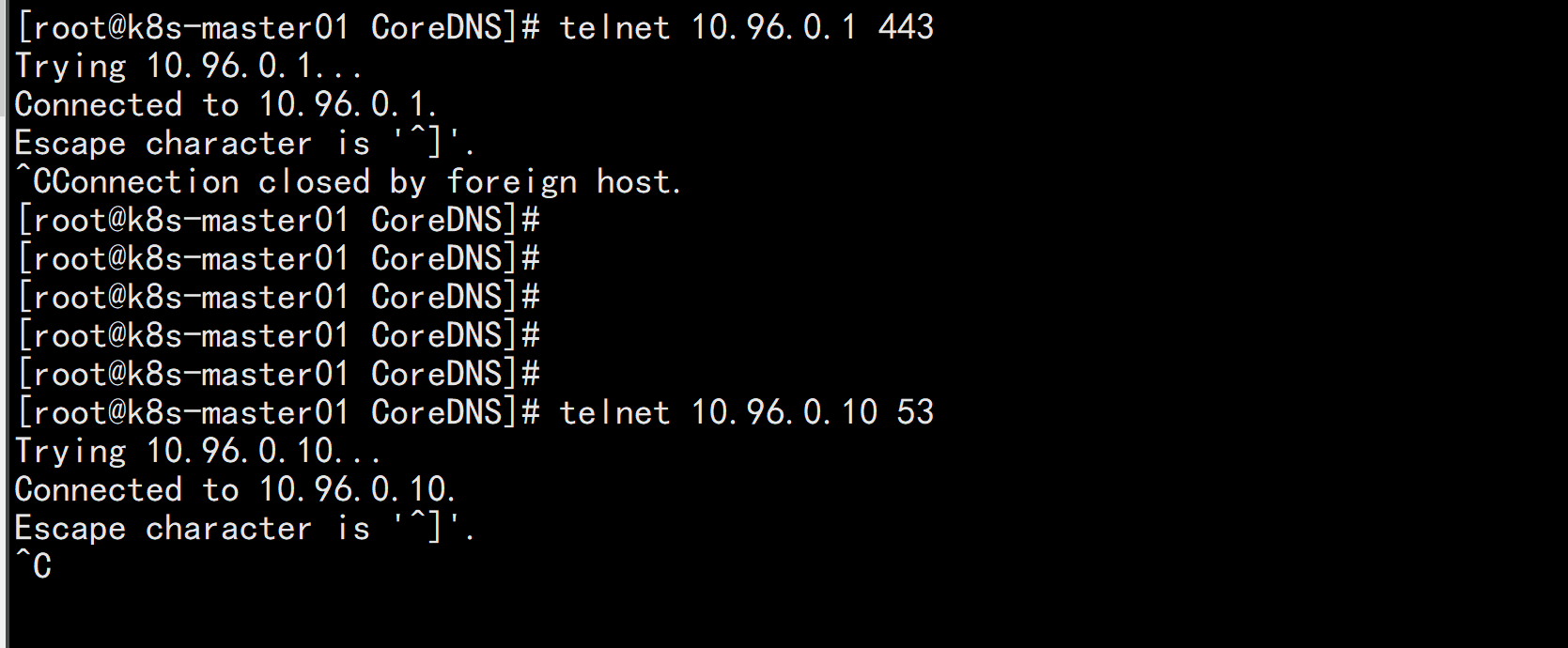

Cluster tests:

    kubectl get svc -n kube-system
    telnet 10.96.0.1 443
    telnet 10.96.0.10 53
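The `telnet` checks above are interactive. The sketch below is a non-interactive alternative that uses bash's `/dev/tcp` redirection with coreutils `timeout`, so it also works where telnet is not installed. Run it from a cluster node, since Service IPs such as 10.96.0.1 are routed by kube-proxy on the nodes. The helper name and the 3-second default timeout are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to $1:$2 opens within $3 seconds.
tcp_port_open() {
  local host=$1 port=$2 secs=${3:-3}
  # bash opens the connection when fd 3 is redirected to /dev/tcp/HOST/PORT
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

Usage: `tcp_port_open 10.96.0.1 443 && tcp_port_open 10.96.0.10 53 && echo "Service network OK"`.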

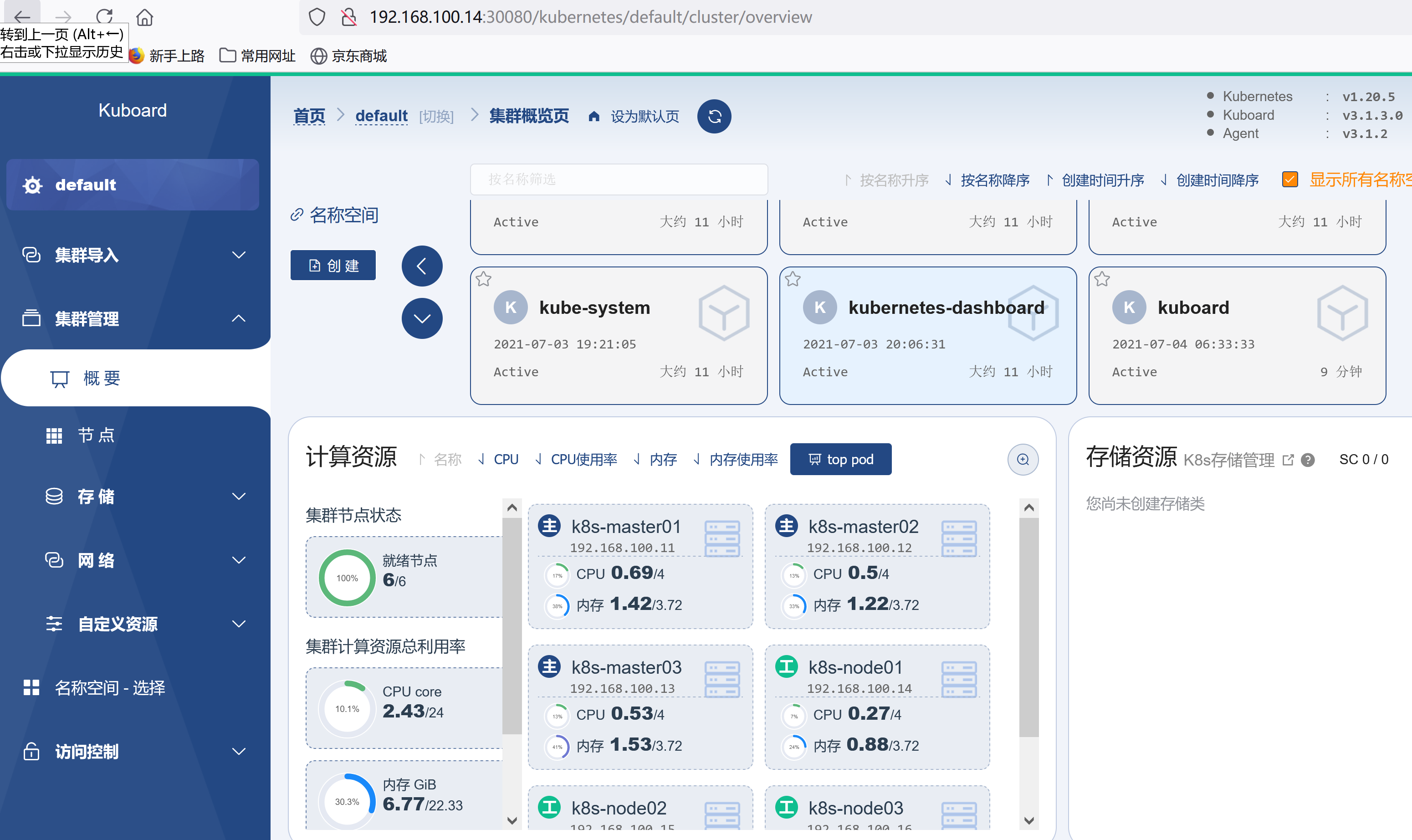

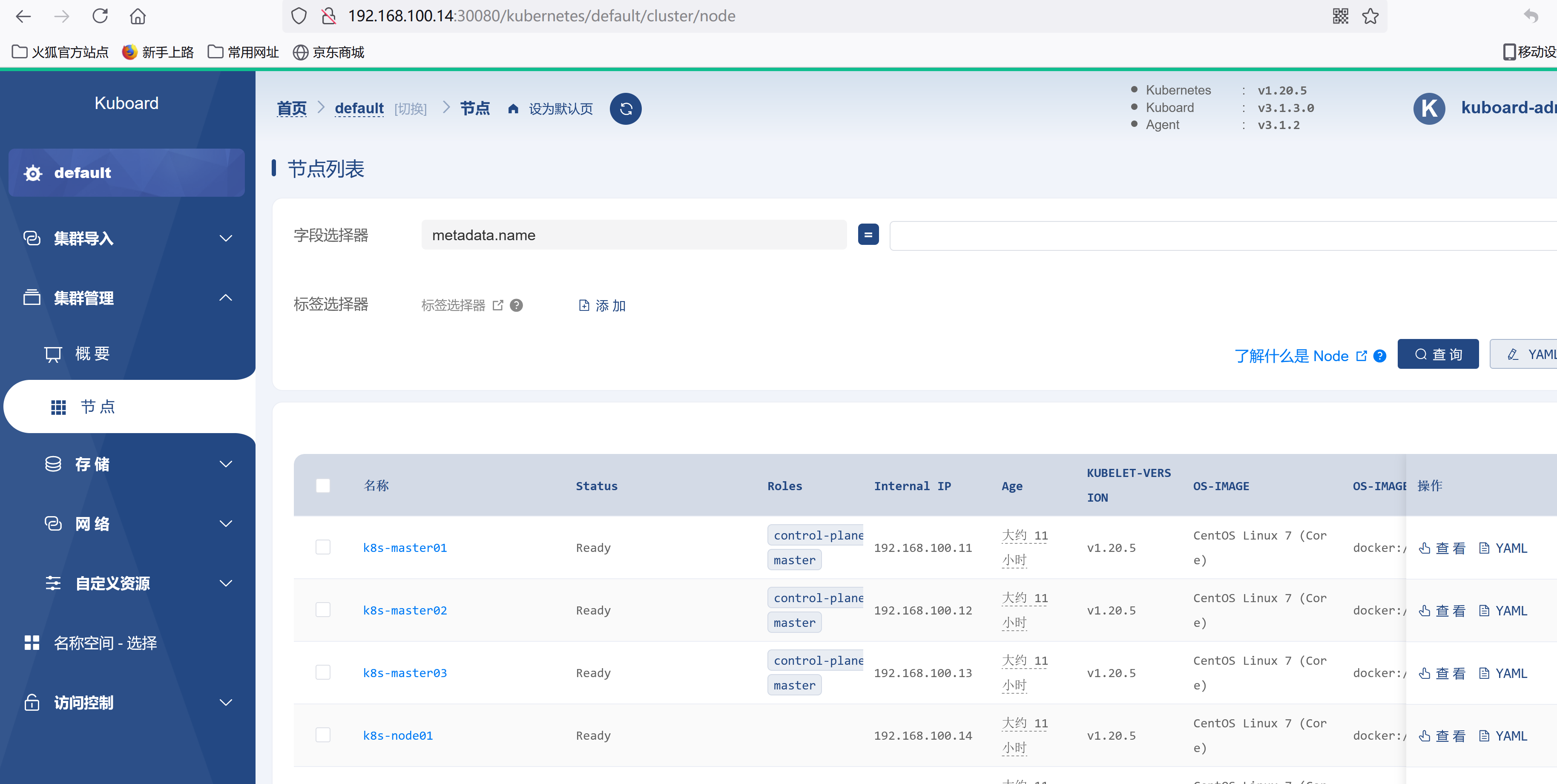

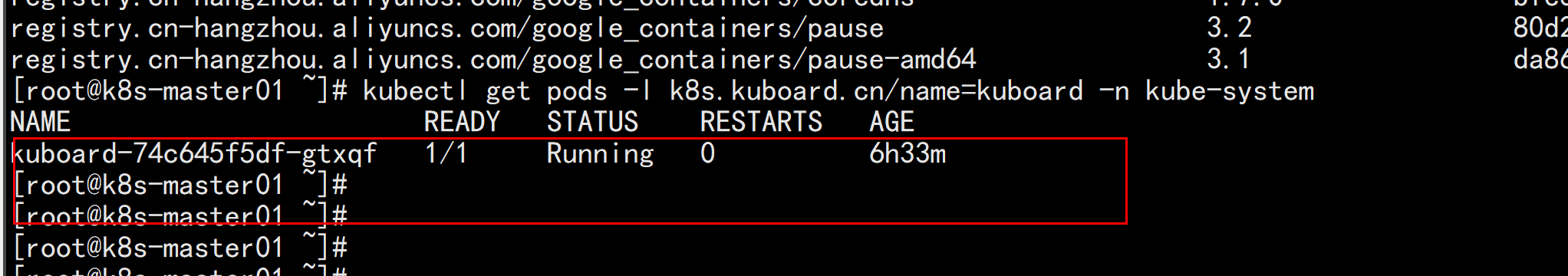

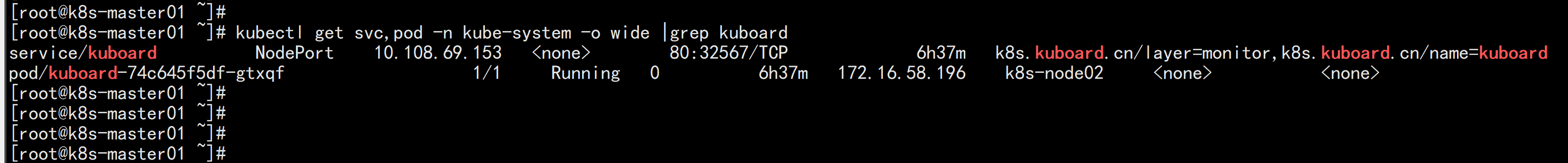

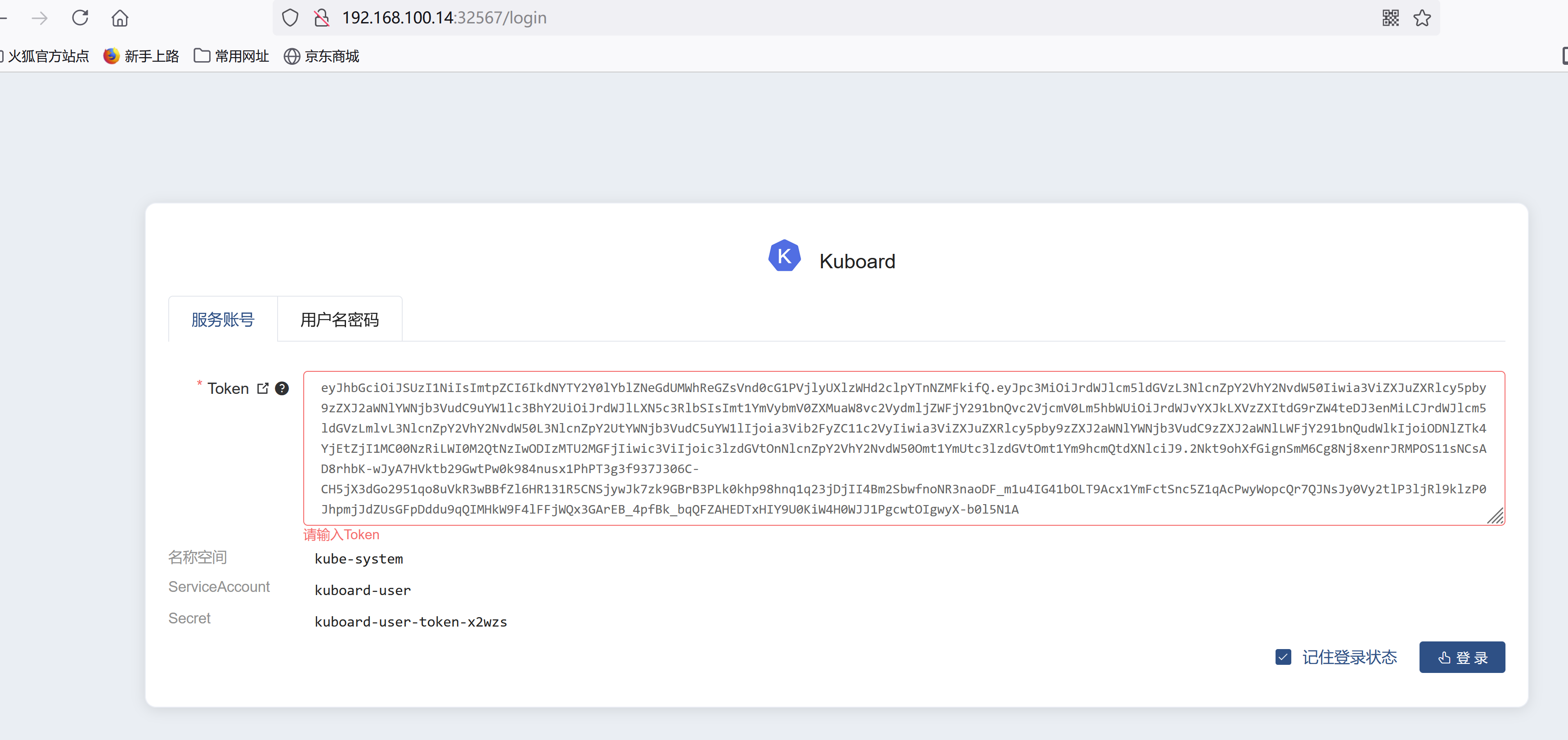

Deploy Kuboard. Pull the image on the worker nodes:

    docker pull eipwork/kuboard:latest

Then deploy it and check its status:

    kubectl apply -f https://kuboard.cn/install-script/kuboard.yaml
    kubectl get pods -l k8s.kuboard.cn/name=kuboard -n kube-system
    kubectl get svc,pod -n kube-system -o wide |grep kuboard

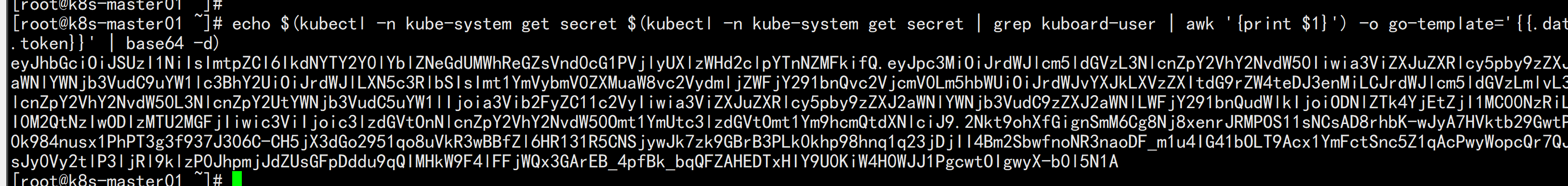

Get the token. If you installed Kubernetes by following the documentation at www.kuboard.cn, you can run this command on the first Master node:

    echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)

Uninstall Kuboard v2:

    kubectl delete -f https://kuboard.cn/install-script/kuboard.yaml

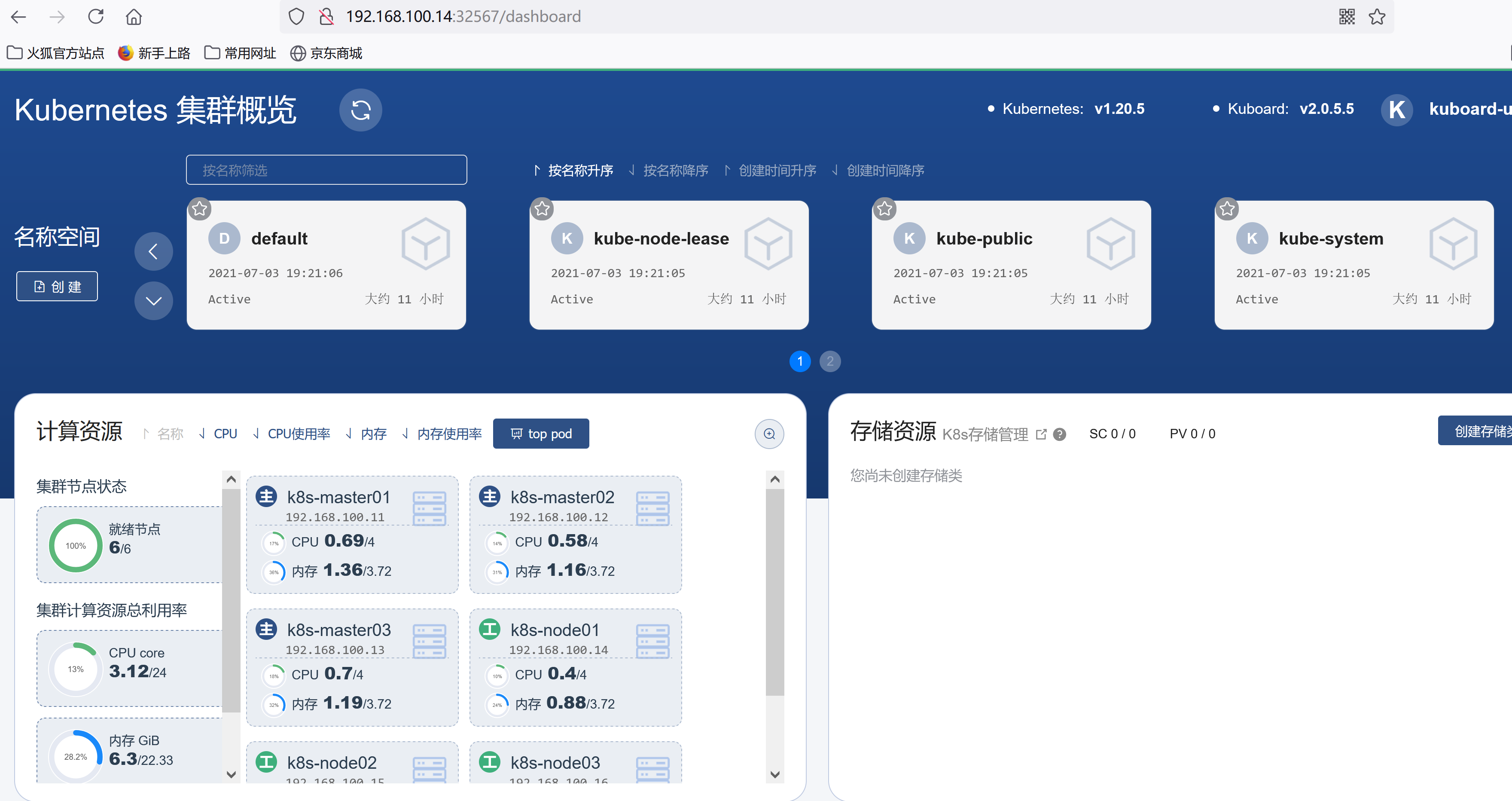

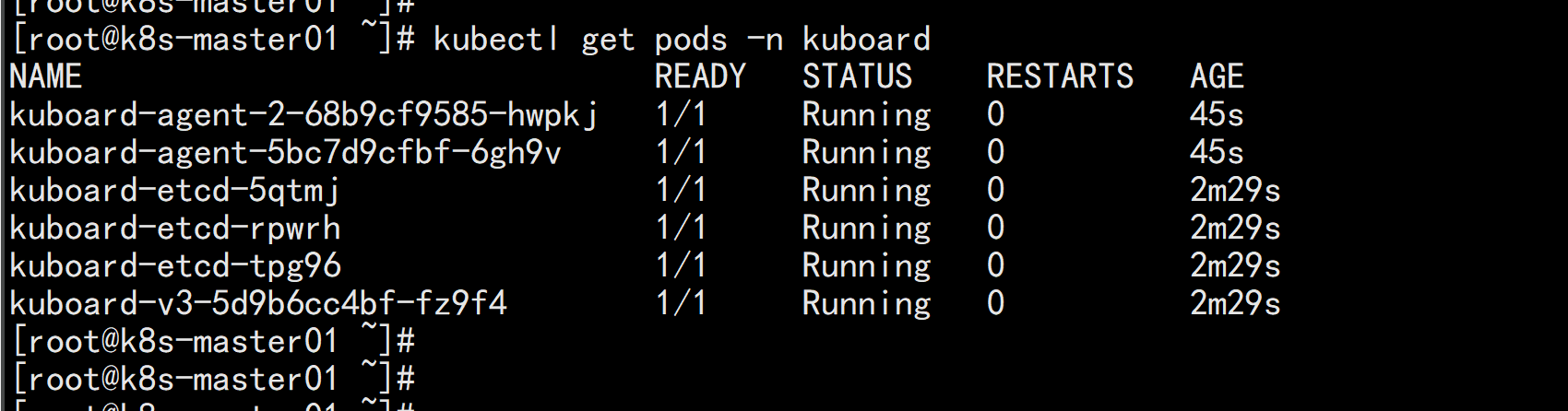

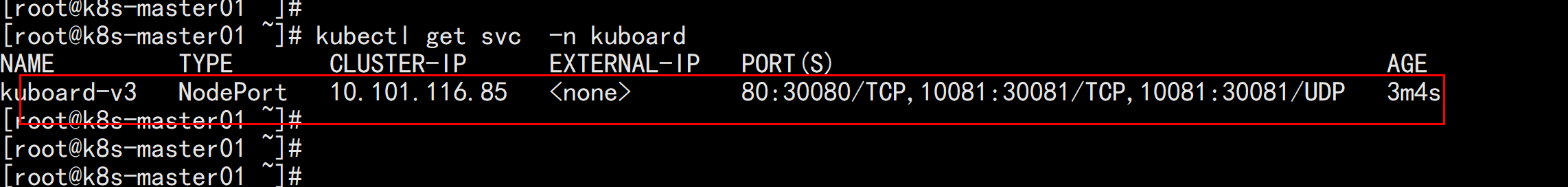

Install Kuboard v3. Pull the images and prepare the data directory on the worker nodes:

    docker pull eipwork/kuboard:v3
    docker pull eipwork/etcd-host:3.4.16-1
    mkdir /data
    chmod 777 -R /data

Set the image pull policy and deploy:

    wget https://addons.kuboard.cn/kuboard/kuboard-v3.yaml
    vim kuboard-v3.yaml
    # set imagePullPolicy: IfNotPresent (two occurrences)
    kubectl apply -f kuboard-v3.yaml

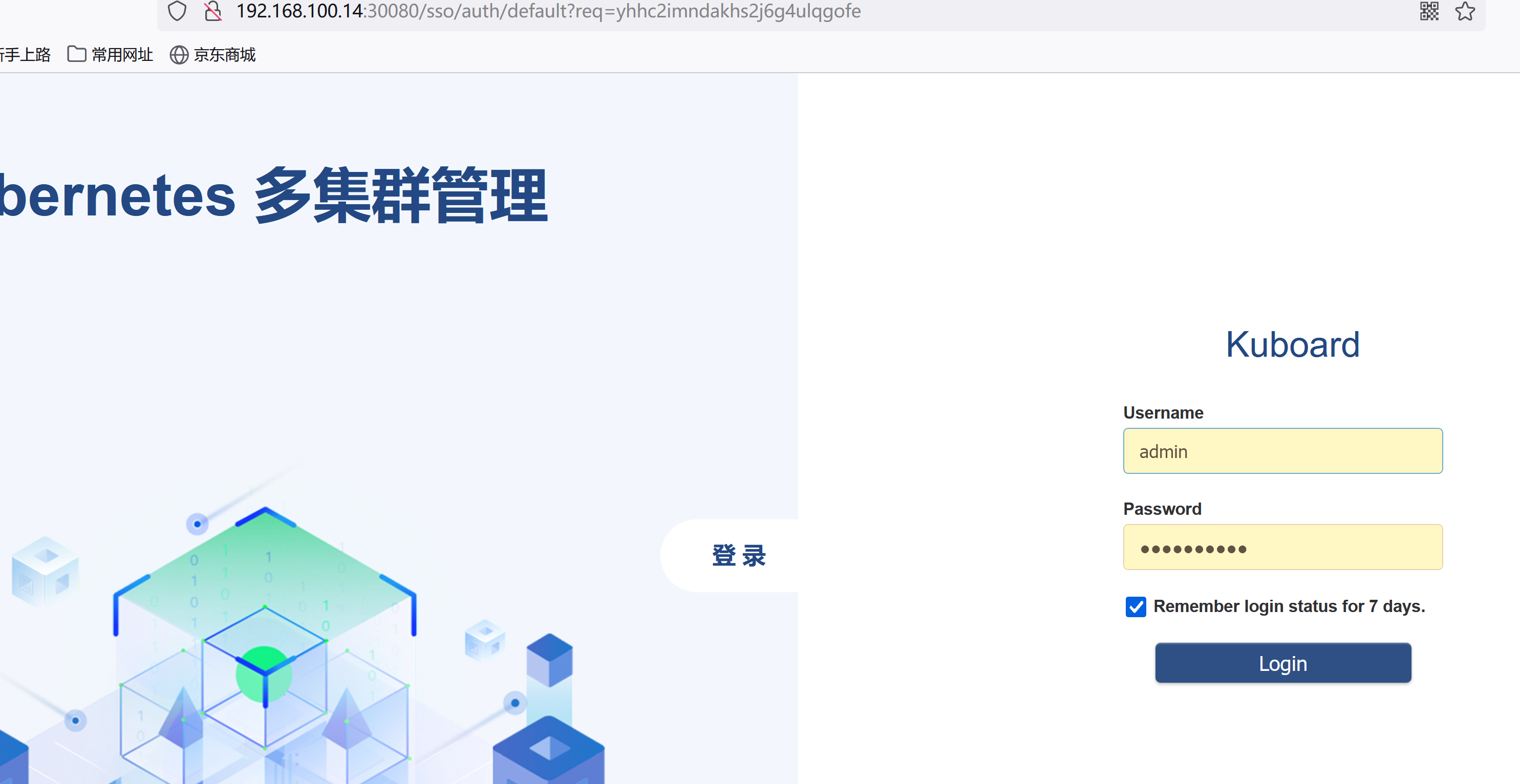

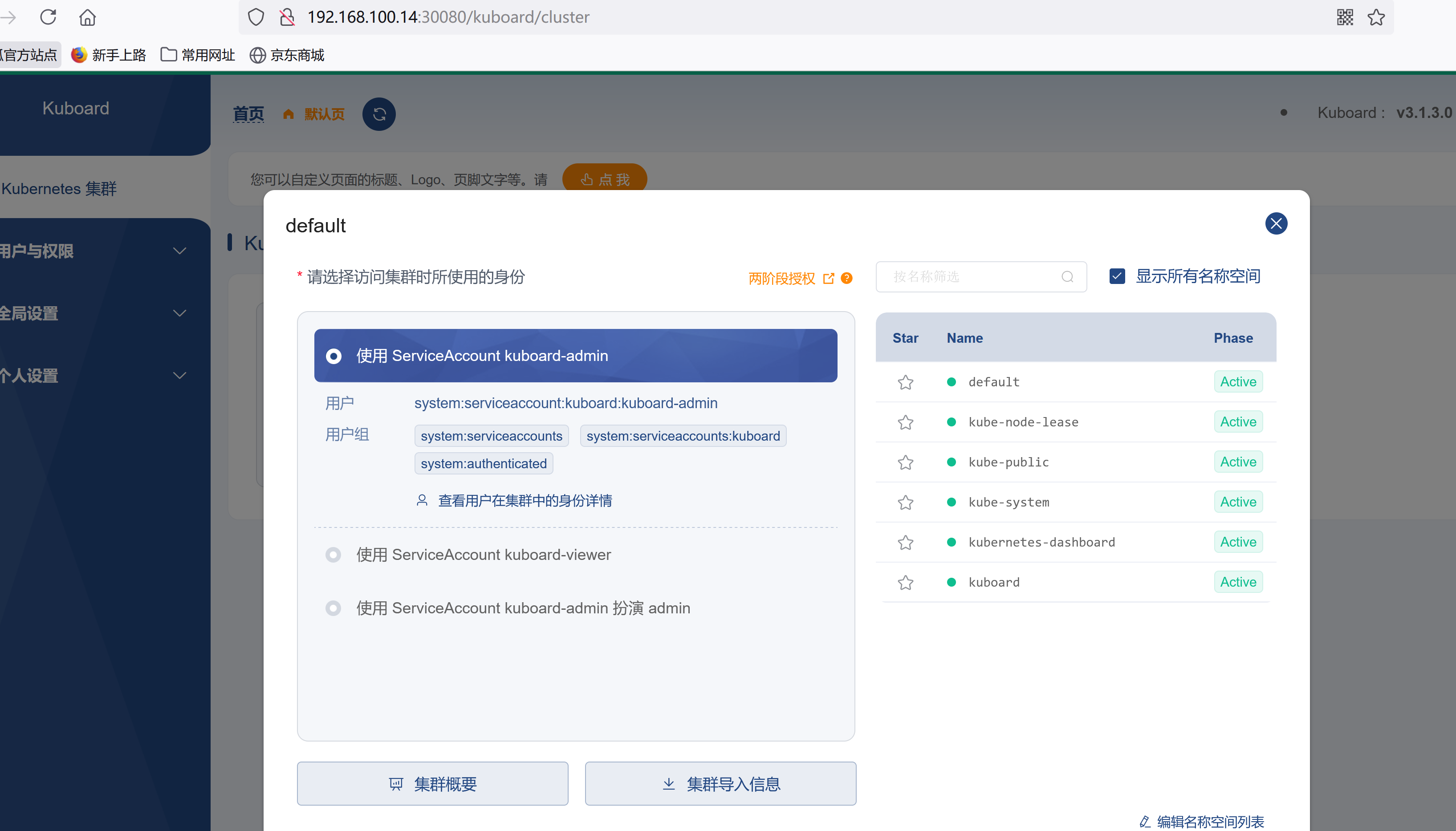

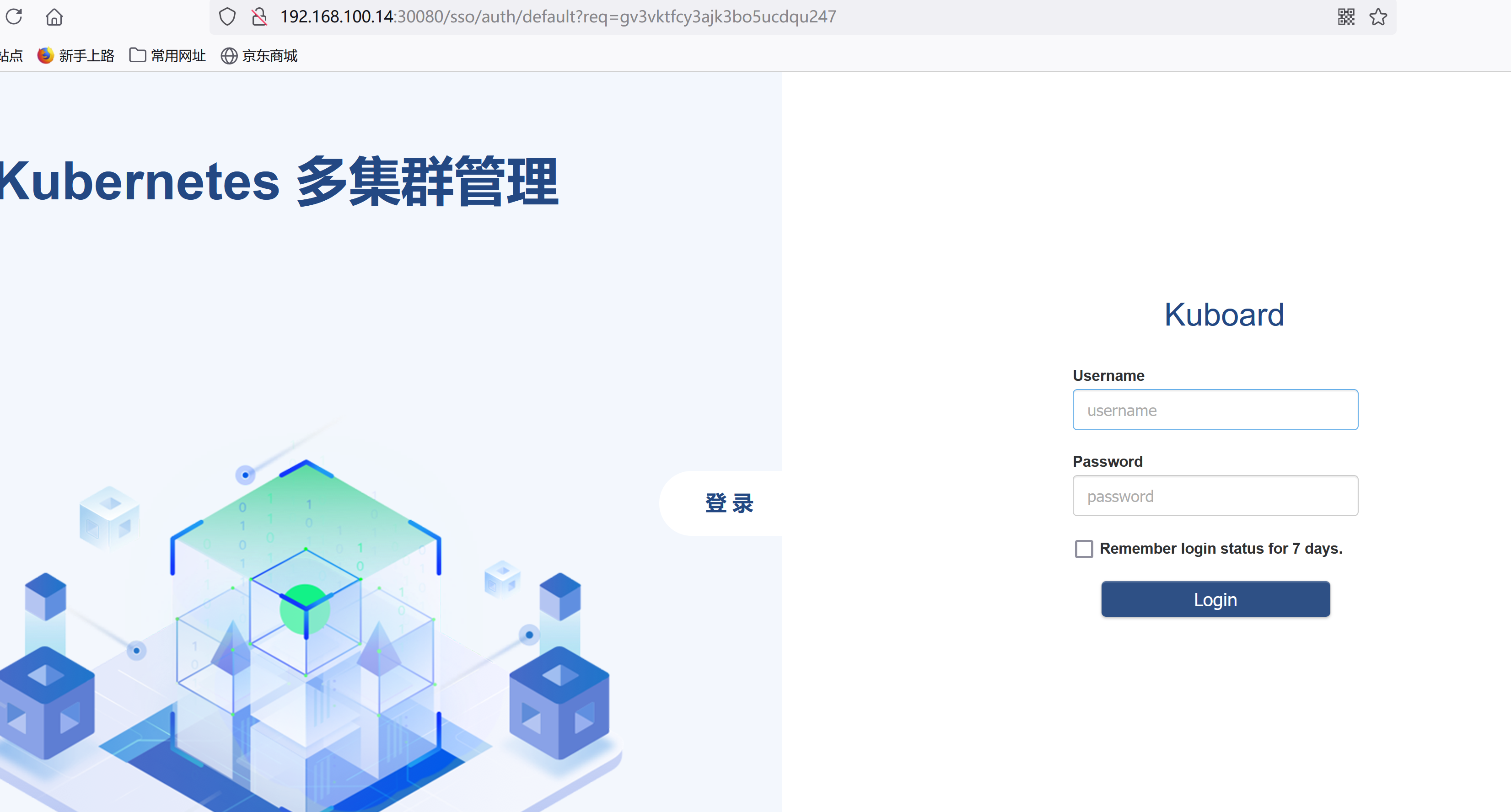

Access Kuboard: open http://your-node-ip-address:30080 in a browser and log in with the initial credentials:

- Username: admin
- Password: Kuboard123