@zhangyy

2020-11-30T10:11:47.000000Z


Setting up a k8s cluster with kubeadm

Kubernetes series

Part 1: System initialization

Part 2: Installing Kubernetes

Part 1: System initialization

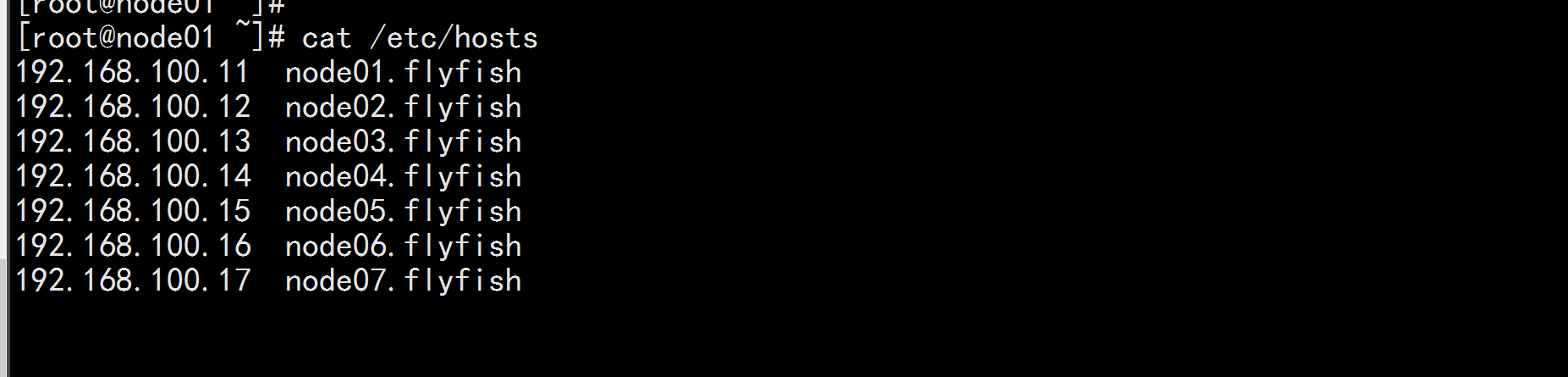

1.1 Host names

    192.168.100.11 node01.flyfish
    192.168.100.12 node02.flyfish
    192.168.100.13 node03.flyfish
    192.168.100.14 node04.flyfish
    192.168.100.15 node05.flyfish
    192.168.100.16 node06.flyfish
    192.168.100.17 node07.flyfish
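The address list follows a regular pattern, so it can be generated rather than typed. A minimal sketch, assuming the entries are meant for /etc/hosts on every node; the function name `gen_hosts` is made up for illustration.

```shell
# Print one hosts entry per node, node01 through node07
# (addresses and names taken from the list above).
gen_hosts() {
    i=1
    while [ "$i" -le 7 ]; do
        # 192.168.100.11 maps to node01.flyfish, .12 to node02.flyfish, and so on
        printf '192.168.100.%d node%02d.flyfish\n' "$((10 + i))" "$i"
        i=$((i + 1))
    done
}

gen_hosts
# To apply on a node (as root): gen_hosts >> /etc/hosts
```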

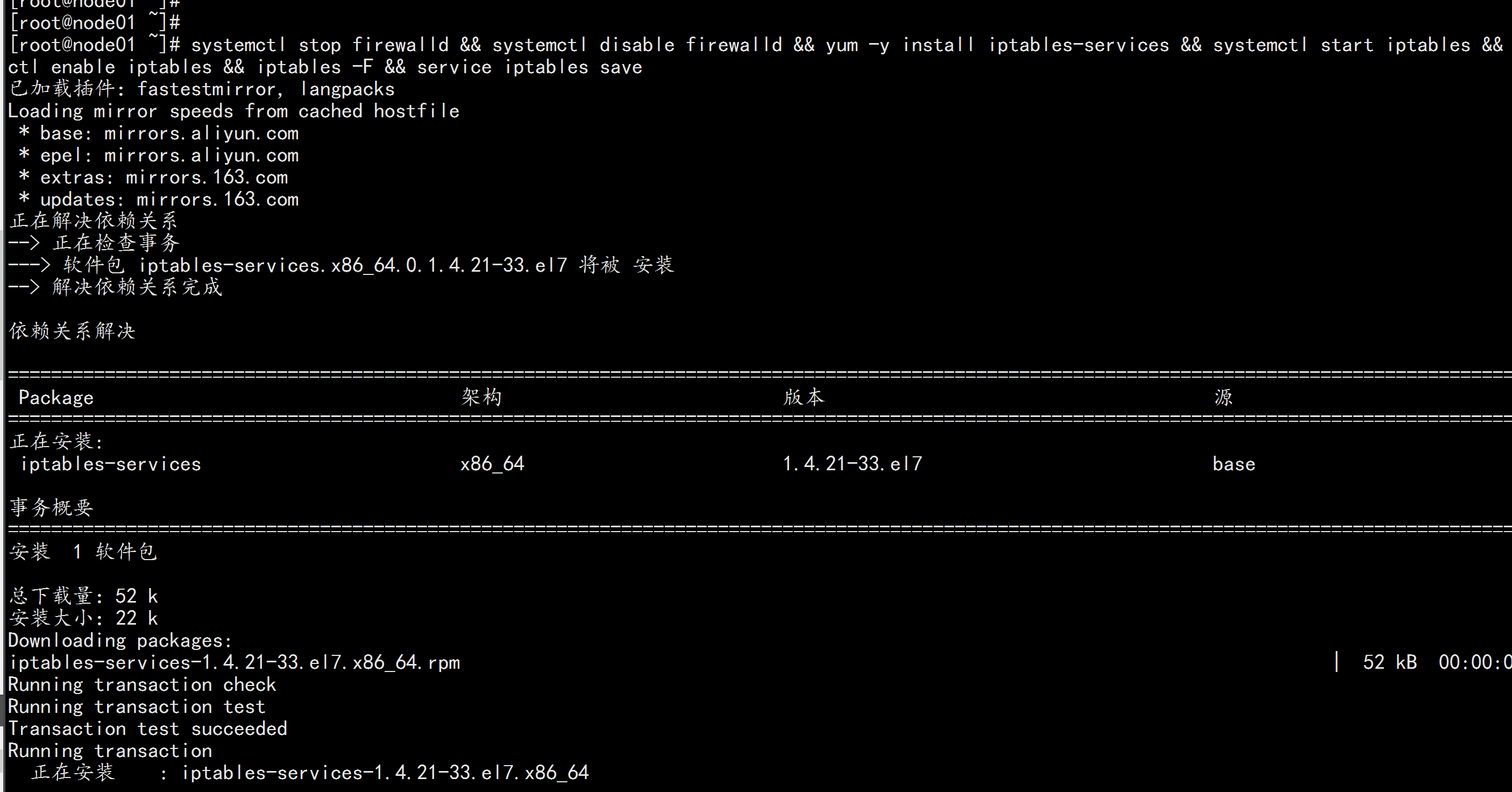

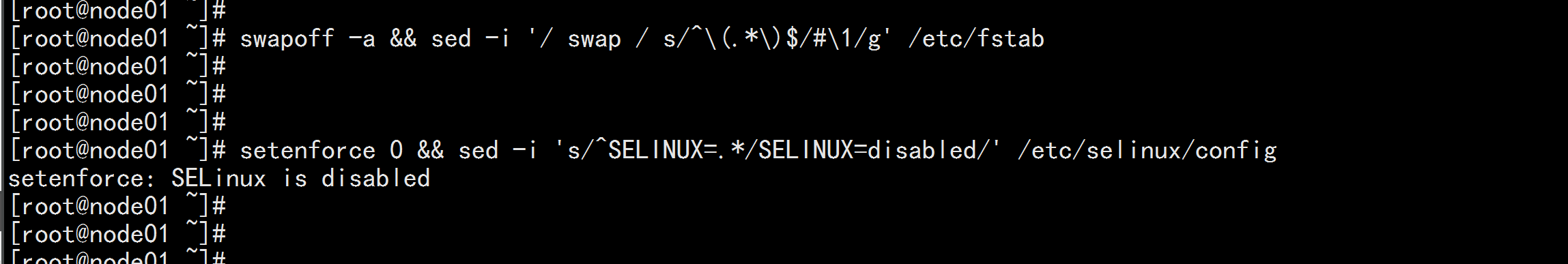

1.2 Disable firewalld, flush the iptables rules, and disable SELinux

Run on all nodes:

    systemctl stop firewalld && systemctl disable firewalld && yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

Disable SELinux and swap:

    swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
    setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
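Before editing /etc/fstab in place, the swap substitution can be previewed on a copy. The sketch below applies the same sed expression without `-i`, so it only prints the result; the function name and the sample file contents are hypothetical.

```shell
# Print an fstab-style file with its swap entries commented out.
# The file itself is left unchanged.
comment_out_swap() {
    sed '/ swap / s/^\(.*\)$/#\1/g' "$1"
}

# Demonstration on a throwaway sample file (hypothetical contents)
sample=$(mktemp)
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$sample"
comment_out_swap "$sample"
rm -f "$sample"
```

Note that the pattern requires a literal space on both sides of the word swap, so a tab-separated fstab would not match, and running the in-place command twice adds a second `#` to lines that are already commented out.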

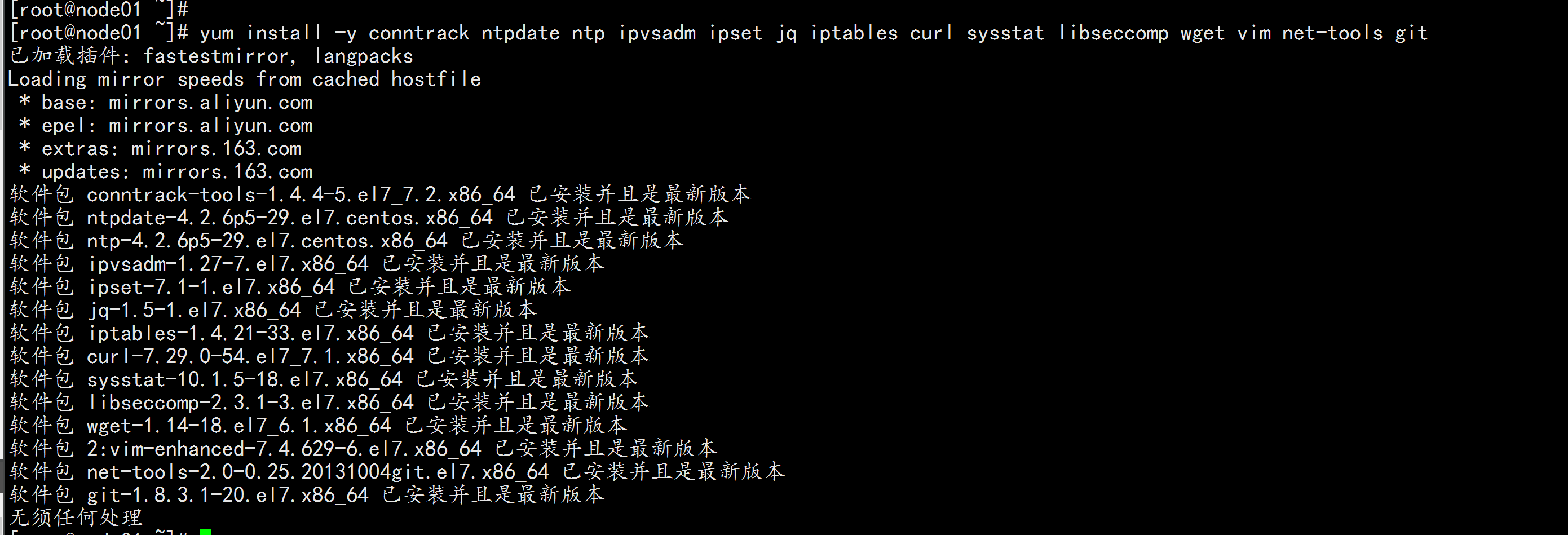

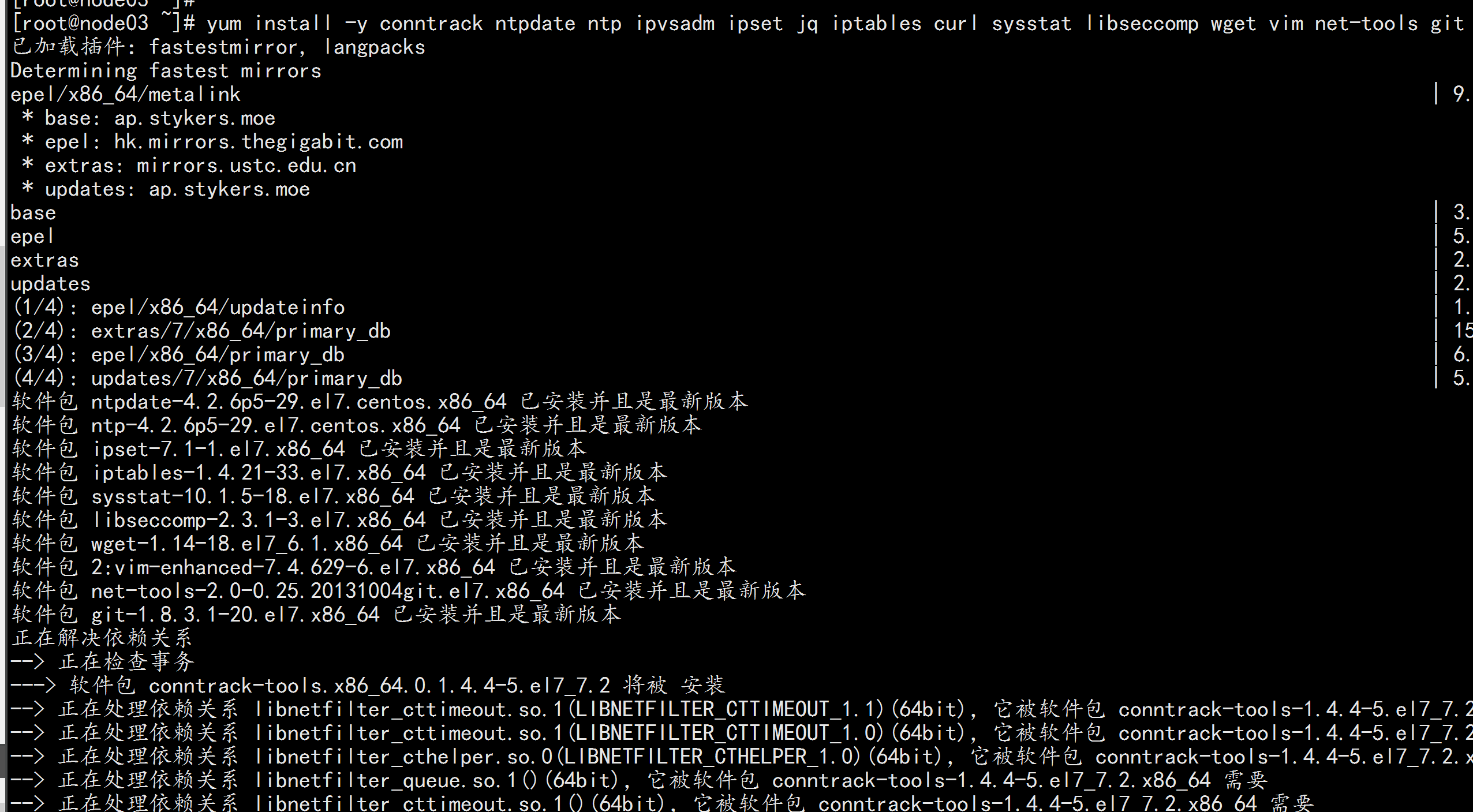

1.3 Install dependencies

Install on all nodes:

    yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

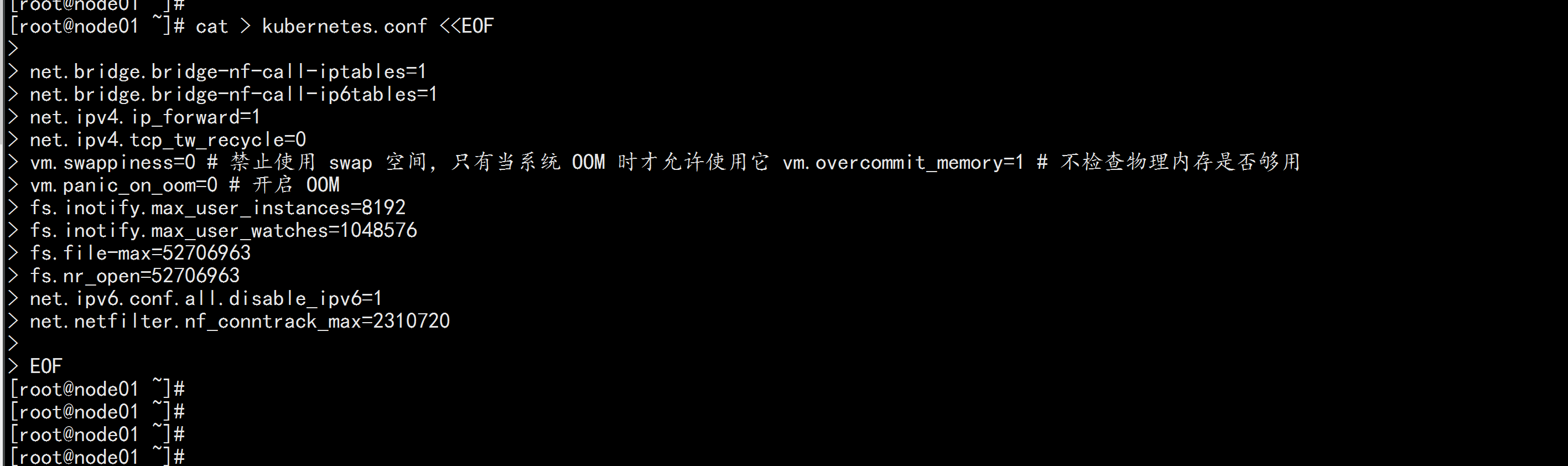

1.4 Tune kernel parameters for Kubernetes

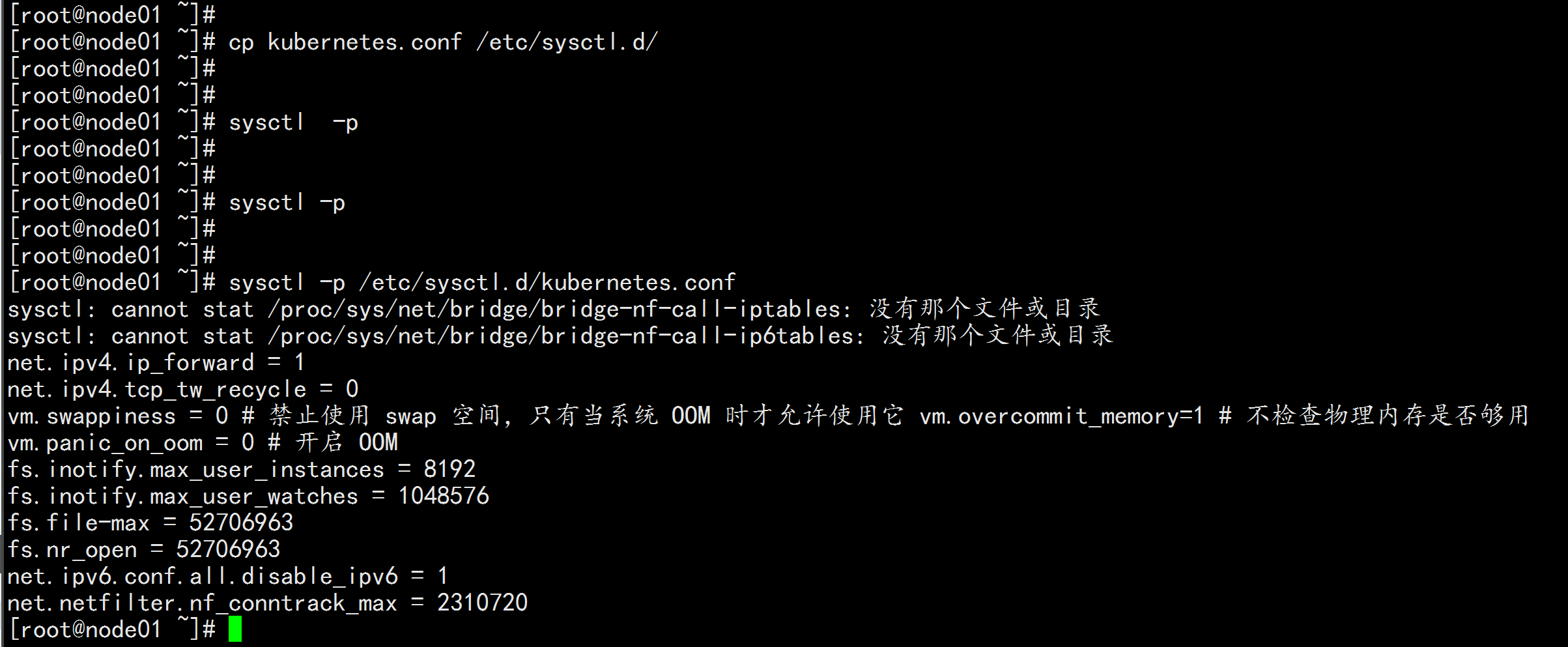

Run on all nodes:

    cat > kubernetes.conf <<EOF
    net.bridge.bridge-nf-call-iptables=1
    net.bridge.bridge-nf-call-ip6tables=1
    net.ipv4.ip_forward=1
    net.ipv4.tcp_tw_recycle=0
    # Avoid swap; use it only when the system would otherwise run out of memory
    vm.swappiness=0
    # Do not check whether enough physical memory is available
    vm.overcommit_memory=1
    # Do not panic on OOM; let the OOM killer handle it
    vm.panic_on_oom=0
    fs.inotify.max_user_instances=8192
    fs.inotify.max_user_watches=1048576
    fs.file-max=52706963
    fs.nr_open=52706963
    net.ipv6.conf.all.disable_ipv6=1
    net.netfilter.nf_conntrack_max=2310720
    EOF
    cp kubernetes.conf /etc/sysctl.d/kubernetes.conf
    sysctl -p /etc/sysctl.d/kubernetes.conf
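After loading the settings, it can be useful to confirm that the running kernel reports the intended values. A minimal sketch, assuming a file of plain `key=value` lines; the helper name `sysctl_pairs` is made up for illustration.

```shell
# Print "key value" for every setting in a sysctl-style file,
# skipping blank lines and lines that start with # or ;
sysctl_pairs() {
    grep -Ev '^[[:space:]]*(#|;|$)' "$1" | while IFS='=' read -r key value; do
        printf '%s %s\n' "$key" "$value"
    done
}

# Usage on a configured node:
#   sysctl_pairs /etc/sysctl.d/kubernetes.conf | while read -r key want; do
#       echo "$key want=$want have=$(sysctl -n "$key")"
#   done
```

Any key where `want` and `have` differ, or where `sysctl -n` reports an error, points at a setting that was not applied.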

1.5 Set the system time zone

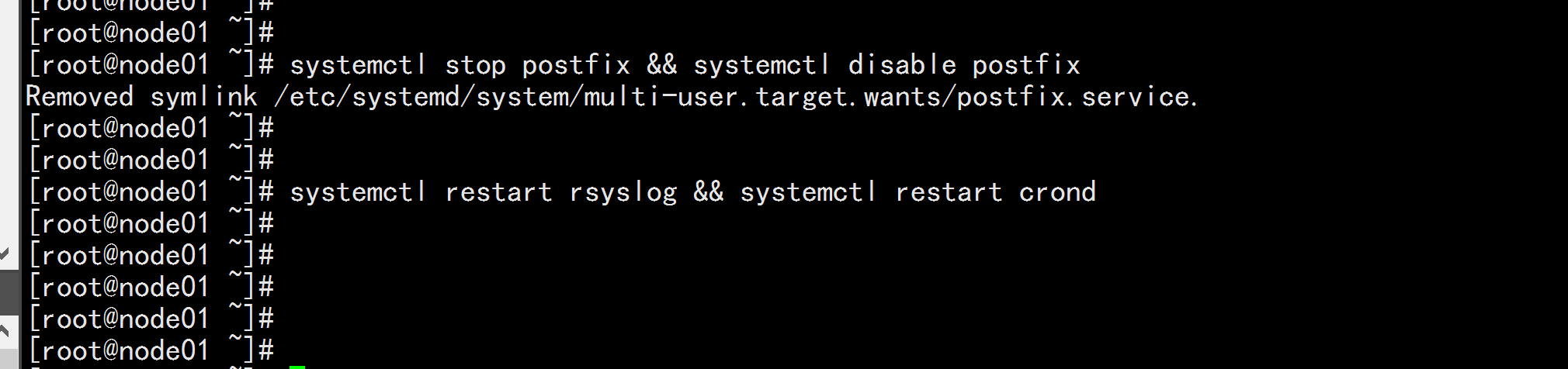

    # Set the system time zone to Asia/Shanghai
    timedatectl set-timezone Asia/Shanghai
    # Keep the hardware clock in UTC
    timedatectl set-local-rtc 0
    # Restart services that depend on the system time
    systemctl restart rsyslog && systemctl restart crond

1.6 Stop services that are not needed

systemctl stop postfix && systemctl disable postfix

1.7 Configure rsyslogd and systemd journald

    # Directory for persistent journal storage
    mkdir /var/log/journal
    mkdir /etc/systemd/journald.conf.d
    cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF
    [Journal]
    # Persist logs to disk
    Storage=persistent
    # Compress archived logs
    Compress=yes
    SyncIntervalSec=5m
    RateLimitInterval=30s
    RateLimitBurst=1000
    # Maximum disk usage: 10G
    SystemMaxUse=10G
    # Maximum size of a single journal file: 200M
    SystemMaxFileSize=200M
    # Keep logs for 2 weeks
    MaxRetentionSec=2week
    # Do not forward logs to syslog
    ForwardToSyslog=no
    EOF
    systemctl restart systemd-journald

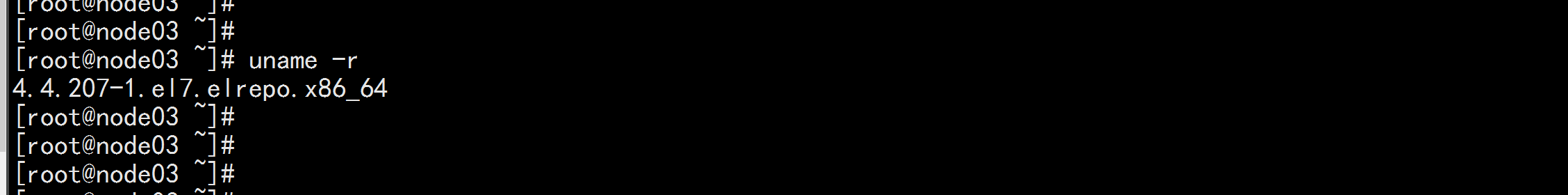

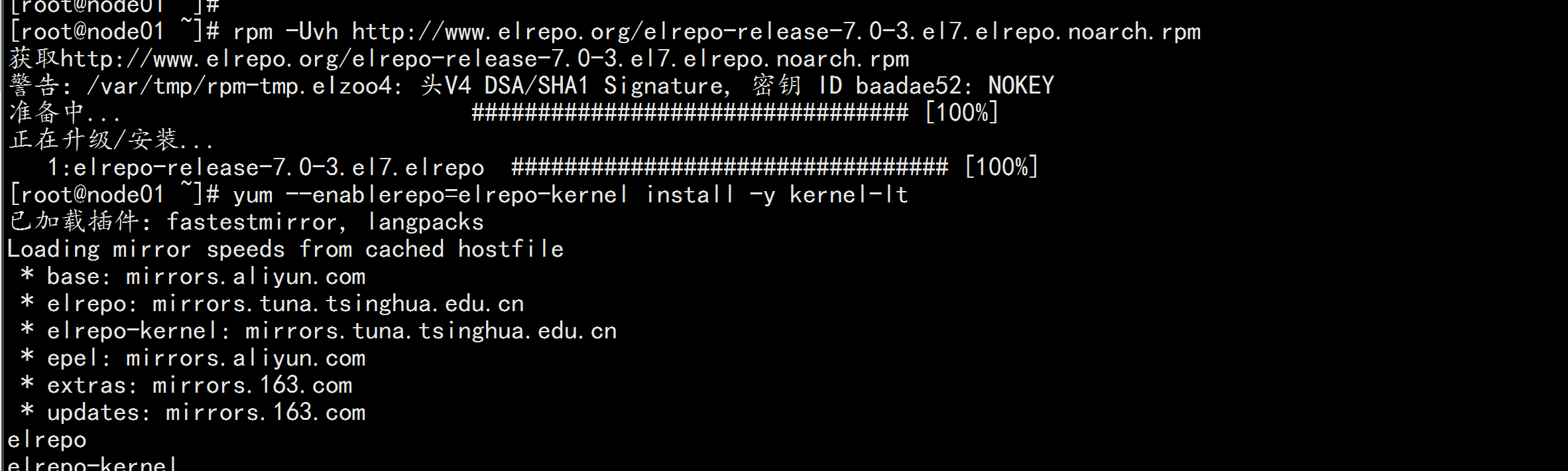

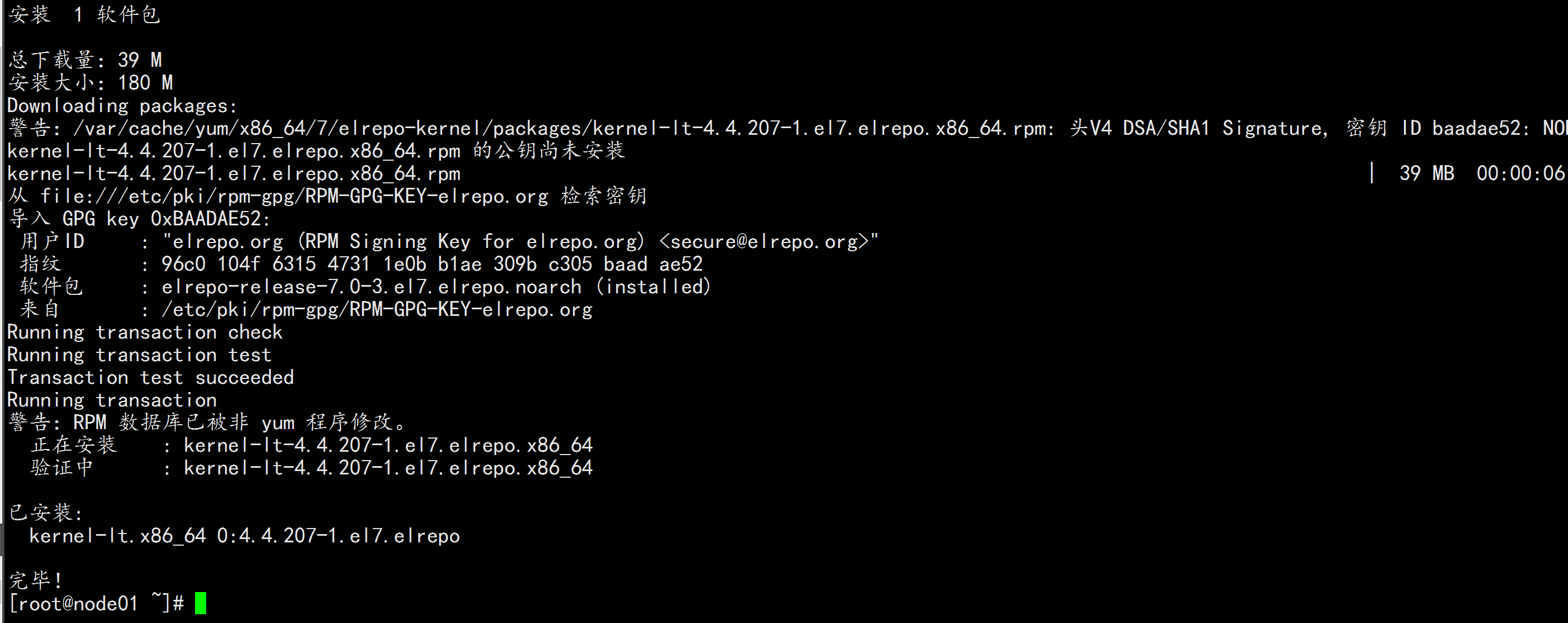

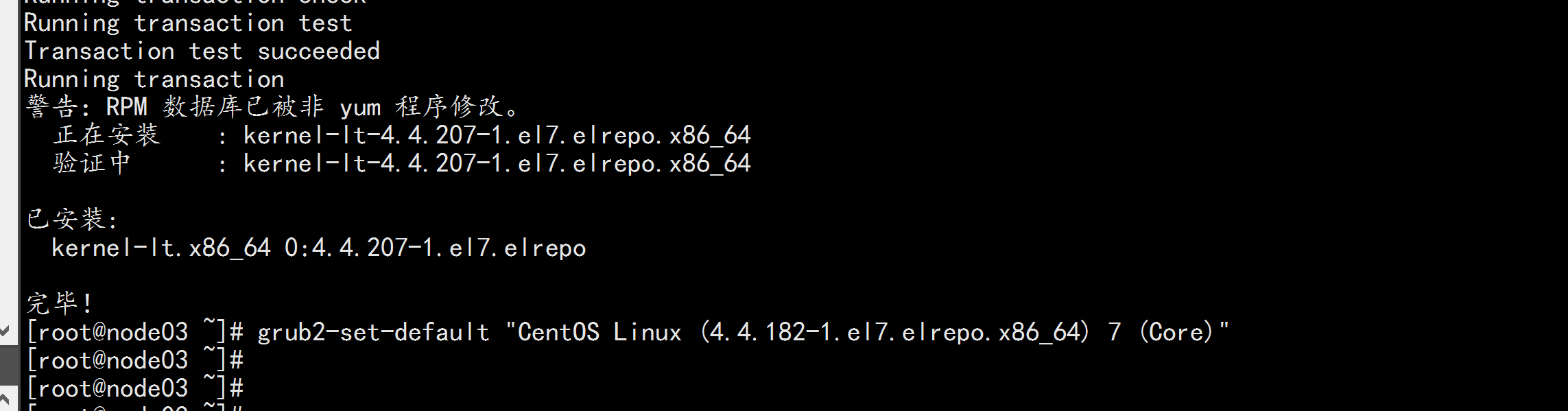

1.7 Upgrade the system kernel to 4.4

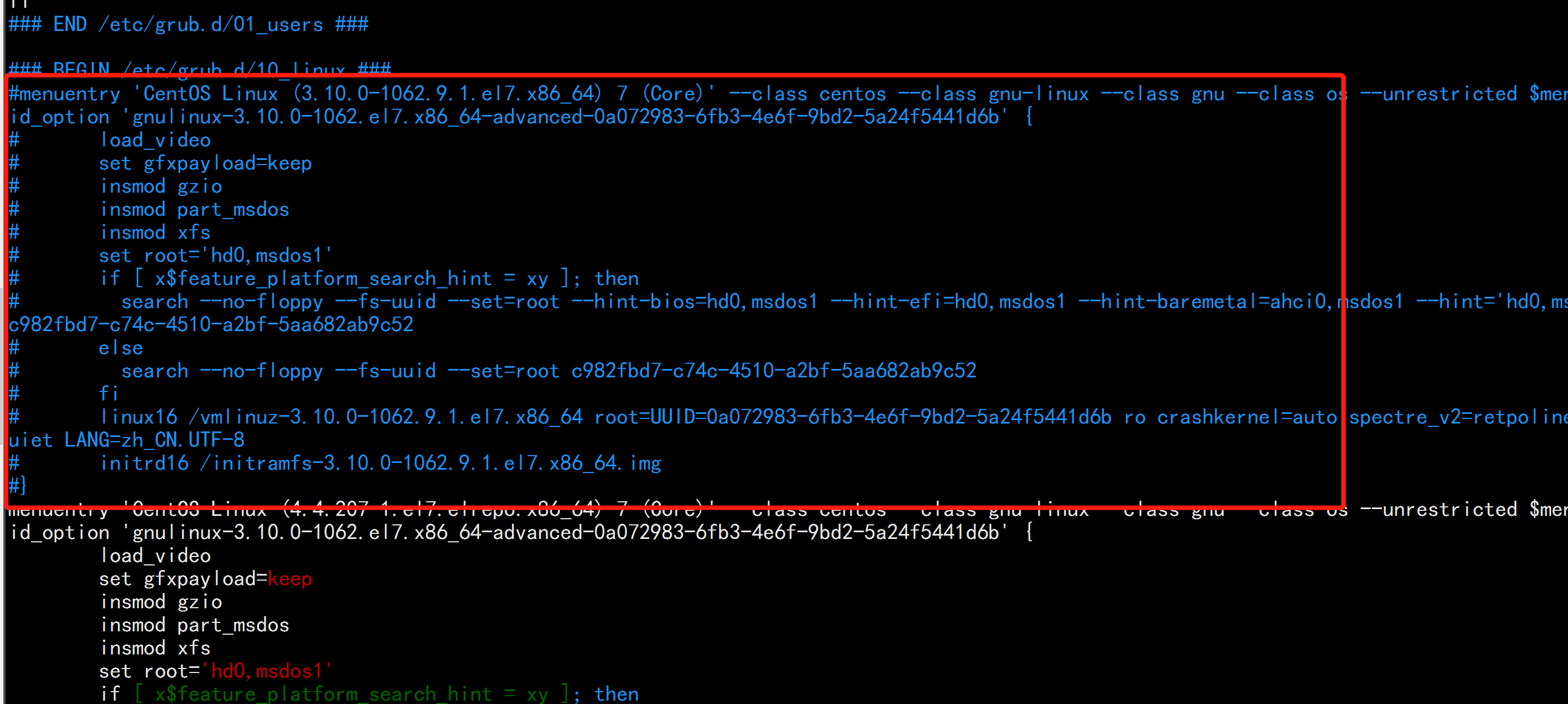

The 3.10.x kernel that ships with CentOS 7.x has some bugs that make Docker and Kubernetes unstable, so install a newer kernel from ELRepo:

    rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
    # After installation, check that the menuentry for the new kernel in /boot/grub2/grub.cfg contains an initrd16 line; if it does not, install again
    yum --enablerepo=elrepo-kernel install -y kernel-lt
    # Boot from the new kernel by default
    grub2-set-default "CentOS Linux (4.4.182-1.el7.elrepo.x86_64) 7 (Core)"
    reboot
    # After the reboot, install the kernel sources
    yum --enablerepo=elrepo-kernel install kernel-lt-devel-$(uname -r) kernel-lt-headers-$(uname -r)

Or alternatively:

    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org && \
    rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm && \
    yum --disablerepo=\* --enablerepo=elrepo-kernel repolist && \
    yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-ml.x86_64 && \
    yum remove -y kernel-tools-libs.x86_64 kernel-tools.x86_64 && \
    yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-ml-tools.x86_64 && \
    grub2-set-default 0
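Whether the machine actually booted into the new kernel can be checked with a small version comparison. This is a sketch, not part of the original procedure; the function name `kernel_at_least` is hypothetical, and the optional third argument exists only so the check can be tried on arbitrary version strings.

```shell
# Succeed if the version string (default: the running kernel)
# is at least MAJOR.MINOR.
kernel_at_least() {
    want_major=$1
    want_minor=$2
    version=${3:-$(uname -r)}
    major=${version%%.*}      # text before the first dot
    rest=${version#*.}        # text after the first dot
    minor=${rest%%[!0-9]*}    # leading digits of the remainder
    [ "$major" -gt "$want_major" ] ||
        { [ "$major" -eq "$want_major" ] && [ "$minor" -ge "$want_minor" ]; }
}

if kernel_at_least 4 4; then
    echo "kernel OK: $(uname -r)"
else
    echo "kernel older than 4.4: $(uname -r)"
fi
```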

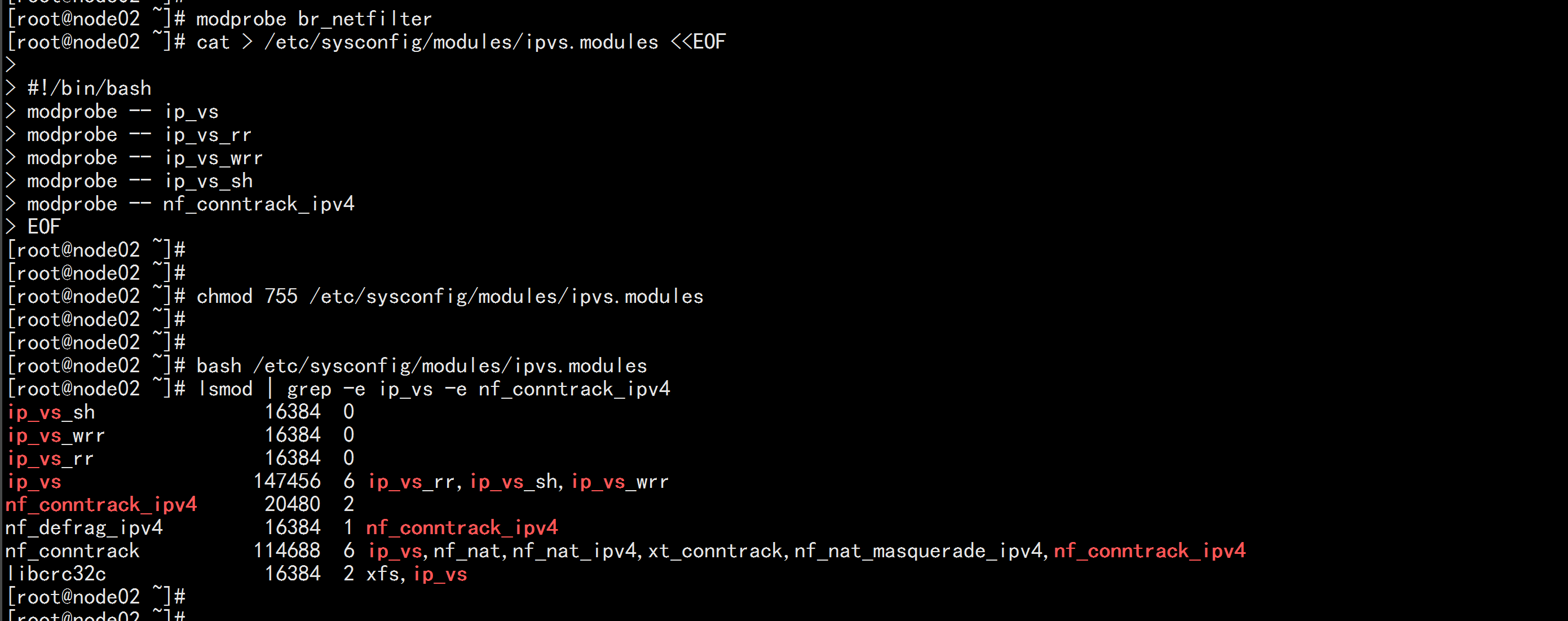

1.8 Prerequisites for running kube-proxy in IPVS mode

    modprobe br_netfilter
    cat > /etc/sysconfig/modules/ipvs.modules <<EOF
    #!/bin/bash
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack_ipv4
    EOF
    chmod 755 /etc/sysconfig/modules/ipvs.modules
    bash /etc/sysconfig/modules/ipvs.modules
    lsmod | grep -e ip_vs -e nf_conntrack_ipv4
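The final `lsmod | grep` shows what is loaded but leaves it to the reader to spot what is absent. The sketch below lists the missing modules instead; it assumes the `/proc/modules` format, where the module name is the first field on each line, and the function name is made up for illustration.

```shell
# Print each required module that does not appear in a
# /proc/modules-style listing (default: /proc/modules).
missing_modules() {
    listing=${1:-/proc/modules}
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 br_netfilter; do
        # The module name is the first whitespace-separated field
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$listing" ||
            echo "$mod"
    done
}

# Usage on a node: missing_modules
# Empty output means all six modules are loaded.
```

On kernels 4.19 and later, `nf_conntrack_ipv4` has been merged into `nf_conntrack`, so with a mainline kernel the module name in both the script and this check would need to change accordingly.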

Part 2: Installing the k8s cluster

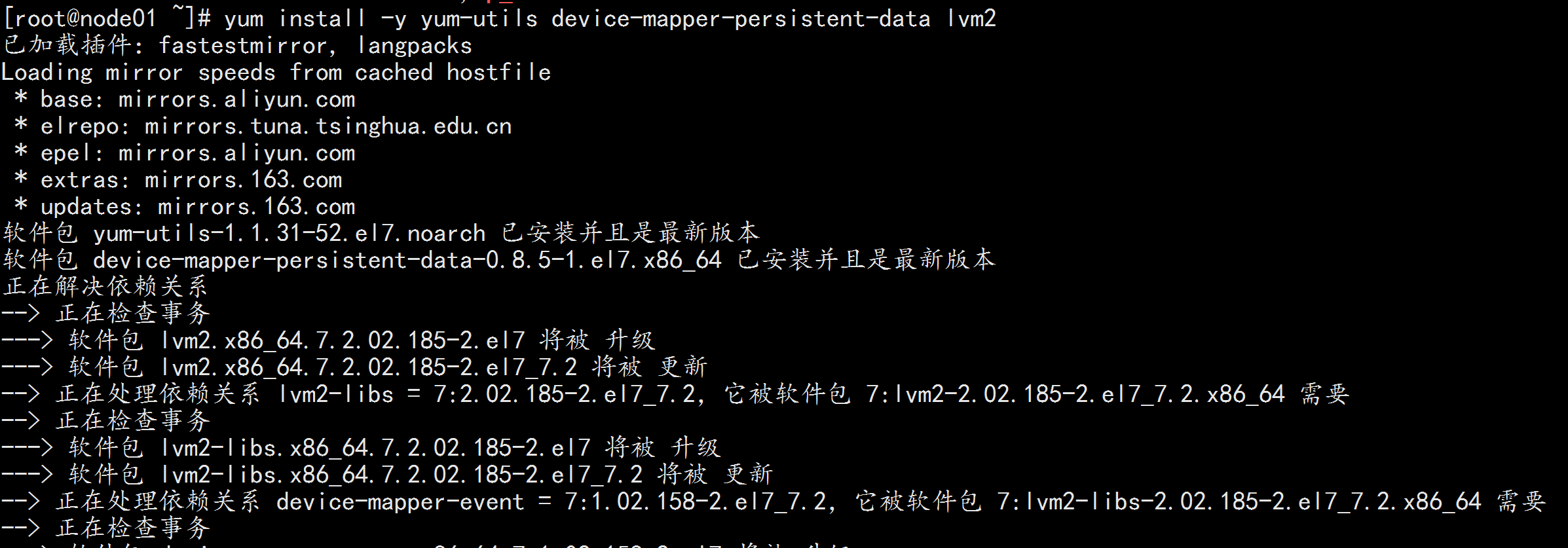

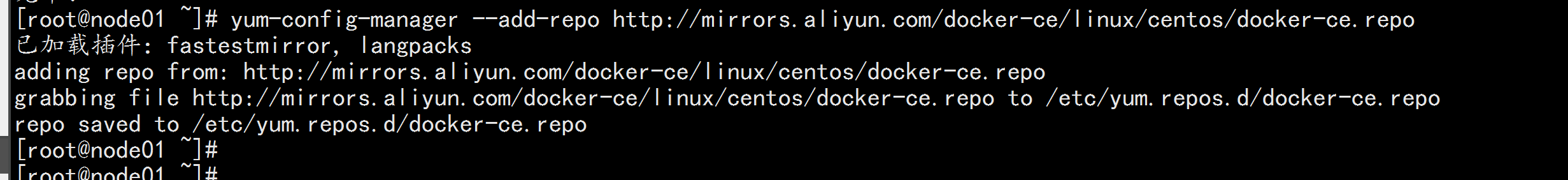

2.1 Install Docker

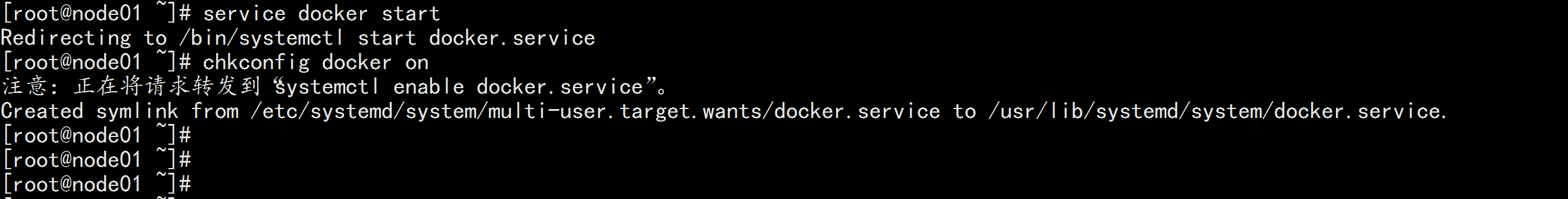

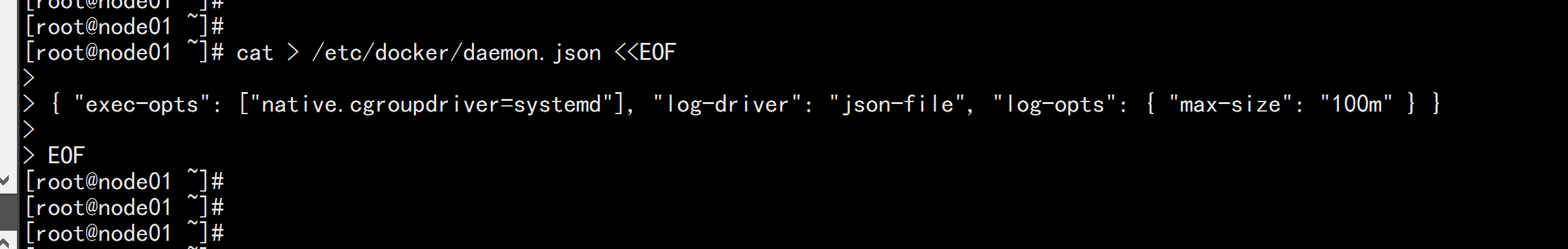

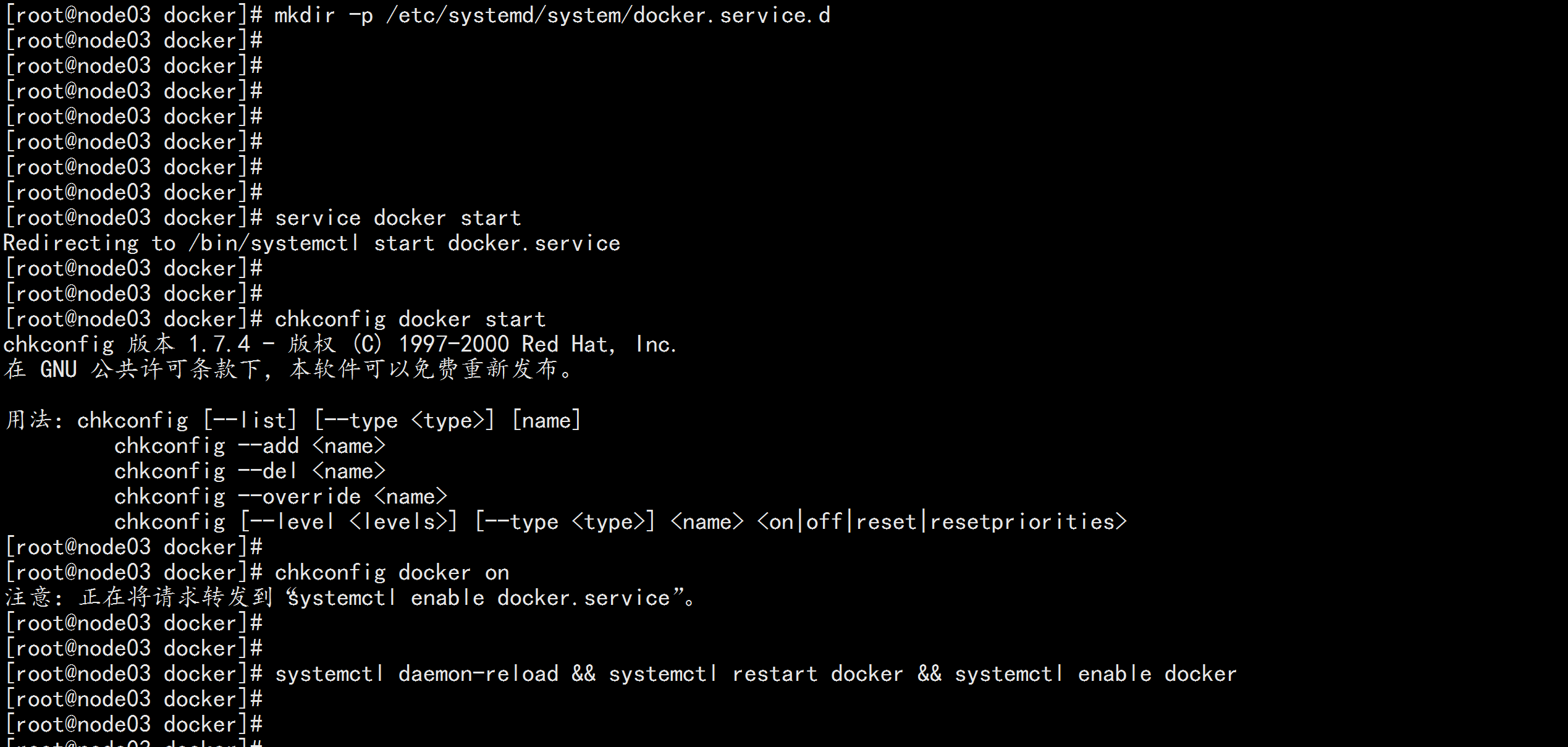

Run on every node:

    yum install -y yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    yum update -y && yum install docker-ce-18.09.9 docker-ce-cli-18.09.9 containerd.io -y

Reboot the machine and check the kernel version:

    reboot
    uname -r

If the machine did not boot into the 4.4 kernel, set the default entry again:

    grub2-set-default "CentOS Linux (4.4.182-1.el7.elrepo.x86_64) 7 (Core)" && reboot

If that still does not work, edit the file with vim /etc/grub2.cfg and comment out the 3.10 kernel entry so that the machine boots the 4.4 kernel.

Start Docker and configure it:

    service docker start
    chkconfig docker on
    ## Create the /etc/docker directory
    mkdir /etc/docker
    # Configure the daemon
    cat > /etc/docker/daemon.json <<EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      }
    }
    EOF
    mkdir -p /etc/systemd/system/docker.service.d
    # Restart the Docker service
    systemctl daemon-reload && systemctl restart docker && systemctl enable docker
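The cgroup driver set in daemon.json only takes effect after Docker restarts, so it is worth comparing the configured value with what the daemon reports. A rough sketch; the helper name is hypothetical and the text match is deliberately simple rather than a real JSON parse.

```shell
# Print the cgroup driver named in a daemon.json-style file
# (empty output if no native.cgroupdriver option is present).
configured_cgroup_driver() {
    sed -n 's/.*native\.cgroupdriver=\([a-z]*\).*/\1/p' "${1:-/etc/docker/daemon.json}"
}

# On a node with Docker running, compare the two values:
#   configured_cgroup_driver
#   docker info --format '{{.CgroupDriver}}'
```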

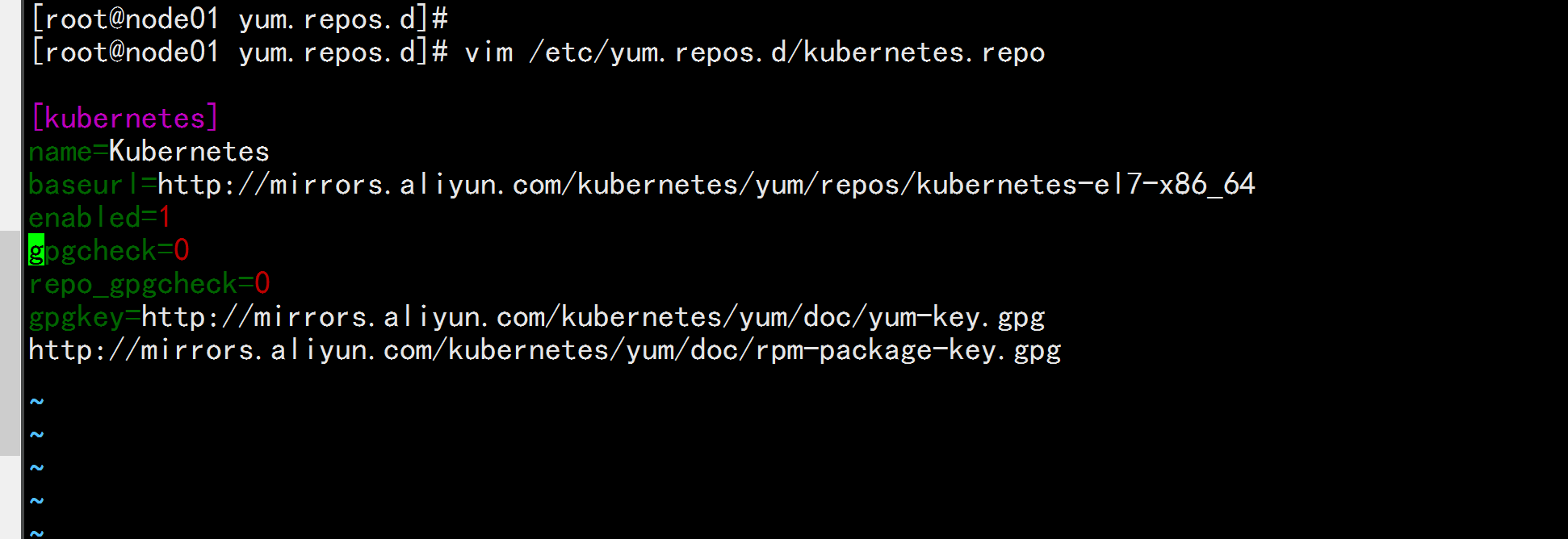

2.2 Install kubeadm (master and worker nodes)

    cat >> /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

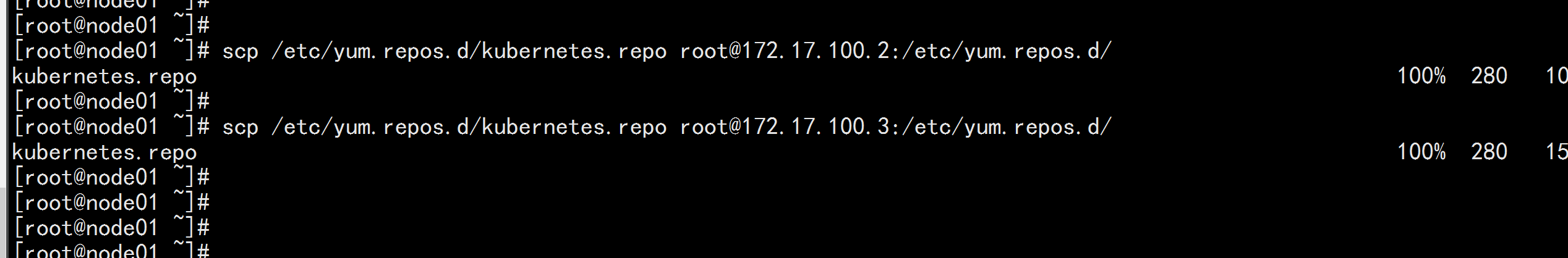

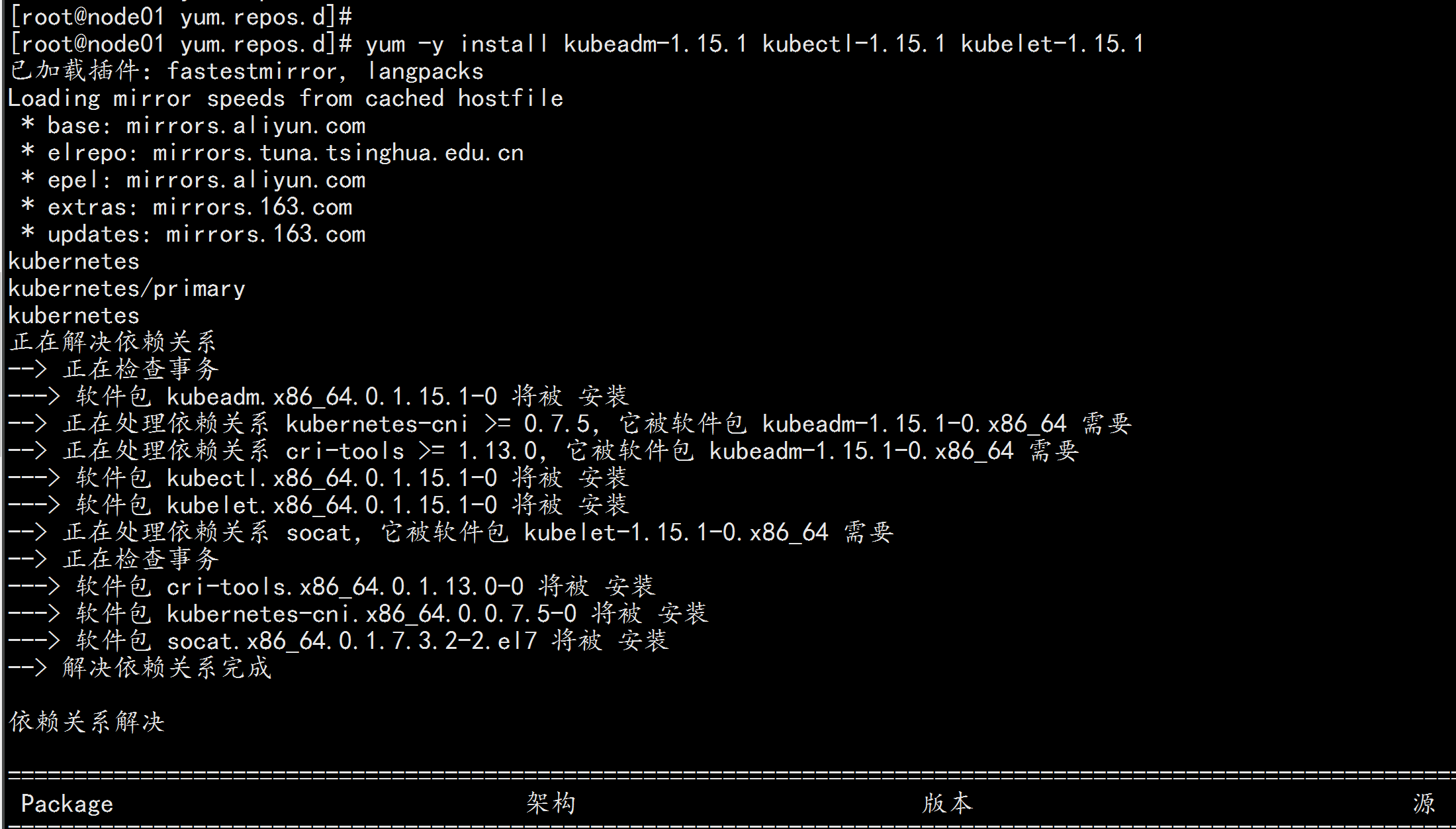

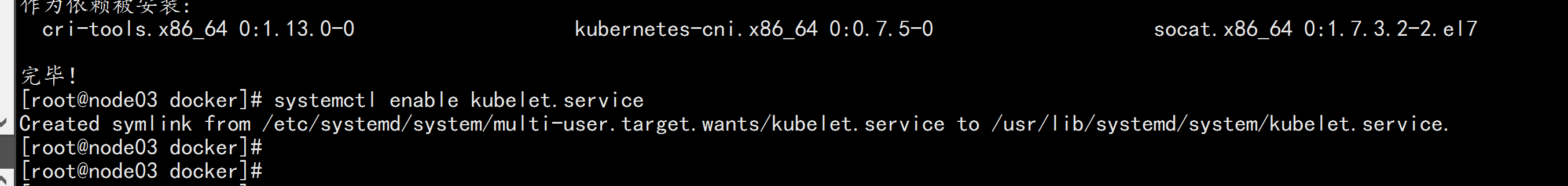

    yum -y install kubeadm-1.15.1 kubectl-1.15.1 kubelet-1.15.1
    systemctl enable kubelet.service

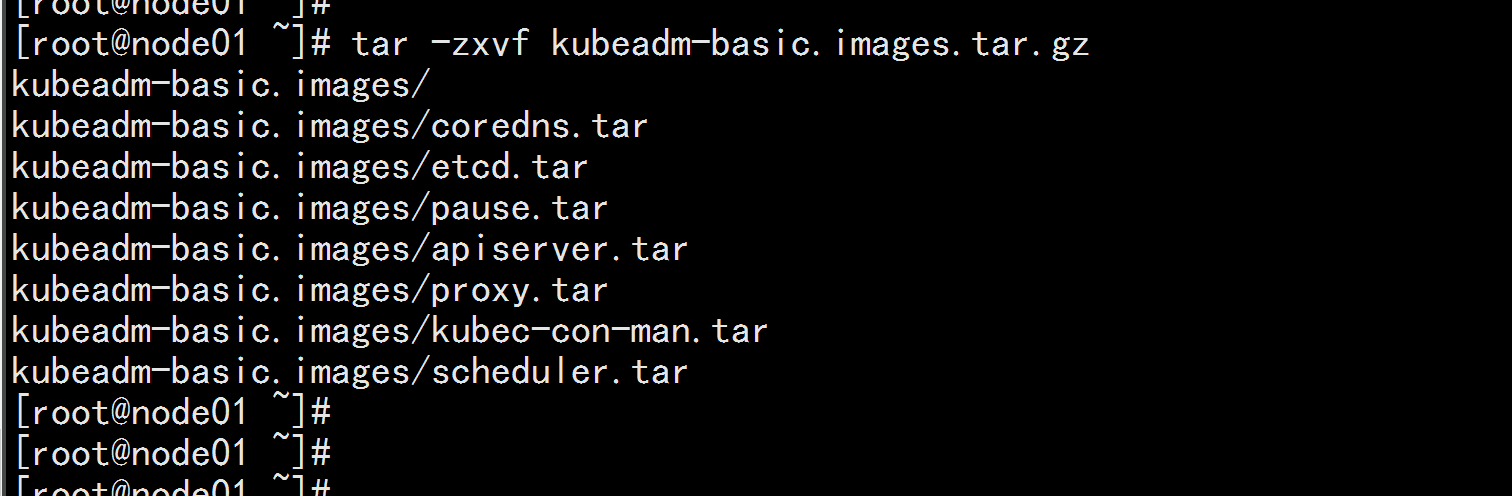

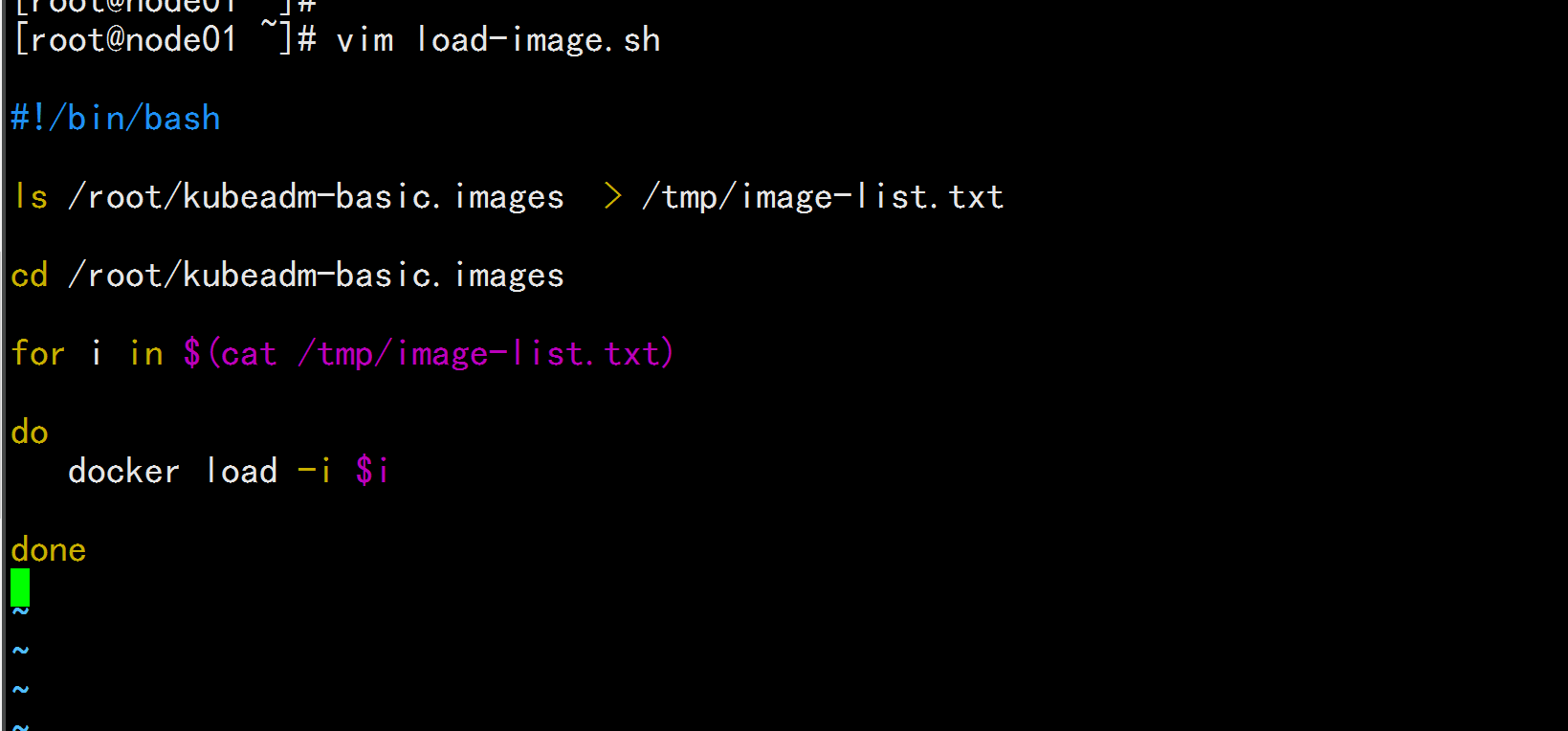

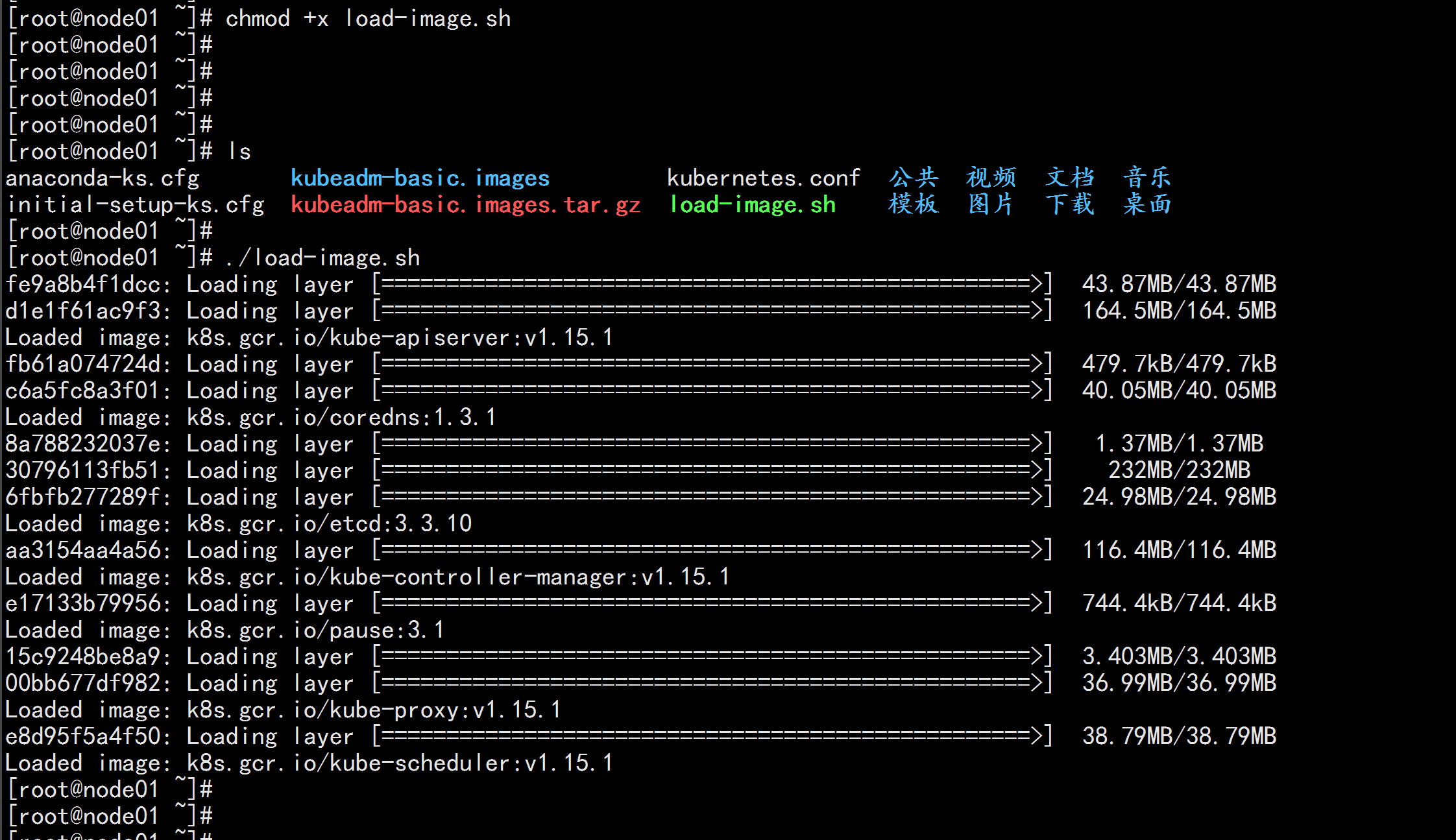

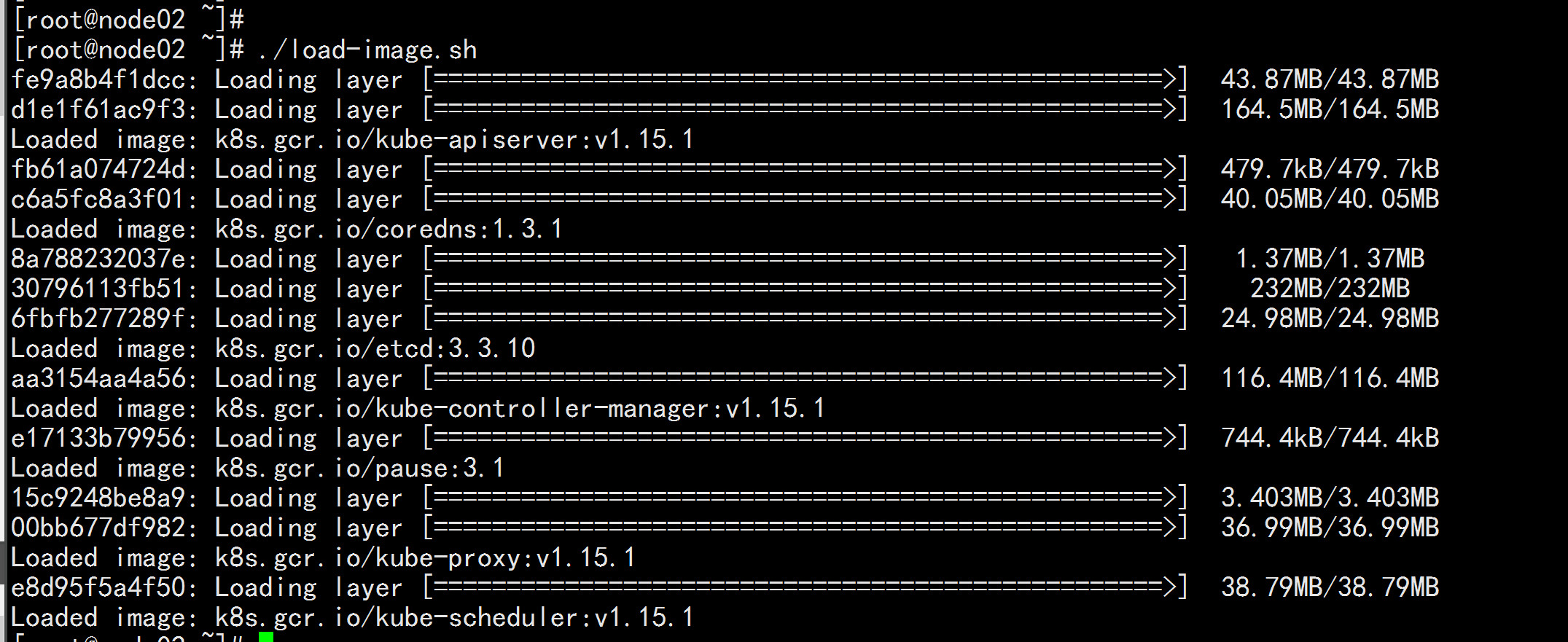

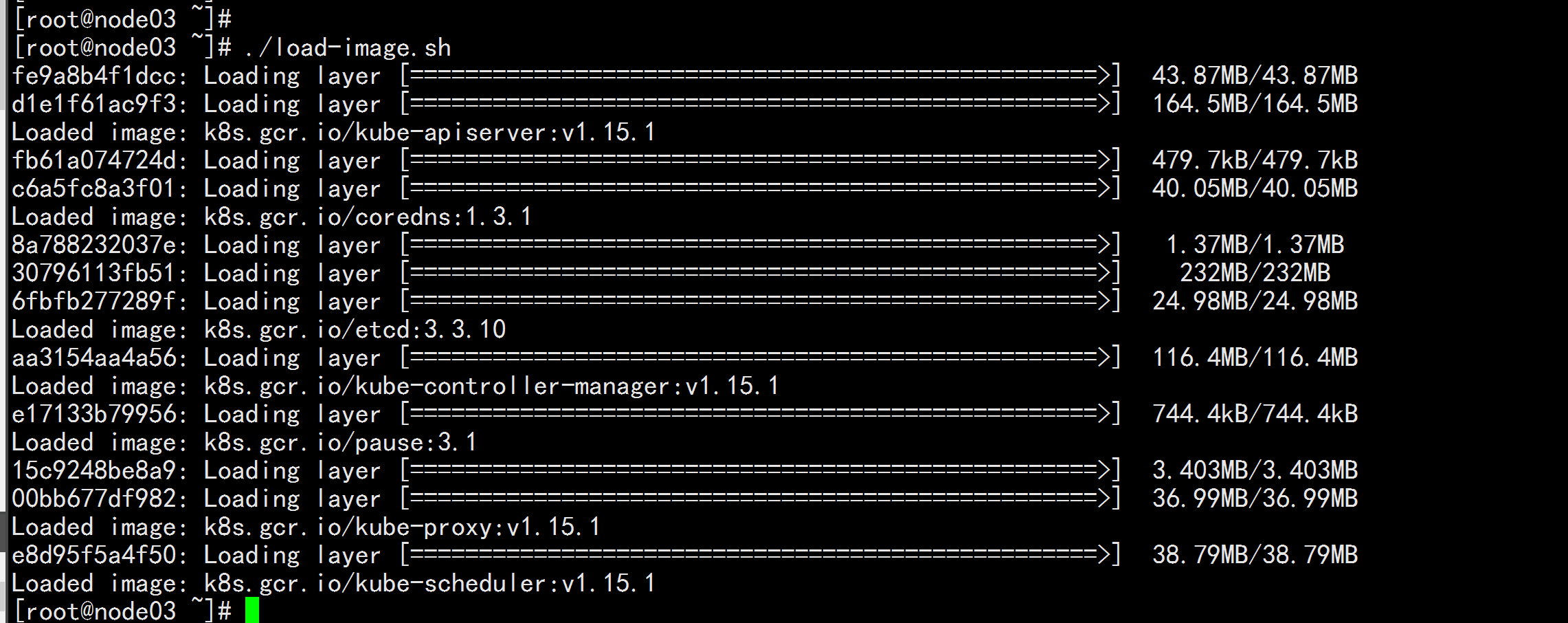

On all nodes, upload the image archive kubeadm-basic.images.tar.gz and import it:

    tar -zxvf kubeadm-basic.images.tar.gz
    ### Write an import script
    vim load-image.sh

The script contains:

    #!/bin/bash
    ls /root/kubeadm-basic.images > /tmp/image-list.txt
    cd /root/kubeadm-basic.images
    for i in $(cat /tmp/image-list.txt)
    do
        docker load -i $i
    done

Make it executable and run it:

    chmod +x load-image.sh
    ./load-image.sh
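The import loop can also be written without the temporary list file and with a stop on the first failed load. The version below is a sketch of that variation, not the author's script; `LOADER` is a hypothetical override that lets the loop be dry-run on a machine without Docker.

```shell
# Load every archive in DIR, stopping at the first failure.
# LOADER defaults to "docker load -i"; set LOADER=echo for a dry run.
load_images() {
    dir=${1:-/root/kubeadm-basic.images}
    for archive in "$dir"/*; do
        [ -f "$archive" ] || continue    # skip if the glob matched nothing
        ${LOADER:-docker load -i} "$archive" || return 1
    done
}

# Dry run:  LOADER=echo load_images /root/kubeadm-basic.images
# Real run: load_images
```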

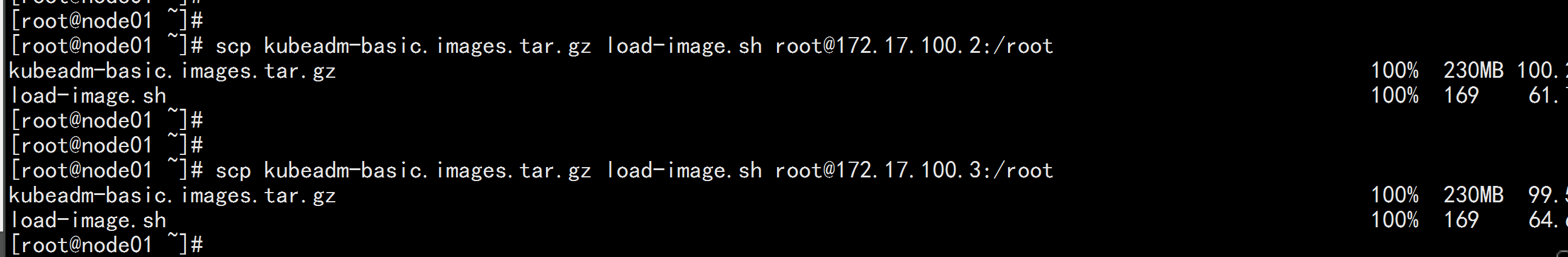

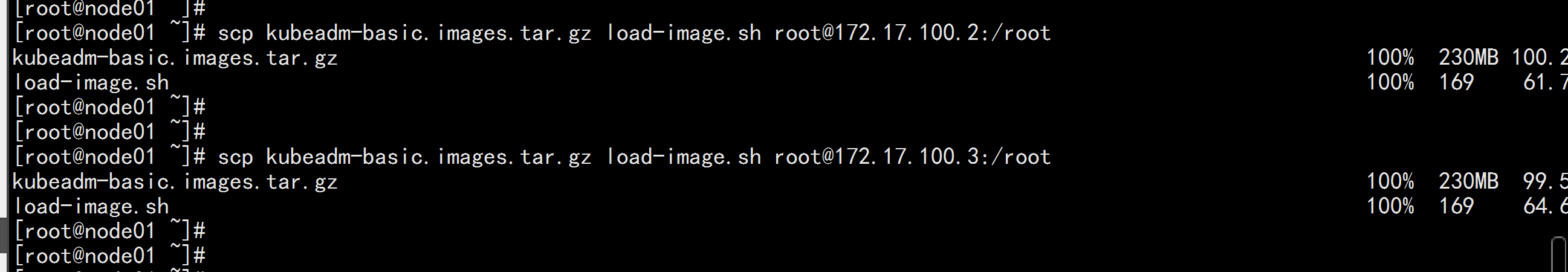

    scp kubeadm-basic.images.tar.gz load-image.sh root@172.17.100.2:/root
    scp kubeadm-basic.images.tar.gz load-image.sh root@172.17.100.3:/root

Log in to 172.17.100.2:

    tar -zxvf kubeadm-basic.images.tar.gz
    ./load-image.sh

Log in to 172.17.100.3:

    tar -zxvf kubeadm-basic.images.tar.gz
    ./load-image.sh

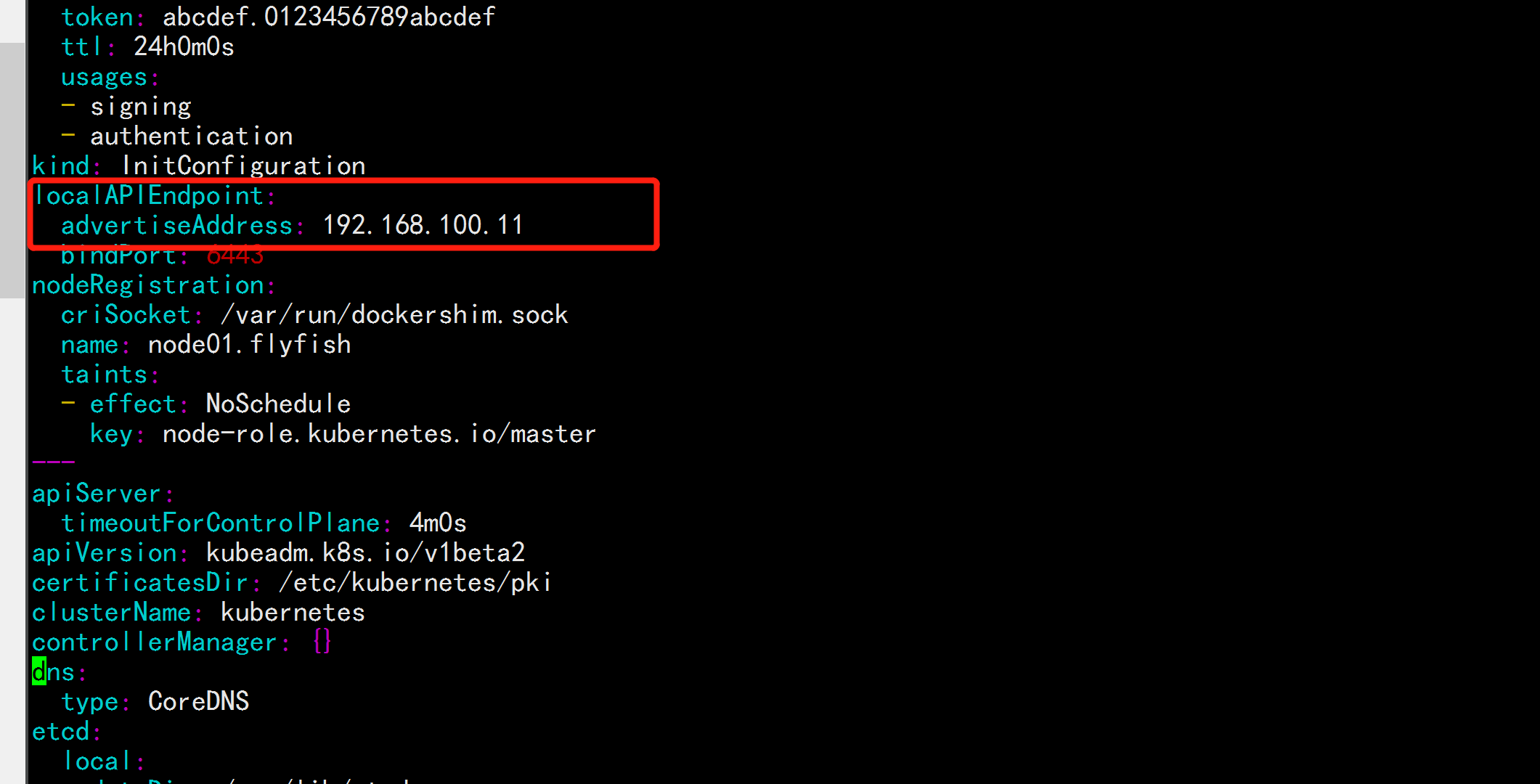

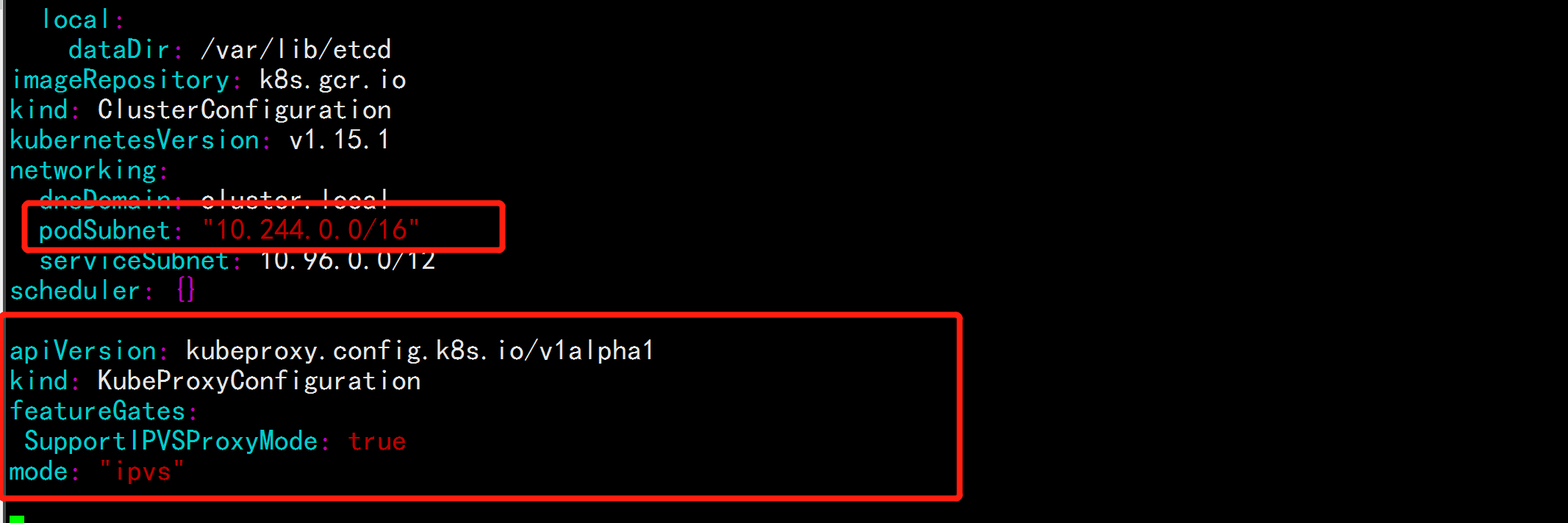

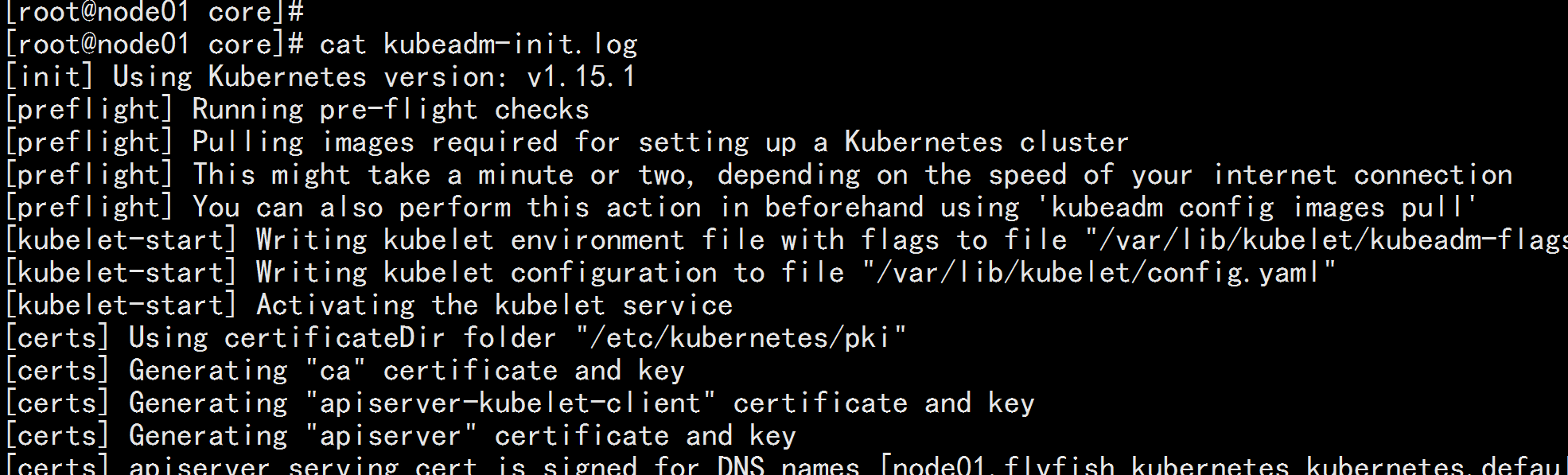

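2.3 Initialize the master node

    kubeadm config print init-defaults > kubeadm-config.yaml
    vim kubeadm-config.yaml

In the file, change advertiseAddress to the master node's IP address and add podSubnet under networking:

    advertiseAddress: 192.168.100.11
    podSubnet: "10.244.0.0/16"

Then add the following as a separate YAML document at the end of the file:

    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    featureGates:
      SupportIPVSProxyMode: true
    mode: "ipvs"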
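Two of the three edits to kubeadm-config.yaml can be scripted. The sketch below sets advertiseAddress and appends the kube-proxy document; adding podSubnet under networking is still left as a manual edit. The function name is hypothetical.

```shell
# Set advertiseAddress in FILE to IP, then append a kube-proxy
# document that selects IPVS mode.
patch_kubeadm_config() {
    file=$1
    ip=$2
    tmp=$(mktemp)
    # Rewrite the advertiseAddress value, keeping the line's indentation
    sed "s/^\([[:space:]]*advertiseAddress:\).*/\1 $ip/" "$file" > "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
    # Append the kube-proxy settings as a separate YAML document
    cat >> "$file" <<'EOF'
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: "ipvs"
EOF
}

# Usage: patch_kubeadm_config kubeadm-config.yaml 192.168.100.11
```

The function appends every time it is called, so it should be run once on a freshly generated file.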

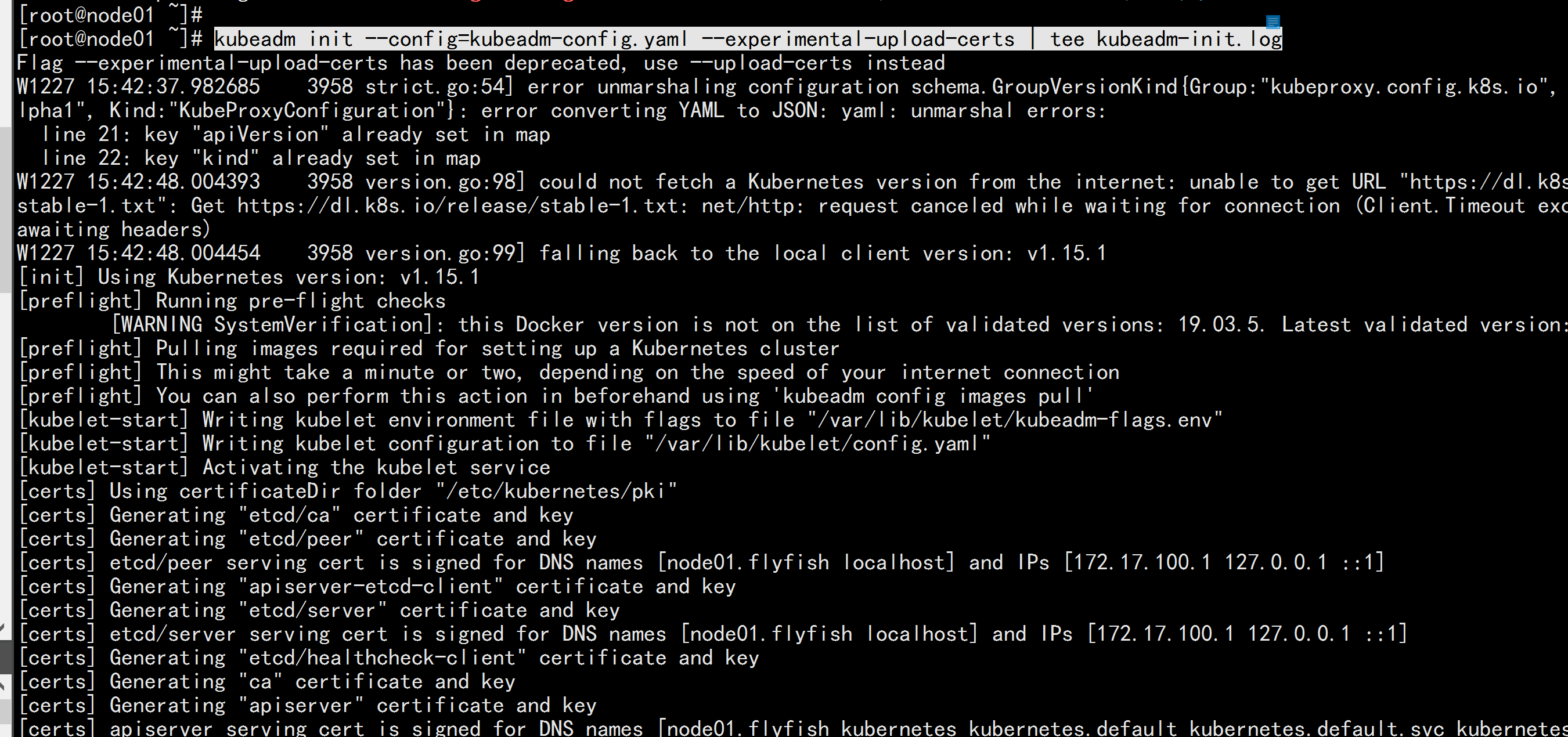

kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log

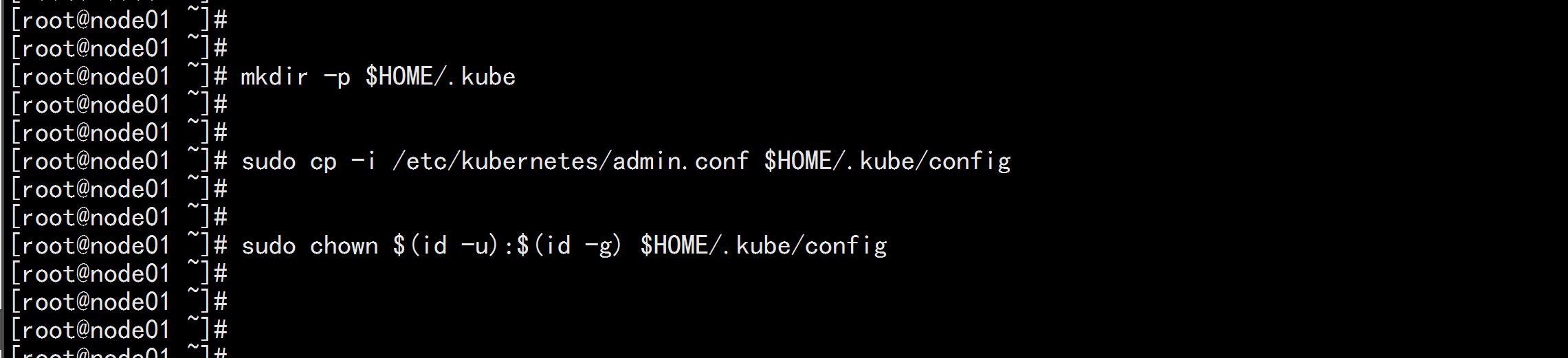

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

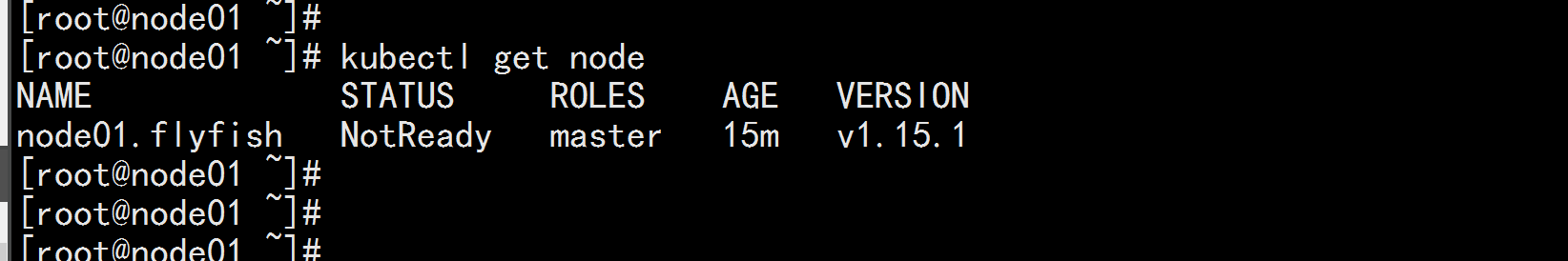

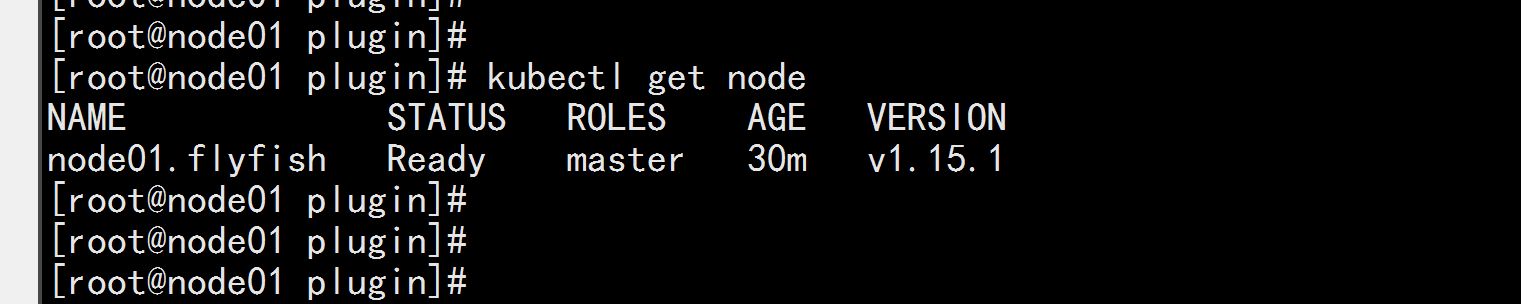

kubectl get node

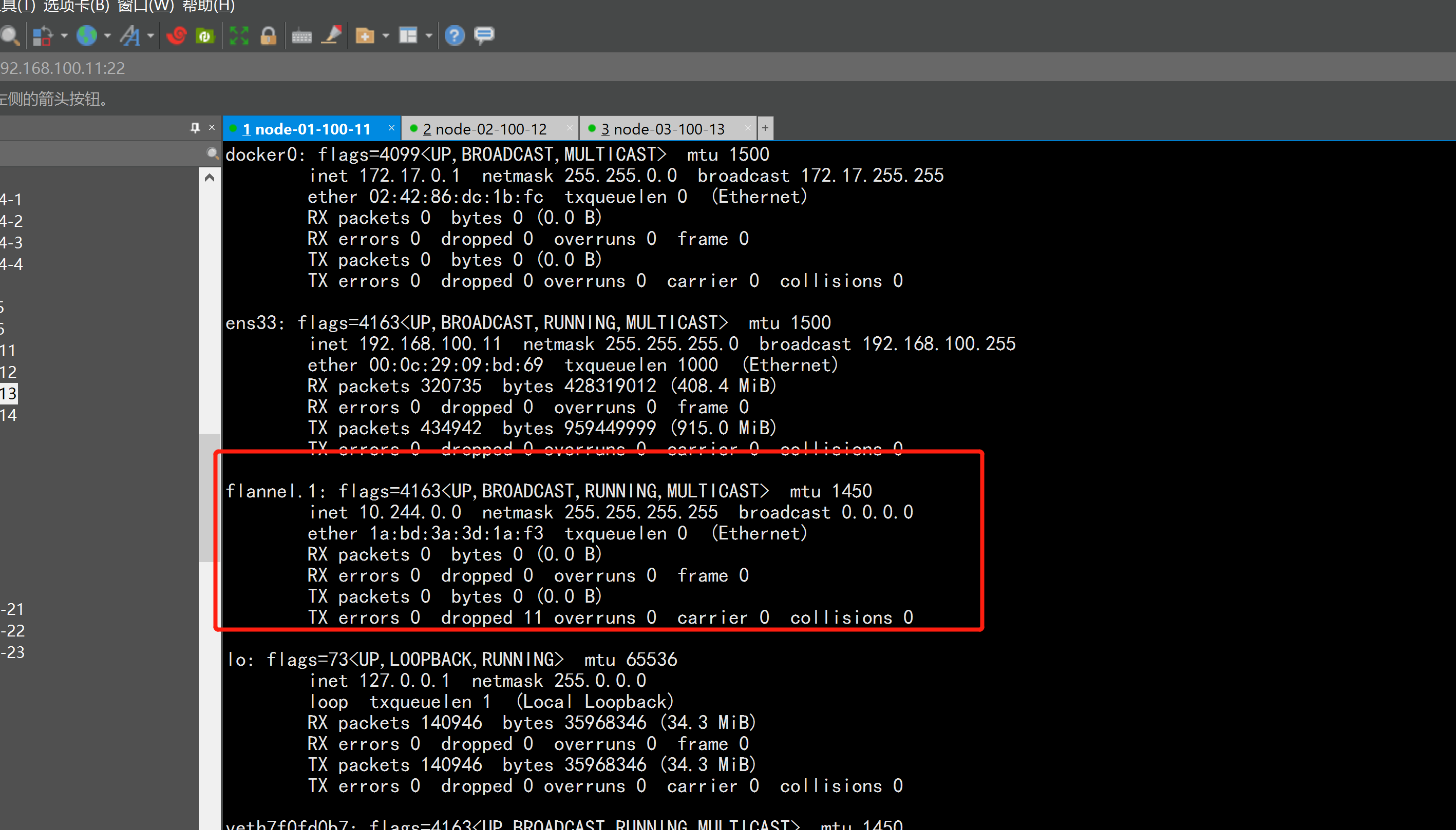

2.4 Set up the flannel network

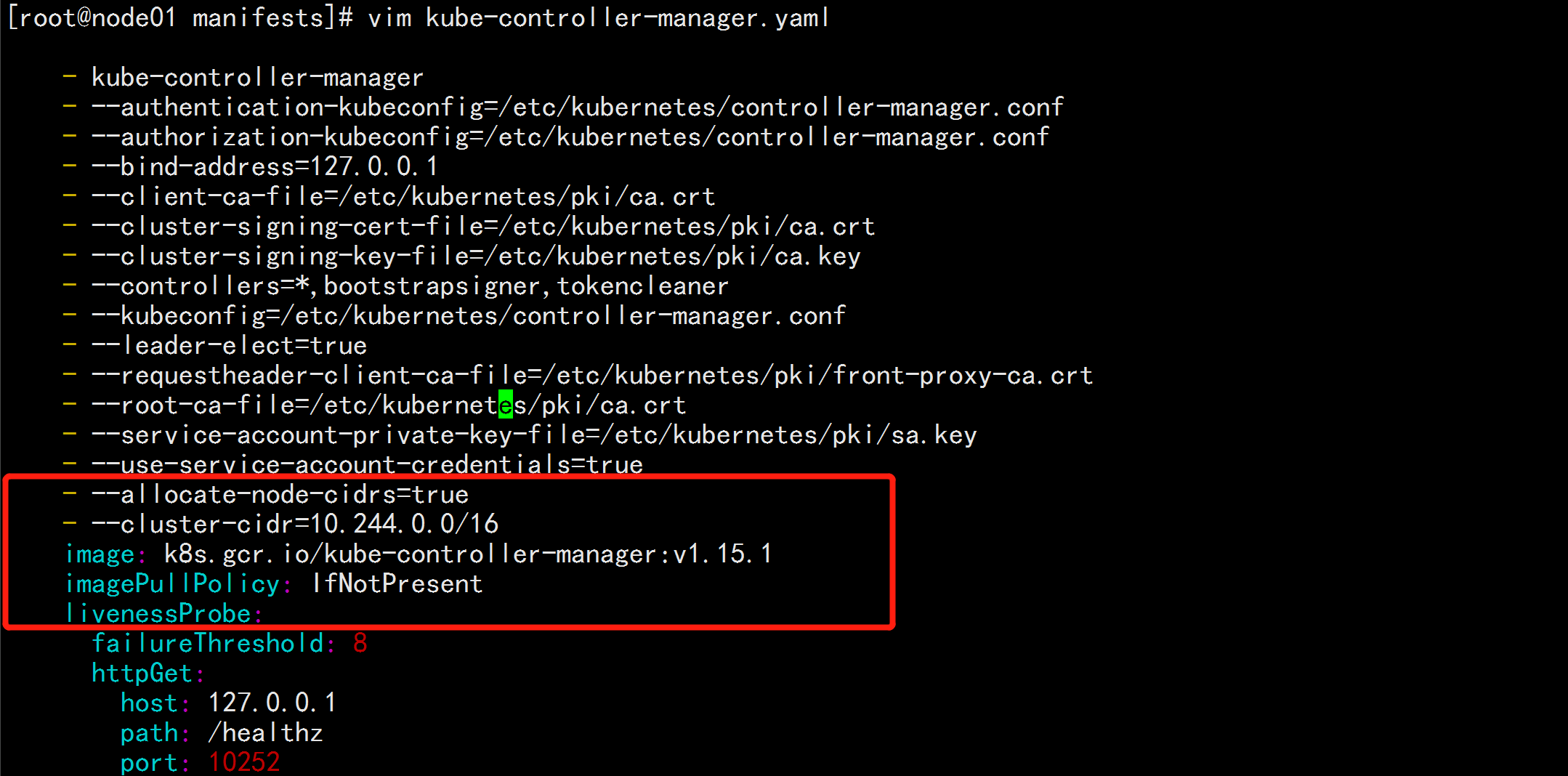

    cd /etc/kubernetes/manifests/
    vim kube-controller-manager.yaml

Add the following flags:

    - --allocate-node-cidrs=true
    - --cluster-cidr=10.244.0.0/16

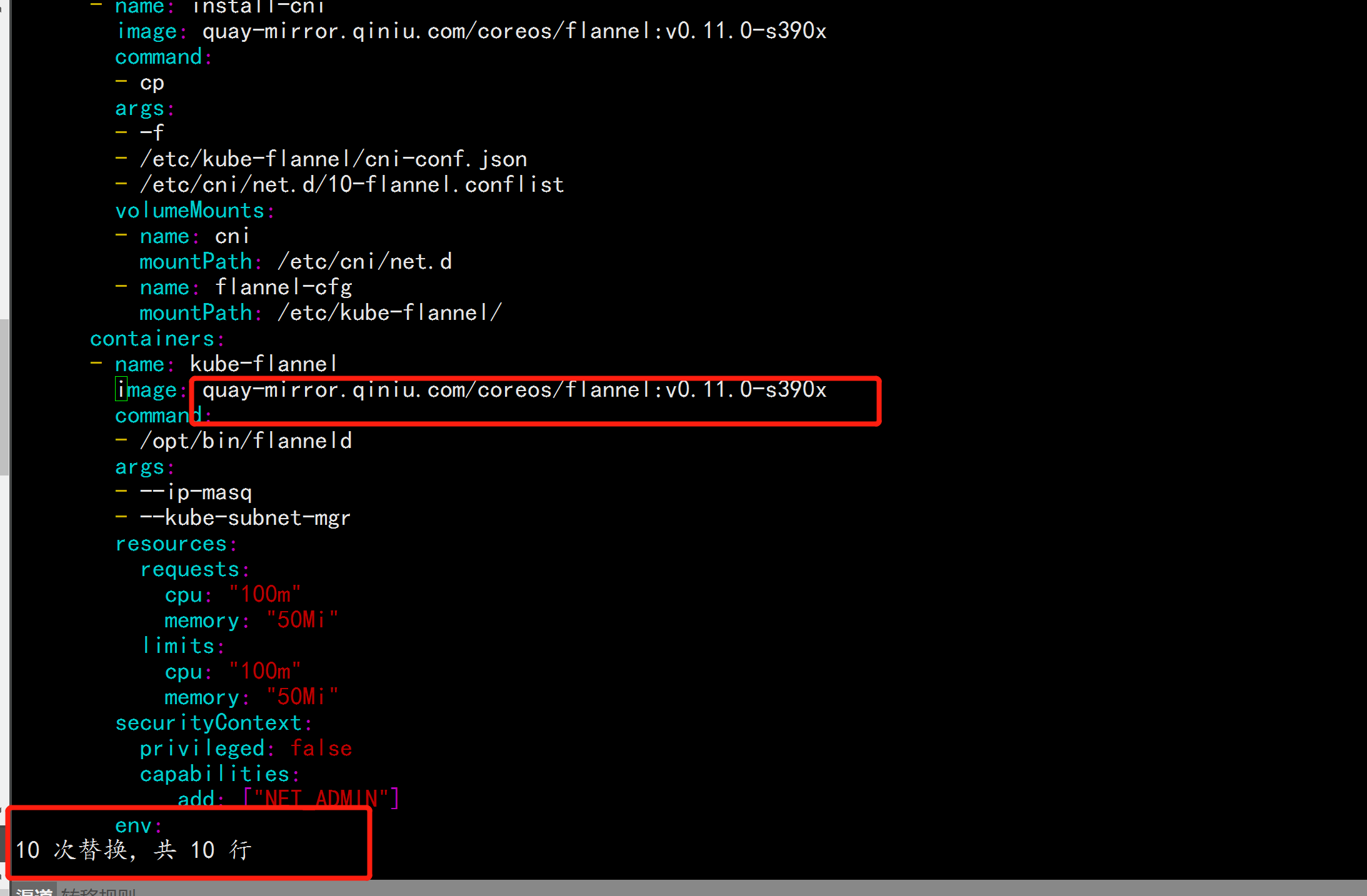

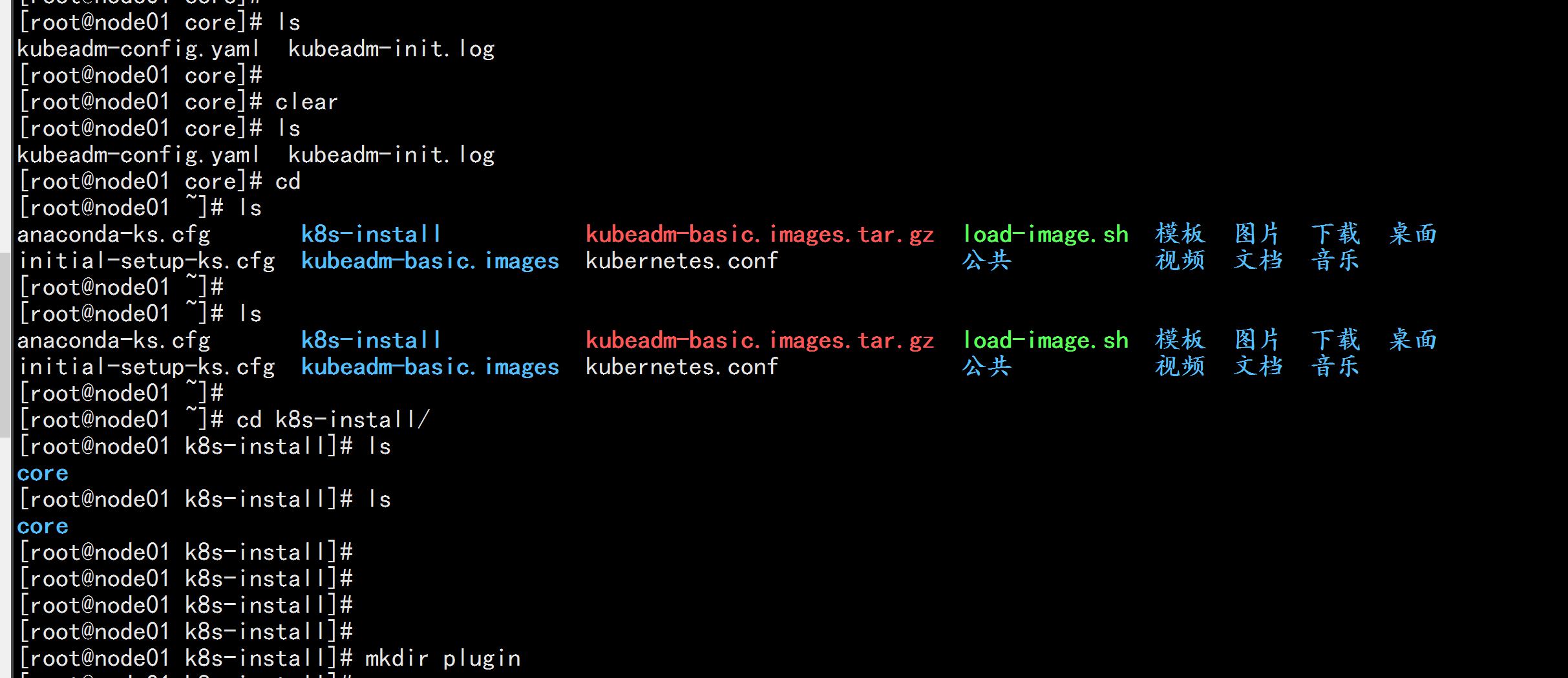

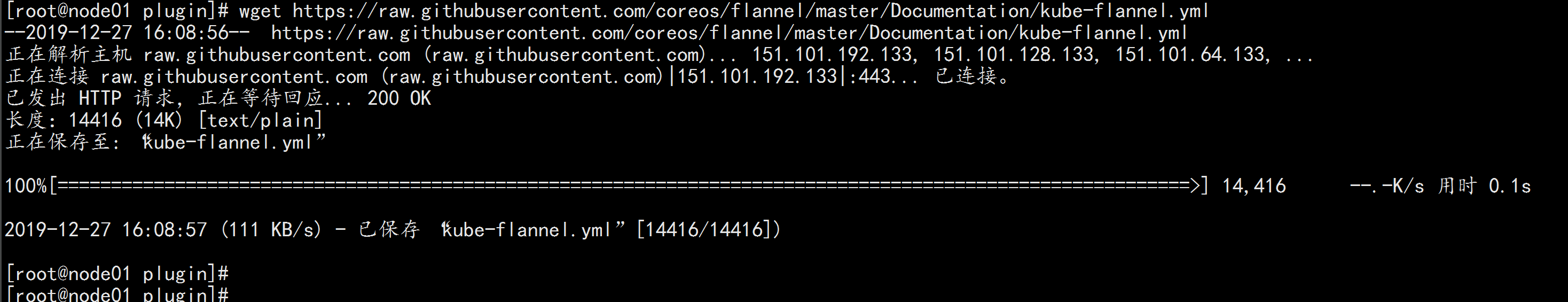

    mkdir -p k8s-install/{core,plugin}
    mv kubeadm-init.log k8s-install/core
    mv kubeadm-config.yaml k8s-install/core
    cd k8s-install/plugin
    wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    vim kube-flannel.yml

The image quay.io/coreos/flannel:v0.11.0-amd64 is hosted on quay.io, which is outside China and cannot be reached without a proxy, so in kube-flannel.yml replace quay.io with quay-mirror.qiniu.com.
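The registry change in kube-flannel.yml can be done with sed instead of by hand. A minimal sketch that prints the rewritten manifest so the result can be reviewed first; the function name is made up, and the mirror host is simply the one named in the text.

```shell
# Print a flannel manifest with the quay.io image references
# pointed at the mirror registry. The input file is not modified.
use_flannel_mirror() {
    sed 's#quay\.io/coreos/flannel#quay-mirror.qiniu.com/coreos/flannel#g' "$1"
}

# Usage:
#   use_flannel_mirror kube-flannel.yml > kube-flannel-mirror.yml
#   grep 'image:' kube-flannel-mirror.yml
```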

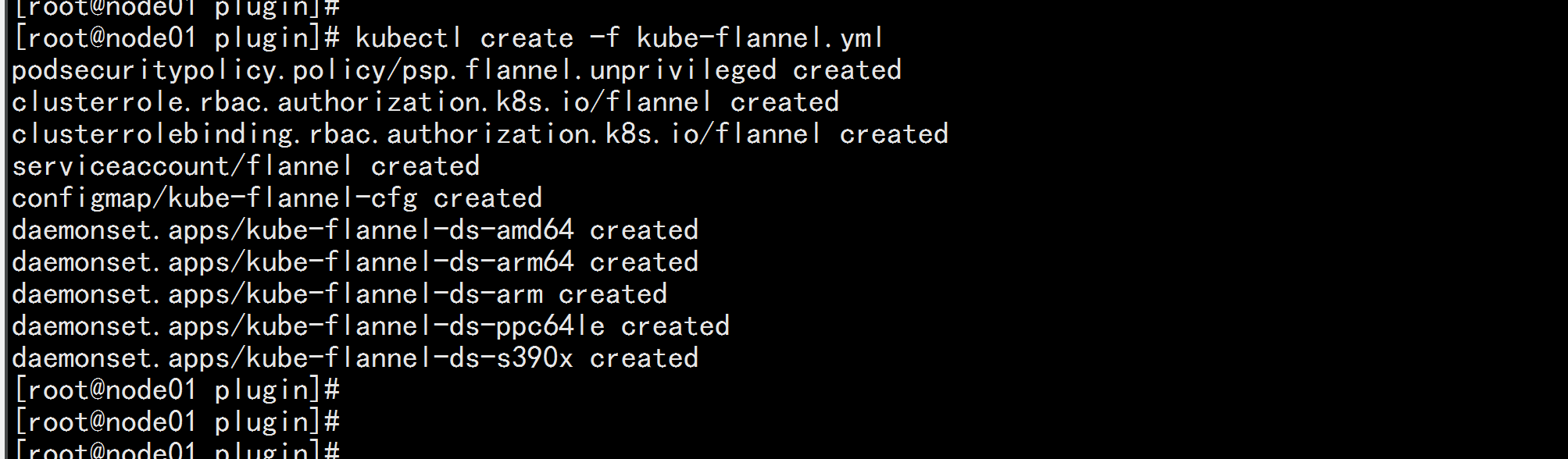

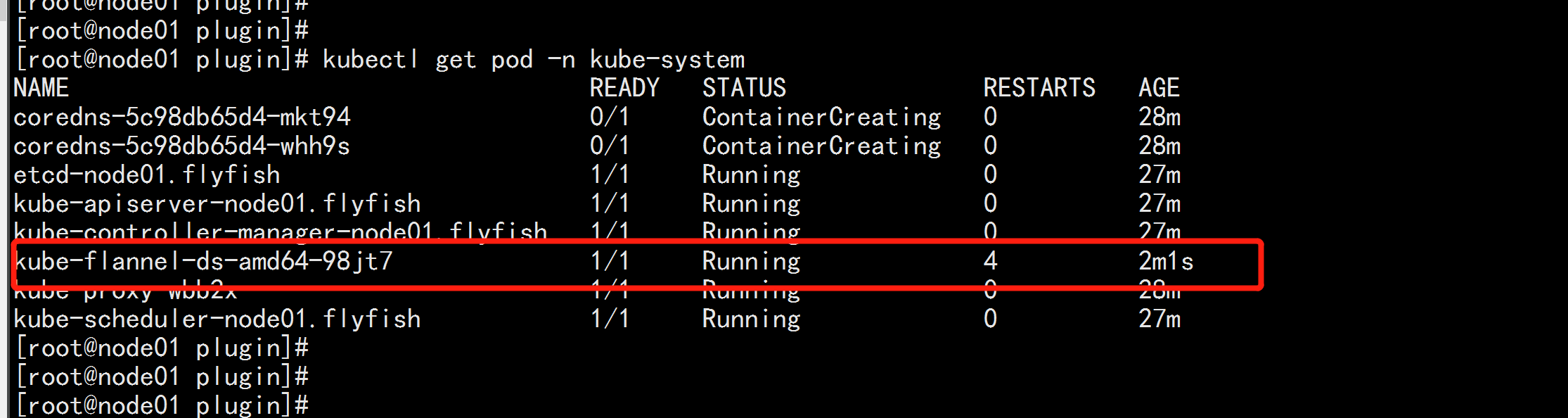

    kubectl create -f kube-flannel.yml
    kubectl get pod -n kube-system

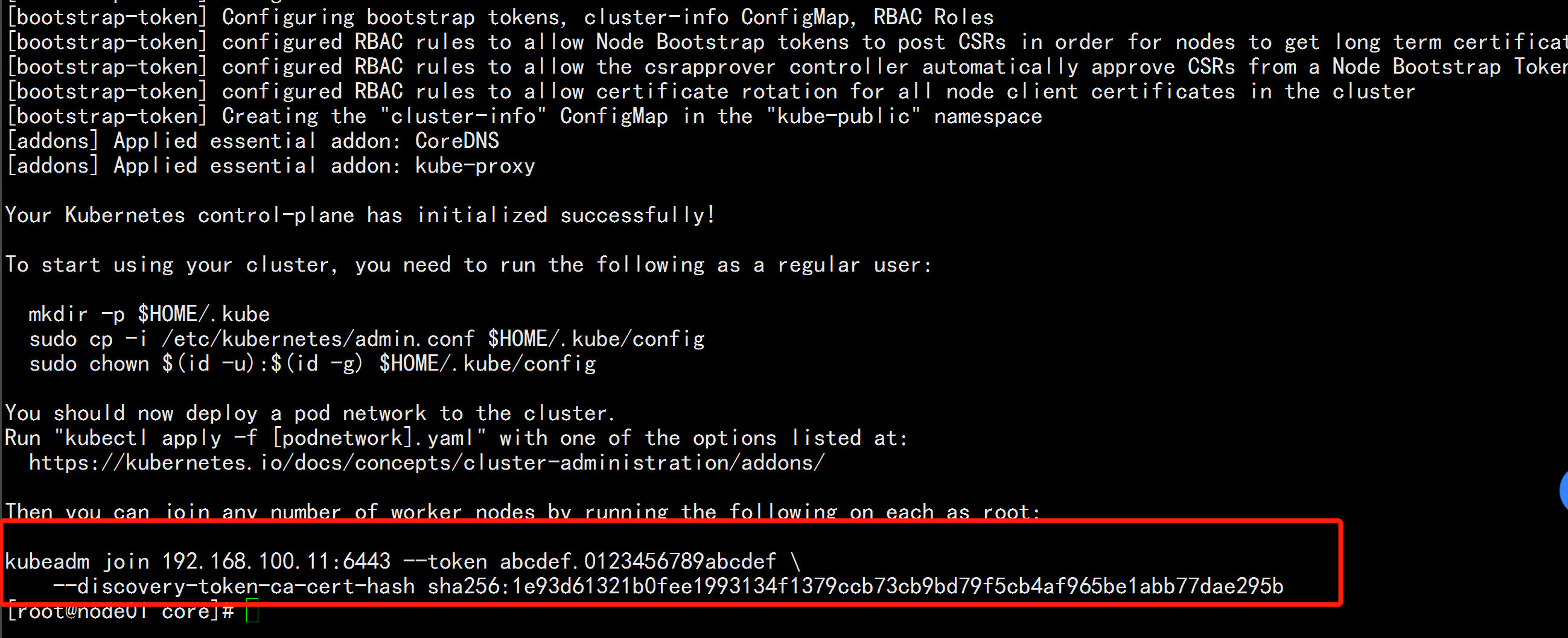

2.5 Join the other nodes to the cluster

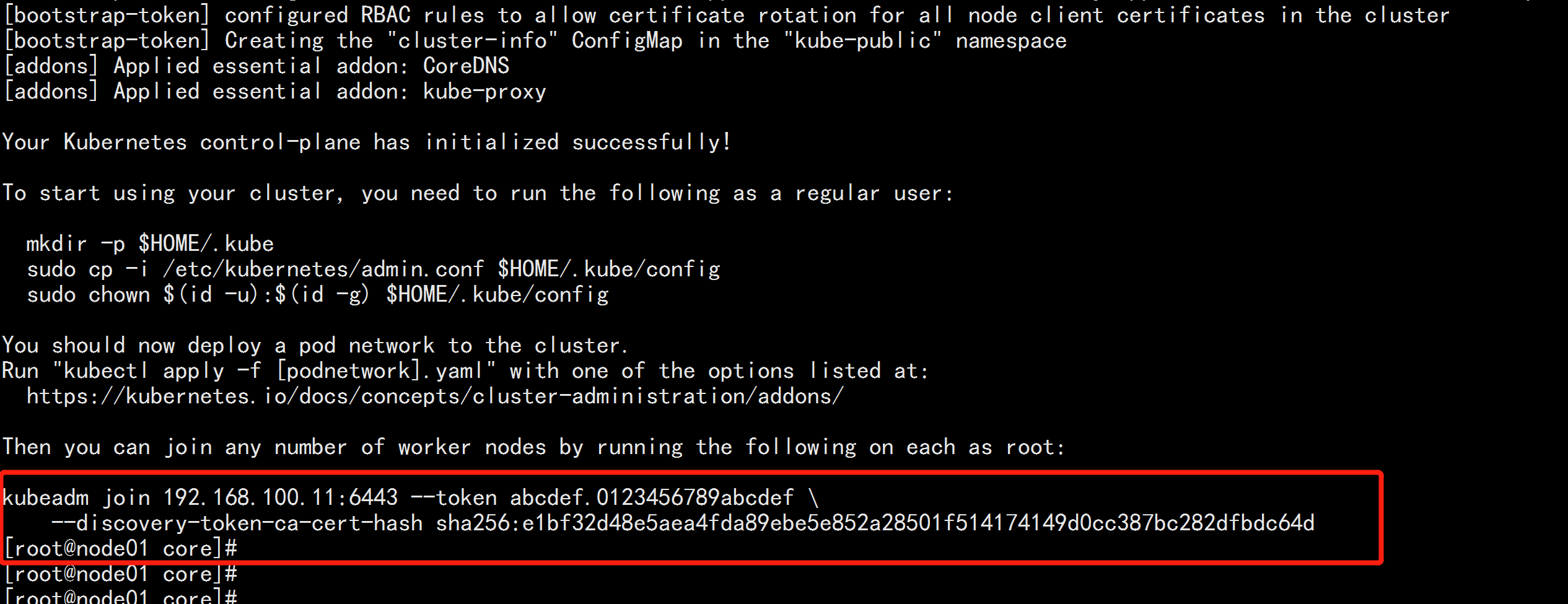

On node01.flyfish:

    cd /root/k8s-install/core
    cat kubeadm-init.log

The join command is at the end of the log:

    kubeadm join 192.168.100.11:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:1e93d61321b0fee1993134f1379ccb73cb9bd79f5cb4af965be1abb77dae295b

On node02.flyfish:

    kubeadm join 192.168.100.11:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:1e93d61321b0fee1993134f1379ccb73cb9bd79f5cb4af965be1abb77dae295b

On node03.flyfish:

    kubeadm join 192.168.100.11:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:1e93d61321b0fee1993134f1379ccb73cb9bd79f5cb4af965be1abb77dae295b

On node01.flyfish:

    kubectl get node
    kubectl get pods -n kube-system

The three-node k8s cluster is now installed.
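Rather than copying the join command out of the log by eye, it can be extracted as a single line. This is a sketch that assumes the log ends with a two-line `kubeadm join` command like the one shown above; the function name is hypothetical.

```shell
# Print the last "kubeadm join" command in a kubeadm init log
# as one line, with the trailing backslash and indentation removed.
join_command() {
    grep -A1 'kubeadm join' "$1" | tail -n 2 |
        sed -e 's/\\$//' -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' |
        paste -sd ' ' -
}

# Usage (the log was moved to k8s-install/core earlier):
#   join_command /root/k8s-install/core/kubeadm-init.log
```

If the token in the log has expired, running `kubeadm token create --print-join-command` on the master prints a fresh join command.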
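Newly joined nodes usually show NotReady for a short while until the network plugin is running on them. A small filter over the `kubectl get node` output makes it easy to see which nodes are still pending; this is a sketch and the function name is made up.

```shell
# Read `kubectl get node` output on stdin and print the names of
# nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage: kubectl get node | not_ready_nodes
# Empty output means every node reports Ready.
```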

2.6 Deploy the Dashboard UI

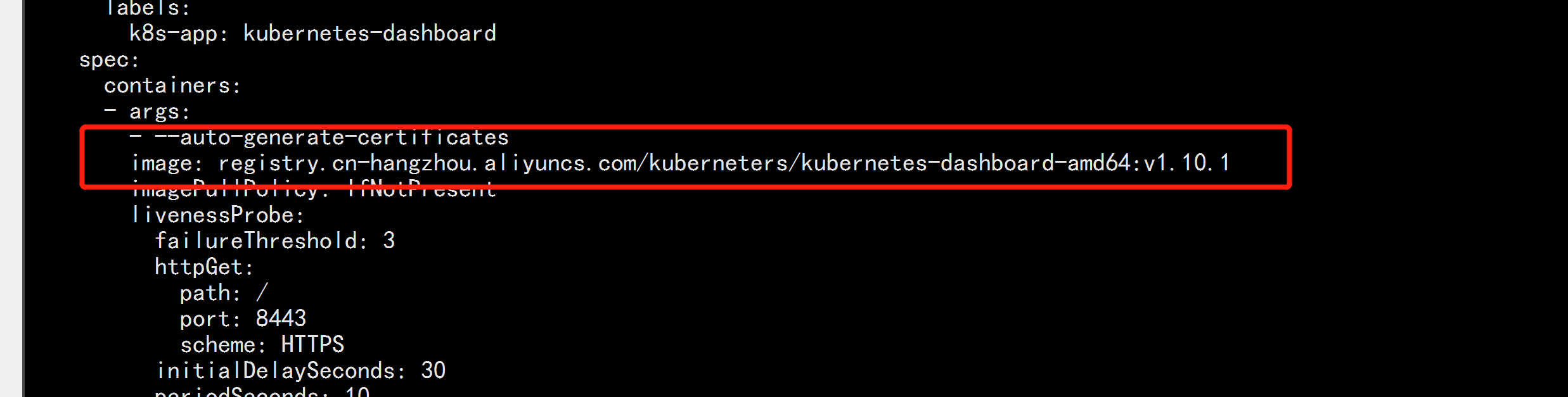

Note: perform the following steps on node01.flyfish.

Step 1: create the Dashboard YAML file:

    wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

Either run the following commands or edit kubernetes-dashboard.yaml by hand:

    sed -i 's#k8s.gcr.io#registry.cn-hangzhou.aliyuncs.com/kuberneters#g' kubernetes-dashboard.yaml
    sed -i '/targetPort:/a\ \ \ \ \ \ nodePort: 30001\n\ \ type: NodePort' kubernetes-dashboard.yaml

When editing kubernetes-dashboard.yaml by hand, two changes are needed. First, in the Dashboard Deployment section, change the Dashboard image location. The default image is pulled from the upstream registry, which cannot be reached without a proxy, so change it to image: registry.cn-hangzhou.aliyuncs.com/kuberneters/kubernetes-dashboard-amd64:v1.10.1. The result is shown in the figure:

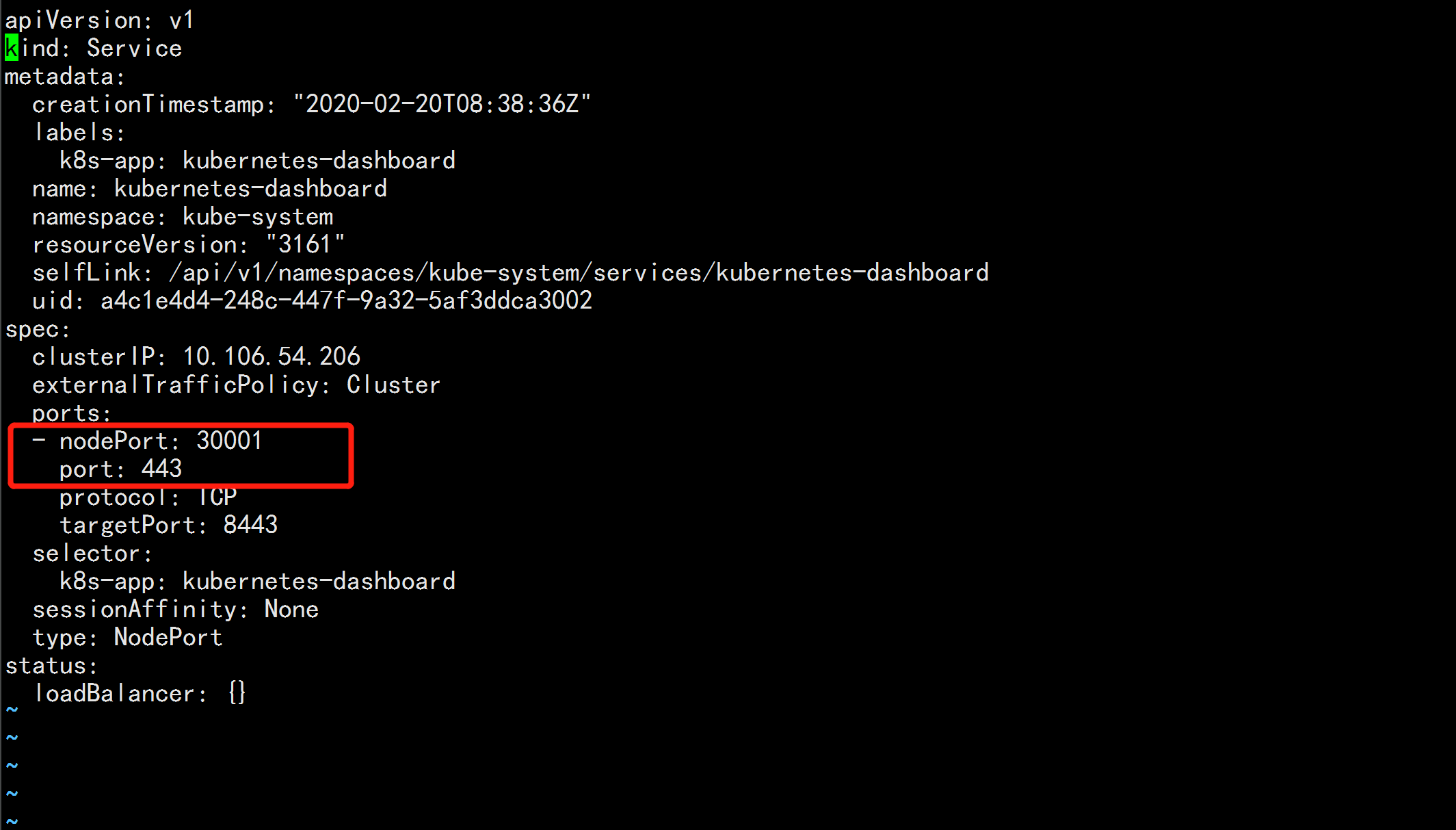

Second, add nodePort: 30001 and type: NodePort to the Dashboard Service section so that the Dashboard port is exposed as a node port for external access. After editing, the file looks like this:

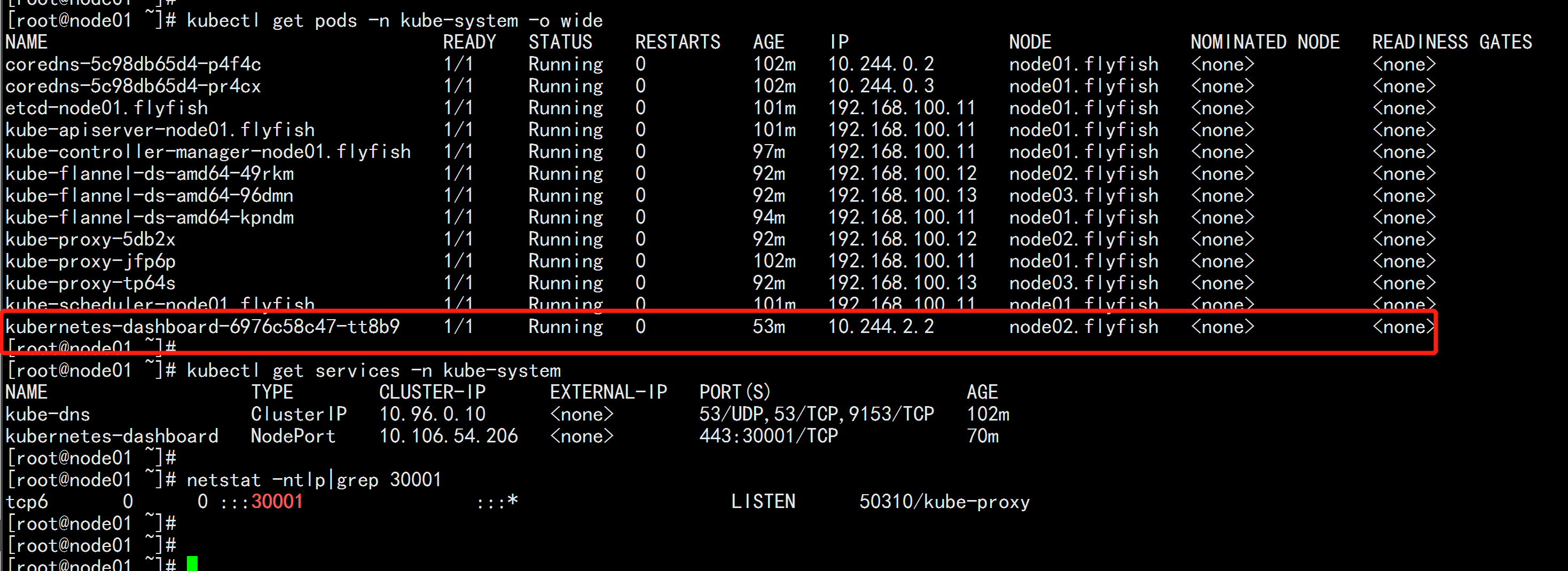
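The image substitution deserves a quick check, because the replacement text contains a slash: with `/` as the sed delimiter the expression is rejected, so a different delimiter such as `#` is needed. The sketch below runs the substitution on a sample image line; the function name is hypothetical.

```shell
# Rewrite k8s.gcr.io image references to the Aliyun mirror.
# Reads the named files, or stdin when no file is given.
rewrite_dashboard_image() {
    sed 's#k8s\.gcr\.io#registry.cn-hangzhou.aliyuncs.com/kuberneters#g' "$@"
}

echo 'image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1' | rewrite_dashboard_image
# prints: image: registry.cn-hangzhou.aliyuncs.com/kuberneters/kubernetes-dashboard-amd64:v1.10.1
```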

Deploy the Dashboard:

    kubectl create -f kubernetes-dashboard.yaml

After it has been created, check the status of the related services:

    kubectl get deployment kubernetes-dashboard -n kube-system
    kubectl get pods -n kube-system -o wide
    kubectl get services -n kube-system
    netstat -ntlp|grep 30001

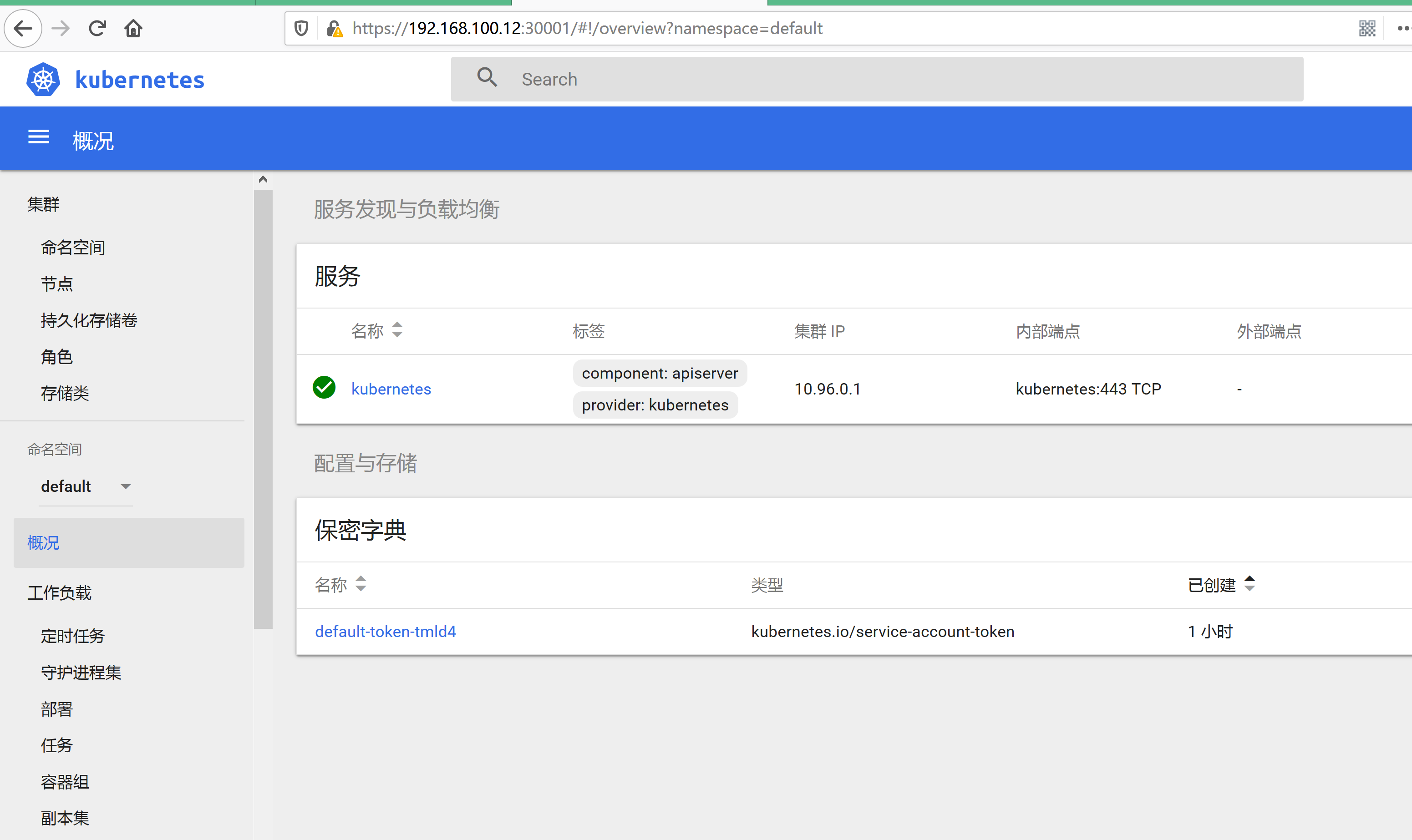

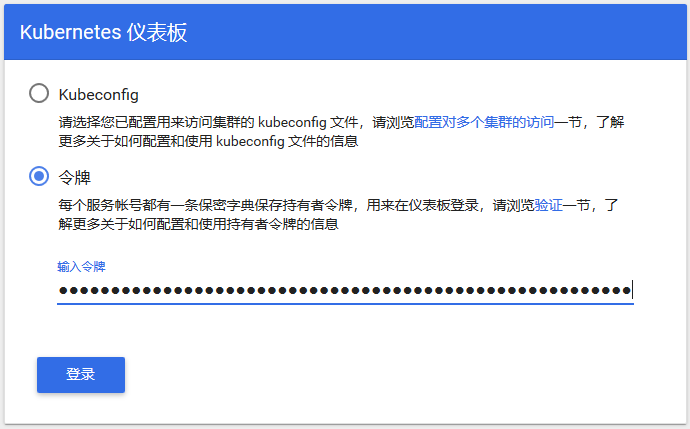

Open the Dashboard in Firefox at: https://192.168.100.12:30001

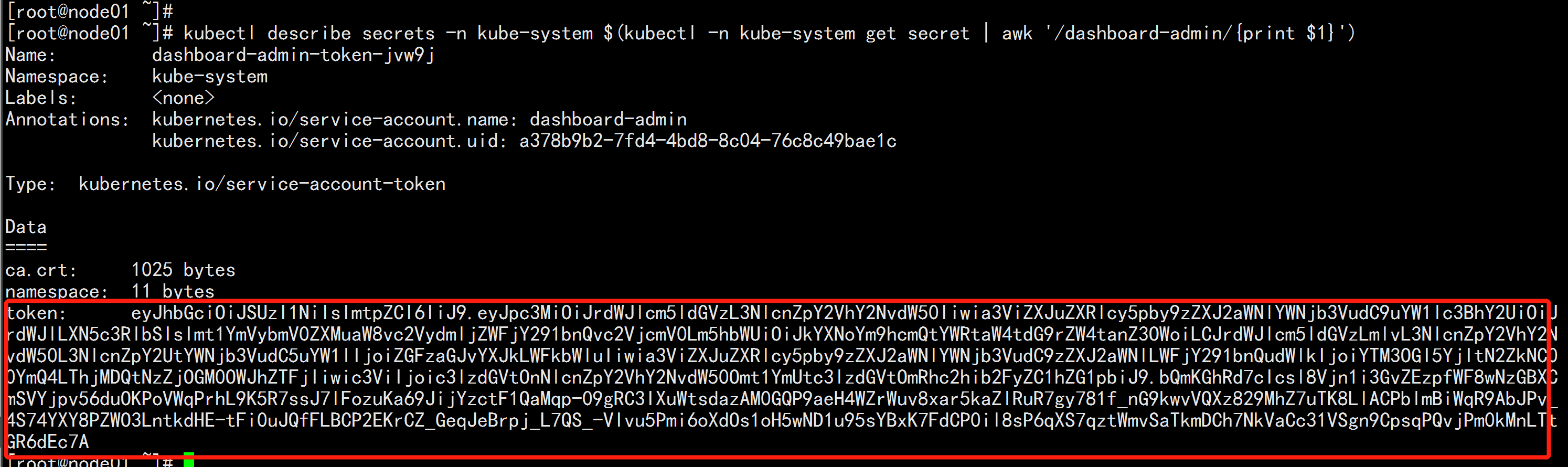

Create an authorization token:

    kubectl create serviceaccount dashboard-admin -n kube-system
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
    kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Log in to the Dashboard with the token from the output.

After authentication succeeds, the Dashboard home page is shown as in the figure.
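The `describe` output contains several fields besides the token, so a small filter helps when pasting it into the login form. A sketch, assuming the token appears on a line that begins with `token:`; the function name is made up.

```shell
# Read `kubectl describe secret` output on stdin and print only
# the token value.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Usage:
#   kubectl describe secrets -n kube-system \
#       $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') | extract_token
```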