@zhangyy

2020-07-01T02:34:16.000000Z

字数 3983

阅读 316

hive on spark 性能调优

hive的部分

1.SparkSQL集成Hive,需将hive-site.xml复制到{SAPRK_HOME/conf}目录下,即可!!a.将hive-site.xml复制到{SAPRK_HOME/conf}目录下;b.将hive-site.xml复制到所有Spark节点;c.将MySQL驱动包[mysql-connector-java-5.1.36-bin.jar]复制到{SPARK_HOME/jars};d.开启Hadoop;$>zKServer.sh start$>start-dfs.sh$>strat-yarn.she.开启sparkSQL$>spark-sql //默认开启“Local模式”等价于:spark-sql --master localf.如果在Standalone模式下:$>spark-sql --master spark://master:7077如果在Spark on yarn模式下:$>spark-sql --master yarng.在spark-sql命令行中,编写HQLspark-sql>show databases;spark-sql>use hive;spark-sql>select * from student;

thriftServer Beeline 连接 Hive1.将hive-site.xml复制到{SAPRK_HOME/conf}目录下;<!--配置hiveserver2主机(这里最好是配置ip地址,以便于从Windows连接)--><property><name>hive.server2.thrift.bind.host</name><value>master</value></property><!--配置beeline远程客户端连接时的用户名和密码。这个用户名要在对应的hadoop的配置文件core-site.xml中也配置--><property><name>hive.server2.thrift.client.user</name><value>Alex_lei</value></property><property><name>hive.server2.thrift.client.password</name><value>123456</value></property>2.开启hive的ThriftServer服务$>hiveserver23.在{SPARK_HOME/bin}目录下,执行beeline$>beelineBeeline version 1.2.1.spark2 by Apache Hivebeeline>4.在{beeline>}光标处,添加!connect,如下:beeline>!connect jdbc:hive2://master:10000/default5.添加用户名:beeline> !connect jdbc:hive2://master:10000/defaultConnecting to jdbc:hive2://master:10000/defaultEnter username for jdbc:hive2://master:10000/default:Alex_lei6.添加密码:beeline> !connect jdbc:hive2://master:10000/defaultConnecting to jdbc:hive2://master:10000/defaultEnter username for jdbc:hive2://master:10000/default: Alex_leiEnter password for jdbc:hive2://master:10000/default: ******7.成功连接!beeline> !connect jdbc:hive2://master:10000/defaultConnecting to jdbc:hive2://master:10000/defaultEnter username for jdbc:hive2://master:10000/default: Alex_leiEnter password for jdbc:hive2://master:10000/default: ******18/09/07 12:51:11 INFO jdbc.Utils: Supplied authorities: master:1000018/09/07 12:51:11 INFO jdbc.Utils: Resolved authority: master:1000018/09/07 12:51:11 INFO jdbc.HiveConnection: Will try to open client transport with JDBC Uri: jdbc:hive2://master:10000/defaultConnected to: Apache Hive (version 1.2.1)Driver: Hive JDBC (version 1.2.1.spark2)Transaction isolation: TRANSACTION_REPEATABLE_READ0: jdbc:hive2://master:10000/default>0: jdbc:hive2://master:10000/default>0: jdbc:hive2://master:10000/default>0: jdbc:hive2://master:10000/default> show databases;8.退出0: jdbc:hive2://master:10000/default> !quitClosing: 0: jdbc:hive2://master:10000/default

问题:cannot access /home/hyxy/soft/spark/lib/spark-assembly-*.jar: No such file or directory原因:我们开启Hive客户端找不到这个jar包,是由于saprk2.0之后没有lib这个目录了,这个jar包也不存在,spark2.0之前还是存在的。解决方案:(1)修改spark版本(不建议)(2)修改{HIVE_HOME/bin}目录下的hive可执行脚本修改【sparkAssemblyPath=`ls ${SPARK_HOME}/lib/spark-assembly-*.jar`】:--> 【sparkAssemblyPath=`ls ${SPARK_HOME}/jars/*.jar`】------

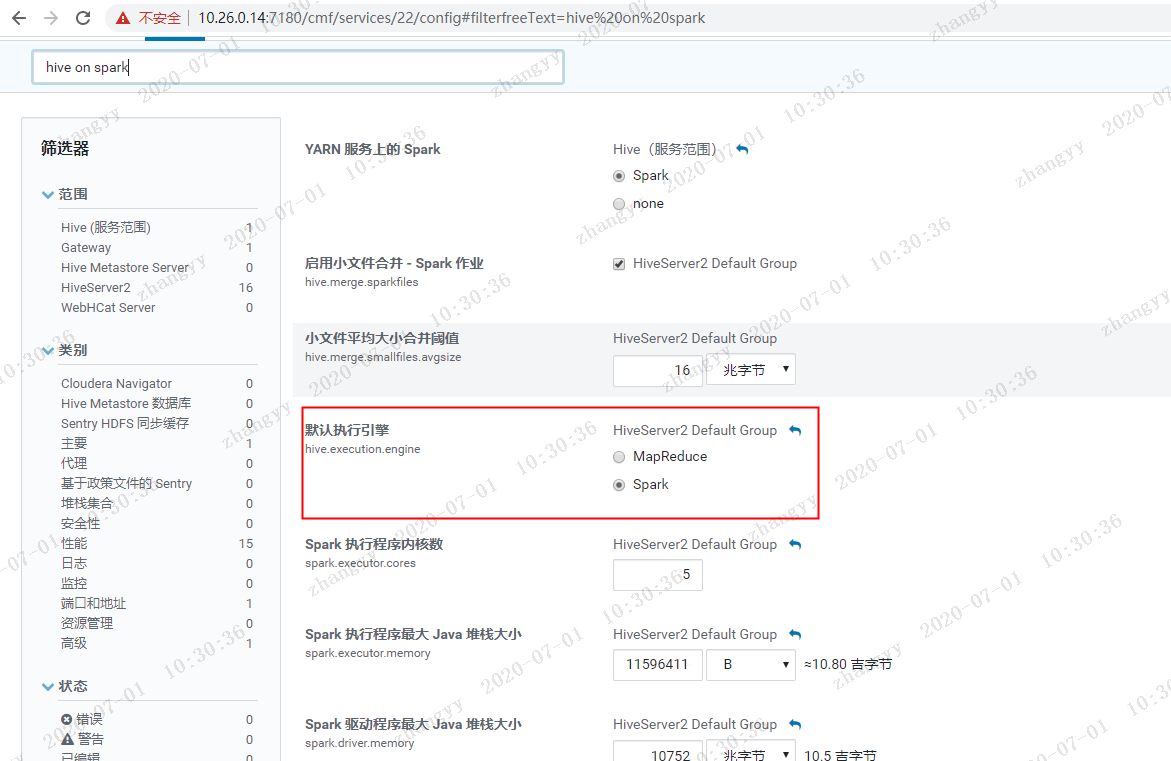

CDH 上面 直接启用就可以就可以了

常用设置参数:reset;set hive.execution.engine=spark;set hive.map.aggr = false;set hive.auto.convert.join = false;set hive.merge.mapfiles=true;set hive.merge.mapredfiles=true;set hive.merge.size.per.task=100000000;-- 动态分区参数SET hive.exec.dynamic.partition=TRUE;SET hive.exec.dynamic.partition.mode=nonstrict;SET hive.exec.max.dynamic.partitions=100000;SET hive.exec.max.dynamic.partitions.pernode=100000;-- 资源参数,根据任务调整-- spark引擎参数set spark.executor.cores=2;set spark.executor.memory=4G;set spark.executor.instances=10;

----------------------------------------------------任务占用资源计算cores : 核心数executor.memory :单个excutor分配内存executor.instances=10:executor个数任务占用总核心数:2 * 10 + 1 = 21 1是driver占用的核数占用总内存:2 * 4 * 10 = 40

内存调优版本:2.1.2,其他的版本我暂时没有确认,2.0之前的与2.0之后的不一样。1.先介绍几个名词:--> Reserved Memory(预留内存)--> User Memory(用户内存)--> Spark Memory(包括Storage Memory 和 Execution Memory)-->Spark内存默认为1G2.预留内存=300M,不可更改。用户内存是1G=(1024M-300M)*0.25spark Memory = (1024M-300M)*0.75存储内存=Spark Memory*0.5计算内存=Spark Memory*0.53.作用:预留内存:用于存储Spark相关定义的参数,如sc,sparksession等。用户内存:用于存储用户级别相关定义的数据,如:参数或变量。Spark内存:用于计算(Execution Memory)和cache缓存(Storage Memory)。4.在分配executor内存,需考虑最小内存数为:450Mval minSystemMemory = (reservedMemory * 1.5).ceil.toLong5.内存抢占问题a.缓存数据大于执行数据(RDD):storage Memory强占Execution Memoryb.Execution Memory占优,storage Memory必须释放!!Execution Memory优先级比较高