@xtccc

2016-05-08T05:04:04.000000Z

Q&A

Phoenix

1. Jar-related issues

1.1 Cannot access com.google.protobuf.LiteralByteString

When operating on Phoenix from a Java app running in Spark, the following exception is thrown:

java.lang.IllegalAccessError: class com.google.protobuf.HBaseZeroCopyByteString cannot access its superclass com.google.protobuf.LiteralByteString

Solution:

Add the hbase-protocol jar to the CLASSPATH, for example hbase-protocol-0.98.6-cdh5.2.0.jar.

1.2 phoenix.jar incompatibility

When operating on Phoenix from a Java app, the following exception is thrown:

Exception in thread "main" java.sql.SQLException: ERROR 2006 (INT08): Incompatible jars detected between client and server. Ensure that phoenix.jar is put on the classpath of HBase in every region server: org.apache.hadoop.hbase.protobuf.generated.ZooKeeperProtos$MetaRegionServer.hasState()Z

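Caused by: java.lang.NoSuchMethodError: org.apache.hadoop.hbase.protobuf.generated.ZooKeeperProtos$MetaRegionServer.hasState()Z

After some investigation, this exception turned out to be caused by adding an hbase-protocol jar (for example hbase-protocol-0.98.6-cdh5.2.0.jar) to the CLASSPATH at runtime, which is the very jar that section 1.1 adds. It appears that hbase-protocol-0.98.6-cdh5.2.0.jar conflicts with phoenix-client.jar.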
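When tracking down this kind of conflict, it can help to ask the JVM which jar each of the classes named in the two stack traces was actually loaded from. The following is a minimal sketch, not a fix: the class name ClassOrigin and the helper locate are made up for illustration, and the program only reports code source locations as seen by its own class loader.

```java
import java.security.CodeSource;

/** Reports which jar or directory a class is loaded from (illustrative helper). */
final class ClassOrigin {

    /** Returns the code source location of a class, or a short note if it is unavailable. */
    static String locate(String className) {
        try {
            // initialize=false: load the class without running its static initializers.
            Class<?> cls = Class.forName(className, false, ClassOrigin.class.getClassLoader());
            CodeSource src = cls.getProtectionDomain().getCodeSource();
            if (src == null || src.getLocation() == null) {
                // Classes that ship with the JDK usually have no code source.
                return "(no code source, e.g. a JDK class)";
            }
            return src.getLocation().toString();
        } catch (ClassNotFoundException | LinkageError e) {
            return "(not loadable: " + e + ")";
        }
    }

    public static void main(String[] args) {
        // Class names taken from the two exceptions above.
        String[] names = {
            "com.google.protobuf.LiteralByteString",
            "com.google.protobuf.HBaseZeroCopyByteString",
            "org.apache.hadoop.hbase.protobuf.generated.ZooKeeperProtos$MetaRegionServer",
        };
        for (String name : names) {
            System.out.println(name + " -> " + locate(name));
        }
    }
}
```

Running it with the same CLASSPATH as the failing app shows whether these classes come from phoenix-client.jar, from an hbase-protocol jar, or from somewhere else.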

2. Unable to drop a Phoenix table

Suppose table creation fails, for example because the CREATE TABLE statement contains a duplicate column:

create table "test-4" ("pk" varchar not null primary key, "c1" varchar, "c1" varchar);

Executing this SQL returns an error message, yet the table "test-4" has been created anyway.

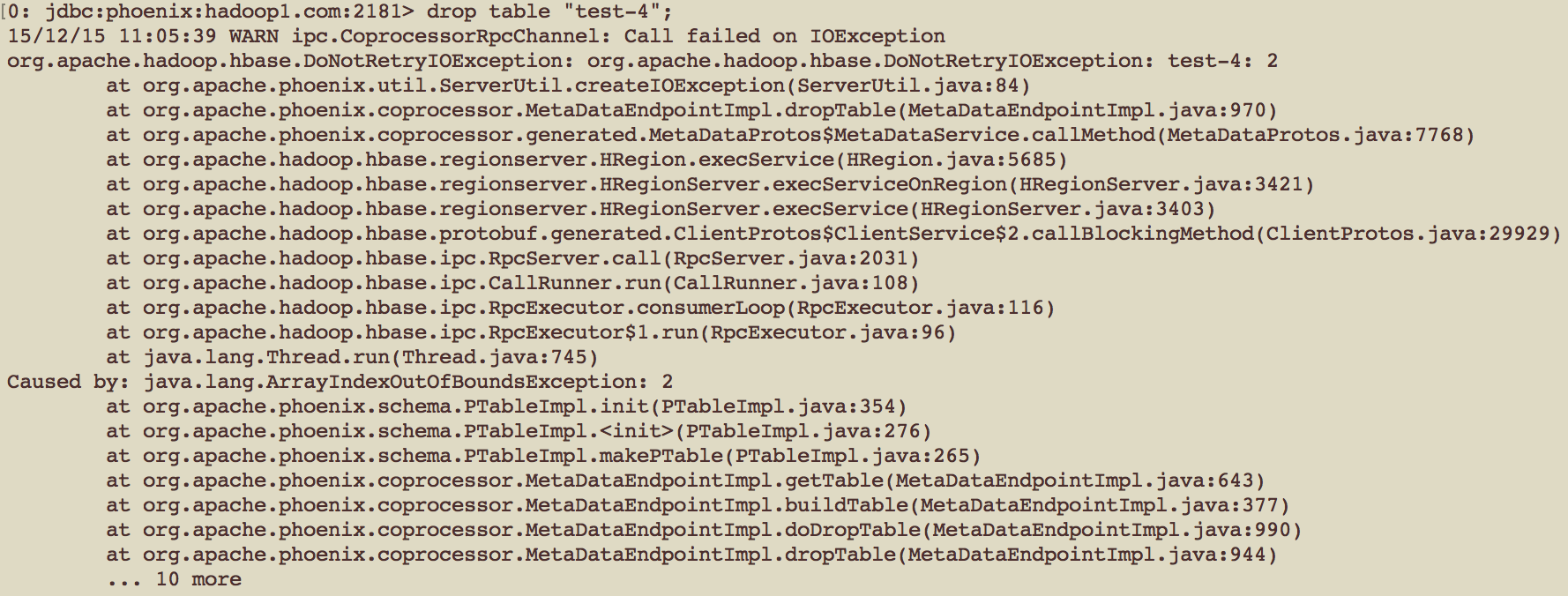

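An attempt to drop the table from Phoenix then fails:

If we drop the table in the HBase shell instead:

disable 'test-4'

drop 'test-4'

then the table is no longer visible in HBase.

It is no longer visible under the ZooKeeper node either:

hbase zkcli

ls /hbase/table

However, the table "test-4" is still visible in Phoenix.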

Solution

In Phoenix, the metadata of every table is stored in the system table SYSTEM.CATALOG.

We have dropped the table "test-4" in HBase. If it still shows up in Phoenix, its entries must be deleted from SYSTEM.CATALOG:

delete from SYSTEM.CATALOG where TABLE_NAME = 'test-4';

This is a bug in Phoenix.
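The same cleanup can also be issued over JDBC. The sketch below makes several assumptions: the Phoenix JDBC driver is on the classpath, the URL jdbc:phoenix:localhost:2181 is a placeholder, and CatalogCleanup and removeTableMetadata are names invented for this example. Phoenix connections normally have auto-commit turned off, so the sketch commits explicitly after the DELETE.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;

/** Deletes leftover SYSTEM.CATALOG rows for one table (illustrative helper). */
final class CatalogCleanup {

    // Matches on TABLE_NAME only, as in the statement above; a table with the
    // same name in another schema would match as well.
    static final String DELETE_SQL = "DELETE FROM SYSTEM.CATALOG WHERE TABLE_NAME = ?";

    /** Runs the DELETE, commits if auto-commit is off, and returns the reported row count. */
    static int removeTableMetadata(Connection conn, String tableName) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(DELETE_SQL)) {
            ps.setString(1, tableName);
            int rows = ps.executeUpdate();
            if (!conn.getAutoCommit()) {
                conn.commit(); // without this the delete is not sent to the server
            }
            return rows;
        }
    }

    public static void main(String[] args) throws SQLException {
        // Placeholder URL: pass the real ZooKeeper quorum as the first argument.
        String url = args.length > 0 ? args[0] : "jdbc:phoenix:localhost:2181";
        try (Connection conn = DriverManager.getConnection(url)) {
            System.out.println("rows deleted: " + removeTableMetadata(conn, "test-4"));
        }
    }
}
```

Because SYSTEM.CATALOG holds the metadata of every table, it is worth running a SELECT with the same WHERE clause first to check which rows would be removed.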

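3. Continuously upserting large amounts of data raises exceptions

Problem description:

When I continuously write a large amount of data into a Phoenix table over JDBC, using Phoenix SQL (upsert into ...), the client starts reporting exceptions once the volume reaches a certain size (for example, 2 million rows):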

INFO [2016-05-08 10:00:00,793] (AsyncProcess.java:883) - #11003, waiting for some tasks to finish. Expected max=0, tasksSent=5, tasksDone=1, currentTasksDone=1, retries=0 hasError=false, tableName=fsn:tz_product_trail
INFO [2016-05-08 09:55:20,716] (ClientCnxn.java:1096) - Client session timed out, have not heard from server in 48353ms for sessionid 0x2535ac3b64fbb09, closing socket connection and attempting reconnect
INFO [2016-05-08 09:55:59,858] (ClientCnxn.java:975) - Opening socket connection to server hadoop1.com/59.215.222.3:2181. Will not attempt to authenticate using SASL (unknown error)
INFO [2016-05-08 09:55:59,864] (ClientCnxn.java:852) - Socket connection established to hadoop1.com/59.215.222.3:2181, initiating session
INFO [2016-05-08 09:55:59,865] (ClientCnxn.java:1094) - Unable to reconnect to ZooKeeper service, session 0x2535ac3b64fbb09 has expired, closing socket connection
WARN [2016-05-08 09:55:59,877] (HConnectionManager.java:2523) - This client just lost its session with ZooKeeper, closing it. It will be recreated next time someone needs it
org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired
    at org.apache.hadoop.hbase.zookeeper.ZooKeeperWatcher.connectionEvent(ZooKeeperWatcher.java:403)
    at org.apache.hadoop.hbase.zookeeper.ZooKeeperWatcher.process(ZooKeeperWatcher.java:321)
    at org.apache.zookeeper.ClientCnxn$EventThread.processEvent(ClientCnxn.java:522)
    at org.apache.zookeeper.ClientCnxn$EventThread.run(ClientCnxn.java:498)
INFO [2016-05-08 09:55:59,881] (HConnectionManager.java:1893) - Closing zookeeper sessionid=0x2535ac3b64fbb09
INFO [2016-05-08 09:55:59,881] (ClientCnxn.java:512) - EventThread shut down

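Related links:

There are also some articles on the web related to this problem:

Solution:

First, the simplest solution.

Do not keep upserting large amounts of data over a single Phoenix Connection. After each large batch has been upserted (e.g. 100M rows), close the connection, open a new one, and continue upserting. This effectively resolves the problem.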
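The reconnect-per-batch approach can be sketched roughly as follows. This is only an illustration under assumptions: ReconnectingUpserter, ConnectionFactory and upsertAll are names invented here, the JDBC URL, table and columns in main are placeholders, and the commit interval and rows-per-connection limit are parameters to tune rather than recommended values.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.Iterator;

/** Upserts rows while periodically replacing the JDBC connection (illustrative). */
final class ReconnectingUpserter {

    /** Opens a fresh connection; a hypothetical hook so the source can be swapped. */
    interface ConnectionFactory {
        Connection open() throws SQLException;
    }

    /**
     * Upserts all rows, committing every commitEvery rows and closing the
     * connection after rowsPerConnection rows. Returns the number of connections opened.
     */
    static int upsertAll(ConnectionFactory factory, String sql, Iterator<String[]> rows,
                         int commitEvery, long rowsPerConnection) throws SQLException {
        if (commitEvery <= 0 || rowsPerConnection <= 0) {
            throw new IllegalArgumentException("batch sizes must be positive");
        }
        int opened = 0;
        while (rows.hasNext()) {
            opened++;
            try (Connection conn = factory.open()) {
                conn.setAutoCommit(false);
                try (PreparedStatement ps = conn.prepareStatement(sql)) {
                    long written = 0;
                    while (written < rowsPerConnection && rows.hasNext()) {
                        String[] row = rows.next();
                        for (int i = 0; i < row.length; i++) {
                            ps.setString(i + 1, row[i]); // JDBC parameters are 1-based
                        }
                        ps.executeUpdate();
                        written++;
                        if (written % commitEvery == 0) {
                            conn.commit();
                        }
                    }
                    conn.commit(); // flush any rows left since the last commit
                }
            } // the connection is closed here; the next loop pass opens a new one
        }
        return opened;
    }

    public static void main(String[] args) throws SQLException {
        // Placeholder URL, table and columns; adjust them to the real cluster.
        final String url = "jdbc:phoenix:localhost:2181";
        String sql = "UPSERT INTO \"demo\" (\"pk\", \"c1\") VALUES (?, ?)";
        Iterator<String[]> rows = new Iterator<String[]>() {
            private int i = 0;
            public boolean hasNext() { return i < 1000; }
            public String[] next() { i++; return new String[] {"k" + i, "v" + i}; }
        };
        int opened = upsertAll(() -> DriverManager.getConnection(url), sql, rows, 100, 500);
        System.out.println("connections opened: " + opened);
    }
}
```

Taking the rows as an Iterator keeps the sketch independent of where the data comes from, and the connection factory makes it easy to swap in a different URL or a test double.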

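Root cause analysis:

First, one thing is certain: these exceptions and warnings are related to continuously writing a large amount of data into a Phoenix table through a single session.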