@wanghuijiao

2021-09-22T08:40:36.000000Z



YOLOv4 Beginner Tutorial: Human Posture Detection & Human Detection

Technical documentation

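Preface

- Background

- YOLOv4 already reaches very high accuracy on public datasets, so further tuning on them is of little value. To help newcomers get started with YOLOv4 quickly, this document uses human posture detection and human detection as example tasks. It trains a human posture detector and a human detector, both based on YOLOv4-tiny.

- Goals

- Describe the basic YOLOv4 workflow in detail

- Briefly introduce advanced features

- Contents

- Basics: installation, plus training and testing on a custom dataset

- Advanced: hyperparameter tuning, data augmentation, result visualization, etc.

- For the principles behind YOLOv4, see the paper reading notes on YOLOv4: Optimal Speed and Accuracy of Object Detection. This document covers only the experimental side.

- Official source code: GitHub AlexeyAB/darknet

- Unless noted otherwise, everything below runs on server 10.0.10.56 in the whj_yolov4 virtual environment, with this project path: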

/ssd01/wanghuijiao/pose_detector02

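1. Getting the YOLOv4 source code running

- This chapter sets up the YOLOv4 runtime environment. YOLOv4 (darknet) is implemented in C, so it must be compiled with CMake or make. We then reproduce YOLOv4 results with the author's pretrained model to walk through the whole workflow once.

Environment setup (Linux assumed)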

- CMake >= 3.18

- CUDA >= 10.2

- OpenCV >= 2.4

- cuDNN >= 8.0.2 (copy cudnn.h, libcudnn.so... etc. as described here)

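Compiling with make

- Run make directly in the project folder.

- The following settings can be changed in the Makefile:

- GPU=1: build with CUDA (CUDA should be in /usr/local/cuda)
- CUDNN=1: build with cuDNN v5-v7 (cuDNN should be in /usr/local/cudnn)
- CUDNN_HALF=1: build for Tensor Cores with half precision (FP16) for training and testing. On Titan V / Tesla V100 / DGX-2 and newer devices this makes detection about 3x faster and training about 2x faster.
- OPENCV=1: build with OpenCV 4.x/3.x/2.4.x, so that video files and cameras can be used for testing.
- DEBUG=1: build a debug version of YOLO.
- OPENMP=1: build with OpenMP to accelerate on multi-core CPUs.
- LIBSO=1: build the library darknet.so and an executable that uses this library.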
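The switches above are plain KEY=0 lines at the top of darknet's Makefile, so they can also be flipped from a script before running make. The following is a minimal sketch that assumes the switch lines look exactly like GPU=0, with no spaces around the "=". set_makefile_flags is a hypothetical helper and is not part of darknet.

```python
import re


def set_makefile_flags(makefile_text: str, flags: dict) -> str:
    """Return the Makefile text with KEY=<number> switch lines set to new values.

    Only whole lines of the form KEY=<digits> are rewritten, so setting CUDNN
    does not touch CUDNN_HALF. Raises KeyError if a requested switch is missing.
    """
    for key, value in flags.items():
        pattern = re.compile(rf"^{re.escape(key)}=\d+[ \t]*$", re.MULTILINE)
        makefile_text, count = pattern.subn(f"{key}={value}", makefile_text)
        if count == 0:
            raise KeyError(f"switch {key}= not found")
    return makefile_text


# Usage inside the darknet folder, followed by `make`:
# from pathlib import Path
# mk = Path("Makefile")
# mk.write_text(set_makefile_flags(mk.read_text(), {"GPU": 1, "CUDNN": 1, "OPENCV": 1}))
```

Matching the whole line is a deliberate choice. A plain substring replace of "CUDNN=0" would also hit "CUDNN_HALF=0".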


Download the pretrained model

- Download yolov4.weights into the darknet folder.

- Run the original YOLOv4 demo

- Test on an image:

```bash
./darknet detector test ./cfg/coco.data ./cfg/yolov4.cfg ./yolov4.weights data/dog.jpg -i 0 -thresh 0.25
```

- Test on a video:

```bash
./darknet detector demo ./cfg/coco.data ./cfg/yolov4.cfg ./yolov4.weights test50.mp4 -i 0 -thresh 0.25
```

- Test on a video stream:

```bash
./darknet detector demo ./cfg/coco.data ./cfg/yolov4.cfg ./yolov4.weights rtsp://admin:admin12345@192.168.0.228:554 -i 0 -thresh 0.25
```

- Test AP on the COCO dataset:


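2. Datasets

Human posture dataset (in-house)

- The previous chapter got the official YOLOv4 code running. This chapter describes the label files and contents of the human posture dataset.

- Overview of the human posture dataset

- Version 1.0 comes entirely from the Manfu dataset and contains all labels annotated with VoTT. It covers six human postures: stand, sit, squat, lie, half-lie (the intermediate stage of a fall) and other. It has 23,301 images and 41,935 labels, which is about 1.8 labels (bboxes) per image on average.

- The label files and versions of this dataset are tracked in this Gitee project and updated irregularly. See that project for details.

- For newcomers the focus is on training YOLOv4, so simply use the provided human posture dataset. Annotating data is not required for now.

- Location of the dataset on the server:

10.0.10.56:/ssd01/wanghuijiao/dataset/manfu_human/v1.0

Label format

- As official YOLOv4 requires, each image has a label file with the same name, with only the extension changed to .txt.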

Each line of a label file has this format:

<object-class> <x_center> <y_center> <width> <height>

One line describes one bbox. <object-class> is the class index, an integer from 0 to classes-1. <x_center> <y_center> <width> <height> are the bbox center coordinates, width and height. All four are floats relative to the image width and height, computed for example as <x> = <absolute_x> / <image_width> or <height> = <absolute_height> / <image_height>. For example, close_2m_stand_coldlight_longsleeve_backshot_withoutshelter_CJ67_P0964.mp4#t=1.966667.txt contains:

```
0 0.41476345840130513 0.4560756579689358 0.20309951060358902 0.9121513159378716
0 0.4942903752039152 0.3217391304347827 0.07014681892332793 0.6434782608695654
```

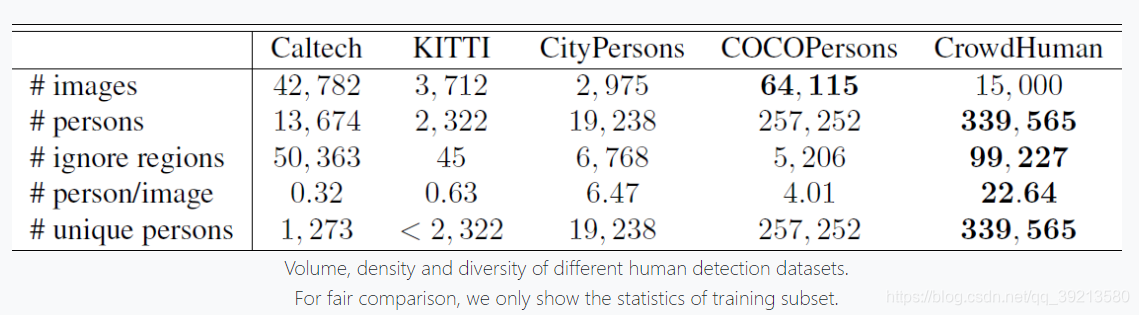
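To make the normalization concrete, here is a minimal sketch that converts a pixel box into one label line and back again. It assumes the source annotation gives the top-left corner plus the width and height in pixels. to_yolo_line and from_yolo_line are hypothetical helper names.

```python
def to_yolo_line(cls_id: int, x_min: float, y_min: float, box_w: float, box_h: float,
                 img_w: int, img_h: int) -> str:
    """Pixel box (top-left corner + size) -> '<class> <x_center> <y_center> <width> <height>'."""
    x_center = (x_min + box_w / 2) / img_w
    y_center = (y_min + box_h / 2) / img_h
    return f"{cls_id} {x_center:.6f} {y_center:.6f} {box_w / img_w:.6f} {box_h / img_h:.6f}"


def from_yolo_line(line: str, img_w: int, img_h: int):
    """YOLO label line -> (cls_id, x_min, y_min, box_w, box_h) in pixels."""
    cls_str, xc, yc, w, h = line.split()
    box_w, box_h = float(w) * img_w, float(h) * img_h
    x_min = float(xc) * img_w - box_w / 2
    y_min = float(yc) * img_h - box_h / 2
    return int(cls_str), x_min, y_min, box_w, box_h


# A 200x100 px "stand" box (class 0) with its top-left corner at (100, 50) in a 1000x500 image
line = to_yolo_line(0, 100, 50, 200, 100, img_w=1000, img_h=500)
print(line)  # 0 0.200000 0.200000 0.200000 0.200000
print(from_yolo_line(line, 1000, 500))
```

The sketch does not clip boxes that extend past the image border. A real converter would likely clamp them to [0, 1].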

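Public human datasets

- The table below compares several public datasets that contain people. Only COCOPersons and CrowdHuman were actually used in this training run; the others will be studied later.

Image from the CrowdHuman official website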


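- About COCOPersons

- Images mostly contain few people in close shots and standing postures. Sitting is less common, lying is almost absent, and overlap between people is low.

- About CrowdHuman

- Images contain many people in both long and close shots, standing and sitting postures, and high overlap between people.

- TODO: The three datasets below have not been studied yet. A separate article will cover them later, including data sources and descriptions (image distribution, what kinds of human data they contain, what problems they suit), label formats and file layouts. "Human detection will be a basic task in the future. Besides work on the model, it is best to optimize at the data level as well. This mainly means collecting the 'person' data from various public datasets, recording the characteristics of each one (e.g. indoor/outdoor, multi-person/single-person, viewpoint), and training on them together." (by Zhang Yiyang)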

- Caltech

- KITTI

- CityPersons

- Dataset format conversion scripts (to be added later)

- cocohuman2yolo.py

- crowdhuman2yolo.py
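The conversion scripts are not available yet. As a stand-in, here is a minimal sketch of what a COCO-to-YOLO person converter could look like. It is not the author's cocohuman2yolo.py. It assumes the standard COCO instances JSON layout: images carry id, file_name, width and height; annotations carry image_id, category_id, bbox and iscrowd; and person is category 1. The sketch maps person to class 0 and skips crowd regions, which is a design choice.

```python
from collections import defaultdict

PERSON_CATEGORY_ID = 1  # "person" in the standard COCO category list


def coco_person_to_yolo(coco: dict) -> dict:
    """Map image file name -> YOLO label lines, keeping only person boxes as class 0.

    `coco` is a loaded COCO instances annotation file. Crowd regions (iscrowd=1)
    are skipped, and images without any person get no entry in this sketch.
    """
    images = {img["id"]: img for img in coco["images"]}
    labels = defaultdict(list)
    for ann in coco["annotations"]:
        if ann["category_id"] != PERSON_CATEGORY_ID or ann.get("iscrowd", 0):
            continue
        img = images[ann["image_id"]]
        x, y, w, h = ann["bbox"]  # COCO bbox: top-left x, y, width, height in pixels
        xc = (x + w / 2) / img["width"]
        yc = (y + h / 2) / img["height"]
        labels[img["file_name"]].append(
            f"0 {xc:.6f} {yc:.6f} {w / img['width']:.6f} {h / img['height']:.6f}")
    return dict(labels)


# Usage (file names are placeholders):
# import json
# from pathlib import Path
# coco = json.loads(Path("instances_train2017.json").read_text())
# for file_name, lines in coco_person_to_yolo(coco).items():
#     Path("labels", Path(file_name).with_suffix(".txt").name).write_text("\n".join(lines) + "\n")
```

Images with no person could instead get an empty .txt file, which turns them into negative samples (see Appendix 2).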

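3. Preparing the configuration files

Configuration files explained

- Datasets differ in their number of classes, their labels and so on. Before training on the human posture dataset, the model structure and dataset information must therefore be configured. The YOLOv4 human posture detector is used below as an example of what to change in the configuration files.

Configuration files

- Model configuration file: before training, prepare the YOLOv4 model configuration file darknet/cfg/yolov4_pose.cfg. YOLOv4 defines the network structure in .cfg files, which usually live in darknet/cfg.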

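- batch sets the number of images used per training iteration;

- width and height set the network input size and must be multiples of 32;

- learning_rate sets the learning rate; for training on 4 GPUs it is usually 0.00065;

- max_batches sets the number of training iterations, typically classes*2000 but not less than 6000;

- set steps to max_batches*0.8,max_batches*0.9. These schedule the learning rate, which is usually divided by 10 at max_batches*0.8 and again at max_batches*0.9. The exact factors are set in scales;

- set every classes= entry (one per [yolo] layer) to the number of classes in the custom dataset, here classes=6. In the [convolutional] layer right before each [yolo] layer (lines 221 and 274), set filters=(classes + 5)x3=33.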
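The rules above reduce to a little arithmetic. The following is a minimal sketch with two assumptions. First, it assumes three anchors (masks) per [yolo] layer, as in the standard configs. Second, it assumes the darknet README rule learning_rate = 0.00261 / number of GPUs, which gives the 0.00065 quoted for 4 GPUs. yolo_cfg_params is a hypothetical helper.

```python
def yolo_cfg_params(classes: int, gpus: int = 1, anchors_per_yolo_layer: int = 3) -> dict:
    """Derive .cfg values with the rules described above (illustrative helper)."""
    max_batches = max(classes * 2000, 6000)                        # classes*2000, at least 6000
    steps = (round(max_batches * 0.8), round(max_batches * 0.9))   # lr divided at 80% and 90%
    filters = (classes + 5) * anchors_per_yolo_layer               # conv layer before each [yolo]
    learning_rate = 0.00261 / gpus                                 # assumed rule: 0.00261 / GPUs
    return {"max_batches": max_batches, "steps": steps,
            "filters": filters, "learning_rate": learning_rate}


# Human posture detector: 6 classes, trained on 4 GPUs
params = yolo_cfg_params(classes=6, gpus=4)
print(params["max_batches"], params["steps"], params["filters"], f"{params['learning_rate']:.5f}")
# 12000 (9600, 10800) 33 0.00065
```

The "+ 5" in filters counts the 4 box coordinates plus 1 objectness score that each anchor predicts alongside the class scores.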

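Data configuration file: prepare the data file darknet/cfg/pose.data. YOLOv4 stores dataset information in .data files.

- classes: number of object classes;

- train: path to the list of training images;

- valid: path to the list of test images;

- names: path to the class names file;

- backup: directory where training results (weights) are saved;

- eval: evaluation format; COCO style is used here.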

- The darknet/cfg/pose.data of this experiment looks like this:

```
classes = 6
train = /ssd01/wanghuijiao/dataset/manfu_human/v1.0/train_v1.0.txt
valid = /ssd01/wanghuijiao/dataset/manfu_human/v1.0/test_v1.0.txt
names = /ssd01/wanghuijiao/pose_detector02/data/pose.names
backup = ./backup_manfu_human_v1.0_512
eval = coco
```

- Class names file: prepare darknet/data/pose.names, which lists the class names of the dataset, one per line. The darknet/data/pose.names of this experiment is:

```
stand
sit
squat
lie
half_lie
other_pose
```
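The .data and .names files must agree: classes has to equal the number of lines in .names. A minimal sketch that generates both from one list of class names, so they cannot drift apart. format_names, format_data and parse_data are hypothetical helpers, not darknet code.

```python
def format_names(class_names: list) -> str:
    """Contents of a .names file: one class name per line, line i = class id i."""
    return "\n".join(class_names) + "\n"


def format_data(class_names: list, train: str, valid: str, names: str,
                backup: str, eval_format: str = "coco") -> str:
    """Contents of a .data file; classes is derived from the names list."""
    entries = {
        "classes": len(class_names),
        "train": train,
        "valid": valid,
        "names": names,
        "backup": backup,
        "eval": eval_format,
    }
    return "".join(f"{key} = {value}\n" for key, value in entries.items())


def parse_data(text: str) -> dict:
    """Parse .data text back into a dict of strings, skipping blank and comment lines."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        result[key.strip()] = value.strip()
    return result


POSE_NAMES = ["stand", "sit", "squat", "lie", "half_lie", "other_pose"]
pose_data = format_data(
    POSE_NAMES,
    train="/ssd01/wanghuijiao/dataset/manfu_human/v1.0/train_v1.0.txt",
    valid="/ssd01/wanghuijiao/dataset/manfu_human/v1.0/test_v1.0.txt",
    names="/ssd01/wanghuijiao/pose_detector02/data/pose.names",
    backup="./backup_manfu_human_v1.0_512",
)
print(pose_data)
# Write out with pathlib.Path("cfg/pose.data").write_text(pose_data), and likewise for .names.
```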


Configuration files of the “Yolov4-tiny human detector”

- The paths below are on server 10.0.10.56

- Model configuration file: /ssd01/wanghuijiao/pose_detector02/cfg/yolov4-tiny_human_416.cfg

- Data configuration file: /ssd01/wanghuijiao/pose_detector02/cfg/crowdhuman-416.data

- Class names file: /ssd01/wanghuijiao/pose_detector02/data/crowdhuman.names

Configuration files of the “Yolov4-tiny human posture detector”

- The paths below are on server 10.0.10.56

- Model configuration file: /ssd01/wanghuijiao/pose_detector02/cfg/yolov4-tiny_human_pose_416.cfg

- Data configuration file: /ssd01/wanghuijiao/pose_detector02/cfg/pose.data

- Class names file: /ssd01/wanghuijiao/pose_detector02/data/pose.names

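4. Model training

Training overview

- This chapter trains YOLOv4 on the human posture dataset. The goal is good detection of five postures: stand, sit, squat, lie and half-lie (the intermediate stage of a fall). It also introduces the hyperparameter tuning and data augmentation methods used during training.

Training commands

- Start from the first 105 layers of CSPDarknet53 pretrained on ImageNet. Download csdarknet53-omega.conv.105 from 10.0.10.56:/ssd01/wanghuijiao/pose_detector02/csdarknet53-omega.conv.105

- Train on multiple GPUs:

```bash
# cfg/pose_b.data:              data configuration file
# cfg/pose_yolov4.cfg:          model configuration file
# ./csdarknet53-omega.conv.105: ImageNet-pretrained weights
./darknet detector train \
    cfg/pose_b.data \
    cfg/pose_yolov4.cfg \
    ./csdarknet53-omega.conv.105 \
    -dont_show \
    -map \
    -gpus 4,5,6,7
```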


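Hyperparameter tuning

- learning_rate: for training on four GPUs set it to 0.00065; on a single GPU use the default 0.00261.

- width, height: 416*416, 512*512 and 608*608 are the common choices. You can train the same model at each of these resolutions and pick the best one for your deployment needs (speed vs accuracy).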


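- Data augmentation

- mosaic: to enable it, add mosaic=1 to the parameter section of the model .cfg file (around line 24); to disable it, comment that line out.

- cutmix: same as above, add or comment out cutmix=1.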
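Toggling these options by hand is easy to get wrong across several .cfg variants, so it can be scripted. The following is a minimal sketch. It assumes the options live in the [net] (or [network]) section and that "disabled" means commented out with #, as described above. toggle_net_flag is a hypothetical helper.

```python
import re


def toggle_net_flag(cfg_text: str, key: str, enabled: bool) -> str:
    """Enable (key=1) or disable (#key=1) an option in the [net] section of a .cfg text.

    An existing line for the key, commented or not, is rewritten in place;
    otherwise the option is appended at the end of the [net] section.
    """
    lines = cfg_text.splitlines()
    start = next(i for i, line in enumerate(lines) if line.strip() in ("[net]", "[network]"))
    end = next((i for i in range(start + 1, len(lines)) if lines[i].strip().startswith("[")),
               len(lines))
    new_line = f"{key}=1" if enabled else f"#{key}=1"
    pattern = re.compile(rf"^\s*#?\s*{re.escape(key)}\s*=")
    for i in range(start + 1, end):
        if pattern.match(lines[i]):
            lines[i] = new_line
            break
    else:
        lines.insert(end, new_line)
    return "\n".join(lines) + "\n"


# Usage (path from this document):
# from pathlib import Path
# cfg_path = Path("cfg/yolov4-tiny_human_pose_416.cfg")
# text = toggle_net_flag(cfg_path.read_text(), "mosaic", True)
# cfg_path.write_text(toggle_net_flag(text, "cutmix", False))
```

Restricting the search to [net] matters because other sections reuse generic key names, and rewriting a line there would silently change the architecture.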


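Training the “Yolov4-tiny human detector”

- Training procedure

- First train 6000 batches on the COCO person class

- Then continue training for 14000 batches on the CrowdHuman person class

- Reason: after training on COCO person, the model missed many people in crowded scenes and many lying people, so training continued on CrowdHuman

- Training commands (run in 10.0.10.56:/ssd01/wanghuijiao/pose_detector02)

```bash
# 1. Train 6000 batches on COCO person first. The pretrained weights are the official
#    yolov4-tiny.conv.29 (presumably trained on the 80 COCO classes).
./darknet detector train \
    cfg/yolov4-tiny_cocohuman_416.data \
    cfg/yolov4-tiny_human_416.cfg \
    yolov4-tiny.conv.29 \
    -dont_show \
    -map \
    -gpus 4,5,6,7

# 2. Then continue training for 14000 batches on the CrowdHuman person class,
#    starting from the batch-6000 weights trained on COCO person.
#    Adding -clear restarts training from batch 0; without it, training resumes from batch 6000.
./darknet detector train \
    cfg/crowdhuman-416.data \
    cfg/yolov4-tiny_human_416.cfg \
    backup/yolov4-tiny_cocohuman_416/yolov4-tiny_human_416_best.weights \
    -dont_show \
    -map \
    -gpus 4,5,6,7
```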
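The two-stage schedule can also be scripted. The following is a minimal sketch that only builds the argument lists for the two commands above. darknet_train_cmd is a hypothetical helper, and the GPU ids and paths are the ones used in this document. As noted above, -clear restarts the iteration count from 0 instead of resuming from the batch stored in the weights.

```python
def darknet_train_cmd(data: str, cfg: str, weights: str, gpus=(4, 5, 6, 7),
                      clear: bool = False, darknet: str = "./darknet") -> list:
    """Build the argv for `darknet detector train` with the flags used in this document."""
    cmd = [darknet, "detector", "train", data, cfg, weights,
           "-dont_show", "-map", "-gpus", ",".join(str(g) for g in gpus)]
    if clear:
        cmd.append("-clear")  # restart the iteration counter at 0
    return cmd


stage1 = darknet_train_cmd("cfg/yolov4-tiny_cocohuman_416.data",
                           "cfg/yolov4-tiny_human_416.cfg",
                           "yolov4-tiny.conv.29")
stage2 = darknet_train_cmd("cfg/crowdhuman-416.data",
                           "cfg/yolov4-tiny_human_416.cfg",
                           "backup/yolov4-tiny_cocohuman_416/yolov4-tiny_human_416_best.weights")
print(" ".join(stage1))
print(" ".join(stage2))

# To actually run them in order (blocking, from the project folder):
# import subprocess
# for cmd in (stage1, stage2):
#     subprocess.run(cmd, check=True)
```

Passing a list instead of a shell string avoids the line-continuation pitfalls of multi-line shell commands. For example, a comment placed after a trailing backslash breaks the command.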

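Training the “Yolov4-tiny human posture detector”

- Training procedure

- Use the “Yolov4-tiny human detector” as the pretrained model and train for 40000 batches on Manfu v1.0 (an unbalanced dataset)

- Reason: the “Yolov4-tiny human detector” already detects people in various postures well. Training posture detection on top of it gives better results than starting from ImageNet or from a model pretrained only on the 80 COCO classes.

- Training command (run in 10.0.10.56:/ssd01/wanghuijiao/pose_detector02)

```bash
./darknet detector train \
    cfg/pose.data \
    cfg/yolov4-tiny_human_pose_416.cfg \
    backup/yolov4-tiny_manfu_humanPose_416/yolov4-tiny_human_pose_416_best.weights \
    -dont_show \
    -map \
    -gpus 4,5,6,7 \
    -clear
```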
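Manfu v1.0 is described above as unbalanced, so it helps to know how many boxes each class actually has before training. The following is a minimal sketch. It assumes the list file contains one image path per line and that each label file sits next to its image with a .txt extension, as described in Section 2. count_boxes_per_class and label_texts_from_list are hypothetical helpers.

```python
from collections import Counter
from pathlib import Path


def count_boxes_per_class(label_texts, class_names: list) -> dict:
    """Count bboxes per class over an iterable of YOLO label-file contents."""
    counts = Counter()
    for text in label_texts:
        for line in text.splitlines():
            if line.strip():
                counts[int(line.split()[0])] += 1
    return {name: counts.get(i, 0) for i, name in enumerate(class_names)}


def label_texts_from_list(list_file: str):
    """Yield label-file contents for each image listed in a train/test list file.

    Images whose .txt label file is missing are skipped in this sketch.
    """
    for image_path in Path(list_file).read_text().splitlines():
        image_path = image_path.strip()
        if not image_path:
            continue
        label_path = Path(image_path).with_suffix(".txt")
        if label_path.exists():
            yield label_path.read_text()


# Usage on the server:
# names = Path("data/pose.names").read_text().split()
# print(count_boxes_per_class(
#     label_texts_from_list("/ssd01/wanghuijiao/dataset/manfu_human/v1.0/train_v1.0.txt"), names))
```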

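5. Model testing and performance

Performance metrics explained

- For the principles, see Filling the Gaps (2): TP/FP/TN/FN, accuracy/precision/recall, IOU, mAP

- Source code walkthrough (to do)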
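The metrics that darknet prints follow directly from the TP/FP/FN counts. The following is a minimal sketch with hypothetical helper names. It recomputes precision, recall and F1 for the 0806 test_v1.0.txt run in Appendix 1. It then computes the mAP of the 0820 posture detector as the plain mean of its per-class APs.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision, recall and F1 at a fixed confidence threshold, from TP/FP/FN counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def mean_ap(ap_per_class: dict) -> float:
    """mAP@0.50 as the mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# 0806 log, test_v1.0.txt: TP = 4972, FP = 2146, FN = 3591 at conf_thresh = 0.25
p, r, f1 = precision_recall_f1(4972, 2146, 3591)
print(f"precision={p:.2f} recall={r:.2f} F1={f1:.2f}")  # precision=0.70 recall=0.58 F1=0.63

# 0820 per-class AP (%) of the YOLOv4-tiny posture detector
ap_0820 = {"stand": 68.94, "sit": 84.11, "squat": 49.37, "lie": 32.50,
           "half_lie": 34.15, "other_pose": 14.10}
print(f"mAP={mean_ap(ap_0820):.1f}%")  # mAP=47.2%
```

The result matches the logged 47.19%. The small difference comes from averaging the rounded APs shown in the log.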

“Yolov4-tiny human detector” results

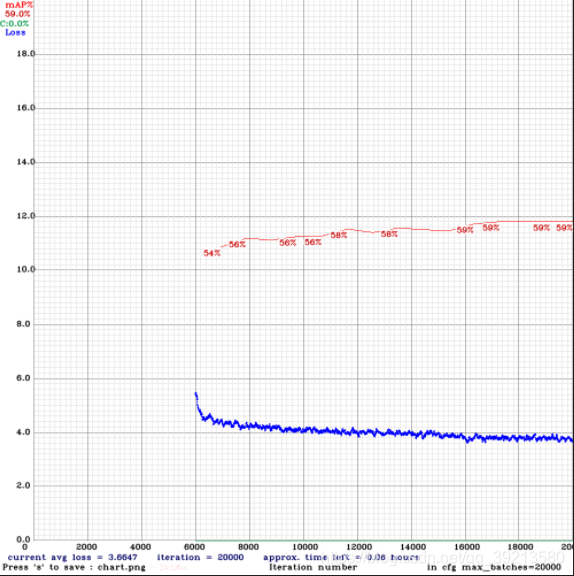

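- Changes in mAP, loss and other metrics during training (only the fine-tuning on CrowdHuman was saved)

Performance metrics

| Experiment ID / branch | Parameters | Accuracy |
| --- | --- | --- |
| 0817 yolov4_tiny_crowdhuman_416 | conf_thresh=0.25 (AP at IoU=0.50); w=416, h=416; pretrained model: yolov4-tiny.conv.29 | person AP = 59.12% |

Weights: /ssd01/wanghuijiao/pose_detector02/backup/yolov4_tiny_crowdhuman_416/yolov4-tiny_human_416_best.weights

Model performance analysis & demo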

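- Six scenarios were tested, using real footage plus film and TV clips. The outdoor, home and large-battle demos are in a single video.

- Office (close and long shots, desks occluding people): usable as is, with few false positives and misses, and people partly hidden by desks are also detected. The only weakness is inaccurate boxes for people lying prone; see this demo.

- Outdoor (close shots, few people, mostly standing or squatting): usable as is, with few false positives and misses. The only weakness is that when two people overlap heavily, only one box is detected. See the demo (beginning).

- Home (close shots, few people, mostly sitting or standing): usable as is, with few false positives and misses. The only weakness is that when two people overlap heavily, only one box is detected. See the demo (end).

- Large battles (long shots, many people, orcs): not usable. The model detects almost nothing in large distant crowds, only isolated individuals. See the demo (middle).

- Close combat (medium-long shots, many people): mostly usable, with occasional misses when the camera shakes and occasional false positives in explosion scenes. demo

- Close combat (close shots, many people, camouflage): the worst case. Faces and bodies are almost entirely dark or in camouflage, so both false positives and misses are high, because the dataset contains almost no such data. Demo here.

- Summary:

- The current model suits close and medium-long shots, which is also what most of the COCO and CrowdHuman data looks like. Crowds in long shots can be handled later, if needed, by adding such data and retraining.

- YOLOv4-tiny was actually trained at both 416*416 and 608*608. In practice, however, the 608 model missed many large objects, such as close-ups where one face or upper body fills about half the frame or more. A guess: at the higher resolution a large object spans more pixels and needs a larger receptive field than in the 416 model, and COCO and CrowdHuman contain no boxes of comparable size, so such objects are not detected.

- This issue needs further experiments.

- When several people occlude each other, only one box is detected; adjusting the NMS threshold is left for later.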
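The "one box for two overlapping people" symptom is what greedy non-maximum suppression does when two boxes overlap more than the threshold. The following is a minimal generic sketch that shows the effect of the threshold; it is not darknet's NMS implementation. The boxes and scores are hypothetical. Raising the threshold keeps heavily overlapping people, at the risk of keeping duplicate boxes on a single person.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2) in pixels."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def greedy_nms(boxes, scores, iou_thresh):
    """Return indices of kept boxes: take the best-scoring box, drop boxes whose
    IoU with it exceeds iou_thresh, and repeat on the remainder."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


# Hypothetical example: two people side by side whose boxes overlap with IoU = 0.6
people = [(100, 100, 200, 300), (125, 100, 225, 300)]
scores = [0.9, 0.8]
print(greedy_nms(people, scores, iou_thresh=0.45))  # [0]    -> second person suppressed
print(greedy_nms(people, scores, iou_thresh=0.70))  # [0, 1] -> both people kept
```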


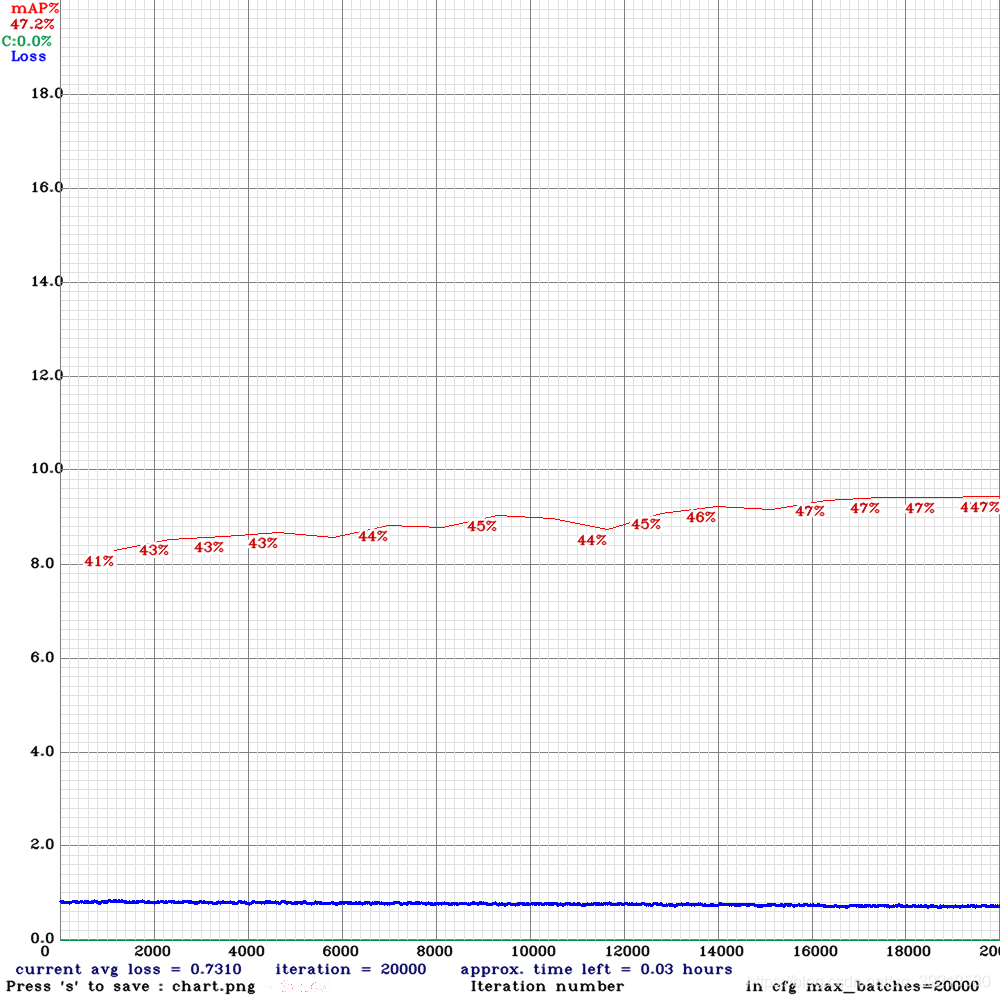

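“Yolov4-tiny human posture detector” results

Training charts of mAP, loss and other metrics (only the last 20000 of the 40000 training batches were saved)

Performance metrics

| Experiment ID / branch | Parameters | Accuracy |
| --- | --- | --- |
| 0820 yolov4-tiny_manfu_humanPose_416 | conf_thresh=0.25 (AP at IoU=0.50); w=416, h=416; test set: test_v1.0.txt; pretrained model: v4-tiny human detector | stand AP = 68.94%; sit AP = 84.11%; squat AP = 49.37%; lie AP = 32.50%; half_lie AP = 34.15%; other_pose AP = 14.10%; mAP = 47.19% |

- Corresponding weights: /ssd01/wanghuijiao/pose_detector02/backup/yolov4-tiny_manfu_humanPose_416/yolov4-tiny_human_pose_416_best.weights

- Model performance & Demo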

Appendix 1: Debugging log

0806

- Test set test_v1.0.txt

```
# test_v1.0.txt
# weights: ./backup_manfu_human_v1.0_512/pose_yolov4_best.weights
class_id = 0, name = stand, ap = 64.18% (TP = 2450, FP = 1119)
class_id = 1, name = sit, ap = 72.67% (TP = 2426, FP = 924)
class_id = 2, name = squat, ap = 33.98% (TP = 84, FP = 96)
class_id = 3, name = lie, ap = 23.06% (TP = 10, FP = 5)
class_id = 4, name = half_lie, ap = 33.38% (TP = 2, FP = 2)
class_id = 5, name = other_pose, ap = 1.21% (TP = 0, FP = 0)
for conf_thresh = 0.25, precision = 0.70, recall = 0.58, F1-score = 0.63
for conf_thresh = 0.25, TP = 4972, FP = 2146, FN = 3591, average IoU = 48.36 %
IoU threshold = 50 %, used Area-Under-Curve for each unique Recall
mean average precision (mAP@0.50) = 0.380806, or 38.08 %
```

- Test set test_b_v1.0.txt

```
# test_b_v1.0.txt
# weights: ./backup_manfu_human_v1.0_b_512/pose_yolov4_b_best.weights
class_id = 0, name = stand, ap = 68.38% (TP = 536, FP = 238)
class_id = 1, name = sit, ap = 79.06% (TP = 429, FP = 143)
class_id = 2, name = squat, ap = 63.94% (TP = 125, FP = 36)
class_id = 3, name = lie, ap = 55.34% (TP = 132, FP = 78)
class_id = 4, name = half_lie, ap = 30.82% (TP = 58, FP = 47)
class_id = 5, name = other_pose, ap = 34.12% (TP = 15, FP = 6)
for conf_thresh = 0.25, precision = 0.70, recall = 0.58, F1-score = 0.64
for conf_thresh = 0.25, TP = 1295, FP = 548, FN = 919, average IoU = 53.73 %
IoU threshold = 50 %, used Area-Under-Curve for each unique Recall
mean average precision (mAP@0.50) = 0.552780, or 55.28 %
```

0809

- Test set test_v1.0.txt

```
calculation mAP (mean average precision)...
Detection layer: 139 - type = 27
Detection layer: 150 - type = 27
Detection layer: 161 - type = 27
4708
detections_count = 13112, unique_truth_count = 8563
class_id = 0, name = stand, ap = 73.59% (TP = 2901, FP = 856)
class_id = 1, name = sit, ap = 86.05% (TP = 2810, FP = 462)
class_id = 2, name = squat, ap = 57.60% (TP = 138, FP = 96)
class_id = 3, name = lie, ap = 38.77% (TP = 126, FP = 105)
class_id = 4, name = half_lie, ap = 44.31% (TP = 513, FP = 330)
class_id = 5, name = other_pose, ap = 39.18% (TP = 25, FP = 17)
for conf_thresh = 0.25, precision = 0.78, recall = 0.76, F1-score = 0.77
for conf_thresh = 0.25, TP = 6513, FP = 1866, FN = 2050, average IoU = 63.42 %
IoU threshold = 50 %, used Area-Under-Curve for each unique Recall
mean average precision (mAP@0.50) = 0.565842, or 56.58 %
Total Detection Time: 90 Seconds
Set -points flag:
`-points 101` for MS COCO
`-points 11` for PascalVOC 2007 (uncomment `difficult` in voc.data)
`-points 0` (AUC) for ImageNet, PascalVOC 2010-2012, your custom dataset
mean_average_precision (mAP@0.5) = 0.565842
Saving weights to ./backup_manfu_human_v1.0_512/pose_yolov4_120000.weights
```

- Test set test_b_v1.0.txt

```
1036
detections_count = 2797, unique_truth_count = 2214
class_id = 0, name = stand, ap = 68.25% (TP = 530, FP = 140)
class_id = 1, name = sit, ap = 80.36% (TP = 457, FP = 104)
class_id = 2, name = squat, ap = 63.12% (TP = 148, FP = 38)
class_id = 3, name = lie, ap = 61.53% (TP = 164, FP = 50)
class_id = 4, name = half_lie, ap = 33.34% (TP = 133, FP = 114)
class_id = 5, name = other_pose, ap = 54.09% (TP = 35, FP = 16)
for conf_thresh = 0.25, precision = 0.76, recall = 0.66, F1-score = 0.71
for conf_thresh = 0.25, TP = 1467, FP = 462, FN = 747, average IoU = 61.19 %
IoU threshold = 50 %, used Area-Under-Curve for each unique Recall
mean average precision (mAP@0.50) = 0.601145, or 60.11 %
Total Detection Time: 19 Seconds
Set -points flag:
`-points 101` for MS COCO
`-points 11` for PascalVOC 2007 (uncomment `difficult` in voc.data)
`-points 0` (AUC) for ImageNet, PascalVOC 2010-2012, your custom dataset
mean_average_precision (mAP@0.5) = 0.601145
Saving weights to ./backup_manfu_human_v1.0_b_512/pose_yolov4_b_120000.weights
```

0809

- yolov4_mf_v1.0

- yolov4_mfb_v1.0

- Many false positives for lie, with high confidence (80%+)

- Many false positives for half_lie

0817 human detector yolov4-tiny

- yolov4-tiny_human_416

- demo:

```
calculation mAP (mean average precision)...
Detection layer: 30 - type = 27
Detection layer: 37 - type = 27
2696
detections_count = 57081, unique_truth_count = 11004
class_id = 0, name = person, ap = 59.12% (TP = 5692, FP = 1653)
for conf_thresh = 0.25, precision = 0.77, recall = 0.52, F1-score = 0.62
for conf_thresh = 0.25, TP = 5692, FP = 1653, FN = 5312, average IoU = 58.60 %
IoU threshold = 50 %, used Area-Under-Curve for each unique Recall
mean average precision (mAP@0.50) = 0.591248, or 59.12 %
Total Detection Time: 20 Seconds
Set -points flag:
`-points 101` for MS COCO
`-points 11` for PascalVOC 2007 (uncomment `difficult` in voc.data)
`-points 0` (AUC) for ImageNet, PascalVOC 2010-2012, your custom dataset
mean_average_precision (mAP@0.5) = 0.591248
```

- yolov4-tiny_human_608

```
calculation mAP (mean average precision)...
Detection layer: 30 - type = 27
Detection layer: 37 - type = 27
2696
detections_count = 63890, unique_truth_count = 11004
class_id = 0, name = person, ap = 60.66% (TP = 5477, FP = 1682)
for conf_thresh = 0.25, precision = 0.77, recall = 0.50, F1-score = 0.60
for conf_thresh = 0.25, TP = 5477, FP = 1682, FN = 5527, average IoU = 58.19 %
IoU threshold = 50 %, used Area-Under-Curve for each unique Recall
mean average precision (mAP@0.50) = 0.606624, or 60.66 %
Total Detection Time: 22 Seconds
Set -points flag:
`-points 101` for MS COCO
`-points 11` for PascalVOC 2007 (uncomment `difficult` in voc.data)
`-points 0` (AUC) for ImageNet, PascalVOC 2010-2012, your custom dataset
mean_average_precision (mAP@0.5) = 0.606624
```

0820 v4-tiny human posture detection update

- pretrained model: v4-tiny human detector trained on CrowdHuman

- Corresponding weights:

/ssd01/wanghuijiao/pose_detector02/backup/yolov4-tiny_manfu_humanPose_416/yolov4-tiny_human_pose_416_best.weights

```
calculation mAP (mean average precision)...
Detection layer: 30 - type = 27
Detection layer: 37 - type = 27
4708
detections_count = 49418, unique_truth_count = 8563
class_id = 0, name = stand, ap = 68.94% (TP = 2448, FP = 969)
class_id = 1, name = sit, ap = 84.11% (TP = 2684, FP = 656)
class_id = 2, name = squat, ap = 49.37% (TP = 107, FP = 68)
class_id = 3, name = lie, ap = 32.50% (TP = 60, FP = 55)
class_id = 4, name = half_lie, ap = 34.15% (TP = 182, FP = 144)
class_id = 5, name = other_pose, ap = 14.10% (TP = 5, FP = 4)
for conf_thresh = 0.25, precision = 0.74, recall = 0.64, F1-score = 0.69
for conf_thresh = 0.25, TP = 5486, FP = 1896, FN = 3077, average IoU = 58.11 %
IoU threshold = 50 %, used Area-Under-Curve for each unique Recall
mean average precision (mAP@0.50) = 0.471944, or 47.19 %
Total Detection Time: 75 Seconds
```
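Comparing runs like the ones above is easier once the printouts are in a structured form. The following is a minimal sketch that extracts per-class AP/TP/FP and the overall mAP@0.50. It assumes the line formats shown in these logs. parse_darknet_map_output is a hypothetical helper, and the regular expressions may need adjusting if darknet's output format differs between versions.

```python
import re

AP_LINE = re.compile(
    r"class_id = (\d+), name = ([^,]+), ap = ([\d.]+)%\s*\(TP = (\d+), FP = (\d+)\)")
MAP_LINE = re.compile(r"mean average precision \(mAP@0\.50\) = ([\d.]+)")


def parse_darknet_map_output(text: str) -> dict:
    """Extract per-class AP/TP/FP and the overall mAP@0.50 from darknet's mAP printout."""
    per_class = {
        name: {"class_id": int(cid), "ap": float(ap), "tp": int(tp), "fp": int(fp)}
        for cid, name, ap, tp, fp in AP_LINE.findall(text)
    }
    match = MAP_LINE.search(text)
    return {"per_class": per_class, "map50": float(match.group(1)) if match else None}


sample = """class_id = 3, name = lie, ap = 32.50% (TP = 60, FP = 55)
class_id = 4, name = half_lie, ap = 34.15% (TP = 182, FP = 144)
mean average precision (mAP@0.50) = 0.471944, or 47.19 %"""
result = parse_darknet_map_output(sample)
print(result["per_class"]["lie"])  # {'class_id': 3, 'ap': 32.5, 'tp': 60, 'fp': 55}
print(result["map50"])             # 0.471944
```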

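Appendix 2: Tips for improving YOLOv4 training results (by the author, AlexeyAB)

Before training

- Set random=1 in the .cfg file; training at varying resolutions improves precision across different resolutions.

- Increase the network resolution in the .cfg file, e.g. height=608, width=608.

- Check that every object in the training set is labeled, with no wrong or missing labels.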

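- Very high loss and very low mAP: is training broken?

- Add -show_imgs at the end of the training command and check whether correct bboxes are drawn on the objects (in the window or in the aug...jpg images). If they are not, the training dataset is wrong.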


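- For each object you want to detect, the training set should contain at least one similar object with about the same shape, side of the object, relative size, rotation angle, tilt and lighting. The training set should therefore include images of objects at different scales, rotations and lighting conditions, seen from different sides and against different backgrounds. Preferably have 2000 or more different images per class, and train for 2000*classes iterations or more.

- It is desirable for the training set to include images with unlabeled objects that you do not want to detect. These are negative samples without bounding boxes (empty .txt files). Use as many negative-sample images as there are images with objects.

- Best way to label: you can label only the visible part of an object, label both the visible and occluded parts, or label the whole object with a small margin. Any of these works; what matters is how you want the object to be detected.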

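- When an image contains many objects, add max=200 (or a higher value) to the last [yolo] or [region] layer in the .cfg file. The global maximum number of objects YOLOv3 can detect is 0.0615234375*(width*height), where width and height come from the [net] section.

- For small objects (smaller than 16*16 after the image is resized to 416*416): in the .cfg, set layers=23 at line 895, stride=4 at line 892 and stride=4 at line 989. (Why? Still to be understood.)

- To train for both small and large objects, use the modified models: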

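- If the model must distinguish left from right, e.g. left and right hands, disable flip augmentation by setting flip=0 in the .cfg file.

- General rule: the training set should cover the same relative object sizes as the test set. For each object in the test set, the training set should contain at least one object of the same class with about the same relative size:

train_network_width * train_obj_width / train_image_width ~= detection_network_width * detection_obj_width / detection_image_width

- To speed up training (at the cost of accuracy), set stopbackward=1 in layer 136 of the .cfg file.

- Each object model, side, lighting condition, scale, and every 30 degrees of rotation or tilt counts as a different object from the neural network's internal point of view. The more different objects you want to detect, the more complex the network model should be.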

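- To make detected bounding boxes more precise, add three parameters to each [yolo] layer: ignore_thresh = .9 iou_normalizer=0.5 iou_loss=giou. This increases mAP@0.9 but decreases mAP@0.5.

- Do not change the anchors unless you are an expert.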
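The relative-size rule and the max= limit above are both plain arithmetic, so they can be checked numerically. The following is a minimal sketch with hypothetical helper names and made-up example numbers. The comment on the constant 0.0615234375 is simple algebra: it equals 63/1024, which matches 3 anchors on each cell of stride-32, stride-16 and stride-8 output grids.

```python
def relative_width(network_width: int, obj_width_px: float, image_width_px: float) -> float:
    """Object width as seen by the network: network_width * obj_width / image_width."""
    return network_width * obj_width_px / image_width_px


def yolov3_max_detections(width: int, height: int) -> int:
    """0.0615234375 * width * height, the YOLOv3 global maximum quoted above.

    0.0615234375 = 63/1024 = 3 * (1/32**2 + 1/16**2 + 1/8**2): 3 anchors per cell
    of the stride-32, stride-16 and stride-8 output grids.
    """
    return round(0.0615234375 * width * height)


# Made-up numbers: a person 300 px wide in a 1920-px-wide frame, trained at 416
train_rel = relative_width(416, 300, 1920)   # 65.0
# A closer shot of the same kind (person 900 px wide), detected at 608
test_rel = relative_width(608, 900, 1920)    # 285.0, far larger than the training example
print(train_rel, test_rel)
print(yolov3_max_detections(416, 416))       # 10647 = 3 * (13*13 + 26*26 + 52*52)
```

If test-time values like test_rel fall outside the range seen in training, the rule above suggests adding training images at that relative size.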


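After training: detection

- Increase the network resolution in the .cfg; this increases precision and helps detect small objects.

- There is no need to retrain the network; just use the .weights trained at 416*416.

- For even higher accuracy, train with a higher network resolution. If Out of memory occurs, increase subdivisions in the .cfg file to 16, 32 or 64.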
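Resolution and subdivisions interact. A larger width/height needs more GPU memory per image. Raising subdivisions lowers memory use by splitting each batch into smaller mini-batches of batch / subdivisions images. The following is a minimal sanity check before changing these values. check_net_section is a hypothetical helper, and requiring batch to be divisible by subdivisions is this sketch's own choice.

```python
def check_net_section(width: int, height: int, batch: int, subdivisions: int) -> dict:
    """Sanity-check [net] values before training or detecting at a new resolution."""
    if width % 32 or height % 32:
        raise ValueError("width and height must be multiples of 32")
    if batch % subdivisions:
        raise ValueError("this sketch requires batch to be divisible by subdivisions")
    return {
        # images processed together on the GPU; fewer images -> less GPU memory
        "mini_batch": batch // subdivisions,
        # size of the coarsest (stride-32) output grid
        "grid_stride32": (width // 32, height // 32),
    }


print(check_net_section(416, 416, batch=64, subdivisions=16))
# {'mini_batch': 4, 'grid_stride32': (13, 13)}
print(check_net_section(608, 608, batch=64, subdivisions=32))
# {'mini_batch': 2, 'grid_stride32': (19, 19)}
```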
