@wanghuijiao

2021-03-24T08:16:54.000000Z


Survey Report on Few-Shot Learning in Video Understanding

Study notes

- Survey Report on Few-Shot Learning in Video Understanding

- 0 Preface

- 1. Taxonomy

- 2. Summary of Common Datasets

- 3. Open-Source Code

- 4. Paper Summaries

- 4.1 ProtoGAN: Towards Few Shot Learning for Action Recognition

- 4.2 A Generative Approach to Zero-Shot and Few-Shot Action Recognition

- 4.3 TARN: Temporal Attentive Relation Network for Few-Shot and Zero-Shot Action Recognition

- 4.4 CMN: Compound memory networks for few-shot video classification.

- 4.5 OTAM: Few-shot video classification via temporal alignment

- 4.6 ARN: Few-shot Action Recognition with Permutation-invariant Attention

- 4.7 AMeFu-Net: Depth Guided Adaptive Meta-Fusion Network for Few-shot Video Recognition

- 4.8 Temporal-Relational CrossTransformers for Few-Shot Action Recognition

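0 Preface

- This survey reviews the progress of few-shot learning in action recognition. The goal is to get up to speed on the latest research quickly and to assess whether few-shot learning can be applied effectively to behavior recognition.

- Terminology

- N-way K-shot: at test time, N classes are randomly selected from the test set and K+X samples are drawn from each of them. The K samples per class (K*N samples in total) form the Support Set, and the X samples per class (X*N samples in total) form the Query Set. Videos in the Support Set provide reference information for classifying the videos in the Query Set.

- Episode training: training and testing are organized into episodes, each consisting of a support set and a query set (details in Section 2).

- References

- A summary by a computer-vision PhD student at Fudan University: "A curated list of few-shot video action recognition papers".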
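The N-way K-shot definition above can be sketched as an episode sampler. This is a minimal illustration, not code from any of the surveyed repos: `sample_episode` and its parameters are hypothetical names, the per-class query count X is called `n_query`, and videos are represented only by their index in a label list.

```python
import random
from collections import defaultdict


def sample_episode(labels, n_way, k_shot, n_query, rng=random):
    """Sample one N-way K-shot episode from a list of per-video class labels.

    Returns (support, query): lists of (video_index, episode_label) pairs,
    where episode_label in [0, n_way) is the class position inside this episode.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # Only classes with at least K + X videos can supply both sets.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query videos")

    classes = rng.sample(eligible, n_way)  # the N classes of this episode
    support, query = [], []
    for episode_label, cls in enumerate(classes):
        picked = rng.sample(by_class[cls], k_shot + n_query)  # K + X distinct videos
        support += [(i, episode_label) for i in picked[:k_shot]]
        query += [(i, episode_label) for i in picked[k_shot:]]
    return support, query


if __name__ == "__main__":
    # Made-up label list: 10 classes with 20 videos each.
    labels = [c for c in range(10) for _ in range(20)]
    support, query = sample_episode(labels, n_way=5, k_shot=1, n_query=3,
                                    rng=random.Random(0))
    print(len(support), len(query))  # → 5 15
```

Relabeling classes to 0..N-1 inside each episode reflects that the classifier only has to separate the N classes it was shown, not the whole label set.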

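Other notes

- Usable datasets

- Demos that others have already implemented

- Open-source code

- How would this be applied to fall detection?

- Is the method feasible in principle? For example, does it fit our current problem, where some classes have many samples and others have very few? The direction matters: if it turns out not to work, cut losses early.

- What are the industrial applications?

Problem analysis, solution steps, and overall workflow:

- Problem definition, solution, conclusion

- What the datasets look like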

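1. Taxonomy

Taxonomy proposed in Action Genome (Fei-Fei Li et al., 2019):

- 1. Train a classifier on data from classes with many samples, then use it to recognize the few-shot classes. The paper in 4.2 falls into this first category.

- 2. Achieve few-shot classification by learning invariances or decompositions. CMN, TARN, and ProtoGAN all belong to this second category.

Taxonomy proposed in ProtoGAN:

- 1. Meta-learning: mimic the few-shot inference process during training. Example: CMN.

- 2. Representation learning: learn the similarity between a new sample and the few known samples. Example: OTAM.

- 3. Generative models: synthesize data with a generative model to increase the number of samples for novel classes. Example: ProtoGAN.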
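The representation-learning idea (classify a new sample by its similarity to the few labeled ones) can be illustrated with a nearest-prototype classifier using cosine similarity. This is a generic metric-based sketch in the style of prototypical networks, assuming features already come from some pretrained video encoder; it is not OTAM's method, which additionally aligns the two videos over time. The function names and toy 2-D features are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def classify_by_similarity(query_feat, support_feats, support_labels):
    """Assign the query to the class whose mean support feature (prototype)
    is most similar to it."""
    grouped = {}
    for feat, label in zip(support_feats, support_labels):
        grouped.setdefault(label, []).append(feat)
    # Prototype = element-wise mean of the support features of each class.
    prototypes = {
        label: [sum(dim) / len(feats) for dim in zip(*feats)]
        for label, feats in grouped.items()
    }
    return max(prototypes, key=lambda label: cosine(query_feat, prototypes[label]))


# Made-up 2-D "video features": class 0 points along x, class 1 along y.
support = [[1.0, 0.1], [0.9, 0.0], [0.0, 1.0], [0.2, 0.9]]
labels = [0, 0, 1, 1]
print(classify_by_similarity([0.8, 0.2], support, labels))  # → 0
```

In the learned setting, the encoder producing these features is what gets trained, so that the simple similarity rule separates unseen classes well.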

2. Summary of Common Datasets

| Dataset | Action classes | Total videos | Class split (train:val:test or train:test) | SOTA |
|---|---|---|---|---|
| UCF101 | 101 | 13320 | 51:50 | 95.5% (by AMeFu-Net) |
| HMDB51 | 51 | 6766 | 26:25 | 75.5% (by AMeFu-Net) |
| Olympic-Sports | 16 | 783 | 8:8 | 86.3% (by ProtoGAN) |
| miniMIT | 200 | 200×550 | 120:40:40 | 56.7% (by ARN) |
| Kinetics-100 | 100 | 100×100 | 64:12:24 | 86.8% (by AMeFu-Net) |
| Something-Something V2-100 | 100 | 100×100 | 64:12:24 | 52.3% (by OTAM) |

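- What the few-shot datasets look like

- Kinetics-100: derived from Kinetics by taking 100 classes with 100 videos each. For few-shot video recognition, CMN was the first to split it by class into 64:12:24 for the train/val/test sets.

- Something-Something-V2: OTAM uses the same setup as Kinetics-100: 100 classes are randomly sampled from SSV2, 100 video clips are randomly sampled per class, and the classes are split into 64 for training, 12 for validation, and 24 for testing.

- UCF101:

- HMDB51:

- How training works

- Episode training: many methods use a training scheme called episode training, which comes from meta-learning. The dataset is divided into small units called episodes; during both training and testing, data loading and accuracy computation are done per episode, and the final accuracy is the average over all episodes.

- What an episode looks like: each episode consists of a support set and query videos, mimicking the way humans learn. For example, when a person sees an elephant, they will …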
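The per-episode evaluation described above (compute accuracy in each episode, then average) can be sketched as follows. The predictions are made up, and the function names are hypothetical. Reporting a 95% confidence interval (1.96 · std / √episodes) alongside the mean is a common convention in few-shot papers, added here as an extra rather than something these notes prescribe.

```python
import math
import statistics


def episode_accuracy(predictions, targets):
    """Fraction of query videos in one episode that were classified correctly."""
    correct = sum(p == t for p, t in zip(predictions, targets))
    return correct / len(targets)


def summarize(episode_accuracies):
    """Final accuracy = mean over episodes, plus a 95% confidence interval."""
    mean = statistics.mean(episode_accuracies)
    ci95 = 1.96 * statistics.stdev(episode_accuracies) / math.sqrt(len(episode_accuracies))
    return mean, ci95


# Made-up results for three 5-way episodes with one query video per class.
episodes = [
    ([0, 1, 2, 3, 4], [0, 1, 2, 3, 4]),  # 5/5 correct
    ([0, 1, 2, 4, 4], [0, 1, 2, 3, 4]),  # 4/5 correct
    ([1, 1, 2, 4, 4], [0, 1, 2, 3, 4]),  # 3/5 correct
]
accs = [episode_accuracy(p, t) for p, t in episodes]
mean, ci95 = summarize(accs)
print(f"{mean:.3f} +/- {ci95:.3f}")  # → 0.800 +/- 0.226
```

Papers typically average over hundreds or thousands of test episodes, which is what keeps the interval narrow.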

Conclusion

- How much data to prepare: 50-100 video clips per class.

3. Open-Source Code

3.1 TRX

- Key paper to study: CVPR2021 - Temporal-Relational CrossTransformers for Few-Shot Action Recognition

- Github-trx

- Built on the following papers and repos:

- CNAPS: few-shot learning for image classification

- GitHub

- NIPS2019-Fast and Flexible Multi-Task Classification Using Conditional Neural Adaptive Processes

- ICML2020-TASKNORM: Rethinking Batch Normalization for Meta-Learning

- google-research/meta-dataset: Google's repo for the Meta-Dataset benchmark (GitHub)

3.2 Few-shot-action-recognition

3.3 SL-DML: Signal Level Deep Metric Learning for Multimodal One-Shot Action Recognition

4. Paper Summaries

4.1 ProtoGAN: Towards Few Shot Learning for Action Recognition

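- Resources:

- Github

- Paper details:

- Field: few-shot learning

- Affiliations: Indian Institute of Technology & Mercedes-Benz R&D India

- Venue and year: ICCV 2019

- Google Scholar citations: 13

- One-sentence summary: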

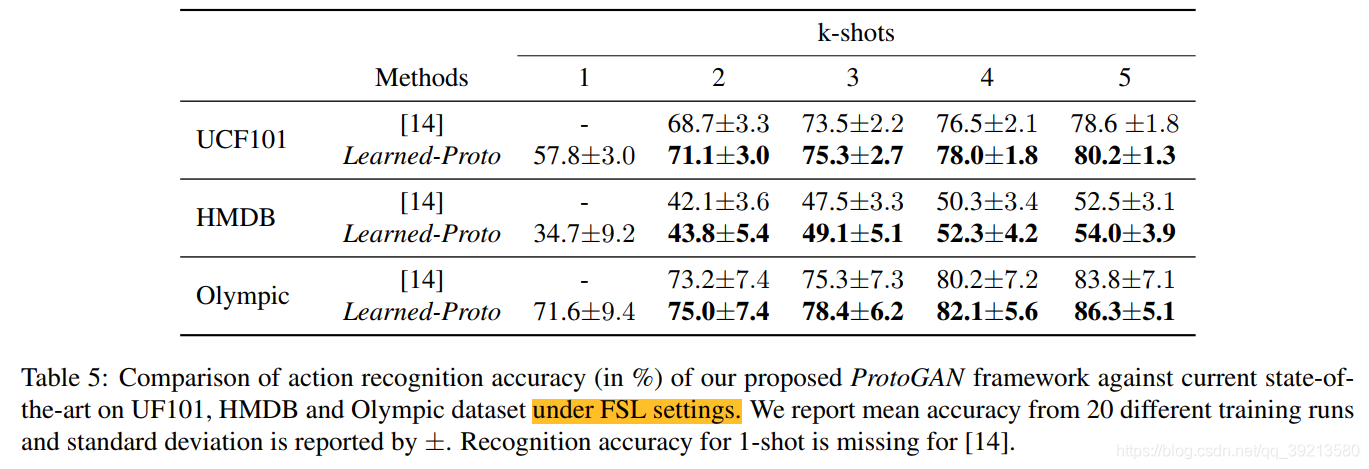

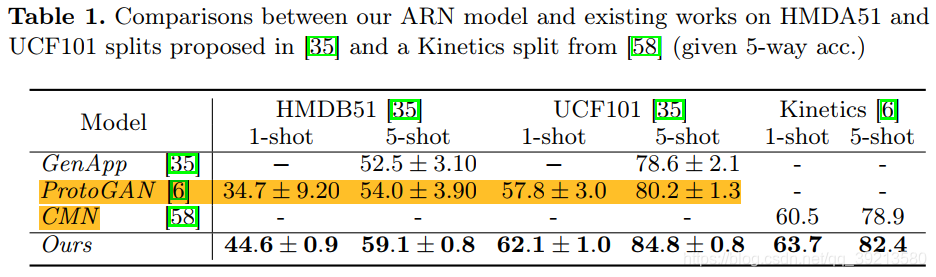

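- Proposes the ProtoGAN architecture, achieving SOTA results on UCF101 (80.2%), HMDB51 (54%), and Olympic-Sports (86.3%).

- Overview

- Idea: use ProtoGAN to generate video features for specified novel classes, thereby addressing few-shot video recognition. The paper is also the first to propose a benchmark for the Generalized Few-Shot Learning (G-FSL) setting.

- G-FSL: whereas FSL only evaluates recognition of novel classes, G-FSL also takes into account the classes that had enough samples during training (seen classes).

- Results:

- Comparison under the FSL setting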

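The FSL vs. G-FSL distinction above comes down to which classes compete at prediction time. A minimal sketch, assuming some classifier already outputs a score for every class; the class names, scores, and function names are hypothetical, and this shows only the label-space difference, not ProtoGAN's feature generator or its exact evaluation protocol (a full G-FSL test set would also contain seen-class videos).

```python
def predict(scores, allowed_classes):
    """Pick the highest-scoring class among `allowed_classes`.

    `scores` maps class name -> classifier score for one test video.
    """
    return max(allowed_classes, key=lambda c: scores[c])


def accuracy(samples, allowed_classes):
    """samples: list of (scores, true_class) pairs."""
    hits = sum(predict(s, allowed_classes) == y for s, y in samples)
    return hits / len(samples)


seen = ["walk", "run", "sit"]   # classes with plenty of training data
novel = ["fall", "jump"]        # few-shot classes

# Made-up scores for two test videos belonging to novel classes.
test_videos = [
    ({"walk": 0.9, "run": 0.1, "sit": 0.0, "fall": 0.7, "jump": 0.2}, "fall"),
    ({"walk": 0.1, "run": 0.3, "sit": 0.0, "fall": 0.1, "jump": 0.6}, "jump"),
]

# FSL: only the novel classes are candidates.
print(accuracy(test_videos, novel))         # → 1.0
# G-FSL: seen and novel classes compete, so the first video is lost to "walk".
print(accuracy(test_videos, seen + novel))  # → 0.5
```

This is why G-FSL is harder: a model biased toward data-rich seen classes can score well under FSL yet misclassify novel-class videos once seen classes are allowed as answers.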

4.2 A Generative Approach to Zero-Shot and Few-Shot Action Recognition

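- Resources:

- Github

- Paper details:

- Field: few-shot learning

- Affiliation: Indian Institute of Technology Madras

- Venue and year: WACV 2018

- Google Scholar citations: 56

- One-sentence summary: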

- -

4.3 TARN: Temporal Attentive Relation Network for Few-Shot and Zero-Shot Action Recognition

- Resources:

- Github

- Paper details:

- Field: few-shot learning

- Affiliation: Queen Mary University of London

- Venue and year: BMVC 2019

- Google Scholar citations: 15

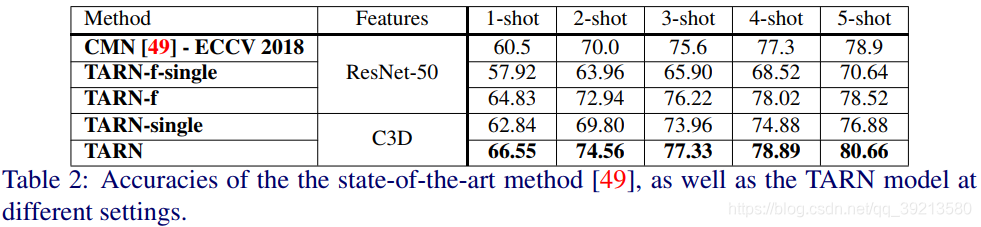

- One-sentence summary:

- Proposes the TARN architecture, reaching 80.66% accuracy on the few-shot version of Kinetics.

4.4 CMN: Compound memory networks for few-shot video classification.

- Resources:

- Github

- Paper details:

- Field: few-shot learning

- Affiliation: University of Technology Sydney

- Venue and year: ECCV 2018

- Google Scholar citations: 47

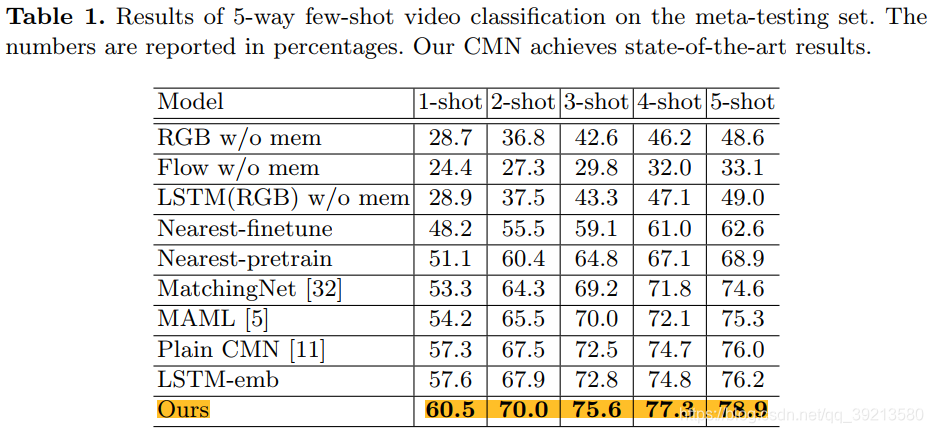

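- One-sentence summary:

- Proposes the Compound Memory Network (CMN) architecture, reaching 78.9% accuracy on the few-shot version of Kinetics.

- Notes

- Dataset construction: 100 classes are randomly selected from Kinetics, with 100 sample videos per class, to form the few-shot dataset. 64 classes are used for training, 12 for validation, and 24 for testing.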
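The construction above can be sketched as two steps: sample the classes and videos, then split by class so that train, val, and test classes never overlap. This is a minimal illustration under stated assumptions: `build_few_shot_split` is a hypothetical helper, the input is a made-up dict of video IDs per class, and uniform random sampling is assumed. Comparing against published numbers requires the exact class lists used by those papers, which this sketch does not reproduce.

```python
import random


def build_few_shot_split(videos_by_class, n_classes=100, per_class=100,
                         split=(64, 12, 24), seed=0):
    """Sample n_classes classes with per_class videos each, then split the
    *classes* (not the videos) into disjoint train/val/test groups."""
    assert sum(split) == n_classes
    rng = random.Random(seed)
    # Sort first so the result depends only on the seed, not dict order.
    eligible = sorted(c for c, vids in videos_by_class.items() if len(vids) >= per_class)
    chosen = rng.sample(eligible, n_classes)  # already in random order
    subset = {c: rng.sample(videos_by_class[c], per_class) for c in chosen}

    n_train, n_val, _ = split
    splits = {
        "train": chosen[:n_train],
        "val": chosen[n_train:n_train + n_val],
        "test": chosen[n_train + n_val:],
    }
    return subset, splits


# Made-up stand-in for Kinetics: 400 classes with 150 video IDs each.
fake_kinetics = {f"class_{i}": [f"vid_{i}_{j}" for j in range(150)] for i in range(400)}
subset, splits = build_few_shot_split(fake_kinetics)
print({k: len(v) for k, v in splits.items()})  # → {'train': 64, 'val': 12, 'test': 24}
```

Splitting by class rather than by video is what makes the test classes genuinely novel: the model never sees any video of a test class during training.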

4.5 OTAM: Few-shot video classification via temporal alignment

- Resources:

- Github

- Paper details:

- Field: few-shot learning

- Affiliation: Stanford University

- Venue and year: CVPR 2020

- Google Scholar citations: 24

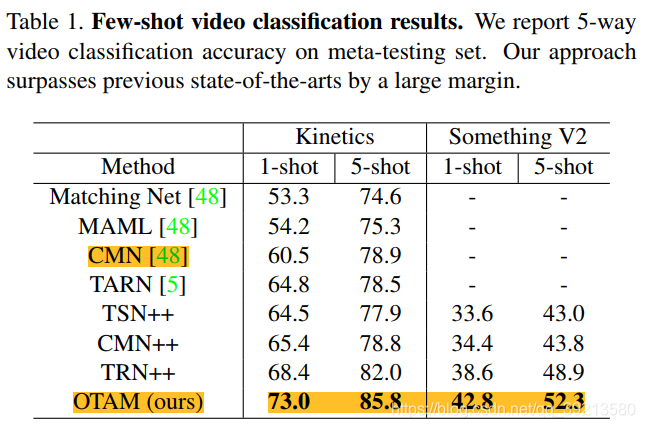

- One-sentence summary:

- Proposes OTAM, reaching 85.8% accuracy on the few-shot version of Kinetics.

4.6 ARN: Few-shot Action Recognition with Permutation-invariant Attention

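- Resources:

- Github

- Paper details:

- Field: few-shot learning

- Affiliations: University of Oxford & Australian National University & The University of Hong Kong

- Venue and year: ECCV 2020

- Google Scholar citations: 11

- One-sentence summary:

- Proposes the Action Relation Network (ARN), achieving SOTA on HMDB51, UCF101, and miniMIT, with 84.8% accuracy on UCF101.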

4.7 AMeFu-Net: Depth Guided Adaptive Meta-Fusion Network for Few-shot Video Recognition

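- Resources:

- Github

- Paper details:

- Field: few-shot learning

- Affiliations: Fudan University & University of Oxford

- Venue and year: ACM Multimedia (ACM MM) 2020

- Google Scholar citations: --

- One-sentence summary: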

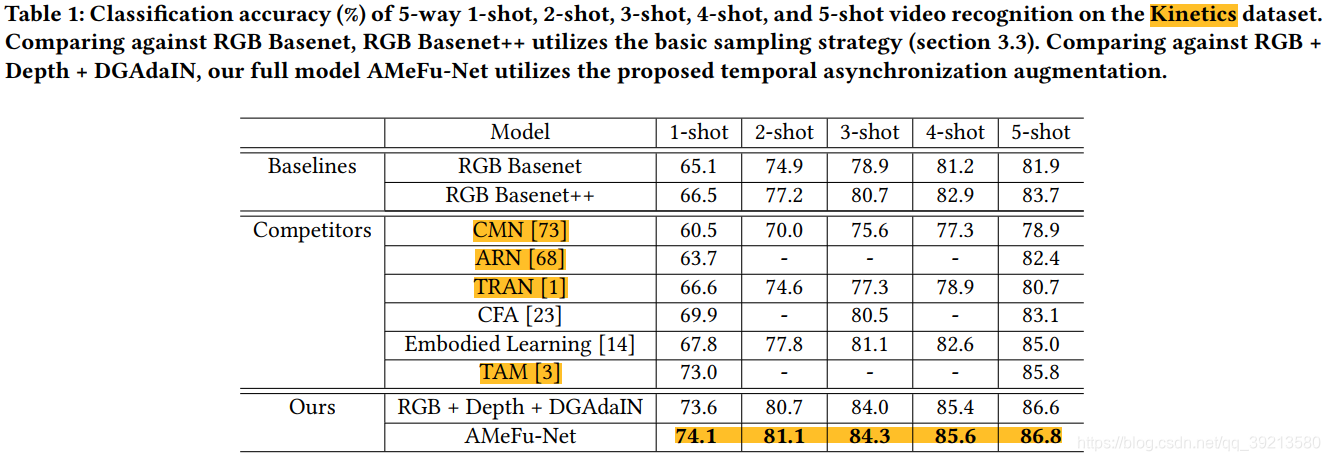

- Proposes AMeFu-Net, reaching 86.8% accuracy on the few-shot version of Kinetics.

4.8 Temporal-Relational CrossTransformers for Few-Shot Action Recognition

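- Resources:

- Github

- Paper details:

- Field: few-shot learning

- Affiliation: University of Bristol (UK)

- Venue and year: arXiv (apparently accepted to CVPR 2021)

- Google Scholar citations: --

- One-sentence summary: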