@awsekfozc

2015-12-11T17:18:34.000000Z


Sqoop Basics

Sqoop

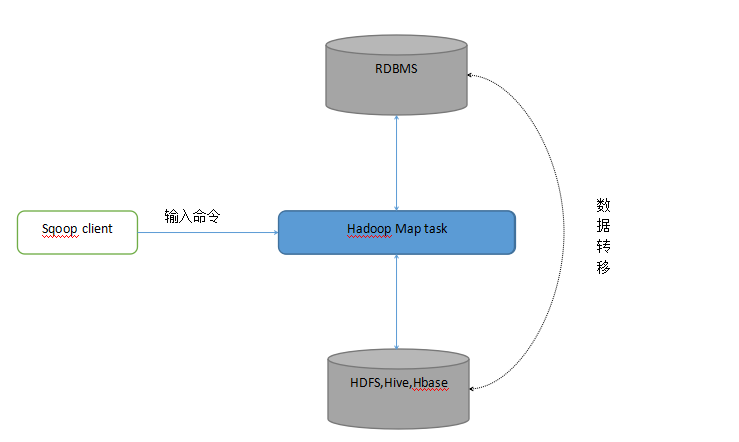

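1) Architecture overview

1. Sqoop is a bridge for moving data between traditional relational databases and the Hadoop ecosystem (HDFS, Hive, HBase).

2. It runs the transfer as map tasks to speed it up, and moves the data in batch mode.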

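2) Usage essentials

- RDBMS side:
    1. jdbcurl: the JDBC connection URL of the database
    2. user: the user to connect as
    3. password: that user's password
    4. table: the table to read from or write to
- Operation:
    - import: from the RDBMS into Hadoop
    - export: from Hadoop into the RDBMS
- Hadoop side:
    - For HDFS: path, the directory to read or write
    - For Hive: tablename, the Hive table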

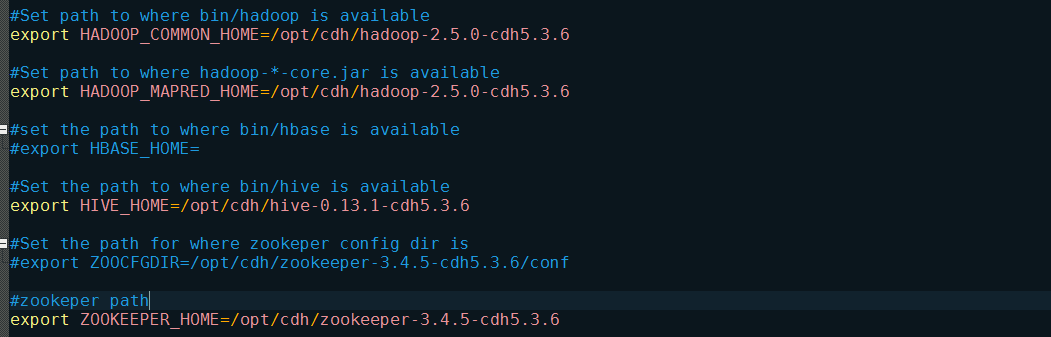
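As a rough sketch of how the pieces listed above map onto command-line flags, the snippet below assembles an import command from the RDBMS-side settings and the HDFS-side path, then prints it instead of running it. The variable names and the `print_import_cmd` helper are hypothetical; the host, credentials, table, and path are borrowed from the examples later in this post.

```shell
#!/usr/bin/env bash
# RDBMS side (values borrowed from the examples later in this post)
JDBC_URL="jdbc:mysql://hadoop.zc.com:3306/test"
DB_USER="root"
DB_PASSWORD="123456"
DB_TABLE="my_user"

# Hadoop side: for HDFS the target is a path
TARGET_DIR="/user/zc/sqoop/import/imp_my_user"

# Hypothetical helper: prints the command rather than executing it,
# so the flag mapping can be inspected without a cluster.
print_import_cmd() {
  printf '%s ' bin/sqoop import \
    --connect "$JDBC_URL" \
    --username "$DB_USER" \
    --password "$DB_PASSWORD" \
    --table "$DB_TABLE" \
    --target-dir "$TARGET_DIR"
  printf '\n'
}

print_import_cmd
```

For a Hive target, the `--target-dir` path would give way to the Hive table options shown in the Hive import example.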

3) Basic configuration

sqoop-env.sh

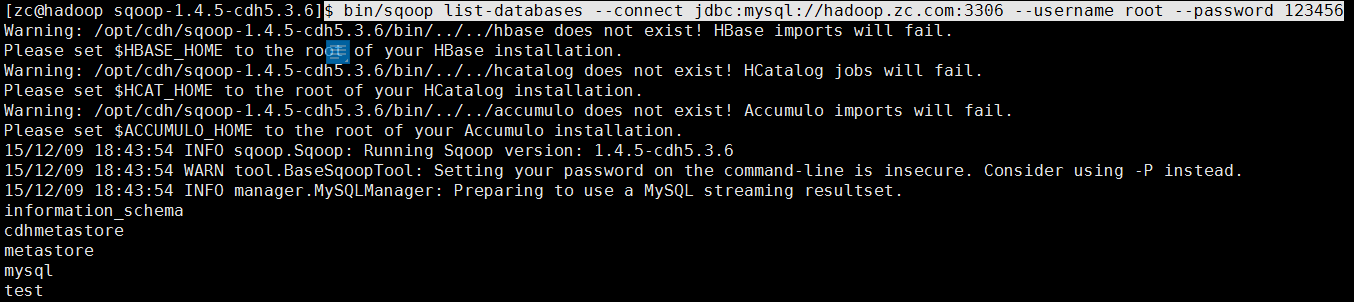
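For readers who cannot see the screenshot, a `sqoop-env.sh` for this kind of setup might look roughly like the fragment below. The variable names come from Sqoop's `sqoop-env-template.sh`; the Hadoop path is an assumption (this post does not state it), while the Hive path is the one used in the driver copy command in this section.

```shell
# sqoop-env.sh (sketch; adjust the paths to the local installation)

# Assumed Hadoop install location, not given in this post
export HADOOP_COMMON_HOME=/opt/cdh/hadoop-2.5.0-cdh5.3.6
export HADOOP_MAPRED_HOME=/opt/cdh/hadoop-2.5.0-cdh5.3.6

# Hive location, as used in the driver copy command in this section
export HIVE_HOME=/opt/cdh/hive-0.13.1-cdh5.3.6
```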

```shell
## MySQL driver
$ cp /opt/cdh/hive-0.13.1-cdh5.3.6/lib/mysql-connector-java-5.1.27-bin.jar ./lib/
## Test the MySQL connection
$ bin/sqoop list-databases --connect jdbc:mysql://hadoop.zc.com:3306 --username root --password 123456
```

4) import

- Simple example

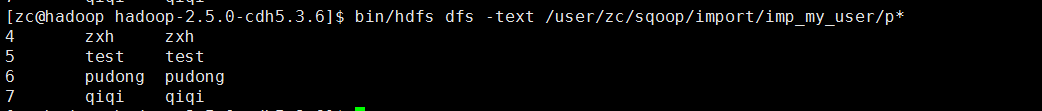

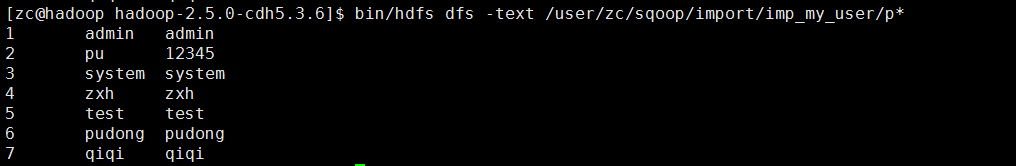

```shell
### where
bin/sqoop import \
  --connect jdbc:mysql://hadoop.zc.com:3306/test \
  --username root \
  --password 123456 \
  --table my_user \
  --where " id > 3 " \
  --split-by id \
  --num-mappers 1 \
  --target-dir /user/zc/sqoop/import/imp_my_user \
  --fields-terminated-by "\t" \
  --delete-target-dir
```

```shell
### query
bin/sqoop import \
  --connect jdbc:mysql://hadoop.zc.com:3306/test \
  --username root \
  --password 123456 \
  --query 'select * from my_user WHERE $CONDITIONS' \
  --num-mappers 1 \
  --target-dir /user/zc/sqoop/import/imp_my_user \
  --fields-terminated-by "\t" \
  --delete-target-dir
```

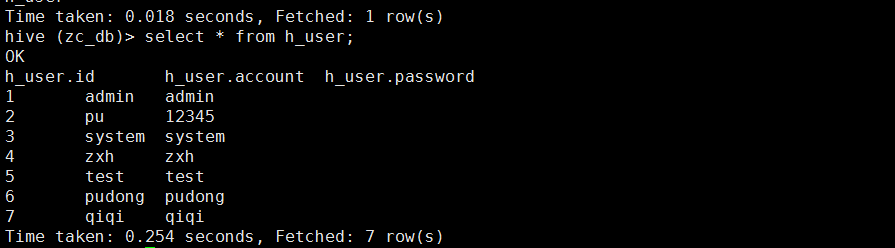
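In a `--query` import, `$CONDITIONS` is a placeholder that Sqoop replaces with each map task's range condition, which is why it is wrapped in single quotes so the shell leaves it alone. With more than one mapper, a `--query` import also needs `--split-by`. The sketch below shows a parallel variant of the query import; the `import_query_parallel` helper and the `SQOOP` variable are hypothetical, and splitting on `id` assumes that column suits `my_user` as it does in the `--where` example.

```shell
#!/usr/bin/env bash
# Path to the sqoop launcher; set SQOOP to point somewhere else.
SQOOP="${SQOOP:-bin/sqoop}"

# Hypothetical helper: free-form query import with N parallel map tasks.
# Usage: import_query_parallel <num-mappers>
import_query_parallel() {
  local mappers="${1:-4}"
  "$SQOOP" import \
    --connect jdbc:mysql://hadoop.zc.com:3306/test \
    --username root \
    --password 123456 \
    --query 'select * from my_user WHERE $CONDITIONS' \
    --split-by id \
    --num-mappers "$mappers" \
    --target-dir /user/zc/sqoop/import/imp_my_user \
    --fields-terminated-by "\t" \
    --delete-target-dir
}
```

Calling `import_query_parallel 4` from the Sqoop home directory would request four map tasks, each importing one slice of the `id` range into its own output file under the target directory.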

```shell
### import hive
bin/sqoop import \
  --connect jdbc:mysql://hadoop.zc.com:3306/test \
  --username root \
  --password 123456 \
  --table my_user \
  --num-mappers 1 \
  --fields-terminated-by "\t" \
  --delete-target-dir \
  --hive-database zc_db \
  --hive-import \
  --hive-table h_user
```

5) export

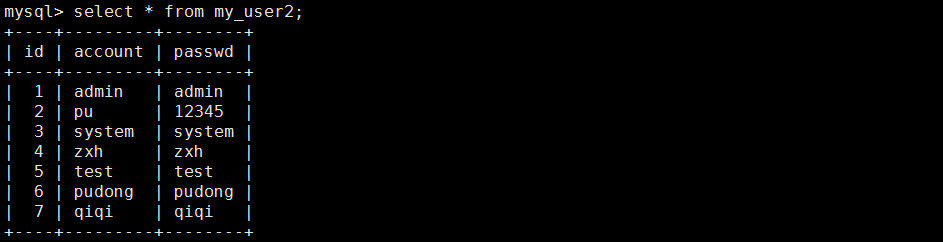

```shell
### export hdfs
bin/sqoop export \
  --connect jdbc:mysql://hadoop.zc.com:3306/test \
  --username root \
  --password 123456 \
  --table my_user2 \
  --num-mappers 1 \
  --input-fields-terminated-by "\t" \
  --export-dir /user/zc/sqoop/import/imp_my_user
```

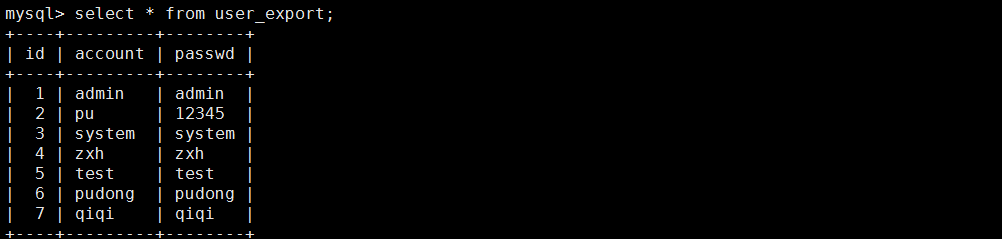
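Sqoop export writes into a table that must already exist on the database side; it does not create it. One possible way to prepare the target, assuming `my_user2` is meant to have the same structure as `my_user` (this post does not show its definition), is sketched below. The `create_export_table` helper and the `MYSQL` variable are hypothetical.

```shell
#!/usr/bin/env bash
# MySQL client to use; set MYSQL to point somewhere else.
MYSQL="${MYSQL:-mysql}"

# Hypothetical helper: create an empty table with the same structure
# as an existing one. Usage: create_export_table <source> <target>
create_export_table() {
  local src="$1" dst="$2"
  # -p with no value makes the client prompt for the password;
  # "test" is the database name used throughout this post.
  "$MYSQL" -h hadoop.zc.com -u root -p test \
    -e "CREATE TABLE IF NOT EXISTS ${dst} LIKE ${src};"
}
```

Running `create_export_table my_user my_user2` before the export would leave an empty `my_user2` ready to receive the rows.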

```shell
### export hive
bin/sqoop export \
  --connect jdbc:mysql://hadoop.zc.com/test \
  --username root \
  --password 123456 \
  --table user_export \
  --num-mappers 1 \
  --input-fields-terminated-by "\t" \
  --export-dir /user/hive/warehouse/zc_db.db/h_user
```
