@awsekfozc

2015-12-05T11:02:44.000000Z

HDFS HA

HA

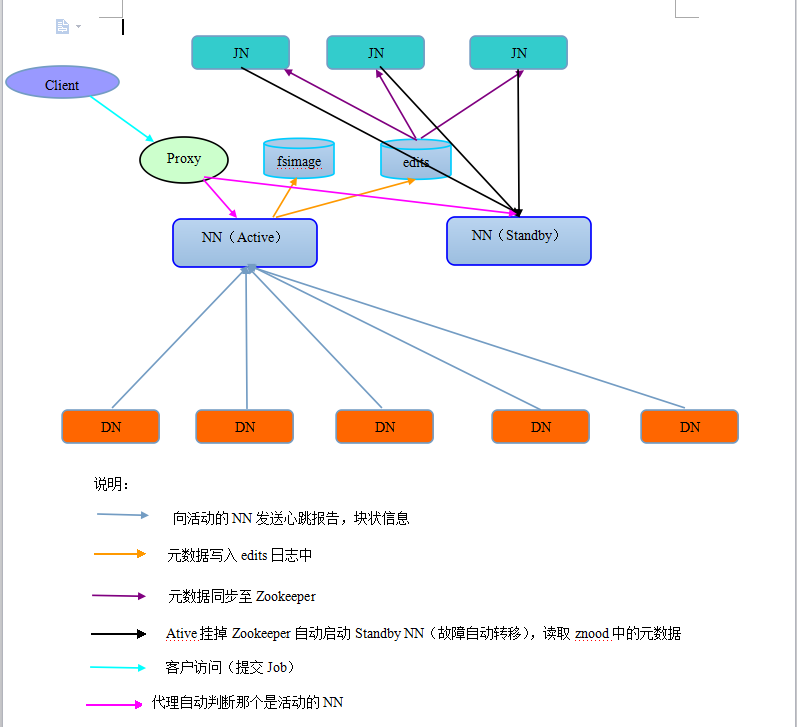

HDFS HA diagram

HDFS HA configuration

Remove the following settings

<!-- core-site.xml: remove the default file system setting -->
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://hadoop.zc.com:8020</value>
</property>
<!-- hdfs-site.xml: remove the SecondaryNameNode address -->
<property>
  <name>dfs.namenode.secondary.http-address</name>
  <value>hadoop02.zc.com:50090</value>
</property>

hdfs-site.xml

<!-- Nameservice ID: ns1 -->
<property>
  <name>dfs.nameservices</name>
  <value>ns1</value>
</property>
<!-- NameNode IDs nn1 and nn2, defined under nameservice ns1 -->
<property>
  <name>dfs.ha.namenodes.ns1</name>
  <value>nn1,nn2</value>
</property>
<!-- RPC addresses of nn1 and nn2 -->
<property>
  <name>dfs.namenode.rpc-address.ns1.nn1</name>
  <value>hadoop.zc.com:8020</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.ns1.nn2</name>
  <value>hadoop01.zc.com:8020</value>
</property>
<!-- HTTP (web UI) addresses of nn1 and nn2 -->
<property>
  <name>dfs.namenode.http-address.ns1.nn1</name>
  <value>hadoop.zc.com:50070</value>
</property>
<property>
  <name>dfs.namenode.http-address.ns1.nn2</name>
  <value>hadoop01.zc.com:50070</value>
</property>
<!-- Shared edits log location (the JournalNode quorum) -->
<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>qjournal://hadoop.zc.com:8485;hadoop01.zc.com:8485;hadoop02.zc.com:8485/ns1</value>
</property>
<!-- Local directory where each JournalNode stores the edits log -->
<property>
  <name>dfs.journalnode.edits.dir</name>
  <value>/opt/app/hadoop-2.5.0/data/dfs/jn</value>
</property>

core-site.xml

<!-- The default file system now points at the nameservice instead of a single NameNode -->
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://ns1</value>
</property>
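Before starting any daemons, it can help to confirm that the HA keys are actually visible to Hadoop. The following is a minimal sketch, not part of the original setup: `missing_conf_keys` is a hypothetical helper, and `HDFS_BIN` is an assumed variable that defaults to the `bin/hdfs` path used in this post. It relies on `hdfs getconf -confKey`, which exits with a non-zero status when a key is not set.

```shell
# Assumption: run from the Hadoop install directory, so bin/hdfs resolves.
HDFS_BIN="${HDFS_BIN:-bin/hdfs}"

# Hypothetical helper: print every key from the argument list
# that `hdfs getconf -confKey` cannot resolve.
missing_conf_keys() {
  for key in "$@"; do
    "$HDFS_BIN" getconf -confKey "$key" >/dev/null 2>&1 || echo "$key"
  done
}

# Example call (prints nothing when all keys are set):
# missing_conf_keys dfs.nameservices dfs.ha.namenodes.ns1 \
#   dfs.namenode.shared.edits.dir dfs.client.failover.proxy.provider.ns1
```

The last key in the example, `dfs.client.failover.proxy.provider.ns1`, does not appear in the snippets of this post. The official HA guide lists it among the client-side settings, so its inclusion here is an assumption about the complete configuration.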

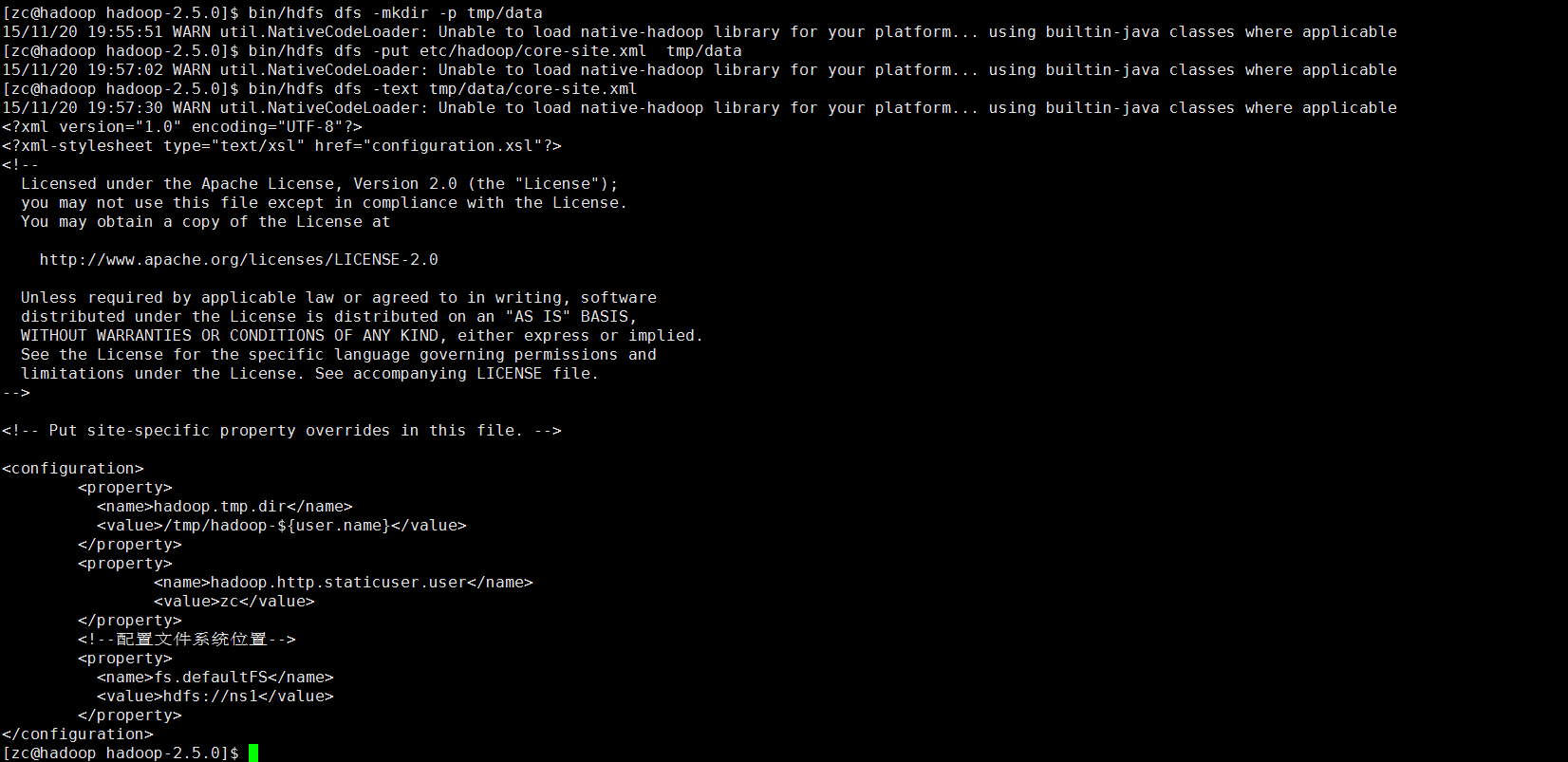

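Starting HDFS HA

<!-- Start the JournalNodes (on every host configured as a JN) -->
$ sbin/hadoop-daemon.sh start journalnode
<!-- On nn1: format the NameNode, then start it -->
$ bin/hdfs namenode -format
$ sbin/hadoop-daemon.sh start namenode
<!-- On nn2: sync nn1's metadata to nn2, then start nn2 -->
$ bin/hdfs namenode -bootstrapStandby
$ sbin/hadoop-daemon.sh start namenode
<!-- Transition nn1 to the Active state -->
$ bin/hdfs haadmin -transitionToActive nn1
<!-- Start the DataNodes (on every DataNode host) -->
$ sbin/hadoop-daemon.sh start datanode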
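After the startup sequence, the state of each NameNode can be read back with `hdfs haadmin -getServiceState`. The sketch below wraps that call. `print_nn_states` is a hypothetical helper name and `HDFS_BIN` is an assumed variable defaulting to `bin/hdfs`. The label `unreachable` is this sketch's own wording for a failed call, not something Hadoop prints.

```shell
# Assumption: run from the Hadoop install directory, so bin/hdfs resolves.
HDFS_BIN="${HDFS_BIN:-bin/hdfs}"

# Hypothetical helper: print "<id> <state>" for each NameNode ID given.
# A NameNode that cannot be queried is reported as "unreachable".
print_nn_states() {
  for nn in "$@"; do
    state=$("$HDFS_BIN" haadmin -getServiceState "$nn" 2>/dev/null) || state="unreachable"
    echo "$nn $state"
  done
}

# Example call:
# print_nn_states nn1 nn2
```

With the steps above completed, one would expect nn1 to report `active` and nn2 to report `standby`.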

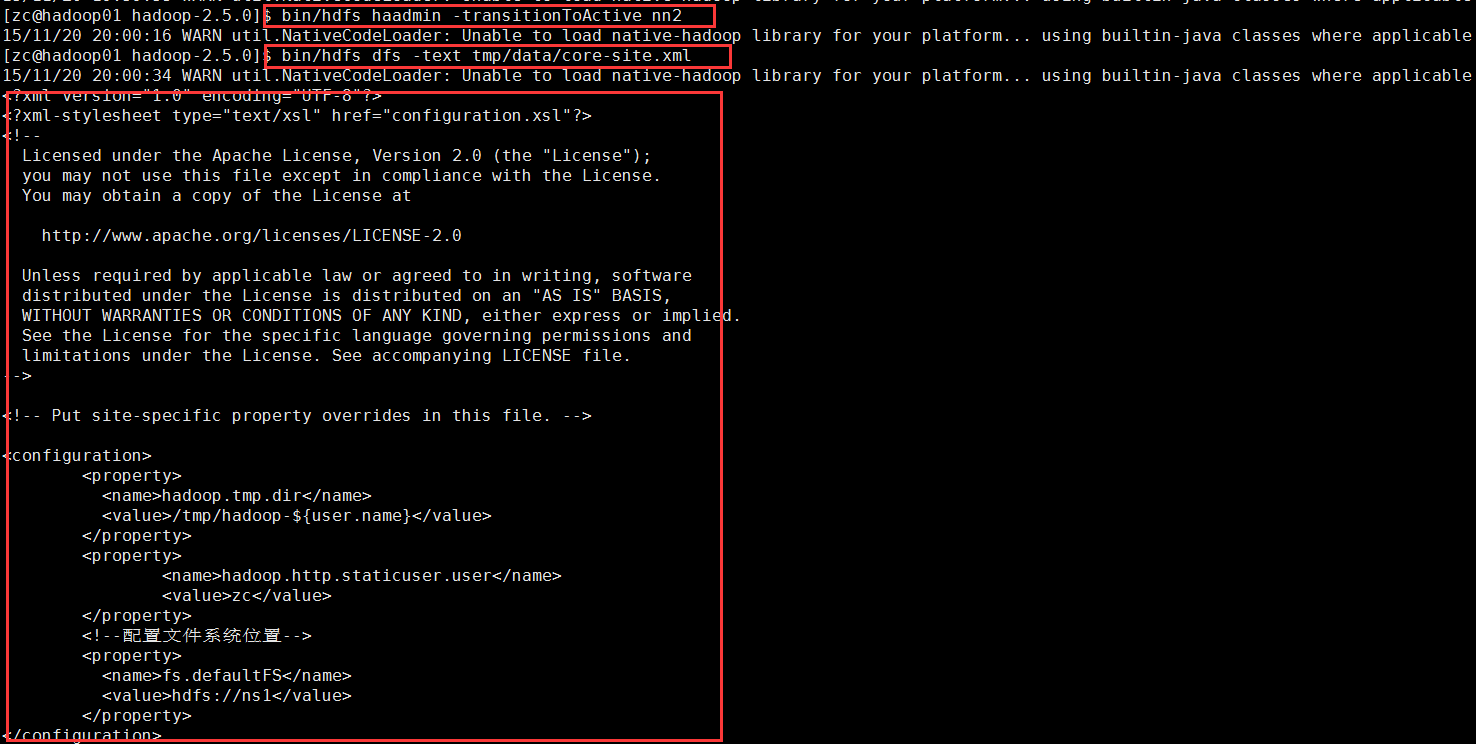

Testing HDFS HA

HDFS HA automatic failover

Shut down the cluster

<!-- On nn1 -->
$ sbin/stop-dfs.sh

Parameter configuration

<!-- Enable automatic failover for ns1 (hdfs-site.xml) -->
<property>
  <name>dfs.ha.automatic-failover.enabled.ns1</name>
  <value>true</value>
</property>
<!-- ZooKeeper quorum used by the failover controllers (core-site.xml) -->
<property>
  <name>ha.zookeeper.quorum</name>
  <value>hadoop.zc.com:2181,hadoop01.zc.com:2181,hadoop02.zc.com:2181</value>
</property>

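Initialize ZooKeeper

<!-- Start ZooKeeper on each ZooKeeper node (run from the ZooKeeper install directory) -->
$ bin/zkServer.sh start
<!-- On nn1: initialize the HA state in ZooKeeper -->
$ bin/hdfs zkfc -formatZK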

Start the HDFS cluster

<!-- On nn1 -->
$ sbin/start-dfs.sh
<!-- On nn1 and nn2: start the ZKFailoverController -->
$ sbin/hadoop-daemon.sh start zkfc
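One way to check that automatic failover works is to stop the active NameNode and watch the standby take over. The following is a sketch under assumptions rather than the procedure used in this post: `wait_for_active` is a hypothetical helper, `HDFS_BIN` is an assumed variable defaulting to `bin/hdfs`, and the retry count and delay are arbitrary defaults.

```shell
# Assumption: run from the Hadoop install directory, so bin/hdfs resolves.
HDFS_BIN="${HDFS_BIN:-bin/hdfs}"

# Hypothetical helper: poll until the given NameNode reports "active".
# Usage: wait_for_active <nn-id> [tries] [delay-seconds]
# Returns 0 once the NameNode is active, 1 if it never becomes active.
wait_for_active() {
  nn="$1"
  tries="${2:-30}"
  delay="${3:-2}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    state=$("$HDFS_BIN" haadmin -getServiceState "$nn" 2>/dev/null)
    if [ "$state" = "active" ]; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Example call, after killing the active NameNode process on nn1:
# wait_for_active nn2 && echo "nn2 took over"
```

To try it, find the NameNode PID on the active host with `jps`, kill that process, and then run `wait_for_active nn2` from another node. The official HA guide also expects `dfs.ha.fencing.methods` to be configured for failover. That property is not shown in this post, so whether the takeover succeeds may depend on configuration outside these snippets.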
