@sasaki

2016-03-07T09:24:36.000000Z

字数 7374

阅读 3033

Hive——数据仓库

BigData

版本控制

@Title Hive——数据仓库@Version v1.0@Timestamp 2016-02-19 16:34@Author Nicholas@Mail redskirt@outlook.com

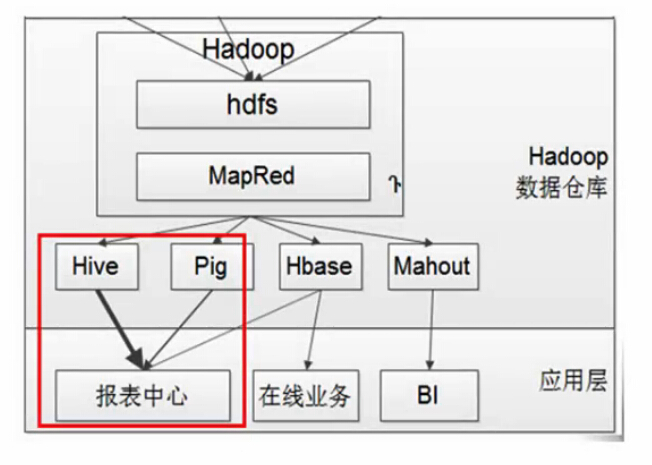

Hadoop最基本的两大模块:分布式存储和分布式计算。

Hive Metastore

- Embedded Mode (单用户模式)

- Local Mode (多用户模式、元数据库在本机)

- Remote Server Mode(元数据为独立的数据库)

内部表指hive创建并通过load data inpath进数据库的表,这种表可以理解为数据和表结构都保存在一起的数据表。当你通过DROP TABLE table_name 删除元数据中表结构的同时,表中的数据也同样会从hdfs中被删除。

外部表指在表结构创建以前,数据已经保存在hdfs中了,通过创建表结构,将数据格式化到表的结构里。当DROP TABLE table_name 的时候,hive仅仅会删除元数据的表结构,而不会删除hdfs上的文件,所以,相比内部表,外部表可以更放心大胆的使用。

[root@master tmp]# hive16/02/19 16:49:48 WARN conf.HiveConf: DEPRECATED: Configuration property hive.metastore.local no longer has any effect. Make sure to provide a valid value for hive.metastore.uris if you are connecting to a remote metastore.Logging initialized using configuration in jar:file:/opt/cloudera/parcels/CDH-5.3.8-1.cdh5.3.8.p0.5/jars/hive-common-0.13.1-cdh5.3.8.jar!/hive-log4j.propertieshive> show databases;OKdefaultTime taken: 0.806 seconds, Fetched: 1 row(s)hive> show tables;OKTime taken: 0.083 secondshive> create table test (a int, b string);OKTime taken: 0.304 seconds# 分别建立存储外部表和内部表的目录[root@master tmp]# hadoop fs -mkdir /user/root/hive/external[root@master tmp]# hadoop fs -mkdir /user/root/hive/internal[root@master tmp]# hadoop fs -put SogouQ.reduced /user/root/hive/external[root@master tmp]# hadoop fs -put SogouQ.reduced /user/root/hive/internal# 创建外部表hive> create external table log_in(time varchar(8), userid varchar(30), query string, pagerank int, clickrank int, site string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' location 'hdfs://192.168.53.230:8020/user/root/hive/external';OKTime taken: 0.096 secondshive> select * from log_in limit 10;OK00:00:00 2982199073774412 [360安全卫士] 8 3 download.it.com.cn/softweb/software/firewall/antivirus/20067/17938.html00:00:00 07594220010824798 [哄抢救灾物资] 1 1 news.21cn.com/social/daqian/2008/05/29/4777194_1.shtml00:00:00 5228056822071097 [75810部队] 14 5 www.greatoo.com/greatoo_cn/list.asp?link_id=276&title=%BE%DE%C2%D6%D0%C2%CE%C500:00:00 6140463203615646 [绳艺] 62 36 www.jd-cd.com/jd_opus/xx/200607/706.html00:00:00 8561366108033201 [汶川地震原因] 3 2 www.big38.net/00:00:00 23908140386148713 [莫衷一是的意思] 1 2 www.chinabaike.com/article/81/82/110/2007/2007020724490.html00:00:00 1797943298449139 [星梦缘全集在线观看] 8 5 www.6wei.net/dianshiju/????\xa1\xe9|????do=index00:00:00 00717725924582846 [闪字吧] 1 2 www.shanziba.com/00:00:00 41416219018952116 [霍震霆与朱玲玲照片] 2 6 bbs.gouzai.cn/thread-698736.html00:00:00 9975666857142764 [电脑创业] 2 2 ks.cn.yahoo.com/question/1307120203719.htmlTime taken: 0.083 seconds, Fetched: 10 row(s)

Partitions

- 传统数据库常常使用“天表”、“月表”、“年表”的方式组织数据库以避免单表过大导致的性能问题。

- Hive使用partitions概念解决该问题,比如,相较于“天表”,Hive每天创建一个新的partition。当使用partitions时,可以在不扫描整个表的情况下操作必要的数据。

hive> CREATE TABLE log_partitions(time varchar(8), userid varchar(30), query string, pagerank int, clickrank int, site string) PARTITIONED BY (day STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';OKTime taken: 0.826 seconds[root@master ~]# hadoop fs -cp /user/root/hive/external/SogouQ.reduced /user/root/hive/partitionshive> LOAD DATA INPATH '/user/root/hive/partitions' INTO TABLE log_partitions PARTITION(day='2014-01-01');Loading data to table default.log_partitions partition (day=2014-01-01)chgrp: changing ownership of 'hdfs://master:8020/user/hive/warehouse/log_partitions/day=2014-01-01/SogouQ.reduced': User does not belong to hivePartition default.log_partitions{day=2014-01-01} stats: [numFiles=1, numRows=0, totalSize=160939045, rawDataSize=0]OKTime taken: 1.044 seconds# 建表时未指定目录,该表将默认放到/user/hive/warehouse中[root@master ~]# hadoop fs -ls /user/hive/warehouseFound 2 itemsdrwxrwxrwt - root hive 0 2016-02-24 11:02 /user/hive/warehouse/log_partitionsdrwxrwxrwt - root hive 0 2016-02-19 16:51 /user/hive/warehouse/test# 指定的partitions条件被创建为目录[root@master ~]# hadoop fs -ls /user/hive/warehouse/log_partitionsFound 1 itemsdrwxrwxrwt - root hive 0 2016-02-24 11:02 /user/hive/warehouse/log_partitions/day=2014-01-01[root@master ~]# hadoop fs -ls -R /user/hive/warehouse/log_partitionsdrwxrwxrwt - root hive 0 2016-02-24 11:02 /user/hive/warehouse/log_partitions/day=2014-01-01-rwxrwxrwt 3 root root 160939045 2016-02-24 11:01 /user/hive/warehouse/log_partitions/day=2014-01-01/SogouQ.reduced# 查询时可带上分区条件查询hive> select * from log_partitions where day='2014-01-01' limit 10;OK00:00:00 2982199073774412 [360安全卫士] 8 3 download.it.com.cn/softweb/software/firewall/antivirus/20067/17938.htm2014-01-0100:00:00 07594220010824798 [哄抢救灾物资] 1 1 news.21cn.com/social/daqian/2008/05/29/4777194_1.shtml 2014-01-0100:00:00 5228056822071097 [75810部队] 14 5 www.greatoo.com/greatoo_cn/list.asp?link_id=276&title=%BE%DE%C2%D6%D0%C2%CE%C5 2014-01-0100:00:00 6140463203615646 [绳艺] 62 36 www.jd-cd.com/jd_opus/xx/200607/706.html 2014-01-0100:00:00 8561366108033201 [汶川地震原因] 3 2 www.big38.net/ 2014-01-0100:00:00 23908140386148713 [莫衷一是的意思] 1 2 www.chinabaike.com/article/81/82/110/2007/2007020724490.html 2014-01-0100:00:00 1797943298449139 [星梦缘全集在线观看] 8 5 www.6wei.net/dianshiju/????\xa1\xe9|????do=index 2014-01-0100:00:00 00717725924582846 [闪字吧] 1 2 www.shanziba.com/ 2014-01-0100:00:00 41416219018952116 [霍震霆与朱玲玲照片] 2 6 bbs.gouzai.cn/thread-698736.html 2014-01-0100:00:00 9975666857142764 [电脑创业] 2 2 ks.cn.yahoo.com/question/1307120203719.html 2014-01-01Time taken: 0.152 seconds, Fetched: 10 row(s)# 查看表结构,可看到有一个名为day的Partitions域hive> desc log_partitions;OKtime varchar(8)userid varchar(30)query stringpagerank intclickrank intsite stringday string# Partition Information# col_name data_type commentday stringTime taken: 0.124 seconds, Fetched: 12 row(s)

- 将会在Hdfs 中 page_view文件夹中创建按日期命名的文件夹

../sogouqueryfish/day=2014-01-01/..../sogouqueryfish/day=2014-01-02/....../sogouqueryfish/day=2014-01-06/..

- 注意事项:

- 只对离散字段建partition

- 尽管在表中创建分区有很多好处,但过度使用可能带来害处。

1)Hive为一张表创建一个分区后,必须为每一个分区维护额外的元数据来重定向查询。

2)最好在完全理解数据的查询类型后,再选择合适的列进行分区,这非常重要。因为分区有可能在提高部分查询的性能的同时降低其他查询的性能。

Bucket

- Bucket类似于Partition。 Buckets根据查询常用的关键字的哈希值将数据分丌

到到用户自定义集合中。当需要做分区的列为连续值时,适合使用Bucket。

假设,在user_id列上执行查询 ,在user_id列上使用buckets。

CREATE TABLE page_views_old(user_id INT, session_id INT, url STRING)PARTITIONED BY(day INT) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';LOAD DATA INPATH '/data/PageView/pageview.sample' INTO TABLEpage_views_old PARTITION(day=1);set hive.enforce.bucketing = true;CREATE TABLE page_views(user_id INT, session_id INT, url STRING) PARTITIONEDBY(day INT) clustered by(user_id) into 10 buckets ROW FORMAT DELIMITEDFIELDS TERMINATED BY ',';from page_views_old insert overwrite table page_views partition(day=1) selectuser_id,session_id,url where day=1;

通过以上语句,表中的数据将会根据user_id的哈希数分成10个buckets。因此,当查询确定的user_id时,程序首先计算user_id的哈希数然后只查找对应的bucket。

查询搜索次数最多的用户及其搜索的内容倒序排列,前10条记录

select sf.userid, sf.query, count(sf.query) sco, tt.co from (select userid, count(3) co from log_in group by userid order by co desc) as tt join log_in as sf on tt.userid = sf.userid group by sf.userid,sf.query,tt.co order by tt.co desc,sco desc limit 10;

Hive Beeline模式

[root@slave01 ~]# beelineBeeline version 0.13.1-cdh5.3.8 by Apache Hive!connect jdbc:hive2://master:10000scan complete in 3msConnecting to jdbc:hive2://master:10000Enter username for jdbc:hive2://master:10000: hiveEnter password for jdbc:hive2://master:10000: ****Connected to: Apache Hive (version 0.13.1-cdh5.3.8)Driver: Hive JDBC (version 0.13.1-cdh5.3.8)Transaction isolation: TRANSACTION_REPEATABLE_READ0: jdbc:hive2://master:10000>0: jdbc:hive2://master:10000> show tables;+-----------------+--+| tab_name |+-----------------+--+| log_in || log_partitions || sogouquery || test |+-----------------+--+4 rows selected (0.823 seconds)

search-qeury项目

[root@master tmp]# hadoop fs -mkdir /user/root/search-queryhive> CREATE EXTERNAL TABLE search_query(time varchar(8), userid varchar(30), query string, pagerank int, clickrank int, site string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' location 'hdfs://192.168.53.230:8020/user/root/search-query';OKTime taken: 0.07 seconds