@gnat-xj

2018-04-08T17:44:31.000000Z


SLAMBOOK

https://gitlab.com/juantu/slambook

Ch2

hello slam

Ch3

useEigen

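    #include <iostream>
    #include <Eigen/Core>
    // Dense-matrix algebra (inverse, eigenvalues, etc.)
    #include <Eigen/Dense>
    using namespace std;

    // Declare a 3x5 double matrix
    Eigen::Matrix<double, 3, 5> matrix_35;
    matrix_35 << 1, 2, 3, 4, 5,
                 6, 7, 8, 9, 10,
                 86, 7, 8, 9, 10;
    cout << matrix_35 << endl;

    Eigen::Vector3d v_3d;                 // 3x1 double vector
    Eigen::Matrix<float, 3, 1> vd_3d;     // same shape, but float (i.e. Eigen::Vector3f)

    Eigen::Matrix<double, Eigen::Dynamic, Eigen::Dynamic> matrix_dynamic;
    Eigen::MatrixXd matrix_x;             // shorter spelling of the same dynamic type

    // Element access
    Eigen::Matrix<float, 2, 3> matrix_23; // a 2x3 float matrix
    matrix_23(i, j);                      // element in row i, column j

    // Eigen does not mix scalar types implicitly: cast float -> double first
    Eigen::Matrix<double, 2, 1> result = matrix_23.cast<double>() * v_3d;

    Eigen::Matrix3d matrix_33 = Eigen::Matrix3d::Random(); // random matrix
    cout << matrix_33.transpose() << endl;   // transpose
    cout << matrix_33.sum() << endl;         // sum of all elements
    cout << matrix_33.trace() << endl;       // trace
    cout << 10 * matrix_33 << endl;          // scalar multiplication
    cout << matrix_33.inverse() << endl;     // inverse
    cout << matrix_33.determinant() << endl; // determinant

    // A^T A is real symmetric, so SelfAdjointEigenSolver applies
    Eigen::SelfAdjointEigenSolver<Eigen::Matrix3d> eigen_solver(matrix_33.transpose() * matrix_33);
    cout << "Eigen values = \n" << eigen_solver.eigenvalues() << endl;
    cout << "Eigen vectors = \n" << eigen_solver.eigenvectors() << endl;

    // Solve matrix_NN * x = v_Nd with column-pivoting Householder QR
    // (MATRIX_SIZE, matrix_NN, v_Nd and x are defined earlier in the full example)
    Eigen::Matrix<double, MATRIX_SIZE, 1> x2 = matrix_NN.colPivHouseholderQr().solve(v_Nd);
    cout << "sum of x-x2: " << (x - x2).sum() << endl;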
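To make the scalar results above concrete without Eigen, here is a minimal sketch on a plain `std::array` 3x3 matrix of what `trace()`, `determinant()` and `transpose()` compute. The `Mat3` alias and the function names are hypothetical, not Eigen API. It also illustrates that a matrix and its transpose share the same determinant.

```cpp
#include <array>
#include <cassert>

// Plain 3x3 row-major matrix, a stand-in for Eigen::Matrix3d in this sketch
using Mat3 = std::array<std::array<double, 3>, 3>;

// Sum of the diagonal, as matrix_33.trace() computes
double trace3(const Mat3& A) {
    return A[0][0] + A[1][1] + A[2][2];
}

// Cofactor expansion along the first row (what determinant() yields for a 3x3)
double det3(const Mat3& A) {
    return A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
         - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
         + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]);
}

// Swap rows and columns, as transpose() does
Mat3 transpose3(const Mat3& A) {
    Mat3 T{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            T[j][i] = A[i][j];
    return T;
}
```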

useGeometry

    #include <iostream>
    #include <cmath>
    #include <Eigen/Core>
    #include <Eigen/Geometry>
    using namespace std;

    Eigen::Matrix3d rotation_matrix = Eigen::Matrix3d::Identity();
    Eigen::AngleAxisd rotation_vector(M_PI / 4, Eigen::Vector3d(0, 0, 1)); // rotate 45 degrees about Z
    rotation_matrix = rotation_vector.toRotationMatrix();

    // ZYX order: the returned vector is (yaw, pitch, roll)
    Eigen::Vector3d euler_angles = rotation_matrix.eulerAngles(2, 1, 0);

    Eigen::Isometry3d T = Eigen::Isometry3d::Identity(); // called "3d", but it is really a 4x4 matrix
    T.rotate(rotation_vector);                // rotate according to rotation_vector
    T.pretranslate(Eigen::Vector3d(1, 3, 4)); // set the translation to (1,3,4)
    // For affine and projective transforms, use Eigen::Affine3d and Eigen::Projective3d (omitted)

    // Quaternions
    // An AngleAxis can be assigned to a quaternion directly, and vice versa
    Eigen::Quaterniond q = Eigen::Quaterniond(rotation_vector);
    // Note: coeffs() is ordered (x, y, z, w); w is the real part, the first three the imaginary part
    cout << "quaternion = \n" << q.coeffs() << endl;
    // A rotation matrix can be assigned as well
    q = Eigen::Quaterniond(rotation_matrix);
    cout << "quaternion = \n" << q.coeffs() << endl;

    // Rotate a vector with the overloaded operator*
    Eigen::Vector3d v(1, 0, 0);
    Eigen::Vector3d v_rotated = q * v; // mathematically this is q v q^{-1}
    cout << "(1,0,0) after rotation = " << v_rotated.transpose() << endl;
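The comment that `q*v` means q v q^{-1} can be checked by hand. A minimal sketch, assuming a hypothetical `Quat` struct stored in (w, x, y, z) order (Eigen's `coeffs()` prints (x, y, z, w) instead), that implements the Hamilton product and rotates a vector as q (0, v) q*, where the conjugate q* equals q^{-1} for a unit quaternion:

```cpp
#include <cassert>
#include <cmath>

// Minimal quaternion in (w, x, y, z) order
struct Quat {
    double w, x, y, z;
};

// Hamilton product a * b
Quat mul(const Quat& a, const Quat& b) {
    return {a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
            a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
            a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
            a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w};
}

// Unit quaternion for a rotation of `angle` radians about the unit axis (ax, ay, az)
Quat fromAngleAxis(double angle, double ax, double ay, double az) {
    double s = std::sin(angle / 2);
    return {std::cos(angle / 2), ax * s, ay * s, az * s};
}

// Rotate v by unit quaternion q: v' = q * (0, v) * conj(q)
void rotate(const Quat& q, const double v[3], double out[3]) {
    Quat p{0, v[0], v[1], v[2]};
    Quat q_conj{q.w, -q.x, -q.y, -q.z};
    Quat r = mul(mul(q, p), q_conj);
    out[0] = r.x;
    out[1] = r.y;
    out[2] = r.z;
}
```

For a 45-degree rotation about Z, (1,0,0) should map to (√2/2, √2/2, 0), which is what the Eigen snippet is expected to print.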

visualizeGeometry

(no code excerpt recorded)

Ch4

useSophus

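    #include <iostream>
    #include <cmath>
    #include <Eigen/Core>
    #include <Eigen/Geometry>
    #include "sophus/so3.h"
    #include "sophus/se3.h"
    using namespace std;

    // Rotation matrix for a 90-degree rotation about Z
    Eigen::Matrix3d R = Eigen::AngleAxisd(M_PI / 2, Eigen::Vector3d(0, 0, 1)).toRotationMatrix();
    Sophus::SO3 SO3_R(R);              // Sophus::SO3 can be built directly from a rotation matrix
    Sophus::SO3 SO3_v(0, 0, M_PI / 2); // ... or from a rotation vector
    Eigen::Quaterniond q(R);           // ... or from a quaternion
    Sophus::SO3 SO3_q(q);

    // The logarithmic map gives the Lie algebra element
    Eigen::Vector3d so3 = SO3_R.log();
    // hat: vector -> skew-symmetric matrix
    cout << "so3 hat=\n" << Sophus::SO3::hat(so3) << endl;
    // conversely, vee: skew-symmetric matrix -> vector
    cout << "so3 hat vee= " << Sophus::SO3::vee(Sophus::SO3::hat(so3)).transpose() << endl; // transpose() only for nicer output

    // Update with the perturbation model
    Eigen::Vector3d update_so3(1e-4, 0, 0); // assume an update of this size
    // exp() takes the 3-vector directly, so no hat() is needed first
    Sophus::SO3 SO3_updated = Sophus::SO3::exp(update_so3) * SO3_R;

    // SE(3): build from the rotation R and a translation t
    Eigen::Vector3d t(1, 0, 0);
    Sophus::SE3 SE3_Rt(R, t);
    // se(3) is a 6-vector; typedef it for convenience
    typedef Eigen::Matrix<double, 6, 1> Vector6d;
    Vector6d se3 = SE3_Rt.log();
    cout << "se3 = " << se3.transpose() << endl;
    // The output shows that in Sophus, se(3) stores translation first, then rotation.
    // hat and vee exist here as well
    cout << "se3 hat = " << endl << Sophus::SE3::hat(se3) << endl;
    cout << "se3 hat vee = " << Sophus::SE3::vee(Sophus::SE3::hat(se3)).transpose() << endl;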
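`hat()`, `vee()` and `SO3::exp()` can be written out directly. The following is a sketch, not Sophus code, with hypothetical names on `std::array`: hat/vee convert between a 3-vector and its skew-symmetric matrix, and the exponential map uses Rodrigues' formula R = cos θ I + (1 - cos θ) n nᵀ + sin θ n^. For φ = (0, 0, π/2) it should reproduce the 90-degree rotation about Z used above.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// hat: vector -> skew-symmetric matrix, so that hat(a) * b == a x b
Mat3 hat(const Vec3& v) {
    return {{{0, -v[2], v[1]}, {v[2], 0, -v[0]}, {-v[1], v[0], 0}}};
}

// vee: skew-symmetric matrix -> vector (inverse of hat)
Vec3 vee(const Mat3& m) {
    return {m[2][1], m[0][2], m[1][0]};
}

// Exponential map so(3) -> SO(3) via Rodrigues' formula, with phi = theta * n
Mat3 expSO3(const Vec3& phi) {
    double theta = std::sqrt(phi[0] * phi[0] + phi[1] * phi[1] + phi[2] * phi[2]);
    Mat3 R{};
    if (theta < 1e-12) { // (near) zero rotation: return the identity
        for (int i = 0; i < 3; ++i) R[i][i] = 1.0;
        return R;
    }
    Vec3 n{phi[0] / theta, phi[1] / theta, phi[2] / theta};
    Mat3 N = hat(n);
    double c = std::cos(theta), s = std::sin(theta);
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            R[i][j] = (i == j ? c : 0.0) + (1 - c) * n[i] * n[j] + s * N[i][j];
    return R;
}
```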

Ch5

imageBasics

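    #include <opencv2/core/core.hpp>
    #include <opencv2/highgui/highgui.hpp>

    cv::Mat image = cv::imread(argv[1]); // cv::imread reads the image at the given path
    if (image.data == nullptr) return 1;  // no data: the file probably does not exist
    // image.cols, image.rows and image.channels() give width, height and channel count
    if (image.type() != CV_8UC1 && image.type() != CV_8UC3) return 1; // expect 8-bit gray or color
    for (int y = 0; y < image.rows; y++) {
        unsigned char* row_ptr = image.ptr<unsigned char>(y); // row_ptr points to the start of row y
        for (int x = 0; x < image.cols; x++) {
            unsigned char* data_ptr = &row_ptr[x * image.channels()]; // data_ptr points to the pixel to access
            for (int c = 0; c != image.channels(); c++) {
                unsigned char data = data_ptr[c]; // data is the value of channel c of I(x,y)
            }
        }
    }
    // clone() makes a deep copy, so changing image_clone leaves image untouched
    cv::Mat image_clone = image.clone();
    image_clone(cv::Rect(0, 0, 100, 100)).setTo(255); // set the top-left 100x100 block to white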
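The pointer arithmetic in the pixel loop (row pointer, then `x * channels`, then `c`) can be mimicked on a plain byte buffer. The `Image8U` type below is hypothetical, a rough stand-in for the 8-bit interleaved layout of `cv::Mat` where rows are `step` bytes apart (possibly more than `cols * channels` due to padding):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal 8-bit interleaved image, mimicking cv::Mat's memory layout
struct Image8U {
    int rows, cols, channels;
    std::size_t step; // bytes per row
    std::vector<unsigned char> data;

    Image8U(int r, int c, int ch, std::size_t padding = 0)
        : rows(r), cols(c), channels(ch),
          step(static_cast<std::size_t>(c) * ch + padding),
          data(step * r, 0) {}

    // Same role as image.ptr<unsigned char>(y): pointer to the start of row y
    unsigned char* ptr(int y) { return data.data() + static_cast<std::size_t>(y) * step; }

    // Channel c of pixel (x, y): row pointer, then x * channels, then c
    unsigned char& at(int x, int y, int c) { return ptr(y)[x * channels + c]; }
};
```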

joinMap

    #include <Eigen/Geometry>
    #include <pcl/point_types.h>
    #include <pcl/io/pcd_io.h>
    #include <pcl/visualization/pcl_visualizer.h>

    vector<Eigen::Isometry3d, Eigen::aligned_allocator<Eigen::Isometry3d>> poses; // camera poses
    // Each line of the pose file: tx ty tz qx qy qz qw
    double data[7] = {0};
    for (auto& d : data) { fin >> d; }
    // Eigen::Quaterniond's constructor takes (w, x, y, z)
    Eigen::Quaterniond q(data[6], data[3], data[4], data[5]);
    Eigen::Isometry3d T(q);
    T.pretranslate(Eigen::Vector3d(data[0], data[1], data[2]));
    poses.push_back(T);

    typedef pcl::PointXYZRGB PointT;
    typedef pcl::PointCloud<PointT> PointCloud; // the point type of the cloud must be specified
    PointCloud::Ptr pointCloud(new PointCloud); // wrap the cloud in a smart pointer; freed automatically

    // For each pixel (u, v) of image i, with T = poses[i]:
    unsigned int d = depth.ptr<unsigned short>(v)[u]; // depth value
    if (d == 0) continue;                             // 0 means no measurement
    Eigen::Vector3d point;
    point[2] = double(d) / depthScale;
    point[0] = (u - cx) / fx * point[2];
    point[1] = (v - cy) / fy * point[2];
    Eigen::Vector3d pointWorld = T * point; // camera frame -> world frame

    PointT p;
    p.x = pointWorld[0];
    p.y = pointWorld[1];
    p.z = pointWorld[2];
    // OpenCV stores color as BGR
    p.b = color.data[v * color.step + u * color.channels()];
    p.g = color.data[v * color.step + u * color.channels() + 1];
    p.r = color.data[v * color.step + u * color.channels() + 2];
    pointCloud->points.push_back(p);

    // After all images are processed:
    pointCloud->is_dense = false;
    cout << "The point cloud has " << pointCloud->size() << " points." << endl;
    pcl::io::savePCDFileBinary("map.pcd", *pointCloud);
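The back-projection inside the joinMap loop is the pinhole model inverted, followed by the rigid transform `T * point`. A dependency-free sketch with hypothetical function names; the intrinsics in the check are arbitrary values chosen for easy arithmetic, not the dataset's calibration:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

// Back-project pixel (u, v) with raw depth d into the camera frame:
// z = d / depthScale, x = (u - cx) / fx * z, y = (v - cy) / fy * z
Vec3 backProject(int u, int v, unsigned int d, double depthScale,
                 double fx, double fy, double cx, double cy) {
    double z = double(d) / depthScale;
    return {(u - cx) / fx * z, (v - cy) / fy * z, z};
}

// Apply a rigid transform T = [R | t] (camera -> world): p_w = R * p_c + t
Vec3 transformPoint(const double R[3][3], const Vec3& t, const Vec3& p) {
    Vec3 out{};
    for (int i = 0; i < 3; ++i)
        out[i] = R[i][0] * p[0] + R[i][1] * p[1] + R[i][2] * p[2] + t[i];
    return out;
}
```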

Ch6

ceres_curve_fitting

    #include <iostream>
    #include <opencv2/core/core.hpp>
    #include <ceres/ceres.h>
    using namespace std;

    // Cost function model
    struct CURVE_FITTING_COST {
        CURVE_FITTING_COST(double x, double y) : _x(x), _y(y) {}
        // Residual computation
        template <typename T>
        bool operator()(const T* const abc, // model parameters, 3-dimensional
                        T* residual) const  // residual
        {
            // y - exp(ax^2 + bx + c)
            residual[0] = T(_y) - ceres::exp(abc[0] * T(_x) * T(_x) + abc[1] * T(_x) + abc[2]);
            return true;
        }
        const double _x, _y; // x, y data
    };

    double abc[3] = {0, 0, 0}; // estimate of the a, b, c parameters
    ceres::Problem problem;
    for (int i = 0; i < N; i++) {
        problem.AddResidualBlock( // add a residual term to the problem
            // Automatic differentiation. Template parameters: cost functor type,
            // output (residual) dimension, input (parameter) dimension;
            // these must match the struct above.
            new ceres::AutoDiffCostFunction<CURVE_FITTING_COST, 1, 3>(
                new CURVE_FITTING_COST(x_data[i], y_data[i])),
            nullptr, // loss (kernel) function; none used here
            abc      // parameters to estimate
        );
    }

    // Configure the solver
    ceres::Solver::Options options;               // many more options are available
    options.linear_solver_type = ceres::DENSE_QR; // how the incremental (normal) equations are solved
    options.minimizer_progress_to_stdout = true;  // print progress to cout
    ceres::Solver::Summary summary;               // optimization report
    ceres::Solve(options, &problem, &summary);    // run the optimization
    // Print the result
    cout << summary.BriefReport() << endl;
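`AutoDiffCostFunction` works because the functor is templated on `T`: Ceres evaluates it with its `Jet` type, which carries derivatives alongside values (forward-mode automatic differentiation). A minimal illustration of the same idea, assuming a hypothetical single-derivative `Dual` type in place of Ceres' `Jet`; seeding the derivative of `a` with 1 yields ∂residual/∂a = -x² exp(ax² + bx + c):

```cpp
#include <cassert>
#include <cmath>

// Hypothetical dual number: value plus one derivative component
// (Ceres' Jet generalizes this to N derivative components)
struct Dual {
    double v; // value
    double d; // derivative w.r.t. the seeded parameter
};
Dual operator+(Dual a, Dual b) { return {a.v + b.v, a.d + b.d}; }
Dual operator-(Dual a, Dual b) { return {a.v - b.v, a.d - b.d}; }
Dual operator*(Dual a, Dual b) { return {a.v * b.v, a.d * b.v + a.v * b.d}; }
Dual exp(Dual a) { double e = std::exp(a.v); return {e, e * a.d}; }

// Same residual as CURVE_FITTING_COST, templated on the scalar type.
// T{x} builds a constant (derivative 0 for Dual).
template <typename T>
T curveResidual(const T abc[3], double x, double y) {
    using std::exp; // std::exp for double; the Dual overload is found via ADL
    T tx = T{x};
    return T{y} - exp(abc[0] * tx * tx + abc[1] * tx + abc[2]);
}
```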

g2o_curve_fitting

    // Vertex of the curve model. Template parameters: dimension and type of the optimized variable
    class CurveFittingVertex : public g2o::BaseVertex<3, Eigen::Vector3d> {
    public:
        EIGEN_MAKE_ALIGNED_OPERATOR_NEW
        virtual void setToOriginImpl() // reset
        { _estimate << 0, 0, 0; }
        virtual void oplusImpl(const double* update) // update
        { _estimate += Eigen::Vector3d(update); }
        // Saving and loading: not implemented (must still return a value)
        virtual bool read(istream& in) { return false; }
        virtual bool write(ostream& out) const { return false; }
    };

    // Error model. Template parameters: measurement dimension, measurement type, connected vertex type
    class CurveFittingEdge : public g2o::BaseUnaryEdge<1, double, CurveFittingVertex> {
    public:
        EIGEN_MAKE_ALIGNED_OPERATOR_NEW
        CurveFittingEdge(double x) : BaseUnaryEdge(), _x(x) {}
        // Compute the curve-model error
        void computeError() {
            const CurveFittingVertex* v = static_cast<const CurveFittingVertex*>(_vertices[0]);
            const Eigen::Vector3d abc = v->estimate();
            _error(0, 0) = _measurement - std::exp(abc(0, 0) * _x * _x + abc(1, 0) * _x + abc(2, 0));
        }
        virtual bool read(istream& in) { return false; }
        virtual bool write(ostream& out) const { return false; }
    public:
        double _x; // x value; the y value is _measurement
    };

    // Build the graph optimization; first set up g2o
    // Per error term: the optimized variable has dimension 3, the error has dimension 1
    typedef g2o::BlockSolver<g2o::BlockSolverTraits<3, 1>> Block;
    Block::LinearSolverType* linearSolver = new g2o::LinearSolverDense<Block::PoseMatrixType>(); // linear equation solver
    Block* solver_ptr = new Block(linearSolver); // block matrix solver
    // Iterative method: choose from GN, LM, DogLeg.
    // strcmp() (from <cstring>) returns 0 when the strings are equal.
    g2o::OptimizationAlgorithmWithHessian* solver = nullptr;
    if (argc > 2 && strcmp(argv[2], "GN") == 0) {
        cout << "using GN" << endl;
        solver = new g2o::OptimizationAlgorithmGaussNewton(solver_ptr);
    } else if (argc > 2 && strcmp(argv[2], "DogLeg") == 0) {
        cout << "using DogLeg" << endl;
        solver = new g2o::OptimizationAlgorithmDogleg(solver_ptr);
    } else {
        // "LM", no argument, or an unrecognized name: fall back to Levenberg-Marquardt
        cout << "using LM (options are 'GN', 'LM', 'DogLeg')" << endl;
        solver = new g2o::OptimizationAlgorithmLevenberg(solver_ptr);
    }
    g2o::SparseOptimizer optimizer; // graph model
    optimizer.setAlgorithm(solver); // set the solver
    optimizer.setVerbose(true);     // enable debug output

    // Add the vertex to the graph
    CurveFittingVertex* v = new CurveFittingVertex();
    v->setEstimate(Eigen::Vector3d(0, 0, 0));
    v->setId(0);
    optimizer.addVertex(v);

    // Add the edges to the graph
    for (int i = 0; i < N; i++) {
        CurveFittingEdge* edge = new CurveFittingEdge(x_data[i]);
        edge->setId(i);
        edge->setVertex(0, v);           // set the connected vertex
        edge->setMeasurement(y_data[i]); // observed value
        // information matrix: inverse of the covariance matrix
        edge->setInformation(Eigen::Matrix<double, 1, 1>::Identity() * 1 / (w_sigma * w_sigma));
        optimizer.addEdge(edge);
    }

    // Run the optimization
    cout << "start optimization" << endl;
    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
    optimizer.initializeOptimization();
    optimizer.optimize(100);
    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    cout << "solve time cost = " << time_used.count() << " seconds. " << endl;
    cout << "actual model: " << a << " " << b << " " << c << endl;

    // Print the optimized values
    Eigen::Vector3d abc_estimate = v->estimate();
    cout << "estimated model: " << abc_estimate.transpose() << endl;
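What the GN option does for this single-vertex graph can be sketched by hand. The following is a plain Gauss-Newton loop, not g2o's implementation, for the same model: identity information matrix, the normal equations JᵀJ Δx = -Jᵀe solved by Cramer's rule, and the update added directly as in `oplusImpl`. The noise-free data and the starting point (2, -1, 5) in the check are assumptions chosen for illustration; function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Solve the 3x3 system H * dx = g with Cramer's rule; returns false if H is singular
bool solve3(const double H[3][3], const double g[3], double dx[3]) {
    auto det = [](const double M[3][3]) {
        return M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
             - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
             + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]);
    };
    double D = det(H);
    if (std::fabs(D) < 1e-300) return false;
    for (int k = 0; k < 3; ++k) {
        double M[3][3];
        for (int i = 0; i < 3; ++i)
            for (int j = 0; j < 3; ++j)
                M[i][j] = (j == k) ? g[i] : H[i][j];
        dx[k] = det(M) / D;
    }
    return true;
}

// Gauss-Newton for y = exp(a x^2 + b x + c) with residual e_i = y_i - exp(a x_i^2 + b x_i + c)
void gaussNewtonCurveFit(const std::vector<double>& xs, const std::vector<double>& ys,
                         double abc[3], int max_iters = 100) {
    for (int iter = 0; iter < max_iters; ++iter) {
        double H[3][3] = {}, g[3] = {};
        for (std::size_t i = 0; i < xs.size(); ++i) {
            double x = xs[i];
            double f = std::exp(abc[0] * x * x + abc[1] * x + abc[2]);
            double e = ys[i] - f;
            double J[3] = {-x * x * f, -x * f, -f}; // de / d(a, b, c)
            for (int r = 0; r < 3; ++r) {
                for (int c = 0; c < 3; ++c) H[r][c] += J[r] * J[c];
                g[r] += -J[r] * e;
            }
        }
        double dx[3];
        if (!solve3(H, g, dx)) break;
        for (int k = 0; k < 3; ++k) abc[k] += dx[k];
        if (std::sqrt(dx[0] * dx[0] + dx[1] * dx[1] + dx[2] * dx[2]) < 1e-12) break;
    }
}
```

Unlike LM or DogLeg, this plain GN step has no damping or trust region, so a poor starting point can make it diverge.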

Ch7

feature_extraction.cpp

    std::vector<KeyPoint> keypoints_1, keypoints_2;
    Mat descriptors_1, descriptors_2;
    Ptr<FeatureDetector> detector = ORB::create();
    Ptr<DescriptorExtractor> descriptor = ORB::create();
    // Ptr<FeatureDetector> detector = FeatureDetector::create(detector_name);
    // Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create(descriptor_name);
    Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

    //-- Step 1: detect Oriented FAST corner positions
    detector->detect(img_1, keypoints_1);
    detector->detect(img_2, keypoints_2);

    //-- Step 2: compute BRIEF descriptors at the corner positions
    descriptor->compute(img_1, keypoints_1, descriptors_1);
    descriptor->compute(img_2, keypoints_2, descriptors_2);

    //-- Step 3: match the BRIEF descriptors of the two images using Hamming distance
    vector<DMatch> matches;
    // BFMatcher matcher(NORM_HAMMING);
    matcher->match(descriptors_1, descriptors_2, matches);

    //-- Step 4: filter the matched pairs
    // Minimum and maximum distance over all matches, i.e. the distances
    // of the most similar and the least similar pairs
    auto by_distance = [](const DMatch& m1, const DMatch& m2) { return m1.distance < m2.distance; };
    double min_dist = min_element(matches.begin(), matches.end(), by_distance)->distance;
    double max_dist = max_element(matches.begin(), matches.end(), by_distance)->distance;

    // A match is considered wrong when its descriptor distance exceeds twice the minimum distance.
    // Since the minimum distance can be very small, an empirical lower bound of 30 is used.
    std::vector<DMatch> good_matches;
    for (int i = 0; i < descriptors_1.rows; i++) {
        if (matches[i].distance <= max(2 * min_dist, 30.0)) {
            good_matches.push_back(matches[i]);
        }
    }
    Mat img_goodmatch;
    drawMatches(img_1, keypoints_1, img_2, keypoints_2, good_matches, img_goodmatch);
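The Hamming distance used by `BruteForce-Hamming` counts the differing bits between two binary descriptors, and the filter keeps matches whose distance is at most max(2 * min_dist, 30). A small sketch of both, using hypothetical helper names and plain byte vectors instead of `cv::Mat` descriptor rows:

```cpp
#include <algorithm>
#include <bitset>
#include <cassert>
#include <cstdint>
#include <vector>

// Hamming distance between two binary descriptors: popcount of the XOR
int hamming(const std::vector<std::uint8_t>& a, const std::vector<std::uint8_t>& b) {
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i)
        dist += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    return dist;
}

// Keep matches whose distance is at most max(2 * min_dist, 30); returns the kept indices
std::vector<std::size_t> filterMatches(const std::vector<double>& distances) {
    std::vector<std::size_t> kept;
    if (distances.empty()) return kept;
    double min_dist = *std::min_element(distances.begin(), distances.end());
    double threshold = std::max(2 * min_dist, 30.0);
    for (std::size_t i = 0; i < distances.size(); ++i)
        if (distances[i] <= threshold) kept.push_back(i);
    return kept;
}
```

With a minimum distance of 10 the floor of 30 dominates; with a minimum of 20 the threshold becomes 40.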

pose_estimation_2d2d.cpp

    void find_feature_matches(const Mat& img_1, const Mat& img_2,
                              std::vector<KeyPoint>& keypoints_1,
                              std::vector<KeyPoint>& keypoints_2,
                              std::vector<DMatch>& matches);
    Mat pose_estimation_2d2d(std::vector<KeyPoint> keypoints_1,
                             std::vector<KeyPoint> keypoints_2,
                             std::vector<DMatch> matches,
                             Mat& R, Mat& t);
    // Pixel coordinates -> normalized camera coordinates
    Point2d pixel2cam(const Point2d& p, const Mat& K);

    // In main(), after find_feature_matches(...):
    cout << "Found " << matches.size() << " matched pairs in total" << endl;
    Mat R, t;
    Mat E = pose_estimation_2d2d(keypoints_1, keypoints_2, matches, R, t);

    //-- Compare E with t^ R (they should agree up to scale)
    Mat t_x = (Mat_<double>(3, 3) <<
        0, -t.at<double>(2, 0), t.at<double>(1, 0),
        t.at<double>(2, 0), 0, -t.at<double>(0, 0),
        -t.at<double>(1, 0), t.at<double>(0, 0), 0);
    Mat txR = t_x * R;
    // Eigen::Matrix<double,Dynamic,Dynamic> eigenTxR; cv2eigen(txR, eigenTxR);
    // Eigen::Matrix<double,Dynamic,Dynamic> eigenE; cv2eigen(E, eigenE);
    // cout << "diff: " << eigenE.array() / eigenTxR.array();
    Mat ratio;
    divide(txR, E, ratio); // element-wise ratio; roughly constant if E equals t^R up to scale
    cout << "diff: " << ratio;

    //-- Verify the epipolar constraint
    Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);
    for (DMatch m : matches) {
        Point2d pt1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);
        Point2d pt2 = pixel2cam(keypoints_2[m.trainIdx].pt, K);
        Mat y1 = (Mat_<double>(3, 1) << pt1.x, pt1.y, 1);
        Mat y2 = (Mat_<double>(3, 1) << pt2.x, pt2.y, 1);
        Mat d = y2.t() * t_x * R * y1;
        cout << "epipolar constraint = " << d << endl;
    }

    Point2d pixel2cam(const Point2d& p, const Mat& K) {
        return Point2d(
            (p.x - K.at<double>(0, 2)) / K.at<double>(0, 0), // (x - cx) / fx
            (p.y - K.at<double>(1, 2)) / K.at<double>(1, 1)  // (y - cy) / fy
        );
    }

    Mat pose_estimation_2d2d(std::vector<KeyPoint> keypoints_1,
                             std::vector<KeyPoint> keypoints_2,
                             std::vector<DMatch> matches,
                             Mat& R, Mat& t) {
        // Camera intrinsics, TUM Freiburg2
        Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

        //-- Convert the matched points to vector<Point2f>
        vector<Point2f> points1;
        vector<Point2f> points2;
        for (int i = 0; i < (int)matches.size(); i++) {
            points1.push_back(keypoints_1[matches[i].queryIdx].pt);
            points2.push_back(keypoints_2[matches[i].trainIdx].pt);
        }

        //-- Compute the fundamental matrix
        Mat fundamental_matrix = findFundamentalMat(points1, points2, FM_8POINT);
        cout << "fundamental_matrix is " << endl << fundamental_matrix << endl;

        //-- Compute the essential matrix
        Point2d principal_point(325.1, 249.7); // principal point (cx, cy), TUM dataset calibration
        double focal_length = 521;             // focal length (fx, fy), TUM dataset calibration
        Mat essential_matrix = findEssentialMat(points1, points2, focal_length, principal_point);
        cout << "essential_matrix is " << endl << essential_matrix << endl;

        //-- Compute the homography (RANSAC, ransacReprojThreshold = 3)
        Mat homography_matrix = findHomography(points1, points2, RANSAC, 3);
        cout << "homography_matrix is " << endl << homography_matrix << endl;

        //-- Recover rotation and translation from the essential matrix
        recoverPose(essential_matrix, points1, points2, R, t, focal_length, principal_point);
        cout << "R is " << endl << R << endl;
        cout << "t is " << endl << t << endl;
        return essential_matrix;
    }
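The epipolar check y2ᵀ t^ R y1 = 0 can be verified numerically on a synthetic point. A sketch with a made-up pose (R a 90-degree rotation about Z, an arbitrary t) and hypothetical helper names; for a true correspondence the residual is zero up to rounding, while an unrelated point gives a clearly non-zero value:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// t^ (the hat / skew-symmetric operator)
Mat3 skew(const Vec3& t) {
    return {{{0, -t[2], t[1]}, {t[2], 0, -t[0]}, {-t[1], t[0], 0}}};
}

Mat3 mul(const Mat3& A, const Mat3& B) {
    Mat3 C{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k) C[i][j] += A[i][k] * B[k][j];
    return C;
}

// Epipolar residual y2^T E y1 for normalized homogeneous coordinates (x, y, 1)
double epipolarResidual(const Mat3& E, const Vec3& y1, const Vec3& y2) {
    double s = 0;
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) s += y2[i] * E[i][j] * y1[j];
    return s;
}
```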

pose_estimation_3d2d.cpp

    void bundleAdjustment(const vector<Point3f> points_3d,
                          const vector<Point2f> points_2d,
                          const Mat& K,
                          Mat& R, Mat& t);

    // Build the 3D points
    Mat d1 = imread(argv[3], IMREAD_UNCHANGED); // the depth image is 16-bit unsigned, single channel
    Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);
    vector<Point3f> pts_3d;
    vector<Point2f> pts_2d;
    for (DMatch m : matches) {
        ushort d = d1.ptr<unsigned short>(int(keypoints_1[m.queryIdx].pt.y))[int(keypoints_1[m.queryIdx].pt.x)];
        if (d == 0) // bad depth
            continue;
        float dd = d / 5000.0; // TUM depth images store 5000 units per meter
        Point2d p1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);
        pts_3d.push_back(Point3f(p1.x * dd, p1.y * dd, dd));
        pts_2d.push_back(keypoints_2[m.trainIdx].pt);
    }

    Mat r, t;
    // OpenCV's PnP solver; methods such as EPnP or DLS can be selected via the flags argument
    solvePnP(pts_3d, pts_2d, K, Mat(), r, t, false);
    Mat R;
    cv::Rodrigues(r, R); // r is a rotation vector; Rodrigues' formula converts it to a matrix
    bundleAdjustment(pts_3d, pts_2d, K, R, t);

    void bundleAdjustment(const vector<Point3f> points_3d,
                          const vector<Point2f> points_2d,
                          const Mat& K,
                          Mat& R, Mat& t) {
        // Initialize g2o
        typedef g2o::BlockSolver<g2o::BlockSolverTraits<6, 3>> Block; // pose dimension 6, landmark dimension 3
        Block::LinearSolverType* linearSolver = new g2o::LinearSolverCSparse<Block::PoseMatrixType>(); // linear equation solver
        Block* solver_ptr = new Block(linearSolver); // block matrix solver
        g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(solver_ptr);
        g2o::SparseOptimizer optimizer;
        optimizer.setAlgorithm(solver);

        // vertex: camera pose
        g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap();
        Eigen::Matrix3d R_mat;
        cv2eigen(R, R_mat); // from <opencv2/core/eigen.hpp>
        pose->setId(0);
        pose->setEstimate(g2o::SE3Quat(
            R_mat,
            Eigen::Vector3d(t.at<double>(0, 0), t.at<double>(1, 0), t.at<double>(2, 0))));
        optimizer.addVertex(pose);

        // vertices: landmarks
        int index = 1;
        for (const Point3f p : points_3d) {
            g2o::VertexSBAPointXYZ* point = new g2o::VertexSBAPointXYZ();
            point->setId(index++);
            point->setEstimate(Eigen::Vector3d(p.x, p.y, p.z));
            point->setMarginalized(true); // g2o requires marginalization to be set here; see Lecture 10
            optimizer.addVertex(point);
        }

        // parameter: camera intrinsics
        g2o::CameraParameters* camera = new g2o::CameraParameters(
            K.at<double>(0, 0), Eigen::Vector2d(K.at<double>(0, 2), K.at<double>(1, 2)), 0);
        camera->setId(0);
        optimizer.addParameter(camera);

        // edges
        index = 1;
        for (const Point2f p : points_2d) {
            g2o::EdgeProjectXYZ2UV* edge = new g2o::EdgeProjectXYZ2UV();
            edge->setId(index);
            edge->setVertex(0, dynamic_cast<g2o::VertexSBAPointXYZ*>(optimizer.vertex(index)));
            edge->setVertex(1, pose);
            edge->setMeasurement(Eigen::Vector2d(p.x, p.y));
            edge->setParameterId(0, 0);
            edge->setInformation(Eigen::Matrix2d::Identity());
            optimizer.addEdge(edge);
            index++;
        }

        chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
        optimizer.setVerbose(true);
        optimizer.initializeOptimization();
        optimizer.optimize(100);
        chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
        chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
        cout << "optimization costs time: " << time_used.count() << " seconds." << endl;

        cout << endl << "after optimization:" << endl;
        cout << "T=" << endl << Eigen::Isometry3d(pose->estimate()).matrix() << endl;
    }
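The quantity the `EdgeProjectXYZ2UV` edges penalize is the reprojection error. A sketch of it in plain C++, assuming a pinhole model with separate fx and fy (the `CameraParameters` above uses a single focal length) and hypothetical function names; BA minimizes the sum of these squared errors over all edges:

```cpp
#include <cassert>
#include <cmath>

// Pinhole projection of a camera-frame point: u = fx X / Z + cx, v = fy Y / Z + cy
void project(double X, double Y, double Z, double fx, double fy, double cx, double cy,
             double& u, double& v) {
    u = fx * X / Z + cx;
    v = fy * Y / Z + cy;
}

// Squared reprojection error of world point Pw under pose (R, t), i.e. Pc = R * Pw + t,
// against an observed pixel (u_obs, v_obs)
double reprojErrorSq(const double R[3][3], const double t[3], const double Pw[3],
                     double u_obs, double v_obs,
                     double fx, double fy, double cx, double cy) {
    double Pc[3];
    for (int i = 0; i < 3; ++i)
        Pc[i] = R[i][0] * Pw[0] + R[i][1] * Pw[1] + R[i][2] * Pw[2] + t[i];
    double u, v;
    project(Pc[0], Pc[1], Pc[2], fx, fy, cx, cy, u, v);
    return (u - u_obs) * (u - u_obs) + (v - v_obs) * (v - v_obs);
}
```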

pose_estimation_3d3d.cpp

    void pose_estimation_3d3d(const vector<Point3f>& pts1,
                              const vector<Point3f>& pts2,
                              Mat& R, Mat& t);
    void bundleAdjustment(const vector<Point3f>& pts1,
                          const vector<Point3f>& pts2,
                          Mat& R, Mat& t);

    // g2o edge: pose-only 3D-3D error
    class EdgeProjectXYZRGBDPoseOnly : public g2o::BaseUnaryEdge<3, Eigen::Vector3d, g2o::VertexSE3Expmap> {
    public:
        EIGEN_MAKE_ALIGNED_OPERATOR_NEW;
        EdgeProjectXYZRGBDPoseOnly(const Eigen::Vector3d& point) : _point(point) {}

        virtual void computeError() {
            const g2o::VertexSE3Expmap* pose = static_cast<const g2o::VertexSE3Expmap*>(_vertices[0]);
            // measurement is p, point is p'
            _error = _measurement - pose->estimate().map(_point);
        }

        virtual void linearizeOplus() {
            g2o::VertexSE3Expmap* pose = static_cast<g2o::VertexSE3Expmap*>(_vertices[0]);
            g2o::SE3Quat T(pose->estimate());
            Eigen::Vector3d xyz_trans = T.map(_point);
            double x = xyz_trans[0];
            double y = xyz_trans[1];
            double z = xyz_trans[2];

            // d(error)/d(delta) = [ (Tp')^ , -I ] in g2o's (rotation, translation) ordering
            _jacobianOplusXi(0, 0) = 0;
            _jacobianOplusXi(0, 1) = -z;
            _jacobianOplusXi(0, 2) = y;
            _jacobianOplusXi(0, 3) = -1;
            _jacobianOplusXi(0, 4) = 0;
            _jacobianOplusXi(0, 5) = 0;

            _jacobianOplusXi(1, 0) = z;
            _jacobianOplusXi(1, 1) = 0;
            _jacobianOplusXi(1, 2) = -x;
            _jacobianOplusXi(1, 3) = 0;
            _jacobianOplusXi(1, 4) = -1;
            _jacobianOplusXi(1, 5) = 0;

            _jacobianOplusXi(2, 0) = -y;
            _jacobianOplusXi(2, 1) = x;
            _jacobianOplusXi(2, 2) = 0;
            _jacobianOplusXi(2, 3) = 0;
            _jacobianOplusXi(2, 4) = 0;
            _jacobianOplusXi(2, 5) = -1;
        }

        bool read(istream& in) { return false; }
        bool write(ostream& out) const { return false; }
    protected:
        Eigen::Vector3d _point;
    };

    // In main(): build the 3D point pairs (depth1/depth2 are the 16-bit depth images)
    vector<Point3f> pts1, pts2;
    for (DMatch m : matches) {
        ushort d1 = depth1.ptr<unsigned short>(int(keypoints_1[m.queryIdx].pt.y))[int(keypoints_1[m.queryIdx].pt.x)];
        ushort d2 = depth2.ptr<unsigned short>(int(keypoints_2[m.trainIdx].pt.y))[int(keypoints_2[m.trainIdx].pt.x)];
        if (d1 == 0 || d2 == 0) // bad depth
            continue;
        Point2d p1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);
        Point2d p2 = pixel2cam(keypoints_2[m.trainIdx].pt, K);
        float dd1 = float(d1) / 5000.0;
        float dd2 = float(d2) / 5000.0;
        pts1.push_back(Point3f(p1.x * dd1, p1.y * dd1, dd1));
        pts2.push_back(Point3f(p2.x * dd2, p2.y * dd2, dd2));
    }

    Mat R, t;
    pose_estimation_3d3d(pts1, pts2, R, t);
    cout << "ICP via SVD results: " << endl;
    cout << "R = " << R << endl;
    cout << "t = " << t << endl;
    cout << "R_inv = " << R.t() << endl;
    cout << "t_inv = " << -R.t() * t << endl;

    cout << "calling bundle adjustment" << endl;
    // bundleAdjustment(pts1, pts2, R, t);

    void pose_estimation_3d3d(const vector<Point3f>& pts1,
                              const vector<Point3f>& pts2,
                              Mat& R, Mat& t) {
        Point3f p1, p2; // centers of mass
        int N = pts1.size();
        for (int i = 0; i < N; i++) { p1 += pts1[i]; p2 += pts2[i]; }
        p1 = Point3f(Vec3f(p1) / N);
        p2 = Point3f(Vec3f(p2) / N);
        vector<Point3f> q1(N), q2(N); // remove the centers
        for (int i = 0; i < N; i++) { q1[i] = pts1[i] - p1; q2[i] = pts2[i] - p2; }

        // compute W = sum of q1 * q2^T
        Eigen::Matrix3d W = Eigen::Matrix3d::Zero();
        for (int i = 0; i < N; i++) {
            W += Eigen::Vector3d(q1[i].x, q1[i].y, q1[i].z) * Eigen::Vector3d(q2[i].x, q2[i].y, q2[i].z).transpose();
        }
        cout << "W=" << W << endl;

        // SVD on W
        Eigen::JacobiSVD<Eigen::Matrix3d> svd(W, Eigen::ComputeFullU | Eigen::ComputeFullV);
        Eigen::Matrix3d U = svd.matrixU();
        Eigen::Matrix3d V = svd.matrixV();
        cout << "U=" << U << endl;
        cout << "V=" << V << endl;

        Eigen::Matrix3d R_ = U * (V.transpose());
        // If U V^T is a reflection (det = -1), flip the direction of the smallest singular value
        if (R_.determinant() < 0) {
            Eigen::Matrix3d S = Eigen::Matrix3d::Identity();
            S(2, 2) = -1;
            R_ = U * S * V.transpose();
        }
        Eigen::Vector3d t_ = Eigen::Vector3d(p1.x, p1.y, p1.z) - R_ * Eigen::Vector3d(p2.x, p2.y, p2.z);

        // convert to cv::Mat (from <opencv2/core/eigen.hpp>)
        eigen2cv(R_, R);
        eigen2cv(t_, t);
    }

    void bundleAdjustment(const vector<Point3f>& pts1,
                          const vector<Point3f>& pts2,
                          Mat& R, Mat& t) {
        // Initialize g2o
        typedef g2o::BlockSolver<g2o::BlockSolverTraits<6, 3>> Block; // pose dimension 6, landmark dimension 3
        Block::LinearSolverType* linearSolver = new g2o::LinearSolverEigen<Block::PoseMatrixType>(); // linear equation solver
        Block* solver_ptr = new Block(linearSolver); // block matrix solver
        g2o::OptimizationAlgorithmGaussNewton* solver = new g2o::OptimizationAlgorithmGaussNewton(solver_ptr);
        g2o::SparseOptimizer optimizer;
        optimizer.setAlgorithm(solver);

        // vertex: camera pose, initialized to the identity
        g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap();
        pose->setId(0);
        pose->setEstimate(g2o::SE3Quat(Eigen::Matrix3d::Identity(), Eigen::Vector3d(0, 0, 0)));
        optimizer.addVertex(pose);

        // edges
        int index = 1;
        vector<EdgeProjectXYZRGBDPoseOnly*> edges;
        for (size_t i = 0; i < pts1.size(); i++) {
            EdgeProjectXYZRGBDPoseOnly* edge = new EdgeProjectXYZRGBDPoseOnly(
                Eigen::Vector3d(pts2[i].x, pts2[i].y, pts2[i].z));
            edge->setId(index);
            edge->setVertex(0, dynamic_cast<g2o::VertexSE3Expmap*>(pose));
            edge->setMeasurement(Eigen::Vector3d(pts1[i].x, pts1[i].y, pts1[i].z));
            edge->setInformation(Eigen::Matrix3d::Identity() * 1e4);
            optimizer.addEdge(edge);
            index++;
            edges.push_back(edge);
        }

        chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
        optimizer.setVerbose(true);
        optimizer.initializeOptimization();
        optimizer.optimize(10);
        chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
        chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
        cout << "optimization costs time: " << time_used.count() << " seconds." << endl;

        cout << endl << "after optimization:" << endl;
        cout << "T=" << endl << Eigen::Isometry3d(pose->estimate()).matrix() << endl;
    }
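The SVD-based ICP above rests on two steps: after removing the centroids, W = Σ q1 q2ᵀ carries the rotation, and once R is known the translation follows as t = p1 - R p2. A sketch of those two steps (the SVD itself is omitted) on `std::array`, with hypothetical helper names and a synthetic point set where pts1 = R pts2 + t; for this particular set Σ q2 q2ᵀ = I, so W comes out equal to R:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

Vec3 centroid(const std::vector<Vec3>& pts) {
    Vec3 c{0, 0, 0};
    for (const Vec3& p : pts)
        for (int i = 0; i < 3; ++i) c[i] += p[i];
    for (int i = 0; i < 3; ++i) c[i] /= pts.size();
    return c;
}

// W = sum_k q1_k * q2_k^T with q = p - centroid
Mat3 crossCovariance(const std::vector<Vec3>& pts1, const std::vector<Vec3>& pts2) {
    Vec3 c1 = centroid(pts1), c2 = centroid(pts2);
    Mat3 W{};
    for (std::size_t k = 0; k < pts1.size(); ++k)
        for (int i = 0; i < 3; ++i)
            for (int j = 0; j < 3; ++j)
                W[i][j] += (pts1[k][i] - c1[i]) * (pts2[k][j] - c2[j]);
    return W;
}

// Once R is known: t = p1 - R * p2 (p1, p2 are the centroids)
Vec3 translationFromCentroids(const Mat3& R, const Vec3& c1, const Vec3& c2) {
    Vec3 t{};
    for (int i = 0; i < 3; ++i)
        t[i] = c1[i] - (R[i][0] * c2[0] + R[i][1] * c2[1] + R[i][2] * c2[2]);
    return t;
}
```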

triangulation.cpp

    void triangulation(const vector<KeyPoint>& keypoint_1,
                       const vector<KeyPoint>& keypoint_2,
                       const std::vector<DMatch>& matches,
                       const Mat& R, const Mat& t,
                       vector<Point3d>& points);

    // In main(), after feature matching:
    Mat R, t;
    pose_estimation_2d2d(keypoints_1, keypoints_2, matches, R, t);

    //-- Triangulate
    vector<Point3d> points;
    triangulation(keypoints_1, keypoints_2, matches, R, t, points);

    //-- Check the reprojection of the triangulated points against the feature points
    Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);
    for (size_t i = 0; i < matches.size(); i++) {
        Point2d pt1_cam = pixel2cam(keypoints_1[matches[i].queryIdx].pt, K);
        Point2d pt1_cam_3d(points[i].x / points[i].z, points[i].y / points[i].z);
        cout << "point in the first camera frame: " << pt1_cam << endl;
        cout << "point projected from 3D " << pt1_cam_3d << ", d=" << points[i].z << endl;

        // second image
        Point2f pt2_cam = pixel2cam(keypoints_2[matches[i].trainIdx].pt, K);
        Mat pt2_trans = R * (Mat_<double>(3, 1) << points[i].x, points[i].y, points[i].z) + t;
        pt2_trans /= pt2_trans.at<double>(2, 0);
        cout << "point in the second camera frame: " << pt2_cam << endl;
        cout << "point reprojected from second frame: " << pt2_trans.t() << endl;
        cout << endl;
    }

    void pose_estimation_2d2d(const std::vector<KeyPoint>& keypoints_1,
                              const std::vector<KeyPoint>& keypoints_2,
                              const std::vector<DMatch>& matches,
                              Mat& R, Mat& t) {
        // Camera intrinsics, TUM Freiburg2
        Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

        //-- Convert the matched points to vector<Point2f>
        vector<Point2f> points1;
        vector<Point2f> points2;
        for (int i = 0; i < (int)matches.size(); i++) {
            points1.push_back(keypoints_1[matches[i].queryIdx].pt);
            points2.push_back(keypoints_2[matches[i].trainIdx].pt);
        }

        //-- Compute the fundamental matrix
        Mat fundamental_matrix = findFundamentalMat(points1, points2, FM_8POINT);
        cout << "fundamental_matrix is " << endl << fundamental_matrix << endl;

        //-- Compute the essential matrix
        Point2d principal_point(325.1, 249.7); // camera principal point, TUM dataset calibration
        int focal_length = 521;                // camera focal length, TUM dataset calibration
        Mat essential_matrix = findEssentialMat(points1, points2, focal_length, principal_point);
        cout << "essential_matrix is " << endl << essential_matrix << endl;

        //-- Compute the homography
        Mat homography_matrix = findHomography(points1, points2, RANSAC, 3);
        cout << "homography_matrix is " << endl << homography_matrix << endl;

        //-- Recover rotation and translation from the essential matrix
        recoverPose(essential_matrix, points1, points2, R, t, focal_length, principal_point);
        cout << "R is " << endl << R << endl;
        cout << "t is " << endl << t << endl;
    }

    void triangulation(const vector<KeyPoint>& keypoint_1,
                       const vector<KeyPoint>& keypoint_2,
                       const std::vector<DMatch>& matches,
                       const Mat& R, const Mat& t,
                       vector<Point3d>& points) {
        // Projection matrices: first camera at the origin, second camera at [R | t]
        Mat T1 = (Mat_<float>(3, 4) <<
            1, 0, 0, 0,
            0, 1, 0, 0,
            0, 0, 1, 0);
        Mat T2 = (Mat_<float>(3, 4) <<
            R.at<double>(0, 0), R.at<double>(0, 1), R.at<double>(0, 2), t.at<double>(0, 0),
            R.at<double>(1, 0), R.at<double>(1, 1), R.at<double>(1, 2), t.at<double>(1, 0),
            R.at<double>(2, 0), R.at<double>(2, 1), R.at<double>(2, 2), t.at<double>(2, 0));

        Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);
        vector<Point2f> pts_1, pts_2;
        for (DMatch m : matches) {
            // convert pixel coordinates to normalized camera coordinates
            pts_1.push_back(pixel2cam(keypoint_1[m.queryIdx].pt, K));
            pts_2.push_back(pixel2cam(keypoint_2[m.trainIdx].pt, K));
        }

        Mat pts_4d;
        cv::triangulatePoints(T1, T2, pts_1, pts_2, pts_4d);

        // convert to non-homogeneous coordinates
        for (int i = 0; i < pts_4d.cols; i++) {
            Mat x = pts_4d.col(i);
            x /= x.at<float>(3, 0); // normalize
            Point3d p(x.at<float>(0, 0), x.at<float>(1, 0), x.at<float>(2, 0));
            points.push_back(p);
        }
    }
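`cv::triangulatePoints` solves a linear system built from both projection matrices. An alternative worth comparing is to recover the depth directly from s2 x2 = s1 R x1 + t: left-multiplying by x2^ eliminates s2 and leaves the single unknown s1, solved here in the least-squares sense. A sketch of that alternative with a hypothetical function name and a synthetic point at depth 4:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}

double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

// Depth s1 of the point in camera 1, given normalized coordinates x1, x2 (third entry 1)
// and the relative pose (R, t) with X2 = R * X1 + t.
// s1 * (x2^ R x1) + x2^ t = 0  =>  s1 = -(a . b) / (a . a)
double depthInFirstCamera(const double R[3][3], const Vec3& t, const Vec3& x1, const Vec3& x2) {
    Vec3 Rx1{};
    for (int i = 0; i < 3; ++i) Rx1[i] = R[i][0] * x1[0] + R[i][1] * x1[1] + R[i][2] * x1[2];
    Vec3 a = cross(x2, Rx1); // x2^ R x1
    Vec3 b = cross(x2, t);   // x2^ t
    return -dot(a, b) / dot(a, a);
}
```

The division fails (a ≈ 0) when there is no parallax, e.g. pure rotation, which is the same degeneracy that makes triangulation from pure rotation impossible.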

Ch8

directmethod

opticalflow

sparse:

// 一次测量的值,包括一个世界坐标系下三维点与一个灰度值struct Measurement {Measurement ( Eigen::Vector3d p, float g ) : pos_world ( p ), grayscale ( g ) {}Eigen::Vector3d pos_world;float grayscale;};inline Eigen::Vector3d project2Dto3D ( int x, int y, int d,float fx, float fy, float cx, float cy,float scale) {float zz = float ( d ) /scale;float xx = zz* ( x-cx ) /fx;float yy = zz* ( y-cy ) /fy;return Eigen::Vector3d ( xx, yy, zz );}inline Eigen::Vector2d project3Dto2D ( float x, float y, float z,float fx, float fy, float cx, float cy) {float u = fx*x/z+cx;float v = fy*y/z+cy;return Eigen::Vector2d ( u,v );}// 直接法估计位姿// 输入:测量值(空间点的灰度),新的灰度图,相机内参; 输出:相机位姿// 返回:true为成功,false失败bool poseEstimationDirect ( const vector<Measurement>& measurements,cv::Mat* gray,Eigen::Matrix3f& intrinsics,Eigen::Isometry3d& Tcw );// project a 3d point into an image plane, the error is photometric error// an unary edge with one vertex SE3Expmap (the pose of camera)class EdgeSE3ProjectDirect: public BaseUnaryEdge< 1, double, VertexSE3Expmap>{public:EIGEN_MAKE_ALIGNED_OPERATOR_NEWEdgeSE3ProjectDirect() {}EdgeSE3ProjectDirect ( Eigen::Vector3d point, float fx, float fy, float cx, float cy, cv::Mat* image ): x_world_ ( point ), fx_ ( fx ), fy_ ( fy ), cx_ ( cx ), cy_ ( cy ), image_ ( image ){}virtual void computeError(){const VertexSE3Expmap* v =static_cast<const VertexSE3Expmap*> ( _vertices[0] );Eigen::Vector3d x_local = v->estimate().map ( x_world_ );float x = x_local[0]*fx_/x_local[2] + cx_;float y = x_local[1]*fy_/x_local[2] + cy_;// check x,y is in the imageif ( x-4<0 || ( x+4 ) >image_->cols || ( y-4 ) <0 || ( y+4 ) >image_->rows ){_error ( 0,0 ) = 0.0;this->setLevel ( 1 );}else{_error ( 0,0 ) = getPixelValue ( x,y ) - _measurement;}}// plus in manifoldvirtual void linearizeOplus( ){if ( level() == 1 ) {_jacobianOplusXi = Eigen::Matrix<double, 1, 6>::Zero();return;}VertexSE3Expmap* vtx = static_cast<VertexSE3Expmap*> ( _vertices[0] );Eigen::Vector3d xyz_trans = vtx->estimate().map ( x_world_ ); // q in bookdouble 
x = xyz_trans[0];double y = xyz_trans[1];double invz = 1.0/xyz_trans[2];double invz_2 = invz*invz;float u = x*fx_*invz + cx_;float v = y*fy_*invz + cy_;// jacobian from se3 to u,v// NOTE that in g2o the Lie algebra is (\omega, \epsilon), where \omega is so(3) and \epsilon the translation// 书上 195 页公式, omega 和 epsilon 顺序和书上不同Eigen::Matrix<double, 2, 6> jacobian_uv_ksai;jacobian_uv_ksai ( 0,0 ) = - x*y*invz_2 *fx_;jacobian_uv_ksai ( 0,1 ) = ( 1+ ( x*x*invz_2 ) ) *fx_;jacobian_uv_ksai ( 0,2 ) = - y*invz *fx_;jacobian_uv_ksai ( 0,3 ) = invz *fx_;jacobian_uv_ksai ( 0,4 ) = 0;jacobian_uv_ksai ( 0,5 ) = -x*invz_2 *fx_;jacobian_uv_ksai ( 1,0 ) = - ( 1+y*y*invz_2 ) *fy_;jacobian_uv_ksai ( 1,1 ) = x*y*invz_2 *fy_;jacobian_uv_ksai ( 1,2 ) = x*invz *fy_;jacobian_uv_ksai ( 1,3 ) = 0;jacobian_uv_ksai ( 1,4 ) = invz *fy_;jacobian_uv_ksai ( 1,5 ) = -y*invz_2 *fy_;Eigen::Matrix<double, 1, 2> jacobian_pixel_uv;jacobian_pixel_uv ( 0,0 ) = ( getPixelValue ( u+1,v )-getPixelValue ( u-1,v ) ) /2;jacobian_pixel_uv ( 0,1 ) = ( getPixelValue ( u,v+1 )-getPixelValue ( u,v-1 ) ) /2;_jacobianOplusXi = jacobian_pixel_uv*jacobian_uv_ksai;}// dummy read and write functions because we don't care...virtual bool read ( std::istream& in ) {}virtual bool write ( std::ostream& out ) const {}protected:// get a gray scale value from reference image (bilinear interpolated)inline float getPixelValue ( float x, float y ){uchar* data = & image_->data[ int ( y ) * image_->step + int ( x ) ];float xx = x - floor ( x );float yy = y - floor ( y );return float (( 1-xx ) * ( 1-yy ) * data[0] +xx* ( 1-yy ) * data[1] +( 1-xx ) *yy*data[ image_->step ] +xx*yy*data[image_->step+1]);}public:Eigen::Vector3d x_world_; // 3D point in world framefloat cx_=0, cy_=0, fx_=0, fy_=0; // Camera intrinsicscv::Mat* image_=nullptr; // reference image};int main ( int argc, char** argv ){if ( argc != 2 ) {cout << "usage: useLK path_to_dataset" << endl;return 1;}srand ( ( unsigned int ) time ( 0 ) );string path_to_dataset = 
argv[1];
    string associate_file = path_to_dataset + "/associate.txt";
    ifstream fin ( associate_file );
    string rgb_file, depth_file, time_rgb, time_depth;
    cv::Mat color, depth, gray;
    vector<Measurement> measurements;
    // Camera intrinsics
    float cx = 325.5;
    float cy = 253.5;
    float fx = 518.0;
    float fy = 519.0;
    float depth_scale = 1000.0;
    Eigen::Matrix3f K;
    K<<fx,0.f,cx,0.f,fy,cy,0.f,0.f,1.0f;
    Eigen::Isometry3d Tcw = Eigen::Isometry3d::Identity();
    cv::Mat prev_color;
    // Use the first image as the reference and run the direct method between each later image and the reference
    for ( int index=0; index<10; index++ ) {
        cout<<"*********** loop "<<index<<" ************"<<endl;
        // Example line of associate.txt:
        // 1305031473.127744 rgb/1305031473.127744.png 1305031473.124072 depth/1305031473.124072.png
        fin>>time_rgb>>rgb_file>>time_depth>>depth_file;
        color = cv::imread ( path_to_dataset+"/"+rgb_file );
        depth = cv::imread ( path_to_dataset+"/"+depth_file, -1 );
        if ( color.data==nullptr || depth.data==nullptr )
            continue;
        cv::cvtColor ( color, gray, cv::COLOR_BGR2GRAY );
        if ( index ==0 ) {
            // Extract FAST keypoints on the first frame
            vector<cv::KeyPoint> keypoints;
            cv::Ptr<cv::FastFeatureDetector> detector = cv::FastFeatureDetector::create();
            detector->detect ( color, keypoints );
            for ( auto kp:keypoints ) {
                // Discard points close to the image border
                if ( kp.pt.x < 20 || kp.pt.y < 20 || ( kp.pt.x+20 ) >color.cols || ( kp.pt.y+20 ) >color.rows )
                    continue;
                ushort d = depth.ptr<ushort> ( cvRound ( kp.pt.y ) ) [ cvRound ( kp.pt.x ) ];
                if ( d==0 )
                    continue;
                Eigen::Vector3d p3d = project2Dto3D ( kp.pt.x, kp.pt.y, d, fx, fy, cx, cy, depth_scale );
                float grayscale = float ( gray.ptr<uchar> ( cvRound ( kp.pt.y ) ) [ cvRound ( kp.pt.x ) ] );
                measurements.push_back ( Measurement ( p3d, grayscale ) );
            }
            prev_color = color.clone();
            continue;
        }
        // Estimate the camera motion with the direct method
        chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
        poseEstimationDirect ( measurements, &gray, K, Tcw );
        chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
        chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>> ( t2-t1 );
        cout<<"direct method costs time: "<<time_used.count() <<" seconds."<<endl;
        cout<<"Tcw="<<Tcw.matrix() <<endl;
        // plot the feature points
        cv::Mat img_show ( color.rows*2, color.cols, CV_8UC3 );
        prev_color.copyTo ( img_show ( cv::Rect ( 0,0,color.cols, color.rows ) ) );
        color.copyTo ( img_show ( cv::Rect ( 0,color.rows,color.cols, color.rows ) ) );
        for ( Measurement m:measurements ) {
            if ( rand() > RAND_MAX/5 ) continue;
            Eigen::Vector3d p = m.pos_world;
            Eigen::Vector2d pixel_prev = project3Dto2D ( p ( 0,0 ), p ( 1,0 ), p ( 2,0 ), fx, fy, cx, cy );
            Eigen::Vector3d p2 = Tcw*m.pos_world;
            Eigen::Vector2d pixel_now = project3Dto2D ( p2 ( 0,0 ), p2 ( 1,0 ), p2 ( 2,0 ), fx, fy, cx, cy );
            if ( pixel_now(0,0)<0 || pixel_now(0,0)>=color.cols || pixel_now(1,0)<0 || pixel_now(1,0)>=color.rows )
                continue;
            float b = 255*float ( rand() ) /RAND_MAX;
            float g = 255*float ( rand() ) /RAND_MAX;
            float r = 255*float ( rand() ) /RAND_MAX;
            cv::circle ( img_show, cv::Point2d ( pixel_prev ( 0,0 ), pixel_prev ( 1,0 ) ), 8, cv::Scalar ( b,g,r ), 2 );
            cv::circle ( img_show, cv::Point2d ( pixel_now ( 0,0 ), pixel_now ( 1,0 ) +color.rows ), 8, cv::Scalar ( b,g,r ), 2 );
            cv::line ( img_show, cv::Point2d ( pixel_prev ( 0,0 ), pixel_prev ( 1,0 ) ), cv::Point2d ( pixel_now ( 0,0 ), pixel_now ( 1,0 ) +color.rows ), cv::Scalar ( b,g,r ), 1 );
        }
        cv::imshow ( "result", img_show );
        cv::waitKey ( 0 );
    }
    return 0;
}

bool poseEstimationDirect ( const vector< Measurement >& measurements, cv::Mat* gray, Eigen::Matrix3f& K, Eigen::Isometry3d& Tcw )
{
    // Set up g2o
    typedef g2o::BlockSolver<g2o::BlockSolverTraits<6,1>> DirectBlock; // the pose being solved for is a 6x1 vector
    // Older g2o API with raw pointers; newer g2o releases expect std::unique_ptr arguments here
    DirectBlock::LinearSolverType* linearSolver = new g2o::LinearSolverDense< DirectBlock::PoseMatrixType > ();
    DirectBlock* solver_ptr = new DirectBlock ( linearSolver );
    // g2o::OptimizationAlgorithmGaussNewton* solver = new g2o::OptimizationAlgorithmGaussNewton( solver_ptr ); // G-N
    g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( solver_ptr ); // L-M
    g2o::SparseOptimizer optimizer;
    optimizer.setAlgorithm ( solver );
    optimizer.setVerbose( true );
    g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap();
    pose->setEstimate ( g2o::SE3Quat ( Tcw.rotation(), Tcw.translation() ) );
    pose->setId ( 0 );
    optimizer.addVertex ( pose );
    // Add one photometric-error edge per measurement
    int id=1;
    for ( Measurement m: measurements )
    {
        EdgeSE3ProjectDirect* edge = new EdgeSE3ProjectDirect (
            m.pos_world,
            K ( 0,0 ), K ( 1,1 ), K ( 0,2 ), K ( 1,2 ), gray
        );
        edge->setVertex ( 0, pose );
        edge->setMeasurement ( m.grayscale );
        edge->setInformation ( Eigen::Matrix<double,1,1>::Identity() );
        edge->setId ( id++ );
        optimizer.addEdge ( edge );
    }
    cout<<"edges in graph: "<<optimizer.edges().size() <<endl;
    optimizer.initializeOptimization();
    optimizer.optimize ( 30 );
    Tcw = pose->estimate();
    return true; // declared bool, so a value must be returned
}

L2

```cpp
#include <iostream>
#include <cmath>
#include <Eigen/Core>
#include <Eigen/Geometry>
using namespace std;

int main ( int argc, char** argv )
{
    // Quaterniond(w, x, y, z): in this constructor the real part w comes first
    Eigen::Quaterniond q1 = Eigen::Quaterniond (0.35,0.2,0.3,0.1); q1.normalize();
    Eigen::Quaterniond q2 = Eigen::Quaterniond (-0.5,0.4,-0.1,0.2); q2.normalize();
    // T1_cw, T2_cw: world -> camera transforms of the two cameras
    Eigen::Isometry3d T1_cw = Eigen::Isometry3d::Identity();
    T1_cw.rotate ( q1 );
    T1_cw.pretranslate ( Eigen::Vector3d ( 0.3,0.1,0.1 ) );
    Eigen::Isometry3d T2_cw = Eigen::Isometry3d::Identity();
    T2_cw.rotate ( q2 );
    T2_cw.pretranslate ( Eigen::Vector3d ( -0.1,0.5,0.3 ) );
    // camera 1 -> world (T1_cw^-1) -> camera 2 (T2_cw)
    cout << "Answer:\n" << T2_cw * T1_cw.inverse() * Eigen::Vector3d (0.5, 0.0, 0.2) << endl;
    return 0;
}
```
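Why the answer is `T2_cw * T1_cw.inverse() * p`: assuming the exercise gives the point in camera 1's frame, and reading $T_{cw}$ as the world-to-camera transform (which is how the program uses T1_cw and T2_cw), the point is first taken back to the world frame and then into camera 2:

```latex
\mathbf{p}_w = T_{1,cw}^{-1}\,\mathbf{p}_1, \qquad
\mathbf{p}_2 = T_{2,cw}\,\mathbf{p}_w = T_{2,cw}\,T_{1,cw}^{-1}\,\mathbf{p}_1,
\qquad \mathbf{p}_1 = (0.5,\ 0,\ 0.2)^T
```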

Reading the Eigen documentation

Linear algebra and decompositions

http://eigen.tuxfamily.org/dox/group__TutorialLinearAlgebra.html

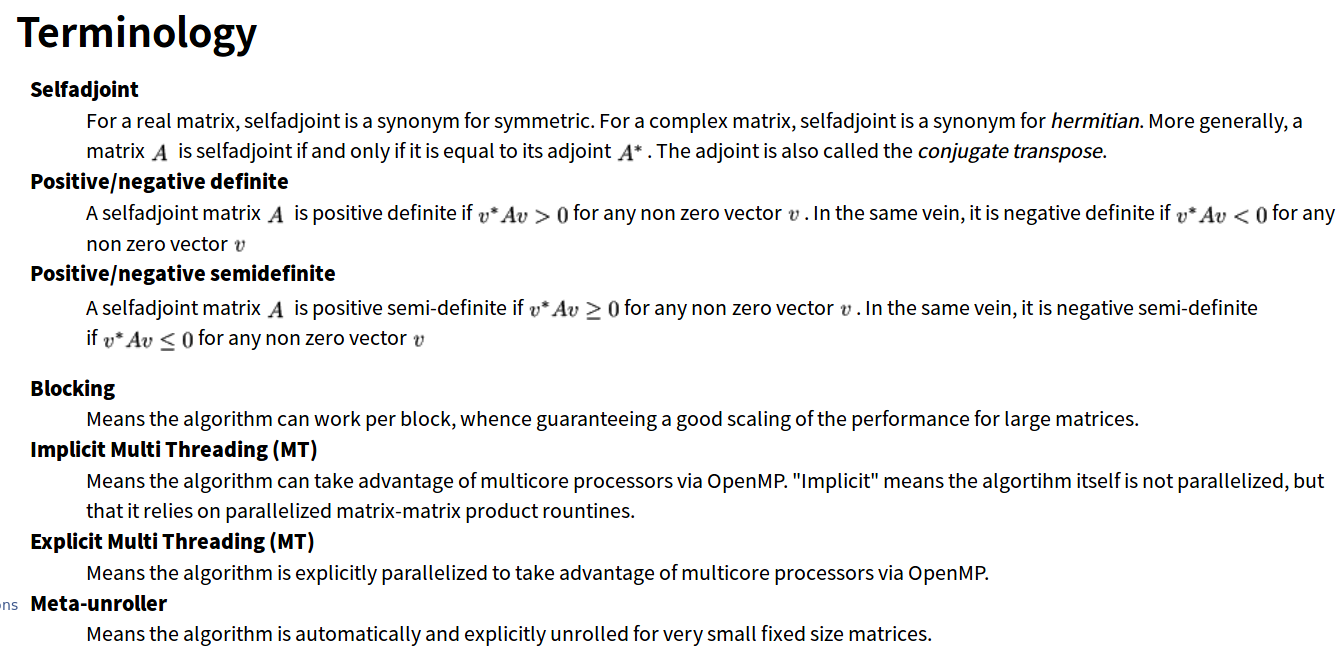

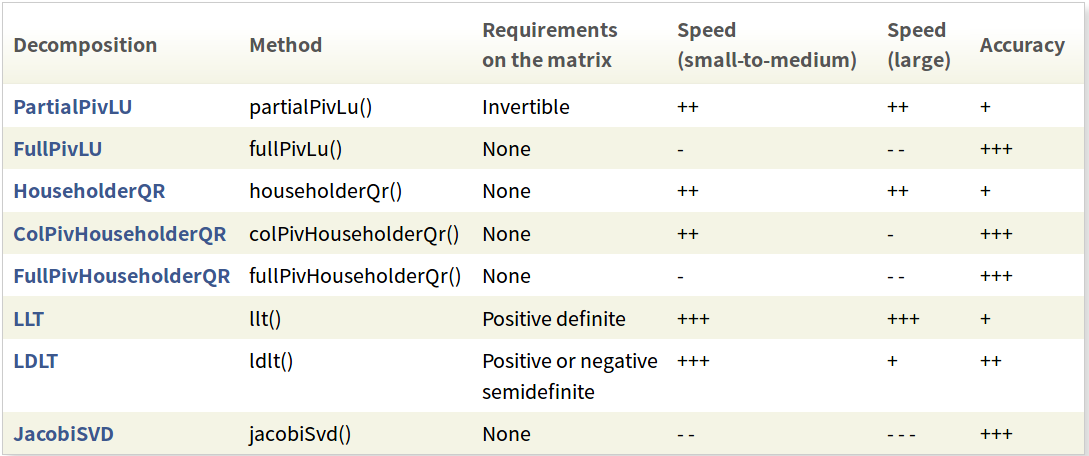

This page explains how to solve linear systems, compute various decompositions such as LU, QR, SVD, eigendecompositions... After reading this page, don't miss our catalogue of dense matrix decompositions.

The most general-purpose choice is colPivHouseholderQr(): a compromise between speed and accuracy.

If the matrix is positive definite, llt() or ldlt() can be used.

For example, if your matrix is positive definite, the above table says that a very good choice is then the LLT or LDLT decomposition.

It may be worth checking whether the computed solution is actually correct:

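```cpp
#include <iostream>
#include <Eigen/Dense>
using namespace std;
using Eigen::MatrixXd;

int main()
{
    MatrixXd A = MatrixXd::Random(100,100);
    MatrixXd b = MatrixXd::Random(100,50);
    MatrixXd x = A.fullPivLu().solve(b);
    double relative_error = (A*x - b).norm() / b.norm(); // norm() is the L2 norm (Frobenius norm for a matrix)
    cout << "The relative error is:\n" << relative_error << endl;
}
```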

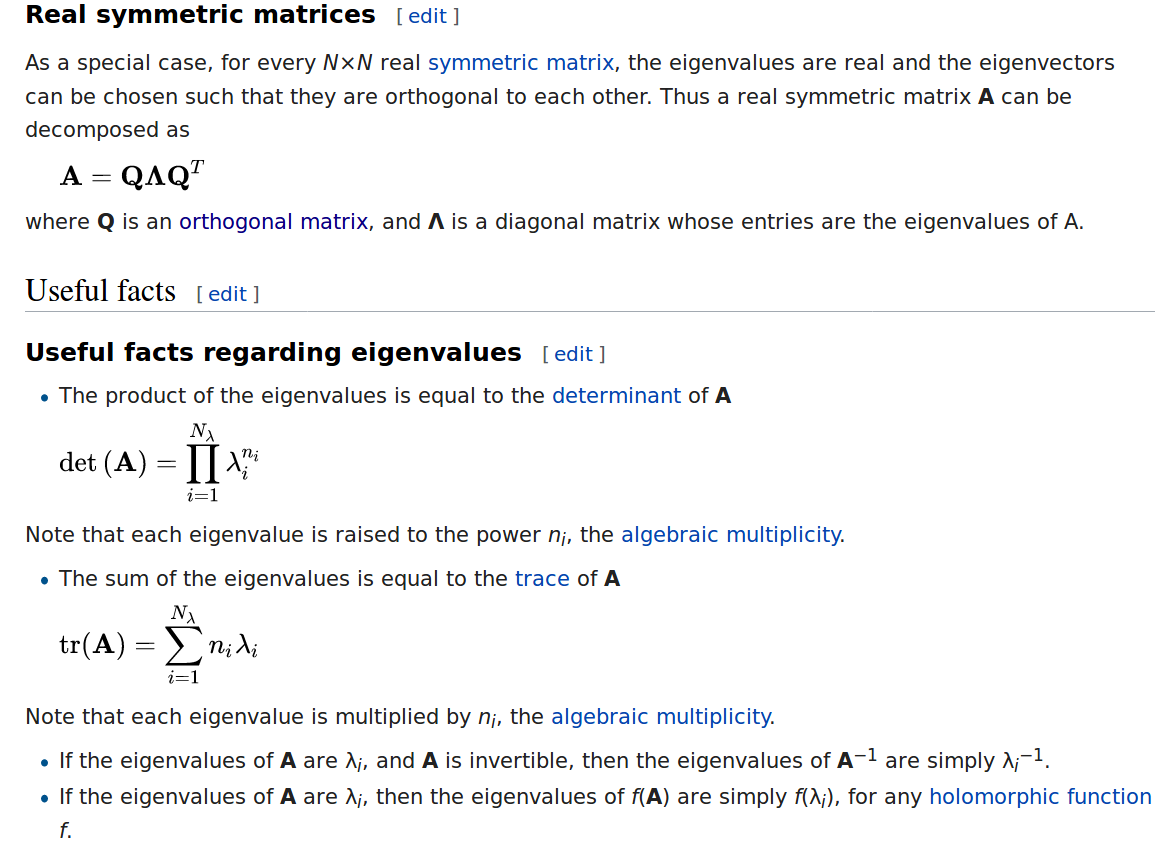

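On SelfAdjointEigenSolver and similar classes: "self-adjoint" means the matrix equals its own adjoint. For a real matrix this simply means symmetric; for a complex matrix it means Hermitian.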

Here the conjugate transpose is denoted $A^H$, $A^T$ is the transpose, and $\bar{z}$ is the complex conjugate (so $A^H = \bar{A}^T$). If a matrix is self-adjoint ($A^H = A$), it is said to be Hermitian. That is why H is used to denote the conjugate transpose.

You need an eigendecomposition here, see available such decompositions on this page. Make sure to check if your matrix is self-adjoint, as is often the case in these problems. Here's an example using SelfAdjointEigenSolver, it could easily be adapted to general matrices using EigenSolver or ComplexEigenSolver.

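For small matrices (4x4 and below), inverse() and determinant() work well. For large matrices they should be avoided (they are slow); use a decomposition instead. Moreover, in practice, using the determinant to decide whether a matrix is invertible is a bad idea, because of floating-point error and scaling.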

Rank-revealing decompositions

Rank-revealing decompositions offer at least a rank() method. They can also offer convenience methods such as isInvertible(), and some are also providing methods to compute the kernel (null-space) and image (column-space) of the matrix, as is the case with FullPivLU.

Solving linear least squares systems

http://eigen.tuxfamily.org/dox/group__LeastSquares.html

This page describes how to solve linear least squares systems using Eigen. An overdetermined system of equations, say Ax = b, has no solutions. In this case, it makes sense to search for the vector x which is closest to being a solution, in the sense that the difference Ax - b is as small as possible. This x is called the least square solution (if the Euclidean norm is used).

- Using the SVD decomposition (accurate, slow)

- Using the QR decomposition (a compromise)

- Using normal equations (fast, less accurate)

Of these, the SVD decomposition is generally the most accurate but the slowest, normal equations is the fastest but least accurate, and the QR decomposition is in between.

If the matrix A is ill-conditioned (i.e. its condition number is large), then this is not a good method, because the condition number of $A^TA$ is the square of the condition number of A. This means that you lose about twice as many digits with the normal equations as with the other methods.

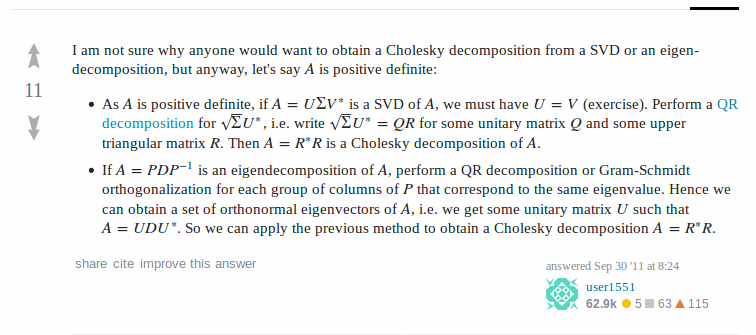

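The Cholesky decomposition factors a matrix into triangular factors; it applies only to Hermitian positive-definite matrices.

$A = LL^*$, where L is lower triangular and $L^*$ is its conjugate transpose. In Eigen the corresponding function is llt().

In $A = LDL^*$, L is lower unitriangular (lower unit triangular, i.e. all ones on the diagonal) and D is diagonal.

In Eigen the corresponding function is ldlt().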

The LDL variant, if efficiently implemented, requires the same space and computational complexity to construct and use but avoids extracting square roots. Some indefinite matrices for which no Cholesky decomposition exists have an LDL decomposition with negative entries in D. For these reasons, the LDL decomposition may be preferred. For real matrices, the factorization has the form $A = LDL^T$ and is often referred to as LDLT decomposition (or $LDL^T$ decomposition, or LDL′). It is closely related to the eigendecomposition of real symmetric matrices, $A = Q\Lambda Q^T$.

In linear algebra, the Cholesky decomposition or Cholesky factorization is a decomposition of a Hermitian, positive-definite matrix into the product of a lower triangular matrix and its conjugate transpose, which is useful for efficient numerical solutions, e.g. Monte Carlo simulations. It was discovered by André-Louis Cholesky for real matrices. When it is applicable, the Cholesky decomposition is roughly twice as efficient as the LU decomposition for solving systems of linear equations.

Several people in this thread asked why you would ever want to do Cholesky on a non-positive-definite matrix. I thought I'd mention a case that would motivate this question.

Cholesky decomposition is used to generate Gaussian random variates given a covariance matrix, using $x_i = \sum_j L_{ij} z_j$,

where each $z_j \sim \mathcal{N}(0,1)$ and L is the Cholesky factor of the covariance matrix.


http://eigen.tuxfamily.org/dox/group__TopicLinearAlgebraDecompositions.html

L3

cpp

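Every day they pretend to care about you, standing on the moral high ground, hypocritically saying that your country has no human rights and pitying your suffering. But once you actually win a little dignity and decency, and in the process harm even a single hair of their interests, they throw away their so-called elegance, decency, pity and compassion and curse you in the most vicious terms. They drive large-displacement cars themselves, yet cannot stand the poor burning fires for warmth and accuse you of polluting the environment. They enjoy the cheap goods and services of sweatshops, yet "worry about the nation" that rising wages for Chinese workers will raise costs and weaken national competitiveness. This is why I have always scoffed at the hypocrisy of certain developed countries that offer lip service without action. It is also why I scoff at certain selfish, showy philanthropists at home. At moments like this I feel all the more that people like Dr. Norman Bethune, who gave up a comfortable material life, feared no hardship and cast aside selfish interests, and the agricultural-machinery expert / nuclear physicist couple 寒春 (Joan Hinton) and 阳早 (Sid Engst), are the truly noble people who have risen above vulgar interests.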

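Author: 吴名士

Link: https://www.zhihu.com/question/270454447/answer/354818407

Source: Zhihu

Copyright belongs to the author. For commercial reproduction, please contact the author for authorization; for non-commercial reproduction, please credit the source.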

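Three rules for testing human nature: 1. Do not put the person being tested in an extreme situation; make sure they are in a broadly normal physical and psychological environment. You cannot humiliate and beat someone down and then expect them to keep treating you well. You cannot demand, in a great famine, that women starve to death rather than sell their bodies, or that men starve rather than steal. 2. Do not expect too much of human nature; "good enough" is fine. You must understand society and know what an average person is really like. 3. In fairness, if the person being tested turns around and tests you, you must accept it.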

Author: 城市猎人

Link: https://www.zhihu.com/question/35930303/answer/353387591

Source: Zhihu


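One of them said: I cheated because my stagnant, lifeless marriage left me tormented by loneliness.

Because most of the time, a person chooses to be good, rather than being good by nature.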

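LiDAR (Light Detection And Ranging) is a spatial measurement system that integrates three modern cutting-edge technologies: lasers, the Global Positioning System (GPS) and inertial navigation systems (INS). It can acquire three-dimensional ground information quickly and accurately, and it is widely used for ground data acquisition and for model restoration and reconstruction. It has shown great promise in these applications and has gradually become an important method for acquiring 3D data models.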

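When using airborne LiDAR, data other than the laser data are needed to guarantee the accuracy of the laser data. As the sensor moves, its altitude, position and attitude must all be recorded, so that the position and attitude at the moment each laser pulse is emitted and received can be determined. This additional information is essential to the integrity of the laser data.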

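Lasers are classified by wavelength. Lasers in the 600-1000 nm range are mainly used for non-scientific purposes, but because the human eye can receive light in this band, the maximum power in this band is limited to a low level to prevent eye damage. 1550 nm lasers are commonly used instead: the eye does not receive light in this band, so it can be used at higher power. Longer-wavelength lasers are mainly used for long-range, lower-accuracy measurements. Another advantage of 1550 nm is that it does not show up in night-vision devices, which makes it well suited to military use.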

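High accuracy is one of the main reasons for using LiDAR data, and LiDAR is a time- and labor-saving option for measuring terrain elevation over large areas. Determining the accuracy levels for data acquisition and for data storage is therefore important both for collecting LiDAR data and for using it afterwards. Data providers can control acquisition accuracy and acquisition cost through certain parameters of the acquisition process. Once the data have been collected, their accuracy is analyzed to check whether it meets the needs of the downstream applications. The storage precision is chosen so as to maximize the usefulness of the data. The accuracy of LiDAR data is usually delivered to users together with the metadata and the quality-control report.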

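Point data are usually stored in the LAS format, a binary file format. LiDAR point data contain not only the X, Y and Z coordinates but can also store many other attributes, such as intensity, number of returns and point classification (see Figure 5). The data can of course also be stored as text files, but that makes the files very large, which hinders data processing.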

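DEM data are regular (gridded) data, usually stored in raster files such as GeoTIFF (.tif), Esri Grid (.adf) or ERDAS IMG (.img). DEMs can be generated with many interpolation techniques, from the simple nearest-neighbor method to the more complex kriging (see Figure 6). The most common approach interpolates the elevation of each grid point using the reciprocal of distance as the weight (inverse distance weighting).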
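The last sentence above describes inverse distance weighting (IDW), where nearby samples count more because each weight is a reciprocal power of distance. A minimal sketch for a single grid node, assuming the common power p = 2 (the power, the ElevationSample type and the function name are assumptions made here, not taken from the source):

```cpp
#include <cmath>
#include <limits>
#include <vector>

struct ElevationSample { double x, y, z; };   // known elevation z at (x, y)

// IDW: z(q) = sum(w_i z_i) / sum(w_i), with w_i = 1 / d_i^power.
double idwElevation(const std::vector<ElevationSample>& samples,
                    double qx, double qy, double power = 2.0) {
    double weighted_sum = 0.0, weight_total = 0.0;
    for (const ElevationSample& s : samples) {
        const double d = std::hypot(s.x - qx, s.y - qy);
        if (d < 1e-12) return s.z;            // grid node coincides with a sample
        const double w = 1.0 / std::pow(d, power);
        weighted_sum += w * s.z;
        weight_total += w;
    }
    if (weight_total == 0.0)                  // no samples at all
        return std::numeric_limits<double>::quiet_NaN();
    return weighted_sum / weight_total;
}
```

A DEM raster would be filled by calling this once per grid node; production tools usually restrict the sum to a search radius or the k nearest points rather than all samples.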

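Multiple returns from a single laser pulse

… so as to penetrate the water surface and obtain data points on the bottom. The lasers used by bathymetric LiDAR systems can penetrate up to 70 m in clear water, but only a few meters in turbid or wavy water.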

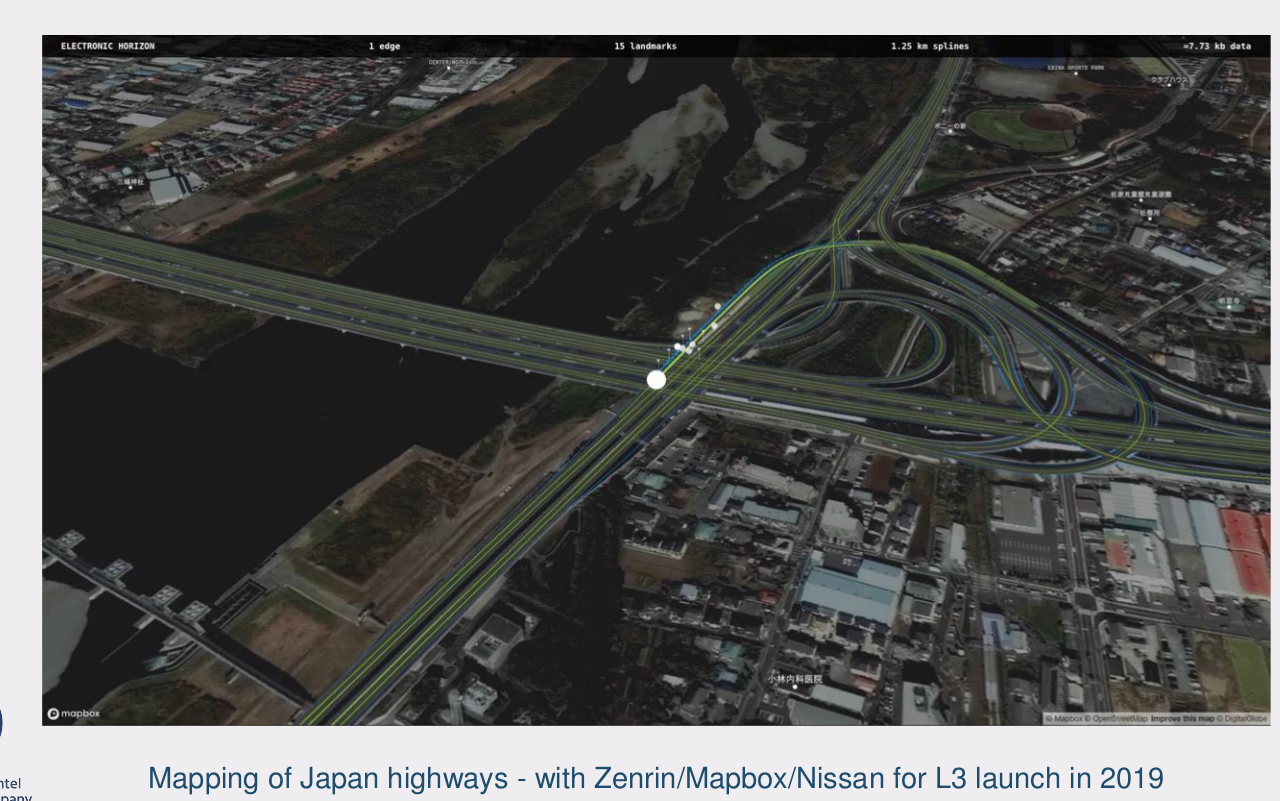

Towards Autonomous Driving

holistic (Bing Dictionary)

US [hoʊ'lɪstɪk], UK [həʊ'lɪstɪk]

adj. whole; comprehensive; functionally integrated

Web: whole-person; all-round; holism

holistic lane centering

Road Experience Management (REM)

Sensing alone (right-hand image) cannot robustly detect the drivable path to enable safe hands-free control. The Roadbook data can bridge the gap, as localization is based on a high degree of redundancy of landmarks and is therefore robust.

where pedestrians are more vulnerable to accidents and together with REM can provide data about infrastructure (lanes, traffic signs) for decision makers

Absolute safety is impossible: typical highway situation

“Self-driving cars should be statistically better than a human driver”

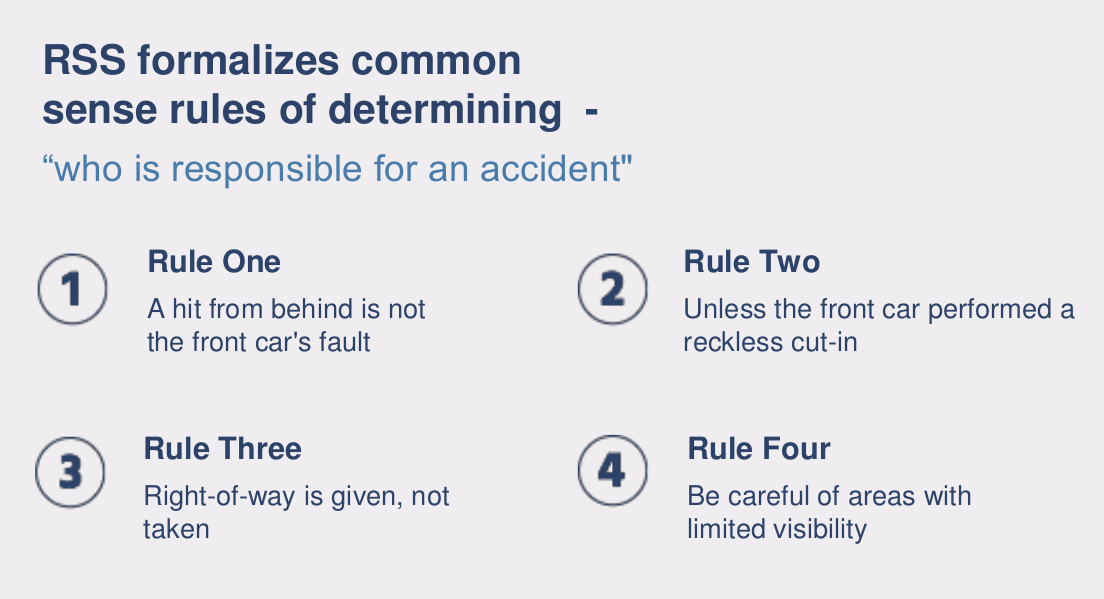

Goal: Self-driving cars should never be responsible for accidents, meaning:

- Self-driving cars should never cause accidents

- Self-driving cars should properly respond to mistakes of other drivers

RSS is:

A mathematical, interpretable model formalizing the “common sense” or “human judgement” of “who is responsible for an accident”
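As one concrete piece of that formalization, Mobileye's RSS paper (Shalev-Shwartz, Shammah and Shashua, 2017) defines a minimum safe longitudinal distance between a rear car and a front car driving in the same direction: the rear car may accelerate at up to a_max,accel during its response time ρ and then brakes at least at a_min,brake, while the front car may brake as hard as a_max,brake. A sketch of that formula; the function and parameter names are chosen here for illustration.

```cpp
#include <algorithm>

// RSS minimum safe longitudinal distance (same-direction case), in metres:
// d_min = [ v_r*rho + a_acc*rho^2/2 + (v_r + rho*a_acc)^2 / (2*b_min) - v_f^2 / (2*b_max) ]_+
// Speeds in m/s, rho in s, accelerations/decelerations as positive m/s^2.
double rssSafeLongitudinalDistance(double v_rear, double v_front, double rho,
                                   double a_accel_max, double b_rear_min,
                                   double b_front_max) {
    const double v_after = v_rear + rho * a_accel_max;   // rear speed after the response time
    const double d = v_rear * rho + 0.5 * a_accel_max * rho * rho
                   + v_after * v_after / (2.0 * b_rear_min)
                   - v_front * v_front / (2.0 * b_front_max);
    return std::max(d, 0.0);                             // [x]_+ = max(x, 0)
}
```

For example, with both cars at 10 m/s, ρ = 1 s, a_max,accel = 2, a_min,brake = 4 and a_max,brake = 8 m/s², the minimum gap is 10 + 1 + 18 − 6.25 = 22.75 m.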