@JunQiu

2018-09-18T10:13:06.000000Z


airflow(tips)

summary_2018/09 tools

1. Daily notes

1.1 Airflow in depth, plus some tips

2. Technical

2.1 Airflow in depth, plus some tips

- A DAG has its own scope, and that scope determines whether Airflow can use it. A single .py file can contain several DAGs, but each one should correspond to a single logical workflow.

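```python
from airflow import DAG

dag_1 = DAG('this_dag_will_be_discovered')

def my_function():
    # dag_2 exists only in the function's local scope, so Airflow will not discover it
    dag_2 = DAG('but_this_dag_will_not')

my_function()
```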
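Here is why dag_2 is not picked up. Airflow imports each DAG file and, as far as I understand the 1.x DagBag code, collects the DAG objects it finds in the module's global namespace. The sketch below is a toy version of that lookup. It uses a stand-in DAG class, not Airflow's.

```python
"""Toy model of DAG discovery: only DAGs bound at module (global) scope are found."""


class DAG:
    """Stand-in for airflow.DAG; it only records its id."""

    def __init__(self, dag_id):
        self.dag_id = dag_id


DAG_FILE = '''
dag_1 = DAG('this_dag_will_be_discovered')

def my_function():
    dag_2 = DAG('but_this_dag_will_not')

my_function()
'''


def discover_dags(source):
    """Execute a DAG file's source and collect DAG instances from its globals."""
    namespace = {'DAG': DAG}
    exec(source, namespace)
    # dag_2 disappeared with my_function's local scope, so only dag_1 remains
    return sorted(obj.dag_id for obj in namespace.values() if isinstance(obj, DAG))


print(discover_dags(DAG_FILE))  # ['this_dag_will_be_discovered']
```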

While DAGs describe how to run a workflow, Operators determine what actually gets done. (What if two operators need to share information?)

- In general, if two operators need to share information, such as a filename or a small amount of data, you should consider combining them into a single operator. If that absolutely can't be avoided, Airflow does have a feature for operator cross-communication called XCom.
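The following is a toy, in-memory picture of what XCom does. It is not Airflow's implementation. A value is stored under the producing task plus a key, and a later task in the same DAG run reads it back. In real Airflow the store is the metadata database, which is why only small values belong there. Inside a task's context the calls are `ti.xcom_push(key=..., value=...)` and `ti.xcom_pull(task_ids=..., key=...)`, and a PythonOperator callable's return value is pushed automatically under the key `'return_value'`. The file path and ids below are made-up examples.

```python
"""Toy in-memory XCom store (an illustration, not Airflow's implementation)."""
from datetime import datetime


class ToyXCom:
    def __init__(self):
        # Values keyed by (dag_id, task_id, execution_date, key)
        self._store = {}

    def push(self, dag_id, task_id, execution_date, key, value):
        self._store[(dag_id, task_id, execution_date, key)] = value

    def pull(self, dag_id, task_id, execution_date, key='return_value'):
        # Returns None when nothing was pushed for that run/task/key
        return self._store.get((dag_id, task_id, execution_date, key))


xcom = ToyXCom()
run = datetime(2018, 9, 18)
# The upstream task publishes something small (a filename), not the data itself
xcom.push('my_dag', 'extract', run, 'return_value', '/tmp/out_20180918.csv')
# A downstream task in the same DAG run reads it back
print(xcom.pull('my_dag', 'extract', run))  # /tmp/out_20180918.csv
```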

How is the DAG an operator belongs to determined?

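- When a .py file contains a single DAG, the DAG an operator belongs to can be determined from context. It can be set explicitly, assigned later, or inferred from the operators it is linked to: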

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.dummy_operator import DummyOperator

# Operators do not have to be assigned to DAGs immediately (previously dag was a required argument).
dag = DAG('my_dag', start_date=datetime(2016, 1, 1))

# sets the DAG explicitly
explicit_op = DummyOperator(task_id='op1', dag=dag)

# deferred DAG assignment
deferred_op = DummyOperator(task_id='op2')
deferred_op.dag = dag

# inferred DAG assignment (linked operators must be in the same DAG)
inferred_op = DummyOperator(task_id='op3')
inferred_op.set_upstream(deferred_op)
```
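The sketch below is a toy model of the inferred assignment. It uses a stand-in class, not Airflow's BaseOperator. When two tasks are linked and only one of them belongs to a DAG, the other inherits that DAG. Linking tasks from two different DAGs is rejected, which is what "linked operators must be in the same DAG" means in practice. Airflow raises its own exception type in that case; the ValueError here is my stand-in.

```python
"""Toy model: linking two tasks lets the unassigned one inherit the other's DAG."""


class ToyTask:
    def __init__(self, task_id, dag=None):
        self.task_id = task_id
        self.dag = dag
        self.upstream = set()

    def set_upstream(self, other):
        # Two different DAGs cannot be linked
        if self.dag and other.dag and self.dag != other.dag:
            raise ValueError('Tried to link tasks from different DAGs')
        # Otherwise whichever side has a DAG shares it with the other side
        dag = self.dag or other.dag
        self.dag = other.dag = dag
        self.upstream.add(other.task_id)


deferred_op = ToyTask('op2', dag='my_dag')
inferred_op = ToyTask('op3')
inferred_op.set_upstream(deferred_op)
print(inferred_op.dag)  # my_dag
```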

- How are operator relationships set?

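Operator relationships are set with the set_upstream() and set_downstream() methods. Since Airflow 1.8, this can also be done with the Python bitshift operators >> and <<, for example `op1 >> op2 >> op3 << op4`. I find this style quite elegant:

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.bash_operator import BashOperator
from airflow.operators.dummy_operator import DummyOperator
from airflow.operators.python_operator import PythonOperator

# Operators created inside the `with` block are assigned to `dag` automatically
with DAG('my_dag', start_date=datetime(2016, 1, 1)) as dag:
    (
        DummyOperator(task_id='dummy_1')
        >> BashOperator(task_id='bash_1', bash_command='echo "HELLO!"')
        >> PythonOperator(task_id='python_1', python_callable=lambda: print("GOODBYE!"))
    )
```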
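Chaining such as `op1 >> op2 >> op3 << op4` works because each operator returns its right-hand operand, so Python evaluates the expression as `((op1 >> op2) >> op3) << op4`. The stand-in class below (not Airflow's BaseOperator) shows the dependencies that result:

```python
"""Toy version of the >> / << dependency operators."""


class ToyOp:
    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()

    def set_downstream(self, other):
        other.upstream.add(self.task_id)

    def __rshift__(self, other):  # self >> other: self runs first
        self.set_downstream(other)
        return other              # returning the right operand enables chaining

    def __lshift__(self, other):  # self << other: other runs first
        other.set_downstream(self)
        return other


op1, op2, op3, op4 = (ToyOp(f'op{i}') for i in range(1, 5))
op1 >> op2 >> op3 << op4  # evaluated as ((op1 >> op2) >> op3) << op4
print(sorted(op3.upstream))  # ['op2', 'op4']
```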

- Some systems can get overwhelmed when too many processes hit them at the same time. How can we avoid that?

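- When several DAGs together have 100 tasks ready to run at the same time, the number of task instances that can actually run concurrently is capped by the parallelism setting of the executor we use (usually CeleryExecutor). Among queued tasks that do not depend on each other, the start order is not guaranteed.

- We can use a pool to limit the execution parallelism, i.e. to cap how many task instances run at once, and combine it with priority_weight to set priorities. Once capacity is reached, runnable tasks get queued and their state will show as such in the UI. As slots free up, queued tasks start running based on the priority_weight (of the task and its descendants).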

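```python
# Specify the pool on the operator; by default a task is not placed in any pool.
# The pool itself is created under Admin -> Pools.
pool='ep_data_pipeline_db_msg_agg',
```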
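The sketch below is a toy model of the pool behaviour described above. A pool has a fixed number of slots, and ready tasks are started in descending priority_weight order. It simplifies heavily: each batch is assumed to finish before the next one starts, and ties are broken by task id. It is not Airflow's scheduler, and the task ids are hypothetical.

```python
"""Toy pool: N slots, queued tasks started highest priority_weight first."""
import heapq


def run_order(tasks, slots):
    """Return the batches in which tasks start.

    tasks: list of (task_id, priority_weight), all ready and in the same pool.
    Assumes each batch finishes before the next one starts (a simplification).
    """
    # heapq is a min-heap, so negate the weights to pop the highest first
    queue = [(-weight, task_id) for task_id, weight in tasks]
    heapq.heapify(queue)
    batches = []
    while queue:
        batch = [heapq.heappop(queue)[1] for _ in range(min(slots, len(queue)))]
        batches.append(batch)
    return batches


tasks = [('load_a', 1), ('load_b', 5), ('load_c', 3), ('load_d', 5)]
print(run_order(tasks, slots=2))  # [['load_b', 'load_d'], ['load_c', 'load_a']]
```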

- Variables

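- Similar to environment variables: they are set under Admin -> Variables and read from Python code. When running Airflow in Docker, though, they may not be that useful.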

```python
from airflow.models import Variable

foo = Variable.get("foo")
bar = Variable.get("bar", deserialize_json=True)
```
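On the Docker point: if I remember correctly, newer Airflow releases (around 1.10.10, so later than this post) can also read a Variable from an environment variable named `AIRFLOW_VAR_<KEY>`, which suits containers well. The sketch below models that lookup order, with the environment checked first and then a dict standing in for the metadata database, plus `deserialize_json`. It is my own sketch, not Airflow's code. Unlike the real `Variable.get`, it returns a default instead of raising when the key is missing.

```python
"""Sketch of a Variable lookup: environment first, then a key-value store."""
import json
import os

ENV_PREFIX = 'AIRFLOW_VAR_'  # assumed prefix for env-var Variables


def get_variable(key, store, deserialize_json=False, default=None):
    """Return the variable from the environment or from `store`, optionally parsing JSON."""
    raw = os.environ.get(ENV_PREFIX + key.upper())
    if raw is None:
        raw = store.get(key)  # `store` stands in for the metadata database
    if raw is None:
        return default
    return json.loads(raw) if deserialize_json else raw


store = {'foo': 'hello', 'bar': '{"retries": 3}'}
os.environ['AIRFLOW_VAR_FOO'] = 'from-docker-env'  # e.g. passed with `docker run -e`
print(get_variable('foo', store))                         # from-docker-env
print(get_variable('bar', store, deserialize_json=True))  # {'retries': 3}
```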

Branching

- Branching is quite a handy feature when you want to skip some tasks.

- If you want to skip some tasks, keep in mind that you can't have an empty path; if you have one, add a dummy task to it.

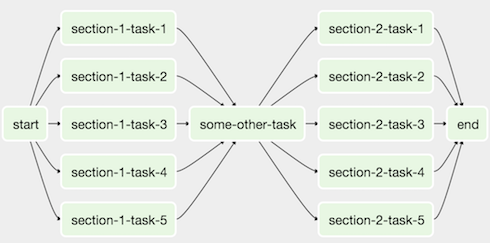
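The following toy model shows why the empty path breaks. When a branching task picks one path, its other direct downstream tasks are skipped. If the branch links straight to the join task, picking the other path skips the join as well. This is a sketch, not Airflow's BranchPythonOperator code, and the task ids are hypothetical. The join task usually also needs `trigger_rule='one_success'`, since one of its parents will always be skipped.

```python
"""Toy model of branching: every direct downstream task not chosen is skipped."""


def skipped_after_branch(downstream_of_branch, chosen):
    """Return the direct downstream task ids that a branch decision skips."""
    return sorted(set(downstream_of_branch) - {chosen})


# Empty path: the branch links straight to `join`, so choosing `path_a` skips `join`
print(skipped_after_branch(['path_a', 'join'], chosen='path_a'))        # ['join']

# A dummy task on the empty path absorbs the skip, and `join` is not skipped directly
print(skipped_after_branch(['path_a', 'skip_dummy'], chosen='path_a'))  # ['skip_dummy']
```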

SubDAGs

- SubDAGs are perfect for repeating patterns (a good way to avoid duplication).


```python
# dags/subdag.py
from airflow.models import DAG
from airflow.operators.dummy_operator import DummyOperator


# The DAG is returned by a factory method
def sub_dag(parent_dag_name, child_dag_name, start_date, schedule_interval):
    dag = DAG(
        '%s.%s' % (parent_dag_name, child_dag_name),
        schedule_interval=schedule_interval,
        start_date=start_date,
    )

    dummy_operator = DummyOperator(
        task_id='dummy_task',
        dag=dag,
    )

    return dag
```
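The factory builds the dag_id as `'%s.%s' % (parent_dag_name, child_dag_name)` for a reason. As far as I know, Airflow 1.x's SubDagOperator rejects a sub-DAG whose dag_id is not `<parent_dag_id>.<task_id of the SubDagOperator>`, so child_dag_name must equal that task_id. The plain-Python sketch below applies that rule to a repeated pattern. It is a stand-in check, not Airflow's code, and the parent id and section names are hypothetical.

```python
"""Sketch of the sub-DAG naming rule applied to a repeated pattern."""


def subdag_id(parent_dag_id, task_id):
    # The sub-DAG's dag_id must be '<parent_dag_id>.<SubDagOperator task_id>'
    return '%s.%s' % (parent_dag_id, task_id)


def check_subdag(parent_dag_id, task_id, child_dag_id):
    """Raise if child_dag_id breaks the naming rule (stand-in for Airflow's check)."""
    expected = subdag_id(parent_dag_id, task_id)
    if child_dag_id != expected:
        raise ValueError('expected sub-DAG id %r, got %r' % (expected, child_dag_id))


PARENT = 'example_subdag_operator'
sections = ['section-1', 'section-2']  # one SubDagOperator task per repeated section
ids = [subdag_id(PARENT, section) for section in sections]
for section, child_id in zip(sections, ids):
    check_subdag(PARENT, section, child_id)  # passes: the ids follow the rule
print(ids)  # ['example_subdag_operator.section-1', 'example_subdag_operator.section-2']
```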

Trigger Rules

- Though the normal workflow behavior is to trigger tasks when all their directly upstream tasks have succeeded, Airflow allows for more complex dependency settings.

- This looks like a good feature for finer-grained control over complex workflows. Every operator has a trigger_rule attribute, all_success by default; the possible values are:

1. all_success: (default) all parents have succeeded
2. all_failed: all parents are in a failed or upstream_failed state
3. all_done: all parents are done with their execution
4. one_failed: fires as soon as at least one parent has failed, it does not wait for all parents to be done
5. one_success: fires as soon as at least one parent succeeds, it does not wait for all parents to be done
6. dummy: dependencies are just for show, trigger at will
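The following simplified evaluator implements the rules listed above. It only decides whether a task may start, given its direct parents' current states. It is a sketch, not Airflow's dependency code: the real scheduler also decides when to mark a task skipped or upstream_failed instead.

```python
"""Simplified trigger-rule evaluator over the direct parents' states."""

DONE = {'success', 'failed', 'upstream_failed', 'skipped'}
FAILED = {'failed', 'upstream_failed'}


def should_trigger(rule, parent_states):
    """Return True if a task with `rule` may start now.

    Unfinished parents are given any state outside DONE, e.g. 'running'.
    """
    if rule == 'all_success':
        return all(s == 'success' for s in parent_states)
    if rule == 'all_failed':
        return all(s in FAILED for s in parent_states)
    if rule == 'all_done':
        return all(s in DONE for s in parent_states)
    if rule == 'one_failed':   # does not wait for the remaining parents
        return any(s in FAILED for s in parent_states)
    if rule == 'one_success':  # likewise
        return any(s == 'success' for s in parent_states)
    if rule == 'dummy':        # dependencies are ignored
        return True
    raise ValueError(f'unknown trigger rule: {rule}')


states = ['success', 'running', 'failed']
rules = ('all_success', 'all_done', 'one_failed', 'one_success', 'dummy')
print([r for r in rules if should_trigger(r, states)])  # ['one_failed', 'one_success', 'dummy']
```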

How to avoid backfills (running missed jobs)?

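- Airflow follows the standard scheduling model. The first run happens at start_date + schedule_interval, and from then on one run is created per interval, up to the current execution date. If we pause a DAG that runs every hour and leave it paused for 3 hours, then when we unpause it Airflow immediately creates 3 DAG Runs. These are called backfills (running the missed jobs).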

- How can we avoid these backfills?

Option 1 (simple, recommended): skip them with LatestOnlyOperator.

```python
import datetime as dt

from airflow.models import DAG
from airflow.operators.dummy_operator import DummyOperator
from airflow.operators.latest_only_operator import LatestOnlyOperator

dag = DAG(
    dag_id='latest_only_with_trigger',
    schedule_interval=dt.timedelta(hours=4),
    start_date=dt.datetime(2016, 9, 20),
)

# On every run except the most recent one, this task skips its downstream tasks
latest_only = LatestOnlyOperator(task_id='latest_only', dag=dag)

# Skipped during backfills (tasks with a trigger rule other than all_success may behave differently)
task1 = DummyOperator(task_id='task1', dag=dag)
task1.set_upstream(latest_only)
```

Tip: this can be combined with Trigger Rules.

Option 2 (not tried yet): use ShortCircuitOperator to check whether the current DAG Run is the latest one, and skip the rest of the DAG if it is not.
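The sketch below shows the catch-up arithmetic from the paused-DAG example together with the window check that LatestOnlyOperator applies. It assumes a fixed timedelta schedule. As far as I know, LatestOnlyOperator in Airflow 1.10 lets a run through only when the current time falls after the run's next schedule point and no later than the one after that; otherwise it skips the downstream tasks. The same boolean could serve as the python_callable of a ShortCircuitOperator for option 2, though I have not tried that. Also worth knowing: since Airflow 1.8, passing `catchup=False` to the DAG (or setting `catchup_by_default = False` under `[scheduler]` in airflow.cfg) makes the scheduler create only the most recent run in the first place.

```python
"""Sketch: which catch-up runs a latest-only check would let through."""
from datetime import datetime, timedelta


def missed_runs(last_execution_date, interval, now):
    """Execution dates whose interval has fully elapsed since the last run."""
    runs = []
    d = last_execution_date + interval
    while d + interval <= now:  # a run for d is only created once its interval has ended
        runs.append(d)
        d += interval
    return runs


def is_latest_run(execution_date, interval, now):
    """Latest-only check for a fixed interval: is `now` inside the following window?"""
    left_window = execution_date + interval
    right_window = left_window + interval
    return left_window < now <= right_window


hour = timedelta(hours=1)
# Hourly DAG: the last run had execution_date 09:00; it is unpaused at 13:05
now = datetime(2018, 9, 18, 13, 5)
runs = missed_runs(datetime(2018, 9, 18, 9), hour, now)
print([r.strftime('%H:%M') for r in runs])                                 # ['10:00', '11:00', '12:00']
print([r.strftime('%H:%M') for r in runs if is_latest_run(r, hour, now)])  # ['12:00']
```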

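How Airflow handles priority weights: by default (the downstream weight rule), a task is compared against other tasks using its own priority_weight plus the weights of all its downstream tasks (the tasks that depend on it). In other words, the more tasks depend on a task, the higher its effective priority is likely to be.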

```python
# Excerpt from Airflow's BaseOperator (1.10): effective weight used when queuing
def priority_weight_total(self):
    if self.weight_rule == WeightRule.ABSOLUTE:
        return self.priority_weight
    elif self.weight_rule == WeightRule.DOWNSTREAM:
        upstream = False
    elif self.weight_rule == WeightRule.UPSTREAM:
        upstream = True
    else:
        upstream = False
    # own weight + weights of all transitive downstream (or upstream) tasks
    return self.priority_weight + sum(
        map(lambda task_id: self._dag.task_dict[task_id].priority_weight,
            self.get_flat_relative_ids(upstream=upstream)))
```
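Here is the same computation in plain Python on a small, hypothetical DAG. It is a sketch that mirrors the excerpt above, with every task at the default priority_weight of 1:

```python
"""Recompute priority_weight_total for a small DAG (toy mirror of the excerpt)."""


def weight_total(task, weights, downstream, rule='downstream'):
    """weights: task -> own priority_weight; downstream: task -> direct children."""
    if rule == 'absolute':
        return weights[task]
    if rule == 'upstream':
        # Invert the edges so the walk goes towards the ancestors
        relatives = {t: [p for p, kids in downstream.items() if t in kids] for t in weights}
    else:  # 'downstream' (default) and any other value, as in the excerpt
        relatives = downstream
    seen, stack = set(), list(relatives.get(task, []))
    while stack:  # collect every transitive relative exactly once
        t = stack.pop()
        if t not in seen:
            seen.add(t)
            stack.extend(relatives.get(t, []))
    return weights[task] + sum(weights[t] for t in seen)


# extract -> transform -> load, and extract -> audit
downstream = {'extract': ['transform', 'audit'], 'transform': ['load']}
weights = {t: 1 for t in ('extract', 'transform', 'load', 'audit')}
print({t: weight_total(t, weights, downstream) for t in weights})
# {'extract': 4, 'transform': 2, 'load': 1, 'audit': 1}
```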

Some important configuration parameters

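- parallelism: the maximum number of task instances that can run at the same time. It is independent of individual DAGs and depends only on the executor and the tasks. (30 by default on GCP)

- dag_concurrency: the number of task instances a single DAG may run concurrently, counted across all of its active DAG Runs. (15 by default on GCP)

- dag_dir_list_interval: how often, in seconds, the dags folder is scanned. (100 by default on GCP)

- max_active_runs_per_dag: the maximum number of DAG Runs of the same DAG that can be active at once. (15 by default on GCP)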
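The sketch below is a rough mental model of how the caps above combine: the number of a DAG's ready tasks that can start right now is bounded by the tightest remaining limit. It is my own simplification, not the scheduler's logic. It ignores max_active_runs_per_dag, per-task limits and executor details. The defaults of 30 and 15 are the GCP values quoted above, not Airflow's own defaults.

```python
"""Toy estimate of how many ready tasks of one DAG can start right now."""


def runnable_now(ready, running_total, running_in_dag, pool_open_slots=None,
                 parallelism=30, dag_concurrency=15):
    """Take the tightest of the caps.

    ready:           task instances of this DAG whose dependencies are met
    running_total:   task instances running across all DAGs (counts against parallelism)
    running_in_dag:  task instances of this DAG running across its active runs
    pool_open_slots: free slots in the tasks' pool, or None if they use no pool
    """
    caps = [ready, parallelism - running_total, dag_concurrency - running_in_dag]
    if pool_open_slots is not None:
        caps.append(pool_open_slots)
    return max(0, min(caps))


print(runnable_now(ready=10, running_total=25, running_in_dag=3))                     # 5
print(runnable_now(ready=10, running_total=10, running_in_dag=3, pool_open_slots=2))  # 2
```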

Some important DAG/Operator parameters

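- retries (int): the number of retries before the task is marked as failed

- retry_delay (timedelta): how long to wait before retrying

- max_retry_delay (timedelta): the maximum wait between retries

- priority_weight (int): 1 by default

- start_date (datetime): the first run is not triggered at start_date but at start_date + schedule_interval (that run's execution_date is still start_date).

- execution_timeout (datetime.timedelta)

- schedule_interval

- end_date: when scheduling stops.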
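The start_date point can be made concrete with a toy fixed-interval schedule. This is my own sketch, not Airflow code. It assumes a timedelta schedule_interval rather than a cron expression, and it treats end_date as the last execution_date that gets scheduled, which is my reading of the 1.x scheduler.

```python
"""Toy schedule: execution_date vs. the time each run is actually triggered."""
from datetime import datetime, timedelta


def scheduled_runs(start_date, schedule_interval, end_date):
    """List (execution_date, triggered_at) pairs for a fixed-interval schedule.

    Each run is labelled with the start of its interval but only fires once the
    interval has ended; execution dates after end_date are not scheduled.
    """
    runs = []
    execution_date = start_date
    while execution_date <= end_date:
        runs.append((execution_date, execution_date + schedule_interval))
        execution_date += schedule_interval
    return runs


for execution_date, triggered_at in scheduled_runs(
        datetime(2018, 9, 18), timedelta(days=1), datetime(2018, 9, 20)):
    print(execution_date.date(), '->', triggered_at.date())
# 2018-09-18 -> 2018-09-19
# 2018-09-19 -> 2018-09-20
# 2018-09-20 -> 2018-09-21
```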