@windmelon

2018-12-24T11:22:27.000000Z

Intelligent Robotics Lab (3): Image Feature Matching, Tracking, and Camera Motion Estimation


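Objectives

- Building on the camera model and point clouds, understand image feature points and their descriptors, learn how the common feature points work, and implement ORB feature extraction and matching on an image pair.

- Apply ICP to the matched 3D feature points and solve for the camera motion in two ways: by SVD and by nonlinear optimization.

- Continuous pose estimation requires tracking where each feature point lands in the next frame. Learn the principle, the mathematical formulation, and the implementation of optical-flow tracking, which is faster than the feature-matching approach.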

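Contents

- Installing and using the g2o library

- ORB feature extraction

- Trying out pose change on a two-view image pair

- Camera pose estimation with ICP

- Feature tracking with optical flow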

Platform and tools

Ubuntu 16.04 virtual machine

C++

Procedure

1. Installing and using the g2o library

Install the dependencies

sudo apt-get install libeigen3-dev libsuitesparse-dev libqt4-dev qt4-qmake libqglviewer-qt4-dev

sudo apt-get install cmake libeigen3-dev libsuitesparse-dev libqt4-qmake libqglviewer-dev

Download the g2o source and build it

git clone https://github.com/RainerKuemmerle/g2o.git

cd g2o

mkdir build

cd build

cmake ..

sudo make install

2. ORB feature extraction

Unpack the lab code and build it

cd ch7

cmake .

make

Run the program

./feature_extraction 1.png 2.png

3. Trying out pose change on a two-view image pair

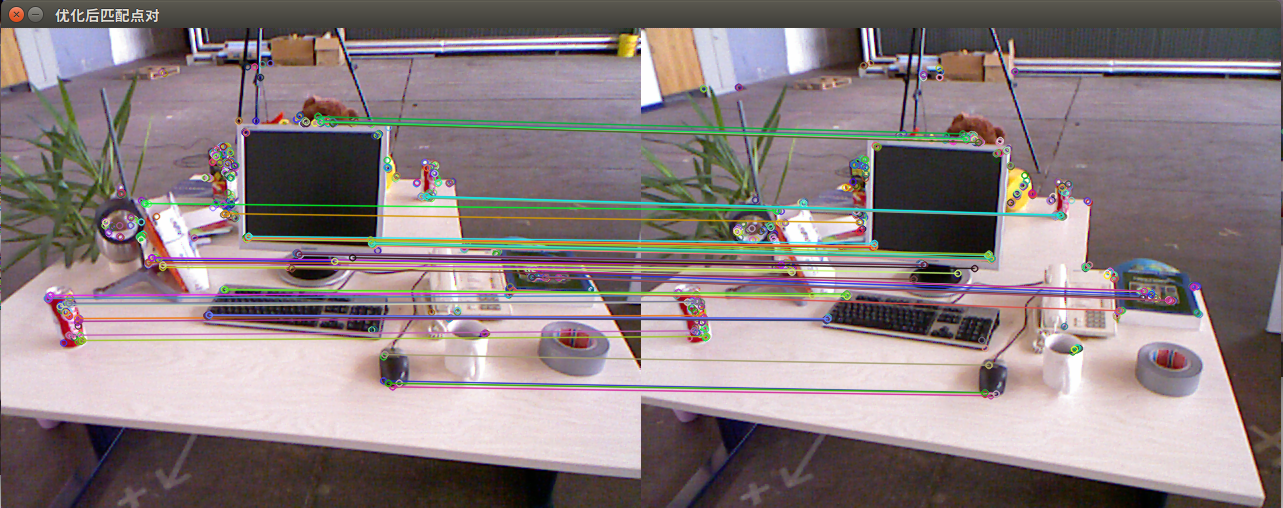

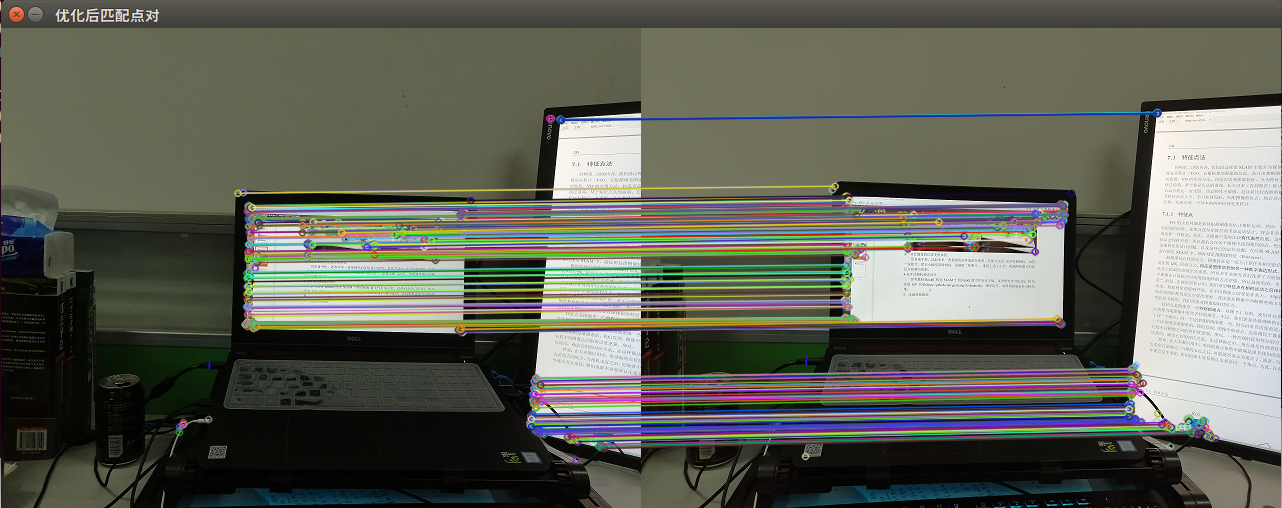

Take two photos with a phone, then extract and match their feature points

Key code analysis

    //-- Initialization
    std::vector<KeyPoint> keypoints_1, keypoints_2;
    Mat descriptors_1, descriptors_2;
    Ptr<FeatureDetector> detector = ORB::create();
    Ptr<DescriptorExtractor> descriptor = ORB::create();
    // Ptr<FeatureDetector> detector = FeatureDetector::create(detector_name);
    // Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create(descriptor_name);
    Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create ( "BruteForce-Hamming" );

    //-- Step 1: detect the Oriented FAST corner positions
    detector->detect ( img_1,keypoints_1 );
    detector->detect ( img_2,keypoints_2 );

    //-- Step 2: compute BRIEF descriptors at the corner positions
    descriptor->compute ( img_1, keypoints_1, descriptors_1 );
    descriptor->compute ( img_2, keypoints_2, descriptors_2 );

    Mat outimg1;
    drawKeypoints( img_1, keypoints_1, outimg1, Scalar::all(-1), DrawMatchesFlags::DEFAULT );
    imshow("ORB features",outimg1);

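The code first creates variables to hold the keypoints and descriptors of the two images. detector->detect() finds the keypoints (Oriented FAST corners) in each image, descriptor->compute() computes a BRIEF descriptor for every keypoint, and the feature points of the first image are then drawn.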

    //-- Step 3: match the BRIEF descriptors of the two images using the Hamming distance
    vector<DMatch> matches;
    //BFMatcher matcher ( NORM_HAMMING );
    matcher->match ( descriptors_1, descriptors_2, matches );

    //-- Step 4: filter the matched pairs
    double min_dist=10000, max_dist=0;

    // Find the minimum and maximum distance over all matches,
    // i.e. the distances of the most similar and the least similar pairs
    for ( int i = 0; i < descriptors_1.rows; i++ )
    {
        double dist = matches[i].distance;
        if ( dist < min_dist ) min_dist = dist;
        if ( dist > max_dist ) max_dist = dist;
    }

    printf ( "-- Max dist : %f \n", max_dist );
    printf ( "-- Min dist : %f \n", min_dist );

The descriptors computed for the two images are matched using the Hamming distance, and the maximum and minimum match distances are printed.

    // A match is considered wrong when the descriptor distance exceeds twice the minimum distance.
    // The minimum distance is sometimes very small, so an empirical value of 30 is used as a lower bound.
    std::vector< DMatch > good_matches;
    for ( int i = 0; i < descriptors_1.rows; i++ )
    {
        if ( matches[i].distance <= max ( 2*min_dist, 30.0 ) )
        {
            good_matches.push_back ( matches[i] );
        }
    }

    //-- Step 5: draw the matching results
    Mat img_match;
    Mat img_goodmatch;
    drawMatches ( img_1, keypoints_1, img_2, keypoints_2, matches, img_match );
    drawMatches ( img_1, keypoints_1, img_2, keypoints_2, good_matches, img_goodmatch );
    imshow ( "All matches", img_match );
    imshow ( "Filtered matches", img_goodmatch );

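The matches are then filtered. The threshold is max(2*min_dist, 30.0), and every match whose distance does not exceed it is kept. Finally, both the full set of matches and the filtered set are drawn.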
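To make the matching and filtering steps concrete, here is a minimal sketch without OpenCV. It assumes 256-bit binary descriptors (the size ORB produces); `Descriptor`, `Match`, `bruteForceMatch`, and `filterMatches` are hypothetical names used only for illustration and are not part of the lab code.

```cpp
#include <algorithm>
#include <bitset>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// A 256-bit binary descriptor (assumption: same size as an ORB descriptor).
using Descriptor = std::bitset<256>;

// Hypothetical stand-in for cv::DMatch, for illustration only.
struct Match {
    std::size_t queryIdx;  // index into the first descriptor set
    std::size_t trainIdx;  // index into the second descriptor set
    int distance;          // Hamming distance between the two descriptors
};

// Hamming distance: the number of bit positions where the descriptors differ.
int hammingDistance(const Descriptor& a, const Descriptor& b) {
    return static_cast<int>((a ^ b).count());
}

// Brute-force matching: for each query descriptor, pick the nearest train descriptor.
std::vector<Match> bruteForceMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train) {
    assert(!train.empty());
    std::vector<Match> matches;
    for (std::size_t i = 0; i < query.size(); ++i) {
        Match best{i, 0, std::numeric_limits<int>::max()};
        for (std::size_t j = 0; j < train.size(); ++j) {
            const int d = hammingDistance(query[i], train[j]);
            if (d < best.distance) {
                best.trainIdx = j;
                best.distance = d;
            }
        }
        matches.push_back(best);
    }
    return matches;
}

// Keep the matches whose distance is at most max(2 * min_dist, minThreshold).
std::vector<Match> filterMatches(const std::vector<Match>& matches,
                                 int minThreshold = 30) {
    if (matches.empty()) return {};
    int minDist = std::numeric_limits<int>::max();
    for (const Match& m : matches) minDist = std::min(minDist, m.distance);
    const int threshold = std::max(2 * minDist, minThreshold);
    std::vector<Match> good;
    for (const Match& m : matches)
        if (m.distance <= threshold) good.push_back(m);
    return good;
}
```

With match distances 10, 25, and 40, the minimum is 10, so the threshold is max(20, 30) = 30 and only the first two matches survive. The lower bound of 30 matters exactly in this situation, where twice the minimum distance would otherwise reject nearly everything.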

4. Camera pose estimation with ICP

SVD method

Nonlinear optimization method

Key code analysis

1. SVD method

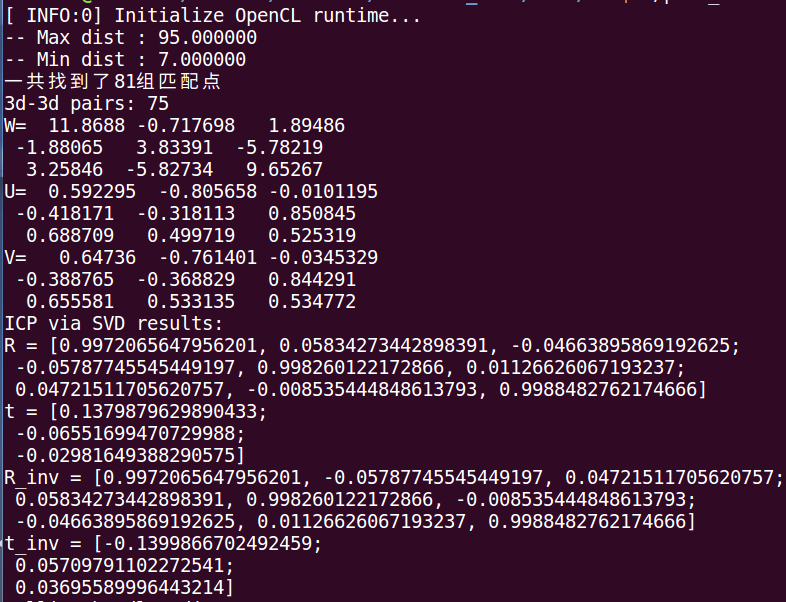

    //-- Read the images
    Mat img_1 = imread ( argv[1], CV_LOAD_IMAGE_COLOR );
    Mat img_2 = imread ( argv[2], CV_LOAD_IMAGE_COLOR );

    vector<KeyPoint> keypoints_1, keypoints_2;
    vector<DMatch> matches;
    find_feature_matches ( img_1, img_2, keypoints_1, keypoints_2, matches );
    cout<<"Found "<<matches.size() <<" matched pairs in total"<<endl;

Read the RGB images, match their feature points, and print the number of matches.

    // Build the 3D points
    Mat depth1 = imread ( argv[3], CV_LOAD_IMAGE_UNCHANGED );       // depth map: 16-bit unsigned, single channel
    Mat depth2 = imread ( argv[4], CV_LOAD_IMAGE_UNCHANGED );       // depth map: 16-bit unsigned, single channel
    Mat K = ( Mat_<double> ( 3,3 ) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1 );
    vector<Point3f> pts1, pts2;

    for ( DMatch m:matches )
    {
        ushort d1 = depth1.ptr<unsigned short> ( int ( keypoints_1[m.queryIdx].pt.y ) ) [ int ( keypoints_1[m.queryIdx].pt.x ) ];
        ushort d2 = depth2.ptr<unsigned short> ( int ( keypoints_2[m.trainIdx].pt.y ) ) [ int ( keypoints_2[m.trainIdx].pt.x ) ];
        if ( d1==0 || d2==0 )   // bad depth
            continue;
        Point2d p1 = pixel2cam ( keypoints_1[m.queryIdx].pt, K );
        Point2d p2 = pixel2cam ( keypoints_2[m.trainIdx].pt, K );
        float dd1 = float ( d1 ) /5000.0;
        float dd2 = float ( d2 ) /5000.0;
        pts1.push_back ( Point3f ( p1.x*dd1, p1.y*dd1, dd1 ) );
        pts2.push_back ( Point3f ( p2.x*dd2, p2.y*dd2, dd2 ) );
    }

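Read the depth images. The matrix K holds the camera intrinsics and is used to convert pixel coordinates to normalized camera coordinates. Each 3D point is then built from a matched keypoint's pixel position in the RGB image and its value in the depth image; pairs with a zero depth reading are skipped.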
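The back-projection in the loop above can be sketched on its own as follows. This is an illustration, not the lab's pixel2cam: `Intrinsics`, `Point3`, and `backProject` are hypothetical names, and reading the factor 5000 as "raw depth units per metre" is an assumption based on the TUM RGB-D convention, which the intrinsics and scale in the listing suggest.

```cpp
#include <cassert>
#include <cstdint>

// Pinhole intrinsics: focal lengths and principal point, in pixels.
struct Intrinsics { double fx, fy, cx, cy; };

// A 3D point in the camera frame.
struct Point3 { double x, y, z; };

// Back-project pixel (u, v) with a raw 16-bit depth value into the camera frame.
// depthScale is the number of raw depth units per unit of length
// (5000 in the listing above; the unit is assumed to be metres).
Point3 backProject(double u, double v, std::uint16_t rawDepth,
                   const Intrinsics& K, double depthScale = 5000.0) {
    assert(rawDepth != 0);  // a zero reading means "no depth"; callers should skip it
    const double z = rawDepth / depthScale;
    // Normalized camera coordinates, as computed by pixel2cam in the lab code.
    const double xn = (u - K.cx) / K.fx;
    const double yn = (v - K.cy) / K.fy;
    return {xn * z, yn * z, z};
}
```

For example, with the intrinsics from the listing, the pixel at the principal point (325.1, 249.7) with raw depth 5000 maps to the point (0, 0, 1) on the optical axis.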

    cout<<"3d-3d pairs: "<<pts1.size() <<endl;
    Mat R, t;
    pose_estimation_3d3d ( pts1, pts2, R, t );
    cout<<"ICP via SVD results: "<<endl;
    cout<<"R = "<<R<<endl;
    cout<<"t = "<<t<<endl;
    cout<<"R_inv = "<<R.t() <<endl;
    cout<<"t_inv = "<<-R.t() *t<<endl;

Print the resulting rotation matrix and translation vector, together with the inverse transform.

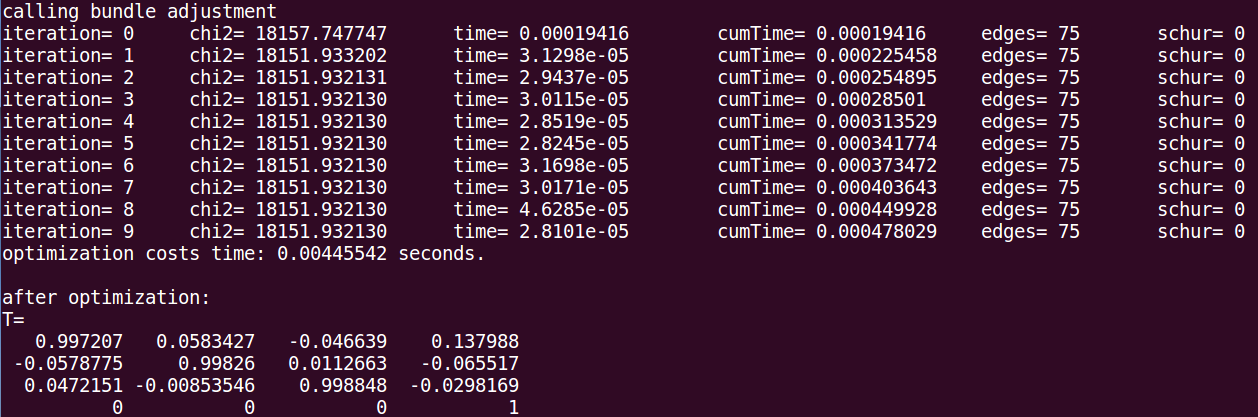

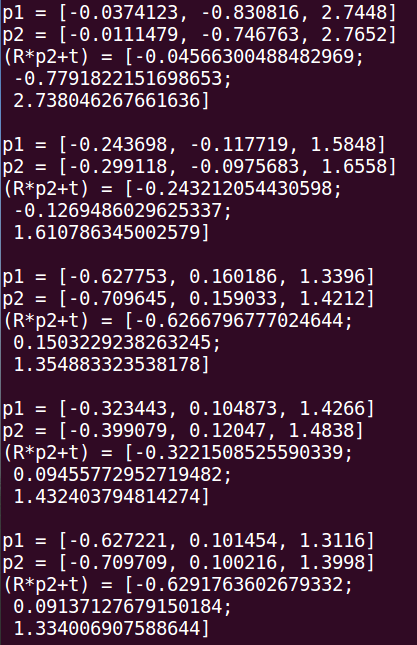

2. Nonlinear optimization method

    cout<<"calling bundle adjustment"<<endl;
    bundleAdjustment( pts1, pts2, R, t );

    // verify p1 = R*p2 + t
    for ( int i=0; i<5; i++ )
    {
        cout<<"p1 = "<<pts1[i]<<endl;
        cout<<"p2 = "<<pts2[i]<<endl;
        cout<<"(R*p2+t) = "<<R * (Mat_<double>(3,1)<<pts2[i].x, pts2[i].y, pts2[i].z) + t<<endl;
        cout<<endl;
    }

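Bundle adjustment is used to solve for the camera pose iteratively, and the first five point pairs are used to verify p1 = R*p2 + t.

Comparing the results

The result of the nonlinear optimization is almost identical to the SVD result, so in this experiment the SVD solution can be regarded as already optimal.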

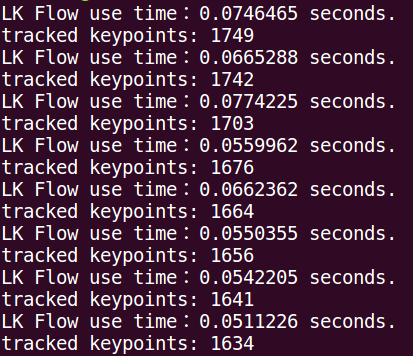

5. Feature tracking with optical flow

Key code analysis

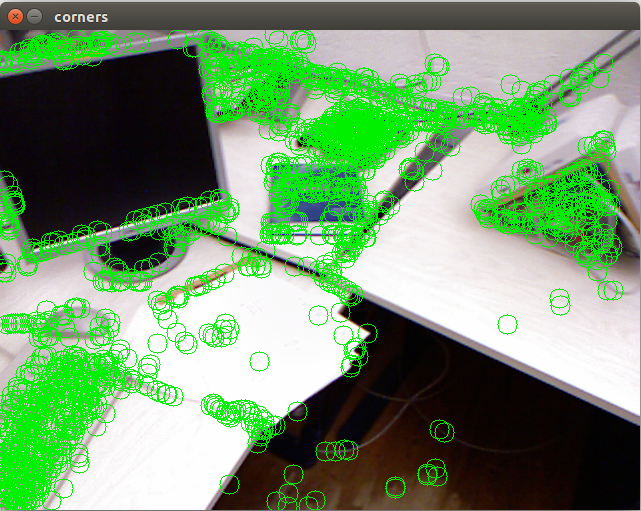

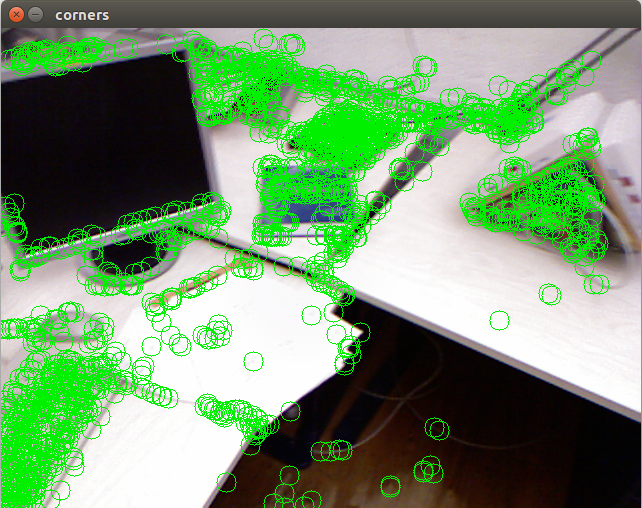

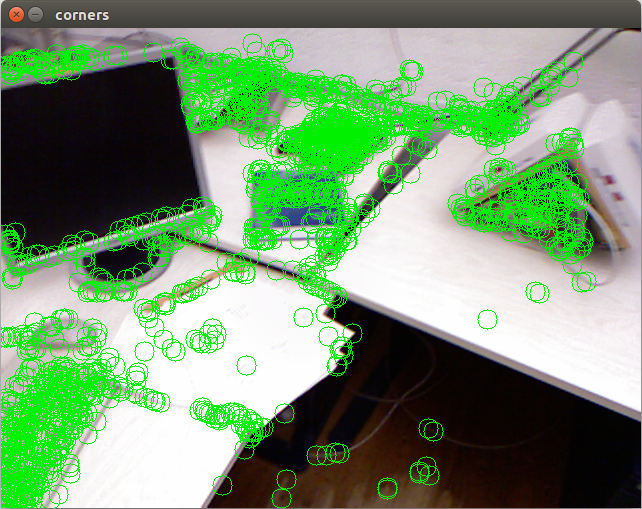

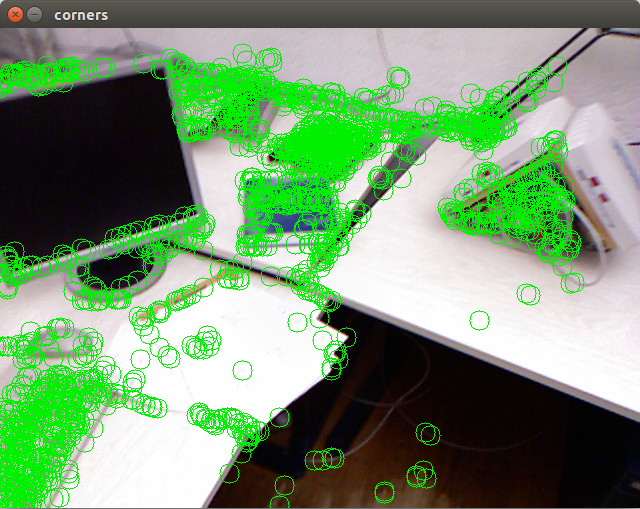

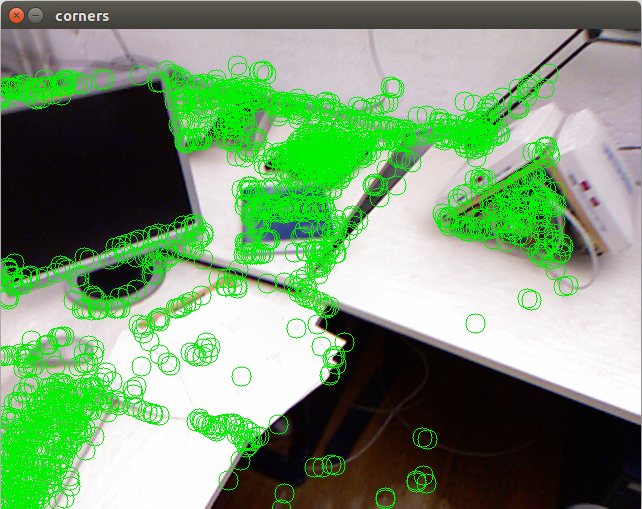

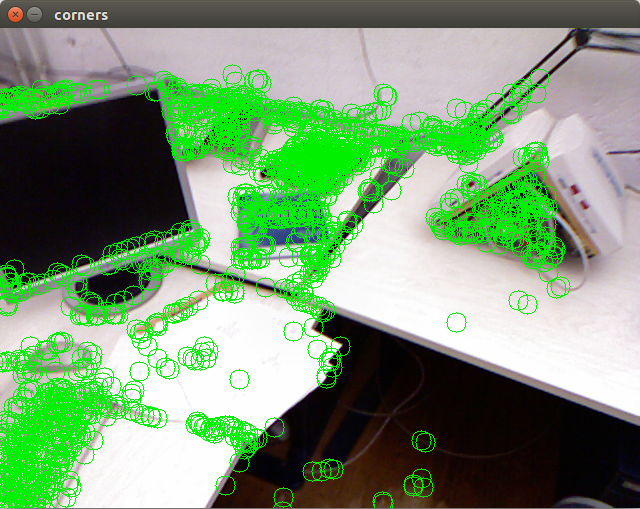

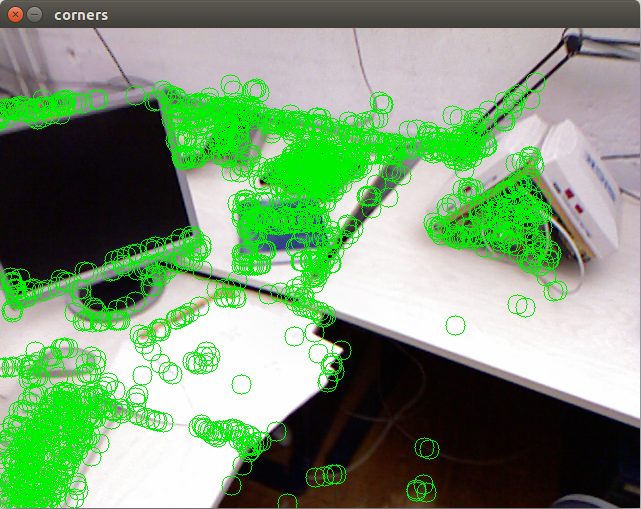

    fin>>time_rgb>>rgb_file>>time_depth>>depth_file;
    color = cv::imread( path_to_dataset+"/"+rgb_file );
    depth = cv::imread( path_to_dataset+"/"+depth_file, -1 );
    if (index ==0 )
    {
        // Extract FAST feature points in the first frame
        vector<cv::KeyPoint> kps;
        cv::Ptr<cv::FastFeatureDetector> detector = cv::FastFeatureDetector::create();
        detector->detect( color, kps );
        for ( auto kp:kps )
            keypoints.push_back( kp.pt );
        last_color = color;
        continue;
    }

For the first frame, feature points are extracted with the FAST detector.

    // Track the feature points with LK in all other frames
    vector<cv::Point2f> next_keypoints;
    vector<cv::Point2f> prev_keypoints;
    for ( auto kp:keypoints )
        prev_keypoints.push_back(kp);
    vector<unsigned char> status;
    vector<float> error;
    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
    cv::calcOpticalFlowPyrLK( last_color, color, prev_keypoints, next_keypoints, status, error );
    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>( t2-t1 );
    cout<<"LK Flow use time:"<<time_used.count()<<" seconds."<<endl;

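For every later frame, the feature points are tracked with the LK algorithm. The inputs are the previous image, the current image, and the feature point positions in the previous image. The call outputs the positions in the current image, plus a status flag and an error value for each point.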

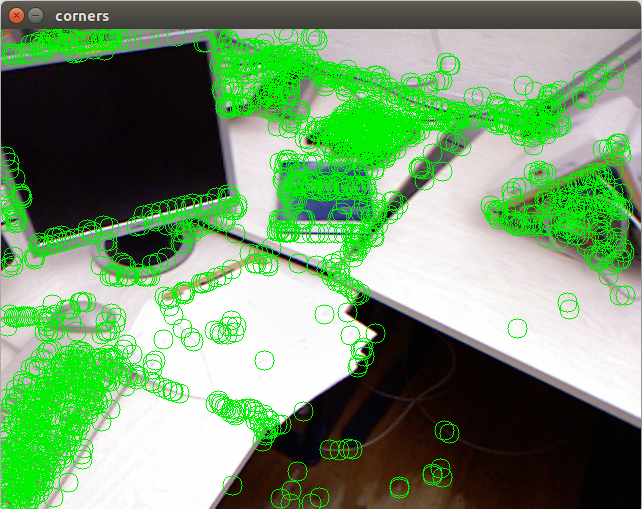

    // Delete the points that were lost
    int i=0;
    for ( auto iter=keypoints.begin(); iter!=keypoints.end(); i++)
    {
        if ( status[i] == 0 )
        {
            iter = keypoints.erase(iter);
            continue;
        }
        *iter = next_keypoints[i];
        iter++;
    }
    cout<<"tracked keypoints: "<<keypoints.size()<<endl;
    if (keypoints.size() == 0)
    {
        cout<<"all keypoints are lost."<<endl;
        break;
    }

    // Draw the keypoints
    cv::Mat img_show = color.clone();
    for ( auto kp:keypoints )
        cv::circle(img_show, kp, 10, cv::Scalar(0, 240, 0), 1);
    cv::imshow("corners", img_show);
    cv::waitKey(0);
    last_color = color;

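Using the information stored in status, the keypoints whose status is 0 (tracking failed) are deleted, and the remaining points are drawn on a copy of the current frame.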
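The pruning step can also be written without erasing from a container while iterating. The sketch below is one possible alternative under that assumption, not the lab's code; `Point2` and `keepTracked` are hypothetical names standing in for cv::Point2f and the loop above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point2f, for illustration only.
struct Point2 { float x, y; };

// Return the tracked positions of the points that were followed successfully.
// status[i] == 0 means point i was lost, mirroring the flag filled in by
// cv::calcOpticalFlowPyrLK in the listing above.
std::vector<Point2> keepTracked(const std::vector<Point2>& next,
                                const std::vector<unsigned char>& status) {
    assert(next.size() == status.size());
    std::vector<Point2> kept;
    kept.reserve(next.size());
    for (std::size_t i = 0; i < next.size(); ++i) {
        if (status[i] != 0) kept.push_back(next[i]);
    }
    return kept;
}
```

Building a new vector keeps the index into status and the position in the point set in lockstep by construction, at the cost of one extra allocation per frame.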

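The results show that when keypoints are only tracked with LK and no new ones are added, the number of keypoints keeps shrinking.

Summary

Feature point concepts

Feature points are distinctive places in an image, such as edges, corners, and blocks.

Difficulty of identification: blocks > edges > corners

A feature point has two parts:

- Keypoint: gives the position of the point in the image, and possibly its orientation

- Descriptor: describes the pixels around the keypoint, stored as a vector

Typical feature points: SIFT, SURF, ORB, and others

This lab uses ORB feature points.
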

ICP algorithm

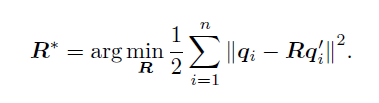

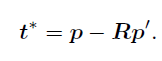

SVD method

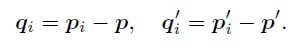

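1. Compute the centroids p and p′ of the two point sets, then compute the centroid-subtracted coordinates of every point

2. Compute the rotation matrix from the following optimization problem

3. Using the R from step 2, compute t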

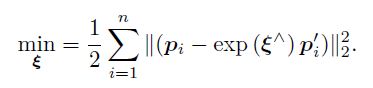
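Since the formulas for these three steps appear only as images, here is a sketch of them in LaTeX. The notation is an assumption: p_i and p_i' are the matched points of the first and second frame, related by p_i = R p_i' + t as in the verification code above, and q_i, q_i' are their centroid-subtracted coordinates.

```latex
% Error of one matched pair
e_i = p_i - (R p_i' + t)

% Step 1: centroids and centroid-subtracted coordinates
p = \frac{1}{n}\sum_{i=1}^{n} p_i, \qquad
p' = \frac{1}{n}\sum_{i=1}^{n} p_i', \qquad
q_i = p_i - p, \qquad q_i' = p_i' - p'

% Step 2: rotation from the centred points, solved in closed form by SVD
R^{*} = \arg\min_{R} \frac{1}{2}\sum_{i=1}^{n} \lVert q_i - R q_i' \rVert^{2},
\qquad
W = \sum_{i=1}^{n} q_i {q_i'}^{\top} = U \Sigma V^{\top},
\qquad
R^{*} = U V^{\top}

% Step 3: translation from the centroids
t^{*} = p - R^{*} p'
```

The closed form R = U V^T applies when W has full rank and det(U V^T) = +1; if the determinant comes out as -1 the result is a reflection and needs separate handling.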

Nonlinear optimization method

Construct an error function and minimize it iteratively
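One common way to write that error function, given here as an assumption rather than as the exact form used by the lab code, parameterizes the pose by a Lie algebra element \xi \in \mathfrak{se}(3) and sums the squared 3D errors over all matched pairs (with p_i' in homogeneous coordinates):

```latex
\xi^{*} = \arg\min_{\xi} \; \frac{1}{2}\sum_{i=1}^{n}
\left\lVert p_i - \exp\!\left(\xi^{\wedge}\right) p_i' \right\rVert_2^{2}
```

Each iteration linearizes the error around the current pose and applies a small update, which is the kind of problem g2o is set up to solve.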

Optical flow

LK optical flow

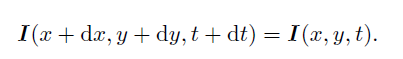

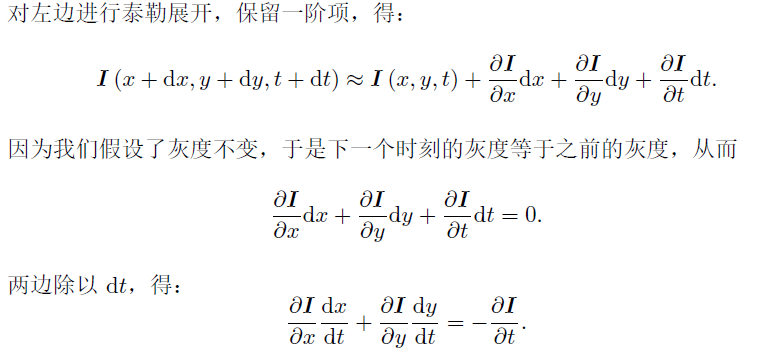

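LK optical flow rests on one assumption: brightness constancy, meaning that a pixel keeps the same gray level as it moves from one frame to the next.

From this assumption we obtain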

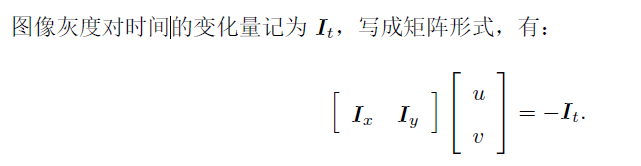

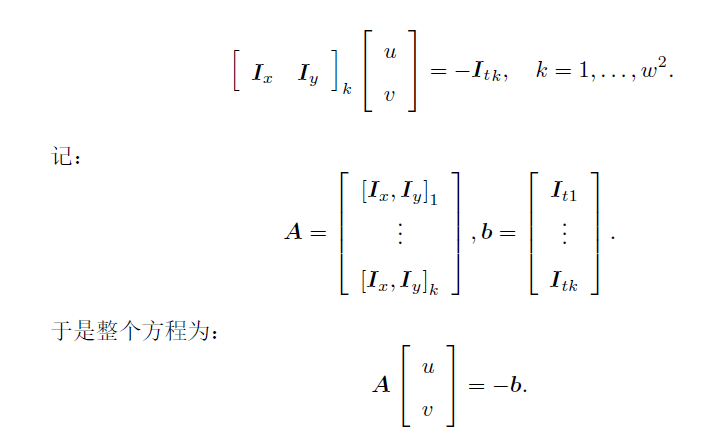
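Written out in LaTeX, the step from the brightness constancy assumption to the optical-flow constraint looks like this. The symbols are an assumption chosen to match the u, v used in the text: I(x, y, t) is the gray level at pixel (x, y) at time t.

```latex
% Brightness constancy: the pixel keeps its gray level while it moves
I(x + dx,\; y + dy,\; t + dt) = I(x, y, t)

% First-order Taylor expansion of the left-hand side
I(x + dx,\; y + dy,\; t + dt) \approx I(x, y, t)
  + \frac{\partial I}{\partial x}\,dx
  + \frac{\partial I}{\partial y}\,dy
  + \frac{\partial I}{\partial t}\,dt

% Subtract I(x, y, t), divide by dt, and write u = dx/dt, v = dy/dt
\frac{\partial I}{\partial x}\,u + \frac{\partial I}{\partial y}\,v
  = -\frac{\partial I}{\partial t}
```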

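A single equation cannot determine the two motion parameters u and v of a pixel, so a window of size w × w is introduced, under the additional assumption that all pixels in the window share the same motion.

This gives w^2 equations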

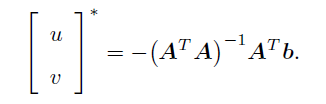

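Solving this overdetermined system in the least-squares sense gives u and v, which makes it possible to track the feature points across images taken at different times.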
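As a rough illustration of the windowed least-squares solve, the sketch below estimates (u, v) for a single window in one step. It is a simplification under stated assumptions: there is no image pyramid and no iteration, unlike cv::calcOpticalFlowPyrLK; the images are passed as callables returning the gray level at integer pixel coordinates; and `Flow` and `lucasKanadeWindow` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// Estimated motion of a window, in pixels per frame.
struct Flow { double u, v; };

// Image accessor: gray level at integer pixel coordinates (x, y).
using Image = std::function<double(int, int)>;

// Single-step Lucas-Kanade estimate for the (2*halfWidth+1)^2 window centred on (cx, cy).
// Each pixel contributes one equation Ix*u + Iy*v = -It; the normal equations
// of that overdetermined system are accumulated and solved in closed form.
Flow lucasKanadeWindow(const Image& I1, const Image& I2,
                       int cx, int cy, int halfWidth) {
    double sxx = 0, sxy = 0, syy = 0, sxt = 0, syt = 0;
    for (int y = cy - halfWidth; y <= cy + halfWidth; ++y) {
        for (int x = cx - halfWidth; x <= cx + halfWidth; ++x) {
            const double Ix = 0.5 * (I1(x + 1, y) - I1(x - 1, y));  // central difference in x
            const double Iy = 0.5 * (I1(x, y + 1) - I1(x, y - 1));  // central difference in y
            const double It = I2(x, y) - I1(x, y);                  // temporal difference
            sxx += Ix * Ix; sxy += Ix * Iy; syy += Iy * Iy;
            sxt += Ix * It; syt += Iy * It;
        }
    }
    // Solve [sxx sxy; sxy syy] * [u v]^T = -[sxt syt]^T by Cramer's rule.
    const double det = sxx * syy - sxy * sxy;
    assert(std::fabs(det) > 1e-12);  // a flat or edge-only window cannot be solved
    const double u = (-sxt * syy + syt * sxy) / det;
    const double v = (-syt * sxx + sxt * sxy) / det;
    return {u, v};
}
```

The determinant check reflects why corners are preferred for tracking: in a window with gradients in only one direction the 2 × 2 system becomes singular, and only the motion component along the gradient can be recovered.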