@nalan90

2017-08-24T03:29:10.000000Z

Hadoop: Adding and Removing DataNode Nodes

Big Data

Excerpted from: http://blog.leanote.com/post/moonssang/Hadoop-%E6%B7%BB%E5%8A%A0%E6%96%B0%E8%8A%82%E7%82%B9

/etc/hosts

    [hadoop@master hadoop]$ cat /etc/hosts
    ## Append the slave entries
    172.16.1.162 master
    172.16.1.163 slave1
    172.16.1.164 slave2
    172.16.1.165 slave3
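The append step can be scripted so that running it twice does not duplicate an entry. The following is a minimal sketch, assuming bash and a POSIX awk; `add_host_entry` is a hypothetical helper name, not something Hadoop provides, and it takes the hosts file as an argument so it can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts-style file unless
# HOSTNAME already appears as a name on a non-comment line.
add_host_entry() {
    local ip="$1" name="$2" file="$3"
    # Fields 2..NF of a hosts line are the names bound to the IP in field 1.
    if awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$file" 2>/dev/null; then
        return 0    # already listed, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

With root privileges, a call such as `add_host_entry 172.16.1.165 slave3 /etc/hosts` would then be run on the master and on every slave, since each node needs to resolve the others.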

Configure passwordless SSH login from master to the slave (new node)

    ## Copy the contents of /home/hadoop/.ssh/id_rsa.pub on the master node into /home/hadoop/.ssh/authorized_keys on the slave node
    ## /home/hadoop/.ssh must have mode 700
    [hadoop@dev-164 ~]$ ls -ld .ssh
    drwx------ 2 hadoop hadoop 29 Aug 23 15:58 .ssh
    ## /home/hadoop/.ssh/authorized_keys must have mode 600
    [hadoop@dev-164 ~]$ ls -l .ssh/authorized_keys
    -rw------- 1 hadoop hadoop 396 Aug 23 15:58 .ssh/authorized_keys
    [hadoop@dev-164 .ssh]$ pwd
    /home/hadoop/.ssh
    [hadoop@dev-164 .ssh]$ ls
    authorized_keys
    [hadoop@dev-164 .ssh]$ cat authorized_keys
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDIOOEMRSgX3OothfEzneBnoZqfIlD3a5oaDzRmqKDISFx1sXWTBAtKCKRocq4pWU7DKN82hwcskWFlPnxpz2zP42gohPPpz8SuXXMsDsSKbkVpHduaPG9QvKFJRqtPNNnZQ4A5jZ02lZCcvZ3FDzdpyFTecyRejqdS0Q2EfVswQ7Xc/MySrk2/c7DaC/Xrz1oxu/wsHf45vDj0NiXAadufyIGN0SIxJbW50IB3eAKABQuwNU5CQRkTAcJf59xGixarRo4gqtCFAdtdyHoP/RIYgC1dWafA5TIFGbHuwfFEWluJQJPwpQ1w5mIJkRoPgwWVLI2bscghSzEVIGrRBuZZ hadoop@dev-162
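The copy and the two permission requirements can be combined into one small function. This is only a sketch, assuming bash and that the public key file holds a single line; `install_pubkey` is a hypothetical name. Where password login to the new node is still possible, the standard `ssh-copy-id` tool shipped with OpenSSH does the same job over the network.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install a public key into SSH_DIR/authorized_keys
# with the modes shown above (directory 700, file 600).
install_pubkey() {
    local pubkey_file="$1" ssh_dir="$2"
    local auth="$ssh_dir/authorized_keys"
    mkdir -p "$ssh_dir" || return 1
    chmod 700 "$ssh_dir" || return 1
    touch "$auth" || return 1
    chmod 600 "$auth" || return 1
    # -x: whole-line match, -F: fixed string, -f: read the pattern from the key file.
    # Append only when that exact key line is not present yet.
    grep -qxF -f "$pubkey_file" "$auth" || cat "$pubkey_file" >> "$auth"
}
```

On the new node, after transferring the master's `id_rsa.pub` there by any means, it could be called as `install_pubkey /tmp/id_rsa.pub /home/hadoop/.ssh` (the `/tmp` path is an assumption for illustration).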

For the rest of the configuration, see:

https://www.zybuluo.com/nalan90/note/854642

https://www.zybuluo.com/nalan90/note/860287

    ## Note:
    [hadoop@master hadoop]$ cat etc/hadoop/hdfs-site.xml
    ## Set dfs.replication to the desired number of replicas
    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
    </configuration>

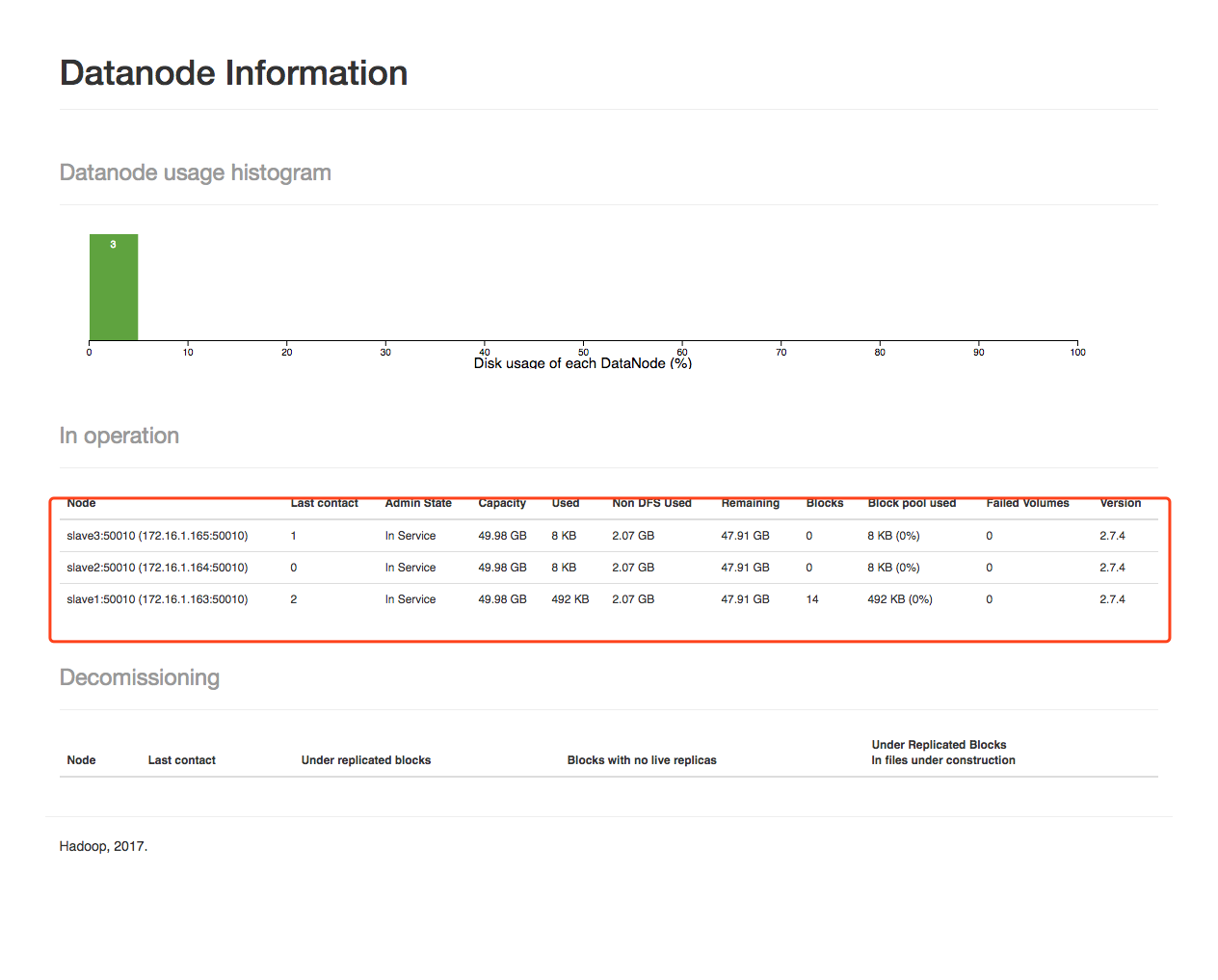

Start the new node and verify

    ## Start the new node (run these on the new node)
    ## (the datanode must be restarted whenever its data path is changed)
    ./sbin/hadoop-daemon.sh start datanode
    ./sbin/yarn-daemon.sh start nodemanager

    ## Refresh the cluster nodes
    [hadoop@master hadoop]$ hdfs dfsadmin -refreshNodes
    Refresh nodes successful

    ## View the new node information
    [hadoop@master hadoop]$ hdfs dfsadmin -report
    Configured Capacity: 160982630400 (149.93 GB)
    Present Capacity: 154322644992 (143.72 GB)
    DFS Remaining: 154322124800 (143.72 GB)
    DFS Used: 520192 (508 KB)
    DFS Used%: 0.00%
    Under replicated blocks: 0
    Blocks with corrupt replicas: 0
    Missing blocks: 0
    Missing blocks (with replication factor 1): 0
    -------------------------------------------------
    Live datanodes (3):

    Name: 172.16.1.165:50010 (slave3)
    Hostname: slave3
    Decommission Status : Normal

    Name: 172.16.1.163:50010 (slave1)
    Hostname: slave1
    Decommission Status : Normal

    Name: 172.16.1.164:50010 (slave2)
    Hostname: slave2
    Decommission Status : Normal
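The full report is long, so checking that the new node has joined is easier with a one-line-per-node summary. The sketch below assumes the report keeps the `Hostname:` and `Decommission Status :` line format shown above; `datanode_status` is a hypothetical helper that reads the report on standard input.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `hdfs dfsadmin -report` output on stdin and
# print one "hostname<TAB>status" line per datanode.
datanode_status() {
    awk '
        /^Hostname:/ { host = $2 }    # remember the node this block describes
        /^Decommission Status/ {
            # Strip the label so only the status text remains in $0.
            sub(/^Decommission Status[[:space:]]*:[[:space:]]*/, "")
            print host "\t" $0
        }
    '
}
```

On the master it would be used as `hdfs dfsadmin -report | datanode_status`; the new node should appear with status `Normal`.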

WebUI

Decommission a node

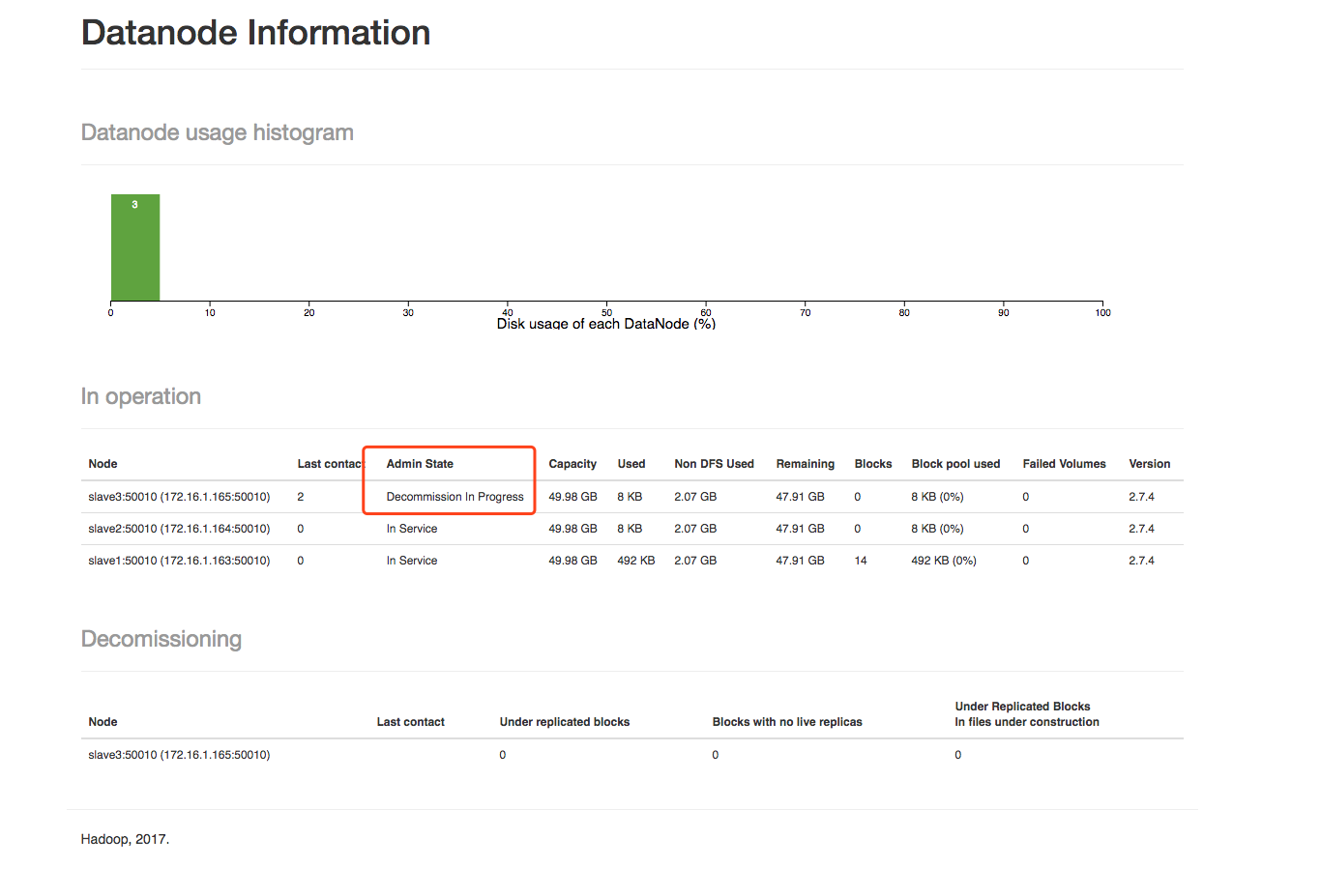

    ## Edit hdfs-site.xml (conf/hdfs-site.xml in the original note; etc/hadoop/hdfs-site.xml in the Hadoop 2.x layout)
    <property>
      <name>dfs.hosts.exclude</name>
      <value>/usr/local/hadoop/etc/hadoop/dfs-hosts.exclude</value>
      <description>Names a file that contains a list of hosts that are not permitted to connect to the namenode. The full pathname of the file must be specified. If the value is empty, no hosts are excluded.</description>
    </property>

    ## Add the machines to be taken out of service to dfs-hosts.exclude, one per line
    slave4

    ## Force the configuration to be reloaded (run from the hadoop root directory)
    bin/hdfs dfsadmin -refreshNodes
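Editing the exclude file by hand is easy to get wrong when several nodes are retired at once. As a hedged sketch (bash assumed, `exclude_host` is a hypothetical name), the following adds a hostname to the file only if it is not already listed:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add HOST to an exclude file, one hostname per line,
# without creating duplicates.
exclude_host() {
    local host="$1" file="$2"
    touch "$file" || return 1
    # -x: whole-line match, -F: fixed string, so "slave1" never matches "slave10".
    grep -qxF -- "$host" "$file" || printf '%s\n' "$host" >> "$file"
}
```

A typical sequence on the master would be `exclude_host slave4 /usr/local/hadoop/etc/hadoop/dfs-hosts.exclude` followed by `bin/hdfs dfsadmin -refreshNodes`.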

WebUI

    http://172.16.1.162:50070/dfshealth.html#tab-datanode
    ## While decommissioning is in progress, the page shows:
    Decommission Status : Decommission in progress

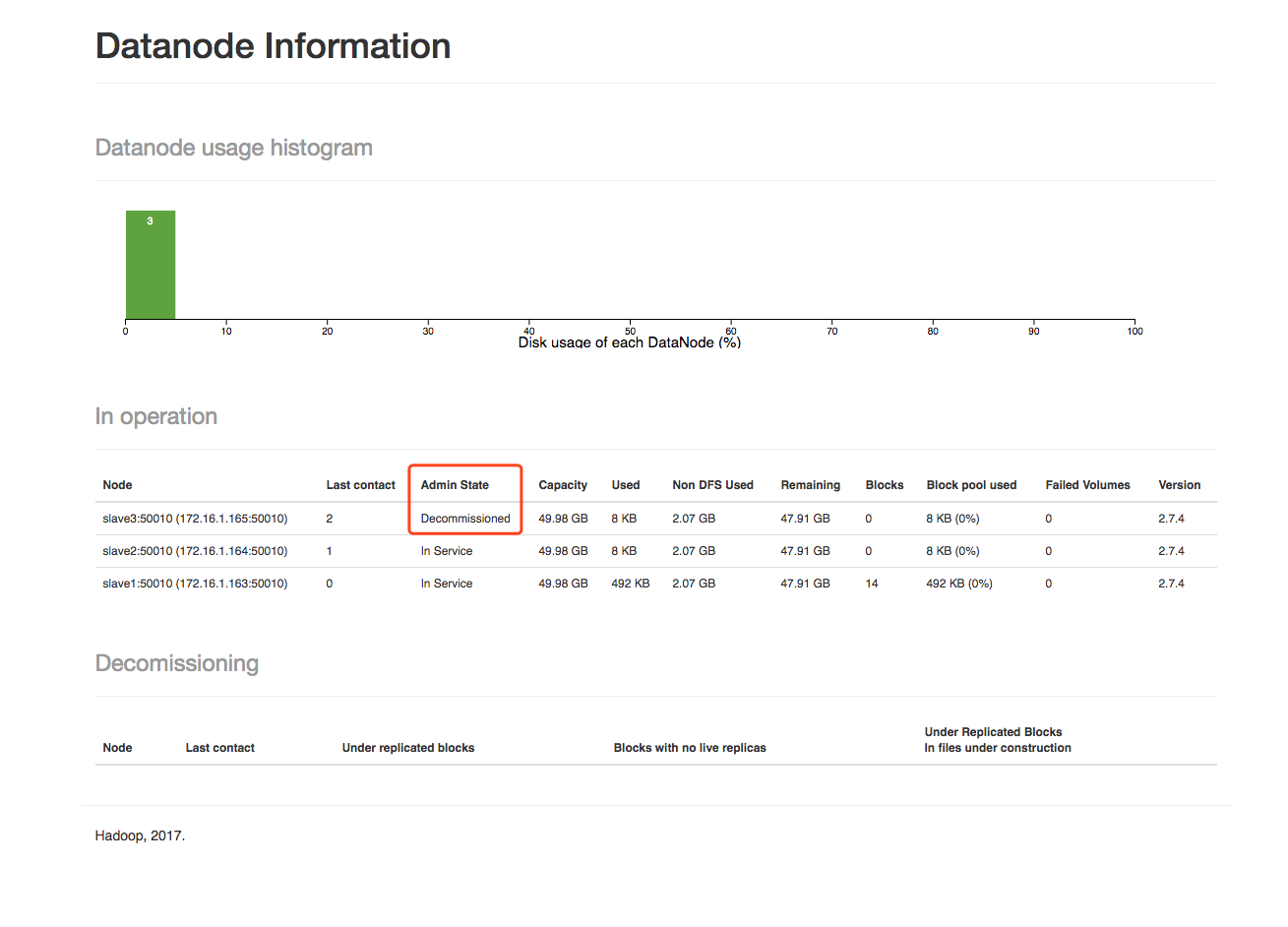

    ## Once decommissioning has finished, it shows:
    Decommission Status : Decommissioned

    ## View the node information from the command line
    [hadoop@master hadoop]$ hdfs dfsadmin -refreshNodes
    Refresh nodes successful
    [hadoop@master hadoop]$ hdfs dfsadmin -report
    Configured Capacity: 107321761792 (99.95 GB)
    Present Capacity: 102881239040 (95.82 GB)
    DFS Remaining: 102880718848 (95.82 GB)
    DFS Used: 520192 (508 KB)
    DFS Used%: 0.00%
    Under replicated blocks: 0
    Blocks with corrupt replicas: 0
    Missing blocks: 0
    Missing blocks (with replication factor 1): 0
    -------------------------------------------------
    Live datanodes (3):

    Name: 172.16.1.165:50010 (slave3)
    Hostname: slave3
    Decommission Status : Decommissioned ## this node has been taken out of service

    Name: 172.16.1.163:50010 (slave1)
    Hostname: slave1
    Decommission Status : Normal

    Name: 172.16.1.164:50010 (slave2)
    Hostname: slave2
    Decommission Status : Normal

    ## Stop the DataNode process on the decommissioned machine
    sbin/hadoop-daemon.sh stop datanode

    ## Restoring a removed node. To bring a decommissioned node back into the cluster:
    ## 1) Remove the node from the dfs-hosts.exclude file
    ## 2) Refresh the node list
    hdfs dfsadmin -refreshNodes
    ## 3) Restart the datanode process on the node being re-added
    ./sbin/hadoop-daemon.sh start datanode
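Step 1 of the restore procedure, removing the node from the exclude file, can be sketched as a small function. This assumes bash and one hostname per line in the file; `include_host` is a hypothetical name, not a Hadoop command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove HOST from an exclude file so the node may
# reconnect to the namenode after the next -refreshNodes.
include_host() {
    local host="$1" file="$2" tmp status
    tmp=$(mktemp) || return 1
    # Keep every line except the exact hostname. grep exits 1 when no line
    # is left over, which is fine here; only status 2 or higher is an error.
    grep -vxF -- "$host" "$file" > "$tmp"
    status=$?
    if [ "$status" -gt 1 ]; then
        rm -f "$tmp"
        return 1
    fi
    # Write back in place so the file keeps its owner and permissions.
    cat "$tmp" > "$file" && rm -f "$tmp"
}
```

After `include_host slave3 /usr/local/hadoop/etc/hadoop/dfs-hosts.exclude`, steps 2 and 3 are run exactly as listed above.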