@nalan90

2018-03-22T07:19:34.000000Z


k8s Cluster Deployment

Automated Operations

For deploying the etcd environment, see: etcd Cluster Setup

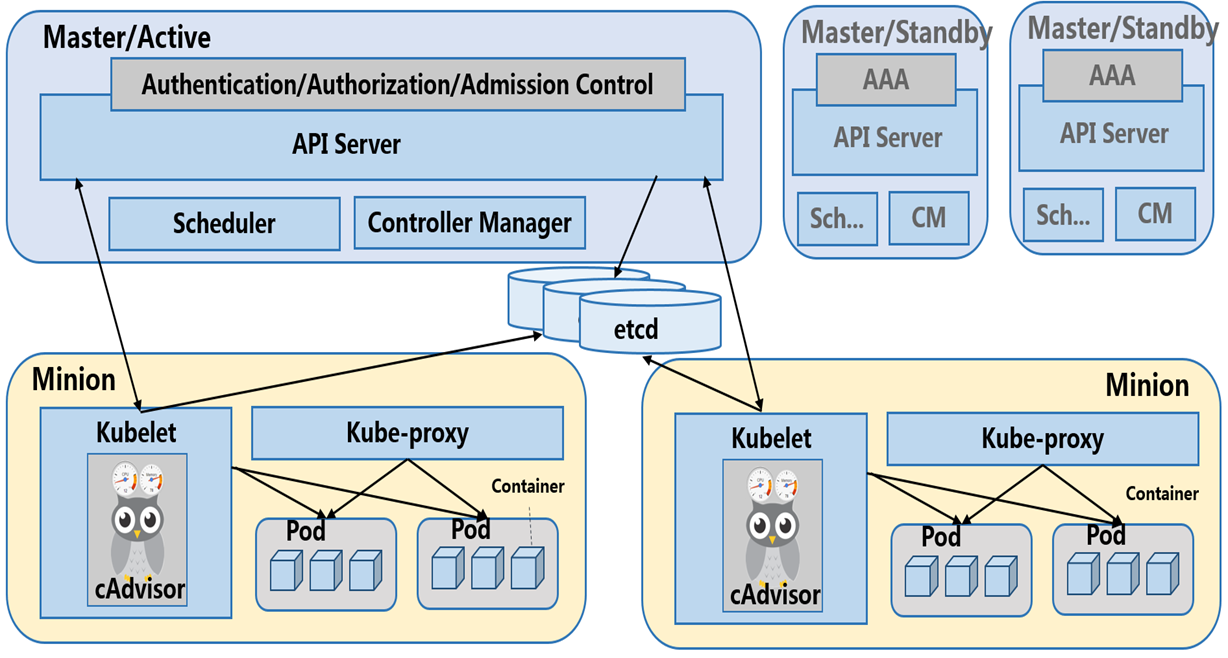

k8s architecture

Environment Preparation

- Servers
  - dev-161 (172.16.1.161) master + etcd
  - dev-162 (172.16.1.162) node + etcd
  - dev-163 (172.16.1.163) node + etcd
  - dev-164 (172.16.1.164) node
  - dev-165 (172.16.1.165) node
- Operating system
  - CentOS 7.3
- k8s
  - v1.8.9
- etcd version
  - v3.0 or later
- flannel
  - v0.7
- docker
  - docker 1.13.1
- Directory layout
  - etcd
    - /opt/data/etcd/data (data-dir)
    - /opt/data/etcd/wal (wal-dir)
    - /opt/data/etcd/logs (log-dir)
  - k8s
    - /opt/kubernetes/cfg (config)
    - /opt/kubernetes/bin (binary file)

- etcd

Software and Scripts

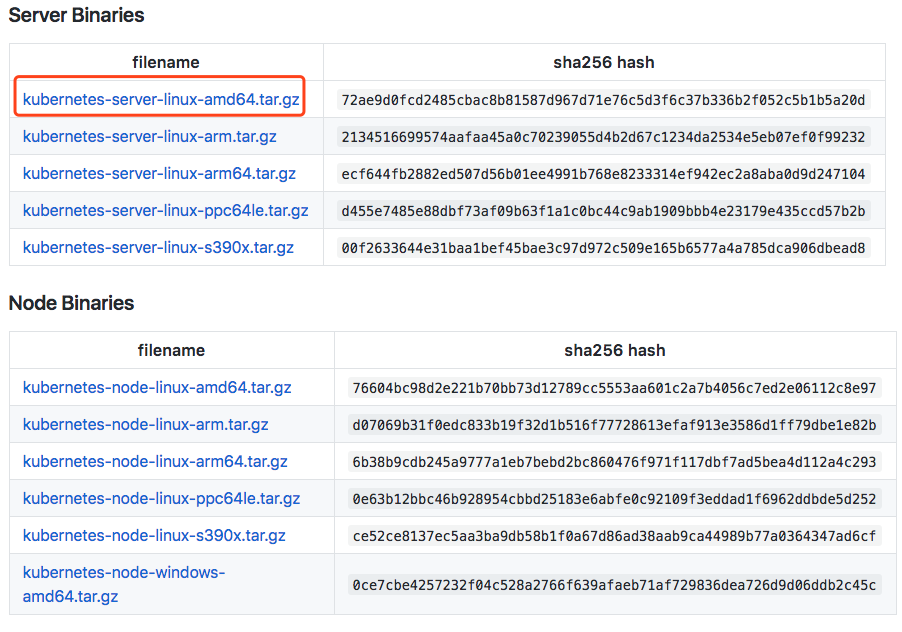

- binary file

  Contains all the binaries required to run the master and node components.

- shell(master)
  - apiserver: https://github.com/kubernetes/kubernetes/blob/master/cluster/centos/master/scripts/apiserver.sh
  - controller manager: https://github.com/kubernetes/kubernetes/blob/master/cluster/centos/master/scripts/controller-manager.sh
  - scheduler: https://github.com/kubernetes/kubernetes/blob/master/cluster/centos/master/scripts/scheduler.sh
- shell(node)

Directories and Software Installation

    ## Create the working directories on all hosts
    work:~ ys$ for item in 161 162 163 164 165; do ssh root@dev-$item 'mkdir -p /opt/kubernetes/{cfg,bin}'; done

    ## Install docker-ce (using the Aliyun docker-ce mirror)
    # Step 1: install the required system tools
    yum install -y yum-utils device-mapper-persistent-data lvm2
    # Step 2: add the package repository
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    # Step 3: refresh the cache and install Docker-CE
    yum makecache fast
    yum -y install docker-ce

    work:~ ys$ for item in 161 162 163 164 165; do ssh root@dev-$item 'yum install -y yum-utils device-mapper-persistent-data lvm2 && yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo && yum makecache fast && yum install -y docker-ce && systemctl enable docker'; done

    ## Install flannel
    work:~ ys$ for item in 161 162 163 164 165; do ssh root@dev-$item 'yum install -y flannel && systemctl enable flanneld'; done

    ## Disable swap
    work:~ ys$ for item in 161 162 163 164 165; do ssh root@dev-$item 'swapoff -a'; done
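The loops above all repeat the same host list. As a minimal sketch, they could be wrapped in one helper that previews the `ssh` commands before anything is executed. The names `HOSTS`, `DRY_RUN`, and `run_on_all` are assumptions made for this illustration and are not part of the original setup.

```shell
#!/bin/sh
# Host suffixes taken from the server list in this post.
HOSTS="161 162 163 164 165"

# Run one command on every host. By default (DRY_RUN=1) the ssh command
# is only printed; set DRY_RUN=0 to actually execute it.
run_on_all() {
  for item in $HOSTS; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      printf 'ssh root@dev-%s %s\n' "$item" "'$1'"
    else
      # Report a failing host but keep going with the remaining ones.
      ssh "root@dev-$item" "$1" || echo "dev-$item: command failed" >&2
    fi
  done
}

run_on_all 'swapoff -a'
```

With the default dry-run setting this prints one `ssh` line per host. Note that `swapoff -a` only lasts until the next reboot; any swap entry in `/etc/fstab` has to be removed or commented out separately to make it permanent.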

Master Node Deployment

    [root@dev-161 bin]# ll
    total 418592
    -rwxr-xr-x 1 root root 193631216 Mar 13 00:47 kube-apiserver
    -rwxr-xr-x 1 root root 128511681 Mar 13 00:47 kube-controller-manager
    -rwxr-xr-x 1 root root  52496673 Mar 13 00:48 kubectl
    -rwxr-xr-x 1 root root  53989846 Mar 13 00:47 kube-scheduler
    [root@dev-161 bin]# cp kube-* /opt/kubernetes/bin/
    [root@dev-161 bin]# ll /opt/kubernetes/bin/
    total 367324
    -rwxr-xr-x 1 root root 193631216 Mar 22 11:00 kube-apiserver
    -rwxr-xr-x 1 root root 128511681 Mar 22 11:00 kube-controller-manager
    -rwxr-xr-x 1 root root  53989846 Mar 22 11:00 kube-scheduler

- Modify apiserver.sh as follows:

    MASTER_ADDRESS=${1:-"172.16.1.161"}
    ETCD_SERVERS=${2:-"http://172.16.1.161:2379,http://172.16.1.162:2379,http://172.16.1.163:2379"}
    SERVICE_CLUSTER_IP_RANGE=${3:-"10.10.10.0/16"}
    ADMISSION_CONTROL=${4:-""}

    cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
    KUBE_LOGTOSTDERR="--logtostderr=true"
    KUBE_LOG_LEVEL="--v=4"
    KUBE_ETCD_SERVERS="--etcd-servers=${ETCD_SERVERS}"
    KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
    KUBE_API_PORT="--insecure-port=8080"
    NODE_PORT="--kubelet-port=10250"
    KUBE_ADVERTISE_ADDR="--advertise-address=${MASTER_ADDRESS}"
    KUBE_ALLOW_PRIV="--allow-privileged=false"
    KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=${SERVICE_CLUSTER_IP_RANGE}"
    KUBE_ADMISSION_CONTROL="--admission-control=${ADMISSION_CONTROL}"
    EOF

    KUBE_APISERVER_OPTS="   \${KUBE_LOGTOSTDERR}         \\
                            \${KUBE_LOG_LEVEL}           \\
                            \${KUBE_ETCD_SERVERS}        \\
                            \${KUBE_API_ADDRESS}         \\
                            \${KUBE_API_PORT}            \\
                            \${NODE_PORT}                \\
                            \${KUBE_ADVERTISE_ADDR}      \\
                            \${KUBE_ALLOW_PRIV}          \\
                            \${KUBE_SERVICE_ADDRESSES}   \\
                            \${KUBE_ADMISSION_CONTROL}"

    cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
    ExecStart=/opt/kubernetes/bin/kube-apiserver ${KUBE_APISERVER_OPTS}
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable kube-apiserver
    systemctl restart kube-apiserver
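The `\${...}` and `\\` sequences in the script are easy to misread. A small sketch of what they do: inside double quotes `\$` keeps a literal `$` and `\\` keeps a literal backslash, so the generated unit file still contains `${KUBE_...}` references (which systemd fills in from the `EnvironmentFile`) and trailing backslashes (which systemd treats as line continuations). The file name `demo.service`, the variable `DEMO_OPTS`, and the temp directory are made up for this illustration.

```shell
#!/bin/sh
# Write a throwaway unit file into a temp directory instead of /usr/lib/systemd.
tmpdir=$(mktemp -d)

# Inside double quotes, \$ yields a literal "$" and \\ yields a literal "\".
# The value therefore holds: ${KUBE_LOGTOSTDERR} \<newline>${KUBE_LOG_LEVEL}
DEMO_OPTS=" \${KUBE_LOGTOSTDERR} \\
\${KUBE_LOG_LEVEL}"

# The heredoc expands ${DEMO_OPTS} once; the "${...}" text inside its value
# is not expanded a second time, so it reaches the file unchanged.
cat <<EOF >"$tmpdir/demo.service"
[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver ${DEMO_OPTS}
EOF

cat "$tmpdir/demo.service"
```

The printed file shows the `ExecStart` line ending in a backslash, followed by a line with the untouched `${KUBE_LOG_LEVEL}` reference. The same pattern is used by the controller-manager, scheduler, kubelet, and proxy scripts.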

- Start apiserver

    ## Run the script
    [root@dev-161 shell]# sh apiserver.sh
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

    ## Start kube-apiserver
    [root@dev-161 shell]# systemctl start kube-apiserver

    ## Check the status
    [root@dev-161 shell]# systemctl status kube-apiserver
    ● kube-apiserver.service - Kubernetes API Server
       Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)
       Active: active (running) since Thu 2018-03-22 11:05:42 CST; 18s ago
         Docs: https://github.com/kubernetes/kubernetes
     Main PID: 6460 (kube-apiserver)
       Memory: 181.8M
       CGroup: /system.slice/kube-apiserver.service
               └─6460 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=http://172.16.1.161:2379,http://172.16.1.162:2379,http://172.16.1.163:2379 --insecure-bind-address=0.0.0.0 --insecure-port=8080 --kubelet-port=10250 --advertise-address=172.16.1.161 --allow-privileged=false --service-cluster-ip-range=10.10.10.0/16 --admission-control=

- Modify controller-manager.sh as follows:

    MASTER_ADDRESS=${1:-"172.16.1.161"}

    cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager
    KUBE_LOGTOSTDERR="--logtostderr=true"
    KUBE_LOG_LEVEL="--v=4"
    KUBE_MASTER="--master=${MASTER_ADDRESS}:8080"
    KUBE_LEADER_ELECT="--leader-elect"
    EOF

    KUBE_CONTROLLER_MANAGER_OPTS="  \${KUBE_LOGTOSTDERR} \\
                                    \${KUBE_LOG_LEVEL}   \\
                                    \${KUBE_MASTER}      \\
                                    \${KUBE_LEADER_ELECT}"

    cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
    ExecStart=/opt/kubernetes/bin/kube-controller-manager ${KUBE_CONTROLLER_MANAGER_OPTS}
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable kube-controller-manager
    systemctl restart kube-controller-manager

- Start the controller-manager service

    [root@dev-161 shell]# sh controller-manager.sh
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.
    [root@dev-161 shell]# systemctl start kube-controller-manager
    [root@dev-161 shell]# systemctl status kube-controller-manager
    ● kube-controller-manager.service - Kubernetes Controller Manager
       Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)
       Active: active (running) since Thu 2018-03-22 11:17:34 CST; 13s ago
         Docs: https://github.com/kubernetes/kubernetes
     Main PID: 6532 (kube-controller)
       Memory: 15.2M
       CGroup: /system.slice/kube-controller-manager.service
               └─6532 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=172.16.1.161:8080 --leader-elect

- Modify scheduler.sh as follows:

    MASTER_ADDRESS=${1:-"172.16.1.161"}

    cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
    KUBE_LOGTOSTDERR="--logtostderr=true"
    KUBE_LOG_LEVEL="--v=4"
    KUBE_MASTER="--master=${MASTER_ADDRESS}:8080"
    KUBE_LEADER_ELECT="--leader-elect"
    KUBE_SCHEDULER_ARGS=""
    EOF

    KUBE_SCHEDULER_OPTS="   \${KUBE_LOGTOSTDERR}     \\
                            \${KUBE_LOG_LEVEL}       \\
                            \${KUBE_MASTER}          \\
                            \${KUBE_LEADER_ELECT}    \\
                            \$KUBE_SCHEDULER_ARGS"

    cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
    ExecStart=/opt/kubernetes/bin/kube-scheduler ${KUBE_SCHEDULER_OPTS}
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable kube-scheduler
    systemctl restart kube-scheduler

- Start kube-scheduler

    [root@dev-161 shell]# sh scheduler.sh
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.
    [root@dev-161 shell]# systemctl start kube-scheduler
    [root@dev-161 shell]# systemctl status kube-scheduler
    ● kube-scheduler.service - Kubernetes Scheduler
       Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)
       Active: active (running) since Thu 2018-03-22 11:22:28 CST; 11s ago
         Docs: https://github.com/kubernetes/kubernetes
     Main PID: 6601 (kube-scheduler)
       Memory: 6.6M
       CGroup: /system.slice/kube-scheduler.service
               └─6601 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=172.16.1.161:8080 --leader-elect

Node Deployment (using dev-162 as an example)

    [root@dev-162 bin]# ll
    total 181948
    -rwxr-xr-x 1 root root 138166656 Mar 13 00:47 kubelet
    -rwxr-xr-x 1 root root  48144192 Mar 13 00:47 kube-proxy
    [root@dev-162 bin]# cp kubelet kube-proxy /opt/kubernetes/bin/
    [root@dev-162 bin]# ll /opt/kubernetes/bin/
    total 181948
    -rwxr-xr-x 1 root root 138166656 Mar 22 11:33 kubelet
    -rwxr-xr-x 1 root root  48144192 Mar 22 11:33 kube-proxy
    [root@dev-162 bin]# cd ../shell/
    [root@dev-162 shell]# ll
    total 12
    -rwxr-xr-x 1 root root 2387 Mar 20 11:30 flannel.sh
    -rwxr-xr-x 1 root root 3013 Mar 20 11:30 kubelet.sh
    -rwxr-xr-x 1 root root 1719 Mar 20 11:30 proxy.sh

- Configure the network

    [root@dev-162 ~]# etcdctl mk /coreos.com/network/config "{\"Network\": \"10.10.0.0/16\", \"SubnetLen\": 24, \"Backend\": { \"Type\": \"vxlan\" } }"
    {"Network": "10.10.0.0/16", "SubnetLen": 24, "Backend": { "Type": "vxlan" } }
    [root@dev-161 shell]# etcdctl get /coreos.com/network/config
    {"Network": "10.10.0.0/16", "SubnetLen": 24, "Backend": { "Type": "vxlan" } }
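Kubernetes expects the service IP range and the pod network to be disjoint, so it can be worth checking the flannel `Network` against the `--service-cluster-ip-range` passed to kube-apiserver. The following is a minimal sketch of an IPv4 CIDR overlap check in plain POSIX shell; the function names `ip_to_int`, `cidr_range`, and `cidrs_overlap` are hypothetical helpers written for this illustration.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address into a 32-bit integer.
# The subshell body ( ... ) keeps the IFS change local to the function.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Print "first last": the lowest and highest address (as integers) in a CIDR.
cidr_range() (
  ip=${1%/*}
  len=${1#*/}
  addr=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  first=$(( addr & mask ))
  last=$(( first | (mask ^ 0xFFFFFFFF) ))
  echo "$first $last"
)

# Succeed (exit status 0) when the two CIDR ranges share at least one address.
cidrs_overlap() (
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Values used in this post: service range vs. flannel network.
if cidrs_overlap 10.10.10.0/16 10.10.0.0/16; then
  echo "overlap"
else
  echo "disjoint"
fi
```

For the two values used in this post the check prints `overlap`, because a `/16` mask reduces `10.10.10.0/16` to the same range as `10.10.0.0/16`. If that matters for your cluster, choosing a separate range for one of the two would avoid the clash.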

- Modify /etc/sysconfig/flanneld as follows:

    FLANNEL_ETCD_ENDPOINTS="http://172.16.1.161:2379,http://172.16.1.162:2379,http://172.16.1.163:2379"
    FLANNEL_ETCD_PREFIX="/coreos.com/network"

- Start flanneld and docker

    [root@dev-162 ~]# systemctl start flanneld
    [root@dev-162 ~]# systemctl status flanneld
    ● flanneld.service - Flanneld overlay address etcd agent
       Loaded: loaded (/usr/lib/systemd/system/flanneld.service; enabled; vendor preset: disabled)
       Active: active (running) since Thu 2018-03-22 13:14:19 CST; 2min 52s ago
      Process: 6493 ExecStartPost=/usr/libexec/flannel/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker (code=exited, status=0/SUCCESS)
     Main PID: 6485 (flanneld)
       Memory: 4.9M
       CGroup: /system.slice/flanneld.service
               └─6485 /usr/bin/flanneld -etcd-endpoints=http://172.16.1.161:2379,http://172.16.1.162:2379,http://172.16.1.163:2379 -etcd-prefix=/coreos.com/network

- Inspect the configuration generated by flannel

    [root@dev-162 flannel]# cat /run/flannel/docker
    DOCKER_OPT_BIP="--bip=10.10.63.1/24"
    DOCKER_OPT_IPMASQ="--ip-masq=true"
    DOCKER_OPT_MTU="--mtu=1450"
    DOCKER_NETWORK_OPTIONS=" --bip=10.10.63.1/24 --ip-masq=true --mtu=1450"
    [root@dev-162 flannel]# cat /run/flannel/subnet.env
    FLANNEL_NETWORK=10.10.0.0/16
    FLANNEL_SUBNET=10.10.63.1/24
    FLANNEL_MTU=1450
    FLANNEL_IPMASQ=false
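The relationship between the two files can be sketched as follows. This is a simplified illustration of how the docker options relate to `subnet.env`, not the actual `mk-docker-opts.sh` script; it works on a copy of the values in a temp directory, and the variable `ipmasq` is an assumption introduced here.

```shell
#!/bin/sh
# Recreate subnet.env in a temp directory with the values shown in this post.
tmpdir=$(mktemp -d)
cat <<'EOF' >"$tmpdir/subnet.env"
FLANNEL_NETWORK=10.10.0.0/16
FLANNEL_SUBNET=10.10.63.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false
EOF

# Load the FLANNEL_* variables into the current shell.
. "$tmpdir/subnet.env"

# docker masquerades only when flannel is not already doing it,
# so the docker flag is the inverse of FLANNEL_IPMASQ.
if [ "$FLANNEL_IPMASQ" = "true" ]; then
  ipmasq=false
else
  ipmasq=true
fi

# docker0 takes the node's flannel subnet as its bridge IP and the flannel MTU.
DOCKER_NETWORK_OPTIONS=" --bip=$FLANNEL_SUBNET --ip-masq=$ipmasq --mtu=$FLANNEL_MTU"
echo "DOCKER_NETWORK_OPTIONS=\"$DOCKER_NETWORK_OPTIONS\""
```

The printed line matches the `DOCKER_NETWORK_OPTIONS` value in `/run/flannel/docker`, which is why dockerd has to be started with `$DOCKER_NETWORK_OPTIONS` for containers to land on the flannel subnet.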

- Modify docker.service

    ## Add --log-level=error $DOCKER_NETWORK_OPTIONS
    ExecStart=/usr/bin/dockerd --log-level=error $DOCKER_NETWORK_OPTIONS

    [root@dev-162 flannel]# systemctl daemon-reload
    [root@dev-162 flannel]# systemctl start docker
    [root@dev-162 flannel]# systemctl status docker
    ● docker.service - Docker Application Container Engine
       Loaded: loaded (/usr/lib/systemd/system/docker.service; disabled; vendor preset: disabled)
      Drop-In: /usr/lib/systemd/system/docker.service.d
               └─flannel.conf
       Active: active (running) since Thu 2018-03-22 13:32:15 CST; 8s ago
         Docs: https://docs.docker.com
     Main PID: 9996 (dockerd)
       Memory: 23.9M
       CGroup: /system.slice/docker.service
               ├─ 9996 /usr/bin/dockerd --log-level=error --bip=10.10.63.1/24 --ip-masq=true --mtu=1450
               └─10001 docker-containerd --config /var/run/docker/containerd/containerd.toml

- Modify kubelet.sh as follows:

    MASTER_ADDRESS=${1:-"172.16.1.161"}
    NODE_ADDRESS=${2:-"172.16.1.162"}
    DNS_SERVER_IP=${3:-"10.10.10.2"}
    DNS_DOMAIN=${4:-"cluster.local"}
    KUBECONFIG_DIR=${KUBECONFIG_DIR:-/opt/kubernetes/cfg}

    # Generate a kubeconfig file
    cat <<EOF > "${KUBECONFIG_DIR}/kubelet.kubeconfig"
    apiVersion: v1
    kind: Config
    clusters:
      - cluster:
          server: http://${MASTER_ADDRESS}:8080/
        name: local
    contexts:
      - context:
          cluster: local
        name: local
    current-context: local
    EOF

    cat <<EOF >/opt/kubernetes/cfg/kubelet
    KUBE_LOGTOSTDERR="--logtostderr=true"
    KUBE_LOG_LEVEL="--v=4"
    NODE_ADDRESS="--address=${NODE_ADDRESS}"
    NODE_PORT="--port=10250"
    NODE_HOSTNAME="--hostname-override=${NODE_ADDRESS}"
    KUBELET_KUBECONFIG="--kubeconfig=${KUBECONFIG_DIR}/kubelet.kubeconfig"
    KUBE_ALLOW_PRIV="--allow-privileged=false"
    KUBELET__DNS_IP="--cluster-dns=${DNS_SERVER_IP}"
    KUBELET_DNS_DOMAIN="--cluster-domain=${DNS_DOMAIN}"
    KUBELET_ARGS=""
    EOF

    KUBELET_OPTS="      \${KUBE_LOGTOSTDERR}     \\
                        \${KUBE_LOG_LEVEL}       \\
                        \${NODE_ADDRESS}         \\
                        \${NODE_PORT}            \\
                        \${NODE_HOSTNAME}        \\
                        \${KUBELET_KUBECONFIG}   \\
                        \${KUBE_ALLOW_PRIV}      \\
                        \${KUBELET__DNS_IP}      \\
                        \${KUBELET_DNS_DOMAIN}   \\
                        \$KUBELET_ARGS"

    cat <<EOF >/usr/lib/systemd/system/kubelet.service
    [Unit]
    Description=Kubernetes Kubelet
    After=docker.service
    Requires=docker.service

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kubelet
    ExecStart=/opt/kubernetes/bin/kubelet ${KUBELET_OPTS}
    Restart=on-failure
    KillMode=process

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable kubelet
    systemctl restart kubelet
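The defaults in the script are tailored to dev-162. Because each value is read with the `${N:-default}` form, the same script can serve the other nodes by passing positional arguments instead of editing the file. The sketch below only demonstrates that expansion; `show_args` is a hypothetical stand-in for the first two lines of kubelet.sh.

```shell
#!/bin/sh
# Mirrors the first two assignments of kubelet.sh: use the positional
# argument when it is given and non-empty, otherwise fall back to the default.
show_args() {
  MASTER_ADDRESS=${1:-"172.16.1.161"}
  NODE_ADDRESS=${2:-"172.16.1.162"}
  echo "master=$MASTER_ADDRESS node=$NODE_ADDRESS"
}

show_args                              # no arguments: the dev-162 defaults
show_args 172.16.1.161 172.16.1.163    # explicit values for dev-163
```

On dev-163 this would correspond to running `sh kubelet.sh 172.16.1.161 172.16.1.163`; proxy.sh takes its master and node addresses in the same order.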

- Start kubelet

    ## Handling the "File exists" error
    [root@dev-163 shell]# sh kubelet.sh
    Failed to execute operation: File exists
    [root@dev-163 shell]# systemctl disable kubelet
    Removed symlink /etc/systemd/system/multi-user.target.wants/kubelet.service.

    [root@dev-162 shell]# sh kubelet.sh
    Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
    [root@dev-162 shell]# systemctl start kubelet
    [root@dev-162 shell]# systemctl status kubelet
    ● kubelet.service - Kubernetes Kubelet
       Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
       Active: active (running) since Thu 2018-03-22 13:33:18 CST; 41s ago
     Main PID: 10129 (kubelet)
       Memory: 22.7M
       CGroup: /system.slice/kubelet.service
               └─10129 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --address=172.16.1.162 --port=10250 --hostname-override=172.16.1.162 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --allow-privileged=false --cluster-dns=10.10.10.2 --cluster-domain=cluster.local

- Modify proxy.sh as follows:

    MASTER_ADDRESS=${1:-"172.16.1.161"}
    NODE_ADDRESS=${2:-"172.16.1.162"}

    cat <<EOF >/opt/kubernetes/cfg/kube-proxy
    KUBE_LOGTOSTDERR="--logtostderr=true"
    KUBE_LOG_LEVEL="--v=4"
    NODE_HOSTNAME="--hostname-override=${NODE_ADDRESS}"
    KUBE_MASTER="--master=http://${MASTER_ADDRESS}:8080"
    EOF

    KUBE_PROXY_OPTS="   \${KUBE_LOGTOSTDERR} \\
                        \${KUBE_LOG_LEVEL}   \\
                        \${NODE_HOSTNAME}    \\
                        \${KUBE_MASTER}"

    cat <<EOF >/usr/lib/systemd/system/kube-proxy.service
    [Unit]
    Description=Kubernetes Proxy
    After=network.target

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
    ExecStart=/opt/kubernetes/bin/kube-proxy ${KUBE_PROXY_OPTS}
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable kube-proxy
    systemctl restart kube-proxy

- Start kube-proxy

    [root@dev-162 shell]# sh proxy.sh
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.
    [root@dev-162 shell]# systemctl start kube-proxy
    [root@dev-162 shell]# systemctl status kube-proxy
    ● kube-proxy.service - Kubernetes Proxy
       Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)
       Active: active (running) since Thu 2018-03-22 13:36:01 CST; 15s ago
     Main PID: 10266 (kube-proxy)
       Memory: 7.2M
       CGroup: /system.slice/kube-proxy.service
               ‣ 10266 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=172.16.1.162 --master=http://172.16.1.161:8080

Final Result

    [root@dev-161 shell]# etcdctl ls /coreos.com/network/subnets
    /coreos.com/network/subnets/10.10.63.0-24
    /coreos.com/network/subnets/10.10.26.0-24
    /coreos.com/network/subnets/10.10.44.0-24
    /coreos.com/network/subnets/10.10.27.0-24

    [root@dev-161 shell]# kubectl get nodes
    NAME           STATUS    ROLES     AGE       VERSION
    172.16.1.162   Ready     <none>    2h        v1.8.9
    172.16.1.163   Ready     <none>    15m       v1.8.9
    172.16.1.164   Ready     <none>    9m        v1.8.9
    172.16.1.165   Ready     <none>    5m        v1.8.9

    [root@dev-162 ~]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         172.16.1.1      0.0.0.0         UG    0      0        0 ens3
    10.10.0.0       0.0.0.0         255.255.0.0     U     0      0        0 flannel.1
    10.10.63.0      0.0.0.0         255.255.255.0   U     0      0        0 docker0
    169.254.0.0     0.0.0.0         255.255.0.0     U     1002   0        0 ens3
    172.16.0.0      0.0.0.0         255.255.0.0     U     0      0        0 ens3
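When more nodes are added, eyeballing the node list gets tedious. A minimal sketch of a filter that lists nodes whose status is not `Ready` is shown below; it reads `kubectl get nodes` style text on stdin so it can be tried without a cluster. The function name `not_ready_nodes` is hypothetical, and the sample input is copied from the node list in this post.

```shell
#!/bin/sh
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Input is `kubectl get nodes` style text; line 1 is the header and is skipped.
# A status such as "Ready,SchedulingDisabled" is also reported by this check.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input taken from the node list in this post.
sample='NAME STATUS ROLES AGE VERSION
172.16.1.162 Ready <none> 2h v1.8.9
172.16.1.163 Ready <none> 15m v1.8.9
172.16.1.164 Ready <none> 9m v1.8.9
172.16.1.165 Ready <none> 5m v1.8.9'

bad=$(printf '%s\n' "$sample" | not_ready_nodes)
if [ -z "$bad" ]; then
  echo "all nodes Ready"
else
  echo "not ready: $bad"
fi
```

Against a live cluster the same filter would be used as `kubectl get nodes | not_ready_nodes`.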

Tips

- Pull the pause image

    ## The pause image is hosted in Google's registry, which is hard to reach from networks in mainland China, so run the following on every node:
    docker pull registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0
    docker tag registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 gcr.io/google_containers/pause-amd64:3.0

- Change the Docker registry mirror

    [root@dev-161 nginx]# vim /usr/lib/systemd/system/docker.service
    ExecStart=/usr/bin/dockerd --registry-mirror=https://registry.docker-cn.com