@lokvahkoor

2020-07-14T12:45:38.000000Z

Dive into DL Reading Notes: 3 Deep Learning Basics

AI

3.1-3.3 Linear Regression

https://zh.d2l.ai/chapter_deep-learning-basics/linear-regression.html#

- Linear Regression solves regression problems; Softmax Regression solves classification problems

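- Linear regression model: $\hat{y} = \mathbf{w}^\top \mathbf{x} + b$, where $\mathbf{x}$ is the feature vector, $\mathbf{w}$ the weights, and $b$ the bias

- Linear regression loss function (per-sample error): $\ell^{(i)}(\mathbf{w}, b) = \frac{1}{2}\left(\hat{y}^{(i)} - y^{(i)}\right)^2$

- The constant 1/2 makes the coefficient equal to 1 after differentiating the squared term, which keeps the form slightly simpler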

- Among the optimization algorithms that find numerical solutions, mini-batch stochastic gradient descent is widely used in deep learning
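To make the role of the 1/2 factor in the per-sample loss concrete, here is a minimal sketch. The tensor values and the names `w`, `b`, `x`, `y` are illustrative assumptions, not taken from the notes. It computes a prediction, evaluates the squared loss with the 1/2 factor, and uses autograd to check that the derivative with respect to the prediction is just the residual (prediction minus label).

```python
import torch

# Illustrative values (assumptions for this sketch)
w = torch.tensor([2.0, -3.4])
b = torch.tensor(4.2)
x = torch.tensor([1.0, 2.0])
y = torch.tensor(1.0)

# Prediction of the linear model; detach so y_hat becomes a leaf we can differentiate with respect to
y_hat = (torch.dot(w, x) + b).detach().requires_grad_(True)

# Per-sample squared loss with the 1/2 factor
loss = 0.5 * (y_hat - y) ** 2
loss.backward()

# With the 1/2 factor, d(loss)/d(y_hat) should equal y_hat - y
residual = (y_hat - y).detach()
print(y_hat.grad, residual)
```

Without the 1/2 factor the same check would give twice the residual, which is the only difference the constant makes.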

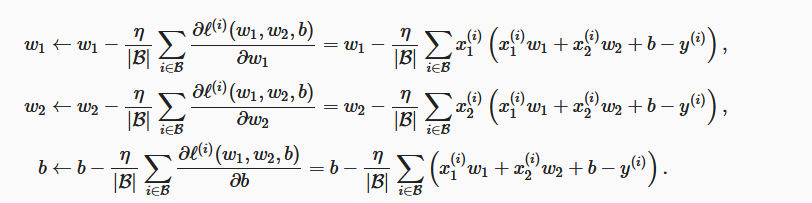

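- Parameter update rule: $\mathbf{w} \leftarrow \mathbf{w} - \frac{\eta}{|\mathcal{B}|} \sum_{i \in \mathcal{B}} \nabla_{\mathbf{w}} \ell^{(i)}(\mathbf{w}, b)$, and likewise for $b$; here $\ell^{(i)}$ is the loss on sample $i$ and $\eta$ is the learning rate

- Here $\mathcal{B}$ (a calligraphic capital B) is the mini-batch, and $|\mathcal{B}|$ is the batch size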
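The mini-batch update can be sketched by hand as follows. This is only an illustration under stated assumptions: the synthetic data, the true parameters `true_w` and `true_b`, the learning rate, and the helper `squared_loss` are all made up for the example. The loss is summed over each mini-batch, so the gradient is divided by the batch size before the step.

```python
import torch

torch.manual_seed(0)

# Synthetic data (assumed for this sketch): y = 2*x1 - 3.4*x2 + 4.2 + noise
true_w = torch.tensor([2.0, -3.4])
true_b = 4.2
features = torch.randn(1000, 2)
labels = features @ true_w + true_b + 0.01 * torch.randn(1000)

w = torch.zeros(2, requires_grad=True)
b = torch.zeros(1, requires_grad=True)
lr, batch_size = 0.03, 10


def squared_loss(y_hat, y):
    """Per-sample squared loss with the 1/2 factor."""
    return 0.5 * (y_hat - y) ** 2


for epoch in range(3):
    perm = torch.randperm(len(features))           # shuffle sample indices
    for start in range(0, len(features), batch_size):
        idx = perm[start:start + batch_size]       # indices of the current mini-batch
        X, y = features[idx], labels[idx]
        loss = squared_loss(X @ w + b, y).sum()    # summed over the mini-batch
        loss.backward()
        with torch.no_grad():
            for param in (w, b):
                # Dividing the summed gradient by the batch size gives the average gradient
                param -= lr * param.grad / len(idx)
                param.grad.zero_()

final_loss = squared_loss(features @ w + b, labels).mean().item()
print(w.detach(), b.detach(), final_loss)
```

After a few epochs the learned `w` and `b` should be close to the assumed true parameters.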

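Broadcasting in PyTorch: when an element-wise operation is applied to two Tensors of different shapes, broadcasting may be triggered. Elements are first replicated as needed so that both Tensors have the same shape, and the operation is then applied element-wise.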

- For example:

      import torch

      x = torch.arange(1, 3).view(1, 2)
      print(x)
      y = torch.arange(1, 4).view(3, 1)
      print(y)
      print(x + y)

- Output:

      tensor([[1, 2]])
      tensor([[1],
              [2],
              [3]])
      tensor([[2, 3],
              [3, 4],
              [4, 5]])
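The "replicate, then operate element-wise" description can be checked with a short sketch that expands both operands to the common shape explicitly. The variable names `x_expanded`, `y_expanded`, `broadcast_sum`, and `explicit_sum` are introduced here for illustration. Note that `expand` returns a view and does not copy memory, so the replication is conceptual.

```python
import torch

x = torch.arange(1, 3).view(1, 2)   # shape (1, 2)
y = torch.arange(1, 4).view(3, 1)   # shape (3, 1)

# Explicitly expand both operands to the common shape (3, 2)
x_expanded = x.expand(3, 2)
y_expanded = y.expand(3, 2)

broadcast_sum = x + y                     # broadcasting handles the shapes implicitly
explicit_sum = x_expanded + y_expanded    # same operation with shapes matched by hand

print(broadcast_sum.shape)
print(torch.equal(broadcast_sum, explicit_sum))
```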

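- Note that when updating parameters with mini-batch gradient descent, the gradient must be divided by batch_size (when the loss is summed over the mini-batch, param.grad holds the sum of the per-sample gradients):

      param.data -= lr * param.grad / batch_size

- Use the data package provided by PyTorch to read data:

      import torch.utils.data as Data  # `data` is often used as a variable name, so the module is imported as Data

      batch_size = 10
      # Combine the features and labels of the training data
      # (features and labels are assumed to be tensors defined earlier)
      dataset = Data.TensorDataset(features, labels)
      # Read random mini-batches
      data_iter = Data.DataLoader(dataset, batch_size, shuffle=True)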
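As a rough illustration of what the data iterator yields, the sketch below builds it from placeholder tensors and pulls a single mini-batch. The random `features` and `labels` and their sizes are assumptions made for this example.

```python
import torch
import torch.utils.data as Data

torch.manual_seed(0)

# Placeholder data (assumed): 1000 samples with 2 features each
features = torch.randn(1000, 2)
labels = torch.randn(1000)

batch_size = 10
dataset = Data.TensorDataset(features, labels)
data_iter = Data.DataLoader(dataset, batch_size, shuffle=True)

# Each iteration yields one mini-batch as a (features, labels) pair
X, y = next(iter(data_iter))
print(X.shape, y.shape)

# Number of mini-batches per epoch
num_batches = len(data_iter)
print(num_batches)
```

With `shuffle=True` the samples are reshuffled at the start of every pass over `data_iter`.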

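Defining the model concisely with PyTorch:

Full definition:

    from torch import nn

    class LinearNet(nn.Module):
        def __init__(self, n_feature):
            super(LinearNet, self).__init__()
            self.linear = nn.Linear(n_feature, 1)

        # forward defines the forward pass
        def forward(self, x):
            y = self.linear(x)
            return y

    net = LinearNet(num_inputs)  # num_inputs: number of input features, defined earlier
    print(net)  # print shows the structure of the network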
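A short usage sketch for such a module, assuming two input features (`num_inputs = 2` is an assumption for the example): it lists the learnable parameters with their shapes and runs a forward pass on a random batch.

```python
import torch
from torch import nn


class LinearNet(nn.Module):
    def __init__(self, n_feature):
        super().__init__()
        self.linear = nn.Linear(n_feature, 1)

    def forward(self, x):
        return self.linear(x)


num_inputs = 2  # assumed number of input features
net = LinearNet(num_inputs)

# Learnable parameters, keyed by name, with their shapes
param_shapes = {name: tuple(p.shape) for name, p in net.named_parameters()}
print(param_shapes)

# Forward pass on a random batch of 5 samples
X = torch.randn(5, num_inputs)
output = net(X)
print(output.shape)
```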

Quick definition with nn.Sequential:

    # Style 1
    net = nn.Sequential(
        nn.Linear(num_inputs, 1)
        # other layers can be passed in here as well
    )

    # Style 2
    net = nn.Sequential()
    net.add_module('linear', nn.Linear(num_inputs, 1))
    # net.add_module ......

    # Style 3
    from collections import OrderedDict
    net = nn.Sequential(OrderedDict([
        ('linear', nn.Linear(num_inputs, 1))
        # ......
    ]))
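The three nn.Sequential styles differ mainly in how the layers are named. A minimal sketch that compares them is shown below; `num_inputs = 2` and the names `net_a`, `net_b`, `net_c` are assumptions for illustration.

```python
from collections import OrderedDict

import torch
from torch import nn

num_inputs = 2  # assumed number of input features

net_a = nn.Sequential(nn.Linear(num_inputs, 1))

net_b = nn.Sequential()
net_b.add_module('linear', nn.Linear(num_inputs, 1))

net_c = nn.Sequential(OrderedDict([('linear', nn.Linear(num_inputs, 1))]))

# Unnamed layers are registered under their index; named layers keep their name
names_a = [name for name, _ in net_a.named_children()]
names_b = [name for name, _ in net_b.named_children()]
names_c = [name for name, _ in net_c.named_children()]
print(names_a, names_b, names_c)

# All three map a batch of shape (batch, num_inputs) to shape (batch, 1)
X = torch.randn(4, num_inputs)
out_shapes = [tuple(net(X).shape) for net in (net_a, net_b, net_c)]
print(out_shapes)
```

Named layers can be reached as attributes (for example `net_b.linear`), while index access such as `net_a[0]` works in all three cases.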

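The torch.utils.data module provides tools for data processing, the torch.nn module defines a large number of neural network layers, the torch.nn.init module defines various initialization methods, and the torch.optim module provides many commonly used optimization algorithms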
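How these four modules fit together can be sketched as a small training loop for linear regression. The synthetic data, the hyperparameters, and the names `true_w`, `true_b`, `loss_fn`, `learned_w`, and `learned_b` are assumptions made for this illustration. Because `nn.MSELoss` averages over the batch by default, no manual division by the batch size is needed here.

```python
import torch
import torch.utils.data as Data
from torch import nn, optim
from torch.nn import init

torch.manual_seed(0)

# Synthetic data (assumed for this sketch)
num_inputs = 2
true_w = torch.tensor([2.0, -3.4])
true_b = 4.2
features = torch.randn(1000, num_inputs)
labels = features @ true_w + true_b + 0.01 * torch.randn(1000)

# torch.utils.data: mini-batch reading
dataset = Data.TensorDataset(features, labels)
data_iter = Data.DataLoader(dataset, batch_size=10, shuffle=True)

# torch.nn: model definition
net = nn.Sequential(nn.Linear(num_inputs, 1))

# torch.nn.init: parameter initialization
init.normal_(net[0].weight, mean=0.0, std=0.01)
init.constant_(net[0].bias, 0.0)

# torch.nn loss and torch.optim optimizer
loss_fn = nn.MSELoss()  # mean over the batch by default
optimizer = optim.SGD(net.parameters(), lr=0.03)

for epoch in range(3):
    for X, y in data_iter:
        loss = loss_fn(net(X), y.view(-1, 1))
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

learned_w = net[0].weight.detach().view(-1)
learned_b = net[0].bias.detach().item()
print(learned_w, learned_b)
```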