@Matrixzhu

2021-08-14T20:52:16.000000Z

Some studies on ML-based animal behaviour analysis

- Some studies on ML-based animal behaviour analysis

- 0.0 Introduction

- 1.0 Pose estimation and behavior classification of broiler chickens based on deep neural networks

- 2.0 Assessing machine learning classifiers for the detection of animals’ behavior using depth-based tracking

- 3.0 Multiperson Activity Recognition Based on Bone Keypoints Detection

- 4.0 Summary

0.0 Introduction

In this note I introduce several papers that apply machine learning methods to analyse the behaviour of humans and animals. I focus on which features are extracted and which models are applied for pose classification or behaviour analysis. Some of my personal ideas for our project are in the summary section.

1.0 Pose estimation and behavior classification of broiler chickens based on deep neural networks

1.1 Keywords:

- research goal: classify chickens into 8 poses, e.g. standing, walking, running

- feature extraction method: DeepLabCut (estimates the chicken skeleton)

- classification method: naive Bayes

1.2 Skeleton extraction and preprocessing

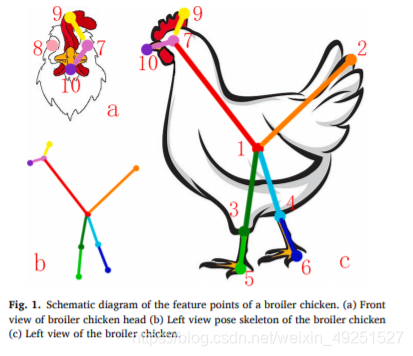

- This paper uses the same pose estimation tool as I am using. The tool applies transfer learning with a ResNet backbone, which can produce a usable model from on the order of 100 labeled frames. The figure above shows an example skeleton.

- Several preprocessing steps are applied to the skeleton graph:

- The chicken is flipped so that its head points to the right.

- The skeleton is centered and standardized: node 1 is always placed at coordinate (960, 540), and the whole graph is scaled to a common size about node 1.

- The behavioural postures of the chicken are expanded, and a linear difference is taken for each behavioural feature point.
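The flip, centering, and scaling steps above can be sketched as follows. This is a minimal sketch, not the paper's implementation: `head_idx`, `anchor_idx`, and `TARGET_SIZE` are hypothetical names and values, and "same size" is assumed to mean that the farthest node lies at a fixed distance from node 1.

```python
import math

ANCHOR = (960.0, 540.0)   # target position of node 1, as stated in the notes
TARGET_SIZE = 200.0       # hypothetical common size in pixels (assumption)

def normalize_skeleton(nodes, head_idx, anchor_idx):
    """Return a flipped, scaled, and centered copy of a list of (x, y) nodes.

    head_idx and anchor_idx are hypothetical indices, not taken from the paper.
    """
    ax, ay = nodes[anchor_idx]
    # 1. Translate so that the anchor node sits at the origin.
    pts = [(x - ax, y - ay) for x, y in nodes]
    # 2. Mirror horizontally if the head lies left of the mean x position.
    mean_x = sum(x for x, _ in pts) / len(pts)
    if pts[head_idx][0] < mean_x:
        pts = [(-x, y) for x, y in pts]
    # 3. Scale so that the farthest node is TARGET_SIZE away from the anchor.
    radius = max(math.hypot(x, y) for x, y in pts)
    scale = TARGET_SIZE / radius if radius > 0 else 1.0
    # 4. Move the anchor node to (960, 540).
    return [(x * scale + ANCHOR[0], y * scale + ANCHOR[1]) for x, y in pts]

skeleton = [(100, 100), (200, 100), (300, 120)]
normalized = normalize_skeleton(skeleton, head_idx=0, anchor_idx=1)
```

Scaling about the anchor before the final translation keeps node 1 fixed, which matches the description that the graph is zoomed around node 1.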

1.3 Feature selection (input vector for the later classifier model)

- This part is based on Zhuang et al., 2018, an earlier work by the same group of authors.

- feature points: the definition is vague; they seem to be the coordinates of the skeleton nodes

- connection lines: the lines between skeleton nodes

- skeleton angle:

According to the paper: "When the chicken is healthy, it is standing, and the angle β between the skeleton branch and the horizontal plane is relatively large. When the chicken is dispirited, its skeleton may be in an approximately horizontal state, in which case the angle β is relatively small".

- concavity:

According to the paper: "There is a concave structure in the middle of a healthy broiler chicken’s head and cocktail when standing, but a sick broiler chicken’s head sags and the upper back arches due to the slump".

- shape features:

- elongation: the ratio of height to width of the circumscribed rectangle.

- area-linear rate: the ratio of the chicken's contour area to its circumference.

- circularity: the extent to which the contour of the chicken is close to being circular.
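The skeleton angle and the shape features above can be sketched as follows. This is a minimal sketch under stated assumptions: the contour is a closed polygon given as ordered (x, y) vertices, the circumscribed rectangle is taken to be axis-aligned, and circularity uses the common definition 4πA/P², which the notes do not confirm as the paper's formula. The function names are my own.

```python
import math

def branch_angle(p, q):
    """Angle in degrees (0 to 90) between the branch p->q and the horizontal."""
    return math.degrees(math.atan2(abs(q[1] - p[1]), abs(q[0] - p[0])))

def shape_features(contour):
    """contour: ordered list of (x, y) vertices of a closed polygon."""
    n = len(contour)
    twice_area = 0.0
    perimeter = 0.0
    for i in range(n):
        x0, y0 = contour[i]
        x1, y1 = contour[(i + 1) % n]
        twice_area += x0 * y1 - x1 * y0          # shoelace formula
        perimeter += math.hypot(x1 - x0, y1 - y0)
    area = abs(twice_area) / 2.0
    xs = [x for x, _ in contour]
    ys = [y for _, y in contour]
    width = max(xs) - min(xs)                    # axis-aligned box (assumption)
    height = max(ys) - min(ys)
    return {
        "elongation": height / width,
        "area_linear_rate": area / perimeter,
        # Assumed definition: equals 1.0 for a perfect circle.
        "circularity": 4.0 * math.pi * area / perimeter ** 2,
    }

features = shape_features([(0, 0), (4, 0), (4, 2), (0, 2)])
```

For a real contour extracted from an image, the vertex list would come from the segmentation step, which is not covered here.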

1.4 Classification model

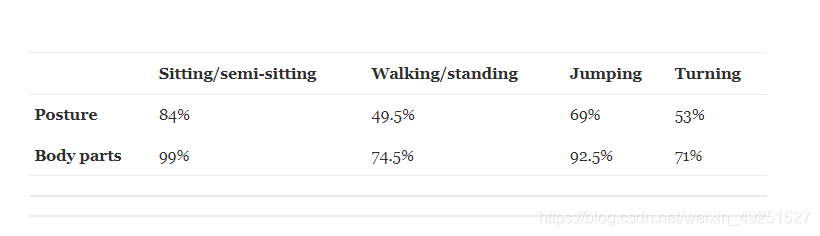

- Naive Bayes is applied here; there is nothing new in this part. The figure above shows the experimental results.
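For reference, a naive Bayes classifier over such feature vectors can be sketched in a few lines. This is a minimal sketch assuming the Gaussian variant, which the notes do not confirm; the two toy features (skeleton angle, elongation) and all numeric values are invented for illustration only.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(X, y, eps=1e-9):
    """Return {label: (log_prior, means, variances)} for feature rows X."""
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append(row)
    model = {}
    for label, rows in groups.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # eps avoids division by zero when a feature is constant in a class.
        variances = [sum((v - m) ** 2 for v in col) / n + eps
                     for col, m in zip(columns, means)]
        model[label] = (math.log(n / len(X)), means, variances)
    return model

def predict(model, row):
    """Pick the label with the highest log posterior for one feature row."""
    def log_posterior(params):
        log_prior, means, variances = params
        return log_prior + sum(
            -0.5 * math.log(2.0 * math.pi * var) - (v - m) ** 2 / (2.0 * var)
            for v, m, var in zip(row, means, variances))
    return max(model, key=lambda label: log_posterior(model[label]))

# Toy data (invented): [skeleton angle in degrees, elongation]
X = [[80.0, 1.2], [75.0, 1.1], [10.0, 0.5], [15.0, 0.6]]
y = ["standing", "standing", "running", "running"]
model = fit_gaussian_nb(X, y)
label = predict(model, [78.0, 1.15])
```

The "naive" part is the sum over features in `log_posterior`: each feature contributes independently given the class.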

2.0 Assessing machine learning classifiers for the detection of animals’ behavior using depth-based tracking

Patricia Pons, Javier Jaen, Alejandro Catala

2.1 Keywords:

- experiment goal: classify cats' body parts and poses

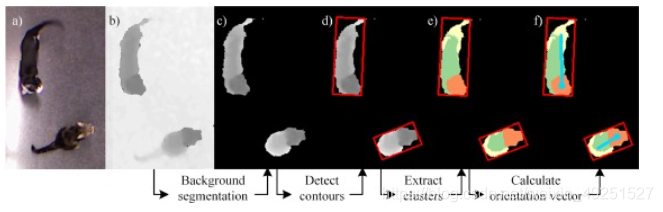

- detection method: depth-based images provided by the Microsoft Kinect® v1.0 sensor

- classification methods: decision tree, SVM, k-NN, logistic regression

2.2 Feature selection and classifier

Two kinds of feature extraction models are applied in this paper: a supervised learning model and a knowledge-based model.

2.2.1 Supervised model

- This method first uses labeled images to train a body-part classification model, and then trains a pose classifier on the output of the first model. The following features are considered:

- width and height of the cat’s contour,

- basic cluster info (centroid, average depth, and number of pixels),

- distance between head and body centroids,

- distance between tail and body centroids,

- distance between head and tail centroids,

- angle between the vectors from body to tail and from body to head,

- depth differences between clusters (head and body, head and tail, body and tail),

for a total of 21 features.
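The geometric part of this feature list (centroid distances, body angle, depth differences) can be sketched as below. This is a minimal sketch, assuming each cluster centroid is given as an (x, y, depth) tuple and that distances are measured in the image plane; the function and key names are hypothetical, and it covers only a subset of the 21 features.

```python
import math

def centroid_features(head, body, tail):
    """head, body, tail: (x, y, depth) centroids of the three clusters."""
    def dist(a, b):
        # Distance in the image plane only (assumption).
        return math.hypot(a[0] - b[0], a[1] - b[1])

    v_head = (head[0] - body[0], head[1] - body[1])
    v_tail = (tail[0] - body[0], tail[1] - body[1])
    norm = math.hypot(*v_head) * math.hypot(*v_tail)
    if norm > 0:
        cos = (v_head[0] * v_tail[0] + v_head[1] * v_tail[1]) / norm
        cos = max(-1.0, min(1.0, cos))   # guard against rounding error
        angle = math.degrees(math.acos(cos))
    else:
        angle = 0.0                      # degenerate case: coincident centroids
    return {
        "head_body_dist": dist(head, body),
        "tail_body_dist": dist(tail, body),
        "head_tail_dist": dist(head, tail),
        "body_angle_deg": angle,
        "depth_head_body": head[2] - body[2],
        "depth_head_tail": head[2] - tail[2],
        "depth_body_tail": body[2] - tail[2],
    }

feats = centroid_features(head=(10, 0, 1.0), body=(0, 0, 1.2), tail=(0, 5, 1.1))
```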

2.2.2 Knowledge-based model

- This model does not require labeling the cat's body parts. K-means clusters the cat's body into three parts: head, haunch and tail (this is the knowledge base). The cat's pose still needs to be labeled. The following features are extracted:

- dimensions of the cat’s contour,

- number of pixels for each cluster

- average depth for each cluster
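The clustering step and the per-cluster features can be sketched as below. This is a minimal sketch of plain Lloyd-style k-means on (x, y, depth) pixel tuples; the deterministic initialisation, the fixed iteration count, and the unscaled mix of pixel coordinates and depth in the distance are my assumptions, not details from the paper.

```python
def kmeans(points, k=3, iters=20):
    """Cluster (x, y, depth) tuples; returns (labels, centroids)."""
    ordered = sorted(points)
    # Deterministic init (assumption): k points evenly spaced in sorted order.
    centroids = [ordered[(2 * i + 1) * len(ordered) // (2 * k)]
                 for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        labels = [
            min(range(k),
                key=lambda c: sum((a - b) ** 2
                                  for a, b in zip(p, centroids[c])))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:
                centroids[c] = tuple(sum(col) / len(members)
                                     for col in zip(*members))
    return labels, centroids

def cluster_features(points, labels, k=3):
    """Number of pixels and average depth for each cluster."""
    feats = []
    for c in range(k):
        depths = [p[2] for p, l in zip(points, labels) if l == c]
        avg = sum(depths) / len(depths) if depths else 0.0
        feats.append({"num_pixels": len(depths), "avg_depth": avg})
    return feats

pixels = [(0, 0, 1.0), (1, 0, 1.0), (0, 1, 1.0),
          (10, 0, 1.5), (11, 0, 1.5), (10, 1, 1.5), (11, 1, 1.5),
          (20, 0, 1.2), (21, 0, 1.2)]
labels, centroids = kmeans(pixels)
feats = cluster_features(pixels, labels)
```

Mapping the three clusters to head, haunch and tail would need an extra rule based on prior knowledge of the cat's shape, which is not sketched here.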

3.0 Multiperson Activity Recognition Based on Bone Keypoints Detection

LI Meng-he, XU Hong-ji, SHI Lei-xin, ZHAO Wen-jie and LI Juan

This paper is not written in English.

3.1 Keywords

- research goal: human action detection based on the skeleton graph

- detection method: OpenPose

- classifier model: SVM

3.2 Skeleton extraction

3.3 Feature extraction

- body velocity: the speed of every node relative to the neck node

- angular velocity: the angular velocity of the vector from the neck to each other node

- node velocity: the speed of each node's own movement

These three features are recorded for every node of each body and form a feature vector of dimension (1, 3*num_node). The paper also considers the temporal features of the body action: a sliding window is applied to form a time-sequence feature matrix, and this matrix is used as the input of the SVM model.
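The three velocities and the sliding window can be sketched as follows. This is a minimal sketch, assuming 2D keypoints per frame, a constant frame interval `dt`, and simple finite differences between consecutive frames; the exact formulas and the window layout used in the paper are not given in these notes, and the function names are hypothetical.

```python
import math

def frame_features(prev, curr, neck_idx, dt=1.0):
    """Return a flat vector of length 3 * num_node for one frame pair."""
    pnx, pny = prev[neck_idx]
    cnx, cny = curr[neck_idx]
    feats = []
    for (px, py), (cx, cy) in zip(prev, curr):
        # Node velocity: speed of the node itself.
        node_v = math.hypot(cx - px, cy - py) / dt
        # Body velocity: speed of the node relative to the neck.
        rpx, rpy = px - pnx, py - pny
        rcx, rcy = cx - cnx, cy - cny
        body_v = math.hypot(rcx - rpx, rcy - rpy) / dt
        # Angular velocity of the neck->node vector, wrapped to [-pi, pi).
        d = math.atan2(rcy, rcx) - math.atan2(rpy, rpx)
        d = (d + math.pi) % (2.0 * math.pi) - math.pi
        feats.extend([body_v, d / dt, node_v])
    return feats

def sliding_windows(frames, neck_idx, window, step=1):
    """Stack per-frame vectors into (window x 3*num_node) matrices."""
    per_frame = [frame_features(a, b, neck_idx)
                 for a, b in zip(frames, frames[1:])]
    return [per_frame[i:i + window]
            for i in range(0, len(per_frame) - window + 1, step)]

# Toy sequence: node 0 is the neck, node 1 is a hand.
frames = [[(0, 0), (1, 0)], [(0, 0), (0, 1)], [(1, 0), (1, 1)]]
windows = sliding_windows(frames, neck_idx=0, window=2)
```

Each window matrix can be flattened into a single row before being passed to an SVM, since a standard SVM expects fixed-length vectors.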

3.4 Classifier model

An SVM model is applied here. It is a pity that they do not use a time-series model such as an LSTM. However, the experimental results indicate that the SVM is powerful enough.

| split | hug | shake hands |
|---|---|---|
| training set | 0.94 | 0.99 |
| test set | 0.92 | 0.97 |

4.0 Summary

According to our project's description, the most important parts are feature extraction and classifier model selection.

For feature extraction, the three papers above show three different approaches. All three are knowledge-based and depend on the animal subject and the purpose of the experiment. We can also try to build our own knowledge-based model; this requires further study, or perhaps our veterinarian friends can provide some information. Besides, Zhuang et al., 2018 has a topic very similar to ours: it tries to detect sickness in broilers. Furthermore, we can choose not to extract features from the skeleton graph but to feed the graph directly into a model such as a graph neural network. In this way we can skip the knowledge-based modelling part.

For the classifier model, we can follow the papers above and use traditional machine learning models, e.g. SVM, naive Bayes, random forest. In our project, a dog may not show pain through a single pose but through a sequence of movements. Thus, a time-series model such as an LSTM could be used as the classifier.