@chensiqi

2021-06-04T14:33:32.000000Z

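Container Automation (10): Getting Started with the K8S Container Cloud Platform (Part 2)

Cloud Computing Series: Container Automation

-- Private courseware: not public, not for publication, do not distribute

To do operations work well, you must first learn to be diligent;

Stay alert to risk even when things are calm, and take notes diligently to make up for weak spots; only then will you keep improving;

No matter how handy someone else's material is, it still belongs to someone else;

What you summarize yourself is an expression of your own way of thinking;

The essence is not absorbed by reading; it is absorbed by writing it down;

Please build good study habits as you learn;

Practice often, put the handout aside, work hands-on, and keep your own documentation organized.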

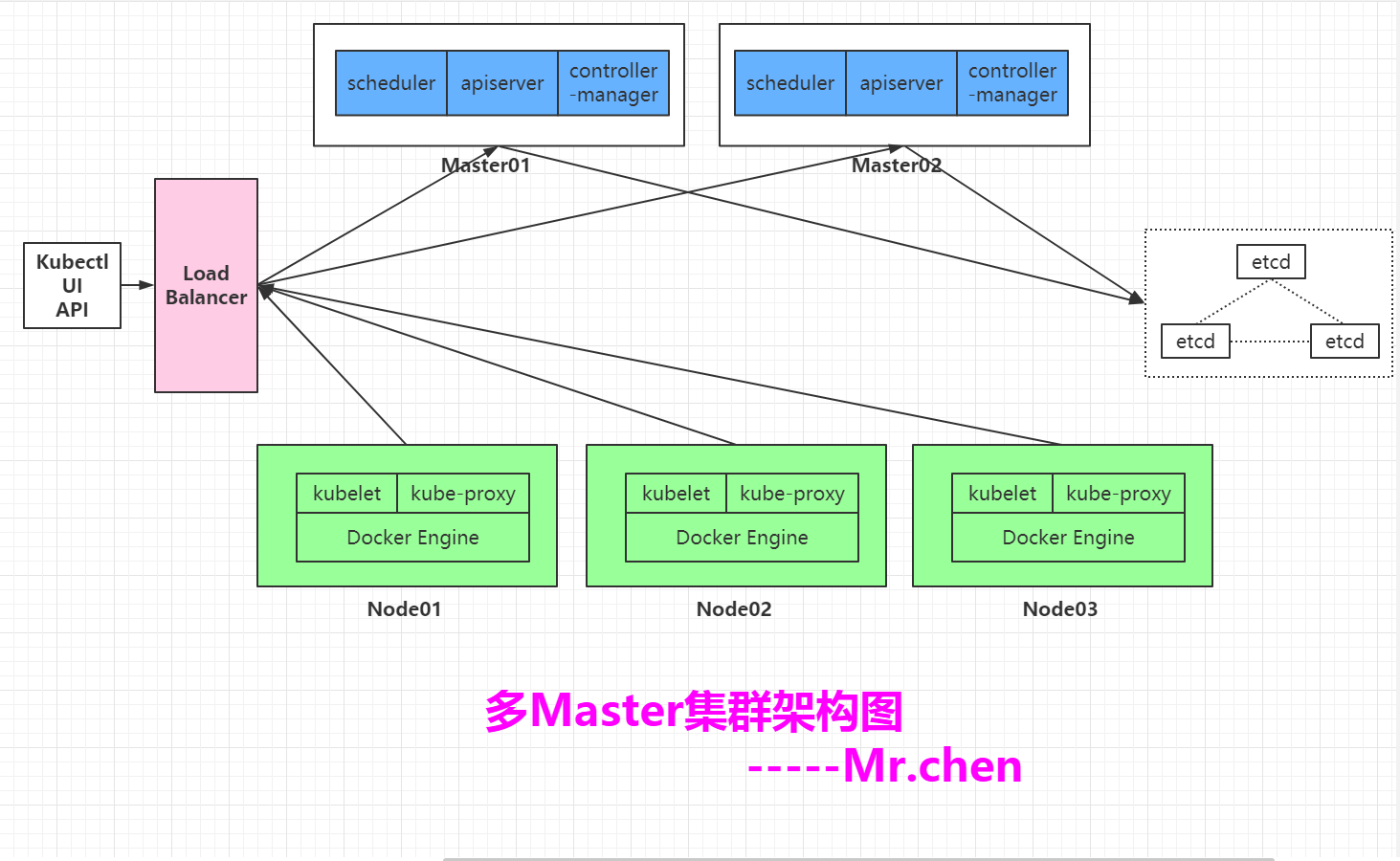

Chapter 2: Deploying a Production-Grade Highly Available Kubernetes Cluster

| Role | IP | Components | Recommended spec |
|---|---|---|---|
| master01 | 192.168.200.207 | kube-apiserver / kube-controller-manager / kube-scheduler / etcd | CPU: 2+ cores, RAM: 4 GB+ |
| master02 | 192.168.200.208 | kube-apiserver / kube-controller-manager / kube-scheduler / etcd | CPU: 2+ cores, RAM: 4 GB+ |
| node01 | 192.168.200.209 | kubelet / kube-proxy / docker / flannel / etcd | CPU: 2+ cores, RAM: 4 GB+ |
| node02 | 192.168.200.210 | kubelet / kube-proxy / docker / flannel | CPU: 2+ cores, RAM: 4 GB+ |
| Load_Balancer_Master | 192.168.200.205, VIP: 192.168.200.100 | Nginx L4 | CPU: 2+ cores, RAM: 4 GB+ |
| Load_Balancer_Backup | 192.168.200.206 | Nginx L4 | CPU: 2+ cores, RAM: 4 GB+ |
| Registry_Harbor | 192.168.200.211 | Harbor | CPU: 2+ cores, RAM: 4 GB+ |

2.11 Multi-master cluster: deploying the Master02 components

To build Master02 from the existing Master01, only the following steps are needed.

2.11.1 Copy everything under /opt/kubernetes on Master01 to /opt on Master02

    [root@Master01 ~]# scp -r /opt/kubernetes/ root@192.168.200.208:/opt/
    root@192.168.200.208's password:
    kube-apiserver             100%  184MB 103.7MB/s   00:01
    kubectl                    100%   55MB 100.9MB/s   00:00
    kube-controller-manager    100%  155MB  92.6MB/s   00:01
    kube-scheduler             100%   55MB 100.2MB/s   00:00
    token.csv                  100%   84    67.0KB/s   00:00
    kube-apiserver             100%  958   818.7KB/s   00:00
    kube-controller-manager    100%  483     1.2MB/s   00:00
    kube-scheduler             100%   93    44.1KB/s   00:00
    ca.pem                     100% 1359     3.3MB/s   00:00
    server-key.pem             100% 1675     3.6MB/s   00:00
    server.pem                 100% 1643     3.1MB/s   00:00
    ca-key.pem                 100% 1679     3.3MB/s   00:00

2.11.2 Copy the systemd unit files from Master01 to Master02

    [root@Master01 ~]# cd /usr/lib/systemd/system
    [root@Master01 system]# pwd
    /usr/lib/systemd/system
    [root@Master01 system]# ls kube-apiserver.service kube-scheduler.service kube-controller-manager.service
    kube-apiserver.service  kube-controller-manager.service  kube-scheduler.service
    [root@Master01 system]# scp kube-apiserver.service kube-controller-manager.service kube-scheduler.service root@192.168.200.208:/usr/lib/systemd/system/
    root@192.168.200.208's password:
    kube-apiserver.service             100%  282   645.3KB/s   00:00
    kube-controller-manager.service    100%  317   457.8KB/s   00:00
    kube-scheduler.service             100%  282   670.3KB/s   00:00

2.11.3 On Master02, change the IP addresses in the copied kube-apiserver file

    # Edit the kube-apiserver configuration file
    [root@Master02 ~]# cd /opt/kubernetes/
    [root@Master02 kubernetes]# cd cfg/
    [root@Master02 cfg]# sed -n '5p;7p' kube-apiserver
    --bind-address=192.168.200.207 \         # change this line to the local IP
    --advertise-address=192.168.200.207 \    # change this line to the local IP
    [root@Master02 cfg]# vim kube-apiserver +5
    [root@Master02 cfg]# sed -n '5p;7p' kube-apiserver
    --bind-address=192.168.200.208 \
    --advertise-address=192.168.200.208 \
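The same change can be made without opening an editor, which helps when more masters are added later. The following is a minimal sketch, assuming the two flags appear in the file exactly as shown in the sed output above; the function name set_apiserver_ip is hypothetical and not part of the original course material.

```shell
#!/bin/bash
# Hypothetical helper: point --bind-address and --advertise-address
# in a kube-apiserver options file at this host's own IP.
set_apiserver_ip() {
    local cfg="$1" ip="$2"
    # -E enables extended regex. Only the address after "=" is replaced,
    # so the trailing " \" line continuation is left untouched.
    sed -i -E "s/(--(bind|advertise)-address=)[0-9.]+/\1${ip}/" "$cfg"
}

# Usage on Master02:
#   set_apiserver_ip /opt/kubernetes/cfg/kube-apiserver 192.168.200.208
```

Running sed -n '5p;7p' on the file afterwards, as in the session above, is still a sensible check.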

2.11.4 Start each component service on Master02 and enable it at boot

    # Start the kube-apiserver service
    [root@Master02 ~]# systemctl start kube-apiserver
    [root@Master02 ~]# systemctl enable kube-apiserver
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.
    # Verify that kube-apiserver started
    [root@Master02 ~]# ps -ef | grep kube-apiserver | grep -v grep
    root 1702 1 26 19:51 ? 00:00:07 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.200.207:2379,https://192.168.200.208:2379,https://192.168.200.209:2379 --bind-address=192.168.200.208 --secure-port=6443 --advertise-address=192.168.200.208 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem
    # Start the kube-scheduler service
    [root@Master02 ~]# systemctl start kube-scheduler
    [root@Master02 ~]# systemctl enable kube-scheduler
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.
    # Verify that kube-scheduler started
    [root@Master02 ~]# ps -ef | grep kube-scheduler | grep -v grep
    root 1739 1 0 19:53 ? 00:00:00 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect
    # Start the kube-controller-manager service
    [root@Master02 ~]# systemctl start kube-controller-manager
    [root@Master02 ~]# systemctl enable kube-controller-manager
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.
    # Verify that kube-controller-manager started
    [root@Master02 ~]# ps -ef | grep kube-controller-manager | grep -v grep
    root 1777 1 5 19:54 ? 00:00:00 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.10.10.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem --root-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --experimental-cluster-signing-duration=87600h0m0s

2.11.5 Check the cluster status from Master02

    [root@Master02 ~]# ln -s /opt/kubernetes/bin/kubectl /usr/local/bin/
    [root@Master02 ~]# which kubectl
    /usr/local/bin/kubectl
    [root@Master02 ~]# kubectl get cs
    NAME                 STATUS    MESSAGE              ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-1               Healthy   {"health":"true"}
    etcd-0               Healthy   {"health":"true"}
    etcd-2               Healthy   {"health":"true"}
    [root@Master02 ~]# kubectl get node
    NAME              STATUS   ROLES    AGE   VERSION
    192.168.200.209   Ready    <none>   12d   v1.12.1
    192.168.200.210   Ready    <none>   11d   v1.12.1

2.12 Multi-master cluster: Nginx + keepalived (high availability)

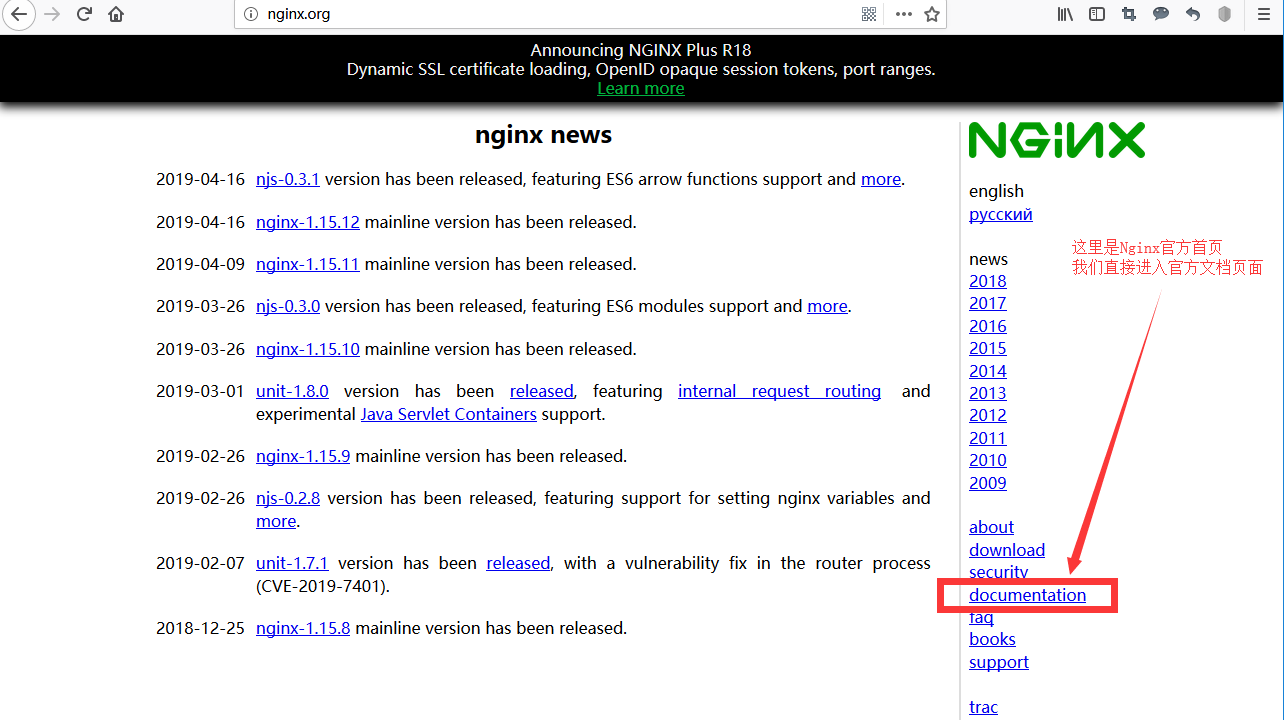

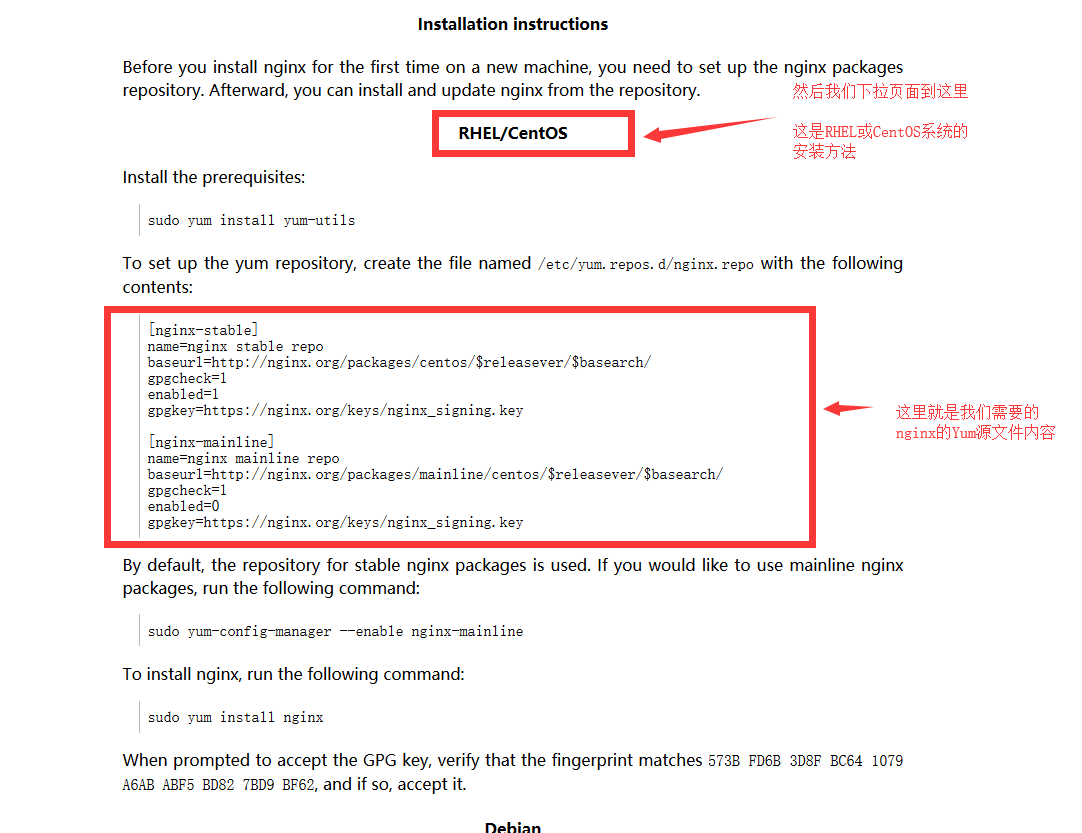

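Nginx is widely used, and the current Yum packages are built with a full set of modules, so compiling from source is no longer necessary.

In addition, since version 1.9.0 Nginx supports layer-4 load balancing; when compiling from source, adding --with-stream is all it takes.

So where do we find the Yum repository we need on the official site?

http://nginx.org

Note that $releasever in the repository definition must be replaced with the major version of the operating system in use; we are on CentOS 7, so write 7.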

2.12.1 Install Nginx with Yum

    # Configure the Nginx Yum repository file
    [root@LB-Nginx-Master ~]# vim /etc/yum.repos.d/nginx.repo
    [root@LB-Nginx-Master ~]# cat /etc/yum.repos.d/nginx.repo
    [nginx]
    name=nginx repo
    baseurl=http://nginx.org/packages/centos/7/$basearch/
    gpgcheck=0
    enabled=1
    # Install Nginx with Yum
    [root@LB-Nginx-Master ~]# yum -y install nginx
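Since the same repository file is needed on both load balancers, it can also be written from a script instead of vim. This is a minimal sketch that reuses the file content shown above; the function name write_nginx_repo is hypothetical. The detail worth noticing is the quoted heredoc delimiter, which keeps the shell from expanding $basearch before yum sees it.

```shell
#!/bin/bash
# Hypothetical helper: write the nginx repository definition to the given path.
write_nginx_repo() {
    local target="$1"
    # The quoted 'EOF' stops the shell from expanding $basearch;
    # yum must receive it literally and resolve it itself.
    cat > "$target" <<'EOF'
[nginx]
name=nginx repo
baseurl=http://nginx.org/packages/centos/7/$basearch/
gpgcheck=0
enabled=1
EOF
}

# Usage (as root, on each load balancer):
#   write_nginx_repo /etc/yum.repos.d/nginx.repo && yum -y install nginx
```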

2.12.2 Edit the Nginx configuration file

    # Adjust the configuration parameters and add a stream load-balancing pool
    # The configuration file after the changes:
    [root@LB-Nginx-Master ~]# cat /etc/nginx/nginx.conf
    user nginx;
    worker_processes 4;    # adjusted number of worker processes
    error_log /var/log/nginx/error.log warn;
    pid /var/run/nginx.pid;
    events {
        worker_connections 1024;
    }
    stream {    # added: a stream (layer-4) load-balancing pool
        upstream k8s-apiserver {
            server 192.168.200.207:6443;
            server 192.168.200.208:6443;
        }
        server {
            listen 192.168.200.205:6443;    # local address and port to listen on
            proxy_pass k8s-apiserver;       # layer 4, so this is an upstream name, not a URL
        }
    }
    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        access_log /var/log/nginx/access.log main;
        sendfile on;
        #tcp_nopush on;
        keepalive_timeout 65;
        #gzip on;
        include /etc/nginx/conf.d/*.conf;
    }

2.12.3 Start Nginx

    [root@LB-Nginx-Master ~]# systemctl start nginx
    [root@LB-Nginx-Master ~]# ps -ef | grep nginx | grep -v grep
    root     21840     1  0 21:03 ?   00:00:00 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf
    nginx    21841 21840  0 21:03 ?   00:00:00 nginx: worker process
    nginx    21842 21840  0 21:03 ?   00:00:00 nginx: worker process
    nginx    21843 21840  0 21:03 ?   00:00:00 nginx: worker process
    nginx    21844 21840  0 21:03 ?   00:00:00 nginx: worker process
    [root@LB-Nginx-Master ~]# netstat -antp | grep 6443
    tcp        0      0 192.168.200.205:6443    0.0.0.0:*    LISTEN    21840/nginx: master

2.12.4 Edit the component configuration files on the Nodes so that the Master address points to LB-Nginx (no VIP yet)

    # Edit the configuration files on node01
    [root@node01 cfg]# pwd
    /opt/kubernetes/cfg
    [root@node01 cfg]# grep 207 *
    bootstrap.kubeconfig:    server: https://192.168.200.207:6443     # edit this file
    flanneld:FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.200.207:2379,https://192.168.200.208:2379,https://192.168.200.209:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem"
    kubelet.kubeconfig:    server: https://192.168.200.207:6443       # edit this file
    kube-proxy.kubeconfig:    server: https://192.168.200.207:6443    # edit this file
    # Edit the three files, then check the result
    [root@node01 cfg]# vim bootstrap.kubeconfig +5
    [root@node01 cfg]# vim kubelet.kubeconfig +5
    [root@node01 cfg]# vim kube-proxy.kubeconfig +5
    [root@node01 cfg]# grep -n 205 *
    bootstrap.kubeconfig:5:    server: https://192.168.200.205:6443
    kubelet.kubeconfig:5:    server: https://192.168.200.205:6443
    kube-proxy.kubeconfig:5:    server: https://192.168.200.205:6443
    # Restart the services
    [root@node01 cfg]# systemctl restart kubelet
    [root@node01 cfg]# systemctl restart kube-proxy

Repeat the steps above on every other Node. The flanneld file also contains 192.168.200.207, but there it is an etcd endpoint and must not be changed.
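Because this edit has to be repeated on every Node, and again later when the Nodes are switched to the VIP, it may be worth scripting. The sketch below assumes the three kubeconfig file names from the grep output above and port 6443; the function name repoint_kubeconfigs is hypothetical. It deliberately touches only server: lines in the three kubeconfig files and leaves every other file in the directory alone.

```shell
#!/bin/bash
# Hypothetical helper: rewrite the apiserver address in a Node's kubeconfig files.
repoint_kubeconfigs() {
    local dir="$1" old="$2" new="$3" f
    for f in bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig; do
        # Match only "server: https://<old>:6443" so that unrelated
        # occurrences of the old IP are never rewritten.
        sed -i "s#\(server: https://\)${old}:6443#\1${new}:6443#" "$dir/$f"
    done
}

# Usage on each Node:
#   repoint_kubeconfigs /opt/kubernetes/cfg 192.168.200.207 192.168.200.205
#   systemctl restart kubelet kube-proxy
```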

2.12.5 Test cluster access from a Master node

    [root@Master01 system]# kubectl get node
    NAME              STATUS   ROLES    AGE   VERSION
    192.168.200.209   Ready    <none>   12d   v1.12.1
    192.168.200.210   Ready    <none>   12d   v1.12.1
    [root@Master01 system]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        23d
    nginx        NodePort    10.0.0.192   <none>        80:31442/TCP   11d

2.12.6 Add an access log to LB-Nginx (useful for observing and learning)

    # Add a K8S access log
    [root@LB-Nginx-Master ~]# vim /etc/nginx/nginx.conf
    [root@LB-Nginx-Master ~]# sed -n '13,24p' /etc/nginx/nginx.conf
    stream {
        log_format main "$remote_addr $upstream_addr - $time_local $status";    # added
        access_log /var/log/nginx/k8s-access.log main;                          # added
        upstream k8s-apiserver {
            server 192.168.200.207:6443;
            server 192.168.200.208:6443;
        }
        server {
            listen 192.168.200.205:6443;
            proxy_pass k8s-apiserver;
        }
    }

Notes on the log fields:

- remote_addr: source IP of the request

- upstream_addr: address in the load-balancing pool that the connection was forwarded to

- time_local: local time

- status: status code of the session

2.12.7 Reload Nginx and watch the log

    [root@LB-Nginx-Master ~]# systemctl reload nginx
    [root@LB-Nginx-Master ~]# ls /var/log/nginx/
    access.log  error.log  k8s-access.log
    # Restart kubelet on the Nodes, then watch k8s-access.log
    [root@node01 cfg]# systemctl restart kubelet
    [root@node02 cfg]# systemctl restart kubelet
    # View the log
    [root@LB-Nginx-Master ~]# cat /var/log/nginx/k8s-access.log
    192.168.200.209 192.168.200.207:6443 - 21/Apr/2019:21:44:02 +0800 200
    192.168.200.209 192.168.200.207:6443 - 21/Apr/2019:21:44:02 +0800 200
    192.168.200.210 192.168.200.208:6443 - 21/Apr/2019:21:45:04 +0800 200
    192.168.200.210 192.168.200.207:6443 - 21/Apr/2019:21:45:04 +0800 200
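Once the log grows beyond a few lines, a quick tally per backend makes it easier to see how connections are spread across the two apiservers. The sketch below assumes the log_format defined in 2.12.6, where the second whitespace-separated field is $upstream_addr; the function name count_by_upstream is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: count proxied connections per upstream apiserver.
count_by_upstream() {
    # Field 2 is $upstream_addr in the stream log_format from 2.12.6.
    awk '{ hits[$2]++ } END { for (u in hits) print u, hits[u] }' "$1" | sort
}

# Usage on LB-Nginx-Master:
#   count_by_upstream /var/log/nginx/k8s-access.log
```

For the four sample lines shown above, this reports three connections to 192.168.200.207:6443 and one to 192.168.200.208:6443.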

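2.12.8 Deploy Nginx-Slave and keepalived high availability

The Nginx + keepalived high-availability deployment was covered earlier in the course, so it is not repeated here.

Once high availability is configured, remember to change the address the Nodes point to from LB-Nginx-Master to the VIP.

Chapter 3: The kubectl Command-Line Management Tool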

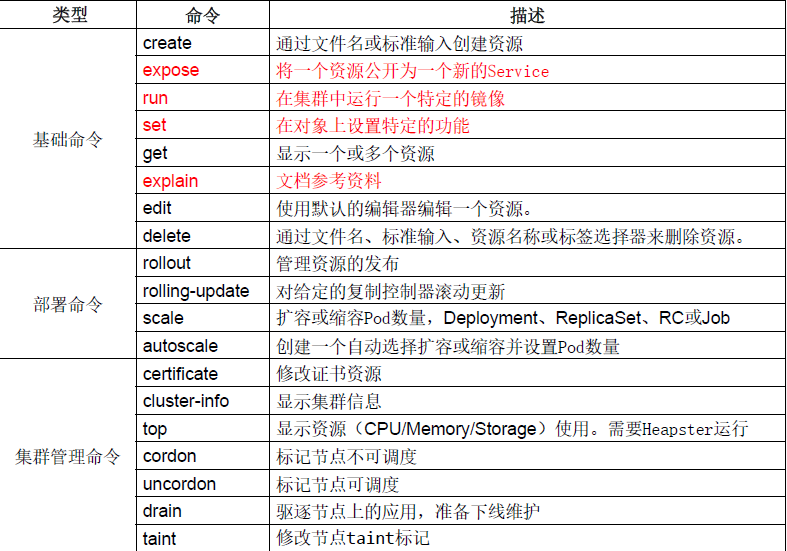

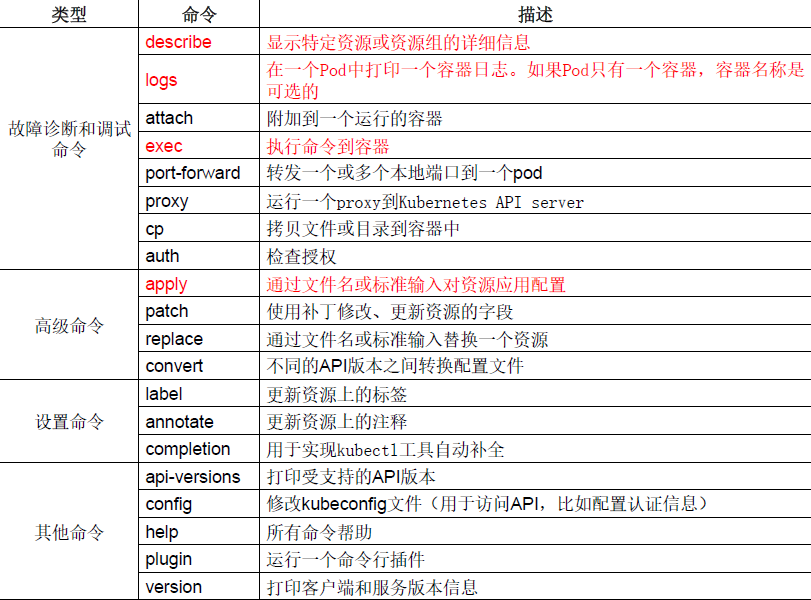

3.1 Overview of kubectl management commands

    # kubectl create: create a resource from a file
    [root@Master01 system]# kubectl create --help | grep -A 2 Usage
    Usage:
      kubectl create -f FILENAME [options]
    # kubectl run: run a specified image in the cluster
    [root@Master01 system]# kubectl run --help | grep -A 2 Usage
    Usage:
      kubectl run NAME --image=image [--env="key=value"] [--port=port] [--replicas=replicas] [--dry-run=bool] [--overrides=inline-json] [--command] -- [COMMAND] [args...] [options]
    # kubectl expose: expose a resource as a service
    [root@Master01 system]# kubectl expose --help | grep -A 2 Usage
    Usage:
      kubectl expose (-f FILENAME | TYPE NAME) [--port=port] [--protocol=TCP|UDP|SCTP] [--target-port=number-or-name] [--name=name] [--external-ip=external-ip-of-service] [--type=type] [options]
    # kubectl set: configure a specific feature on an object
    [root@Master01 system]# kubectl set --help | grep -A 2 Usage
    Usage:
      kubectl set SUBCOMMAND [options]
    # kubectl get: display one or more resources
    [root@Master01 system]# kubectl get --help | grep -A 2 Usage
    Usage:
      kubectl get [(-o|--output=)json|yaml|wide|custom-columns=...|custom-columns-file=...|go-template=...|go-template-file=...|jsonpath=...|jsonpath-file=...] (TYPE[.VERSION][.GROUP] [NAME | -l label] | TYPE[.VERSION][.GROUP]/NAME ...) [flags] [options]
    # kubectl edit: edit a resource with the default editor
    [root@Master01 system]# kubectl edit --help | grep -A 2 Usage
    Usage:
      kubectl edit (RESOURCE/NAME | -f FILENAME) [options]
    # kubectl delete: delete resources by file name, resource name, and so on
    [root@Master01 system]# kubectl delete --help | grep -A 2 Usage
    Usage:
      kubectl delete ([-f FILENAME] | TYPE [(NAME | -l label | --all)]) [options]

Hands-on demo: getting to know kubectl

    # List the Pods in the cluster
    [root@Master01 system]# kubectl get pod
    NAME                    READY   STATUS    RESTARTS   AGE
    nginx-dbddb74b8-ggpxm   1/1     Running   3          12d
    # List all resources in the cluster
    [root@Master01 system]# kubectl get all
    NAME                        READY   STATUS    RESTARTS   AGE
    pod/nginx-dbddb74b8-ggpxm   1/1     Running   3          12d

    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    service/kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        23d
    service/nginx        NodePort    10.0.0.192   <none>        80:31442/TCP   12d

    NAME                    DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/nginx   1         1         1            1           12d

    NAME                              DESIRED   CURRENT   READY   AGE
    replicaset.apps/nginx-dbddb74b8   1         1         1       12d
    # Show all resources in the cluster in more detail
    [root@Master01 system]# kubectl get all -o wide
    NAME                        READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE
    pod/nginx-dbddb74b8-ggpxm   1/1     Running   3          12d   172.17.12.2   192.168.200.209   <none>

    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE   SELECTOR
    service/kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        23d   <none>
    service/nginx        NodePort    10.0.0.192   <none>        80:31442/TCP   12d   run=nginx

    NAME                    DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES   SELECTOR
    deployment.apps/nginx   1         1         1            1           12d   nginx        nginx    run=nginx

    NAME                              DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES   SELECTOR
    replicaset.apps/nginx-dbddb74b8   1         1         1       12d   nginx        nginx    pod-template-hash=dbddb74b8,run=nginx
    # Show the Pods in more detail
    [root@Master01 system]# kubectl get pods -o wide
    NAME                    READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE
    nginx-dbddb74b8-ggpxm   1/1     Running   3          12d   172.17.12.2   192.168.200.209   <none>
    # List the Pods in a specific namespace
    [root@Master01 system]# kubectl get pods -o wide -n kube-system
    NAME                                    READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE
    kubernetes-dashboard-6bff7dc67d-jl7pg   1/1     Running   1          14h   172.17.12.3   192.168.200.209   <none>

Note: a namespace is roughly a virtual cluster inside K8S.

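3.2 Managing the application lifecycle with kubectl

When we tell kubectl to create Pods, it does not create them directly.

It first creates a Deployment, a controller resource that carries out the Pod creation.

The Deployment also keeps watching the health of the Pods; if replicas go missing, it automatically creates new Pods to make up the difference.

3.2.1 Creating Pods

Creating generates a Deployment, which then performs the actual Pod creation.

Command: kubectl run nginx --replicas=3 --image=nginx:1.14 --port=80

Command breakdown:

- kubectl run: the kubectl command that starts Pods

- nginx: name of the Deployment

- --replicas: number of Pod replicas

- --image: name of the image

- --port: port that the container exposes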

    # List all Pods in the default namespace
    [root@Master01 ~]# kubectl get pods
    No resources found.
    # Start a Deployment named nginx
    [root@Master01 ~]# kubectl run nginx --replicas=3 --image=nginx:1.14 --port=80
    kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.
    deployment.apps/nginx created
    # Check the Pod replicas that were just created
    [root@Master01 ~]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    nginx-65868c9459-2pz44   1/1     Running   0          3s
    nginx-65868c9459-9tlzt   1/1     Running   0          3s
    nginx-65868c9459-rfdkd   1/1     Running   0          3s
    # Check the Pods together with the Deployment that manages them
    [root@Master01 ~]# kubectl get pods,deploy
    NAME                         READY   STATUS    RESTARTS   AGE
    pod/nginx-65868c9459-2pz44   1/1     Running   0          75s
    pod/nginx-65868c9459-9tlzt   1/1     Running   0          75s
    pod/nginx-65868c9459-rfdkd   1/1     Running   0          75s

    NAME                          DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    deployment.extensions/nginx   3         3         3            3           75s
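The deprecation warning in the output above points to kubectl create as the replacement. A minimal sketch of that route follows, assuming no Deployment named nginx exists yet; the wrapper name create_nginx_deployment is hypothetical. Two differences from kubectl run are worth knowing: the Pods are labelled app=nginx instead of run=nginx, and this sketch does not declare a container port (the declaration is informational and does not stop the container from listening on port 80).

```shell
#!/bin/bash
# Hypothetical wrapper around the non-deprecated commands.
create_nginx_deployment() {
    local replicas="${1:-3}"
    # Create the Deployment first, then scale it to the wanted replica count.
    kubectl create deployment nginx --image=nginx:1.14 &&
    kubectl scale deployment nginx --replicas="$replicas"
}

# Usage on a Master node:
#   create_nginx_deployment 3
#   kubectl get pods,deploy
```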

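3.2.2 Publishing Pods

Publishing opens the Pods to outside access by exposing an externally reachable port.

- Command: kubectl expose deployment nginx --port=80 --type=NodePort --target-port=80 --name=nginx-service

Command breakdown:

- kubectl expose: the command that opens a service for access

- deployment nginx: the Deployment to expose

- --port=80: the port used inside the cluster (the port reached through the ClusterIP)

- --type=NodePort: the type of service to create

- --target-port=80: the port the application listens on inside the container (in effect, the Pod's listening port)

- --name=nginx-service: name of the service being published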

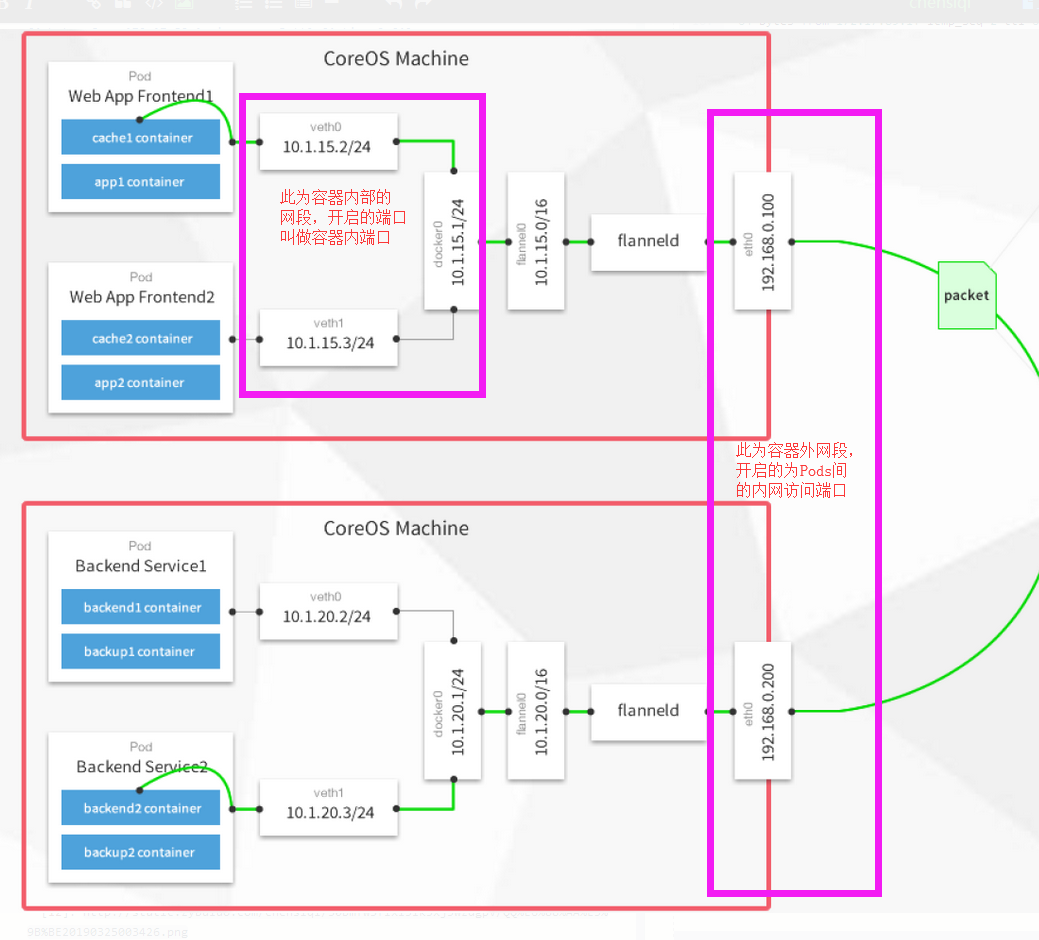

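Question: what is the in-cluster port, and what is the internal-network port outside the container?

Publishing opens the ClusterIP port used to reach the Pods from inside the Node cluster and, at the same time, opens a port on the cluster for access from outside.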

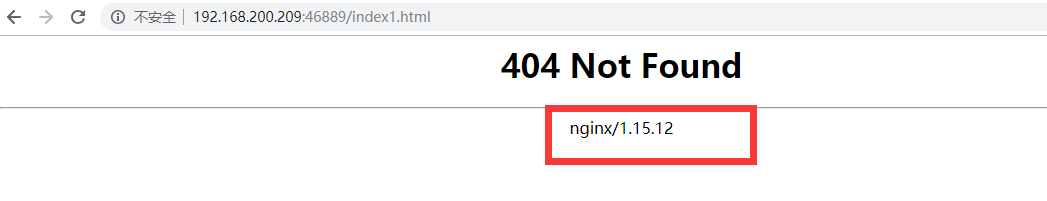

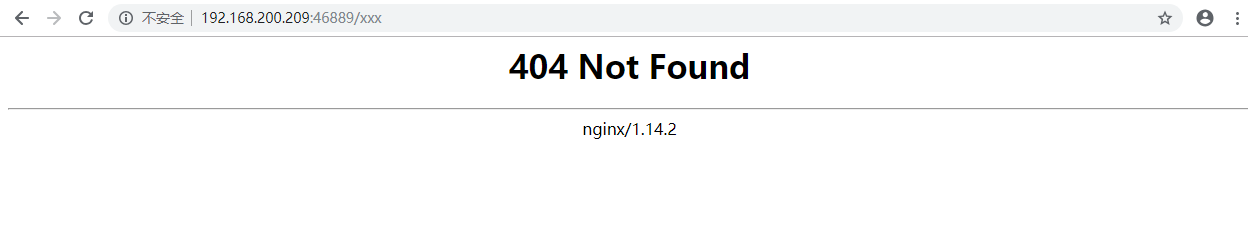

    # List the cluster's services (the access entry points)
    [root@Master01 ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   24d
    # Expose the Pods as a service for outside access
    [root@Master01 ~]# kubectl expose deployment nginx --port=80 --type=NodePort --target-port=80 --name=nginx-service
    service/nginx-service exposed
    [root@Master01 ~]# kubectl get svc
    NAME            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes      ClusterIP   10.0.0.1     <none>        443/TCP        24d
    nginx-service   NodePort    10.0.0.193   <none>        80:46889/TCP   8s

Note: 80:46889 means that port 80, the ClusterIP port used between Nodes inside the cluster, is mapped to port 46889, the port opened for access from outside the cluster.
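To make the two numbers in the PORT(S) column concrete, the value can be split with plain shell parameter expansion. This is an illustrative sketch; the variable names are arbitrary, and the sample value is the one from the output above.

```shell
#!/bin/bash
# Split a PORT(S) value such as "80:46889/TCP" into its parts.
ports="80:46889/TCP"
service_port="${ports%%:*}"   # text before the first ":"  -> in-cluster (ClusterIP) port
rest="${ports#*:}"            # text after the first ":"   -> "46889/TCP"
node_port="${rest%%/*}"       # text before the "/"        -> externally reachable port
echo "ClusterIP port: ${service_port}, NodePort: ${node_port}"
```

With the addresses used in this chapter, a client inside the cluster would reach the service at 10.0.0.193:80, while a client outside would use a Node address such as 192.168.200.209:46889. The external port falls inside the 30000-50000 range set by --service-node-port-range on the apiserver in 2.11.4.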

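3.2.3 Updating Pods

Updating means upgrading the image version or changing the number of replicas.

- Command: kubectl set image deploy/nginx nginx=nginx:1.15

- Command breakdown:

- kubectl set: configure a specific feature of the Pods

- image: the feature to configure

- deploy/nginx: name of the Deployment

- nginx=nginx:1.15: container name, followed by the image and version to use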

    # Show the Pods
    [root@Master01 ~]# kubectl get pods -o wide
    NAME                     READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE
    nginx-7b67cfbf9f-6nxmb   1/1     Running   1          48m   172.17.3.2    192.168.200.209   <none>
    nginx-7b67cfbf9f-7fmgx   1/1     Running   1          48m   172.17.74.2   192.168.200.210   <none>
    nginx-7b67cfbf9f-h6hkv   1/1     Running   1          48m   172.17.3.3    192.168.200.209   <none>
    # Check the image version in one Pod's description
    [root@Master01 ~]# kubectl describe pod nginx-7b67cfbf9f-6nxmb | grep nginx:1.14
        Image:          nginx:1.14
      Normal  Pulled  49m  kubelet, 192.168.200.209  Container image "nginx:1.14" already present on machine
      Normal  Pulled  10m  kubelet, 192.168.200.209  Container image "nginx:1.14" already present on machine
    # Update the image version of the Pods
    [root@Master01 ~]# kubectl set image deploy/nginx nginx=nginx:1.15
    deployment.extensions/nginx image updated
    # Watch the Pods (ImagePullBackOff means a pull attempt failed and the kubelet
    # is waiting before it retries; here a later retry succeeds)
    [root@Master01 ~]# kubectl get pods
    NAME                     READY   STATUS             RESTARTS   AGE
    nginx-56fbc658b-wgrqj    0/1     ImagePullBackOff   0          50s
    nginx-7b67cfbf9f-6nxmb   1/1     Running            1          53m
    nginx-7b67cfbf9f-7fmgx   1/1     Running            1          53m
    nginx-7b67cfbf9f-h6hkv   1/1     Running            1          53m
    [root@Master01 ~]# kubectl get pods
    NAME                     READY   STATUS             RESTARTS   AGE
    nginx-56fbc658b-wgrqj    0/1     ImagePullBackOff   0          101s
    nginx-7b67cfbf9f-6nxmb   1/1     Running            1          54m
    nginx-7b67cfbf9f-7fmgx   1/1     Running            1          54m
    nginx-7b67cfbf9f-h6hkv   1/1     Running            1          54m
    [root@Master01 ~]# kubectl get pods
    NAME                     READY   STATUS              RESTARTS   AGE
    nginx-56fbc658b-sfzbh    0/1     ContainerCreating   0          23s
    nginx-56fbc658b-wgrqj    1/1     Running             0          2m24s
    nginx-7b67cfbf9f-6nxmb   1/1     Running             1          54m
    nginx-7b67cfbf9f-7fmgx   1/1     Running             1          54m
    [root@Master01 ~]# kubectl get pods
    NAME                    READY   STATUS    RESTARTS   AGE
    nginx-56fbc658b-br59r   1/1     Running   0          15s
    nginx-56fbc658b-sfzbh   1/1     Running   0          43s
    nginx-56fbc658b-wgrqj   1/1     Running   0          2m44s
    # Check the image version in the new Pod's description
    [root@Master01 ~]# kubectl describe pod nginx-56fbc658b-br59r | grep nginx:1.15
        Image:          nginx:1.15
      Normal  Pulled  69s  kubelet, 192.168.200.209  Container image "nginx:1.15" already present on machine
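Instead of running kubectl get pods repeatedly to follow the rolling update, kubectl can wait for it. The sketch below pairs the update with kubectl rollout status, which blocks until the rollout finishes; the wrapper name update_and_wait is hypothetical.

```shell
#!/bin/bash
# Hypothetical wrapper: update the image, then block until the rollout ends.
update_and_wait() {
    local image="$1"
    kubectl set image deploy/nginx nginx="$image" &&
    # Prints progress lines and returns once the rollout is complete.
    kubectl rollout status deploy/nginx
}

# Usage on a Master node:
#   update_and_wait nginx:1.15
```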

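3.2.4 Rolling back Pods

When a release we have just updated turns out to have a problem,

we need to roll the Pods back to the previous version.

- Command: kubectl rollout history deploy/nginx

- Command breakdown:

- kubectl rollout: the command that manages the rollout of a resource

- history deploy/nginx: the Deployment whose rollout history to show

- Command: kubectl rollout undo deploy/nginx

- Command breakdown:

- kubectl rollout: the command that manages the rollout of a resource

- undo deploy/nginx: the Deployment to roll back to its previous version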

    # Show the revisions that have been rolled out
    [root@Master01 ~]# kubectl rollout history deploy/nginx
    deployment.extensions/nginx
    REVISION  CHANGE-CAUSE
    1         <none>
    2         <none>
    # Roll back to the previous version
    [root@Master01 ~]# kubectl rollout undo deploy/nginx
    deployment.extensions/nginx
    # Watch the rollback
    [root@Master01 ~]# kubectl get pods -o wide
    NAME                     READY   STATUS        RESTARTS   AGE   IP            NODE              NOMINATED NODE
    nginx-56fbc658b-br59r    0/1     Terminating   0          30m   172.17.3.2    192.168.200.209   <none>
    nginx-56fbc658b-sfzbh    0/1     Terminating   0          31m   172.17.3.3    192.168.200.209   <none>
    nginx-56fbc658b-wgrqj    0/1     Terminating   0          33m   172.17.74.3   192.168.200.210   <none>
    nginx-7b67cfbf9f-j4jvr   1/1     Running       0          9s    172.17.74.2   192.168.200.210   <none>
    nginx-7b67cfbf9f-nxn8n   1/1     Running       0          8s    172.17.3.2    192.168.200.209   <none>
    nginx-7b67cfbf9f-tmlds   1/1     Running       0          6s    172.17.3.3    192.168.200.209   <none>
    [root@Master01 ~]# kubectl get pods -o wide
    NAME                     READY   STATUS        RESTARTS   AGE   IP            NODE              NOMINATED NODE
    nginx-56fbc658b-br59r    0/1     Terminating   0          30m   172.17.3.2    192.168.200.209   <none>
    nginx-56fbc658b-sfzbh    0/1     Terminating   0          31m   172.17.3.3    192.168.200.209   <none>
    nginx-56fbc658b-wgrqj    0/1     Terminating   0          33m   172.17.74.3   192.168.200.210   <none>
    nginx-7b67cfbf9f-j4jvr   1/1     Running       0          14s   172.17.74.2   192.168.200.210   <none>
    nginx-7b67cfbf9f-nxn8n   1/1     Running       0          13s   172.17.3.2    192.168.200.209   <none>
    nginx-7b67cfbf9f-tmlds   1/1     Running       0          11s   172.17.3.3    192.168.200.209   <none>
    [root@Master01 ~]# kubectl get pods -o wide
    NAME                     READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE
    nginx-7b67cfbf9f-j4jvr   1/1     Running   0          24s   172.17.74.2   192.168.200.210   <none>
    nginx-7b67cfbf9f-nxn8n   1/1     Running   0          23s   172.17.3.2    192.168.200.209   <none>
    nginx-7b67cfbf9f-tmlds   1/1     Running   0          21s   172.17.3.3    192.168.200.209   <none>
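kubectl rollout undo without arguments always goes back one step. When the history holds several revisions, a specific one can be inspected and then selected. A minimal sketch, using the revision numbers printed by kubectl rollout history; the wrapper name rollback_to is hypothetical.

```shell
#!/bin/bash
# Hypothetical wrapper: show what a revision contains, then roll back to it.
rollback_to() {
    local revision="$1"
    # --revision prints the Pod template recorded for that revision.
    kubectl rollout history deploy/nginx --revision="$revision" &&
    # --to-revision picks the target explicitly instead of "the previous one".
    kubectl rollout undo deploy/nginx --to-revision="$revision"
}

# Usage on a Master node:
#   rollback_to 1
```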

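3.2.5 Deleting Pods

When we need to delete Pods,

deleting the Pods themselves does not work, because the Deployment recreates them; delete the Deployment that manages the Pods instead.

If the Pods were published as a service, the service must be deleted as well.

- Command: kubectl delete deploy/nginx

- Command breakdown:

- kubectl delete: the command that deletes resources

- deploy/nginx: the Deployment to delete (kubectl delete svc/nginx-service removes the service in the same way)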

    # Show the Pods and the Deployment
    [root@Master01 ~]# kubectl get pods,deploy -o wide
    NAME                         READY   STATUS    RESTARTS   AGE     IP            NODE              NOMINATED NODE
    pod/nginx-7b67cfbf9f-j4jvr   1/1     Running   0          7m21s   172.17.74.2   192.168.200.210   <none>
    pod/nginx-7b67cfbf9f-nxn8n   1/1     Running   0          7m20s   172.17.3.2    192.168.200.209   <none>
    pod/nginx-7b67cfbf9f-tmlds   1/1     Running   0          7m18s   172.17.3.3    192.168.200.209   <none>

    NAME                          DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES       SELECTOR
    deployment.extensions/nginx   3         3         3            3           92m   nginx        nginx:1.14   run=nginx
    # Delete the Deployment
    [root@Master01 ~]# kubectl delete deploy/nginx
    deployment.extensions "nginx" deleted
    # Check the result
    [root@Master01 ~]# kubectl get pods,deploy -o wide
    NAME                         READY   STATUS        RESTARTS   AGE     IP       NODE              NOMINATED NODE
    pod/nginx-7b67cfbf9f-nxn8n   0/1     Terminating   0          7m40s   <none>   192.168.200.209   <none>
    pod/nginx-7b67cfbf9f-tmlds   0/1     Terminating   0          7m38s   <none>   192.168.200.209   <none>
    [root@Master01 ~]# kubectl get pods,deploy -o wide
    No resources found.
    # Show the published service
    [root@Master01 ~]# kubectl get svc
    NAME            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes      ClusterIP   10.0.0.1     <none>        443/TCP        24d
    nginx-service   NodePort    10.0.0.193   <none>        80:46889/TCP   79m
    # Delete the service
    [root@Master01 ~]# kubectl delete svc/nginx-service
    service "nginx-service" deleted
    # Check the result
    [root@Master01 ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   24d

3.3 Connecting to the K8S cluster remotely with kubectl

As set up so far, the Kubernetes cluster can only be managed from a K8S Master node.

To manage it remotely, the following steps are needed.

3.3.1 Generate a kubectl configuration file from a script and the certificates

    # On Master01, prepare a script named ssh_kubeconfig.sh
    [root@Master01 scripts]# pwd
    /server/scripts
    [root@Master01 scripts]# ls ssh_kubeconfig.sh
    ssh_kubeconfig.sh
    [root@Master01 scripts]# cat ssh_kubeconfig.sh
    #!/bin/bash
    # --server below: the load balancer VIP, or Master01's IP, plus the port
    kubectl config set-cluster kubernetes \
      --server=https://192.168.200.205:6443 \
      --embed-certs=true \
      --certificate-authority=ca.pem \
      --kubeconfig=config
    kubectl config set-credentials cluster-admin \
      --certificate-authority=ca.pem \
      --embed-certs=true \
      --client-key=admin-key.pem \
      --client-certificate=admin.pem \
      --kubeconfig=config
    kubectl config set-context default --cluster=kubernetes --user=cluster-admin --kubeconfig=config
    kubectl config use-context default --kubeconfig=config
    # Create a directory and copy the script and the required certificates into it
    [root@Master01 scripts]# mkdir -p /ssh_config
    [root@Master01 scripts]# cp ssh_kubeconfig.sh /ssh_config/
    [root@Master01 scripts]# ls k8s-cert/admin.pem k8s-cert/admin-key.pem k8s-cert/ca.pem
    k8s-cert/admin-key.pem  k8s-cert/admin.pem  k8s-cert/ca.pem
    [root@Master01 scripts]# cp k8s-cert/admin.pem k8s-cert/admin-key.pem k8s-cert/ca.pem /ssh_config/
    [root@Master01 scripts]# cd /ssh_config/
    [root@Master01 ssh_config]# ls
    admin-key.pem  admin.pem  ca.pem  ssh_kubeconfig.sh
    # Run the script to generate config, the configuration file for remote access
    [root@Master01 ssh_config]# ./ssh_kubeconfig.sh
    Cluster "kubernetes" set.
    User "cluster-admin" set.
    Context "default" created.
    Switched to context "default".
    [root@Master01 ssh_config]# ls
    admin-key.pem  admin.pem  ca.pem  config  ssh_kubeconfig.sh

3.3.2 Testing the remote kubectl connection

    # Copy the kubectl binary to Harbor (192.168.200.211)
    [root@Master01 ssh_config]# scp /usr/local/bin/kubectl root@192.168.200.211:/usr/local/bin/
    The authenticity of host '192.168.200.211 (192.168.200.211)' can't be established.
    ECDSA key fingerprint is SHA256:DbY5ZLFytaIrrM0hUUSYj12DHprd/boGy3Kim6rMrJA.
    ECDSA key fingerprint is MD5:59:39:e3:1a:6e:f8:66:4e:0d:de:08:80:cc:89:f4:20.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '192.168.200.211' (ECDSA) to the list of known hosts.
    root@192.168.200.211's password:
    kubectl                    100%   55MB  81.5MB/s   00:00
    # Test the remote connection from the Harbor machine
    [root@Harbor ~]# hostname -I
    192.168.200.211
    [root@Harbor ~]# which kubectl
    /usr/local/bin/kubectl
    [root@Harbor ~]# kubectl get node
    The connection to the server localhost:8080 was refused - did you specify the right host or port?
    # kubectl does not work yet.
    # Now copy over the config file generated earlier
    [root@Master01 ssh_config]# scp config root@192.168.200.211:~/
    root@192.168.200.211's password:
    config                     100% 6273     7.9MB/s   00:00
    # Test the remote kubectl connection from the Harbor server again
    [root@Harbor ~]# ls
    anaconda-ks.cfg  config
    [root@Harbor ~]# kubectl --kubeconfig=./config get node
    NAME              STATUS   ROLES    AGE   VERSION
    192.168.200.209   Ready    <none>   24d   v1.12.1
    192.168.200.210   Ready    <none>   23d   v1.12.1
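Passing --kubeconfig on every call gets tedious. kubectl reads $HOME/.kube/config by default, so installing the generated file there removes the need for the flag. A minimal sketch; the function name install_kubeconfig is hypothetical, and tightening the file mode is a suggestion motivated by the embedded admin key, not a step from the original course.

```shell
#!/bin/bash
# Hypothetical helper: install a kubeconfig as the current user's default.
install_kubeconfig() {
    local src="$1"
    mkdir -p "$HOME/.kube"
    cp "$src" "$HOME/.kube/config"
    # The file embeds the cluster-admin client key, so keep it private.
    chmod 600 "$HOME/.kube/config"
}

# Usage on the Harbor machine:
#   install_kubeconfig ./config
#   kubectl get node
```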

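Chapter 4: YAML Files (Resource Orchestration)

YAML is a recursive acronym for "YAML Ain't Markup Language".

It is an intuitive, machine-readable data serialization format, not a programming language: it is highly readable for people, easy to use from scripting languages, and designed to express structured data.

Like XML, which is a subset of the Standard Generalized Markup Language, it is a data description language, but its syntax is much simpler than XML's.

4.1 YAML file format

- Indentation expresses hierarchy

- Tab characters are not allowed for indentation; use spaces

- Each level is usually indented by 2 spaces

- Put 1 space after separator characters such as colons and commas

- "---" marks the start of a YAML document within a file

- "#" starts a comment

This format was practiced many times in the earlier Ansible lessons,

so it is not covered in more detail here.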
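The tab rule is the one that most often bites in practice, because a tab and a run of spaces look identical in many editors. A quick check before handing a file to kubectl can save a confusing parse error. This is a minimal sketch; the function name has_tabs is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: succeed (exit status 0) if the file contains a tab character.
has_tabs() {
    # printf produces a literal tab, which grep then searches for.
    grep -q "$(printf '\t')" "$1"
}

# Usage:
#   if has_tabs nginx-deployment.yaml; then echo "replace tabs with spaces"; fi
```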

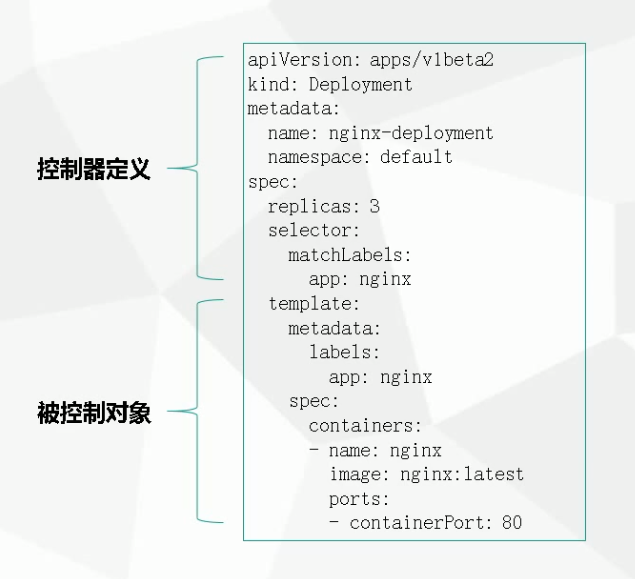

4.2 Creating a resource object from a YAML file

    # Create a directory and write the YAML for the resource
    [root@Master01 ~]# mkdir demo
    [root@Master01 ~]# cd demo/
    [root@Master01 demo]# pwd
    /root/demo
    [root@Master01 demo]# vim nginx-deployment.yaml
    [root@Master01 demo]# cat nginx-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.15.4
            ports:
            - containerPort: 80
    # Create the resource from the YAML file
    [root@Master01 demo]# kubectl create --help | grep -A 2 Usage
    Usage:
      kubectl create -f FILENAME [options]
    [root@Master01 demo]# kubectl create -f nginx-deployment.yaml
    deployment.apps/nginx-deployment created
    [root@Master01 demo]# kubectl get pods
    NAME                              READY   STATUS              RESTARTS   AGE
    nginx-deployment-d55b94fd-2hhw5   0/1     ContainerCreating   0          69s
    nginx-deployment-d55b94fd-gtvtp   1/1     Running             0          69s
    nginx-deployment-d55b94fd-gvfzg   1/1     Running             0          69s
    [root@Master01 demo]# kubectl get pods -o wide
    NAME                              READY   STATUS              RESTARTS   AGE   IP            NODE              NOMINATED NODE
    nginx-deployment-d55b94fd-2hhw5   0/1     ContainerCreating   0          85s   <none>        192.168.200.209   <none>
    nginx-deployment-d55b94fd-gtvtp   1/1     Running             0          85s   172.17.74.2   192.168.200.210   <none>
    nginx-deployment-d55b94fd-gvfzg   1/1     Running             0          85s   172.17.3.3    192.168.200.209   <none>
    [root@Master01 demo]# kubectl get pods -o wide
    NAME                              READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE
    nginx-deployment-d55b94fd-2hhw5   1/1     Running   0          86s   172.17.3.2    192.168.200.209   <none>
    nginx-deployment-d55b94fd-gtvtp   1/1     Running   0          86s   172.17.74.2   192.168.200.210   <none>
    nginx-deployment-d55b94fd-gvfzg   1/1     Running   0          86s   172.17.3.3    192.168.200.209   <none>

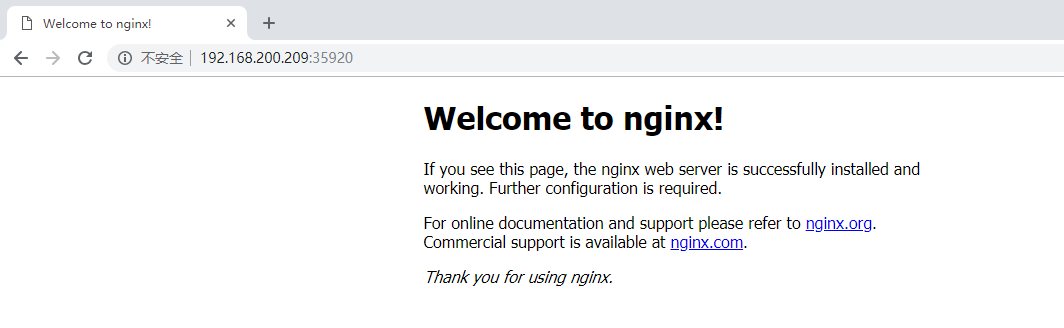

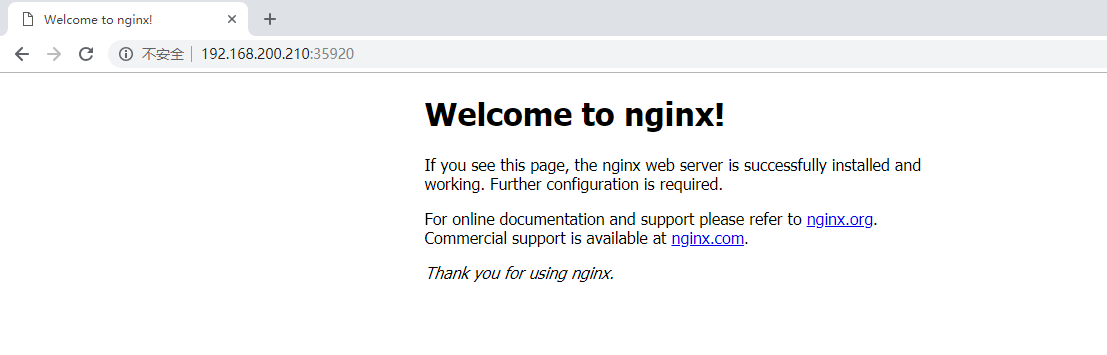

4.3 Publishing a resource object from a YAML file

    [root@Master01 demo]# vim nginx-service.yaml
    [root@Master01 demo]# cat nginx-service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
      labels:
        app: nginx
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 80
      selector:
        app: nginx
    [root@Master01 demo]# kubectl create -f nginx-service.yaml
    service/nginx-service created
    [root@Master01 demo]# kubectl get pods -o wide
    NAME                              READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE
    nginx-deployment-d55b94fd-2hhw5   1/1     Running   1          16m   172.17.7.3    192.168.200.209   <none>
    nginx-deployment-d55b94fd-gtvtp   1/1     Running   1          16m   172.17.13.2   192.168.200.210   <none>
    nginx-deployment-d55b94fd-gvfzg   1/1     Running   1          16m   172.17.7.2    192.168.200.209   <none>
    [root@Master01 demo]# kubectl get svc -o wide
    NAME            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE     SELECTOR
    kubernetes      ClusterIP   10.0.0.1     <none>        443/TCP        35d     <none>
    nginx-service   NodePort    10.0.0.42    <none>        80:35920/TCP   8m13s   app=nginx
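The "---" separator from 4.1 allows the Deployment and its Service to live in one file, so a single kubectl create -f call creates both. The sketch below writes such a file from the two YAML documents shown in 4.2 and 4.3; the function name write_nginx_bundle and the file name nginx-bundle.yaml are hypothetical, and it assumes neither object exists in the cluster yet.

```shell
#!/bin/bash
# Hypothetical helper: write the Deployment and the Service into one YAML file.
write_nginx_bundle() {
    cat > "$1" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.15.4
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  labels:
    app: nginx
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
  selector:
    app: nginx
EOF
}

# Usage on a Master node:
#   write_nginx_bundle nginx-bundle.yaml && kubectl create -f nginx-bundle.yaml
```

The Service finds its Pods through the selector app: nginx, which matches the labels in the Deployment's Pod template; keeping both in one file makes that pairing easier to review.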

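4.4 Too many YAML fields to remember?

- Generate the YAML with the run command

- kubectl run --image=nginx my-deploy -o yaml --dry-run > my-deploy.yaml

- Export it from an existing object with the get command

- kubectl get deploy/nginx -o=yaml --export > my-deploy.yaml

- Forgot how a Pod container field is spelled?

- kubectl explain pods.spec.containers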

    # Generate with the run command
    [root@Master01 demo]# kubectl run nginx --image=nginx:1.15.2 --port=80 --replicas=3 -o yaml --dry-run > my-deployment
    kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.
    [root@Master01 demo]# cat my-deployment
    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        run: nginx
      name: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          run: nginx
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            run: nginx
        spec:
          containers:
          - image: nginx:1.15.2
            name: nginx
            ports:
            - containerPort: 80
            resources: {}
    status: {}
    # Export with the get command
    [root@Master01 demo]# kubectl get deploy/nginx-deployment --export
    NAME               DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    nginx-deployment   3         0         0            0           <unknown>
    [root@Master01 demo]# kubectl get deploy/nginx-deployment --export -o yaml > my-deploy.yaml
    [root@Master01 demo]# cat my-deploy.yaml
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "1"
      creationTimestamp: null
      generation: 1
      labels:
        app: nginx
      name: nginx-deployment
      selfLink: /apis/extensions/v1beta1/namespaces/default/deployments/nginx-deployment
    spec:
      progressDeadlineSeconds: 600
      replicas: 3
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: nginx
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: nginx
        spec:
          containers:
          - image: nginx:1.15.4
            imagePullPolicy: IfNotPresent
            name: nginx
            ports:
            - containerPort: 80
              protocol: TCP
            resources: {}
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
    status: {}
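The commands above match the v1.12.1 client used in this course. On newer kubectl releases the run generator shown in the deprecation warning and the --export flag are no longer available, and --dry-run expects an explicit value. The sketch below is an assumption about such a newer client, not something tested against this cluster; the function name generate_deploy_yaml is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper for newer kubectl clients (not the v1.12.1 used above).
generate_deploy_yaml() {
    # create deployment replaces the deprecated run generator;
    # --dry-run=client renders the object locally without sending it to the cluster.
    kubectl create deployment nginx --image=nginx:1.15.2 --dry-run=client -o yaml
}

# Usage:
#   generate_deploy_yaml > my-deploy.yaml
```

To capture an existing object on such a client, kubectl get deploy/nginx-deployment -o yaml still works; the cluster-specific fields that --export used to strip then have to be removed by hand.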