@chensiqi

2021-06-02T15:12:18.000000Z

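Container Automation (9): Getting Started with the K8S Container Cloud Platform (Part 2)

Cloud Computing Series: Container Automation

--Private courseware: not public, not for publication, distribution prohibited

To do operations work well, you first have to learn to be diligent.

Stay alert in times of ease and make up for weak spots by taking notes; that is how you keep improving.

However convenient someone else's material is to use, it is still someone else's.

What you summarize yourself expresses your own thinking and principles.

The essence is not absorbed by reading; it is absorbed by writing it down.

Please build good study habits as you learn.

Practice often, put the handout aside, work hands-on, and organize your own documentation.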

2. Deploying a Production-Grade, Highly Available Kubernetes Cluster

| Role | IP | Components | Recommended spec |
|---|---|---|---|
| master01 | 192.168.200.207 | kube-apiserver/kube-controller-manager/kube-scheduler/etcd | CPU: 2C+, RAM: 4G+ |
| master02 | 192.168.200.208 | kube-apiserver/kube-controller-manager/kube-scheduler/etcd | CPU: 2C+, RAM: 4G+ |
| node01 | 192.168.200.209 | kubelet/kube-proxy/docker/flannel/etcd | CPU: 2C+, RAM: 4G+ |
| node02 | 192.168.200.210 | kubelet/kube-proxy/docker/flannel | CPU: 2C+, RAM: 4G+ |
| Load_Balancer_Master | 192.168.200.205, VIP: 192.168.200.100 | Nginx L4 | CPU: 2C+, RAM: 4G+ |
| Load_Balancer_Backup | 192.168.200.206 | Nginx L4 | CPU: 2C+, RAM: 4G+ |
| Registry_Harbor | 192.168.200.211 | Harbor | CPU: 2C+, RAM: 4G+ |

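2.7 Single-Master Cluster: Deploying Components on the Master Node

Basic workflow:

- Generate self-signed SSL certificates

- Deploy kube-apiserver

- Deploy kube-controller-manager

- Deploy kube-scheduler

For each component: write the configuration file --> manage the component with systemd --> start it

Before deploying K8S, make sure etcd, flannel, and docker are all working properly; if they are not, fix that first and only then continue.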
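The pre-flight check above can be scripted. The following is a minimal sketch, assuming the services run as systemd units named `etcd`, `flanneld`, and `dockerd` (the unit names used elsewhere in these notes); the function name `check_services` is hypothetical and not part of the course scripts.

```shell
#!/bin/sh
# Hypothetical helper: print the state of each systemd unit passed as an
# argument and return non-zero if any of them is not active.
check_services() {
    failed=0
    for svc in "$@"; do
        if systemctl is-active --quiet "$svc"; then
            echo "ok: $svc"
        else
            echo "NOT RUNNING: $svc"
            failed=1
        fi
    done
    return "$failed"
}
```

On a Node one might call `check_services etcd flanneld dockerd`; on a Master only the units that host actually runs should be listed.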

2.7.1 Self-Signing the APIServer SSL Certificates

    # On master01, view the k8s-cert.sh certificate script prepared in advance
    [root@Master01 scripts]# pwd
    /server/scripts
    [root@Master01 scripts]# ls
    cfssl.sh  etcd-cert  etcd-cert.sh  etcd.sh  flannel.sh  k8s-cert.sh
    [root@Master01 scripts]# cat k8s-cert.sh
    #!/bin/bash
    cat > ca-config.json <<FOF
    {
      "signing": {
        "default": {"expiry": "87600h"},
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": ["signing", "key encipherment", "server auth", "client auth"]
          }
        }
      }
    }
    FOF
    cat > ca-csr.json <<FOF
    {
      "CN": "kubernetes",
      "key": {"algo": "rsa", "size": 2048},
      "names": [
        {"C": "CN", "L": "Beijing", "ST": "Beijing", "O": "k8s", "OU": "System"}
      ]
    }
    FOF
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    #-----------------------------
    cat > server-csr.json <<FOF
    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        "192.168.200.205",    #LB-Master-IP; in the real script, delete the # and everything after it
        "192.168.200.206",    #LB-Backup-IP; in the real script, delete the # and everything after it
        "192.168.200.207",    #Master01-IP; in the real script, delete the # and everything after it
        "192.168.200.208",    #Master02-IP; in the real script, delete the # and everything after it
        "192.168.200.100"     #LB-VIP; in the real script, delete the # and everything after it
      ],
      "key": {"algo": "rsa", "size": 2048},
      "names": [
        {"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System"}
      ]
    }
    FOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
    #--------------------------------------------
    cat > admin-csr.json <<FOF
    {
      "CN": "admin",
      "hosts": [],
      "key": {"algo": "rsa", "size": 2048},
      "names": [
        {"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "system:masters", "OU": "System"}
      ]
    }
    FOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
    #------------------------------------------
    cat > kube-proxy-csr.json <<FOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {"algo": "rsa", "size": 2048},
      "names": [
        {"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System"}
      ]
    }
    FOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

    # Create a directory for the k8s certificate files
    [root@Master01 scripts]# mkdir k8s-cert
    [root@Master01 scripts]# ls
    cfssl.sh  etcd-cert  etcd-cert.sh  etcd.sh  flannel.sh  k8s-cert  k8s-cert.sh

    # Copy k8s-cert.sh into the k8s-cert directory and run it to generate the certificates
    [root@Master01 scripts]# cp k8s-cert.sh k8s-cert/
    [root@Master01 scripts]# cd k8s-cert
    [root@Master01 k8s-cert]# ls
    k8s-cert.sh
    [root@Master01 k8s-cert]# ./k8s-cert.sh
    2019/03/27 21:29:04 [INFO] generating a new CA key and certificate from CSR
    2019/03/27 21:29:04 [INFO] generate received request
    2019/03/27 21:29:04 [INFO] received CSR
    2019/03/27 21:29:04 [INFO] generating key: rsa-2048
    2019/03/27 21:29:04 [INFO] encoded CSR
    2019/03/27 21:29:04 [INFO] signed certificate with serial number 698868405666489018019126285154260178827388854960
    2019/03/27 21:29:04 [INFO] generate received request
    2019/03/27 21:29:04 [INFO] received CSR
    2019/03/27 21:29:04 [INFO] generating key: rsa-2048
    2019/03/27 21:29:04 [INFO] encoded CSR
    2019/03/27 21:29:04 [INFO] signed certificate with serial number 247050472817843981620570557481195852376982745393
    2019/03/27 21:29:04 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").
    2019/03/27 21:29:04 [INFO] generate received request
    2019/03/27 21:29:04 [INFO] received CSR
    2019/03/27 21:29:04 [INFO] generating key: rsa-2048
    2019/03/27 21:29:05 [INFO] encoded CSR
    2019/03/27 21:29:05 [INFO] signed certificate with serial number 250281993730797310179397407942653719272199224012
    2019/03/27 21:29:05 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").
    2019/03/27 21:29:05 [INFO] generate received request
    2019/03/27 21:29:05 [INFO] received CSR
    2019/03/27 21:29:05 [INFO] generating key: rsa-2048
    2019/03/27 21:29:05 [INFO] encoded CSR
    2019/03/27 21:29:05 [INFO] signed certificate with serial number 452414759269306400213655560995193188612670947501
    2019/03/27 21:29:05 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
    websites. For more information see the Baseline Requirements for the Issuance and Management
    of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
    specifically, section 10.2.3 ("Information Requirements").
    [root@Master01 k8s-cert]# ls
    admin.csr       admin.pem       ca-csr.json  k8s-cert.sh          kube-proxy-key.pem  server-csr.json
    admin-csr.json  ca-config.json  ca-key.pem   kube-proxy.csr       kube-proxy.pem      server-key.pem
    admin-key.pem   ca.csr          ca.pem       kube-proxy-csr.json  server.csr          server.pem
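The `hosts` list in `server-csr.json` is annotated with `#` remarks that have to be removed before the script is run, because JSON has no comment syntax. As a small sketch of one way to do that, the hypothetical filter below strips a trailing `#` remark from each line. It assumes no `#` occurs inside a JSON string value, which holds for the CSR files in this section, and it is meant for the JSON fragment only, not for the whole shell script.

```shell
#!/bin/sh
# Hypothetical filter: delete a trailing "# ..." remark (and the
# whitespace in front of it) from every line read on stdin.
# Assumption: '#' never appears inside a quoted JSON value.
strip_hash_comments() {
    sed 's/[[:space:]]*#.*$//'
}
```

For example, `strip_hash_comments < annotated.json > server-csr.json`, where `annotated.json` is a placeholder file name. Once the certificate is generated, `openssl x509 -in server.pem -noout -text` shows which addresses ended up in it.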

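2.7.2 Deploying the Master01 Components (apiserver, controller-manager, scheduler)

- kube-apiserver

- kube-controller-manager

- kube-scheduler

For each component: write the configuration file --> manage the component with systemd --> start it

Download the release binary package from the official site and deploy each component by hand to assemble the Kubernetes cluster.

https://github.com/kubernetes/kubernetes/releases

(1) Deploying the kube-apiserver component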

    # On master01, download the Kubernetes binary package, version v1.12.1
    [root@Master01 ~]# wget https://dl.k8s.io/v1.12.1/kubernetes-server-linux-amd64.tar.gz
    [root@Master01 ~]# ls kubernetes-server-linux-amd64.tar.gz
    kubernetes-server-linux-amd64.tar.gz

    # Create the Kubernetes program directories
    [root@Master01 scripts]# mkdir -p /opt/kubernetes/{bin,cfg,ssl}

    # Extract the package and copy the Kubernetes binaries into /opt/kubernetes/bin
    [root@Master01 ~]# ls kubernetes-server-linux-amd64.tar.gz
    kubernetes-server-linux-amd64.tar.gz
    [root@Master01 ~]# tar xf kubernetes-server-linux-amd64.tar.gz
    [root@Master01 ~]# cd kubernetes
    [root@Master01 kubernetes]# ls
    addons  kubernetes-src.tar.gz  LICENSES  server
    [root@Master01 kubernetes]# cd server/bin/
    [root@Master01 bin]# ls
    apiextensions-apiserver              kube-apiserver.docker_tag           kube-proxy
    cloud-controller-manager             kube-apiserver.tar                  kube-proxy.docker_tag
    cloud-controller-manager.docker_tag  kube-controller-manager             kube-proxy.tar
    cloud-controller-manager.tar         kube-controller-manager.docker_tag  kube-scheduler
    hyperkube                            kube-controller-manager.tar         kube-scheduler.docker_tag
    kubeadm                              kubectl                             kube-scheduler.tar
    kube-apiserver                       kubelet                             mounter
    [root@Master01 bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/
    [root@Master01 bin]# ls /opt/kubernetes/bin/
    kube-apiserver  kube-controller-manager  kubectl  kube-scheduler

    # First make sure the required certificate files exist under /opt/etcd/ssl
    [root@Master01 scripts]# ls /opt/etcd/ssl/
    ca-key.pem  ca.pem  server-key.pem  server.pem

    # Copy the K8S certificates to the target directory
    [root@Master01 scripts]# cd ~
    [root@Master01 ~]# cd /server/scripts/k8s-cert
    [root@Master01 k8s-cert]# ll ca.pem ca-key.pem server*.pem
    -rw-r--r-- 1 root root 1359 Mar 27 21:29 ca.pem
    -rw-r--r-- 1 root root 1359 Mar 27 21:29 ca-key.pem
    -rw------- 1 root root 1675 Mar 27 21:29 server-key.pem
    -rw-r--r-- 1 root root 1643 Mar 27 21:29 server.pem
    [root@Master01 k8s-cert]# cp ca.pem ca-key.pem server*.pem /opt/kubernetes/ssl/
    [root@Master01 k8s-cert]# ls /opt/kubernetes/ssl/
    ca.pem  ca-key.pem  server-key.pem  server.pem    # these four certificate files are copied here

    # View the apiserver.sh script written in advance
    [root@Master01 ~]# cd /server/scripts/
    [root@Master01 scripts]# cat apiserver.sh
    #!/bin/bash
    MASTER_ADDRESS=$1
    ETCD_SERVERS=$2
    cat <<FOF >/opt/kubernetes/cfg/kube-apiserver
    KUBE_APISERVER_OPTS="--logtostderr=true \\
    --v=4 \\
    --etcd-servers=${ETCD_SERVERS} \\
    --bind-address=${MASTER_ADDRESS} \\
    --secure-port=6443 \\
    --advertise-address=${MASTER_ADDRESS} \\
    --allow-privileged=true \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
    --authorization-mode=RBAC,Node \\
    --kubelet-https=true \\
    --enable-bootstrap-token-auth \\
    --token-auth-file=/opt/kubernetes/cfg/token.csv \\
    --service-node-port-range=30000-50000 \\
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \\
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --etcd-cafile=/opt/etcd/ssl/ca.pem \\
    --etcd-certfile=/opt/etcd/ssl/server.pem \\
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem"
    FOF
    cat <<FOF >/usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
    ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    FOF
    systemctl daemon-reload
    systemctl enable kube-apiserver
    systemctl restart kube-apiserver

    # Use the script to generate the apiserver configuration file and systemd unit
    [root@Master01 scripts]# ./apiserver.sh 192.168.200.207 https://192.168.200.207:2379,https://192.168.200.208:2379,https://192.168.200.209:2379
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

    # View the kube-apiserver configuration file
    [root@Master01 ~]# cat /opt/kubernetes/cfg/kube-apiserver
    KUBE_APISERVER_OPTS="--logtostderr=true \
    --v=4 \
    --etcd-servers=https://192.168.200.207:2379,https://192.168.200.208:2379,https://192.168.200.209:2379 \
    --bind-address=192.168.200.207 \
    --secure-port=6443 \
    --advertise-address=192.168.200.207 \
    --allow-privileged=true \
    --service-cluster-ip-range=10.0.0.0/24 \
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \
    --authorization-mode=RBAC,Node \
    --kubelet-https=true \
    --enable-bootstrap-token-auth \
    --token-auth-file=/opt/kubernetes/cfg/token.csv \
    --service-node-port-range=30000-50000 \
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
    --etcd-cafile=/opt/etcd/ssl/ca.pem \
    --etcd-certfile=/opt/etcd/ssl/server.pem \
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem"

Notes:

- --logtostderr: write logs to standard error

- --v: log verbosity level

- --etcd-servers: addresses of the etcd cluster

- --bind-address: listen address

- --secure-port: HTTPS port

- --advertise-address: address advertised to the rest of the cluster

- --allow-privileged: allow privileged containers

- --service-cluster-ip-range: virtual IP range for Services

- --enable-admission-plugins: admission control plugins

- --authorization-mode: authorization modes; enables RBAC authorization and Node self-management

- --enable-bootstrap-token-auth: enables the TLS bootstrap feature, covered later

- --token-auth-file: the token file

- --service-node-port-range: port range allocated to NodePort-type Services

    # We cannot start the apiserver yet, because the token.csv file named in its configuration does not exist
    # Generate 16 random bytes (printed as 32 hex characters) to use as the token
    [root@Master01 ~]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '
    87ba208e8fe7dfd393b93bf0f7749898
    [root@Master01 ~]# head -c 16 /dev/urandom | od -An -t x | tr -d ' ' > /opt/kubernetes/cfg/token.csv
    [root@Master01 ~]# vim /opt/kubernetes/cfg/token.csv
    [root@Master01 ~]# cat /opt/kubernetes/cfg/token.csv    # the finished token file (add the missing fields by hand)
    df3334281501df44c2bea4db952c1ee8,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

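Notes:

df3334281501df44c2bea4db952c1ee8,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

- df3334281501df44c2bea4db952c1ee8: the random token, 16 bytes written as 32 hexadecimal characters

- kubelet-bootstrap: the user name the token authenticates as

- 10001: the user's UID

- system:kubelet-bootstrap: the group the user belongs to in this K8S cluster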
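The two manual steps (generate a token, then complete the line in vim) can be combined. The sketch below is one possible way to do it, using hypothetical function names; it uses `od -t x1` rather than the `od -t x` shown above so that the bytes are printed one at a time, and the user name, UID, and group are the values from this section.

```shell
#!/bin/sh
# Hypothetical helper: 16 random bytes rendered as 32 hex characters.
gen_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Hypothetical helper: build the token.csv line for a given token, in the
# order token,user,uid,"group" used in this section.
make_token_line() {
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}
```

A possible use is `make_token_line "$(gen_bootstrap_token)" > /opt/kubernetes/cfg/token.csv`. The same token must later be placed in `BOOTSTRAP_TOKEN` in kubeconfig.sh.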

    # Start kube-apiserver
    [root@Master01 ~]# systemctl daemon-reload
    [root@Master01 ~]# systemctl start kube-apiserver
    [root@Master01 ~]# ps -ef | grep kube-apiserver
    root 28492 1 23 22:39 ? 00:00:00 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.200.207:2379,https://192.168.200.208:2379,https://192.168.200.209:2379 --bind-address=192.168.200.207 --secure-port=6443 --advertise-address=192.168.200.207 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem
    root 28501 27502 0 22:39 pts/0 00:00:00 grep --color=auto kube-apiserver

(2) Deploying the kube-controller-manager component

    # On Master01, view the controller-manager.sh script written in advance
    [root@Master01 scripts]# ls controller-manager.sh
    controller-manager.sh
    [root@Master01 scripts]# cat controller-manager.sh
    #!/bin/bash
    MASTER_ADDRESS=$1
    cat <<FOF >/opt/kubernetes/cfg/kube-controller-manager
    KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\
    --v=4 \\
    --master=${MASTER_ADDRESS}:8080 \\
    --leader-elect=true \\
    --address=127.0.0.1 \\
    --service-cluster-ip-range=10.10.10.0/24 \\
    --cluster-name=kubernetes \\
    --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
    --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --root-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --experimental-cluster-signing-duration=87600h0m0s"
    FOF
    cat <<FOF >/usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
    ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    FOF
    systemctl daemon-reload
    systemctl enable kube-controller-manager
    systemctl start kube-controller-manager

    # Run the script to generate the controller-manager configuration file and systemd unit
    [root@Master01 scripts]# ./controller-manager.sh 127.0.0.1

    # Check whether the service has started
    [root@Master01 scripts]# ps -ef | grep kube
    root 28944 1 3 23:32 ? 00:00:08 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.200.207:2379,https://192.168.200.208:2379,https://192.168.200.209:2379 --bind-address=192.168.200.207 --secure-port=6443 --advertise-address=192.168.200.207 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem
    root 28963 1 1 23:34 ? 00:00:01 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.10.10.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem --root-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --experimental-cluster-signing-duration=87600h0m0s
    root 28994 28226 0 23:37 pts/1 00:00:00 grep --color=auto kube

(3) Deploying the kube-scheduler component

    # On Master01, view the scheduler.sh script prepared in advance
    [root@Master01 ~]# cd /server/scripts/
    [root@Master01 scripts]# cat scheduler.sh
    #!/bin/bash
    MASTER_ADDRESS=$1
    cat <<FOF >/opt/kubernetes/cfg/kube-scheduler
    KUBE_SCHEDULER_OPTS="--logtostderr=true \\
    --v=4 \\
    --master=${MASTER_ADDRESS}:8080 \\
    --leader-elect"
    FOF
    cat <<FOF >/usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes.Scheduler
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
    ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    FOF
    systemctl daemon-reload
    systemctl enable kube-scheduler
    systemctl restart kube-scheduler

    # Make the script executable, then run it
    [root@Master01 scripts]# ./scheduler.sh 127.0.0.1

    # Check whether the kube-scheduler process has started
    [root@Master01 scripts]# ps -ef | grep kube-scheduler | grep -v grep
    root 30020 1 0 17:52 ? 00:00:00 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

    # View the scheduler configuration file
    [root@Master01 ~]# cat /opt/kubernetes/cfg/kube-scheduler
    KUBE_SCHEDULER_OPTS="--logtostderr=true \
    --v=4 \
    --master=127.0.0.1:8080 \
    --leader-elect"

Notes:

- --master: connect to the local apiserver

- --leader-elect: when several instances of this component are running, elect a leader automatically (HA)

    # Finally, check the health of the master components
    # Symlink the K8S commands into the PATH
    [root@Master01 scripts]# cd ~
    [root@Master01 ~]# ls /opt/kubernetes/bin/
    kube-apiserver  kube-controller-manager  kubectl  kube-scheduler
    [root@Master01 ~]# ln -s /opt/kubernetes/bin/* /usr/local/bin/

    # Run the cluster health check
    [root@Master01 ~]# kubectl get cs
    NAME                 STATUS    MESSAGE              ERROR
    controller-manager   Healthy   ok
    scheduler            Healthy   ok
    etcd-2               Healthy   {"health":"true"}
    etcd-0               Healthy   {"health":"true"}
    etcd-1               Healthy   {"health":"true"}
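When this check is run from a script rather than read by eye, the output of `kubectl get cs` can be filtered for anything that is not `Healthy`. A minimal sketch, assuming the column layout shown above (NAME first, STATUS second); the function name `unhealthy_components` is hypothetical.

```shell
#!/bin/sh
# Hypothetical filter: read `kubectl get cs` output on stdin and print
# the NAME of every component whose STATUS column is not "Healthy".
unhealthy_components() {
    awk 'NR > 1 && $2 != "Healthy" { print $1 }'
}
```

Usage would be `kubectl get cs | unhealthy_components`; empty output means every listed component reported Healthy.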

2.8 单Master集群-在Node节点部署组件

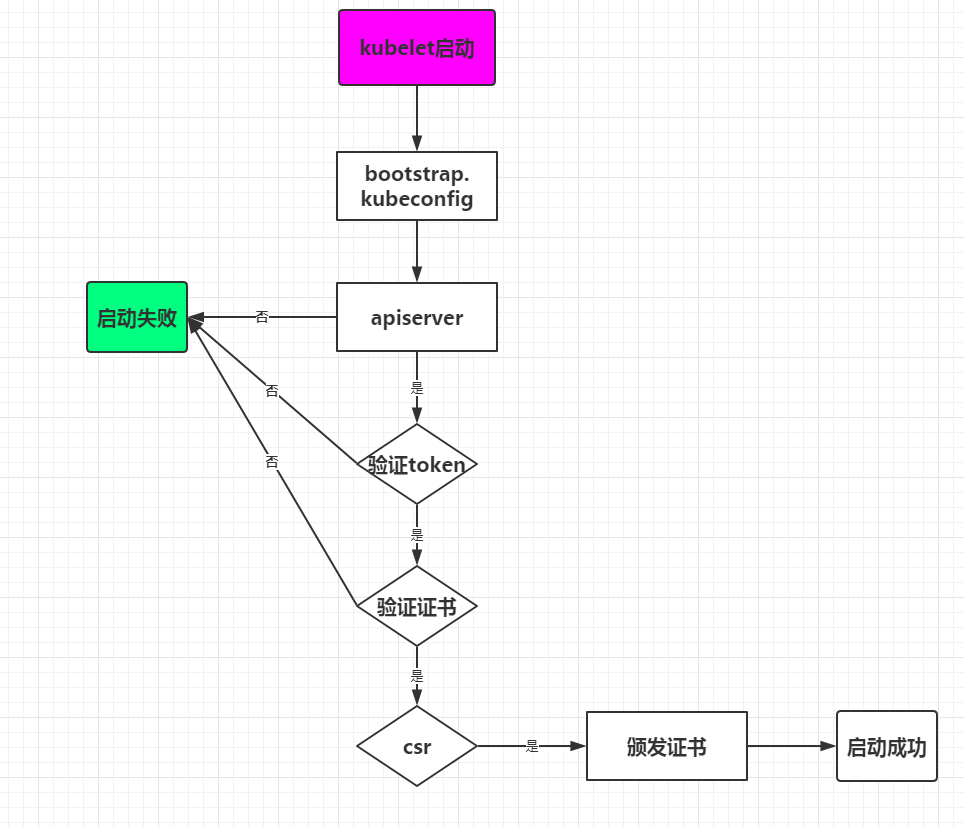

Master apiserver启用TLS认证后,Node节点kubelet组件想要加入集群,必须使用CA签发的有效证书才能与apiserver通信,当Node节点很多时,签署证书是一件很繁琐的事情,因此有了TLS Bootstrapping机制,kubelet会以一个低权限用户自动向apiserver申请证书,kubelet的证书由apiserver动态签署。

认证大致工作流程如图所示:

2.8.1 在Master节点将kubelet-bootstrap用户绑定到系统集群角色

    # On the master, bind the kubelet-bootstrap user (used to authenticate Nodes against the Master apiserver) to the system cluster role
    [root@Master01 ~]# cat /opt/kubernetes/cfg/token.csv
    df3334281501df44c2bea4db952c1ee8,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
    [root@Master01 ~]# which kubectl
    /usr/local/bin/kubectl
    [root@Master01 ~]# kubectl create clusterrolebinding kubelet-bootstrap \
    > --clusterrole=system:node-bootstrapper \
    > --user=kubelet-bootstrap
    clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

2.8.2 Creating the kubeconfig Files on the Master

    # On master01, view the kubeconfig.sh script prepared in advance
    # Note: BOOTSTRAP_TOKEN in the script must be set to your own token
    # Note: KUBE_APISERVER in the script must be set to your master node's IP
    [root@Master01 scripts]# cat kubeconfig.sh
    #!/bin/bash
    # Create the kubelet bootstrapping kubeconfig
    BOOTSTRAP_TOKEN=df3334281501df44c2bea4db952c1ee8    # important: everyone must change this line
    KUBE_APISERVER="https://192.168.200.207:6443"
    # Set the cluster parameters
    kubectl config set-cluster kubernetes \
    --certificate-authority=./ca.pem \
    --embed-certs=true \
    --server=${KUBE_APISERVER} \
    --kubeconfig=bootstrap.kubeconfig
    # Set the client credentials
    kubectl config set-credentials kubelet-bootstrap \
    --token=${BOOTSTRAP_TOKEN} \
    --kubeconfig=bootstrap.kubeconfig
    # Set the context parameters
    kubectl config set-context default \
    --cluster=kubernetes \
    --user=kubelet-bootstrap \
    --kubeconfig=bootstrap.kubeconfig
    # Set the default context
    kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
    #-----------------------------------------
    # Create the kube-proxy kubeconfig file
    kubectl config set-cluster kubernetes \
    --certificate-authority=./ca.pem \
    --embed-certs=true \
    --server=${KUBE_APISERVER} \
    --kubeconfig=kube-proxy.kubeconfig
    kubectl config set-credentials kube-proxy \
    --client-certificate=./kube-proxy.pem \
    --client-key=./kube-proxy-key.pem \
    --embed-certs=true \
    --kubeconfig=kube-proxy.kubeconfig
    kubectl config set-context default \
    --cluster=kubernetes \
    --user=kube-proxy \
    --kubeconfig=kube-proxy.kubeconfig
    kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

    # Copy the script into /server/scripts/k8s-cert/
    # It must be run from the directory that holds the k8s cluster certificates generated earlier, because it uses relative paths
    [root@Master01 scripts]# chmod +x kubeconfig.sh
    [root@Master01 scripts]# ls kubeconfig.sh
    kubeconfig.sh
    [root@Master01 scripts]# pwd
    /server/scripts
    [root@Master01 scripts]# cp kubeconfig.sh k8s-cert/
    [root@Master01 k8s-cert]# ./kubeconfig.sh
    Cluster "kubernetes" set.
    User "kubelet-bootstrap" set.
    Context "default" created.
    Switched to context "default".
    Cluster "kubernetes" set.
    User "kube-proxy" set.
    Context "default" created.
    Switched to context "default".

    # View the generated files
    [root@Master01 k8s-cert]# ls bootstrap.kubeconfig kube-proxy.kubeconfig
    bootstrap.kubeconfig  kube-proxy.kubeconfig

Copy the two generated files to the /opt/kubernetes/cfg directory on the Node

    [root@Master01 k8s-cert]# which scp
    /usr/bin/scp
    [root@Master01 k8s-cert]# scp bootstrap.kubeconfig kube-proxy.kubeconfig 192.168.200.209:/opt/kubernetes/cfg/
    root@192.168.200.209's password:
    bootstrap.kubeconfig     100% 2169     4.0MB/s   00:00
    kube-proxy.kubeconfig    100% 6271    10.0MB/s   00:00

On the Node, confirm that the files arrived

    [root@node01 ~]# cd /opt/kubernetes/cfg/
    [root@node01 cfg]# ls
    bootstrap.kubeconfig  flanneld  kube-proxy.kubeconfig
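Since `KUBE_APISERVER` has to be edited by hand in kubeconfig.sh, it can be worth confirming on the Node which apiserver address actually ended up in the copied files. The sketch below reads the `server:` field straight out of a kubeconfig file with awk; the function name `kubeconfig_server` is hypothetical, and it assumes the file contains a single cluster entry, as the ones generated above do.

```shell
#!/bin/sh
# Hypothetical helper: print the value of the first "server:" field
# found in the kubeconfig file given as $1.
kubeconfig_server() {
    awk '$1 == "server:" { print $2; exit }' "$1"
}
```

For the files in this section, `kubeconfig_server /opt/kubernetes/cfg/bootstrap.kubeconfig` should print the address that was set in `KUBE_APISERVER`.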

2.8.3 Deploying the kubelet Component on the Node

First, copy two binaries from the Kubernetes release package extracted earlier into /opt/kubernetes/bin on the Node

    # Copy kubelet and kube-proxy from the extracted K8S package to the Node
    [root@Master01 ~]# pwd
    /root
    [root@Master01 ~]# cd kubernetes
    [root@Master01 kubernetes]# ls
    addons  kubernetes-src.tar.gz  LICENSES  server
    [root@Master01 kubernetes]# cd server/bin/
    [root@Master01 bin]# ls kubelet kube-proxy
    kubelet  kube-proxy
    [root@Master01 bin]# scp kubelet kube-proxy 192.168.200.209:/opt/kubernetes/bin/
    root@192.168.200.209's password:
    kubelet       100%  169MB  99.0MB/s   00:01
    kube-proxy    100%   48MB  94.5MB/s   00:00

    # On the Node, confirm that the files arrived
    [root@node01 cfg]# cd /opt/kubernetes/bin/
    [root@node01 bin]# ls
    flanneld  kubelet  kube-proxy  mk-docker-opts.sh

Then copy the kubelet.sh script prepared on the Master to the Node

    [root@Master01 scripts]# pwd
    /server/scripts
    [root@Master01 scripts]# ls kubelet.sh
    kubelet.sh
    [root@Master01 scripts]# chmod +x kubelet.sh
    [root@Master01 scripts]# scp kubelet.sh 192.168.200.209:~/
    root@192.168.200.209's password:
    kubelet.sh    100% 1218     1.8MB/s   00:00

    # On the Node, confirm the copy and run the script
    [root@node01 ~]# ls kubelet.sh
    kubelet.sh
    [root@Master01 scripts]# cat kubelet.sh
    #!/bin/bash
    NODE_ADDRESS=$1
    DNS_SERVER_IP=${2:-"10.0.0.2"}
    cat <<FOF >/opt/kubernetes/cfg/kubelet
    KUBELET_OPTS="--logtostderr=true \\
    --v=4 \\
    --hostname-override=${NODE_ADDRESS} \\
    --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
    --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
    --config=/opt/kubernetes/cfg/kubelet.config \\
    --cert-dir=/opt/kubernetes/ssl \\
    --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
    FOF
    cat <<FOF >/opt/kubernetes/cfg/kubelet.config
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    address: ${NODE_ADDRESS}
    port: 10250
    readOnlyPort: 10255
    cgroupDriver: cgroupfs
    clusterDNS: ["${DNS_SERVER_IP}"]
    clusterDomain: cluster.local.
    failSwapOn: false
    authentication:
      anonymous:
        enabled: true
    FOF
    cat <<FOF >/usr/lib/systemd/system/kubelet.service
    [Unit]
    Description=Kubernetes Kubelet
    After=dockerd.service
    Requires=dockerd.service
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kubelet
    ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
    Restart=on-failure
    KillMode=process
    [Install]
    WantedBy=multi-user.target
    FOF
    systemctl daemon-reload
    systemctl enable kubelet
    systemctl restart kubelet
    [root@node01 ~]# sh kubelet.sh 192.168.200.209

    # Check whether kubelet has started
    [root@node01 ~]# ps -ef | grep kubelet
    root 61623 1 3 22:42 ? 00:00:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=192.168.200.209 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0
    root 61635 60348 0 22:42 pts/0 00:00:00 grep --color=auto kubelet

At regular intervals, the kubelet on the Node uses the low-privilege kubelet-bootstrap user to request a certificate from the apiserver on the master. The request stays pending until someone on the master approves it by hand, after which the certificate is issued to the node.

    # On the Master, approve the Node's request to join the cluster
    [root@Master01 scripts]# kubectl get csr    # list the requests received on the master (Pending: awaiting a decision)
    NAME                                                   AGE     REQUESTOR           CONDITION
    node-csr-gHxjWJd9EfxOw3Q0_0HgiBUqJ7AI07FPbxLpKgaYrVg   7m34s   kubelet-bootstrap   Pending

    # Approve the request manually: kubectl certificate approve followed by the request name
    [root@Master01 scripts]# kubectl certificate approve node-csr-gHxjWJd9EfxOw3Q0_0HgiBUqJ7AI07FPbxLpKgaYrVg
    certificatesigningrequest.certificates.k8s.io/node-csr-gHxjWJd9EfxOw3Q0_0HgiBUqJ7AI07FPbxLpKgaYrVg approved

    # Check the request status again (Approved,Issued)
    [root@Master01 scripts]# kubectl get csr
    NAME                                                   AGE     REQUESTOR           CONDITION
    node-csr-gHxjWJd9EfxOw3Q0_0HgiBUqJ7AI07FPbxLpKgaYrVg   8m49s   kubelet-bootstrap   Approved,Issued

    # On the master, list the Nodes that have been issued certificates
    [root@Master01 scripts]# kubectl get node
    NAME              STATUS   ROLES    AGE   VERSION
    192.168.200.209   Ready    <none>   32s   v1.12.1
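Copying the long request name by hand is error-prone. As a sketch of an alternative, the hypothetical filter below extracts the names of all requests whose CONDITION is `Pending` from the `kubectl get csr` output in the format shown above. Approving everything it prints is a convenience for a lab cluster and is an assumption of this example, not part of the original procedure.

```shell
#!/bin/sh
# Hypothetical filter: read `kubectl get csr` output on stdin and print
# the NAME of every request whose last column (CONDITION) is "Pending".
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

With GNU xargs this could be combined as `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`; reviewing the printed names first is the safer habit.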

2.8.4 Deploying the kube-proxy Component on the Node

First copy the kube-proxy.sh script prepared on the Master to the Node

    [root@Node01 ~]# cat kube-proxy.sh
    #!/bin/bash
    NODE_ADDRESS=$1
    cat <<FOF >/opt/kubernetes/cfg/kube-proxy
    KUBE_PROXY_OPTS="--logtostderr=true \\
    --v=4 \\
    --hostname-override=${NODE_ADDRESS} \\
    --cluster-cidr=10.0.0.0/24 \\
    --proxy-mode=ipvs \\
    --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
    FOF
    cat <<FOF >/usr/lib/systemd/system/kube-proxy.service
    [Unit]
    Description=Kubernetes Proxy
    After=network.target
    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
    ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    FOF
    systemctl daemon-reload
    systemctl enable kube-proxy
    systemctl restart kube-proxy
    [root@Master01 scripts]# ls kube-proxy.sh
    kube-proxy.sh
    [root@Master01 scripts]# chmod +x kube-proxy.sh
    [root@Master01 scripts]# scp kube-proxy.sh 192.168.200.209:~/
    root@192.168.200.209's password:
    kube-proxy.sh

    # On the Node, confirm the copy and run the script
    [root@node01 ~]# ls kube-proxy.sh
    kube-proxy.sh
    [root@node01 ~]# sh kube-proxy.sh 192.168.200.209
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

    # Check whether it started successfully
    [root@node01 ~]# ps -ef | grep proxy
    root 62458 1 0 23:12 ? 00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=192.168.200.209 --cluster-cidr=192.168.200.0/24 --proxy-mode=ipvs --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
    root 62581 60348 0 23:12 pts/0 00:00:00 grep --color=auto proxy

2.8.5 Deploying a Second Node

    # The contents of /opt/kubernetes are the same on every Node,
    # so we copy the /opt/kubernetes directory from Node01
    [root@node01 ~]# scp -r /opt/kubernetes 192.168.200.210:/opt
    root@192.168.200.210's password:
    flanneld                                  100%   34MB   93.7MB/s   00:00
    mk-docker-opts.sh                         100% 2139      2.1MB/s   00:00
    kubelet                                   100%  169MB   98.5MB/s   00:01
    kube-proxy                                100%   48MB  103.6MB/s   00:00
    flanneld                                  100%  241    739.6KB/s   00:00
    bootstrap.kubeconfig                      100% 2169      3.2MB/s   00:00
    kube-proxy.kubeconfig                     100% 6271     11.6MB/s   00:00
    kubelet                                   100%  379    814.7KB/s   00:00
    kubelet.config                            100%  274    682.5KB/s   00:00
    kubelet.kubeconfig                        100% 2298      5.6MB/s   00:00
    kube-proxy                                100%  196    568.7KB/s   00:00
    kubelet.crt                               100% 2197      5.3MB/s   00:00
    kubelet.key                               100% 1679      3.4MB/s   00:00
    kubelet-client-2019-04-08-22-43-32.pem    100% 1277      2.5MB/s   00:00
    kubelet-client-current.pem                100% 1277      2.9MB/s   00:00

    # Copy the systemd unit files from Node01 to the same directory on Node02
    [root@node01 ~]# scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service 192.168.200.210:/usr/lib/systemd/system/
    root@192.168.200.210's password:
    kubelet.service       100%  266   542.4KB/s   00:00
    kube-proxy.service    100%  231   521.7KB/s   00:00

    # On Node02, delete every certificate under /opt/kubernetes/ssl/ (they were issued to Node01)
    [root@node02 kubernetes]# pwd
    /opt/kubernetes
    [root@node02 kubernetes]# ls ssl/
    kubelet-client-2019-04-08-22-43-32.pem  kubelet.crt
    kubelet-client-current.pem              kubelet.key
    [root@node02 kubernetes]# rm -f ssl/*
    [root@node02 kubernetes]# ls ssl/

Next, change the IP address in the new Node's configuration files to the Node's own address

    # Go to /opt/kubernetes/cfg on the new Node
    # Change the IP address in three files, kubelet, kubelet.config, and kube-proxy, to the new Node's IP
    [root@node02 ~]# cd /opt/kubernetes/cfg/
    [root@node02 cfg]# ls
    bootstrap.kubeconfig  kubelet         kubelet.kubeconfig  kube-proxy.kubeconfig
    flanneld              kubelet.config  kube-proxy
    [root@node02 cfg]# grep "209" *
    flanneld:FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.200.207:2379,https://192.168.200.208:2379,https://192.168.200.209:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem"
    kubelet:--hostname-override=192.168.200.209 \       # change this to the new Node's IP
    kubelet.config:address: 192.168.200.209             # change this to the new Node's IP
    kube-proxy:--hostname-override=192.168.200.209 \    # change this to the new Node's IP
    [root@node02 cfg]# vim kubelet
    [root@node02 cfg]# vim kubelet.config
    [root@node02 cfg]# vim kube-proxy
    [root@node02 cfg]# grep "210" *
    kubelet:--hostname-override=192.168.200.210 \
    kubelet.config:address: 192.168.200.210
    kube-proxy:--hostname-override=192.168.200.210 \
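The three vim edits can also be done in one pass. The sketch below is a hypothetical helper that rewrites the old Node IP to the new one in exactly the three files named above and leaves `flanneld` alone, since its etcd endpoint list legitimately contains 192.168.200.209. It assumes GNU sed (`sed -i` without a suffix argument), which is what CentOS-style hosts ship.

```shell
#!/bin/sh
# Hypothetical helper: replace OLD_IP with NEW_IP in the kubelet,
# kubelet.config and kube-proxy files under CFG_DIR.
# Usage: retarget_node_ip OLD_IP NEW_IP CFG_DIR
retarget_node_ip() {
    old=$1
    new=$2
    dir=$3
    # Escape the dots so they match literally rather than any character.
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    for f in kubelet kubelet.config kube-proxy; do
        sed -i "s/${old_re}/${new}/g" "$dir/$f"
    done
}
```

On Node02 this would be called as `retarget_node_ip 192.168.200.209 192.168.200.210 /opt/kubernetes/cfg`, followed by the same `grep "210" *` check used above.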

With that done, we can start the kubelet and kube-proxy services

    [root@node02 cfg]# systemctl restart kubelet
    [root@node02 cfg]# systemctl restart kube-proxy
    [root@node02 cfg]# ps -ef | grep kube
    root 51230 1 3 20:32 ? 00:00:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=192.168.200.210 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0
    root 51246 1 1 20:32 ? 00:00:00 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=192.168.200.210 --cluster-cidr=192.168.200.0/24 --proxy-mode=ipvs --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
    root 51377 51072 0 20:32 pts/0 00:00:00 grep --color=auto kube

Then, on the Master, check whether a new node has asked to join the cluster

    [root@Master01 ~]# kubectl get csr
    NAME                                                   AGE    REQUESTOR           CONDITION
    node-csr-fDKZPFApSaC7XGP7l1Ik3EYsAbosKXYg7mj9cFPp3Vg   117s   kubelet-bootstrap   Pending
    node-csr-gHxjWJd9EfxOw3Q0_0HgiBUqJ7AI07FPbxLpKgaYrVg   21h    kubelet-bootstrap   Approved,Issued

    # Manually approve the node's request to join the cluster
    [root@Master01 ~]# kubectl get csr
    NAME                                                   AGE    REQUESTOR           CONDITION
    node-csr-fDKZPFApSaC7XGP7l1Ik3EYsAbosKXYg7mj9cFPp3Vg   117s   kubelet-bootstrap   Pending
    node-csr-gHxjWJd9EfxOw3Q0_0HgiBUqJ7AI07FPbxLpKgaYrVg   21h    kubelet-bootstrap   Approved,Issued
    [root@Master01 ~]# kubectl certificate approve node-csr-fDKZPFApSaC7XGP7l1Ik3EYsAbosKXYg7mj9cFPp3Vg
    certificatesigningrequest.certificates.k8s.io/node-csr-fDKZPFApSaC7XGP7l1Ik3EYsAbosKXYg7mj9cFPp3Vg approved
    [root@Master01 ~]# kubectl get node
    NAME              STATUS   ROLES    AGE   VERSION
    192.168.200.209   Ready    <none>   22h   v1.12.1
    192.168.200.210   Ready    <none>   51s   v1.12.1

2.9 运行一个测试示例检验集群工作状态

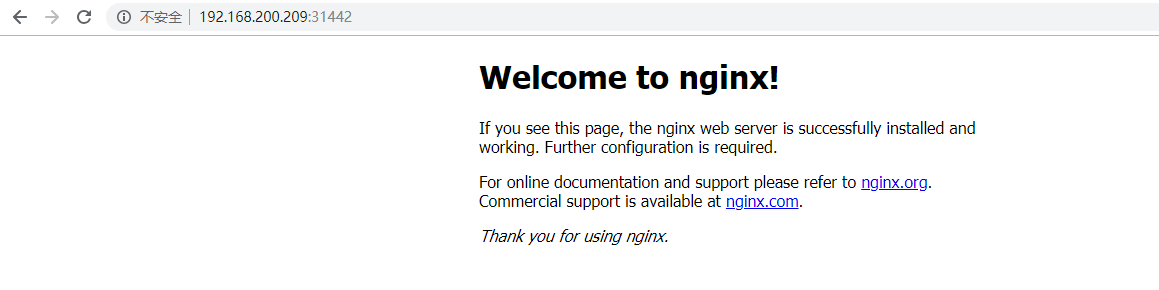

#创建一个nginx的pod并运行[root@Master01 ~]# kubectl run nginx --image=nginxkubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.deployment.apps/nginx created#查看pod情况,READY为0/1是还没有创建好;STATUS状态为容器正在建立[root@Master01 ~]# kubectl get podNAME READY STATUS RESTARTS AGEnginx-dbddb74b8-ggpxm 0/1 ContainerCreating 0 9s[root@Master01 ~]# kubectl get podNAME READY STATUS RESTARTS AGEnginx-dbddb74b8-ggpxm 0/1 ContainerCreating 0 15s[root@Master01 ~]# kubectl get podNAME READY STATUS RESTARTS AGEnginx-dbddb74b8-ggpxm 0/1 ContainerCreating 0 38s[root@Master01 ~]# kubectl get podNAME READY STATUS RESTARTS AGEnginx-dbddb74b8-ggpxm 0/1 ContainerCreating 0 52s#过了一会儿,容器终于创建好并运行[root@Master01 ~]# kubectl get podNAME READY STATUS RESTARTS AGEnginx-dbddb74b8-ggpxm 1/1 Running 0 53s#让创建的Pod开放80端口,以便于外部能够访问[root@Master01 ~]# kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePortservice/nginx exposed#查看集群服务的开启情况[root@Master01 ~]# kubectl get svcNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGEkubernetes ClusterIP 10.0.0.1 <none> 443/TCP 11dnginx NodePort 10.0.0.192 <none> 80:31442/TCP 60s

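Notes:

- CLUSTER-IP: 10.0.0.192 is the Service's virtual IP, used to reach the pod from inside the cluster

- PORT(S): 80:31442/TCP

- 80 is the port on which the pod is exposed inside the cluster

- 31442/TCP is the NodePort on which the pod is exposed outside the cluster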
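To make the `PORT(S)` format concrete, the sketch below splits a value such as `80:31442/TCP` with plain shell parameter expansion and prints the part between the colon and the slash, which is the externally reachable port. The function name `node_port_of` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: given a PORT(S) value like "80:31442/TCP",
# print the port after the colon and before the slash.
node_port_of() {
    spec=$1
    spec=${spec#*:}               # drop everything up to and including ":"
    printf '%s\n' "${spec%%/*}"   # drop the "/TCP" protocol suffix
}
```

For the Service above, `node_port_of "80:31442/TCP"` yields the port number to use in a browser URL against a Node's IP.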

2.9.1 Testing access to the Pod from inside the K8S cluster (on both Node machines)

    # Note: if the lab spans more than one day, the flannel service on the Node VMs may have died,
    # so restart the flanneld and dockerd services first
    [root@node01 ~]# systemctl daemon-reload
    [root@node01 ~]# systemctl restart flanneld
    [root@node01 ~]# systemctl restart dockerd
    [root@node01 ~]# ps -ef | grep flanneld
    root 76933 1 0 21:49 ? 00:00:00 /opt/kubernetes/bin/flanneld --ip-masq --etcd-endpoints=https://192.168.200.207:2379,https://192.168.200.208:2379,https://192.168.200.209:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem
    [root@node02 cfg]# systemctl daemon-reload
    [root@node02 cfg]# systemctl restart flanneld
    [root@node02 cfg]# systemctl restart dockerd
    [root@node02 cfg]# ps -ef | grep flanneld
    root 59410 1 0 21:49 ? 00:00:00 /opt/kubernetes/bin/flanneld --ip-masq --etcd-endpoints=https://192.168.200.207:2379,https://192.168.200.208:2379,https://192.168.200.209:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem
    # Access 10.0.0.192:80 from Node01 and from Node02
    [root@node01 ~]# curl 10.0.0.192:80
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>
    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>
    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>
    [root@node02 ~]# curl 10.0.0.192:80
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>
    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>
    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>
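
The manual curl check above can be turned into a small pass/fail probe. This is only a sketch: `is_nginx_welcome` and `check_endpoint` are hypothetical helper names, and the check assumes the pod still serves the stock nginx welcome page.

```shell
#!/bin/sh
# Succeeds (exit 0) when the HTML on stdin looks like the default nginx page.
is_nginx_welcome() {
    grep -q '<title>Welcome to nginx!</title>'
}

# Hypothetical helper: fetch one URL and print a one-line verdict.
check_endpoint() {
    url=$1
    if curl -s --max-time 5 "$url" | is_nginx_welcome; then
        echo "OK   $url"
    else
        echo "FAIL $url"
        return 1
    fi
}
```

On each Node, `check_endpoint http://10.0.0.192:80` should then print an `OK` line if flanneld and the Service are working.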

2.9.2 Testing access to the Pod from outside the K8S cluster

We do not have a load balancer (LB) yet, so we first check which Node the pod was created on.

    [root@Master01 ~]# kubectl get pods -o wide
    NAME                    READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE
    nginx-dbddb74b8-ggpxm   1/1     Running   2          19m   172.17.95.2   192.168.200.209   <none>
    [root@Master01 ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP        11d
    nginx        NodePort    10.0.0.192   <none>        80:31442/TCP   22m

The output shows that the Nginx pod was created on the Node with IP 192.168.200.209.

So, from a browser on the host machine, we open http://192.168.200.209:31442
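
The URL can also be assembled from the two listings instead of being read off by eye. The sketch below uses two hypothetical helpers, `pod_node_of` and `node_port_of`, and assumes the column layout printed by this kubectl version (NODE in column 7, PORT(S) in column 5).

```shell
#!/bin/sh
# Print the NODE column of the first pod whose name starts with $1,
# reading `kubectl get pods -o wide` output from stdin.
pod_node_of() {
    awk -v prefix="$1" 'index($1, prefix) == 1 { print $7; exit }'
}

# Print the NodePort of service $1, reading `kubectl get svc` output from stdin.
node_port_of() {
    awk -v svc="$1" '$1 == svc {
        split($5, a, "[:/]")   # "80:31442/TCP" -> a[1]=80, a[2]=31442, a[3]=TCP
        print a[2]
    }'
}
```

For example: `echo "http://$(kubectl get pods -o wide | pod_node_of nginx):$(kubectl get svc | node_port_of nginx)"`. A NodePort Service is normally opened by kube-proxy on every Node, so the other Node's IP should work with the same port as well.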

2.9.3 Viewing the Pod's access log (binding cluster permissions for the anonymous user)

    # On the master, view the pod's access log
    [root@Master01 ~]# kubectl get pods
    NAME                    READY   STATUS    RESTARTS   AGE
    nginx-dbddb74b8-ggpxm   1/1     Running   2          29m
    [root@Master01 ~]# kubectl logs nginx-dbddb74b8-ggpxm
    Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-dbddb74b8-ggpxm)

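Reading the log through kubectl is rejected for lack of permission. The error shows that the request is handled as the user system:anonymous, which is not allowed to get nodes/proxy.

The fix used in this course is to raise the cluster permissions of system:anonymous to cluster-admin (the built-in administrator role). This gives unauthenticated requests full control of the cluster, so it is only suitable for a lab environment.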

    # Bind the anonymous user system:anonymous to the cluster role cluster-admin
    [root@Master01 ~]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous
    clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created
    [root@Master01 ~]# kubectl logs nginx-dbddb74b8-ggpxm
    172.17.95.1 - - [09/Apr/2019:13:51:56 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
    172.17.16.0 - - [09/Apr/2019:13:52:15 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
    172.17.95.1 - - [09/Apr/2019:14:00:31 +0000] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36" "-"
    2019/04/09 14:00:31 [error] 6#6: *3 open() "/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), client: 172.17.95.1, server: localhost, request: "GET /favicon.ico HTTP/1.1", host: "192.168.200.209:31442", referrer: "http://192.168.200.209:31442/"
    172.17.95.1 - - [09/Apr/2019:14:00:31 +0000] "GET /favicon.ico HTTP/1.1" 404 556 "http://192.168.200.209:31442/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36" "-"
    172.17.95.1 - - [09/Apr/2019:14:02:03 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36" "-"
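
Whether the binding took effect can be checked without reading a pod log again. This is a minimal sketch built on `kubectl auth can-i` with impersonation; the function names are hypothetical, and it assumes the account behind your kubectl is allowed to impersonate users.

```shell
#!/bin/sh
# Ask the API server whether system:anonymous may perform
# verb $1 on resource $2 with subresource $3.
anonymous_can() {
    kubectl auth can-i "$1" "$2" --subresource="$3" --as=system:anonymous
}

# Report on the exact access that `kubectl logs` was denied in the error above.
report_anonymous_log_access() {
    if anonymous_can get nodes proxy >/dev/null 2>&1; then
        echo "system:anonymous may get nodes/proxy"
    else
        echo "system:anonymous may NOT get nodes/proxy"
    fi
}
```

Once the lab is finished, `kubectl delete clusterrolebinding cluster-system-anonymous` removes the binding again.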

2.10 Deploying the Web UI (Dashboard)

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dashboard

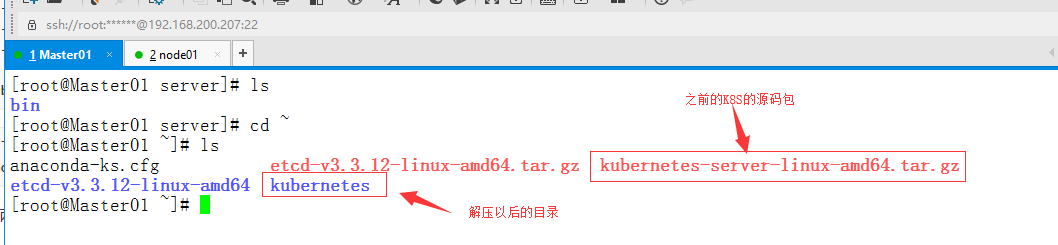

The Kubernetes source package we used earlier already contains the dashboard add-on.

    # On Master01, do the following
    [root@Master01 ~]# ls
    anaconda-ks.cfg  etcd-v3.3.12-linux-amd64  etcd-v3.3.12-linux-amd64.tar.gz  kubernetes  kubernetes-server-linux-amd64.tar.gz
    # Enter the unpacked kubernetes source package directory
    [root@Master01 ~]# cd kubernetes
    [root@Master01 kubernetes]# ls
    addons  kubernetes-src.tar.gz  LICENSES  server
    # Unpack kubernetes-src.tar.gz
    [root@Master01 kubernetes]# tar xf kubernetes-src.tar.gz
    [root@Master01 kubernetes]# ls
    addons             CHANGELOG.md        docs                   LICENSES                  OWNERS_ALIASES     server       translations
    api                cluster             Godeps                 logo                      pkg                staging      vendor
    build              cmd                 hack                   Makefile                  plugin             SUPPORT.md   WORKSPACE
    BUILD.bazel        code-of-conduct.md  kubernetes-src.tar.gz  Makefile.generated_files  README.md          test
    CHANGELOG-1.12.md  CONTRIBUTING.md     LICENSE                OWNERS                    SECURITY_CONTACTS  third_party
    # Enter the dashboard add-on directory
    [root@Master01 kubernetes]# cd cluster/addons/dashboard/
    [root@Master01 dashboard]# pwd
    /root/kubernetes/cluster/addons/dashboard
    [root@Master01 dashboard]# ls
    dashboard-configmap.yaml   dashboard-rbac.yaml    dashboard-service.yaml  OWNERS
    dashboard-controller.yaml  dashboard-secret.yaml  MAINTAINERS.md          README.md
    # Create the dashboard components one by one
    [root@Master01 dashboard]# kubectl create -f dashboard-configmap.yaml
    configmap/kubernetes-dashboard-settings created
    [root@Master01 dashboard]# kubectl create -f dashboard-rbac.yaml
    role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
    rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
    [root@Master01 dashboard]# kubectl create -f dashboard-secret.yaml
    secret/kubernetes-dashboard-certs created
    secret/kubernetes-dashboard-key-holder created
    # Change the image address on line 34 of dashboard-controller.yaml, as shown below
    [root@Master01 dashboard]# sed -n '34p' dashboard-controller.yaml
    image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3
    [root@Master01 dashboard]# vim dashboard-controller.yaml +34
    # After the change, the line reads
    [root@Master01 dashboard]# sed -n '34p' dashboard-controller.yaml
    image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.0
    # Create the components defined in this file
    [root@Master01 dashboard]# kubectl create -f dashboard-controller.yaml
    serviceaccount/kubernetes-dashboard created
    deployment.apps/kubernetes-dashboard created
    # Check that the dashboard image is running
    # The pod started earlier for testing
    [root@Master01 dashboard]# kubectl get pods
    NAME                    READY   STATUS    RESTARTS   AGE
    nginx-dbddb74b8-ggpxm   1/1     Running   2          11d
    # With the namespace specified, the dashboard pod shows up
    [root@Master01 dashboard]# kubectl get pods -n kube-system
    NAME                                    READY   STATUS    RESTARTS   AGE
    kubernetes-dashboard-6bff7dc67d-jl7pg   1/1     Running   0          51s
    # Read the dashboard pod's log to see whether it started successfully
    [root@Master01 ~]# kubectl logs kubernetes-dashboard-6bff7dc67d-jl7pg -n kube-system
    2019/04/21 03:55:55 Using in-cluster config to connect to apiserver
    2019/04/21 03:55:55 Using service account token for csrf signing
    2019/04/21 03:55:55 No request provided. Skipping authorization
    2019/04/21 03:55:55 Starting overwatch
    2019/04/21 03:55:55 Successful initial request to the apiserver, version: v1.12.1
    2019/04/21 03:55:55 Generating JWE encryption key
    2019/04/21 03:55:55 New synchronizer has been registered: kubernetes-dashboard-key-holder-kube-system. Starting
    2019/04/21 03:55:55 Starting secret synchronizer for kubernetes-dashboard-key-holder in namespace kube-system
    2019/04/21 03:55:57 Initializing JWE encryption key from synchronized object
    2019/04/21 03:55:57 Creating in-cluster Heapster client
    2019/04/21 03:55:57 Auto-generating certificates
    2019/04/21 03:55:57 Metric client health check failed: the server could not find the requested resource (get services heapster). Retrying in 30 seconds.
    2019/04/21 03:55:57 Successfully created certificates
    2019/04/21 03:55:57 Serving securely on HTTPS port: 8443

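Output like the above means the dashboard has started successfully.

Metric client health check failed: the server could not find the requested resource (get services heapster). Retrying in 30 seconds.

This message can be ignored; it only says that no heapster service was found in the cluster.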
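
The image change made above with vim can also be scripted. The following is a sketch, not part of the original procedure: `set_manifest_image` is a hypothetical helper, and it assumes the manifest contains only one `image:` line, as the dashboard controller file appears to.

```shell
#!/bin/sh
# Rewrite every "image:" line in manifest $1 to use image $2,
# keeping the original indentation.
set_manifest_image() {
    file=$1
    image=$2
    tmp=$(mktemp) || return 1
    if sed "s|^\([[:space:]]*image:\).*|\1 $image|" "$file" > "$tmp"; then
        cat "$tmp" > "$file"    # overwrite in place so file permissions are kept
        rm -f "$tmp"
    else
        rm -f "$tmp"
        return 1
    fi
}
```

Usage: `set_manifest_image dashboard-controller.yaml registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.0`, followed by `sed -n '34p' dashboard-controller.yaml` to confirm the result.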

    # Open dashboard-service.yaml and add one line
    [root@Master01 dashboard]# pwd
    /root/kubernetes/cluster/addons/dashboard
    [root@Master01 dashboard]# vim dashboard-service.yaml
    [root@Master01 dashboard]# cat dashboard-service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kubernetes-dashboard
      namespace: kube-system
      labels:
        k8s-app: kubernetes-dashboard
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      type: NodePort    # this line was added
      selector:
        k8s-app: kubernetes-dashboard
      ports:
      - port: 443
        targetPort: 8443
    # Create the Service defined in this file
    [root@Master01 dashboard]# kubectl create -f dashboard-service.yaml
    service/kubernetes-dashboard created
    # Check which Node the dashboard was created on
    [root@Master01 ~]# kubectl get pods -o wide -n kube-system
    NAME                                    READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE
    kubernetes-dashboard-6bff7dc67d-jl7pg   1/1     Running   1          8h    172.17.12.3   192.168.200.209   <none>
    # Check the internal and external ports opened for the dashboard
    [root@Master01 ~]# kubectl get svc -n kube-system
    NAME                   TYPE       CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
    kubernetes-dashboard   NodePort   10.0.0.46    <none>        443:42460/TCP   5h37m

Note:

The output shows that the dashboard was created on Node 192.168.200.209.

The internal access port is 443 and the external access port (NodePort) is 42460.
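
The external port can also be queried directly rather than read from the table. This is a sketch using kubectl's `-o jsonpath` output; the function names are hypothetical and the Service name and namespace are the ones created above.

```shell
#!/bin/sh
# Print the NodePort of the kubernetes-dashboard Service in kube-system.
dashboard_node_port() {
    kubectl -n kube-system get svc kubernetes-dashboard \
        -o jsonpath='{.spec.ports[0].nodePort}'
}

# Print the HTTPS URL of the dashboard for node IP $1.
dashboard_url() {
    port=$(dashboard_node_port) || return 1
    printf 'https://%s:%s\n' "$1" "$port"
}
```

With the values shown above, `dashboard_url 192.168.200.209` would be expected to print `https://192.168.200.209:42460`.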

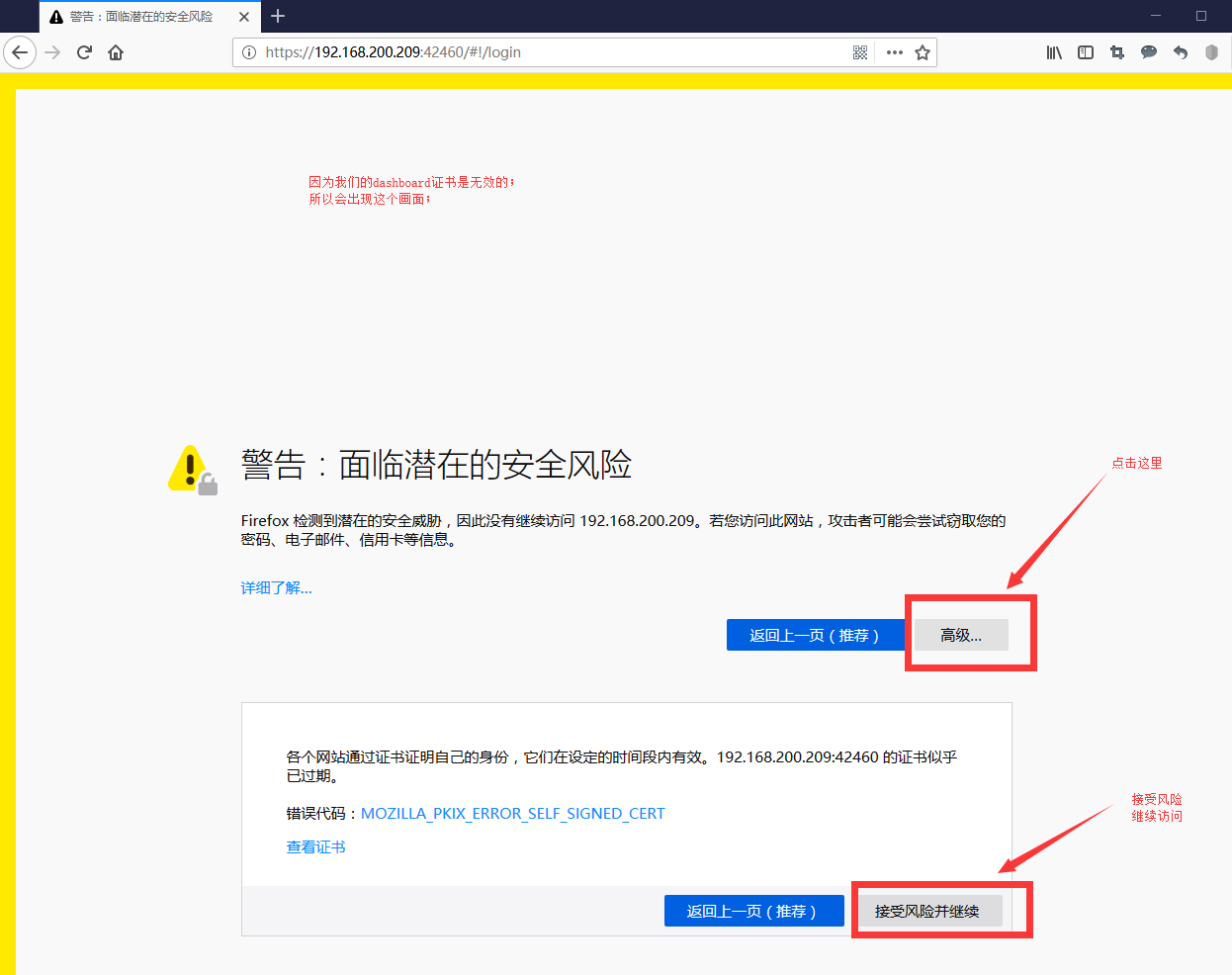

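Because this is encrypted HTTPS and the certificates in use are not trusted by browsers, some browsers may refuse to open the page.

If you cannot open the page at this point, turn off all antivirus software, install the Firefox browser, and try again.

Accessing it with Firefox: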

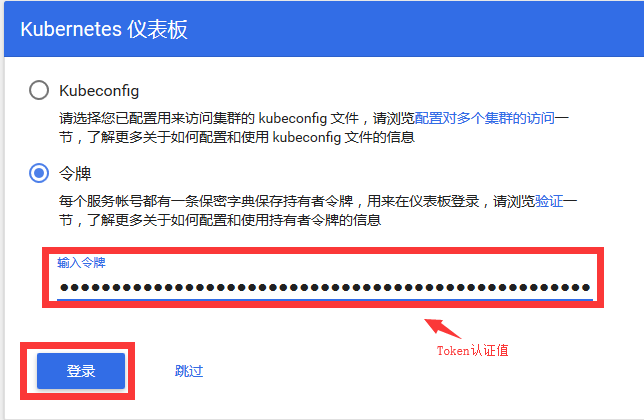

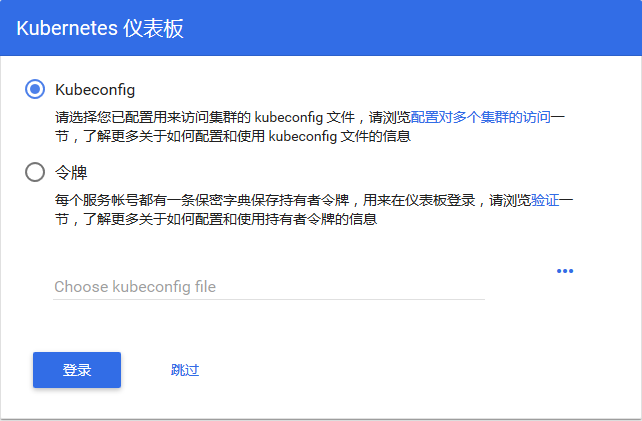

To get into the dashboard itself, we still need an identity token.

    # Use the k8s-admin.yaml file prepared in advance to create the user's identity token
    [root@Master01 ~]# vim k8s-admin.yaml
    [root@Master01 ~]# cat k8s-admin.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: dashboard-admin
      namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: dashboard-admin
    subjects:
    - kind: ServiceAccount
      name: dashboard-admin
      namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: cluster-admin
      apiGroup: rbac.authorization.k8s.io
    [root@Master01 ~]# kubectl create -f k8s-admin.yaml
    serviceaccount/dashboard-admin created
    clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created
    # Look up the authentication token of the user we just created
    [root@Master01 ~]# kubectl get secret -n kube-system
    NAME                               TYPE                                  DATA   AGE
    dashboard-admin-token-ppknr        kubernetes.io/service-account-token   3      3m30s
    default-token-jjt2j                kubernetes.io/service-account-token   3      23d
    kubernetes-dashboard-certs         Opaque                                0      9h
    kubernetes-dashboard-key-holder    Opaque                                2      9h
    kubernetes-dashboard-token-mms5w   kubernetes.io/service-account-token   3      9h

Note: dashboard-admin-token-ppknr is the token secret of the dashboard-admin ServiceAccount we just created.

    # Show the details of the user's token
    [root@Master01 ~]# kubectl describe secret dashboard-admin-token-ppknr -n kube-system
    Name:         dashboard-admin-token-ppknr
    Namespace:    kube-system
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: dashboard-admin
                  kubernetes.io/service-account.uid: 9514e04e-641f-11e9-910b-000c29090fc9
    Type:  kubernetes.io/service-account-token
    Data
    ====
    namespace:  11 bytes
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tcHBrbnIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiOTUxNGUwNGUtNjQxZi0xMWU5LTkxMGItMDAwYzI5MDkwZmM5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.kxocPlazEo8yHPoFpHA7eHLP8qUhCwV21YHx-LL25yOP6_ZNN6HuTtw11AQH_oQ5R8fpet4vCbkABnaZtVOXBkQbO8oU2i5FgLW5o8-nH1Zn264X9fCmqZRcwBV6-q5dwDTwUfbn-3Yv5dibxq5bs_Uc5_fOL32zayiTHHZka85JBENz61R3tQrd3utQIez_yZQ78Uegx-Uk816oJ-zJcGQNuRKSpeJLP5p5AMgQ-TZ47gQUWaeEQsmRmxPw4pNQJ3aS7pM-VA74M3JbIGwgGZLMZ_sp6v0-JWBI3pH7zQkoCwQfnPVnk-oP_zcp4CSc3eKTLN2dqrIr2dImjqQ0QA
    ca.crt:     1359 bytes

Copy the token value from the dashboard-admin token details and paste it into the Web login page to authenticate.
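
The token can also be printed on its own, which avoids copying it out of the describe output by hand. This is a sketch: `sa_token` is a hypothetical helper, and it assumes the cluster creates a token secret for each ServiceAccount automatically, as the listing above suggests for this v1.12 cluster. The secret stores the token base64-encoded, so it has to be decoded before it is pasted into the login page.

```shell
#!/bin/sh
# Print the bearer token of ServiceAccount $1 in namespace $2 (default: kube-system).
sa_token() {
    sa=$1
    ns=${2:-kube-system}
    # Name of the first token secret attached to the ServiceAccount
    secret=$(kubectl -n "$ns" get serviceaccount "$sa" \
        -o jsonpath='{.secrets[0].name}') || return 1
    if [ -z "$secret" ]; then
        echo "no token secret found for $sa" >&2
        return 1
    fi
    # .data.token is base64-encoded; decode it to get the usable token
    kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}
```

Usage: `sa_token dashboard-admin` should print the same token value that `kubectl describe secret` displays.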